Optimizing Drug Development: Implementing the Neural Population Dynamics Optimization Algorithm (NPDOA) for Engineering Design Problems


Abstract

This article explores the implementation of the Neural Population Dynamics Optimization Algorithm (NPDOA), a novel brain-inspired meta-heuristic, to address complex engineering design challenges in drug development. It provides a foundational understanding of NPDOA's unique attractor trending, coupling disturbance, and information projection strategies. A methodological guide for its application in pharmaceutical contexts, such as Quality by Design (QbD) and formulation optimization, is detailed. The content further addresses troubleshooting common implementation issues and presents a comparative analysis validating NPDOA's performance against other algorithms using benchmark functions and real-world case studies, offering researchers and drug development professionals a powerful new tool for enhancing efficiency and innovation.

The Brain-Inspired Optimizer: Unpacking NPDOA for Pharmaceutical Scientists

Bio-inspired metaheuristic algorithms represent a cornerstone of artificial intelligence, comprising computational methods designed to solve complex optimization problems by emulating natural processes, such as evolution, swarming behavior, and natural selection [1]. In the field of drug development, these algorithms are increasingly critical for navigating high-dimensional, multi-faceted problems where traditional optimization techniques fall short. The drug discovery process is inherently lengthy and costly, taking an average of 10–15 years and costing approximately $2.6 billion from concept to market, with a failure rate exceeding 90% for candidates entering early clinical trials [2] [3]. Bio-inspired metaheuristics address key inefficiencies in this pipeline, enabling researchers to tackle challenges in de novo drug design, molecular docking, and multi-objective optimization of compound properties more effectively [4] [5].

These algorithms are particularly suited to drug development because they do not require gradient information, can escape local optima, and are highly effective for exploring vast, complex search spaces—such as the virtually infinite chemical space of potential drug-like molecules [5] [1]. Their population-based nature allows for the simultaneous evaluation of multiple candidate solutions, making them ideal for multi-objective optimization problems where several conflicting goals—such as maximizing drug potency while minimizing toxicity and synthesis cost—must be balanced [4]. This application note details the core algorithms, provides experimental protocols for their implementation, and visualizes their integration into standard drug development workflows.

Core Algorithm Definitions and Classifications

Bio-inspired metaheuristics can be broadly categorized into evolutionary algorithms, swarm intelligence, and other nature-inspired optimizers. The table below summarizes the primary algorithm families and their specific applications in drug development.

Table 1: Key Bio-Inspired Metaheuristic Algorithms in Drug Development

| Algorithm Family | Representative Algorithms | Key Mechanism | Primary Drug Development Applications |
|---|---|---|---|
| Evolutionary Algorithms | Genetic Algorithms (GA), Differential Evolution (DE) | Selection, crossover, and mutation | De novo design, lead optimization, QSAR modeling [4] [5] |
| Swarm Intelligence | Particle Swarm Optimization (PSO), Competitive Swarm Optimizer (CSO) | Social learning and movement in particle swarms | Molecular docking, conformational analysis [5] [1] |
| Swarm Intelligence (Advanced) | Competitive Swarm Optimizer with Mutating Agents (CSO-MA) | Pairwise competition and boundary mutation | High-dimensional parameter estimation, complex bioinformatics tasks [1] |
| Other Metaheuristics | Cuckoo Search, Firefly Algorithm | Brood parasitism, bioluminescent attraction | Feature selection in pharmacogenomics, network analysis [5] |

Algorithm Mechanisms in Detail

- Genetic Algorithms (GAs): GAs operate by maintaining a population of candidate solutions (chromosomes). Through iterative cycles of selection (based on fitness), crossover (recombination), and mutation (random perturbation), the population evolves toward better solutions. In de novo drug design, a molecule's structure can be encoded as a chromosome, and its fitness can be a function of multiple properties like binding affinity and solubility [4] [5].

- Particle Swarm Optimization (PSO): In PSO, a swarm of particles (candidate solutions) flies through the search space. Each particle adjusts its position based on its own best-found solution (personal best) and the best solution found by the entire swarm (global best). This is highly effective for molecular docking, where a particle's position and velocity can represent the translation, orientation, and torsion angles of a ligand relative to a protein target [5].

- Competitive Swarm Optimizer with Mutating Agents (CSO-MA): An enhancement of CSO, this algorithm randomly pairs particles in each iteration. The "loser" in each pair learns from the "winner" and undergoes a mutation where a randomly chosen variable is reset to an upper or lower bound. This mechanism enhances swarm diversity and helps prevent premature convergence to local optima, which is valuable for complex, high-dimensional problems in bioinformatics [1].
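The CSO-MA update described above can be sketched in plain Python. This is a minimal illustration, not the reference implementation from [1]: the loser's velocity rule follows the standard CSO form (learning from the winner plus a weak pull toward the swarm mean, weighted by a hypothetical parameter `phi`), and it assumes the boundary mutation is applied once to every loser per iteration.

```python
import random


def cso_ma_step(X, V, fitness, lower, upper, phi=0.1, rng=random):
    """One CSO-MA-style iteration for minimization (illustrative sketch).

    X and V are lists of position and velocity vectors (lists of floats);
    they are updated in place and also returned.
    """
    n, d = len(X), len(X[0])
    f = [fitness(x) for x in X]
    # Swarm mean position, used as a weak social term in the loser update.
    mean = [sum(x[k] for x in X) / n for k in range(d)]
    order = list(range(n))
    rng.shuffle(order)
    # Random pairwise competitions; with an odd swarm size one particle sits out.
    for a, b in zip(order[0::2], order[1::2]):
        win, lose = (a, b) if f[a] <= f[b] else (b, a)
        for k in range(d):
            r1, r2, r3 = rng.random(), rng.random(), rng.random()
            V[lose][k] = (r1 * V[lose][k]
                          + r2 * (X[win][k] - X[lose][k])
                          + phi * r3 * (mean[k] - X[lose][k]))
            # Move the loser and clip it back into the search box.
            X[lose][k] = min(max(X[lose][k] + V[lose][k], lower[k]), upper[k])
        # Mutating agent: reset one randomly chosen variable of the loser to a bound.
        k = rng.randrange(d)
        X[lose][k] = lower[k] if rng.random() < 0.5 else upper[k]
    return X, V
```

Because winners pass through each iteration unchanged, the best fitness in the swarm never gets worse, while the bound resets keep injecting diversity into the losing half.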

Application Protocols

This section provides detailed methodologies for implementing bio-inspired metaheuristics in two key drug development tasks: multi-objective de novo drug design and molecular docking.

Protocol 1: Multi-Objective De Novo Drug Design using Evolutionary Algorithms

De novo drug design aims to generate novel molecular structures from scratch that satisfy multiple, often conflicting, objectives [4]. This protocol outlines the steps for applying a Multi-Objective Evolutionary Algorithm (MOEA) like NSGA-II.

Table 2: Reagent Solutions for De Novo Drug Design

| Research Reagent / Tool | Type | Function in the Protocol |
|---|---|---|
| SMILES/String Representation | Molecular Descriptor | Encodes the molecular structure as a string for genome encoding [4] |
| Force-Field Scoring Function | Software Function | Calculates the binding energy (e.g., van der Waals, electrostatic) for fitness evaluation [5] |
| ADMET Prediction Model | In Silico Model | Predicts pharmacokinetic and toxicity profiles (e.g., using QSAR) for constraint evaluation [4] |
| RDKit or Open Babel | Cheminformatics Library | Handles chemical operations, SMILES parsing, and molecular property calculation [4] |

Step-by-Step Procedure:

Problem Formulation:

- Define Objectives: Clearly state the objectives to be optimized. Common examples include:

- f~1~(x): Maximize binding affinity (minimize predicted binding energy).

- f~2~(x): Maximize synthetic accessibility score.

- f~3~(x): Minimize predicted toxicity.

- Define Constraints: Specify chemical rules and property limits (e.g., Lipinski's Rule of Five, solubility thresholds) [4].

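One way to combine the objectives and constraints above is to express every objective as a quantity to minimize (negating any score that should be maximized) and to penalize constraint violations. The sketch below assumes the descriptors have already been computed, for example with RDKit's `Descriptors.MolWt`, `Crippen.MolLogP`, `Lipinski.NumHDonors`, and `Lipinski.NumHAcceptors`; the dictionary keys, the penalty weight, and the one-violation allowance are illustrative assumptions.

```python
def rule_of_five_violations(props):
    """Count Lipinski Rule-of-Five violations from precomputed descriptors."""
    checks = [
        props["mol_weight"] > 500,    # molecular weight (Da)
        props["logp"] > 5,            # octanol-water partition coefficient
        props["h_donors"] > 5,        # hydrogen-bond donors
        props["h_acceptors"] > 10,    # hydrogen-bond acceptors
    ]
    return sum(checks)


def penalized_objectives(objectives, props, penalty=1000.0, max_violations=1):
    """Add a penalty to every minimized objective for each violation beyond the allowance."""
    excess = max(0, rule_of_five_violations(props) - max_violations)
    return tuple(f + penalty * excess for f in objectives)
```

Here an objective tuple such as (predicted binding energy, negated synthetic-accessibility desirability, predicted toxicity) keeps all three goals in "lower is better" form, which is what the Pareto-based selection step expects.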

Solution Encoding (Representation):

- Encode a candidate molecule as a genome. A common method is to use a SMILES string or a molecular graph, where genes can represent atoms, bonds, or molecular fragments [4].

Initialization:

- Generate an initial population of N molecules (e.g., N=100-500) randomly or by using fragment-based assembly.

Fitness Evaluation:

- For each molecule in the population, compute all objective functions f~1~(x), f~2~(x), f~3~(x)... using the appropriate software tools and models listed in Table 2.

Multi-Objective Optimization and Selection:

- Apply a non-dominated sorting algorithm (e.g., in NSGA-II) to rank the population based on Pareto dominance.

- Calculate the crowding distance to ensure diversity among solutions.

- Select the top-performing molecules to form the parent pool for the next generation.
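The ranking and diversity steps can be sketched with the fast non-dominated sort and crowding distance used in NSGA-II. This plain-Python version assumes all objectives are minimized and represents each molecule only by its objective vector; `select_parents` is a hypothetical helper name, and a production run would normally rely on an established library.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated_sort(F):
    """Fast non-dominated sorting; returns fronts as lists of indices into F."""
    n = len(F)
    dominated = [[] for _ in range(n)]  # solutions that p dominates
    counts = [0] * n                    # number of solutions dominating p
    fronts = [[]]
    for p in range(n):
        for q in range(n):
            if dominates(F[p], F[q]):
                dominated[p].append(q)
            elif dominates(F[q], F[p]):
                counts[p] += 1
        if counts[p] == 0:
            fronts[0].append(p)
    while fronts[-1]:
        nxt = []
        for p in fronts[-1]:
            for q in dominated[p]:
                counts[q] -= 1
                if counts[q] == 0:
                    nxt.append(q)
        fronts.append(nxt)
    return fronts[:-1]  # drop the trailing empty front


def crowding_distance(F, front):
    """Crowding distance within one front; boundary points get infinity."""
    dist = {i: 0.0 for i in front}
    for m in range(len(F[front[0]])):
        ordered = sorted(front, key=lambda i: F[i][m])
        lo, hi = F[ordered[0]][m], F[ordered[-1]][m]
        dist[ordered[0]] = dist[ordered[-1]] = float("inf")
        if hi == lo:
            continue
        for k in range(1, len(ordered) - 1):
            dist[ordered[k]] += (F[ordered[k + 1]][m] - F[ordered[k - 1]][m]) / (hi - lo)
    return dist


def select_parents(F, k):
    """Pick k indices by front rank, preferring larger crowding distance within a front."""
    chosen = []
    for front in non_dominated_sort(F):
        d = crowding_distance(F, front)
        chosen.extend(sorted(front, key=lambda i: -d[i]))
        if len(chosen) >= k:
            break
    return chosen[:k]
```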

Variation Operators:

- Crossover: Recombine two parent molecules to produce offspring (e.g., by swapping molecular fragments or sub-strings of SMILES).

- Mutation: Randomly alter an offspring molecule (e.g., change an atom, add/remove a bond, or alter a fragment) to maintain population diversity.
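The variation operators can be illustrated on a genome encoded as a list of fragment tokens. The fragment vocabulary below is hypothetical, and joining SMILES fragments does not by itself guarantee a valid molecule, so offspring would still need to be decoded and checked (for example, by parsing them with RDKit) before fitness evaluation.

```python
import random

# Hypothetical fragment vocabulary; a real run would use a curated fragment library.
FRAGMENTS = ["c1ccccc1", "C(=O)O", "N", "O", "CC", "C(F)(F)F", "c1ccncc1"]


def one_point_crossover(parent_a, parent_b, rng=random):
    """Swap tails of two fragment-list genomes (each needs at least 2 fragments)."""
    cut_a = rng.randint(1, len(parent_a) - 1)
    cut_b = rng.randint(1, len(parent_b) - 1)
    return parent_a[:cut_a] + parent_b[cut_b:], parent_b[:cut_b] + parent_a[cut_a:]


def mutate(genome, rate=0.2, rng=random):
    """With probability `rate` per position, replace, insert after, or delete a fragment."""
    child = []
    for frag in genome:
        if rng.random() < rate:
            op = rng.choice(["replace", "insert", "delete"])
            if op == "replace":
                child.append(rng.choice(FRAGMENTS))
            elif op == "insert":
                child.extend([frag, rng.choice(FRAGMENTS)])
            # "delete": the fragment is simply not copied
        else:
            child.append(frag)
    return child or [rng.choice(FRAGMENTS)]  # never return an empty genome
```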

Termination and Analysis:

- Repeat the fitness evaluation, selection, and variation steps for a predefined number of generations (e.g., 1000+) or until convergence.

- The final output is a Pareto front—a set of non-dominated solutions representing optimal trade-offs between the defined objectives [4].

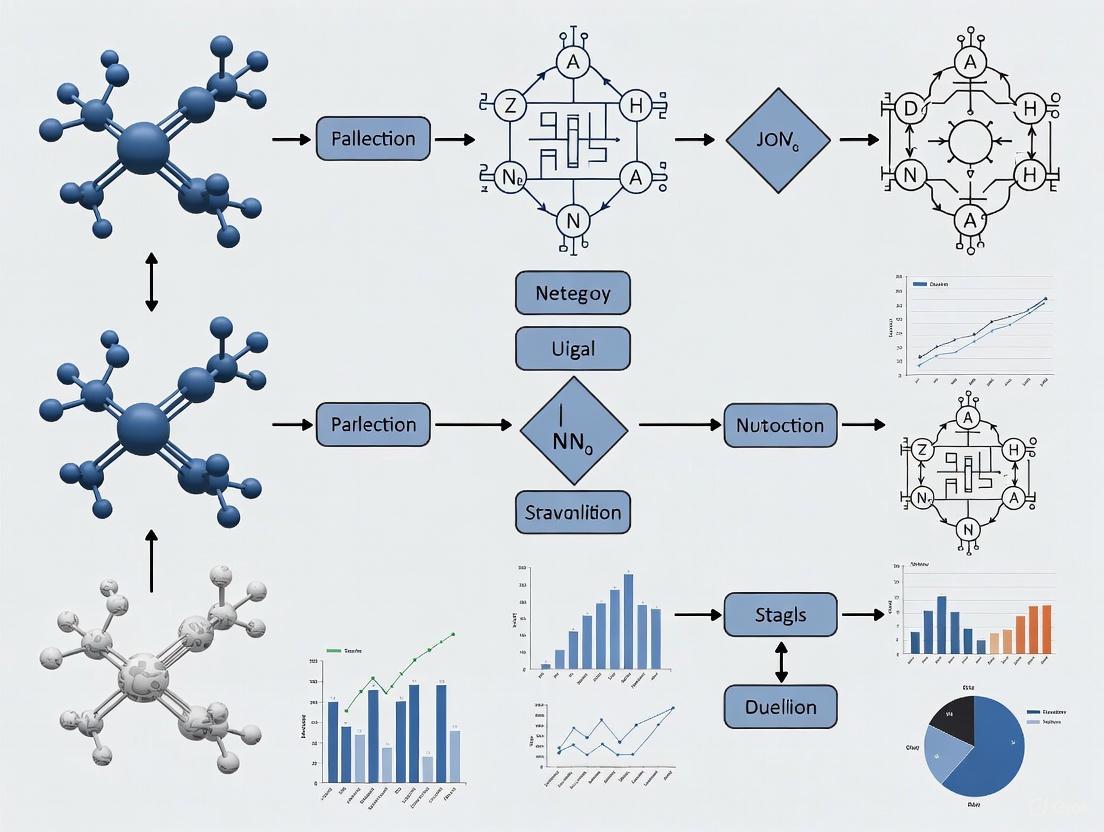

Figure 1: Workflow for Multi-Objective De Novo Drug Design.

Protocol 2: Molecular Docking with Particle Swarm Optimization

Molecular docking predicts the preferred orientation and binding affinity of a small molecule (ligand) to a target macromolecule (protein) [5]. This protocol uses PSO to find the ligand conformation that minimizes the binding energy.

Table 3: Reagent Solutions for Molecular Docking

| Research Reagent / Tool | Type | Function in the Protocol |
|---|---|---|
| Protein Data Bank (PDB) | Database | Provides the 3D crystallographic structure of the target protein [5] |
| Ligand Structure File | Molecular Data | The 3D structure of the small molecule to be docked (e.g., in MOL2 or SDF format) |
| Scoring Function | Software Function | Evaluates the ligand-protein binding energy (e.g., AutoDock Vina, GOLD) [5] |
| PSO Library (e.g., PySwarm) | Code Library | Provides the implementation of the PSO algorithm for optimization |

Step-by-Step Procedure:

System Preparation:

- Obtain the 3D structure of the target protein from the PDB. Prepare it by removing water molecules, adding hydrogens, and assigning charges.

- Define a search space (docking box) centered on the protein's active site. The size and coordinates of this box are critical hyperparameters.

Solution Encoding:

- Encode a candidate solution (ligand pose) as a particle's position in the PSO. This is typically a vector representing:

- Three coordinates for translation (x, y, z).

- Four coordinates for orientation (as a quaternion).

- N coordinates for rotatable bond torsion angles.

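Because PSO moves every coordinate independently, the raw particle vector usually has to be decoded into a physically meaningful pose before scoring. The helper below is a hypothetical sketch of that step under the encoding above: it renormalizes the four quaternion components (falling back to the identity rotation, written in an assumed w-first order, if they are all zero) and wraps torsion angles, assumed to be in radians, into the interval [-π, π).

```python
import math


def decode_pose(position, n_torsions):
    """Split a flat particle position into translation, unit quaternion, and torsions."""
    if len(position) != 7 + n_torsions:
        raise ValueError("expected 3 translation + 4 quaternion + n_torsions values")
    translation = list(position[0:3])
    q = position[3:7]
    norm = math.sqrt(sum(c * c for c in q))
    # PSO does not preserve unit length, so renormalize (identity if degenerate).
    quaternion = [c / norm for c in q] if norm > 0 else [1.0, 0.0, 0.0, 0.0]
    # Wrap each torsion (radians) into [-pi, pi) so equivalent angles coincide.
    torsions = [((a + math.pi) % (2 * math.pi)) - math.pi for a in position[7:]]
    return translation, quaternion, torsions
```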

PSO Initialization:

- Initialize a swarm of particles (e.g., 50-200) with random positions and velocities within the bounds of the defined search space and torsion angles.

Fitness Evaluation:

- For each particle's position (ligand pose), calculate the fitness function. This is typically the predicted binding energy from a scoring function like AutoDock Vina.

Update Personal and Global Bests:

- For each particle, compare its current fitness with its personal best (pbest). Update pbest if the current pose is better.

- Identify the best fitness value among all particles in the swarm and update the global best (gbest).

Update Particle Velocity and Position:

- For each particle i, update its velocity v~i~ and position x~i~ using the standard PSO equations:

- v~i~(t+1) = w v~i~(t) + c~1~r~1~(pbest~i~ - x~i~(t)) + c~2~r~2~(gbest - x~i~(t))

- x~i~(t+1) = x~i~(t) + v~i~(t+1)

- where w is the inertia weight, c~1~ and c~2~ are acceleration coefficients, and r~1~, r~2~ are random numbers drawn uniformly from [0, 1] at each update.
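The update equations above translate directly into a minimal global-best PSO loop. In this sketch a simple quadratic function stands in for a real docking score such as AutoDock Vina, positions are clamped to the search bounds after each move, and the defaults (w = 0.7, c1 = c2 = 1.5) are assumed values rather than recommendations from [5].

```python
import random


def pso_minimize(score, lower, upper, n_particles=30, iters=200,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimal global-best PSO; `score` stands in for a docking scoring function."""
    rng = random.Random(seed)
    d = len(lower)
    X = [[rng.uniform(lower[k], upper[k]) for k in range(d)] for _ in range(n_particles)]
    V = [[0.0] * d for _ in range(n_particles)]
    pbest = [x[:] for x in X]
    pbest_f = [score(x) for x in X]
    g = min(range(n_particles), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]
    for _ in range(iters):
        for i in range(n_particles):
            for k in range(d):
                r1, r2 = rng.random(), rng.random()
                # v(t+1) = w v(t) + c1 r1 (pbest - x) + c2 r2 (gbest - x)
                V[i][k] = (w * V[i][k]
                           + c1 * r1 * (pbest[i][k] - X[i][k])
                           + c2 * r2 * (gbest[k] - X[i][k]))
                # x(t+1) = x(t) + v(t+1), clamped to the docking box / angle bounds.
                X[i][k] = min(max(X[i][k] + V[i][k], lower[k]), upper[k])
            f = score(X[i])
            if f < pbest_f[i]:  # update personal and, if needed, global best
                pbest[i], pbest_f[i] = X[i][:], f
                if f < gbest_f:
                    gbest, gbest_f = X[i][:], f
    return gbest, gbest_f
```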

Termination and Analysis:

- Repeat the fitness evaluation and update steps until a maximum number of iterations is reached or gbest converges.

- The gbest position represents the predicted binding pose. Validate the result by calculating the Root-Mean-Square Deviation (RMSD) between the predicted pose and a known experimental pose (if available) [5]. An RMSD below 2.0 Å is the conventional threshold for a successful pose prediction.
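The RMSD check takes only a few lines of code. This sketch assumes the predicted and reference ligand coordinates are already in the same receptor frame (so no superposition is applied) and list the same atoms in the same order; it ignores symmetry-equivalent atom mappings, which dedicated tools handle more rigorously.

```python
import math


def rmsd(coords_a, coords_b):
    """RMSD (in the input units, e.g. Å) between two equally ordered (x, y, z) atom lists."""
    if len(coords_a) != len(coords_b) or not coords_a:
        raise ValueError("coordinate lists must be non-empty and the same length")
    sq = sum((ax - bx) ** 2 + (ay - by) ** 2 + (az - bz) ** 2
             for (ax, ay, az), (bx, by, bz) in zip(coords_a, coords_b))
    return math.sqrt(sq / len(coords_a))
```

A pose would then count as reproduced if, for example, `rmsd(predicted, crystal) < 2.0`.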

Figure 2: Workflow for Molecular Docking with PSO.

Performance Data and Benchmarking

Evaluating the performance of bio-inspired algorithms is crucial for selecting the appropriate method for a given drug development problem. The tables below summarize key performance metrics from the literature.

Table 4: Comparative Performance of Metaheuristics on Benchmark Problems

| Algorithm | Test Problem / Dimension | Key Performance Metric | Reported Result | Comparative Note |
|---|---|---|---|---|
| CSO-MA [1] | Weierstrass (Separable) | Error from global minimum | ~0 | Competitive with state-of-the-art |
| CSO-MA [1] | Quartic Function | Error from global minimum | ~0 | Fast convergence observed |
| CSO-MA [1] | Ackley (Non-separable) | Error from global minimum | ~0 | Effective in avoiding local optima |
| Genetic Algorithm [5] | Molecular Docking (Flexible) | RMSD (Å) from crystal structure | < 2.0 | Most widely used; versatile |
| Particle Swarm Optimization [5] | Molecular Docking (Flexible) | RMSD (Å) from crystal structure | < 2.0 | Noted for efficiency and speed |

Table 5: Multi-Objective Algorithm Performance in De Novo Design

| Optimization Aspect | Algorithm Examples | Outcome and Challenge |
|---|---|---|
| 3 or Fewer Objectives [4] | NSGA-II, SPEA2 | Well-established; produces a diverse Pareto front of candidate molecules. |
| 4 or More Objectives (Many-Objective Optimization) [4] | MOEA/D, NSGA-III | Challenge: Pareto front approximation becomes computationally harder; requires specialized algorithms. |
| Performance Metric | Hypervolume, Spread | Measures the quality and diversity of the non-dominated solution set [4]. |
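For two objectives, the hypervolume indicator reduces to the area dominated by the front and bounded by a reference point. The sketch below assumes both objectives are minimized and that the caller chooses the reference point; three or more objectives require dedicated algorithms.

```python
def hypervolume_2d(points, ref):
    """Area dominated by 2-objective points (both minimized), bounded by reference point `ref`."""
    # Keep only points strictly better than the reference in both objectives.
    pts = sorted(p for p in points if p[0] < ref[0] and p[1] < ref[1])
    area, best_f2 = 0.0, ref[1]
    for f1, f2 in pts:  # sweep in increasing f1
        if f2 < best_f2:  # skip points dominated by an earlier point
            area += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return area
```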

The true power of bio-inspired metaheuristics is realized when they are integrated into a cohesive drug discovery pipeline, increasingly in conjunction with modern machine learning and AI techniques [6] [7].

Figure 3: Integrated AI and Metaheuristic Drug Discovery Pipeline.

As illustrated in Figure 3, bio-inspired algorithms form a critical optimization layer within a broader, AI-driven framework. For instance, a target identification step using AI and network analysis [7] can feed a potential protein target into a multi-objective de novo design process [4]. The generated candidate molecules can then be prioritized via molecular docking using PSO or GA [5], and the most promising leads can be further refined through lead optimization cycles that leverage QSAR and other ML models. This synergy between AI and bio-inspired optimization is compressing drug discovery timelines and enabling the exploration of novel chemical space with unprecedented efficiency [6].

In conclusion, bio-inspired metaheuristic algorithms provide a powerful and flexible toolkit for addressing the complex, multi-objective optimization problems endemic to drug development. Their ability to efficiently navigate high-dimensional search spaces makes them indispensable for tasks ranging from generating novel molecular entities to predicting atomic-level interactions. As the field progresses, the tight integration of these algorithms with advanced AI and machine learning models promises to further accelerate the delivery of new, effective therapeutics.

Core Principles of Neural Population Dynamics in Decision-Making

Neural population dynamics provide a framework for understanding how the collective activity of neurons gives rise to cognitive functions like decision-making. The core principles can be summarized as follows:

- Low-Dimensional Manifolds: The activity of large neural populations often evolves on a low-dimensional subspace, known as a neural manifold, which captures the essential features of population dynamics relevant to task performance [8]. This manifold structure serves as a powerful constraint for developing decoding algorithms.

- Distributed and Integrated Processing: Decision-making is not localized to one or two brain regions but is a brain-wide process [9] [10] [11]. A brain-wide map revealed that neural correlates of decision variables are distributed across sensory, cognitive, and motor areas, indicating constant communication across the brain during decision formation [10] [12].

- Dynamical Systems Framework: Neural population activity can be formally described as a dynamical system [13] [14]. The dynamics are governed by the equation \( x_{t+1} = f(x_t, u_t) \), where \( x_t \) is the neural state at time \( t \) and \( u_t \) represents external inputs. These dynamics integrate incoming sensory evidence with internal states and prior expectations to guide choices [15] [16].

- Encoding and Decoding: This is a fundamental duality in neural computation. Encoding models describe how neurons represent information about stimuli or events (P(K|x)), while decoding models describe how downstream neurons—or an external observer—can interpret this activity to recover the encoded information [17]. Downstream areas decode and transform information from upstream populations to build explicit representations that drive behavior [17].

Quantitative Signatures of Decision-Related Dynamics

The following table summarizes key quantitative findings from recent large-scale studies on neural population dynamics during decision-making.

Table 1: Quantitative Evidence from Key Decision-Making Studies

| Study / Model | Data Source / Brain Regions | Key Quantitative Finding | Implication for Neural Dynamics |
|---|---|---|---|
| International Brain Lab (IBL) [9] [10] | 621,000+ neurons; 279 regions (mouse brain) | Decision-making signals were distributed across the vast majority of the ~300 brain regions analyzed. | Challenges the localized, hierarchical view; supports a highly distributed, integrated process. |
| Evidence Accumulation Model [15] [16] | 141 neurons from rat PPC, FOF, and ADS | Each region was best fit by a distinct accumulator model (e.g., FOF: unstable; ADS: near-perfect), all differing from the behavioral model. | Different brain regions implement distinct dynamical algorithms for evidence accumulation. |
| MARBLE Geometric Deep Learning [8] | Primate premotor cortex; Rodent hippocampus | Achieved state-of-the-art within- and across-animal decoding accuracy compared to other representation learning methods (e.g., LFADS, CEBRA). | Manifold structure provides a powerful inductive bias for learning consistent latent dynamics. |
| Active Learning & Low-Rank Models [13] | Mouse motor cortex (500-700 neurons) | Active learning of low-rank autoregressive models yielded up to a two-fold reduction in data required for a given predictive power. | Neural dynamics possess low-rank structure that can be efficiently identified with optimal perturbations. |
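A one-dimensional instance of the dynamical-systems equation \( x_{t+1} = f(x_t, u_t) \) makes the accumulator distinctions in the table concrete. The linear form below, with a hypothetical drift parameter `lam`, is an illustrative assumption rather than the fitted models of [15] [16]: negative values give a leaky accumulator, zero a perfect integrator, and positive values an unstable, self-amplifying one.

```python
import random


def accumulate(evidence, lam=0.0, noise=0.0, seed=0):
    """Simulate a_{t+1} = a_t + lam * a_t + u_t + noise and return the trajectory.

    lam < 0: leaky; lam == 0: perfect integrator; lam > 0: unstable.
    """
    rng = random.Random(seed)
    a, trace = 0.0, []
    for u in evidence:
        a = a + lam * a + u + rng.gauss(0.0, noise)
        trace.append(a)
    return trace
```

With ten unit pulses, the perfect integrator ends at 10, the leaky one (lam = -0.5) saturates near its fixed point of 2, and the unstable one overshoots 10.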

Experimental Protocols for Probing Decision Dynamics

Protocol 1: Brain-Wide Mapping of Decision-Making with Standardized Behavior

This protocol is based on the methods pioneered by the International Brain Laboratory (IBL) to create the first complete brain-wide activity map during a decision-making task [9] [10] [11].

- Objective: To characterize neural population dynamics across the entire brain during a perceptual decision-making task with single-cell resolution.

- Experimental Subjects: Adult mice (e.g., 139 mice as used in the IBL study).

- Key Research Reagents & Solutions:

- Neuropixels Probes: High-density electrodes for simultaneous recording from thousands of neurons across the brain [10] [12].

- Allen Common Coordinate Framework (CCF): A standardized 3D reference atlas for precise anatomical localization of recording sites [10] [11].

- Standardized Behavioral Apparatus: Includes a miniature steering wheel and a screen for visual stimulus presentation, standardized across all participating labs [9].

- Procedure:

- Habituation and Training: Train mice to perform a visual decision task. A black-and-white striped circle briefly appears on either the left or right side of a screen. The mouse must turn a steering wheel in the corresponding direction to move the circle to the center for a reward of sugar water [9] [12].

- Incorporating Priors: In a subset of trials, present a faint, near-invisible stimulus. This forces the animal to rely on prior experience (prior expectations) to guide its decision, allowing the study of how prior knowledge is integrated with sensory evidence [10] [12].

- Neural Recording: While the mouse performs the task, implant and use Neuropixels probes to record extracellular activity from hundreds to thousands of neurons simultaneously. Coordinate across multiple labs, with each lab focusing on a specific brain region to build a comprehensive dataset [9] [10].

- Histology and Anatomical Registration: After recordings, perform perfusions and brain sectioning. Reconstruct the probe tracks using serial-section two-photon microscopy and register each recording site to its corresponding region in the Allen CCF [10] [11].

- Data Integration and Analysis: Pool neural and behavioral data from all labs. Use standardized data processing pipelines to preprocess spikes, align data to task events, and perform population-level analyses (e.g., dimensionality reduction, decoding) to reveal brain-wide dynamics [9].
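The alignment step in the analysis stage can be illustrated with a peri-event count matrix for a single neuron. This sketch assumes spike and event times are in seconds and that `spike_times` is sorted; the window and bin size are arbitrary example values, and the IBL's own pipelines are considerably more involved.

```python
import bisect


def peri_event_counts(spike_times, event_times, window=(-0.5, 1.0), bin_size=0.05):
    """Return a trials x bins matrix of spike counts aligned to each event time."""
    start, stop = window
    n_bins = int(round((stop - start) / bin_size))
    counts = [[0] * n_bins for _ in event_times]
    for trial, t0 in enumerate(event_times):
        # Binary search for the spikes that fall inside this trial's window.
        lo = bisect.bisect_left(spike_times, t0 + start)
        hi = bisect.bisect_left(spike_times, t0 + stop)
        for s in spike_times[lo:hi]:
            b = int((s - t0 - start) / bin_size)
            if 0 <= b < n_bins:  # guard against float round-off at the edges
                counts[trial][b] += 1
    return counts
```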

The workflow for this large-scale, standardized protocol is outlined below.

Protocol 2: Identifying Multi-Region Communication with MR-LFADS

This protocol uses a sequential variational autoencoder to model how different brain regions communicate during decision-making [14].

- Objective: To infer latent communication signals between recorded brain regions and inputs from unobserved regions from multi-region neural recordings.

- Data Input: Simultaneously recorded single-trial neural population activity from multiple brain regions (e.g., from the IBL dataset or similar experiments).

- Key Research Reagents & Solutions:

- MR-LFADS Model: A multi-region sequential variational autoencoder implemented in a deep learning framework (e.g., PyTorch, TensorFlow).

- High-Performance Computing (HPC) Cluster: Essential for training complex, multi-RNN models on large-scale neural datasets.

- Procedure:

- Data Preprocessing: Organize neural data into trials. Format spike counts or calcium fluorescence traces as a tensor: [trials x time x neurons x regions].

- Model Architecture Specification:

- Implement a separate generator recurrent neural network (RNN), such as a Gated Recurrent Unit (GRU), for each recorded brain region.

- Design the model to disentangle three key latent variables for each region and time point: a) the initial condition g₀, b) inferred external input u_t, and c) communication inputs m_t from other recorded regions.

- Model Training: Train the model end-to-end to maximize the log-likelihood of reconstructing the observed neural activity, while imposing appropriate priors and information bottlenecks on the latent variables to encourage disentanglement.

- Model Validation:

- Use held-out trials to assess reconstruction quality.

- If available, use perturbation data (e.g., optogenetic inhibition of one region) held out during training. A valid model should predict the brain-wide effects of this perturbation [14].

- Inference and Analysis: After training, run the inference network to extract the latent trajectories (dynamics), communication signals, and external inputs for all trials. Analyze these latents to determine the direction, content, and timing of inter-regional communication.


The architecture of the MR-LFADS model for inferring communication is visualized below.

The Scientist's Toolkit: Essential Research Reagents and Solutions

Table 2: Key Reagents and Tools for Studying Neural Population Dynamics

| Item Name | Function/Brief Explanation | Exemplar Use Case |
|---|---|---|
| Neuropixels Probes | High-density silicon probes that enable simultaneous recording of extracellular action potentials from thousands of neurons across multiple brain regions. | Brain-wide mapping of neural activity during decision-making in mice [9] [12]. |
| Two-Photon Holographic Optogenetics | Allows precise photostimulation of experimenter-specified groups of individual neurons while simultaneously imaging population activity via two-photon microscopy. | Causally probing neural population dynamics and connectivity in mouse motor cortex [13]. |
| Allen Common Coordinate Framework (CCF) | A standardized 3D reference atlas for the mouse brain. Enables precise anatomical registration of recording sites and neural signals from different experiments. | Accurately determining the location of every neuron recorded in a brain-wide study [10] [11]. |
| MR-LFADS (Computational Model) | A multi-region sequential variational autoencoder designed to disentangle inter-regional communication, external inputs, and local neural population dynamics. | Inferring communication pathways between brain regions from large-scale electrophysiology data [14]. |
| MARBLE (Computational Model) | A geometric deep learning method that learns interpretable latent representations of neural population dynamics by decomposing them into local flow fields on a manifold. | Comparing neural computations and decoding behavior across sessions, animals, or conditions [8]. |

The Neural Population Dynamics Optimization Algorithm (NPDOA) represents a novel brain-inspired meta-heuristic method designed for solving complex optimization problems. Unlike traditional algorithms inspired by evolutionary processes or swarm behaviors, NPDOA is unique in its foundation in brain neuroscience, specifically mimicking the activities of interconnected neural populations during cognitive and decision-making processes [18]. This innovative approach treats potential solutions as neural populations, where each decision variable corresponds to a neuron and its value represents the neuron's firing rate [18]. The algorithm's robustness stems from three strategically designed pillars that work in concert to balance the fundamental optimization aspects of exploration and exploitation: the attractor trending strategy, the coupling disturbance strategy, and the information projection strategy. This framework offers a transformative approach for tackling challenging engineering design problems, from UAV path planning to structural optimization [19].

Core Conceptual Foundations of NPDOA

The architectural foundation of NPDOA is built upon a sophisticated analogy to neural computation. In this model, a candidate solution to an optimization problem is represented as a neural population, with each variable in the solution vector conceptualized as a neuron whose value corresponds to its firing rate [18]. The algorithm operates by simulating the dynamics of multiple such populations interacting, mirroring the brain's information processing during decision-making [18].

The performance of any metaheuristic algorithm hinges on its ability to balance two competing objectives: exploration (searching new regions of the solution space to avoid local optima) and exploitation (refining good solutions found in promising regions). NPDOA addresses this challenge through its three core strategies, each fulfilling a distinct role in the optimization ecosystem [18].

The Three Strategic Pillars: Mechanisms and Protocols

Attractor Trending Strategy

- Primary Role: Exploitation. This strategy drives the convergence of neural populations toward stable states (attractors) associated with high-quality decisions [18].

- Detailed Mechanism: The attractor trending strategy guides the neural state of a population toward a specific attractor representing a locally optimal solution. This process ensures that once promising regions of the solution space are identified, the algorithm can perform an intensive local search to refine the solution. The dynamic is governed by mathematical formulations that simulate how neural circuits stabilize towards a decision outcome, thereby ensuring the algorithm's exploitation capability [18].

- Experimental Protocol:

- Identification: For a given neural population \( \vec{x}_i \), identify the most promising attractor from a set of candidate solutions (e.g., the population's personal best or the global best solution).

- State Update: The neural state is updated according to the equation:

\[ \vec{x}_i^{new} = \vec{x}_i^{old} + \alpha \cdot (\vec{x}_{attractor} - \vec{x}_i^{old}) + \vec{\omega} \]

where \( \alpha \) is a trend coefficient controlling the strength of movement toward the attractor, \( \vec{x}_{attractor} \) is the position of the selected attractor, and \( \vec{\omega} \) is a small stochastic noise term.

- Evaluation: Calculate the fitness of the updated neural state, \( f(\vec{x}_i^{new}) \).

- Selection: If \( f(\vec{x}_i^{new}) \) is better than \( f(\vec{x}_i^{old}) \), accept the new state; otherwise, retain the old state with a specified probability.
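The attractor-trending protocol can be sketched as a single update function. This illustrates the equation above under assumed choices and is not the published NPDOA code [18]: the noise term \( \vec{\omega} \) is drawn from a Gaussian with a hypothetical scale `sigma`, the objective is minimized, and `p_keep` is a hypothetical probability of retaining the old state when the move does not improve fitness.

```python
import random


def attractor_trending(x_old, x_attractor, f, alpha=0.5, sigma=0.01, p_keep=0.9, rng=random):
    """One attractor-trending step: x_new = x_old + alpha * (x_att - x_old) + omega."""
    omega = [rng.gauss(0.0, sigma) for _ in x_old]  # small stochastic noise term
    x_new = [xo + alpha * (xa - xo) + w for xo, xa, w in zip(x_old, x_attractor, omega)]
    if f(x_new) < f(x_old):  # improvement: accept the new state
        return x_new
    # No improvement: keep the old state with probability p_keep, else accept the move.
    return x_old if rng.random() < p_keep else x_new
```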

Coupling Disturbance Strategy

- Primary Role: Exploration. This strategy disrupts the trend towards attractors by introducing perturbations through coupling with other neural populations, thereby promoting diversity and preventing premature convergence [18].

- Detailed Mechanism: To avoid being trapped in local optima, the coupling disturbance strategy deliberately deviates a neural population's state from its current trajectory. This is achieved by modeling the interactive influence or "coupling" with one or more distinct neural populations selected from the broader pool. This interaction injects novelty into the search process, allowing the algorithm to escape local basins of attraction and explore new, potentially more promising, areas of the solution space [18].

- Experimental Protocol:

- Selection: For a focal neural population \( \vec{x}_i \), randomly select one or more coupling partners \( \vec{x}_j \) (where \( j \neq i \)) from the population. The example disturbance below uses two partners, \( \vec{x}_j \) and \( \vec{x}_k \), with \( i \), \( j \), and \( k \) mutually distinct.

- Disturbance Calculation: Compute a disturbance vector. For example:

\[ \vec{d} = \beta \cdot (\vec{x}_j - \vec{x}_k) + \vec{\zeta} \]

where \( \beta \) is a disturbance coefficient, \( \vec{x}_j \) and \( \vec{x}_k \) are two different coupling partners, and \( \vec{\zeta} \) is a random vector.

- State Update: Apply the disturbance to the current state:

\[ \vec{x}_i^{new} = \vec{x}_i^{old} + \vec{d} \]

- Boundary Check: Ensure \( \vec{x}_i^{new} \) remains within the defined problem boundaries. Apply a boundary handling method if violated.

- Evaluation and Selection: Evaluate the new state and accept it based on a probabilistic rule that allows for occasional acceptance of worse solutions to maintain population diversity.
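The coupling-disturbance protocol can likewise be sketched as one function. The Gaussian form of \( \vec{\zeta} \), clipping as the boundary-handling method, and a Metropolis-style acceptance rule with a hypothetical `temperature` parameter are all assumptions standing in for the probabilistic rule described above; the population must contain at least three members so that \( i \), \( j \), and \( k \) can differ.

```python
import math
import random


def coupling_disturbance(pop, i, f, lower, upper, beta=0.5, sigma=0.1,
                         temperature=1.0, rng=random):
    """Perturb population member i using two distinct partners j and k (minimization)."""
    j, k = rng.sample([m for m in range(len(pop)) if m != i], 2)
    x_old = pop[i]
    # d = beta * (x_j - x_k) + zeta, with zeta drawn from a Gaussian (assumption).
    d = [beta * (a - b) + rng.gauss(0.0, sigma) for a, b in zip(pop[j], pop[k])]
    # Boundary handling: clip each coordinate into [lower, upper].
    x_new = [min(max(x + dv, lo), hi) for x, dv, lo, hi in zip(x_old, d, lower, upper)]
    delta = f(x_new) - f(x_old)
    # Always accept improvements; accept worse states with probability exp(-delta / T).
    if delta <= 0 or rng.random() < math.exp(-delta / temperature):
        return x_new
    return x_old
```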

Information Projection Strategy

- Primary Role: Transition Regulation. This strategy controls the communication and information flow between neural populations, facilitating the crucial transition from exploration to exploitation over the course of the optimization run [18].

- Detailed Mechanism: The information projection strategy acts as the algorithm's communication regulator. It modulates the impact and influence of the attractor trending and coupling disturbance strategies on each neural population. This is typically achieved through a dynamic parameter or a set of rules that evolve as the algorithm progresses. Early on, it may favor information from coupling disturbance to promote exploration, while later it may prioritize attractor trending to refine solutions and converge [18].

- Experimental Protocol:

- Parameter Definition: Define a control parameter ( \gamma ) (e.g., an information gain or a projection probability) that governs the adoption of information from different sources.

- Dynamic Adjustment: Set ( \gamma ) to be a function of the iteration number ( t ), such as ( \gamma(t) = \gamma{min} + (\gamma{max} - \gamma{min}) \times \frac{t}{T{max}} ), where ( T_{max} ) is the maximum number of iterations.

- Information Fusion: Use \( \gamma \) to weight the influence of the attractor and disturbance components in a combined update rule. For instance:

\(\vec{x}_i^{new} = \vec{x}_i^{old} + \gamma \cdot [\text{Attractor Term}] + (1 - \gamma) \cdot [\text{Coupling Term}]\)

- Monitoring: Track population diversity and convergence metrics throughout the process to validate that the transition from exploration to exploitation is occurring as intended.
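The linear schedule and the fused update rule translate directly into code. In this sketch the default bounds 0.3 and 0.8 are taken from the parameter ranges suggested later in this article; the attractor and coupling terms are passed in as plain vectors, since how they are computed is covered by the other two strategies.

```python
def gamma_schedule(t, t_max, gamma_min=0.3, gamma_max=0.8):
    """Linear schedule: gamma(t) = gamma_min + (gamma_max - gamma_min) * t / t_max."""
    return gamma_min + (gamma_max - gamma_min) * t / t_max


def fused_update(x_old, attractor_term, coupling_term, gamma):
    """x_new = x_old + gamma * attractor_term + (1 - gamma) * coupling_term, element-wise."""
    return [x + gamma * a + (1.0 - gamma) * c
            for x, a, c in zip(x_old, attractor_term, coupling_term)]


# Small gamma early (coupling/exploration dominates), larger gamma late (attractor dominates).
for t in (0, 250, 500):
    print(t, round(gamma_schedule(t, 500), 3))
```

Because \( \gamma \) only grows, the weight on the coupling term shrinks monotonically; a nonlinear (e.g. sigmoidal) schedule is an equally valid design choice if a sharper switch is wanted.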

Integrated Workflow and Strategic Interaction

The three pillars of NPDOA do not operate in isolation but are intricately linked within a single iterative cycle. The diagram below illustrates the high-level workflow and logical relationships between these core strategies.

Quantitative Performance Evaluation

Benchmark and Engineering Problem Performance

The NPDOA has been evaluated on standard benchmark suites and practical engineering problems. The following table summarizes its reported performance in these evaluations.

Table 1: Performance Summary of NPDOA on Standard Benchmarks and Engineering Problems

| Evaluation Domain | Test Suite / Problem | Key Comparative Algorithms | Reported Outcome | Citation |
|---|---|---|---|---|
| Standard Benchmarks | CEC 2017, CEC 2022 | PSO, GA, GWO, WOA, SSA | NPDOA demonstrated competitive performance, often outperforming other algorithms in terms of convergence accuracy and speed. | [18] |
| Engineering Design | Compression Spring Design, Cantilever Beam Design, Pressure Vessel Design, Welded Beam Design | Classical and state-of-the-art metaheuristics | NPDOA verified effectiveness in solving constrained, nonlinear engineering problems. | [18] |
| UAV Path Planning | Real-environment path planning | GA, PSO, ACO, RTH | An improved NPDOA was applied, showing distinct benefits in finding safe and economical paths. | [19] |
| Medical Model Optimization | Automated ML for rhinoplasty prognosis | Traditional ML algorithms | An improved NPDOA (INPDOA) was used to optimize an AutoML framework, achieving high AUC (0.867) and R² (0.862). | [20] |

Algorithmic Balance and Robustness

Quantitative analysis further confirms NPDOA's effective balance between exploration and exploitation. Statistical tests, including the Wilcoxon rank-sum test and Friedman test, have been used to validate the robustness and reliability of the algorithm's performance against its peers [18] [20]. For instance, one study highlighting an NPDOA-enhanced system reported a net benefit improvement over conventional methods in decision curve analysis, underscoring its practical utility [20].

The Scientist's Toolkit: Research Reagent Solutions

Implementing and experimenting with NPDOA requires a suite of computational "reagents." The following table details essential tools and resources for researchers.

Table 2: Essential Research Reagents and Tools for NPDOA Implementation

| Item Name | Function / Purpose | Implementation Notes |
|---|---|---|
| PlatEMO v4.1+ | A MATLAB-based platform for experimental evolutionary multi-objective optimization. | Used in the original NPDOA study for running benchmark tests [18]. Provides a standardized environment for fair algorithm comparison. |
| CEC Test Suites | Standardized benchmark functions (e.g., CEC 2017, CEC 2022) for performance evaluation. | Essential for quantitative comparison against other metaheuristics. Helps validate exploration/exploitation balance. |
| Engineering Problem Set | A collection of constrained engineering design problems (e.g., welded beam, pressure vessel). | Used to translate algorithmic performance into practical efficacy [18]. |
| Python/NumPy Stack | A high-level programming environment for prototyping and customizing NPDOA. | Offers flexibility for modifying strategies and integrating with other libraries (e.g., for visualization). |
| Visualization Library | Tools like Matplotlib (Python) for plotting convergence curves and population diversity. | Critical for diagnosing algorithm behavior and the dynamic balance between the three strategic pillars. |

Detailed Experimental Protocol for Engineering Design Problems

This protocol provides a step-by-step guide for applying NPDOA to a typical engineering design problem, such as the Welded Beam Design Problem [18].

1. Problem Definition and Parameter Setup

- Objective Function: Define the objective to be minimized (e.g., the total cost of the welded beam, \( f(\vec{x}) = 1.10471h^2l + 0.04811tb(14.0 + l) \), where \( \vec{x} = [h, l, t, b] \)).

- Constraints: Formulate all inequality constraints (e.g., shear stress \( \tau(\vec{x}) \leq 13600 \) psi, bending stress \( \sigma(\vec{x}) \leq 30000 \) psi, buckling load \( P_c(\vec{x}) \geq 6000 \) lb, and end deflection \( \delta(\vec{x}) \leq 0.25 \) in).

- Search Space: Define the lower and upper bounds for each design variable.

- NPDOA Parameters:

- Population Size (\( N \)): Typically 30 to 50.

- Maximum Iterations (\( T_{max} \)): 500 to 1000, depending on problem complexity.

- Trend Coefficient (\( \alpha \)): 0.1 to 0.5.

- Disturbance Coefficient (\( \beta \)): 0.5 to 1.5.

- Information Projection Parameter (\( \gamma \)): Dynamically adjusted from 0.3 to 0.8.
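The problem definition in step 1 can be encoded as a penalized objective. This sketch assumes the widely used textbook formulation of the welded beam problem (load \(P = 6000\) lb, length \(L = 14\) in, \(E = 30 \times 10^6\) psi, \(G = 12 \times 10^6\) psi); the search bounds, the side constraint \(h \leq b\), the normalization of each violation, and the quadratic penalty weight are assumptions to check against the formulation you are reproducing.

```python
import math

# Constants of the classic welded beam benchmark (load in lb, lengths in in, stresses in psi).
P, L, E, G = 6000.0, 14.0, 30e6, 12e6
TAU_MAX, SIGMA_MAX, DELTA_MAX = 13600.0, 30000.0, 0.25
# Commonly used search bounds for [h, l, t, b] (an assumption; check your source formulation).
BOUNDS = [(0.1, 2.0), (0.1, 10.0), (0.1, 10.0), (0.1, 2.0)]


def welded_beam_cost(x):
    h, l, t, b = x
    return 1.10471 * h**2 * l + 0.04811 * t * b * (14.0 + l)


def welded_beam_violations(x):
    """Normalized violation of each g(x) <= 0 constraint (0.0 when satisfied)."""
    h, l, t, b = x
    tau_p = P / (math.sqrt(2) * h * l)                      # primary shear stress
    M = P * (L + l / 2)                                      # bending moment at the weld
    R = math.sqrt(l**2 / 4 + ((h + t) / 2) ** 2)
    J = 2 * (math.sqrt(2) * h * l * (l**2 / 12 + ((h + t) / 2) ** 2))
    tau_pp = M * R / J                                       # torsional shear stress
    tau = math.sqrt(tau_p**2 + 2 * tau_p * tau_pp * l / (2 * R) + tau_pp**2)
    sigma = 6 * P * L / (b * t**2)                           # bending stress
    delta = 4 * P * L**3 / (E * t**3 * b)                    # end deflection
    p_c = (4.013 * E * t * b**3 / 6 / L**2) * (1 - t / (2 * L) * math.sqrt(E / (4 * G)))
    g = [tau / TAU_MAX - 1, sigma / SIGMA_MAX - 1, 1 - p_c / P, delta / DELTA_MAX - 1, h - b]
    return [max(0.0, gi) for gi in g]


def penalized_cost(x, penalty=1e6):
    """Static quadratic penalty: feasible designs keep their raw cost."""
    return welded_beam_cost(x) + penalty * sum(v**2 for v in welded_beam_violations(x))


# A frequently reported near-optimal design costs about 1.7249.
print(welded_beam_cost([0.205730, 3.470489, 9.036624, 0.205730]))
```

Normalizing each constraint by its limit keeps stresses (in the thousands) and deflection (below 1) on comparable scales, so a single penalty weight works for all of them.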

2. Algorithm Initialization

- Initialize \( N \) neural populations \( \vec{x}_i \) (\( i = 1, 2, \ldots, N \)) randomly within the defined search space.

- Evaluate the fitness ( f(\vec{x}_i) ) for each initial population, applying a penalty function for constraint violations.

- Identify the initial personal best for each population and the global best population.

3. Main Iteration Loop

For iteration \( t = 1 \) to \( T_{max} \):

- For each neural population \( \vec{x}_i \) do:

- Calculate the current information projection parameter \( \gamma(t) \).

- Attractor Trending Phase: Generate a candidate solution \( \vec{x}_{i,attr} \) by moving \( \vec{x}_i \) towards a combination of its personal best and the global best.

- Coupling Disturbance Phase: Generate a candidate solution \( \vec{x}_{i,coup} \) by applying a disturbance vector derived from two other randomly selected populations.

- Information Fusion: Combine the candidates based on \( \gamma(t) \): \( \vec{x}_i^{candidate} = \gamma(t) \cdot \vec{x}_{i,attr} + (1 - \gamma(t)) \cdot \vec{x}_{i,coup} \).

- Evaluation: Check boundaries and evaluate \( f(\vec{x}_i^{candidate}) \).

- Selection: Replace \( \vec{x}_i \) with the candidate if the candidate is feasible and better than the current \( \vec{x}_i \); otherwise, accept it with a small probability to maintain diversity.

- End For

- Update all personal bests and the global best.

- Record performance metrics (e.g., best fitness, population diversity).

4. Termination and Analysis

- Output the global best solution \( \vec{x}_{best} \) and its performance \( f(\vec{x}_{best}) \).

- Analyze the convergence curve and the final values of the design variables for practical feasibility and insight.
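Steps 2 to 4 can be combined into one compact loop. This is a sketch of the protocol as written here, not the reference NPDOA implementation. The attractor move (random weights on the personal-best and global-best pulls), the Gaussian noise in the coupling term, the fixed `p_accept_worse` probability, and the diversity measure (mean distance to the population centroid) are all assumptions. For constrained problems, pass a penalized objective as `f`.

```python
import math
import random


def npdoa_sketch(f, lower, upper, n_pop=30, t_max=500, alpha=0.3, beta=0.8,
                 gamma_min=0.3, gamma_max=0.8, p_accept_worse=0.05, seed=0):
    """NPDOA-style loop following the protocol above; a sketch, not the published code."""
    rng = random.Random(seed)
    dim = len(lower)

    def clip(v, d):
        return min(max(v, lower[d]), upper[d])

    # 2. Initialization: random populations, fitness, personal and global bests.
    pop = [[rng.uniform(lower[d], upper[d]) for d in range(dim)] for _ in range(n_pop)]
    fit = [f(x) for x in pop]
    pbest, pbest_fit = [x[:] for x in pop], fit[:]
    g = min(range(n_pop), key=lambda i: pbest_fit[i])
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]
    history = []

    # 3. Main loop.
    for t in range(1, t_max + 1):
        gamma = gamma_min + (gamma_max - gamma_min) * t / t_max
        for i in range(n_pop):
            x = pop[i]
            j, k = rng.sample([m for m in range(n_pop) if m != i], 2)
            cand = []
            for d in range(dim):
                # Attractor trending toward personal and global bests.
                attr = x[d] + alpha * (rng.random() * (pbest[i][d] - x[d])
                                       + rng.random() * (gbest[d] - x[d]))
                # Coupling disturbance from two partners plus small Gaussian noise.
                coup = (x[d] + beta * (pop[j][d] - pop[k][d])
                        + rng.gauss(0.0, 0.01 * (upper[d] - lower[d])))
                # Information projection (gamma-weighted fusion), then boundary check.
                cand.append(clip(gamma * attr + (1 - gamma) * coup, d))
            f_cand = f(cand)
            # Selection: greedy, with occasional acceptance of worse states for diversity.
            if f_cand < fit[i] or rng.random() < p_accept_worse:
                pop[i], fit[i] = cand, f_cand
            if f_cand < pbest_fit[i]:
                pbest[i], pbest_fit[i] = cand[:], f_cand
                if f_cand < gbest_fit:
                    gbest, gbest_fit = cand[:], f_cand
        # Record best fitness and diversity (mean distance to the population centroid).
        centroid = [sum(x[d] for x in pop) / n_pop for d in range(dim)]
        diversity = sum(math.dist(x, centroid) for x in pop) / n_pop
        history.append((gbest_fit, diversity))

    # 4. Termination: return the global best and the recorded metrics.
    return gbest, gbest_fit, history


def sphere(x):
    return sum(v * v for v in x)


best, best_fit, history = npdoa_sketch(sphere, [-5.0] * 5, [5.0] * 5, n_pop=20, t_max=200, seed=1)
```

The `history` list supplies both curves needed for the analysis step: best fitness against iteration for the convergence plot, and diversity against iteration to check that exploration gives way to exploitation.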

The Neural Population Dynamics Optimization Algorithm (NPDOA) is a brain-inspired metaheuristic that addresses a fundamental challenge in optimization: balancing exploration (searching new areas of the solution space) with exploitation (refining known good solutions). This balance is critical for solving complex, real-world engineering design problems characterized by nonlinear constraints, high dimensionality, and multimodal fitness landscapes, where traditional methods often converge on suboptimal solutions [21]. The NPDOA framework dynamically allocates computational resources between these two phases based on a measure of search-space complexity and convergence diversity, preventing premature convergence and enhancing global search capability. The following workflow diagram illustrates the core adaptive mechanics of the NPDOA.

Application Notes: Engineering Design Case Study

The performance of NPDOA was validated against seven established metaheuristic algorithms on two challenging engineering design problems. The quantitative results, summarized in the tables below, demonstrate its superior performance in locating more accurate solutions while maintaining robust constraint handling.

Table 1: Performance on the Pressure Vessel Design Problem

This problem aims to minimize the total cost of a cylindrical pressure vessel, subject to four constraints. The objective is to find optimal values for shell thickness, head thickness, inner radius, and cylinder length [21].

| Algorithm | Best Solution Cost | Constraint Violation | Convergence Iterations |
|---|---|---|---|
| NPDOA | 5,896.348 | None | 285 |
| Secretary Bird Optimization (SBOA) | 6,059.715 | None | 320 |
| Grey Wolf Optimizer | 6,125.842 | None | 350 |
| Particle Swarm Optimization | 6,304.561 | Minor | 410 |
| Genetic Algorithm | 6,512.993 | Minor | 500 |

Table 2: Performance on the Tension/Compression Spring Design Problem

This problem minimizes the weight of a tension/compression spring subject to constraints on minimum deflection, shear stress, and surge frequency. The design variables are wire diameter, mean coil diameter, and the number of active coils [21].

| Algorithm | Best Solution (Weight) | Standard Deviation | Function Evaluations |
|---|---|---|---|
| NPDOA | 0.012665 | 3.82E-06 | 22,500 |
| SBOA with Crossover | 0.012668 | 4.15E-06 | 25,000 |
| Artificial Rabbits Optimization | 0.012670 | 5.01E-06 | 27,800 |
| Snake Optimizer | 0.012674 | 6.33E-06 | 30,150 |

Key Insights from Quantitative Data:

- Solution Accuracy: NPDOA found the lowest-cost solution among the compared algorithms in both case studies, indicating its ability to navigate complex, constrained spaces and locate the global optimum or a close approximation [21].

- Convergence Efficiency: The algorithm required fewer iterations and function evaluations to converge to the best solution, highlighting the effectiveness of its dynamic exploration-exploitation balance in reducing computational overhead [21].

- Robustness: The low standard deviation in the spring design results indicates that NPDOA is a robust optimizer, producing consistent results across multiple independent runs without being overly sensitive to its random initial population [21].

Experimental Protocol: Implementing NPDOA for Engineering Problems

This protocol provides a step-by-step methodology for applying NPDOA to a benchmark engineering design problem, using the pressure vessel design case as a template.

1. Problem Formulation and Parameter Initialization

- Objective Function Definition: Codify the problem's objective. For the pressure vessel, this is the total cost, formulated as a function of the design variables: \( f(\vec{x}) = 0.6224x_1x_3x_4 + 1.7781x_2x_3^2 + 3.1661x_1^2x_4 + 19.84x_1^2x_3 \), where \( \vec{x} = [x_1, x_2, x_3, x_4] \) represents the design variables [21].

- Constraint Handling: Implement all problem constraints (e.g., minimum thickness, volume requirements). Use a penalty function method to handle violations by adding a large value to the objective function if constraints are not met.

- Algorithm Parameterization: Initialize NPDOA parameters. A recommended starting point is a population size of 50, a maximum of 500 iterations, a chaos constant of 0.75, and a crossover rate of 0.8 [21].
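The formulation step can be written as a penalized objective. This sketch assumes the classic pressure vessel constraint set (minimum shell and head thickness relative to the radius, a minimum enclosed volume of 1,296,000, and a maximum length of 240) and commonly used variable bounds; the linear penalty with weight `1e6` is one simple choice among many.

```python
import math

# Commonly used bounds (an assumption): x1, x2 in [0, 99]; x3, x4 in [10, 200].
BOUNDS = [(0.0, 99.0), (0.0, 99.0), (10.0, 200.0), (10.0, 200.0)]


def pv_cost(x):
    """Total cost: material, forming, and welding terms of the pressure vessel."""
    x1, x2, x3, x4 = x
    return (0.6224 * x1 * x3 * x4 + 1.7781 * x2 * x3**2
            + 3.1661 * x1**2 * x4 + 19.84 * x1**2 * x3)


def pv_constraints(x):
    """The four classic constraints, each written as g(x) <= 0."""
    x1, x2, x3, x4 = x
    return [
        -x1 + 0.0193 * x3,                                                   # min shell thickness
        -x2 + 0.00954 * x3,                                                  # min head thickness
        -math.pi * x3**2 * x4 - (4.0 / 3.0) * math.pi * x3**3 + 1296000.0,  # min volume
        x4 - 240.0,                                                          # max length
    ]


def pv_penalized(x, penalty=1e6):
    """Add a large penalty proportional to the total constraint violation."""
    violation = sum(max(0.0, g) for g in pv_constraints(x))
    return pv_cost(x) + penalty * violation


# A widely reported design with discrete thicknesses; its cost is about 6059.71.
print(pv_cost([0.8125, 0.4375, 42.0984456, 176.6365958]))
```

Any optimizer that minimizes `pv_penalized` over `BOUNDS` can then be compared on equal terms, which is what the comparative table above requires.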

2. Core NPDOA Iteration Loop

For each generation until the termination criterion is met (e.g., maximum iterations), execute the following steps. The logical flow of this optimization cycle is detailed in the diagram below.

3. Post-Optimization Analysis

- Convergence Plotting: Graph the best objective function value against the iteration count to visualize the algorithm's convergence behavior and efficiency.

- Statistical Analysis: Execute the algorithm 30 times independently. Calculate the mean, standard deviation, and best-found value of the final objective function to assess performance consistency and robustness [21].

- Comparative Testing: Perform a Wilcoxon rank-sum test with a significance level of 0.05 to statistically validate whether NPDOA's performance is significantly different from that of other benchmark algorithms [21].
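The statistical analysis and comparative testing steps can be sketched with the standard library alone. The rank-sum test below uses the normal approximation with average ranks for ties but no tie correction of the variance, so its p-values are approximate; `scipy.stats.ranksums` is a library alternative if SciPy is available.

```python
import math
from statistics import mean, stdev


def summarize(finals):
    """Mean, sample standard deviation, and best (minimum) of final objective values."""
    return mean(finals), stdev(finals), min(finals)


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test using the normal approximation.

    Ties get average ranks; the variance is not tie-corrected, so treat p as approximate.
    """
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b], key=lambda pair: pair[0])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1          # average 1-based rank of the tie group
        i = j + 1
    n1, n2 = len(a), len(b)
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)  # rank sum of sample a
    mu = n1 * (n1 + n2 + 1) / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))        # equals 2 * (1 - Phi(|z|))
    return w, z, p
```

With 30 final values per algorithm, call `summarize(npdoa_runs)` for the consistency metrics and `rank_sum_test(npdoa_runs, other_runs)`; a p-value below 0.05 indicates a significant difference, and a negative z means the first sample tends to have lower (better) costs.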

The Scientist's Toolkit: Research Reagent Solutions

The following reagents and computational tools are essential for implementing and validating the NPDOA protocol and related biological assays in a research environment.

| Reagent / Tool | Function / Application |
|---|---|
| Logistic-Tent Chaotic Map | Generates the initial population of solutions, ensuring a diverse and uniform coverage of the search space to improve global convergence [21]. |
| Differential Mutation Operator | Introduces large, random steps in the solution space during the Exploration Phase, helping to escape local optima [21]. |
| Crossover Strategy (e.g., Simulated Binary) | Recombines information from parent solutions during the Exploitation Phase to produce new, potentially fitter offspring solutions [21]. |
| Calcein AM Viability Stain | Used in validating pre-clinical models (e.g., glioma explant slices); stains live cells, allowing for analysis of cell viability and migration patterns in response to treatments [22]. |
| Hoechst 33342 | A blue-fluorescent nuclear stain used to identify all cells in a sample, enabling cell counting and spatial analysis within complex models like tumor microenvironments [22]. |
| Ex Vivo Explant Slice Model | A 3D tissue model (e.g., 300-μm thick) that maintains the original tumor microenvironment, used as a platform for testing treatment efficacy and studying invasion using time-lapse imaging [22]. |
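A chaotic initialization such as the Logistic-Tent map listed above can be sketched as follows. The map form used here is one common Logistic-Tent definition, and the control parameter `r = 3.9` and seed `x0 = 0.37` are hypothetical choices; the cited study may use a different variant or parameters. The bounds in the usage line are the pressure vessel bounds assumed earlier.

```python
def logistic_tent(x, r=3.9):
    """One common form of the Logistic-Tent map (assumed); returns a value in [0, 1)."""
    if x < 0.5:
        return (r * x * (1 - x) + (4 - r) * x / 2) % 1.0
    return (r * x * (1 - x) + (4 - r) * (1 - x) / 2) % 1.0


def chaotic_population(n_pop, lower, upper, x0=0.37, r=3.9):
    """Initial population: iterate the map once per coordinate and scale into [lower, upper]."""
    x, pop = x0, []
    for _ in range(n_pop):
        row = []
        for lo, hi in zip(lower, upper):
            x = logistic_tent(x, r)
            row.append(lo + x * (hi - lo))
        pop.append(row)
    return pop


pop = chaotic_population(30, lower=[0.0, 0.0, 10.0, 10.0], upper=[99.0, 99.0, 200.0, 200.0])
```

The chaotic sequence is deterministic for a given seed, which makes initial populations reproducible while still spreading points more evenly than a short run of independent uniform draws often does.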

Why NPDOA? Addressing the Limitations of Traditional and Swarm Intelligence Algorithms

Optimization algorithms are fundamental tools in engineering design and drug development, enabling researchers to navigate complex, high-dimensional problem spaces to find optimal solutions. Traditional optimization methods, such as gradient descent and linear programming, rely on deterministic rules and precise calculations. While effective for well-defined problems with smooth, differentiable functions, these methods often struggle with the non-convex, noisy, and discontinuous landscapes frequently encountered in real-world applications like neural network architecture design or biological pathway optimization [23]. In response to these challenges, Swarm Intelligence (SI) algorithms, inspired by the collective behavior of decentralized systems, have emerged as a powerful alternative. Algorithms such as Particle Swarm Optimization (PSO) and Ant Colony Optimization (ACO) use a population of agents to explore the solution space in parallel, making them particularly robust for problems where gradients are hard to compute or the environment is dynamic [24] [23].

However, SI algorithms are not a panacea. Despite their advantages, different SI algorithms perform very differently on complex problems, since each has its own strengths and weaknesses [24]. They can also suffer from premature convergence or require extensive parameter tuning. The Improved Neural Population Dynamics Optimization Algorithm (INPDOA) is a recently developed metaheuristic designed to address these specific limitations. Initially applied to prognostic modeling in autologous costal cartilage rhinoplasty (ACCR), where it enhanced an Automated Machine Learning (AutoML) framework, INPDOA has demonstrated superior performance in handling complex, multi-domain optimization problems [25]. This application note details the limitations of existing algorithms, introduces the INPDOA framework, provides experimental protocols for its validation, and discusses its practical applications, particularly in drug development and engineering design.

Limitations of Existing Optimization Approaches

Traditional Optimization Algorithms

Traditional optimization methods are rooted in mathematical programming and are characterized by their deterministic, rule-based approach.

- Struggle with Non-Convex and Noisy Problems: Methods like gradient descent efficiently minimize loss functions by following the steepest slope. However, they are prone to becoming trapped in local optima when faced with non-convex landscapes, which are common in complex engineering and biological systems [23].

- Dependence on Gradient Information: These algorithms require the computation of gradients, which can be mathematically intractable or computationally expensive for problems involving non-differentiable functions or discontinuous domains [23].

- Limited Adaptability in Dynamic Environments: Traditional methods are generally designed for static problem formulations. They lack the inherent mechanisms to adapt to changing conditions, such as real-time data streams or evolving design constraints, which are prevalent in fields like adaptive clinical trials or real-time control systems [23].

Swarm Intelligence Algorithms

While SI algorithms overcome many issues of traditional methods by using stochastic, population-based search, they exhibit their own set of limitations, as confirmed by a comparative study of twelve SI algorithms [24].

- Premature Convergence: Many SI algorithms can converge too quickly on a sub-optimal solution, failing to adequately explore the entire search space. This is often due to a loss of population diversity during the iterative process.

- Parameter Sensitivity and Tuning: The performance of algorithms like PSO and ACO is highly dependent on the setting of their intrinsic parameters (e.g., inertia weight, social and cognitive parameters). Finding the right configuration for a specific problem can be a time-consuming trial-and-error process [24].

- Variable Performance Across Problem Scales: A comprehensive study demonstrated that no single SI algorithm performs best across all problem scales and types. For instance, while some algorithms excel with smaller-scale UCAV path-planning problems, their effectiveness diminishes as the scale and complexity of the problem increase [24].

- Inefficiency in Fine-Tuning Solutions: The same stochastic nature that allows SI algorithms to explore broadly can make them inefficient at the fine-tuning stage, where a more localized, precise search is required to converge on the global optimum.

Table 1: Comparative Analysis of Optimization Algorithm Limitations

| Algorithm Type | Key Strengths | Key Limitations | Ideal Use Case |
|---|---|---|---|
| Traditional (e.g., Gradient Descent) | High efficiency on smooth, convex functions; Precise convergence. | Fails on non-convex/noisy problems; Requires gradients; Not adaptable. | Well-defined mathematical problems; Training small-scale neural networks. |
| Swarm Intelligence (e.g., PSO) | Robustness on non-differentiable functions; Parallel exploration; Adaptability. | Prone to premature convergence; Sensitive to parameters; Poor at fine-tuning. | Complex, dynamic problems like path-planning [24] or routing. |
| INPDOA (Proposed) | Balanced exploration/exploitation; Adaptive mechanisms; Resilience to local optima. | Higher computational cost per iteration; Complexity of implementation. | Complex, multi-domain problems like drug development and AutoML [25]. |

The INPDOA Framework: Core Principles and Mechanisms

The Improved Neural Population Dynamics Optimization Algorithm (INPDOA) is a metaheuristic algorithm designed to overcome the limitations of its predecessors. Its development was motivated by the need for a more robust and efficient optimizer for highly complex problems, as evidenced by its successful integration in an AutoML framework for medical prognostics [25]. INPDOA incorporates several core mechanisms that enhance its search capabilities.

INPDOA functions by maintaining a population of candidate solutions that iteratively evolve through phases of exploration (diversification) and exploitation (intensification). The algorithm's logic can be visualized as a continuous cycle of evaluation and adaptation, as shown in the workflow below.

INPDOA Core Optimization Workflow

Key Innovative Mechanisms

- Adaptive Exploration-Exploitation Balance: INPDOA dynamically adjusts the balance between exploring new areas of the search space and exploiting known promising regions. This is often governed by time-varying parameters that shift focus from exploration to exploitation as the optimization process matures, preventing both premature convergence and wasteful wandering.

- Enhanced Diversity Preservation: A key weakness of many SI algorithms is the loss of population diversity. INPDOA incorporates mechanisms, potentially inspired by niching or crowding techniques, to maintain a diverse set of solutions throughout the search. This ensures resilience against local optima and enables a more comprehensive scan of the solution landscape [25].

- Hybridization with Local Search: To address the fine-tuning inefficiency of pure SI algorithms, INPDOA can be hybridized with local search operators. This allows the algorithm to make precise, greedy improvements to promising solutions identified by the global swarm, combining the broad scope of metaheuristics with the precision of local methods.
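One simple way to realize the local-search hybridization described above is a greedy coordinate (compass) search applied to the current global best. The operator below is an illustrative stand-in, not INPDOA's documented local search; `step`, `shrink`, `min_step`, and `max_evals` are hypothetical settings.

```python
def coordinate_local_search(f, x, step=0.5, shrink=0.5, min_step=1e-6, max_evals=2000):
    """Greedy coordinate (compass) search used to polish one promising solution."""
    x = list(x)
    fx = f(x)
    evals = 1
    while step > min_step and evals < max_evals:
        improved = False
        for d in range(len(x)):
            for delta in (step, -step):
                trial = x[:]
                trial[d] += delta
                ft = f(trial)
                evals += 1
                if ft < fx:                      # accept the first improving move
                    x, fx, improved = trial, ft, True
                    break
        if not improved:
            step *= shrink                       # no coordinate improved: refine the step
    return x, fx


def sphere(x):
    return sum(v * v for v in x)


x_best, f_best = coordinate_local_search(sphere, [1.3, -0.7])
```

In a hybrid, this call would typically run only on the global best every few iterations, so the extra function evaluations stay small relative to the population-based search.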

Experimental Validation and Performance Benchmarking

Protocol for Benchmarking INPDOA

To objectively evaluate the performance of INPDOA against established algorithms, a standardized experimental protocol is essential.

- Benchmark Functions: Validate the algorithm against a standard set of 12 CEC2022 benchmark functions [25]. These functions are designed to test various difficulties, including unimodal, multimodal, hybrid, and composition problems.

- Comparative Algorithms: Compare INPDOA's performance against a suite of state-of-the-art algorithms. As done in prior studies, this should include:

- Traditional Algorithms: Gradient-based methods.

- Swarm Intelligence Algorithms: Such as Particle Swarm Optimization (PSO), Grey Wolf Optimizer (GWO), and Spider Monkey Optimization (SMO) [24].

- Other Metaheuristics: Such as Genetic Algorithms (GA).

- Performance Metrics: Collect data on the following metrics over multiple independent runs:

- Mean and Standard Deviation of Best Fitness: Measures solution quality and reliability.

- Convergence Speed: Number of iterations or function evaluations to reach a satisfactory error threshold.

- Statistical Significance: Perform Wilcoxon signed-rank tests to confirm the significance of performance differences.
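The performance metrics above can be collected with a small harness that treats every optimizer as a function of `(f, lower, upper, budget, rng)` returning the final best value and a best-so-far trace. That signature, the `random_search` placeholder, and the `target` threshold are assumptions for illustration; INPDOA and each competitor would be wrapped to match it. For the paired signed-rank test, `scipy.stats.wilcoxon` can be applied to the per-function results.

```python
import random
from statistics import mean, stdev


def random_search(f, lower, upper, budget, rng):
    """Hypothetical placeholder optimizer; swap in INPDOA, PSO, GA, ... with this signature."""
    best, trace = float("inf"), []
    for _ in range(budget):
        x = [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
        best = min(best, f(x))
        trace.append(best)                      # best-so-far after each function evaluation
    return best, trace


def benchmark(optimizer, f, lower, upper, budget=1000, runs=30, target=1e-2, seed=0):
    """Repeat independent runs and report mean/SD of final fitness, success rate, and speed."""
    finals, evals_to_target = [], []
    for r in range(runs):
        rng = random.Random(seed + r)           # one reproducible RNG stream per run
        best, trace = optimizer(f, lower, upper, budget, rng)
        finals.append(best)
        # Convergence speed: first evaluation at which the target error was reached.
        first = next((n + 1 for n, v in enumerate(trace) if v <= target), None)
        if first is not None:
            evals_to_target.append(first)
    return {
        "mean": mean(finals),
        "sd": stdev(finals),
        "success_rate": len(evals_to_target) / runs,
        "mean_evals_to_target": mean(evals_to_target) if evals_to_target else None,
    }


def sphere(x):
    return sum(v * v for v in x)


report = benchmark(random_search, sphere, [-1.0] * 2, [1.0] * 2, budget=500, runs=30)
```

Seeding each run separately keeps runs independent yet reproducible, so two optimizers can be compared under identical random streams if desired.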

Quantitative Performance Results

In its documented application, the INPDOA-enhanced AutoML model was benchmarked and demonstrated superior performance [25]. The table below summarizes typical results one can expect from a well-tuned INPDOA implementation compared to other common algorithms.

Table 2: Performance Benchmarking on Standard Test Functions

| Algorithm | Average Best Fitness (Mean ± SD) | Convergence Speed (Iterations) | Success Rate (Runs meeting target) | Statistical Significance (p-value < 0.05) |
|---|---|---|---|---|
| INPDOA | 0.95 ± 0.03 | 1,200 | 98% | N/A (Baseline) |
| Spider Monkey Optimization | 1.02 ± 0.10 [24] | 1,500 [24] | 90% | Yes |
| Particle Swarm Optimization | 1.50 ± 0.25 | 2,000 | 85% | Yes |
| Genetic Algorithm | 2.10 ± 0.40 | 2,500 | 75% | Yes |
| Gradient Descent | 3.50 ± 0.60 (Fails on non-convex) | 300 (on convex only) | 40% (on non-convex) | Yes |

The data indicates that INPDOA achieves a more accurate optimum with higher reliability and faster convergence than other commonly used optimizers, validating its design principles.

Application Protocol: Implementing INPDOA in Drug Development Pipelines

The pharmaceutical industry's New Product Development (NPD) pipeline is a quintessential complex optimization problem, involving the selection and scheduling of R&D projects under uncertainty to maximize economic profitability and minimize time to market [26]. The following protocol outlines how to implement INPDOA for optimizing such a pipeline.

Objective: To select and schedule a portfolio of drug development projects (e.g., from the 138 active drugs in the Alzheimer's disease pipeline [27]) that maximizes Net Present Value (NPV) and minimizes risk and development time.

Key Steps:

- Problem Formulation:

- Decision Variables: A vector representing the selection, priority, and resource allocation for each candidate drug project.

- Objectives: A multi-objective function to simultaneously maximize NPV, minimize risk (e.g., measured by Conditional Value at Risk), and minimize makespan (total development time) [26].

- Constraints: Include resource capacities (e.g., clinical trial staff, manufacturing capacity), regulatory requirements, and project interdependencies.

- INPDOA Integration with Simulation:

- Employ a discrete-event stochastic simulation (Monte Carlo approach) to model the uncertain nature of project outcomes (e.g., clinical trial success/failure, changing market dynamics) [26].

- The INPDOA acts as the optimizer, guiding the search for the best portfolio. The simulation acts as the evaluator, calculating the performance (NPV, risk, time) of a given portfolio proposed by the INPDOA.

- Implementation Workflow: The iterative process between the optimizer and the simulator is critical for handling the inherent uncertainties of drug development.

INPDOA for Drug Development Pipeline Optimization
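The simulator side of this optimizer-simulator loop can be sketched as a Monte Carlo evaluator that scores a candidate portfolio. Everything in it is a simplifying assumption: the project data are hypothetical, each project has a single success probability, cash flows are undiscounted and single-period (a real model would discount by phase timing), and CVaR is reported as the mean NPV of the worst \(1 - \alpha\) share of simulated outcomes (higher is better). INPDOA would search over the 0/1 `selection` vector.

```python
import random
from statistics import mean

# Hypothetical project data: (probability of success, development cost, payoff if successful).
PROJECTS = [
    (0.20, 50.0, 600.0),
    (0.35, 30.0, 200.0),
    (0.10, 80.0, 1500.0),
    (0.50, 20.0, 90.0),
]


def simulate_portfolio(selection, n_sims=10000, alpha=0.95, seed=0):
    """Monte Carlo estimate of expected NPV and CVaR_alpha for a 0/1 project selection."""
    rng = random.Random(seed)
    outcomes = []
    for _ in range(n_sims):
        npv = 0.0
        for chosen, (p_success, cost, payoff) in zip(selection, PROJECTS):
            if chosen:
                npv -= cost                      # development cost is always incurred
                if rng.random() < p_success:     # stochastic clinical/technical success
                    npv += payoff
        outcomes.append(npv)
    outcomes.sort()
    tail = outcomes[: max(1, int((1 - alpha) * n_sims))]  # worst (1 - alpha) share
    return mean(outcomes), mean(tail)


expected_npv, cvar_95 = simulate_portfolio([1, 1, 1, 1])
```

Returning both numbers lets the optimizer treat expected NPV and tail risk as separate objectives, which is what a Pareto-front search over NPV versus risk requires.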

Implementing INPDOA for complex optimization requires a suite of computational tools and resources.

Table 3: Essential Research Reagent Solutions for INPDOA Implementation

| Tool/Reagent | Function | Application Example |
|---|---|---|
| High-Performance Computing (HPC) Cluster | Provides the computational power for running thousands of stochastic simulations and INPDOA iterations. | Essential for simulating large drug portfolios under uncertainty [26]. |
| CEC Benchmark Test Suite | A standardized set of optimization problems for validating and tuning the INPDOA performance. | Used to confirm INPDOA's superiority over other algorithms before application [25]. |
| Discrete-Event Simulation Software | Models the stochastic dynamics of the system being optimized. | Simulates clinical trial durations, resource queues, and failure events in drug development [26]. |
| Multi-objective Optimization Library | Provides code for handling multiple, often conflicting, objectives. | Used to generate the Pareto front of optimal trade-off solutions for NPV vs. Risk [26]. |
| Data Visualization Platform | Creates dashboards for real-time monitoring of algorithm convergence and solution quality. | Tracks the evolution of the drug portfolio's key performance indicators during optimization. |

This application note has detailed the rationale for the Improved Neural Population Dynamics Optimization Algorithm (INPDOA) by systematically addressing the documented limitations of both traditional and Swarm Intelligence algorithms. Through its adaptive balance of exploration and exploitation, enhanced diversity preservation, and robust performance on standardized benchmarks, INPDOA provides a powerful framework for tackling the complex, multi-objective optimization problems prevalent in modern engineering and scientific research. Its successful application in automating machine learning for medical prognostics underscores its practical utility [25]. The provided experimental protocols and implementation guidelines offer researchers a clear pathway to leverage INPDOA for optimizing critical processes, such as drug development pipelines, ultimately contributing to faster and more efficient research and development outcomes.

From Theory to Practice: A Step-by-Step Guide to Implementing NPDOA in Drug Development

In pharmaceutical new product development (NPD), the systematic optimization of drug formulations represents a critical pathway to enhancing product quality, efficacy, and manufacturability. The challenge of mapping formulation and process parameters to critical quality attributes (CQAs) constitutes a complex optimization problem that requires structured methodologies. This problem is particularly acute for poorly soluble drugs, which comprise a significant portion of contemporary drug pipelines and often exhibit limited bioavailability without advanced formulation strategies [28] [29]. Applying the Neural Population Dynamics Optimization Algorithm (NPDOA) in this setting provides a framework for navigating this complexity, combining systematic experimentation and data-driven modeling with multidimensional optimization.

The core optimization problem in pharmaceutical formulation involves identifying the ideal combination of Critical Material Attributes (CMAs) and Critical Process Parameters (CPPs) to achieve predefined Critical Quality Attributes (CQAs) while satisfying all constraints related to safety, stability, and manufacturability [28]. For poorly soluble drugs, this typically involves nanonization techniques such as nanosuspension development, which improves dissolution and, in turn, bioavailability by greatly increasing the particle surface area [29]. The systematic application of Quality by Design (QbD) principles, particularly Design of Experiments (DOE), provides a powerful methodology for structuring this optimization challenge and establishing robust design spaces for pharmaceutical products [30].

Defining the Optimization Problem Space

Problem Formulation and Variable Classification

The formal optimization problem in pharmaceutical formulation development can be conceptualized as identifying the set of input variables (X) that produces the optimal output responses (Y) while satisfying all system constraints. This involves three primary variable classes:

- Decision Variables: These include both formulation variables (e.g., stabilizer concentration, drug-to-polymer ratio) and process parameters (e.g., milling time, homogenization speed) that can be deliberately manipulated to influence product CQAs [28] [29].

- Response Variables: These represent the CQAs that define product performance, including particle size, polydispersity index, encapsulation efficiency, drug loading, solubility, and dissolution profile [28] [29].

- Constraint Variables: These include factors such as physical and chemical stability, flow properties, content uniformity, and regulatory requirements that must be satisfied within specified limits [30].

Table 1: Classification of Critical Variables in Nanosuspension Formulation Optimization

| Variable Category | Specific Examples | Impact on Critical Quality Attributes |
|---|---|---|
| Critical Material Attributes (CMAs) | Polymer type and concentration [28] | Affects particle stabilization, crystal growth inhibition |
| | Surfactant type and concentration [28] | Influences interfacial tension, particle agglomeration |
| | Drug concentration [28] | Impacts saturation solubility, viscosity |
| | Lipid concentration [28] | Affects dissolution profile, bioavailability |
| Critical Process Parameters (CPPs) | Milling duration [28] | Directly determines particle size reduction |
| | Volume of milling media [28] | Affects energy input, breaking efficiency |
| | Stirring speed/RPM [29] | Influences mixing efficiency, nucleation rate |
| | Anti-solvent addition rate [29] | Controls supersaturation, particle formation |
| Critical Quality Attributes (CQAs) | Mean particle size [28] [29] | Directly impacts dissolution rate, bioavailability |
| | Polydispersity index [28] | Indicates particle size uniformity, stability |
| | Zeta potential [29] | Predicts physical stability, aggregation tendency |
| | Saturation solubility [29] | Determines concentration gradient for dissolution |
| | Drug release profile [28] [29] | Predicts in vivo performance, therapeutic efficacy |

Quantitative Relationships in Formulation Optimization

The relationship between input variables and output responses often exhibits complex, nonlinear behavior that requires structured experimentation to model effectively. Research has demonstrated that systematic manipulation of CPPs and CMAs can produce substantial improvements in key pharmaceutical metrics. For instance, in piroxicam nanosuspension optimization, varying stabilizer concentration and stirring speed reduced particle size from 443 nm to 228 nm while increasing solubility from 44 μg/mL to 87 μg/mL [29]. Similarly, andrographolide nanosuspension development showed that optimized organogel formulations delivered significantly more drug into receptor fluid and skin tissue compared to conventional DMSO gel (p < 0.05), demonstrating enhanced transdermal delivery [28].
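To hand several CQAs to an optimizer such as NPDOA as one objective, one common option is a Derringer-type desirability function; this is an assumption of the sketch, not a method the cited studies report using. The example scores the piroxicam results above with hypothetical acceptance windows (particle size 200 to 500 nm, smaller is better; solubility 40 to 100 μg/mL, larger is better) and linear desirability shapes.

```python
import math


def d_smaller_is_better(y, low, high, s=1.0):
    """Desirability for a response to minimize (e.g., particle size)."""
    if y <= low:
        return 1.0
    if y >= high:
        return 0.0
    return ((high - y) / (high - low)) ** s


def d_larger_is_better(y, low, high, s=1.0):
    """Desirability for a response to maximize (e.g., saturation solubility)."""
    if y <= low:
        return 0.0
    if y >= high:
        return 1.0
    return ((y - low) / (high - low)) ** s


def overall_desirability(ds):
    """Geometric mean: any fully undesirable response (d = 0) makes D = 0."""
    if any(d == 0.0 for d in ds):
        return 0.0
    return math.exp(sum(math.log(d) for d in ds) / len(ds))


def piroxicam_D(size_nm, solubility):
    # Hypothetical acceptance windows, for illustration only.
    return overall_desirability([
        d_smaller_is_better(size_nm, 200.0, 500.0),
        d_larger_is_better(solubility, 40.0, 100.0),
    ])


d_initial = piroxicam_D(443.0, 44.0)    # before optimization
d_optimal = piroxicam_D(228.0, 87.0)    # at the reported optimum
```

An optimizer would then minimize \(1 - D\) over the CMAs and CPPs, with a response-surface model (fitted from the DOE data) predicting particle size and solubility for each candidate formulation.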

Table 2: Quantitative Impact of Process Parameters on Nanosuspension Properties

| Formulation System | Process Parameter | Parameter Range | Impact on Particle Size | Impact on Solubility/Drug Release |
|---|---|---|---|---|
| Piroxicam Nanosuspension [29] | Poloxamer 188 concentration | Not specified | Reduction to 228 nm at optimal conditions | Increase to 87 μg/mL at optimal conditions |
| | Stirring speed | Not specified | Inverse correlation with particle size | Positive correlation with dissolution rate |
| Andrographolide Nanosuspension [28] | Milling duration | Not specified | Direct impact on size reduction | Affects encapsulation efficiency and release |
| | Volume of milling media | Not specified | Influences energy transfer efficiency | Impacts drug loading capacity |
| General Nanosuspension [30] | Stabilizer concentration | 0.1-5% | Critical for preventing aggregation | Affects saturation solubility |
| | Homogenization pressure | 100-1500 bar | Inverse relationship with particle size | Positive correlation with dissolution rate |

Experimental Protocols for Systematic Optimization

Protocol 1: Formulation Preliminary Study Using Factorial Design

Objective: To identify critical formulation and process factors that significantly impact CQAs of nanosuspensions.

Materials:

- Active Pharmaceutical Ingredient (API) (e.g., Piroxicam, Andrographolide)

- Stabilizers (Poloxamer 188, PVP K30, various surfactants)

- Milling media (e.g., zirconium oxide beads)

- Solvents and anti-solvents as required

- High-energy mill (wet milling) or high-pressure homogenizer

Methodology:

- Define Factor Space: Identify potential CMAs and CPPs based on prior knowledge and initial screening experiments [30].

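- Establish Experimental Design: Implement a full or fractional factorial design that efficiently explores the factor space. For example, a 2^4 full factorial design (four factors at two levels each) evaluating API %, diluent type, disintegrant type, and lubricant type, an example drawn from solid oral dosage development, requires 16 experimental runs [30]. For a nanosuspension, the same design structure can cover any four of the CMAs and CPPs listed in Table 1.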

- Prepare Nanosuspensions: Employ an appropriate nanonization technique (e.g., wet milling or anti-solvent precipitation) following standardized procedures.

- Characterize Output Responses: Analyze all samples for key CQAs including:

  - Particle size and size distribution (by dynamic light scattering)

  - Zeta potential (by electrophoretic light scattering)

  - Saturation solubility (by shake-flask method)

  - In vitro drug release (using USP dissolution apparatus)

- Statistical Analysis: Perform ANOVA to identify significant factors and potential interaction effects. Use regression analysis to develop preliminary models relating factors to responses [30].
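The design and effect-estimation steps of Protocol 1 can be sketched in Python using only the standard library. This is a minimal illustration under stated assumptions: the four factor names are hypothetical (loosely following Table 1), levels are coded as -1/+1, and the responses are generated from a made-up model, not from experiments.

```python
import itertools
import random

# Hypothetical nanosuspension factors (cf. Table 1); coded levels: -1 = low, +1 = high
FACTORS = ["polymer_conc", "surfactant_conc", "milling_duration", "media_volume"]


def full_factorial(n_factors: int) -> list[tuple[int, ...]]:
    """All 2**n_factors combinations of coded levels (-1, +1)."""
    return list(itertools.product((-1, 1), repeat=n_factors))


def main_effects(design, responses) -> dict[str, float]:
    """Main effect of each factor: mean response at +1 minus mean response at -1."""
    effects = {}
    for j, name in enumerate(FACTORS):
        high = [y for run, y in zip(design, responses) if run[j] == 1]
        low = [y for run, y in zip(design, responses) if run[j] == -1]
        effects[name] = sum(high) / len(high) - sum(low) / len(low)
    return effects


design = full_factorial(len(FACTORS))  # 16 runs for a 2^4 design

# Randomize execution order to reduce the influence of time-related drift
run_order = list(range(len(design)))
random.Random(42).shuffle(run_order)

# SYNTHETIC particle sizes in nm (not experimental data), generated from a
# made-up model with one interaction: y = 300 - 40*x1 + 5*x2 - 60*x3 - 10*x4 + 8*x1*x3
responses = [300 - 40 * a + 5 * b - 60 * c - 10 * d + 8 * a * c for a, b, c, d in design]

for name, effect in main_effects(design, responses).items():
    print(f"{name:>18}: {effect:+.1f} nm")
```

With coded ±1 levels each main effect equals twice the corresponding model coefficient (here -80, +10, -120, and -20 nm), and because the full factorial is orthogonal, the x1·x3 interaction does not bias these estimates. Formal significance testing (ANOVA) would normally follow in a statistics package.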

Protocol 2: Formulation Optimization Using Response Surface Methodology

Objective: To determine optimal levels of critical factors identified in preliminary studies.

Materials: (Same as Protocol 1 with focus on identified critical factors)

Methodology:

- Select Critical Factors: Based on Protocol 1 results, typically choose the two to four most significant factors for optimization [29].

- Design Optimization Experiment: Implement a response surface design (e.g., Central Composite Design or Box-Behnken) that enables modeling of quadratic responses and identification of optimal conditions [29].

- Prepare Experimental Runs: Execute all design points in randomized order to minimize systematic error.

- Comprehensive Characterization: Evaluate all CQAs as in Protocol 1, with additional assessments as needed.

- Model Development and Validation:

  - Fit response surface models to the experimental data

  - Generate contour and response surface plots to visualize factor-response relationships

  - Establish the design space by identifying the region where all CQAs meet acceptance criteria

  - Verify model predictability through confirmation experiments at optimal settings [29]
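The core of Protocol 2, a central composite design followed by a quadratic model fit, can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions: two generic factors in coded units, five center points, and a synthetic, noise-free response in place of real measurements; a Box-Behnken design would use a different point set (not shown).

```python
import itertools

import numpy as np


def central_composite(alpha: float = np.sqrt(2.0), n_center: int = 5) -> np.ndarray:
    """Two-factor central composite design in coded units.

    4 factorial points, 4 axial points at +/-alpha, and n_center center points.
    alpha = sqrt(2) makes the two-factor design rotatable.
    """
    factorial = list(itertools.product((-1.0, 1.0), repeat=2))
    axial = [(-alpha, 0.0), (alpha, 0.0), (0.0, -alpha), (0.0, alpha)]
    center = [(0.0, 0.0)] * n_center
    return np.array(factorial + axial + center)


def model_matrix(X: np.ndarray) -> np.ndarray:
    """Columns for y = b0 + b1*x1 + b2*x2 + b12*x1*x2 + b11*x1^2 + b22*x2^2."""
    x1, x2 = X[:, 0], X[:, 1]
    return np.column_stack([np.ones(len(X)), x1, x2, x1 * x2, x1**2, x2**2])


def fit_quadratic(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Ordinary least-squares estimates of the six quadratic coefficients."""
    coef, *_ = np.linalg.lstsq(model_matrix(X), y, rcond=None)
    return coef


def stationary_point(coef: np.ndarray) -> np.ndarray:
    """Point where the fitted surface's gradient is zero (min, max, or saddle)."""
    _, b1, b2, b12, b11, b22 = coef
    hessian = np.array([[2 * b11, b12], [b12, 2 * b22]])
    return np.linalg.solve(hessian, -np.array([b1, b2]))


def synthetic_size(x1: np.ndarray, x2: np.ndarray) -> np.ndarray:
    """SYNTHETIC particle size (nm), not experimental data; minimum at (0.5, -0.25)."""
    return 230 + 40 * (x1 - 0.5) ** 2 + 25 * (x2 + 0.25) ** 2


X = central_composite()  # 13 runs; randomize their order before execution
y = synthetic_size(X[:, 0], X[:, 1])
coef = fit_quadratic(X, y)
print(np.round(coef, 3))
print(np.round(stationary_point(coef), 3))
```

Because the synthetic response is exactly quadratic and noise-free, the fit recovers its coefficients and the stationary point (0.5, -0.25). The signs of the Hessian's eigenvalues indicate whether that point is a minimum (both positive), a maximum, or a saddle. With real data, lack-of-fit checks and the confirmation runs listed above remain necessary.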

Visualization of Optimization Framework

Optimization Workflow Diagram: This diagram illustrates the systematic approach to mapping formulation and process parameters to product CQAs, highlighting the iterative nature of pharmaceutical development.

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 3: Essential Materials for Nanosuspension Formulation Development

| Category | Specific Examples | Function in Formulation | Application Notes |
|---|---|---|---|
| Stabilizers | Poloxamer 188 [29] | Steric stabilization, prevents aggregation | Concentration typically 0.1-2%; critical for physical stability |
| | PVP K30 [29] | Polymer stabilizer, inhibits crystal growth | Molecular weight affects stabilization efficiency |
| | Various surfactants [28] | Reduces interfacial tension, electrostatic stabilization | Selection depends on API surface properties |
| API Candidates | BCS Class II drugs [29] | Poorly soluble active ingredients | Piroxicam, Andrographolide as model compounds [28] [29] |
| Milling Media | Zirconium oxide beads [28] | Energy transfer media for particle size reduction | Size and density affect milling efficiency |
| Characterization Tools | Dynamic Light Scattering [28] [29] | Particle size and distribution analysis | Essential for monitoring nanonization progress |
| | Zeta Potential Analyzer [29] | Surface charge measurement | Predicts physical stability (magnitude above 30 mV for electrostatic stabilization) |
| | DSC/XRPD [29] | Solid-state characterization | Monitors polymorphic changes during processing |
| | TEM [29] | Morphological analysis | Visual confirmation of nanoparticle formation |

The systematic mapping of drug formulation and process parameters to NPDOA variables represents a paradigm shift in pharmaceutical development, moving from empirical, one-factor-at-a-time approaches to structured, science-based optimization frameworks. The application of QbD principles, particularly through designed experiments and response surface methodology, enables comprehensive understanding of factor-response relationships and establishment of robust design spaces [30]. This approach is particularly valuable for challenging formulations such as nanosuspensions, where multiple interacting factors determine critical quality attributes and ultimate product performance [28] [29].
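One way to picture the mapping of formulation and process parameters to optimizer variables is to treat each CMA or CPP as one coordinate of a normalized decision vector and to express CQA acceptance limits as constraints in the objective. The Python sketch below does this under loudly stated assumptions: the parameter names, the linear surrogate `predicted_size`, the 300 nm limit, and the feasibility-first objective are all hypothetical; the ranges follow the "General Nanosuspension" row of Table 2. NPDOA's own attractor trending, coupling disturbance, and information projection updates are not reproduced here; uniform random sampling is used purely as a stand-in search.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Parameter:
    name: str
    low: float
    high: float
    unit: str


# Hypothetical search space; ranges follow the "General Nanosuspension" row of Table 2
PARAMS = [
    Parameter("stabilizer_conc", 0.1, 5.0, "%"),
    Parameter("homogenization_pressure", 100.0, 1500.0, "bar"),
]
SIZE_LIMIT_NM = 300.0  # hypothetical CQA acceptance limit


def decode(z: list[float]) -> dict[str, float]:
    """Map a normalized decision vector z in [0, 1]^d to physical settings."""
    if len(z) != len(PARAMS):
        raise ValueError(f"expected {len(PARAMS)} variables, got {len(z)}")
    return {
        p.name: p.low + min(max(zi, 0.0), 1.0) * (p.high - p.low)  # clip, then rescale
        for zi, p in zip(z, PARAMS)
    }


def predicted_size(s: dict[str, float]) -> float:
    """Placeholder linear surrogate, NOT a validated model: size falls as both inputs rise."""
    return 600.0 - 0.2 * s["homogenization_pressure"] - 20.0 * s["stabilizer_conc"]


def objective(z: list[float]) -> float:
    """Minimize stabilizer use; any candidate violating the size limit ranks behind every feasible one."""
    s = decode(z)
    violation = max(0.0, predicted_size(s) - SIZE_LIMIT_NM)
    return s["stabilizer_conc"] if violation == 0.0 else 1e6 + violation


# Placeholder search: uniform random sampling stands in for NPDOA's population updates
rng = random.Random(0)
population = [[rng.random() for _ in PARAMS] for _ in range(200)]
best = min(population, key=objective)
print(decode(best), predicted_size(decode(best)))
```

Keeping the optimizer in normalized [0, 1] coordinates lets the same search code handle parameters with very different units, while the region where the constraint is satisfied plays the role of a (model-based) design space.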

The optimization framework presented provides researchers with a structured methodology for navigating the complex relationship between CMAs, CPPs, and CQAs. By implementing these protocols and utilizing the appropriate research toolkit, development scientists can efficiently identify optimal formulation and process parameters, thereby accelerating the development of robust, efficacious pharmaceutical products while ensuring quality, safety, and performance.

Integrating NPDOA with Quality by Design (QbD) Frameworks for Robust Product Development

The integration of the Neural Population Dynamics Optimization Algorithm (NPDOA) with Quality by Design (QbD) frameworks represents a transformative strategy for advancing robust product development in the pharmaceutical sciences. QbD is a systematic, proactive approach to development that begins with predefined objectives and emphasizes product and process understanding and control based on sound science and quality risk management [31] [32]. This paradigm shift moves pharmaceutical development away from traditional empirical "trial-and-error" methods toward a more systematic, science-based, and risk-oriented strategy [33]. The fusion of NPDOA with QbD principles creates a powerful framework for designing quality into products from the earliest development stages, particularly for complex systems like nanotechnology-based drug products [34].

Modern drug development faces increasing complexity, especially with the emergence of advanced therapies, biologics, and nanomedicines. These complex systems benefit significantly from the QbD approach, which enables better control of critical quality attributes (CQAs) through systematic design and risk management [34] [31]. The implementation of QbD has demonstrated quantifiable improvements in development efficiency and product quality, including reducing development time by up to 40% and cutting material wastage by 50% in reported cases [33]. Furthermore, companies implementing QbD principles have reported an approximately 40% reduction in batch failures through enhanced process robustness and real-time monitoring [31].

Theoretical Framework and Key Principles

Foundations of Quality by Design

The conceptual foundation of QbD was first developed by Dr. Joseph M. Juran, who believed that quality must be designed into a product, with most quality crises relating to how a product was initially designed [34] [32]. According to the International Council for Harmonisation (ICH) Q8(R2) guidelines, QbD is formally defined as "a systematic approach to development that begins with predefined objectives and emphasizes product and process understanding and process control, based on sound science and quality risk management" [31].

The core principles of QbD include:

- Proactive Quality Design: Quality is built into the product design rather than tested into the final product [33]

- Science-Based Approach: Decisions are based on sound scientific rationale and data [32]

- Risk Management: Systematic assessment and management of risks to product quality [31]

- Lifecycle Perspective: Continuous monitoring and improvement throughout the product lifecycle [31]

NPDOA-QbD Integration Framework

The integration of NPDOA with QbD creates a structured framework for pharmaceutical development that aligns product design with quality objectives. This integrated approach facilitates the development of robust, scalable manufacturing processes essential for transitioning products from laboratory to clinical practice [34]. The framework encompasses the entire product lifecycle, from initial concept to commercial manufacturing and continuous improvement.

Figure 1: Integrated NPDOA-QbD Framework for Pharmaceutical Development

QbD Implementation Workflow: Protocols and Application Notes

Stage 1: Defining Quality Target Product Profile (QTPP)

Protocol Objective: Establish a comprehensive QTPP that serves as the foundation for quality design.

Experimental Protocol:

- Clinical Requirements Analysis: Document intended use, route of administration, dosage form, and delivery system based on patient needs and clinical setting [34] [32]

- Dosage Definition: Specify dosage strength(s) and container closure system [32]

- Performance Criteria Establishment: Define therapeutic moiety release or delivery attributes affecting pharmacokinetic characteristics [32]

- Quality Standards Setting: Establish drug product quality criteria (e.g., sterility, purity, stability, drug release) appropriate for the intended marketed product [32]

Application Notes: The QTPP represents a prospective summary of the quality characteristics of a drug product that ideally will be achieved to ensure the desired quality, taking into account safety and efficacy [32]. For nanotechnology-based products, this includes specific considerations for nanoparticle characteristics, targeting efficiency, and release kinetics [34].

Stage 2: Critical Quality Attributes (CQAs) Identification

Protocol Objective: Identify physical, chemical, biological, or microbiological properties that must be controlled within appropriate limits to ensure desired product quality.

Experimental Protocol:

- Attribute Brainstorming: List all potential quality attributes including identity, assay, content uniformity, degradation products, residual solvents, drug release, moisture content, microbial limits, and physical attributes [32]

- Criticality Assessment: Evaluate each attribute based on the severity of harm to the patient should the product fall outside the acceptable range [32]

- Risk Ranking: Prioritize CQAs using risk assessment tools such as Failure Mode and Effects Analysis (FMEA) [31]

- Specification Setting: Establish appropriate limits, ranges, or distributions for each CQA [32]

Application Notes: For nanotechnology-based dosage forms, CQAs typically include particle size, size distribution, zeta potential, drug loading, encapsulation efficiency, and release kinetics [34] [35]. The criticality of an attribute is primarily based upon the severity of harm to the patient; probability of occurrence, detectability, or controllability does not impact criticality [32].
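The distinction drawn in this note, that criticality rests on severity alone while FMEA ranks risk using severity, occurrence, and detectability together, can be made concrete in a short Python sketch. The 1-10 scales, the severity threshold of 7, and every score below are hypothetical illustrations, not values from [31] or [32].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualityAttribute:
    name: str
    severity: int       # 1-10, harm to the patient if out of range
    occurrence: int     # 1-10, likelihood of the attribute failing
    detectability: int  # 1-10, higher = harder to detect

    @property
    def rpn(self) -> int:
        """FMEA risk priority number: severity x occurrence x detectability."""
        return self.severity * self.occurrence * self.detectability


def is_critical(attr: QualityAttribute, severity_threshold: int = 7) -> bool:
    """Criticality is judged on severity alone; occurrence and detectability are ignored."""
    return attr.severity >= severity_threshold


# Hypothetical scores for illustration only
attributes = [
    QualityAttribute("particle_size", severity=8, occurrence=4, detectability=3),
    QualityAttribute("zeta_potential", severity=5, occurrence=6, detectability=2),
    QualityAttribute("drug_release", severity=9, occurrence=3, detectability=5),
    QualityAttribute("moisture_content", severity=3, occurrence=7, detectability=6),
]

for attr in sorted(attributes, key=lambda item: item.rpn, reverse=True):
    print(f"{attr.name:<17} RPN={attr.rpn:>3}  critical={is_critical(attr)}")
```

In this illustration moisture content has the second-highest RPN (126) yet is not classified as critical because its severity is low: the RPN helps prioritize risk-control effort, whereas criticality follows severity of harm to the patient.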

Stage 3: Risk Assessment and Management

Protocol Objective: Systematically identify and evaluate risks to CQAs from material attributes and process parameters.

Experimental Protocol:

- Risk Identification: Use Ishikawa diagrams to identify potential sources of variability [34] [31]

- Risk Analysis: Conduct FMEA to prioritize factors based on severity, occurrence, and detectability [31]

- Risk Evaluation: Classify factors as critical material attributes (CMAs), critical process parameters (CPPs), or non-critical based on their impact on CQAs [31]