NPDOA Parameter Tuning Guidelines: Optimizing Neural Population Dynamics for Drug Development

Abstract

This article provides comprehensive guidelines for tuning the Neural Population Dynamics Optimization Algorithm (NPDOA), a metaheuristic algorithm inspired by neural population dynamics during cognitive activities. Tailored for researchers, scientists, and drug development professionals, it covers the foundational principles of NPDOA, detailed methodologies for parameter configuration and application in biomedical contexts such as AutoML for prognostic modeling, strategies for troubleshooting common issues like local optima entrapment, and rigorous validation techniques against established benchmarks. The goal is to equip practitioners with the knowledge to effectively leverage NPDOA for enhancing optimization tasks in clinical pharmacology and oncology drug development, ultimately contributing to more efficient and robust drug discovery and dosage optimization processes.

Understanding NPDOA: Core Principles and Relevance to Biomedical Optimization

The Neural Population Dynamics Optimization Algorithm (NPDOA) is a metaheuristic optimization algorithm that models the dynamics of neural populations during cognitive activities [1]. As a recently proposed brain-inspired algorithm, it belongs to the broader family of population-based optimization methods. Its foundational metaphor draws on neuroscientific principles: it simulates how groups of neurons interact and process information to settle into favorable states. Evolutionary and swarm-intelligence algorithms draw on natural selection or collective animal behavior. NPDOA is distinguished from them by taking its inspiration from computation in biological neural systems.

NPDOA simulates two fundamental processes observed in neural populations: convergence toward attractor states and divergence through coupling with other neural groups [1]. The attractor trending strategy drives each neural population toward good decisions and provides the algorithm's exploitation ability. Divergence from the attractor through coupling with other neural populations supplies its exploration capability. A third mechanism, the information projection strategy, controls communication between neural populations and thereby governs the transition from exploration to exploitation. Balancing local refinement and global search in this way is intended to help NPDOA navigate complex solution spaces while reducing the risk of premature convergence to local optima.

Quantitative Performance Analysis

Table 1: NPDOA Performance on Benchmark Functions

| Benchmark Suite | Dimension | Performance Metric | Comparative Algorithms | Result |
|---|---|---|---|---|
| CEC 2022 (INPDOA) [2] | Not Specified | Optimization Accuracy | Traditional ML Algorithms | Outperformed |
| CEC 2017 [1] | 30, 50, 100 | Friedman Ranking | 9 State-of-the-Art Algorithms | Competitive |
| General Evaluation [1] | Multiple | Convergence Speed | Various Metaheuristics | High Efficiency |
| General Evaluation [1] | Multiple | Solution Accuracy | Various Metaheuristics | Reliable Solutions |

Table 2: Application Performance in Real-World Scenarios

| Application Domain | Specific Problem | Performance Outcome | Key Advantage |
|---|---|---|---|
| Surgical Prognostics [2] | ACCR Prognostic Modeling | AUC: 0.867, R²: 0.862 | Superior to traditional algorithms |
| Engineering Design [1] | Multiple Problems | Optimal Solutions | Practical effectiveness |
| UAV Path Planning [3] | Real-environment Planning | Improved Results | Successful application |

Experimental Protocols and Implementation Guidelines

Benchmarking Protocol

The standard experimental protocol for validating NPDOA performance relies on established benchmark suites. Researchers should follow the methodology below to obtain comparable and reproducible results. First, select benchmark functions from standardized test suites, primarily CEC 2017 and CEC 2022, which provide optimization landscapes of varying complexity and modality [1]. CEC 2022 was also used to validate an improved version of NPDOA (INPDOA), which was tested on the 12 CEC 2022 benchmark functions [2].

For experimental setup, configure algorithm parameters including population size, maximum iterations, and termination criteria. The population size typically ranges from 30 to 100 individuals, while maximum iterations depend on problem complexity and computational budget. Execute multiple independent runs (typically 30) to account for stochastic variations in algorithm performance. During execution, track key performance indicators including convergence speed, solution accuracy, and computational efficiency. For comparative analysis, include state-of-the-art algorithms such as the Power Method Algorithm (PMA), improved red-tailed hawk algorithm (IRTH), and other recent metaheuristics [1] [3]. Finally, apply statistical tests including Wilcoxon rank-sum and Friedman test to confirm the robustness and reliability of observed performance differences [1].
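
The run-and-compare part of this protocol can be sketched in Python as follows. This is a minimal illustration under stated assumptions. `random_search` and `hill_climb` are hypothetical stand-ins for NPDOA and a competitor, since no NPDOA implementation is assumed. The sphere function stands in for a CEC benchmark. The Wilcoxon rank-sum test is implemented from scratch using the normal approximation. In practice, `scipy.stats.ranksums` and `scipy.stats.friedmanchisquare` provide tested implementations of both tests named above.

```python
import math
import random
import statistics


def sphere(x):
    """Unimodal stand-in benchmark with global minimum 0 at the origin."""
    return sum(v * v for v in x)


def random_search(f, dim, budget, rng):
    """Hypothetical stand-in optimizer A: uniform random sampling in [-5, 5]^dim."""
    best = math.inf
    for _ in range(budget):
        best = min(best, f([rng.uniform(-5, 5) for _ in range(dim)]))
    return best


def hill_climb(f, dim, budget, rng, step=0.5):
    """Hypothetical stand-in optimizer B: greedy Gaussian perturbation of one incumbent."""
    x = [rng.uniform(-5, 5) for _ in range(dim)]
    fx = f(x)
    for _ in range(budget - 1):
        y = [v + rng.gauss(0, step) for v in x]
        fy = f(y)
        if fy < fx:
            x, fx = y, fy
    return fx


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test via the normal approximation.

    Ties get average ranks; the variance is not tie-corrected.
    Returns (z, p); a negative z means sample `a` tends to be smaller.
    """
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1  # average 1-based rank of the tie group
        i = j + 1
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    n1, n2 = len(a), len(b)
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    return z, math.erfc(abs(z) / math.sqrt(2))


def benchmark(runs=30, dim=10, budget=1000):
    """Final best fitness of each optimizer over `runs` independent, seeded runs."""
    results = {"random_search": [], "hill_climb": []}
    for seed in range(runs):
        results["random_search"].append(random_search(sphere, dim, budget, random.Random(seed)))
        results["hill_climb"].append(hill_climb(sphere, dim, budget, random.Random(seed)))
    return results


if __name__ == "__main__":
    res = benchmark()
    for name, vals in res.items():
        print(f"{name}: mean={statistics.mean(vals):.3g}, sd={statistics.stdev(vals):.3g}")
    z, p = rank_sum_test(res["hill_climb"], res["random_search"])
    print(f"rank-sum: z={z:.2f}, p={p:.2g}")
```

Each run gets its own seed, so the 30 results per algorithm are independent and reproducible. The Friedman test becomes relevant once three or more algorithms are ranked across several benchmark functions.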

Hyperparameter Tuning Methodology

Hyperparameter optimization is crucial for maximizing NPDOA performance. The tuning process should follow a systematic approach based on best practices in machine learning model configuration [4]. Define the hyperparameter search space (Λ) encompassing key parameters such as neural population size, attraction coefficients, divergence factors, and information projection rates. Select an appropriate hyperparameter optimization (HPO) method, considering options such as Bayesian optimization via Gaussian processes, random search, simulated annealing, or evolutionary strategies [4].

For the objective function (f(λ)), choose a metric that aligns with your optimization goals, such as convergence speed, solution quality, or algorithm stability. Conduct multiple trials (typically 100) to adequately explore the hyperparameter space, ensuring sufficient coverage of possible configurations [4]. Validate the tuned hyperparameters on unseen test problems to ensure generalizability beyond the tuning dataset. Document the entire process thoroughly, including the specific HPO method used, computational resources required, and final hyperparameter values, to ensure reproducibility and transparency in research reporting [4].
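
The tuning loop can be illustrated with random search, one of the HPO methods listed above. This is a sketch under explicit assumptions. The search space `SPACE`, its two parameter names and bounds are hypothetical. `toy_optimizer` is a deliberately simple population method, not NPDOA. The objective f(λ) is taken to be the mean final best fitness across tuning problems and seeds. The held-out Rosenbrock function plays the role of an unseen test problem.

```python
import math
import random

# Hypothetical search space Lambda (names and bounds are illustrative only).
SPACE = {"attractor_strength": (0.1, 0.9), "perturbation": (0.0, 0.2)}
LO, HI, DIM = -5.0, 5.0, 5


def sphere(x):
    return sum(v * v for v in x)


def rastrigin(x):
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)


def rosenbrock(x):
    return sum(100 * (x[i + 1] - x[i] ** 2) ** 2 + (1 - x[i]) ** 2 for i in range(len(x) - 1))


def toy_optimizer(f, params, seed, pop=10, iters=30):
    """Toy population optimizer with two knobs (a stand-in, not the NPDOA update rules)."""
    rng = random.Random(seed)
    xs = [[rng.uniform(LO, HI) for _ in range(DIM)] for _ in range(pop)]
    fit = [f(x) for x in xs]
    for _ in range(iters):
        best = xs[min(range(pop), key=fit.__getitem__)]
        for i in range(pop):
            # Move toward the current best, plus Gaussian noise scaled to the box width.
            cand = [
                min(HI, max(LO, xi + params["attractor_strength"] * (bi - xi)
                            + rng.gauss(0, params["perturbation"] * (HI - LO))))
                for xi, bi in zip(xs[i], best)
            ]
            fc = f(cand)
            if fc < fit[i]:
                xs[i], fit[i] = cand, fc
    return min(fit)


def objective(params, problems, seeds=(0, 1)):
    """f(lambda): mean final best fitness over problems and seeds (lower is better)."""
    scores = [toy_optimizer(f, params, s) for f in problems for s in seeds]
    return sum(scores) / len(scores)


def random_search_hpo(problems, n_trials=100, seed=42):
    """Sample n_trials configurations uniformly from SPACE and keep the best one."""
    rng = random.Random(seed)
    best_params, best_score = None, math.inf
    for _ in range(n_trials):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in SPACE.items()}
        score = objective(params, problems)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


if __name__ == "__main__":
    tuned, tune_score = random_search_hpo([sphere, rastrigin])
    # Generalization check on a problem never seen during tuning.
    holdout = objective(tuned, [rosenbrock], seeds=(10, 11, 12))
    print(tuned, round(tune_score, 3), round(holdout, 3))
```

Fixed seeds make the whole tuning run reproducible, which supports the documentation requirement above. A Bayesian optimizer such as Optuna's `TPESampler` would replace only the sampling line inside `random_search_hpo`.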

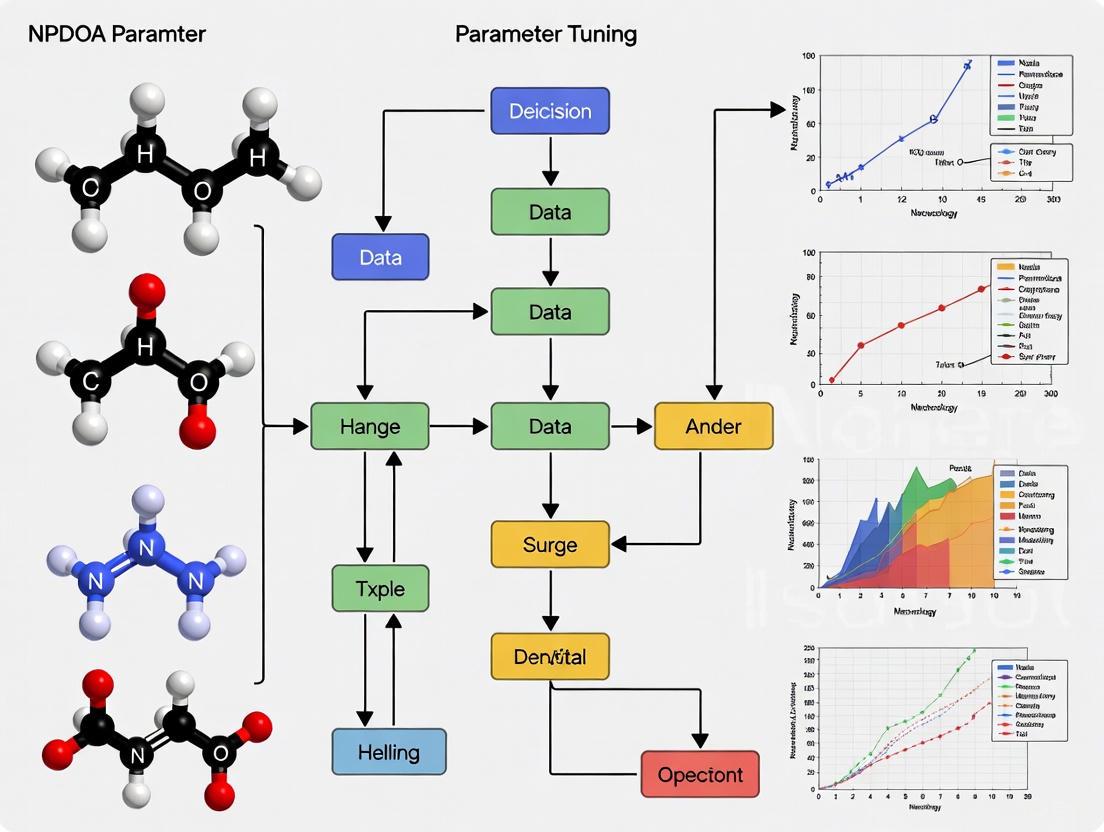

Algorithm Workflow

Figure: NPDOA computational workflow; NPDOA core components.

Research Reagent Solutions and Essential Materials

Table 3: Essential Research Tools for NPDOA Implementation

| Tool Category | Specific Solution | Function/Purpose | Implementation Example |
|---|---|---|---|
| Benchmark Suites | CEC 2017, CEC 2022 [2] [1] | Algorithm performance validation | Standardized function testing |
| Hyperparameter Optimization | Bayesian Optimization [4] | Automated parameter tuning | Gaussian Process, TPE |
| Statistical Analysis | Wilcoxon rank-sum, Friedman test [1] | Result significance verification | Performance comparison |
| Performance Metrics | AUC, R², Convergence curves [2] | Solution quality assessment | Model accuracy measurement |
| Programming Environment | MATLAB, Python [2] | Algorithm implementation | CDSS development |

Application Protocols for Domain-Specific Implementation

Medical Prognostic Modeling Protocol

Applying NPDOA to medical prognostic modeling, specifically for autologous costal cartilage rhinoplasty (ACCR), requires a specialized implementation protocol [2]. Begin with comprehensive data collection covering four groups of variables [2]:

- demographic variables: age, sex, BMI
- preoperative clinical factors: nasal pore size, prior nasal surgery history, preoperative Rhinoplasty Outcome Evaluation (ROE) score
- surgical variables: surgical duration, length of hospital stay
- postoperative behavioral factors: nasal trauma, antibiotic duration, folliculitis, animal contact, spicy food intake, smoking, alcohol use

Use bidirectional feature engineering to identify critical predictors, which may include nasal collision within 1 month, smoking, and preoperative ROE scores [2].

For model development, implement the improved NPDOA (INPDOA) with metaheuristic enhancements for AutoML optimization [2]. Utilize SHAP (SHapley Additive exPlanations) values to quantify variable contributions and ensure model interpretability. Address class imbalance in training data using Synthetic Minority Oversampling Technique (SMOTE) while maintaining original distributions in validation sets to reflect real-world clinical scenarios [2]. Validate the model using appropriate metrics including AUC for classification tasks (e.g., complication prediction) and R² for regression tasks (e.g., ROE score prediction), with performance targets of AUC > 0.85 and R² > 0.85 based on established benchmarks [2]. Finally, develop a clinical decision support system (CDSS) for real-time prognosis visualization, ensuring reduced prediction latency for practical clinical utility [2].
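
The ordering requirement above can be shown with a minimal from-scratch sketch: oversample the training split only and leave the validation split untouched. The helper names, the 70/30 split and the convention that label 1 marks the minority class are assumptions for illustration. The interpolation step follows the standard SMOTE idea. The imbalanced-learn package provides a production implementation (`imblearn.over_sampling.SMOTE` with `fit_resample`).

```python
import random


def smote(minority, n_new, k=5, seed=0):
    """SMOTE-style oversampling: each synthetic point lies on the segment between a
    randomly chosen minority sample and one of its k nearest minority neighbours."""
    rng = random.Random(seed)

    def dist2(a, b):
        return sum((u - v) ** 2 for u, v in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x = minority[i]
        others = [m for j, m in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda m: dist2(x, m))[:k]
        nn = rng.choice(neighbours)
        gap = rng.random()  # position along the segment x -> nn
        synthetic.append([u + gap * (v - u) for u, v in zip(x, nn)])
    return synthetic


def split_then_oversample(X, y, val_frac=0.3, seed=0):
    """Hold out the validation set FIRST, then balance only the training split, so the
    validation set keeps the original (real-world) class distribution."""
    rng = random.Random(seed)
    idx = list(range(len(X)))
    rng.shuffle(idx)
    n_val = int(len(X) * val_frac)
    val_idx, train_idx = idx[:n_val], idx[n_val:]
    X_tr, y_tr = [X[i] for i in train_idx], [y[i] for i in train_idx]
    X_val, y_val = [X[i] for i in val_idx], [y[i] for i in val_idx]
    minority = [x for x, label in zip(X_tr, y_tr) if label == 1]
    n_new = y_tr.count(0) - len(minority)
    if n_new > 0 and len(minority) >= 2:
        synthetic = smote(minority, n_new, seed=seed)
        X_tr = X_tr + synthetic
        y_tr = y_tr + [1] * len(synthetic)
    return X_tr, y_tr, X_val, y_val
```

Oversampling before the split would leak synthetic copies of validation patients into training and inflate metrics such as AUC. That is why the split comes first here.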

Engineering Design Optimization Protocol

For engineering applications, NPDOA implementation follows a structured optimization workflow. First, formulate the engineering problem by defining design variables, constraints, and objective functions specific to the application domain (e.g., structural design, path planning, resource allocation). Initialize the neural population with feasible solutions distributed across the design space, ensuring adequate coverage of potential optimal regions. Execute the NPDOA iterative process with emphasis on balancing exploration and exploitation phases, leveraging the algorithm's inherent ability to transition between these modes through its information projection strategy [1].
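
The initialization step can be sketched with Latin hypercube sampling (LHS) plus rejection of infeasible points. LHS is one common way to obtain even coverage of a bounded design space; the source does not prescribe it, so treat it as an assumed choice. The function names and the example constraint are hypothetical.

```python
import random


def latin_hypercube(n, bounds, rng):
    """n points; along every dimension each of n equal-width strata holds exactly one point."""
    columns = []
    for lo, hi in bounds:
        width = (hi - lo) / n
        column = [lo + (k + rng.random()) * width for k in range(n)]
        rng.shuffle(column)  # decouple the strata across dimensions
        columns.append(column)
    return [list(point) for point in zip(*columns)]


def init_feasible_population(n, bounds, is_feasible, seed=0, max_rounds=50):
    """Fill a population of n feasible points by repeated LHS sampling with rejection."""
    rng = random.Random(seed)
    population = []
    for _ in range(max_rounds):
        population += [x for x in latin_hypercube(n, bounds, rng) if is_feasible(x)]
        if len(population) >= n:
            return population[:n]
    raise RuntimeError("too few feasible points found; check constraints and bounds")


if __name__ == "__main__":
    # Hypothetical 2-D design space with one linear constraint x0 + x1 <= 10.
    bounds = [(0.0, 10.0), (0.0, 10.0)]
    pop = init_feasible_population(20, bounds, lambda x: x[0] + x[1] <= 10.0)
    print(len(pop), pop[:3])
```

Rejection discards points, so the final population no longer satisfies the strict one-point-per-stratum property. It still spreads over the feasible region far more evenly than a handful of hand-picked starts.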

Monitor convergence behavior using established metrics and benchmark against state-of-the-art algorithms including PMA, IRTH, and other recent metaheuristics [1] [3]. For path planning applications specifically, implement additional validation in realistic simulation environments with dynamic obstacles and multiple constraints [3]. Conduct sensitivity analysis to evaluate parameter influence on solution quality and algorithm performance. Document the optimization process thoroughly, including computational requirements, convergence history, and final solution characteristics, to facilitate reproducibility and practical implementation of optimized designs.

Neural population dynamics refer to the time evolution of activity patterns across large groups of neurons, which is fundamental to cognitive functions such as decision-making, working memory, and categorization [5]. This computational approach posits that the brain performs computations through structured time courses of neural activity shaped by underlying network connectivity [5]. The study of these dynamics has transcended neuroscience, inspiring novel optimization algorithms in computer science and engineering. The recently proposed Neural Population Dynamics Optimization Algorithm (NPDOA) exemplifies this cross-disciplinary transfer, implementing three core strategies derived from brain function: attractor trending, coupling disturbance, and information projection [6].

Core Principles and Neural Phenomena

Dynamical Constraints and Attractor States

Empirical evidence demonstrates that neural trajectories are remarkably robust and difficult to violate. A key study using a brain-computer interface (BCI) challenged monkeys to produce time-reversed neural trajectories in motor cortex. Animals were unable to violate natural neural time courses, indicating these dynamics reflect underlying network constraints essential for computation [5]. This inherent stability is a foundational principle for algorithm design.

Task-Dependent Encoding Formats

Neural populations exhibit flexible encoding strategies depending on cognitive demands. Research comparing one-interval categorization (OIC) and delayed match-to-category (DMC) tasks revealed that while the lateral intraparietal area (LIP) encodes categories in both tasks, the format differs significantly. During DMC tasks requiring working memory, encoding is more binary and abstract, whereas OIC tasks with immediate saccadic responses produce more graded, feature-preserving encoding [7]. This adaptability suggests effective algorithms should incorporate context-dependent representation formats.

Stable and Dynamic Working Memory Codes

Working memory involves both stable and dynamic neural population codes across the cortical hierarchy. Surprisingly, early visual cortex exhibits stronger dynamics than high-level frontoparietal regions during memory delays. In V1, population activity initially encodes a tuned "bump" for a peripheral target, then spreads inward toward foveal locations, effectively reforming the memory trace into a format more proximal to forthcoming behavior [8].

Table 1: Key Phenomena in Neural Population Dynamics

| Phenomenon | Neural Correlate | Functional Significance |
|---|---|---|
| Constrained Neural Trajectories | Motor cortex activity during BCI tasks | Reflects underlying network architecture; ensures computational reliability [5] |
| Task-Dependent Encoding | LIP activity during categorization tasks | Enables flexibility; binary encoding for memory tasks, graded for immediate decisions [7] |
| Working Memory Dynamics | V1 activity during memory delays | Reformats information from sensory features to behaviorally relevant abstractions [8] |
| Attractor Dynamics | Prefrontal cortex during working memory | Maintains information persistently through stable attractor states [6] |

Experimental Protocols for Studying Neural Dynamics

Delayed Match-to-Category (DMC) Task Protocol

Purpose: To investigate neural mechanisms of categorical working memory and decision-making [7].

Procedure:

- Fixation Period (500 ms): Subject maintains visual fixation.

- Sample Presentation (650 ms): A sample stimulus (e.g., random-dot motion pattern) appears in the receptive field.

- Delay Period (1000 ms): Blank screen requiring maintained information.

- Test Stimulus Presentation: Second stimulus appears; subject indicates category match/non-match.

- Manual Response: Subject releases touch bar for match, holds for non-match.

Key Measurements: Single-unit or population recording in LIP or PFC; analysis of categorical encoding format (binary vs. graded); population dynamics during delay period [7].
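
Analysis scripts can encode the DMC timeline above as a small configuration. This sketch assumes one fixed timeline with no jitter. The `Epoch` type and the `epoch_windows` helper are hypothetical names. The test epoch is left open-ended because the protocol does not give its duration.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Epoch:
    name: str
    duration_ms: Optional[int]  # None: open-ended, ends with the subject's response


# Durations from the DMC protocol above.
DMC_TRIAL = (
    Epoch("fixation", 500),
    Epoch("sample", 650),
    Epoch("delay", 1000),
    Epoch("test", None),
)


def epoch_windows(trial) -> Dict[str, Tuple[int, Optional[int]]]:
    """Map each epoch to (onset_ms, offset_ms) relative to trial start."""
    t, windows = 0, {}
    for epoch in trial:
        if epoch.duration_ms is None:
            windows[epoch.name] = (t, None)
            break
        windows[epoch.name] = (t, t + epoch.duration_ms)
        t += epoch.duration_ms
    return windows
```

The resulting delay window (1150 to 2150 ms after trial onset) is the interval in which delay-period population dynamics would be analyzed.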

Neural Trajectory Flexibility Protocol

Purpose: To test constraints on neural population trajectories using BCI [5].

Procedure:

- Baseline Recording: Identify natural neural trajectories during standard BCI control.

- Mapping Manipulation: Alter BCI mapping to create conflict between natural and required trajectories.

- Time-Reversal Challenge: Specifically challenge subject to produce neural trajectories in reverse temporal order.

- Path-Following Tasks: Require subjects to follow prescribed paths through neural state space.

Key Measurements: Success rate in violating natural trajectories; persistence of dynamical structure across mapping conditions; neural trajectory analysis in high-dimensional state space [5].

Implementation in Optimization Algorithms

Neural Population Dynamics Optimization Algorithm (NPDOA)

The NPDOA translates neural principles into a metaheuristic optimization framework with three core strategies [6]:

- Attractor Trending Strategy: Drives solutions toward optimal decisions, ensuring exploitation capability by simulating convergence to stable neural states.

- Coupling Disturbance Strategy: Pushes solutions away from attractors through simulated interference between neural populations, improving exploration ability.

- Information Projection Strategy: Controls communication between solution populations, enabling transition from exploration to exploitation phases.

In NPDOA, each solution is treated as a neural population, with decision variables representing neuronal firing rates. The algorithm simulates how interconnected neural populations evolve during cognitive tasks to find high-quality solutions to complex optimization problems [6].
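
The following toy loop shows how the three strategies could map onto a few lines of Python. It is explicitly not the published NPDOA. The update formulas, the linear projection weight `w` and the parameter names `attractor` and `coupling` are assumptions chosen for illustration. The coupling term borrows the difference-of-two-populations form familiar from differential evolution.

```python
import random


def sphere(x):
    return sum(v * v for v in x)


def npdoa_style_sketch(f, dim, bounds=(-5.0, 5.0), pop_size=30, iters=200,
                       attractor=0.5, coupling=0.6, seed=0):
    """Toy loop loosely mirroring NPDOA's three strategies (NOT the published equations).

    Each row of `pop` is one "neural population"; its entries play the role of firing rates.
    """
    lo, hi = bounds
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    fit = [f(x) for x in pop]
    history = [min(fit)]
    for t in range(iters):
        # Information projection (assumed form): weight shifts from coupling to attraction.
        w = 1.0 - t / iters
        attractor_state = pop[min(range(pop_size), key=fit.__getitem__)]
        for i in range(pop_size):
            r1, r2 = rng.sample([j for j in range(pop_size) if j != i], 2)
            cand = []
            for d in range(dim):
                # Attractor trending: pull toward the best-so-far state (exploitation).
                pull = attractor * rng.random() * (attractor_state[d] - pop[i][d])
                # Coupling disturbance: push by the difference of two other populations (exploration).
                push = coupling * (pop[r1][d] - pop[r2][d])
                cand.append(min(hi, max(lo, pop[i][d] + (1 - w) * pull + w * push)))
            fc = f(cand)
            if fc <= fit[i]:  # greedy replacement keeps the best-so-far monotone
                pop[i], fit[i] = cand, fc
        history.append(min(fit))
    best = min(range(pop_size), key=fit.__getitem__)
    return pop[best], fit[best], history
```

Early iterations are dominated by the coupling term, which drives exploration. As `w` decays, the pull toward the attractor takes over and the search shifts to exploitation. This is the qualitative behavior the information projection strategy is described as producing.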

Performance and Applications

NPDOA has demonstrated competitive performance on benchmark problems and practical engineering applications, including compression spring design, cantilever beam design, pressure vessel design, and welded beam design problems [6]. Its brain-inspired architecture provides effective balance between exploration and exploitation, addressing common metaheuristic limitations like premature convergence.
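
The sketch below shows how one of these engineering problems is posed for a population-based optimizer. It encodes the widely used pressure vessel design formulation, which has four variables (shell thickness, head thickness, inner radius, cylinder length) and four inequality constraints, and handles the constraints with a static quadratic penalty. The penalty weight `rho` is an assumption. Constraint-handling schemes differ between studies, and this is not necessarily the variant used in [6].

```python
import math


def pv_cost(x):
    """Material, forming and welding cost; x = (Ts, Th, R, L)."""
    ts, th, r, length = x
    return (0.6224 * ts * r * length + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length + 19.84 * ts ** 2 * r)


def pv_constraints(x):
    """Inequality constraints written as g(x) <= 0."""
    ts, th, r, length = x
    return [
        -ts + 0.0193 * r,                        # minimum shell thickness
        -th + 0.00954 * r,                       # minimum head thickness
        -math.pi * r ** 2 * length - (4.0 / 3.0) * math.pi * r ** 3 + 1_296_000,  # minimum volume
        length - 240.0,                          # maximum length
    ]


def penalized(x, rho=1e6):
    """Static quadratic penalty: feasible points keep their raw cost."""
    violation = sum(max(0.0, g) ** 2 for g in pv_constraints(x))
    return pv_cost(x) + rho * violation
```

Any of the optimizers in this article can minimize `penalized` directly. The volume constraint is several orders of magnitude larger than the thickness constraints, so normalizing each g(x) before penalizing is a common refinement. The frequently reported best-known design (0.8125, 0.4375, 42.0984, 176.6366) has a cost of about 6059.71.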

Visualization of Core Concepts

Figure: NPDOA algorithm structure; task-dependent neural encoding.

Table 2: Essential Research Materials for Neural Dynamics Studies

| Resource/Reagent | Function/Application | Specifications |
|---|---|---|
| Multi-electrode Array | Neural population recording | 90+ units simultaneously; motor cortex implantation [5] |
| Causal GPFA | Neural dimensionality reduction | 10D latent states from population activity [5] |
| Brain-Computer Interface (BCI) | Neural manipulation and feedback | 2D cursor control from 10D neural states [5] |
| Random-Dot Motion Stimuli | Controlled visual input | 360° motion directions; categorical boundaries [7] |
| Recurrent Neural Network (RNN) Models | Mechanistic testing | Trained on OIC/DMC tasks; fixed-point analysis [7] |
| fMRI-Compatible Memory Task | Human neural dynamics | Memory-guided saccade paradigm; population receptive field mapping [8] |

Protocol for NPDOA Parameter Tuning

Purpose: To optimize NPDOA performance for specific problem domains based on neural principles.

Procedure:

- Attractor Strength Calibration: Set initial attractor strength to 0.3-0.5 for balanced exploitation.

- Coupling Coefficient Adjustment: Determine disturbance magnitude (0.1-0.3) based on problem multimodality.

- Projection Rate Scheduling: Implement adaptive projection rates: higher early (0.7-0.9) for exploration, lower later (0.3-0.5) for exploitation.

- Population Sizing: Set neural population count to 50-100 based on problem dimensionality.

- Iteration Mapping: Algorithm iterations correspond to neural trajectory time steps (100-500 for complex problems).

Validation: Test on CEC benchmark suites; compare with state-of-the-art algorithms using Friedman ranking; apply to real-world problems like mechanical design and resource allocation [6].
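
The projection-rate schedule and the suggested ranges above can be expressed as a small configuration sketch. The function name, the `TuningConfig` field names, and the choice of a linear or cosine decay are assumptions; the protocol only states "higher early, lower later". The default values sit inside the protocol's ranges.

```python
import math
from dataclasses import dataclass

# Ranges quoted in the tuning protocol above.
PROTOCOL_RANGES = {
    "attractor_strength": (0.3, 0.5),
    "coupling": (0.1, 0.3),
    "population": (50, 100),
    "max_iter": (100, 500),
}


def projection_rate(t, max_iter, start=0.8, end=0.4, kind="linear"):
    """Projection rate at iteration t: high early (exploration), low late (exploitation).
    Defaults fall inside the protocol's 0.7-0.9 (early) and 0.3-0.5 (late) bands."""
    frac = t / max(1, max_iter - 1)
    if kind == "linear":
        return start + (end - start) * frac
    if kind == "cosine":
        return end + (start - end) * 0.5 * (1 + math.cos(math.pi * frac))
    raise ValueError(f"unknown schedule kind: {kind}")


@dataclass
class TuningConfig:
    """Hypothetical container for the protocol's settings; field names are illustrative."""
    attractor_strength: float = 0.4   # protocol: 0.3-0.5
    coupling: float = 0.2             # protocol: 0.1-0.3, higher for more multimodal problems
    population: int = 50              # protocol: 50-100
    max_iter: int = 300               # protocol: 100-500 for complex problems

    def out_of_range(self):
        """Names of settings that fall outside the protocol's suggested ranges."""
        return [name for name, (lo, hi) in PROTOCOL_RANGES.items()
                if not lo <= getattr(self, name) <= hi]
```

The cosine variant holds the rate near its early value for longer before dropping. It is one plausible alternative when exploration should dominate the first part of the run.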

Key Algorithm Parameters and Their Theoretical Roles

The Neural Population Dynamics Optimization Algorithm (NPDOA) is a recent metaheuristic that draws inspiration from neuroscience and the computational principles of neural populations [1] [9]. Unlike algorithms inspired by evolutionary processes or swarm behaviors, NPDOA simulates the decision-making and information-processing mechanisms observed in neural circuits. This makes it a candidate approach for complex optimization challenges in scientific research and drug development.

Theoretical studies suggest that neural population dynamics have favorable characteristics for navigating high-dimensional, non-convex search spaces such as those common in biomedical applications [9]. The algorithm operates through coordinated interactions between neural populations. It uses attractor dynamics and information projection to balance exploration of new solutions against exploitation of promising regions [9]. This design may make NPDOA well suited to problems with complex landscapes, such as molecular docking, pharmacokinetic optimization, and quantitative structure-activity relationship (QSAR) modeling.

Core Parameter Framework in NPDOA

The performance of NPDOA hinges on the appropriate configuration of its key parameters, which collectively govern the transition between exploration and exploitation phases. The table below outlines these fundamental parameters, their theoretical roles, and recommended value ranges based on experimental studies.

Table 1: Core Parameters of Neural Population Dynamics Optimization Algorithm

| Parameter Category | Specific Parameter | Theoretical Role | Recommended Range | Impact of Improper Tuning |
|---|---|---|---|---|
| Population Parameters | Population Size | Determines number of neural units in the optimization network; larger sizes improve exploration but increase computational cost | 50-100 for most problems [1] | Small size: Premature convergence; Large size: Excessive resource consumption |
| | Number of Sub-Populations | Controls modular architecture for specialized search strategies; enables parallel exploration of different solution regions | 3-5 groups [9] | Too few: Reduced diversity; Too many: Coordination difficulties |
| Dynamics Parameters | Attractor Strength | Governs convergence toward promising solutions; higher values intensify exploitation around current best candidates | 0.3-0.7 (adaptive) [9] | Too strong: Premature convergence; Too weak: Slow convergence |
| | Neural Coupling Factor | Regulates information exchange between sub-populations; facilitates diversity maintenance and global search | 0.4-0.8 [9] | Too strong: Reduced diversity; Too weak: Isolated search efforts |
| | Information Projection Rate | Controls transition from exploration to exploitation by modulating communication frequency between populations | Adaptive (decreasing) [9] | Too high: Early convergence; Too low: Failure to converge |
| Stochastic Parameters | Perturbation Intensity | Introduces stochastic fluctuations to escape local optima; analogous to neural noise in biological systems | 0.05-0.2 [10] | Too high: Random walk; Too low: Trapping in local optima |
| | Adaptation Frequency | Determines how often parameters are adjusted based on performance feedback | Every 100-200 iterations [10] | Too frequent: Instability; Too infrequent: Poor adaptation |

Interparameter Relationships and Synergies

The parameters in NPDOA do not operate in isolation but function as an interconnected system. Key synergistic relationships include:

Attractor Strength and Neural Coupling form a critical balance: while attractors promote convergence to current promising regions, neural coupling maintains diversity through controlled information exchange between sub-populations [9]. This relationship mirrors the balance between excitation and inhibition in biological neural networks.

Population Size and Perturbation Intensity exhibit an inverse relationship; larger populations can tolerate higher perturbation intensities without destabilizing the search process, as the system possesses sufficient diversity to absorb stochastic fluctuations [1] [10].

Information Projection Rate and Adaptation Frequency should be coordinated to ensure that parameter adjustments align with phase transitions in the optimization process. Experimental evidence suggests that adaptation is most effective when synchronized with reductions in the information projection rate [9].
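
The synchronization idea can be sketched as a small controller. At each adaptation checkpoint it updates the attractor strength from progress feedback and lowers the projection rate in the same step. Everything concrete here is an assumption for illustration: the class name, the ±0.05 step, the multiplicative decay of 0.9, and the rule "raise attractor strength after progress, lower it after stagnation". The 0.3-0.7 bounds and the 100-iteration interval come from Table 1.

```python
class SyncedAdaptation:
    """Hypothetical controller: every `adapt_every` iterations it (1) nudges attractor
    strength using progress feedback and (2) lowers the projection rate, so that
    parameter adaptation and projection-rate reduction happen together."""

    def __init__(self, adapt_every=100, attractor=0.5, projection=0.8,
                 decay=0.9, attractor_range=(0.3, 0.7), step=0.05):
        self.adapt_every = adapt_every
        self.attractor = attractor
        self.projection = projection
        self.decay = decay
        self.lo, self.hi = attractor_range
        self.step = step
        self.checkpoint_best = None

    def update(self, t, best):
        """Call once per iteration with the best-so-far fitness (minimization)."""
        if self.checkpoint_best is None:
            self.checkpoint_best = best  # first call establishes the baseline
        elif t % self.adapt_every == 0:
            if best < self.checkpoint_best:
                # Progress since the last checkpoint: exploit harder.
                self.attractor = min(self.hi, self.attractor + self.step)
            else:
                # Stagnation: weaken the pull so coupling can diversify the search.
                self.attractor = max(self.lo, self.attractor - self.step)
            self.projection *= self.decay  # synchronized projection-rate reduction
            self.checkpoint_best = best
        return self.attractor, self.projection


if __name__ == "__main__":
    ctrl = SyncedAdaptation()
    for t in range(301):
        best = 100.0 - t if t <= 150 else -50.0  # improves, then stalls
        if t % 100 == 0:
            print(t, ctrl.update(t, best))
        else:
            ctrl.update(t, best)
```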

Experimental Protocols for Parameter Tuning

Systematic Parameter Calibration Methodology

Establishing robust parameter settings for NPDOA requires a structured experimental approach. The following protocol outlines a comprehensive methodology for parameter tuning:

Table 2: Experimental Protocol for NPDOA Parameter Optimization

| Stage | Objective | Procedure | Metrics | Recommended Tools |
|---|---|---|---|---|
| Initial Screening | Identify promising parameter ranges | Perform fractional factorial design across broad parameter ranges | Convergence speed, solution quality | Experimental design software (JMP, Design-Expert) |
| Response Surface Analysis | Model parameter-performance relationships | Use central composite design around promising ranges from initial screening | Predictive R², adjusted R², model significance | Response surface methodology (RSM) packages |
| Convergence Profiling | Characterize algorithm behavior over iterations | Run multiple independent trials with candidate parameter sets; record fitness at intervals | Mean best fitness, success rate, convergence plots | Custom MATLAB/Python scripts with statistical analysis |
| Robustness Testing | Evaluate performance across diverse problem instances | Apply leading parameter candidates to benchmark problems with varied characteristics | Rank-based performance, Friedman test, Wilcoxon signed-rank test | CEC2017/CEC2022 test suites [1] [9] |
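
The initial-screening stage can be sketched without dedicated design software. The code builds a 2^(5-1) half-fraction design over the five numeric parameters in Table 1 and then estimates main effects. Placing the low and high levels at the ends of the Table 1 ranges is an assumption, and the function names are hypothetical. With the generator E = ABCD (defining relation I = ABCDE) the design has resolution V: main effects are not aliased with two-factor interactions. The response values would come from benchmark runs; the test uses a synthetic response.

```python
import itertools

# Low/high levels taken from the ends of the recommended ranges in Table 1.
FACTORS = {
    "population_size": (50, 100),
    "sub_populations": (3, 5),
    "attractor_strength": (0.3, 0.7),
    "coupling_factor": (0.4, 0.8),
    "perturbation": (0.05, 0.2),
}


def half_fraction(factors):
    """2^(k-1) design: full factorial in the first k-1 factors; the last factor's coded
    level is the product of the others (generator E = ABCD, so I = ABCDE)."""
    names = list(factors)
    design = []
    for levels in itertools.product((-1, 1), repeat=len(names) - 1):
        last = 1
        for s in levels:
            last *= s
        coded = dict(zip(names, levels + (last,)))
        actual = {n: factors[n][0] if s < 0 else factors[n][1] for n, s in coded.items()}
        design.append((coded, actual))
    return design


def main_effects(design, responses):
    """Main effect of each factor: mean response at +1 minus mean response at -1."""
    effects = {}
    for name in design[0][0]:
        high = [y for (coded, _), y in zip(design, responses) if coded[name] > 0]
        low = [y for (coded, _), y in zip(design, responses) if coded[name] < 0]
        effects[name] = sum(high) / len(high) - sum(low) / len(low)
    return effects
```

Sixteen configurations replace the 32 of a full factorial. Each configuration would typically be scored by the mean best fitness over several independent runs before `main_effects` is applied.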

Problem-Specific Adaptation Framework

Different problem characteristics necessitate customized parameter strategies:

For high-dimensional problems (50+ dimensions): Increase population size (80-100) and neural coupling factor (0.6-0.8) to maintain adequate search diversity across the expanded solution space [1].

For multi-modal problems: Enhance perturbation intensity (0.1-0.2) and employ multiple sub-populations (4-5) to facilitate parallel exploration of different attraction basins [9].

For computationally expensive problems: Reduce population size (50-60) while increasing attractor strength (0.6-0.7) to prioritize exploitation and reduce function evaluations [10].
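
The three rules above can be collected into a helper that narrows the Table 1 ranges. The function and dictionary names are hypothetical. One rule is an assumption that the text does not state. When rules conflict (for example, a problem that is both high-dimensional and expensive), the expensive-problem rule is applied last and wins, which treats the evaluation budget as a hard limit.

```python
# Baseline ranges from Table 1 (projection rate omitted: it is a schedule, not a range).
BASELINE = {
    "population_size": (50, 100),
    "sub_populations": (3, 5),
    "attractor_strength": (0.3, 0.7),
    "coupling_factor": (0.4, 0.8),
    "perturbation": (0.05, 0.2),
}


def suggest_ranges(dim, multimodal=False, expensive=False):
    """Narrow the baseline ranges using the problem-specific rules above."""
    ranges = dict(BASELINE)  # copy so the baseline is never mutated
    if dim >= 50:
        ranges["population_size"] = (80, 100)
        ranges["coupling_factor"] = (0.6, 0.8)
    if multimodal:
        ranges["perturbation"] = (0.1, 0.2)
        ranges["sub_populations"] = (4, 5)
    if expensive:  # applied last: the budget overrides the high-dimensional rule
        ranges["population_size"] = (50, 60)
        ranges["attractor_strength"] = (0.6, 0.7)
    return ranges
```

The narrowed ranges can then serve as the search space for the screening and response-surface stages in Table 2.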

Visualization of NPDOA Parameter Interactions

Figure: NPDOA algorithm workflow and phase transition; parameter interactions and performance relationships.

Research Reagent Solutions for Experimental Validation

Table 3: Essential Research Materials for NPDOA Experimental Validation

| Category | Item | Specification | Theoretical Role | Application Context |
|---|---|---|---|---|
| Benchmark Suites | IEEE CEC2017 | 30 scalable test functions with diverse characteristics | Provides standardized performance assessment across varied problem landscapes [1] [9] | Initial algorithm validation and comparative analysis |
| | IEEE CEC2022 | Recent 12-function benchmark including hybrid and composition functions | Tests algorithm performance on modern, complex optimization challenges [1] | Advanced validation and real-world performance prediction |
| Computational Framework | Parallel Computing Infrastructure | Multi-core CPUs/GPUs with distributed processing capability | Enables efficient execution of multiple sub-populations and independent runs [9] | Large-scale parameter studies and high-dimensional problems |
| | Statistical Analysis Package | R, Python SciPy, or MATLAB Statistics Toolbox | Provides rigorous statistical validation of performance differences [1] [9] | Experimental results analysis and significance testing |
| Evaluation Metrics | Convergence Profiling Tools | Custom scripts for tracking fitness progression | Quantifies convergence speed and solution quality over iterations [10] | Algorithm behavior analysis and parameter sensitivity studies |
| | Solution Quality Metrics | Best, median, worst, and mean fitness values | Comprehensive assessment of algorithm reliability and performance [1] | Final performance evaluation and comparison |
| Domain-Specific Testbeds | Engineering Design Problems | Constrained optimization with real-world limitations | Validates practical applicability beyond standard benchmarks [1] | Transferability assessment to applied research contexts |
| | Biomedical Optimization Datasets | Molecular docking, pharmacokinetic parameters | Tests algorithm performance on target application domains [10] | Domain-specific validation and method customization |

This comprehensive parameter framework provides researchers with a structured approach to implementing and optimizing NPDOA for complex optimization tasks in drug development and scientific research. The experimental protocols and visualization tools facilitate effective algorithm configuration and performance validation across diverse application contexts.

The Critical Need for Advanced Optimization in Drug Development

The pursuit of safe, effective, and efficient drug development represents one of the most critical challenges in modern healthcare. Optimization in this context extends beyond mathematical abstractions to directly impact patient survival, quality of life, and therapeutic outcomes. Historically, drug development has relied on established paradigms such as the maximum tolerated dose (MTD) approach developed for chemotherapeutics. However, studies reveal that this traditional framework is poorly suited to modern targeted therapies and immunotherapies, with reports indicating that nearly 50% of patients enrolled in late-stage trials of small molecule targeted therapies require dose reductions due to intolerable side effects [11]. Furthermore, the U.S. Food and Drug Administration (FDA) has required additional studies to re-evaluate the dosing of over 50% of recently approved cancer drugs [11]. These statistics underscore a systematic failure in conventional dose optimization approaches that necessitates advanced methodologies.

This application note establishes the critical need for sophisticated optimization frameworks, such as those enabled by metaheuristic algorithms including the Neural Population Dynamics Optimization Algorithm (NPDOA), within pharmaceutical development. By framing drug development challenges as complex optimization problems, researchers can leverage advanced computational strategies to navigate high-dimensional parameter spaces with multiple constraints and competing objectives—ultimately accelerating the delivery of optimized therapies to patients [1] [6].

The Current Landscape: Limitations in Traditional Approaches

The MTD Paradigm and Its Shortcomings

The conventional 3+3 dose escalation design, formalized in the 1980s for cytotoxic chemotherapy agents, continues to dominate first-in-human (FIH) oncology trials despite significant advances in therapeutic modalities [11]. The design works as follows:

- Cohorts of three patients are treated at escalating dose levels.
- A cohort is expanded to six when one patient experiences a dose-limiting toxicity (DLT).
- Escalation stops once two or more patients at a level experience DLTs.
- The maximum tolerated dose (MTD) is conventionally the highest dose level at which no more than one in six patients experienced a DLT.

This methodology suffers from several critical limitations:

- Ignores therapeutic efficacy: Dose escalation decisions rely solely on toxicity endpoints without evaluating anti-tumor activity [11]

- Poor representation of real-world treatment: Short treatment courses fail to mirror extended durations in late-stage trials and clinical practice [11]

- Misalignment with modern drug mechanisms: The framework doesn't account for fundamental differences in how targeted therapies and immunotherapies function [12]

- Suboptimal toxicity identification: Even for its intended purpose, the 3+3 design demonstrates poor performance in accurately identifying MTD [11]
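
The last limitation can be explored by simulating the 3+3 rules. The rules are: start with three patients per level; with 0/3 DLTs, escalate; with 1/3, add three more and escalate only if the total stays at 1/6; with two or more DLTs, stop and declare the previous level the MTD. This is a simplified sketch. It skips the usual re-expansion of the declared MTD level to six patients. The true DLT probabilities passed in are hypothetical inputs that are unknown in a real trial.

```python
import random


def three_plus_three(dlt_probs, seed=0):
    """Simulate one simplified 3+3 escalation.

    dlt_probs[i] is the true DLT probability at dose level i.
    Returns the index of the declared MTD, or -1 if the lowest dose is already too toxic.
    """
    rng = random.Random(seed)
    for level, p in enumerate(dlt_probs):
        dlts = sum(rng.random() < p for _ in range(3))
        if dlts == 1:
            dlts += sum(rng.random() < p for _ in range(3))  # expand the cohort to six
        if dlts >= 2:
            return level - 1  # stop escalating; MTD is the previous level
    return len(dlt_probs) - 1  # every tested level was tolerated


def selection_frequencies(dlt_probs, n_trials=2000):
    """Fraction of simulated trials that declare each dose level as the MTD."""
    counts = {}
    for seed in range(n_trials):
        mtd = three_plus_three(dlt_probs, seed=seed)
        counts[mtd] = counts.get(mtd, 0) + 1
    return {level: c / n_trials for level, c in sorted(counts.items())}


if __name__ == "__main__":
    # Hypothetical toxicity curve over five dose levels.
    print(selection_frequencies([0.05, 0.10, 0.20, 0.30, 0.50]))
```

Running `selection_frequencies` on a plausible toxicity curve typically spreads the declared MTD over several dose levels rather than concentrating it on one. This is the imprecision the limitation above refers to.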

The consequences of these limitations extend throughout the drug development lifecycle and into clinical practice. When the labeled dose is unnecessarily high, patients may experience severe toxicity without additional efficacy, leading to high rates of dose reduction and premature treatment discontinuation [12]. For modern oncology drugs that may be administered for years rather than months, even low-grade toxicities can significantly diminish quality of life and treatment adherence over time [12].

Quantitative Evidence of Optimization Failures

Table 1: Evidence Gaps in Traditional Dose Optimization Approaches

| Evidence Category | Finding | Implication |
|---|---|---|
| Late-Stage Trial Experience | Nearly 50% of patients on targeted therapies require dose reductions [11] | Initial dose selection poorly predicts real-world tolerability |
| Regulatory Re-evaluation | >50% of recently approved cancer drugs required post-marketing dose studies [11] | Insufficient characterization of benefit-risk profile during development |
| Post-Marketing Requirements | Specific risk factors increase likelihood of PMR/PMC for dose optimization [12] | Identifiable characteristics could trigger earlier optimization |
| Dose Selection Justification | 15.9% of first-cycle review failures for new molecular entities (2000-2012) [12] | Inadequate dose selection significantly impacts regulatory success |

Advanced Optimization Frameworks: Methodologies and Applications

Model-Informed Drug Development (MIDD)

Model-Informed Drug Development (MIDD) represents a paradigm shift in pharmaceutical optimization, applying quantitative modeling and simulation to support drug development and regulatory decision-making [13]. This framework provides a structured approach to integrating knowledge across development stages, from early discovery through post-market surveillance. The "fit-for-purpose" implementation of MIDD strategically aligns modeling tools with specific questions of interest and contexts of use throughout the development lifecycle [13].

MIDD encompasses diverse quantitative approaches, each with distinct applications in optimization challenges:

- Physiologically Based Pharmacokinetic (PBPK) Modeling: Mechanistic framework modeling drug disposition based on physiology and drug properties [13]

- Population Pharmacokinetics (PPK): Characterizes sources and correlates of variability in drug exposure among target patient populations [13]

- Exposure-Response (ER) Analysis: Quantifies relationships between drug exposure and efficacy or safety outcomes [13]

- Quantitative Systems Pharmacology (QSP): Integrative modeling combining systems biology with pharmacology to generate mechanism-based predictions [13]

These methodologies enable a more comprehensive understanding of the benefit-risk profile across potential dosing regimens, supporting optimized dose selection before committing to large, resource-intensive registrational trials [13].

Metaheuristic Algorithms in Drug Development Optimization

Metaheuristic algorithms offer powerful optimization capabilities for complex, high-dimensional problems in drug development. These algorithms can be categorized by their inspiration sources:

Table 2: Metaheuristic Algorithm Categories with Drug Development Applications

| Algorithm Category | Examples | Potential Drug Development Applications |
|---|---|---|
| Evolution-based | Genetic Algorithm (GA), Differential Evolution (DE) [1] | Clinical trial design optimization, patient stratification |
| Swarm Intelligence | Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO) [1] [3] | Combination therapy dosing, study enrollment planning |
| Physics-inspired | Simulated Annealing (SA), Gravitational Search Algorithm (GSA) [1] | Molecular docking, chemical structure optimization |
| Human behavior-based | Teaching-Learning-Based Optimization (TLBO) [14] | Adaptive trial design, site selection optimization |
| Mathematics-based | Sine-Cosine Algorithm (SCA), Gradient-Based Optimizer (GBO) [1] | Pharmacokinetic modeling, dose-response characterization |

The Neural Population Dynamics Optimization Algorithm (NPDOA) represents a particularly promising approach inspired by neuroscience [6]. This algorithm simulates the activities of interconnected neural populations during cognition and decision-making through three core strategies:

- Attractor trending strategy: Drives neural populations toward optimal decisions, ensuring exploitation capability [6]

- Coupling disturbance strategy: Deviates neural populations from attractors by coupling with other neural populations, improving exploration ability [6]

- Information projection strategy: Controls communication between neural populations, enabling transition from exploration to exploitation [6]

This balanced approach to exploration and exploitation mirrors the challenges faced in dose optimization, where researchers must efficiently search vast parameter spaces while refining promising candidate regimens.
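The published NPDOA update equations are not reproduced in this section. The following is therefore a hypothetical toy minimiser that only mirrors the roles of the three strategies: an attractor term pulls each candidate toward the best solution found so far, a coupling term perturbs it using the difference between two other population members, and an assumed linear weight `w` stands in for information projection by shifting emphasis from coupling (exploration) to attraction (exploitation). The function name, schedule, and scaling factors are illustrative assumptions, not the algorithm's actual parameters.

```python
import random


def npdoa_like_minimize(f, lb, ub, dim, pop_size=30, max_iter=200, seed=0):
    """Toy minimiser mirroring NPDOA's three strategies (hypothetical update rules)."""
    rng = random.Random(seed)

    def clip(v):
        return min(max(v, lb), ub)

    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(pop_size)]
    fit = [f(x) for x in pop]
    best_i = min(range(pop_size), key=fit.__getitem__)
    best, best_f = pop[best_i][:], fit[best_i]

    for t in range(max_iter):
        # Information projection (assumed linear schedule): the weight on exploration
        # falls from 1 toward 0, shifting the population toward exploitation.
        w = 1.0 - t / max_iter
        for i in range(pop_size):
            j, k = rng.sample([m for m in range(pop_size) if m != i], 2)
            cand = []
            for d in range(dim):
                # Attractor trending: pull this population state toward the best decision.
                attract = rng.random() * (best[d] - pop[i][d])
                # Coupling disturbance: perturb using the difference of two other populations.
                couple = rng.uniform(-1.0, 1.0) * (pop[j][d] - pop[k][d])
                cand.append(clip(pop[i][d] + (1.0 - w) * attract + w * couple))
            fc = f(cand)
            if fc < fit[i]:  # greedy selection keeps the better state
                pop[i], fit[i] = cand, fc
                if fc < best_f:
                    best, best_f = cand[:], fc
    return best, best_f


if __name__ == "__main__":
    def sphere(x):
        return sum(v * v for v in x)

    x_best, f_best = npdoa_like_minimize(sphere, -5.0, 5.0, dim=5)
    print(f"best objective value: {f_best:.3e}")
```

Making the exploration-to-exploitation schedule an explicit parameter (`w` here) is the kind of knob that parameter tuning targets: a faster decay favours rapid convergence, a slower one favours escaping local optima.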

Experimental Protocols for Dose Optimization

Protocol 1: Exposure-Response Analysis for Dose Selection

Purpose: To characterize the relationship between drug exposure and efficacy/safety endpoints to support optimized dose selection.

Materials and Reagents:

- Patient pharmacokinetic sampling kits

- Validated drug concentration assay materials

- Clinical outcome assessment tools

- Statistical analysis software with nonlinear mixed-effects modeling capabilities

Procedure:

- Collect rich or sparse pharmacokinetic samples from patients across multiple dose levels

- Quantify drug concentrations using validated bioanalytical methods

- Record efficacy and safety endpoints at predefined timepoints

- Develop population pharmacokinetic model to characterize drug disposition and identify covariates

- Establish exposure-response models for primary efficacy and key safety endpoints

- Simulate outcomes across potential dosing regimens using developed models

- Identify doses that maximize therapeutic benefit while maintaining acceptable safety profile

Analysis: Quantitative comparison of simulated outcomes across dosing strategies, with identification of optimal balance between efficacy and safety.
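The simulation and dose-identification steps of this protocol can be sketched as follows, assuming linear pharmacokinetics (AUC = dose / CL) with log-normal variability in clearance, an Emax exposure-efficacy model, and a logistic exposure-safety model. All parameter values, the 25% toxicity threshold, and the function names are hypothetical placeholders for models that would be estimated from trial data.

```python
import math
import random
import statistics

# Hypothetical model parameters (illustrative only, not from any real drug)
CL_TYPICAL = 5.0                     # typical clearance, L/h
OMEGA_CL = 0.3                       # SD of log-normal inter-individual variability in CL
E0, EMAX, EC50 = 0.05, 0.60, 40.0    # Emax model: probability of response vs AUC (mg*h/L)
B0, B1 = -4.0, 0.03                  # logistic model: probability of grade >=3 AE vs AUC


def p_response(auc):
    """Emax exposure-efficacy model."""
    return E0 + EMAX * auc / (EC50 + auc)


def p_toxicity(auc):
    """Logistic exposure-safety model."""
    return 1.0 / (1.0 + math.exp(-(B0 + B1 * auc)))


def simulate_dose(dose_mg, n=2000, rng=None):
    """Mean response and toxicity probabilities for a dose under linear PK (AUC = dose / CL)."""
    if rng is None:
        rng = random.Random(0)
    aucs = [dose_mg / (CL_TYPICAL * math.exp(rng.gauss(0.0, OMEGA_CL))) for _ in range(n)]
    return (statistics.fmean(p_response(a) for a in aucs),
            statistics.fmean(p_toxicity(a) for a in aucs))


def select_dose(doses, max_tox=0.25):
    """Dose with the highest expected response among doses with acceptable expected toxicity."""
    results = {d: simulate_dose(d, rng=random.Random(d)) for d in doses}
    acceptable = [d for d, (_, tox) in results.items() if tox <= max_tox]
    best = max(acceptable, key=lambda d: results[d][0]) if acceptable else None
    return best, results


if __name__ == "__main__":
    best, results = select_dose([50, 100, 200, 400, 800])
    for dose, (eff, tox) in results.items():
        print(f"{dose:>4} mg  P(response)={eff:.2f}  P(grade>=3 AE)={tox:.2f}")
    print("selected dose:", best)
```

A constrained rule is only one option; the same simulated outcomes can feed a weighted utility or a formal trade-off analysis instead.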

Protocol 2: Model-Informed First-in-Human Dose Optimization

Purpose: To determine optimal starting dose and escalation scheme for first-in-human trials using integrated modeling approaches.

Materials and Reagents:

- Preclinical pharmacokinetic and pharmacodynamic data

- In vitro assay data for target binding and occupancy

- Physiologically based pharmacokinetic (PBPK) modeling software

- Statistical programming environment for simulation

Procedure:

- Integrate all available nonclinical data (pharmacology, toxicology, PK/PD)

- Develop a physiologically based pharmacokinetic model from preclinical data

- Establish target exposure levels based on efficacy (target occupancy) and safety margins

- Simulate human pharmacokinetics using PBPK model with population variability

- Determine starting dose that achieves target engagement with sufficient safety margin

- Design dose escalation scheme informed by predicted human pharmacokinetics and variability

- Define biomarkers for monitoring target engagement and pharmacological effects in trial

- Establish criteria for dose escalation and de-escalation based on modeled expectations

Analysis: Comparison of model-predicted human exposure with therapeutic and safety target levels to justify starting dose and escalation scheme.
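The core arithmetic behind the starting-dose step can be sketched as follows, assuming simple 1:1 binding (receptor occupancy RO = C / (C + Kd)), an average steady-state concentration Css,avg = F × Dose / (CL × tau), and that free and total concentrations are equal. In practice, human CL and F would come from the PBPK model and the NOAEL exposure from toxicology studies; every input value and function name below is hypothetical, and units must be kept consistent (mg/L, L/h, h, mg).

```python
def concentration_for_occupancy(kd, target_ro):
    """Concentration giving a target receptor occupancy, assuming RO = C / (C + Kd)."""
    if not 0.0 < target_ro < 1.0:
        raise ValueError("target occupancy must lie strictly between 0 and 1")
    return kd * target_ro / (1.0 - target_ro)


def dose_for_average_concentration(c_avg, clearance, tau, bioavailability=1.0):
    """Dose per interval giving an average steady-state concentration: Css,avg = F*Dose/(CL*tau)."""
    return c_avg * clearance * tau / bioavailability


def exposure_margin(noael_auc, dose, clearance, bioavailability=1.0):
    """Nonclinical NOAEL exposure divided by predicted human AUC per interval (F*Dose/CL)."""
    return noael_auc / (bioavailability * dose / clearance)


if __name__ == "__main__":
    # Hypothetical inputs: Kd 0.01 mg/L, 20% target occupancy, CL 2 L/h, once-daily dosing,
    # F = 0.5, and a NOAEL AUC of 50 mg*h/L in the most sensitive species.
    c_target = concentration_for_occupancy(0.01, 0.20)
    start_dose = dose_for_average_concentration(c_target, clearance=2.0, tau=24.0,
                                                bioavailability=0.5)
    margin = exposure_margin(50.0, start_dose, clearance=2.0, bioavailability=0.5)
    print(f"target C = {c_target:.4f} mg/L, starting dose = {start_dose:.2f} mg, "
          f"exposure margin = {margin:.0f}-fold")
```

Keeping occupancy, dose, and margin as separate functions makes it straightforward to repeat the calculation across the simulated population variability that the PBPK step produces.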

Diagram 1: FIH Dose Optimization Workflow

The Scientist's Toolkit: Essential Research Reagents and Solutions

Table 3: Key Research Reagent Solutions for Dose Optimization Studies

| Reagent/Resource | Function | Application Context |
|---|---|---|
| Validated Bioanalytical Assays | Quantification of drug and metabolite concentrations | Pharmacokinetic profiling, exposure assessment |
| Biomarker Assay Kits | Measurement of target engagement and pharmacological effects | Pharmacodynamic characterization, proof-of-mechanism |
| PBPK Modeling Software | Prediction of human pharmacokinetics from preclinical data | First-in-human dose prediction, drug-drug interaction assessment |
| Population Modeling Platforms | Nonlinear mixed-effects modeling of pharmacokinetic and pharmacodynamic data | Exposure-response analysis, covariate effect identification |
| Clinical Trial Simulation Tools | Simulation of trial outcomes under different design scenarios | Adaptive trial design, sample size optimization |
| Circulating Tumor DNA Assays | Monitoring of tumor dynamics through liquid biopsy | Early efficacy assessment, response monitoring |

Regulatory Landscape and Future Directions

The FDA's Project Optimus initiative, launched in 2021, aims to reform dose selection and optimization in oncology drug development [11] [12]. This initiative encourages sponsors to conduct randomized evaluations of multiple doses to characterize benefit-risk profiles before initiating registration trials [12]. The subsequent guidance document "Optimizing the Dosage of Human Prescription Drugs and Biological Products for the Treatment of Oncologic Diseases," published in August 2024, formalizes this approach [12].

Critical risk factors identified for postmarketing requirements related to dose optimization include when the labeled dose is the maximum tolerated dose, when there is an increased percentage of adverse reactions leading to treatment discontinuation, and when an exposure-safety relationship is established [12]. These identifiable risk factors provide opportunities for earlier implementation of advanced optimization strategies during development.

Future directions in the field include:

- Application of artificial intelligence and machine learning to enhance predictive modeling and pattern recognition in complex datasets [13]

- Development of innovative trial designs that efficiently evaluate multiple doses and combinations [11]

- Expansion of optimization frameworks to address combination therapies, which present unique challenges [15]

- Integration of patient-reported outcomes and preference data into benefit-risk assessments [11]

The critical need for advanced optimization in drug development is evident from both historical challenges and contemporary regulatory initiatives. The limitations of traditional approaches—demonstrated by high rates of post-approval dose modifications and patient toxicities—underscore the imperative for more sophisticated methodologies. Frameworks such as Model-Informed Drug Development and optimization algorithms including NPDOA provide powerful approaches to address these challenges. By implementing robust, quantitative optimization strategies throughout the development lifecycle, researchers can maximize therapeutic benefit while minimizing patient risk, ultimately accelerating the delivery of optimized treatments to those in need.

Linking Algorithm Performance to Project Optimus Goals for Dosage Optimization

The U.S. Food and Drug Administration's (FDA) Project Optimus represents a transformative initiative aimed at reforming the paradigm for dose optimization and selection in oncology drug development [16]. This initiative addresses critical limitations of the traditional maximum tolerated dose (MTD) approach, which, while suitable for cytotoxic chemotherapeutics, often leads to poorly characterized dosing regimens for modern targeted therapies and immunotherapies [11] [17]. The consequence of this misalignment is that a significant proportion of patients—nearly 50% for some targeted therapies—require dose reductions due to intolerable side effects, and over 50% of recently approved cancer drugs have required additional post-marketing studies to re-evaluate dosing [11] [17]. Project Optimus therefore emphasizes the selection of doses that maximize both efficacy and safety, requiring a more comprehensive understanding of the dose-exposure-toxicity-activity relationship [16] [18].

In this new framework, advanced computational algorithms have emerged as critical enablers for integrating and analyzing complex nonclinical and clinical data to support optimal dosage decisions [19]. The performance of these algorithms is no longer a mere technical consideration but is directly linked to the core goals of Project Optimus: identifying doses that provide the best balance of efficacy and tolerability, particularly for chronic administration of modern cancer therapeutics [16] [17]. From model-informed drug development (MIDD) approaches to innovative clinical trial designs and metaheuristic optimization algorithms, computational methods provide the quantitative foundation necessary to characterize therapeutic windows, predict drug behavior across doses, and ultimately improve patient outcomes through better-tolerated dosing strategies [19] [20].

Project Optimus Goals and Algorithmic Performance Metrics

Core Goals of Project Optimus

Project Optimus aims to address systemic issues in oncology dose selection through three primary mechanisms: education, innovation, and collaboration [16]. Its specific goals include communicating regulatory expectations through guidance and workshops, encouraging early engagement between drug developers and FDA Oncology Review Divisions, and developing innovative strategies for dose-finding that leverage nonclinical and clinical data, including randomized dose evaluations [16]. A fundamental shift promoted by the initiative is the movement away from dose selection based primarily on short-term toxicity data (the MTD paradigm) toward a more holistic approach that considers long-term tolerability, patient-reported outcomes, and the totality of efficacy and safety data [11].

This shift is necessitated by the changing nature of oncology therapeutics. Unlike traditional chemotherapies, targeted therapies and immunotherapies are often administered continuously over extended periods, making long-term tolerability and quality of life critical considerations [16] [17]. Furthermore, these agents frequently exhibit a plateau in their dose-response relationship once target saturation is achieved, meaning that doses higher than those necessary for target engagement may provide no additional efficacy while contributing unnecessary toxicity [17]. Project Optimus therefore encourages the identification of the minimum reproducibly active dose (MRAD) alongside the MTD to better characterize the therapeutic window [17].

Quantitative Performance Metrics for Optimization Algorithms

The evaluation of algorithms supporting Project Optimus goals requires specific quantitative metrics that align with the initiative's objectives. These metrics span pharmacokinetic, pharmacodynamic, efficacy, safety, and operational domains, providing a comprehensive framework for assessing algorithm performance in the context of dosage optimization.

Table 1: Key Performance Metrics for Dosage Optimization Algorithms

| Metric Category | Specific Metrics | Project Optimus Alignment |
|---|---|---|
| Pharmacokinetic (PK) | Maximum concentration (Cmax), Time to maximum concentration (Tmax), Trough concentration (Ctrough), Elimination half-life, Area under the curve (AUC) [19] | Characterizes drug exposure to identify dosing regimens that maintain therapeutic levels |
| Pharmacodynamic (PD) | Target expression, Target engagement/occupancy, Effect on PD biomarker [19] | Links drug exposure to biological effect for establishing pharmacologically active doses |
| Clinical Efficacy | Overall response rate, Effect on surrogate endpoint biomarker, Preliminary registrational endpoint data [19] | Provides evidence of antitumor activity across dose levels to inform efficacy considerations |
| Clinical Safety | Incidence of dose interruption, reduction, discontinuation; Grade 3+ adverse events; Time to toxicity; Duration of toxicity [19] | Quantifies tolerability profile to balance efficacy with safety, especially for chronic dosing |
| Patient-Reported Outcomes | Symptomatic adverse events, Impact of adverse events, Physical function, Quality of life [19] [18] | Incorporates patient experience into benefit-risk assessment, a key Project Optimus priority |
| Operational Efficiency | Computational time, Convergence speed, Solution accuracy, Stability across runs [1] | Ensures practical applicability in drug development timelines and decision-making processes |
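The pharmacokinetic metrics in the first row of the table can be computed from a single concentration-time profile with basic non-compartmental analysis. The sketch below uses the linear trapezoidal rule for AUC and a log-linear least-squares fit over the last few samples for the terminal half-life. It assumes sorted sampling times, positive terminal concentrations, and that the last sample is the pre-dose trough; the function name and example profile are hypothetical.

```python
import math


def nca_metrics(times_h, conc, n_terminal=3):
    """Basic non-compartmental PK metrics for one concentration-time profile.

    Assumes samples are sorted by time, terminal-phase concentrations are > 0,
    and the last sample is taken at the end of the dosing interval (trough).
    """
    cmax = max(conc)
    tmax = times_h[conc.index(cmax)]
    # Linear trapezoidal AUC from the first to the last sample
    auc = sum((t2 - t1) * (c1 + c2) / 2.0
              for t1, t2, c1, c2 in zip(times_h, times_h[1:], conc, conc[1:]))
    # Terminal elimination rate: least-squares slope of ln(C) vs time over the last points
    ts = times_h[-n_terminal:]
    ls = [math.log(c) for c in conc[-n_terminal:]]
    t_bar, l_bar = sum(ts) / len(ts), sum(ls) / len(ls)
    slope = (sum((t - t_bar) * (l - l_bar) for t, l in zip(ts, ls))
             / sum((t - t_bar) ** 2 for t in ts))
    if slope >= 0:
        raise ValueError("no declining terminal phase in the selected points")
    return {"Cmax": cmax, "Tmax": tmax, "Ctrough": conc[-1],
            "AUC": auc, "t_half": math.log(2) / -slope}


if __name__ == "__main__":
    times = [0.5, 1, 2, 4, 8, 12, 24]                 # h (hypothetical sampling schedule)
    conc = [1.2, 2.5, 3.1, 2.6, 1.7, 1.1, 0.3]        # mg/L (hypothetical profile)
    print(nca_metrics(times, conc))
```

Dedicated PK software adds refinements such as log-linear trapezoids in the elimination phase and automated terminal-point selection; this sketch only shows the underlying definitions.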

Algorithm performance must be evaluated against these metrics to ensure they provide reliable, actionable insights for dose selection. For instance, exposure-response modeling must accurately predict the probability of adverse reactions as a function of drug exposure while simultaneously characterizing the relationship between exposure and efficacy measures [19]. The clinical utility index (CUI) framework provides a quantitative mechanism to integrate these diverse data points, weighting various efficacy and safety endpoints to support dose selection decisions [11].
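A clinical utility index is commonly built as a weighted sum of endpoint utilities, each scaled to the interval from 0 (least desirable) to 1 (most desirable). The sketch below assumes linear utility functions and normalised weights. The endpoint names, scaling ranges, weights, and candidate values are hypothetical; in practice they would be agreed with clinicians before the analysis.

```python
def linear_utility(value, worst, best):
    """Map an endpoint onto [0, 1]; works for both 'higher is better' and 'lower is better'."""
    u = (value - worst) / (best - worst)
    return min(max(u, 0.0), 1.0)


def clinical_utility_index(endpoints, weights, ranges):
    """Weighted sum of endpoint utilities, with weights normalised to sum to 1.

    endpoints: {name: observed or model-predicted value}
    weights:   {name: relative importance}
    ranges:    {name: (worst, best)} used to scale each endpoint
    """
    total = sum(weights.values())
    return sum(weights[k] / total * linear_utility(endpoints[k], *ranges[k]) for k in weights)


if __name__ == "__main__":
    ranges = {"orr": (0.0, 0.6), "g3_ae": (0.6, 0.0)}   # hypothetical scaling (worst, best)
    weights = {"orr": 0.6, "g3_ae": 0.4}                # hypothetical relative weights
    candidates = {"100 mg": {"orr": 0.30, "g3_ae": 0.15},
                  "200 mg": {"orr": 0.36, "g3_ae": 0.35}}
    for dose, ep in candidates.items():
        print(dose, round(clinical_utility_index(ep, weights, ranges), 3))
```

With these illustrative numbers, the 100 mg regimen scores higher than 200 mg because the small gain in response is outweighed by the rise in grade 3 or higher adverse events. Varying the weights is a simple way to check how sensitive that conclusion is.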

Algorithm Classes and Methodologies for Dosage Optimization

Model-Informed Drug Development (MIDD) Approaches

Model-informed drug development approaches represent a cornerstone of the Project Optimus framework, providing quantitative methods to integrate and interpret complex data from multiple sources [19]. These approaches enable researchers to extrapolate drug behavior across doses, schedules, and populations, supporting more informed dosage decisions before conducting large, costly clinical trials.

Population Pharmacokinetics (PK) Modeling: This approach aims to describe the pharmacokinetics and interindividual variability for a given population, as well as the sources of this variability [19]. It can be used to select dosing regimens likely to achieve target exposure, transition from weight-based to fixed dosing regimens, and identify specific populations with clinically meaningful differences in PK that may require alternative dosing [19]. For example, population PK modeling and simulations were instrumental in the development of pertuzumab, where they supported the transition from a body weight-based dosing regimen used in the first-in-human trial to a fixed dosing regimen used in subsequent trials [19].
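A population PK simulation of the kind used to compare weight-based and fixed dosing can be sketched as follows. It assumes linear PK (AUC = dose / CL), clearance that scales with body weight through a power model CL = TVCL × (WT/70)^exponent × exp(eta), and log-normal body weight. The exponent of 0.5, the 6 mg/kg dose, its 420 mg fixed-dose equivalent at 70 kg, and all other values are hypothetical and are not the published pertuzumab model.

```python
import math
import random
import statistics


def simulate_auc(n=5000, tvcl=0.25, wt_exponent=0.5, omega_cl=0.25, seed=1):
    """Return (weights, AUC under weight-based dosing, AUC under fixed dosing).

    CL_i = TVCL * (WT_i / 70)**wt_exponent * exp(eta_i); AUC = dose / CL (linear PK).
    """
    rng = random.Random(seed)
    weights, auc_wb, auc_fixed = [], [], []
    for _ in range(n):
        wt = 70.0 * math.exp(rng.gauss(0.0, 0.2))                      # body weight, kg
        cl = tvcl * (wt / 70.0) ** wt_exponent * math.exp(rng.gauss(0.0, omega_cl))  # L/day
        weights.append(wt)
        auc_wb.append(6.0 * wt / cl)     # hypothetical 6 mg/kg weight-based dose
        auc_fixed.append(420.0 / cl)     # fixed dose equal to 6 mg/kg at 70 kg
    return weights, auc_wb, auc_fixed


def cv(values):
    """Coefficient of variation."""
    return statistics.stdev(values) / statistics.fmean(values)


def heavy_to_light_ratio(weights, aucs):
    """Median AUC in the heaviest weight quartile divided by that in the lightest quartile."""
    order = sorted(range(len(weights)), key=weights.__getitem__)
    q = len(order) // 4
    light = statistics.median(aucs[i] for i in order[:q])
    heavy = statistics.median(aucs[i] for i in order[-q:])
    return heavy / light


if __name__ == "__main__":
    w, a_wb, a_fx = simulate_auc()
    print(f"weight-based: CV {cv(a_wb):.2f}, heavy/light AUC {heavy_to_light_ratio(w, a_wb):.2f}")
    print(f"fixed:        CV {cv(a_fx):.2f}, heavy/light AUC {heavy_to_light_ratio(w, a_fx):.2f}")
```

When the weight effect on clearance is modest, as assumed here, both regimens give similar overall exposure variability and differ mainly in which weight extreme is over- or under-exposed. That is the type of evidence that can support switching to a fixed dose.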

Exposure-Response (E-R) Modeling: E-R modeling aims to determine the clinical significance of observed differences in drug exposure by correlating exposure metrics with both efficacy and safety endpoints [19]. This approach can predict the probability of adverse reactions as a function of drug exposure and can be coupled with tumor growth models to understand antitumor response as a function of exposure [19]. E-R modeling is particularly valuable for simulating the potential benefit-risk profile of different dosing regimens, including those not directly studied in clinical trials [19].

Quantitative Systems Pharmacology (QSP): QSP models incorporate biological mechanisms and evaluate complex interactions to understand and predict both therapeutic and adverse effects of drugs with limited clinical data [19]. These models can integrate knowledge about biological pathways and may consider clinical data from other drugs within the same class to inform dosing strategies, such as designing regimens to reduce the risk of specific adverse events [19].

Physiologically Based Pharmacokinetic (PBPK) Modeling: PBPK modeling is another important MIDD approach; it incorporates physiological parameters and drug-specific properties to predict PK behavior across populations and dosing scenarios.

Metaheuristic Optimization Algorithms

Metaheuristic algorithms offer powerful capabilities for solving complex optimization problems where traditional mathematical approaches may be insufficient. These algorithms are particularly valuable for exploring high-dimensional parameter spaces and identifying optimal solutions across multiple, potentially competing objectives.

Power Method Algorithm (PMA): A recently proposed metaheuristic algorithm inspired by the power iteration method for computing dominant eigenvalues and eigenvectors [1]. PMA incorporates strategies such as stochastic angle generation and adjustment factors to effectively address complex optimization problems. The algorithm demonstrates notable balance between exploration and exploitation, effectively avoiding local optima while maintaining high convergence efficiency [1]. Quantitative analysis reveals that PMA surpasses nine state-of-the-art metaheuristic algorithms on benchmark functions, with average Friedman rankings of 3, 2.71, and 2.69 for 30, 50, and 100 dimensions, respectively [1].
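For readers unfamiliar with PMA's mathematical inspiration, the classical power iteration repeatedly multiplies a vector by the matrix and renormalises it until the vector aligns with the dominant eigenvector. The Rayleigh quotient then estimates the dominant eigenvalue. The sketch below implements only this classical method, not PMA's stochastic angle generation or adjustment factors, and uses plain lists to stay dependency-free.

```python
import math
import random


def power_iteration(matrix, n_iter=200, tol=1e-10, seed=0):
    """Estimate the dominant eigenvalue and eigenvector of a square matrix (list of lists)."""
    rng = random.Random(seed)
    n = len(matrix)
    v = [rng.random() + 0.1 for _ in range(n)]   # random positive starting vector
    eigenvalue = 0.0
    for _ in range(n_iter):
        w = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]                # renormalise to unit length
        av = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
        new_eigenvalue = sum(a * b for a, b in zip(v, av))   # Rayleigh quotient v'Av
        if abs(new_eigenvalue - eigenvalue) < tol:
            return new_eigenvalue, v
        eigenvalue = new_eigenvalue
    return eigenvalue, v


if __name__ == "__main__":
    lam, vec = power_iteration([[2.0, 1.0], [1.0, 3.0]])
    print(lam, vec)
```

The convergence rate depends on the ratio between the two largest eigenvalues. A metaheuristic built on this idea treats repeated multiply-and-normalise steps as a search move, with random perturbations added to avoid stagnation.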

Improved Red-Tailed Hawk (IRTH) Algorithm: This multi-strategy improved algorithm enhances the original RTH algorithm through a stochastic reverse learning strategy based on Bernoulli mapping, a dynamic position update optimization strategy using stochastic mean fusion, and a trust domain-based optimization method for frontier position updating [3]. These improvements enhance exploration capabilities, reduce the probability of becoming trapped in local optima, and improve convergence speed while maintaining accuracy [3].
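Population initialisation of the kind described for IRTH can be illustrated with a Bernoulli shift map, which generates chaotic values in (0, 1), combined with opposition-based learning, which adds each candidate's "opposite" point and keeps the fittest half. This is a minimal sketch under assumptions: it uses the standard opposite point x' = lb + ub - x and a map parameter of 0.4, whereas IRTH's stochastic reverse-learning formula may differ. The function names are illustrative.

```python
import random


def bernoulli_map_sequence(n, lam=0.4, rng=None):
    """n values of the chaotic Bernoulli shift map on (0, 1)."""
    rng = rng if rng is not None else random.Random(0)
    z, seq = rng.random(), []
    for _ in range(n):
        z = z / (1.0 - lam) if z <= 1.0 - lam else (z - (1.0 - lam)) / lam
        if not 0.0 < z < 1.0:  # escape the fixed points 0 and 1 reachable in floating point
            z = rng.random()
        seq.append(z)
    return seq


def chaotic_opposition_init(f, lb, ub, dim, pop_size, seed=0):
    """Bernoulli-map population plus opposite points; keep the pop_size best (minimisation)."""
    rng = random.Random(seed)
    chaos = bernoulli_map_sequence(pop_size * dim, rng=rng)
    pop = [[lb + (ub - lb) * chaos[i * dim + d] for d in range(dim)] for i in range(pop_size)]
    opposite = [[lb + ub - x for x in ind] for ind in pop]   # standard opposition-based learning
    return sorted(pop + opposite, key=f)[:pop_size]


if __name__ == "__main__":
    def sphere(x):
        return sum(v * v for v in x)

    init = chaotic_opposition_init(sphere, -10.0, 10.0, dim=4, pop_size=20)
    print([round(sphere(ind), 2) for ind in init[:5]])
```

Evaluating both a point and its opposite doubles the initial sampling cost but raises the chance that at least one starting candidate lies near a good region, which is the motivation for reducing early entrapment in local optima.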

Neural Population Dynamics Optimization Algorithm (NPDOA): This algorithm models the dynamics of neural populations during cognitive activities. An attractor trending strategy guides the neural populations toward optimal decisions (exploitation), while a coupling disturbance strategy, in which neural populations couple with one another, enhances exploration [1] [3]. An information projection strategy controls communication between neural populations, facilitating the transition from exploration to exploitation [3].

Table 2: Comparison of Metaheuristic Algorithm Performance on Benchmark Problems

| Algorithm | Key Mechanisms | Strengths | Validation |
|---|---|---|---|
| Power Method Algorithm (PMA) [1] | Power iteration with random perturbations; Random geometric transformations; Balanced exploration-exploitation | High convergence efficiency; Effective at avoiding local optima; Strong mathematical foundation | CEC 2017 & CEC 2022 test suites (49 functions); 8 real-world engineering problems |
| Improved Red-Tailed Hawk (IRTH) [3] | Stochastic reverse learning; Dynamic position update; Trust domain-based frontier updates | Enhanced exploration; Reduced local optima trapping; Improved convergence speed | IEEE CEC2017 test set; UAV path planning applications |
| Neural Population Dynamics Optimization (NPDOA) [1] [3] | Attractor trend strategy; Neural population coupling; Information projection | Balanced exploration-exploitation; Biologically-inspired decision making | Benchmarking against state-of-the-art algorithms |

Clinical Trial Design and Analysis Algorithms

Project Optimus has catalyzed innovation in clinical trial design, moving beyond traditional algorithm-based designs like the 3+3 design toward more sophisticated model-based approaches [20]. These new designs generate richer data for characterizing the dose-response relationship and require specialized algorithms for implementation and analysis.

Model-Based Escalation Designs: Designs such as the Bayesian Optimal Interval (BOIN) design allow for more continuous enrollment and dosing decisions based on the latest safety data [20]. These designs often incorporate backfilling to existing dose cohorts to collect additional PK, PD, and efficacy data at dose levels below the current escalation point [20]. Compared to traditional 3+3 designs, model-based approaches provide more nuanced dose-escalation/de-escalation decision-making by responding to efficacy measures and late-onset toxicities, not just short-term safety data [11].
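The dose-finding rule of the BOIN design reduces to comparing the observed DLT rate at the current dose with two precomputed boundaries. The sketch below uses the standard BOIN boundary formulas with the commonly used defaults phi1 = 0.6 × target and phi2 = 1.4 × target; for a target DLT rate of 0.3, these give boundaries of about 0.236 and 0.358. It deliberately omits BOIN's dose-elimination safety rule and any backfilling logic, so it illustrates the decision boundaries only and is not a complete trial implementation.

```python
import math


def boin_boundaries(target, phi1=None, phi2=None):
    """Escalation (lambda_e) and de-escalation (lambda_d) boundaries of the BOIN design."""
    phi1 = 0.6 * target if phi1 is None else phi1   # highest rate considered underdosing
    phi2 = 1.4 * target if phi2 is None else phi2   # lowest rate considered overdosing
    lambda_e = (math.log((1 - phi1) / (1 - target))
                / math.log(target * (1 - phi1) / (phi1 * (1 - target))))
    lambda_d = (math.log((1 - target) / (1 - phi2))
                / math.log(phi2 * (1 - target) / (target * (1 - phi2))))
    return lambda_e, lambda_d


def boin_decision(n_dlt, n_treated, target=0.3):
    """Escalate, stay, or de-escalate based on the observed DLT rate at the current dose."""
    lambda_e, lambda_d = boin_boundaries(target)
    rate = n_dlt / n_treated
    if rate <= lambda_e:
        return "escalate"
    if rate >= lambda_d:
        return "de-escalate"
    return "stay"


if __name__ == "__main__":
    print(boin_boundaries(0.3))
    for dlt, n in [(0, 3), (1, 3), (2, 3), (1, 6)]:
        print(f"{dlt}/{n} DLTs -> {boin_decision(dlt, n)}")
```

Because the boundaries depend only on the target rate, they can be tabulated before the trial starts, which is one reason the design is operationally simple despite being model-based.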

Adaptive and Seamless Trial Designs: Adaptive designs allow for modifications to the trial based on emerging data, while seamless designs combine traditionally distinct development phases (e.g., phase 1 and 2) into a single trial [19] [11]. These designs can increase operational efficiency and enable the collection of more long-term safety and efficacy data to better inform dosing decisions [11]. Algorithms for adaptive randomization, sample size recalculation, and interim analysis are critical for implementing these complex designs.

Biomarker-Driven Enrollment Algorithms: With the emphasis on comprehensive PK sampling and analysis plans in each protocol [20], algorithms for patient stratification and biomarker-guided enrollment are increasingly important. These algorithms help ensure that the right patients are treated at the optimal dose, particularly for therapeutics where patient factors may significantly influence drug exposure or response.

Experimental Protocols and Workflows

Integrated Workflow for Algorithm-Driven Dosage Optimization

The following workflow diagram illustrates the integrated process for applying computational algorithms to dosage optimization within the Project Optimus framework:

Dosage Optimization Workflow illustrates the comprehensive process from data collection through final dose selection, highlighting the integration of multiple algorithm classes and data types to support Project Optimus goals.

Protocol for Exposure-Response Modeling in Dose Optimization

Objective: To develop quantitative models characterizing the relationship between drug exposure, efficacy endpoints, and safety endpoints to identify the optimal dose balancing therapeutic benefit and tolerability.

Materials and Equipment:

- Population PK model output (parameter estimates, variability components)

- Individual exposure metrics (AUC, Cmax, Ctrough)

- Efficacy endpoints (tumor response, biomarker changes, progression-free survival [PFS])

- Safety endpoints (adverse event incidence, severity, timing)

- Statistical software (R, SAS, NONMEM, Monolix)

Procedure:

- Data Preparation: Compile individual exposure metrics from population PK analysis alongside corresponding efficacy and safety endpoints. Ensure consistent time alignment between exposure metrics and response measures.

- Exploratory Analysis: Create scatter plots of exposure metrics versus efficacy and safety endpoints to visualize potential relationships. Calculate summary statistics by exposure quartiles.

- Model Structure Selection:
  - For continuous endpoints: Consider linear, Emax, or sigmoidal Emax models
  - For binary endpoints: Consider logistic regression models
  - For time-to-event endpoints: Consider parametric survival models or Cox proportional hazards models with exposure as a time-varying covariate

- Model Estimation: Use appropriate estimation techniques (maximum likelihood, Bayesian methods) to obtain parameter estimates for the selected model structure.

- Model Evaluation: Assess model adequacy using:
  - Diagnostic plots (observations vs. predictions, residuals)
  - Visual predictive checks
  - Bootstrap confidence intervals for parameters

- Model Application:
  - Simulate expected efficacy and safety outcomes across a range of doses
  - Identify exposure targets associated with desired efficacy and acceptable safety
  - Calculate clinical utility index values for different dosing regimens

- Sensitivity Analysis: Evaluate robustness of conclusions to model assumptions and parameter uncertainty.

Interpretation: The exposure-response model should provide quantitative estimates of the probability of efficacy and adverse events across the dose range under consideration. Model outputs should directly inform the selection of doses for randomized comparison in later-stage trials.
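For a binary safety endpoint, the model estimation and bootstrap evaluation steps above can be sketched as follows. A logistic exposure-safety model is fitted by maximum likelihood using Newton-Raphson, and a percentile bootstrap that resamples patients gives an interval for the exposure slope. The simulated data, the true parameter values, and the function names are hypothetical. Production analyses would normally use established statistical software and richer diagnostics.

```python
import math
import random


def fit_logistic(x, y, n_iter=50, tol=1e-10):
    """Maximum-likelihood fit of P(y = 1) = 1 / (1 + exp(-(b0 + b1 * x))) by Newton-Raphson."""
    b0 = b1 = 0.0
    for _ in range(n_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p                 # score (gradient of the log-likelihood)
            g1 += (yi - p) * xi
            h00 += w                     # information matrix X'WX
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0, b1 = b0 + step0, b1 + step1
        if abs(step0) + abs(step1) < tol:
            break
    return b0, b1


def bootstrap_slope_ci(x, y, n_boot=200, seed=0):
    """Percentile bootstrap 95% interval for the exposure slope b1 (resampling patients)."""
    rng = random.Random(seed)
    n = len(x)
    slopes = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        slopes.append(fit_logistic([x[i] for i in idx], [y[i] for i in idx])[1])
    slopes.sort()
    return slopes[int(0.025 * n_boot)], slopes[int(0.975 * n_boot) - 1]


def simulate_exposure_safety(n=500, b0=-4.0, b1=0.03, seed=42):
    """Hypothetical data: log-normal AUC and a binary grade >=3 adverse event."""
    rng = random.Random(seed)
    auc = [80.0 * math.exp(rng.gauss(0.0, 0.3)) for _ in range(n)]
    ae = [1 if rng.random() < 1.0 / (1.0 + math.exp(-(b0 + b1 * a))) else 0 for a in auc]
    return auc, ae


if __name__ == "__main__":
    auc, ae = simulate_exposure_safety()
    b0_hat, b1_hat = fit_logistic(auc, ae)
    lo, hi = bootstrap_slope_ci(auc, ae)
    print(f"b0 = {b0_hat:.2f}, b1 = {b1_hat:.4f} (95% bootstrap CI {lo:.4f} to {hi:.4f})")
```

The fitted model can then be used in the Model Application step, for example by evaluating the predicted adverse-event probability at the exposures expected for each candidate dose.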

Protocol for Implementing Metaheuristic Algorithms in Dose Optimization

Objective: To identify optimal dosing regimens that balance multiple competing objectives (efficacy, safety, tolerability, convenience) using metaheuristic optimization algorithms.

Materials and Equipment:

- Quantitative models linking dose to outcomes (PK/PD models, exposure-response models)

- Objective function defining optimal balance of efficacy and safety

- Computational environment for algorithm implementation (Python, MATLAB, R)

- High-performance computing resources (for complex algorithms or large simulations)

Procedure:

- Problem Formulation:
  - Define decision variables (dose amount, frequency, duration)
  - Specify constraints (maximum allowable dose, practical dosing intervals)
  - Formulate an objective function incorporating efficacy, safety, and other relevant endpoints with appropriate weighting

- Algorithm Selection: Choose an appropriate metaheuristic algorithm based on problem characteristics:
  - For continuous variables with smooth response surfaces: Consider PMA or NPDOA
  - For problems with multiple local optima: Consider IRTH, which has enhanced exploration capabilities
  - For mixed discrete-continuous problems: Consider modified versions of the above algorithms

- Algorithm Configuration:
  - Set population size and initialization strategy
  - Define algorithm-specific parameters (e.g., adjustment factors for PMA, trust domain radius for IRTH)
  - Specify termination criteria (maximum iterations, convergence tolerance)

- Implementation:
  - Code the algorithm structure and objective function evaluation
  - Incorporate constraints using appropriate methods (penalty functions, constraint handling techniques)
  - Implement parallelization, if applicable, for efficient computation

- Execution and Monitoring:
  - Run multiple independent algorithm executions to assess robustness
  - Monitor convergence behavior and solution diversity
  - Track computational efficiency (time to solution, function evaluations)

- Solution Analysis:
  - Identify best-performing dosing regimens
  - Assess sensitivity of solutions to weighting factors in the objective function
  - Evaluate trade-offs between competing objectives using Pareto front analysis (for multi-objective optimization)

Interpretation: The algorithm should identify one or more dosing regimens that optimize the balance between efficacy and safety according to the predefined objective function. Results should be interpreted in the context of model uncertainty and clinical practicalities.
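The protocol steps can be sketched end to end on a small hypothetical problem. The decision variables are dose and dosing interval. A one-compartment intravenous bolus model at steady state gives peak and trough concentrations, and the objective combines efficacy driven by trough concentration, toxicity driven by peak concentration, and a convenience term. A Cmax ceiling is handled with an exterior penalty, and several independent runs check robustness. Differential evolution (DE/rand/1/bin) is used here as a plainly named stand-in optimiser; it is not PMA, NPDOA, or IRTH. All model parameters and weights are hypothetical.

```python
import math
import random

# Hypothetical one-compartment PK (IV bolus, steady state) and PD parameters
CL, V = 5.0, 50.0                      # clearance (L/h) and volume of distribution (L)
K = CL / V                             # elimination rate constant (1/h)
EC50_TROUGH = 2.0                      # trough concentration (mg/L) giving half-maximal efficacy
TC50_PEAK = 20.0                       # peak concentration (mg/L) giving 50% toxicity probability
CMAX_CEILING = 8.0                     # hypothetical safety constraint on peak concentration (mg/L)
BOUNDS = [(10.0, 800.0), (8.0, 48.0)]  # dose (mg) and dosing interval (h)


def steady_state_peak_trough(dose, tau):
    """Steady-state peak and trough for repeated IV bolus doses."""
    cmax = dose / V / (1.0 - math.exp(-K * tau))
    return cmax, cmax * math.exp(-K * tau)


def objective(x):
    """Value to minimise: negative benefit-risk score plus a penalty above the Cmax ceiling."""
    dose, tau = x
    cmax, cmin = steady_state_peak_trough(dose, tau)
    efficacy = cmin / (EC50_TROUGH + cmin)              # Emax model on trough concentration
    toxicity = cmax**3 / (TC50_PEAK**3 + cmax**3)       # Hill model (n = 3) on peak concentration
    convenience = 0.05 * 24.0 / tau                     # mild penalty for frequent dosing
    penalty = 10.0 * max(0.0, cmax - CMAX_CEILING)      # exterior penalty for the constraint
    return -(efficacy - toxicity - convenience) + penalty


def differential_evolution(f, bounds, pop_size=20, gens=150, F=0.7, CR=0.9, seed=0):
    """DE/rand/1/bin minimiser with clipping to box bounds."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    fit = [f(x) for x in pop]
    for _ in range(gens):
        for i in range(pop_size):
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)   # guarantees at least one mutated coordinate
            trial = []
            for d, (lo, hi) in enumerate(bounds):
                if d == j_rand or rng.random() < CR:
                    trial.append(min(max(pop[a][d] + F * (pop[b][d] - pop[c][d]), lo), hi))
                else:
                    trial.append(pop[i][d])
            f_trial = f(trial)
            if f_trial <= fit[i]:
                pop[i], fit[i] = trial, f_trial
    best = min(range(pop_size), key=fit.__getitem__)
    return pop[best], fit[best]


if __name__ == "__main__":
    # Several independent runs to check that the solution is robust to the random seed
    for seed in range(5):
        (dose, tau), value = differential_evolution(objective, BOUNDS, seed=seed)
        print(f"seed {seed}: {dose:.1f} mg every {tau:.1f} h, score {-value:.4f}")
```

Replacing `differential_evolution` with another metaheuristic leaves the problem formulation untouched, which makes like-for-like comparisons of algorithms on the same objective straightforward.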

Successful implementation of algorithm-driven dosage optimization requires both wet-lab and computational resources. The following table details key components of the research toolkit for Project Optimus-aligned dose optimization studies.

Table 3: Essential Research Reagents and Computational Resources for Dosage Optimization

| Category | Item | Specification/Purpose | Application in Dosage Optimization |
|---|---|---|---|
| Bioanalytical Reagents | Ligand-binding assay reagents | Quantification of drug concentrations in biological matrices | PK parameter estimation for exposure-response modeling |
| | Target engagement assays | Measurement of target occupancy or modulation | Pharmacodynamic endpoint for establishing biological activity |
| | Biomarker assay kits | Quantification of predictive/response biomarkers | Patient stratification and efficacy endpoint measurement |
| Computational Resources | PK/PD modeling software | e.g., NONMEM, Monolix, Phoenix WinNonlin | Population PK and exposure-response analysis |
| | Statistical computing environments | e.g., R, Python with relevant libraries | Data analysis, visualization, and algorithm implementation |
| | High-performance computing | Parallel processing capabilities | Execution of complex metaheuristic algorithms and simulations |
| Data Management | Electronic data capture systems | Clinical trial data management | Centralized, high-quality data for analysis |
| | Laboratory information management systems | Bioanalytical data tracking | Integration of biomarker and PK data with clinical endpoints |
| Clinical Assessment Tools | Patient-reported outcome instruments | Validated quality of life and symptom assessments | Incorporation of patient experience into benefit-risk assessment |
| | Standardized toxicity grading | NCI CTCAE or similar criteria | Consistent safety evaluation across dose levels |

The integration of advanced computational algorithms with the regulatory framework of Project Optimus represents a paradigm shift in oncology dose optimization. By linking algorithm performance directly to Project Optimus goals, drug developers can leverage these powerful tools to identify doses that maximize therapeutic benefit while minimizing unnecessary toxicity. The successful implementation of this approach requires appropriate algorithm selection, rigorous validation against relevant metrics, and integration across multiple data types and development phases.

As the field continues to evolve, several areas warrant particular attention: the development of algorithms specifically designed for combination therapies, improved methods for incorporating patient preferences and heterogeneity into optimization frameworks, and strategies for balancing computational complexity with regulatory interpretability. Furthermore, the "No Free Lunch" theorem reminds us that no single algorithm will outperform all others across every optimization problem [1], emphasizing the need for careful algorithm selection tailored to specific drug characteristics and development objectives.

By embracing the framework outlined in these application notes, researchers can systematically apply computational algorithms to address the fundamental challenges of dosage optimization, ultimately leading to better-tolerated, more effective cancer treatments that improve patient outcomes and quality of life.

A Step-by-Step Guide to NPDOA Parameter Configuration and Implementation

In both computational algorithm development and clinical drug development, the core process begins with the precise definition of optimization objectives. For metaheuristic algorithms like the Neural Population Dynamics Optimization Algorithm (NPDOA), objectives are quantified through benchmark functions that test exploration, exploitation, and convergence properties. In clinical development, objectives are defined through carefully selected endpoints that evaluate efficacy, safety, and therapeutic benefit. This document establishes a unified framework for defining optimization objectives across these domains, providing researchers with structured methodologies for parameter tuning and objective validation.

Table 1: Core Optimization Parallels Across Domains

| Domain | Objective Definition | Success Metrics | Constraint Handling |
|---|---|---|---|
| Computational Algorithms | Benchmark functions (CEC 2017/2022) | Convergence speed, accuracy, stability | Boundary constraints, feasibility |
| Clinical Development | Primary/secondary endpoints | Statistical significance, effect size | Safety parameters, eligibility criteria |

Computational Objective Definition: Benchmark Functions and Testing Frameworks

Standardized Benchmark Function Suites

Algorithm performance validation requires comprehensive testing against established benchmark suites that provide standardized optimization objectives. The IEEE CEC 2017 and CEC 2022 test suites contain diverse function types including unimodal, multimodal, hybrid, and composition functions that test different algorithm capabilities [1] [3]. These functions provide known optima against which algorithm performance can be quantitatively measured.
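The CEC suites do not evaluate their base functions at the origin. Each test function is defined in a shifted (and often rotated) form, F(x) = f(M(x - o)) + F*, so that the error F(x) - F* is zero at the known optimum and can be compared across runs and functions. The sketch below illustrates only the shift-and-bias part, using one classical unimodal function (sphere) and one multimodal function (Rastrigin). The shift vector, the bias value of 500, and the helper names are hypothetical; the official CEC 2017/2022 definitions ship their own shift, rotation, and bias data, which this sketch does not reproduce.

```python
import math
import random


def sphere(z):
    """Unimodal base function: single minimum 0 at z = 0."""
    return sum(v * v for v in z)


def rastrigin(z):
    """Multimodal base function: many regularly spaced local minima, global minimum 0 at z = 0."""
    return 10 * len(z) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in z)


def make_shifted(base, shift, bias):
    """Build F(x) = base(x - shift) + bias (CEC-style shift and bias; rotation omitted)."""
    def F(x):
        return base([xi - oi for xi, oi in zip(x, shift)]) + bias
    return F


def error_value(F, x, bias):
    """Accuracy metric for benchmarking: F(x) - F*, which is 0 at the optimum."""
    return F(x) - bias


rng = random.Random(42)
dim = 10
shift = [rng.uniform(-80.0, 80.0) for _ in range(dim)]   # hypothetical shift vector o
F_rastrigin = make_shifted(rastrigin, shift, bias=500.0)  # hypothetical bias F*

print(error_value(F_rastrigin, shift, 500.0))        # 0.0 at the shifted optimum
print(error_value(F_rastrigin, [0.0] * dim, 500.0))  # the origin is no longer optimal
```

Reporting F(x) - F* rather than the raw F(x) is what makes a "final accuracy" metric comparable across functions that carry different bias values.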

NPDOA uses an attractor trend strategy to guide populations toward optimal decisions while maintaining exploration through divergence between neural populations [3]. Benchmark selection should therefore stress the balance between these two behaviors: functions with deceptive optima, high dimensionality, and complex landscapes are particularly informative tests of the exploration-exploitation trade-off that the neural dynamics aim to manage.

Experimental Protocol: Computational Benchmarking

Purpose: To quantitatively evaluate algorithm performance against established benchmarks for parameter tuning and validation.

Materials and Reagents:

- High-performance computing cluster or workstation

- Benchmark function implementation (CEC 2017/2022 suites)

- Algorithm implementation (NPDOA base code)

- Statistical analysis software (R, Python, or MATLAB)

Procedure:

- Function Selection: Select 20-30 functions from CEC 2017 and CEC 2022 test suites representing diverse problem landscapes

- Parameter Initialization: Initialize NPDOA parameters based on biological plausibility (neural population size, coupling strength, projection parameters)

- Iteration Setup: Configure 50 independent runs per function, each with a distinct random seed

- Execution: Run optimization procedures with comprehensive tracking of convergence metrics

- Data Collection: Record final accuracy, convergence speed, success rates, and stability metrics

- Statistical Analysis: Perform Wilcoxon rank-sum and Friedman tests to compare against state-of-the-art algorithms

Validation Criteria:

- Statistical superiority over at least 5 contemporary algorithms

- Consistent performance across function types and dimensionalities

- Robust convergence characteristics in high-dimensional spaces
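Because no NPDOA reference implementation is reproduced here, the following sketch illustrates the protocol's run-and-test loop with stand-in optimizers: uniform random search as a baseline and a simple (1+1) evolution strategy with a 1/5th-success-rule step size. Each configuration receives 50 independent runs with distinct seeds on the sphere function; final best values are compared with a Wilcoxon rank-sum test (normal approximation, no tie correction), and a Friedman statistic is computed over a functions-by-algorithms table. The optimizers, budget, dimension, and function names are illustrative assumptions. For production analyses, SciPy's `scipy.stats.ranksums` and `scipy.stats.friedmanchisquare` provide these tests.

```python
import math
import random
import statistics


def sphere(x):
    """Unimodal test function; minimum 0 at the origin."""
    return sum(v * v for v in x)


def random_search(f, dim, budget, rng, lo=-5.0, hi=5.0):
    """Baseline optimizer: best value among `budget` uniform random samples."""
    return min(f([rng.uniform(lo, hi) for _ in range(dim)]) for _ in range(budget))


def es_1plus1(f, dim, budget, rng, lo=-5.0, hi=5.0, sigma=1.0):
    """Stand-in optimizer: (1+1)-ES with a 1/5th-success-rule step size."""
    x = [rng.uniform(lo, hi) for _ in range(dim)]
    fx = f(x)
    for _ in range(budget - 1):
        y = [min(hi, max(lo, v + rng.gauss(0.0, sigma))) for v in x]
        fy = f(y)
        if fy <= fx:
            x, fx = y, fy
            sigma *= 1.5           # expand the step after a success
        else:
            sigma *= 1.5 ** -0.25  # shrink it after a failure
    return fx


def run_trials(optimizer, f, runs=50, dim=5, budget=1000, base_seed=0):
    """Independent runs, one distinct seed each; returns each run's final best value."""
    return [optimizer(f, dim, budget, random.Random(base_seed + r)) for r in range(runs)]


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def rank_sum_test(a, b):
    """Wilcoxon rank-sum z for sample `a` vs `b` and two-sided p-value
    (normal approximation, no tie correction)."""
    n1, n2 = len(a), len(b)
    w = sum(average_ranks(list(a) + list(b))[:n1])
    mu = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mu) / sd
    return z, math.erfc(abs(z) / math.sqrt(2))


def friedman_statistic(table):
    """Friedman chi-square for rows = functions, columns = algorithms
    (lower values rank better); also returns each algorithm's mean rank."""
    n, k = len(table), len(table[0])
    rank_sums = [0.0] * k
    for row in table:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    chi2 = 12 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)
    return chi2, [r / n for r in rank_sums]


if __name__ == "__main__":
    baseline = run_trials(random_search, sphere)
    candidate = run_trials(es_1plus1, sphere)
    z, p = rank_sum_test(candidate, baseline)
    print(f"median ES = {statistics.median(candidate):.3g}, "
          f"median RS = {statistics.median(baseline):.3g}, z = {z:.2f}, p = {p:.2g}")
```

A negative z means the first sample (here the candidate) tends to have lower, that is better, final errors. In a full study the same loop would be repeated for every selected CEC function and every competing algorithm, with the per-function mean or median errors feeding `friedman_statistic`.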

Clinical Objective Definition: Endpoints and Regulatory Alignment

Endpoint Selection and Validation Frameworks

Clinical optimization requires precise definition of endpoints that reliably measure therapeutic effect. The FDA's Project Optimus has catalyzed a shift away from determining the maximum tolerated dose (MTD) toward dose optimization that balances efficacy and safety [11] [12]. The initiative encourages randomized comparison of benefit-risk profiles across a range of doses before registration trials begin.

Traditional oncology dose finding relied on the 3+3 design, which targets the MTD. This approach has proved suboptimal for targeted therapies and immunotherapies, whose exposure-response relationships may be non-linear or flat [12]. Nearly 50% of patients enrolled in late-stage trials of small-molecule targeted therapies require dose reductions because of intolerable side effects, and the FDA has required additional studies to re-evaluate the dosing of over 50% of recently approved cancer drugs [11].
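The practical consequence of a saturating exposure-response relationship can be seen with a sigmoid Emax model, a standard pharmacometric form. The parameter values below (E0 = 0, Emax = 100, EC50 = 10, Hill coefficient 1) are hypothetical and in arbitrary units; the sketch only shows that once exposure is well above EC50, further increases, such as escalating toward the MTD, yield little additional effect.

```python
def emax_effect(conc, e0=0.0, emax=100.0, ec50=10.0, hill=1.0):
    """Sigmoid Emax model: E = E0 + Emax * C^h / (EC50^h + C^h).

    All default parameter values are hypothetical and in arbitrary units.
    """
    c_h = conc ** hill
    return e0 + emax * c_h / (ec50 ** hill + c_h)


# Each step doubles exposure; the incremental gain shrinks as C moves above EC50.
previous = None
for conc in (5, 10, 20, 40, 80):
    effect = emax_effect(conc)
    gain = "" if previous is None else f" (+{effect - previous:.1f})"
    print(f"C={conc:>3}: E={effect:.1f}{gain}")
    previous = effect
```

With these values, doubling exposure from 40 to 80 adds about 8.9 units of effect, compared with about 16.7 units for the doubling from 10 to 20.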

Quantitative Risk Factors for Dose Optimization

A recent analysis of oncology drugs approved between 2010 and 2023 identified specific risk factors associated with postmarketing requirements or commitments for dose optimization [12]. Multivariate logistic regression revealed three significant predictors:

Table 2: Risk Factors for Dose Optimization Requirements in Oncology Drugs

| Risk Factor | Adjusted Odds Ratio | Clinical Implications |
|---|---|---|
| MTD as labeled dose | 7.14 (p = 0.017) | Higher rates of adverse reactions leading to treatment discontinuation |
| Exposure-safety relationship established | 6.67 (p = 0.024) | Clear correlation between drug exposure and safety concerns |
| Increased percentage of adverse reactions leading to treatment discontinuation | 1.07 (p = 0.017) | Per 1% increase in discontinuation due to adverse events |
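Because the third predictor is continuous, its odds ratio applies per 1-percentage-point increase. Under the logistic model that produces adjusted odds ratios, the effect compounds multiplicatively on the odds scale, so a k-point increase corresponds to an odds ratio of 1.07^k. The sketch below works through a 10-point increase; the 30% baseline probability is a hypothetical value, not a figure from the cited analysis.

```python
def scale_odds_ratio(or_per_unit, units):
    """Odds ratio for a `units`-step change when log-odds are linear in the predictor."""
    return or_per_unit ** units


def apply_odds_ratio(p_baseline, odds_ratio):
    """Probability implied by multiplying the baseline odds by `odds_ratio`."""
    odds = p_baseline / (1.0 - p_baseline)
    new_odds = odds * odds_ratio
    return new_odds / (1.0 + new_odds)


or_10 = scale_odds_ratio(1.07, 10)     # 10-point increase in AE-related discontinuation
p_new = apply_odds_ratio(0.30, or_10)  # hypothetical 30% baseline probability
print(f"OR for +10 points: {or_10:.2f}; implied probability: {p_new:.2f}")
```

The odds roughly double (1.07^10 ≈ 1.97), which moves the hypothetical 30% baseline probability to about 46%. An odds ratio is not a risk ratio: the implied change in probability depends on the baseline.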

Experimental Protocol: Clinical Dose Optimization

Purpose: To determine the optimal dose balancing efficacy and safety for novel therapeutic agents.

Materials and Reagents:

- Investigational drug product (multiple dose levels)

- Clinical trial supply chain infrastructure

- Electronic data capture system

- Biomarker assay platforms (e.g., ctDNA measurement)

- Pharmacokinetic sampling equipment

Procedure:

- Dose Selection: Identify 3-4 candidate doses based on phase Ib data and modeling

- Trial Design: Implement randomized dose comparison in expansion cohorts

- Endpoint Assessment: Evaluate efficacy endpoints (ORR, PFS) and safety endpoints (AE profiles, discontinuation rates)

- Biomarker Integration: Incorporate biomarker data (e.g., ctDNA dynamics) to assess biological activity

- Exposure-Response Analysis: Characterize relationships between drug exposure, efficacy, and safety

- Benefit-Risk Integration: Apply clinical utility indices to quantitatively compare dose levels

Validation Criteria:

- Dose with optimal benefit-risk profile across multiple endpoints

- Consistent exposure-response relationships across patient subgroups

- Minimal requirement for dose modifications due to toxicity
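The benefit-risk integration step can be illustrated with a simple additive clinical utility index, CUI = sum of w_i * u_i, where each u_i maps one endpoint onto a 0 to 1 utility scale and the weights w_i sum to 1. The dose summaries, weights, and utility ranges below are hypothetical, and linear utilities are an assumption; in practice, weights and utility shapes are usually elicited from the clinical team.

```python
# Hypothetical per-dose summaries; not data from any real trial.
DOSES = {
    100: {"orr": 0.30, "g3_ae": 0.15, "discontinuation": 0.05},
    200: {"orr": 0.40, "g3_ae": 0.25, "discontinuation": 0.08},
    400: {"orr": 0.42, "g3_ae": 0.45, "discontinuation": 0.20},
}

# Hypothetical weights (summing to 1) and the endpoint value mapped to utility 1 (ORR)
# or utility 0 (toxicity endpoints); linear utilities are an assumption.
WEIGHTS = {"orr": 0.5, "g3_ae": 0.3, "discontinuation": 0.2}
SCALE = {"orr": 0.50, "g3_ae": 0.50, "discontinuation": 0.25}


def utility(endpoint, value):
    """Linear 0-1 utility: higher ORR is better; lower toxicity rates are better."""
    scaled = max(0.0, min(1.0, value / SCALE[endpoint]))
    return scaled if endpoint == "orr" else 1.0 - scaled


def clinical_utility_index(summary):
    """Weighted additive CUI across endpoints."""
    return sum(WEIGHTS[e] * utility(e, v) for e, v in summary.items())


scores = {dose: clinical_utility_index(s) for dose, s in DOSES.items()}
best_dose = max(scores, key=scores.get)
for dose, score in scores.items():
    print(f"{dose} mg: CUI = {score:.3f}")
print(f"Highest CUI: {best_dose} mg")
```

With these inputs, 200 mg scores highest (0.686, versus 0.670 at 100 mg and 0.490 at 400 mg), even though 400 mg has the highest ORR: its toxicity utilities pull the index down.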

Integrated Optimization Strategies

Adaptive Trial Designs for Efficient Optimization

Seamless clinical trial designs combine traditionally distinct development phases, allowing faster enrollment and decision-making and the accumulation of long-term safety and efficacy data to better inform dosing decisions [11]. A similar adaptive logic applies to NPDOA parameter tuning, where parameters chosen for the initial population dynamics may need mid-study adjustment based on interim performance.

The integration of real-world data (RWD) with causal machine learning (CML) techniques addresses limitations of traditional randomized controlled trials by providing broader insight into treatment effects across diverse populations [21]. These approaches can identify patient subgroups with varying responses to specific treatments, enabling more precise optimization objectives.

Protocol Complexity Management

Effective optimization requires management of protocol complexity, which directly impacts trial execution efficiency. The Protocol Complexity Tool (PCT) provides a framework with 26 questions across 5 domains (operational execution, regulatory oversight, patient burden, site burden, and study design) to quantify and manage complexity [22]. Implementation has demonstrated statistically significant correlations between complexity scores and key trial metrics:

- 75% site activation: rho = 0.61; p = 0.005

- 25% participant recruitment: rho = 0.59; p = 0.012

After complexity-reduction strategies were implemented, 75% of trials showed reduced complexity scores, primarily in the operational execution and site burden domains [22].
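The reported rho values are Spearman rank correlations between complexity scores and trial metrics. A minimal sketch of that computation follows, with a permutation test for the p-value. The source does not state which test produced its p-values, so the permutation approach is an assumption, and the scores and the trial metric below (days to 75% site activation) are hypothetical.

```python
import math
import random


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def permutation_p(x, y, n_perm=5000, seed=0):
    """Two-sided permutation p-value for rho, with the add-one correction."""
    rng = random.Random(seed)
    observed = abs(spearman_rho(x, y))
    shuffled = list(y)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if abs(spearman_rho(x, shuffled)) >= observed - 1e-12:
            hits += 1
    return (hits + 1) / (n_perm + 1)


# Hypothetical data: complexity score per trial and days to 75% site activation.
complexity = [12, 18, 9, 22, 15, 27, 11, 20, 16, 24]
days_to_activation = [140, 175, 150, 210, 160, 240, 120, 190, 185, 200]
rho = spearman_rho(complexity, days_to_activation)
print(f"rho = {rho:.2f}, permutation p = {permutation_p(complexity, days_to_activation):.4f}")
```

Ranking before correlating makes rho sensitive only to monotone association, which suits ordinal complexity scores better than a raw Pearson correlation would.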

The Scientist's Toolkit: Essential Research Reagents

Table 3: Key Research Reagents and Computational Tools

| Item | Function | Application Context |
|---|---|---|
| CEC 2017/2022 Benchmark Suites | Standardized test functions for algorithm validation | Computational optimization |
| Protocol Complexity Tool (PCT) | 26-item assessment across 5 complexity domains | Clinical trial design |
| Circulating Tumor DNA (ctDNA) Assays | Dynamic biomarker for tumor response assessment | Oncology dose optimization |
| Population PK/PD Modeling Software | Quantitative analysis of exposure-response relationships | Clinical pharmacology |
| Clinical Utility Index Framework | Multi-criteria decision analysis for benefit-risk assessment | Dose selection |
| Causal Machine Learning Algorithms | Treatment effect estimation from real-world data | Comparative effectiveness research |

This document establishes a unified framework for optimization objective definition across computational and clinical domains. For NPDOA parameter tuning, this translates to careful selection of benchmark functions that test specific algorithm capabilities, complemented by rigorous statistical validation against state-of-the-art alternatives. In clinical development, the framework emphasizes dose optimization based on comprehensive benefit-risk assessment across multiple endpoints, moving beyond the traditional MTD paradigm.

The convergence of computational and clinical optimization approaches enables more efficient drug development, with computational methods informing clinical trial design and clinical data validating computational predictions. This integrated approach ultimately accelerates the development of safe, effective therapies through precisely defined and rigorously validated optimization objectives.

The Neural Population Dynamics Optimization Algorithm (NPDOA) is a metaheuristic algorithm that models the dynamics of neural populations during cognitive activities to solve complex optimization problems [1]. As with all metaheuristic algorithms, its performance is profoundly influenced by the specific values of its internal control parameters. Sensitivity analysis is the study of how uncertainty in the output of a model can be apportioned to different sources of uncertainty in the model input [23]. For NPDOA, this translates to understanding how variations in its parameters affect key performance metrics such as convergence speed, solution accuracy, and robustness across different problem domains. This systematic identification of influential parameters provides crucial insights for developing effective parameter tuning guidelines, ensuring that researchers and practitioners can reliably extract high performance from the algorithm without exhaustive manual tuning. The "No Free Lunch" theorem establishes that no single algorithm performs best across all optimization problems [1], making parameter sensitivity analysis essential for adapting NPDOA to specific application domains, including those in pharmaceutical research and drug development.

Sensitivity Analysis Methodologies for Metaheuristic Algorithms

Sensitivity analysis techniques can be broadly categorized into local and global methods, each with distinct advantages for analyzing algorithm parameters.

Local vs. Global Sensitivity Analysis

Local sensitivity analysis is performed by varying model parameters around specific reference values, with the goal of exploring how small input perturbations influence model performance. While computationally efficient, this approach has significant limitations for analyzing metaheuristics like NPDOA, as it explores only a small region of the parameter space and cannot properly account for interactions between parameters [23]. If the model's factors interact, local sensitivity analysis will underestimate their importance. Given that metaheuristic algorithms are inherently nonlinear, local methods are insufficient for comprehensive parameter analysis.
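A two-parameter toy function makes this limitation concrete. For f(x, y) = x * y with x and y independent and uniform on [-1, 1], one-at-a-time derivatives at the reference point (0, 0) are exactly zero, so a local analysis would label both inputs unimportant. A variance-based global analysis shows the opposite: analytically, neither input has a first-order (main) effect, but each has a total-order index of 1, because all output variance comes from their interaction. The function is a hypothetical stand-in for an NPDOA performance metric, and the estimators are the Saltelli first-order and Jansen total-order Monte Carlo formulas.

```python
import random


def f_interaction(x, y):
    """Toy stand-in for a performance metric driven purely by an x-y interaction."""
    return x * y


def local_oat_sensitivity(f, point, h=1e-4):
    """One-at-a-time central-difference derivatives at a reference point."""
    sens = []
    for i in range(len(point)):
        up, down = list(point), list(point)
        up[i] += h
        down[i] -= h
        sens.append((f(*up) - f(*down)) / (2 * h))
    return sens


def global_indices(f, dim, n=20000, seed=1):
    """Monte Carlo first-order (Saltelli) and total-order (Jansen) indices
    for independent Uniform(-1, 1) inputs."""
    rng = random.Random(seed)
    A = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(n)]
    B = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(n)]
    fA = [f(*a) for a in A]
    fB = [f(*b) for b in B]
    pooled = fA + fB
    mean = sum(pooled) / len(pooled)
    var = sum((v - mean) ** 2 for v in pooled) / (len(pooled) - 1)
    first, total = [], []
    for i in range(dim):
        # A_B^i: rows of A with column i replaced by the matching column of B
        fABi = [f(*(a[:i] + [b[i]] + a[i + 1:])) for a, b in zip(A, B)]
        first.append(sum(fb * (fab - fa) for fa, fb, fab in zip(fA, fB, fABi)) / n / var)
        total.append(sum((fa - fab) ** 2 for fa, fab in zip(fA, fABi)) / (2 * n) / var)
    return first, total


print("local at (0, 0):", local_oat_sensitivity(f_interaction, [0.0, 0.0]))
first, total = global_indices(f_interaction, dim=2)
print("first-order:", [round(s, 2) for s in first])
print("total-order:", [round(t, 2) for t in total])
```

Away from the origin, for example at (0.5, 0.5), the local derivatives become 0.5 each, so the local verdict depends entirely on the chosen reference point.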

Global sensitivity analysis varies uncertain factors within the entire feasible space, revealing the global effects of each parameter on the model output, including any interactive effects [23]. This approach is essential for NPDOA, as it allows researchers to understand how parameters interact across the algorithm's execution. Global methods are preferred for models that cannot be proven linear, making them ideally suited for the complex, nonlinear dynamics present in population-based optimization algorithms. The three primary application modes for global sensitivity analysis include:

- Factor Prioritization: Identifying which uncertain parameters have the greatest impact on output variability.

- Factor Fixing: Determining which parameters have negligible effects and can be fixed at nominal values.