Multimodal LLMs for Brain MRI Sequence Classification: A Comprehensive Review for Biomedical Researchers

Abstract

This article provides a comprehensive analysis of the application of Multimodal Large Language Models (MLLMs) in classifying brain MRI sequences, a critical task for medical imaging workflows and AI-driven diagnostics. We explore the foundational principles enabling MLLMs to process and interpret radiological images, detail the current methodologies and real-world applications being developed, and critically examine performance benchmarks from recent comparative studies. The content further addresses significant challenges such as model hallucinations and sequence misclassifications, presenting emerging optimization strategies. Designed for researchers, scientists, and drug development professionals, this review synthesizes validation data and outlines a forward-looking perspective on the integration of MLLMs into clinical and biomedical research pipelines, emphasizing the balance between technological potential and the necessity for robust, clinically safe implementation.

The Rise of Multimodal AI in Radiology: Core Principles and Clinical Promise

Multimodal Large Language Models (MLLMs) represent a significant evolution in artificial intelligence, extending the capabilities of text-only large language models (LLMs) to process and integrate diverse data types. In clinical medicine, particularly in visually intensive disciplines like radiology, MLLMs can concurrently process various imaging types (e.g., CT, MRI, X-ray) alongside textual data such as radiology reports and clinical notes from electronic health records (EHRs) [1]. Their core capability lies in integrating and aligning this heterogeneous information across modalities, often mapping them into a shared representational space [1]. This synergy allows for a more comprehensive understanding than unimodal approaches permit, enabling complex cross-modal tasks such as radiology report generation (RRG) from images and visual question answering (VQA) that incorporates both imaging and clinical context [1]. This document frames the exploration of MLLMs within the specific research context of brain MRI sequence classification, providing application notes and detailed experimental protocols for researchers and drug development professionals.

Technical Foundations of MLLMs

Core Architectural Components

A typical MLLM architecture comprises several key components [1]:

- Modality-Specific Encoders: These transform complex data types—such as images, audio, and video—into simpler, meaningful representations. Pre-trained models, like Contrastive Language-Image Pre-training (CLIP), are often employed to align visual data with corresponding textual descriptions [1].

- Multimodal Connector: This is a learnable interface that bridges the modality gap between non-text data (e.g., images) and natural language. It translates the outputs of specialized encoders into a format interpretable by the LLM. Connector types include projection-based (using multi-layer perceptrons), query-based (using trainable tokens), and fusion-based (using cross-attention mechanisms) [1].

- Pre-trained LLM: This serves as the cognitive backbone, providing powerful reasoning capabilities acquired from training on vast text corpora. The LLM processes the fused multimodal information to generate coherent responses [1].
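A projection-based connector is the simplest of the three connector types. The sketch below is a minimal, dependency-free illustration assuming a two-layer MLP with a GELU activation, similar in spirit to the projector used in LLaVA-1.5; the class and function names, toy dimensions, and random initialization are illustrative assumptions rather than the design of any model cited here.

```python
import math
import random


def gelu(x: float) -> float:
    """GELU activation (tanh approximation), common in transformer MLPs."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def linear(x, weight, bias):
    """Compute weight @ x + bias, where weight is a list of output rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def init_layer(in_dim, out_dim, rng):
    """Small random weights and zero bias (illustrative initialization)."""
    weight = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)] for _ in range(out_dim)]
    return weight, [0.0] * out_dim


class ProjectionConnector:
    """Hypothetical two-layer MLP mapping visual tokens into the LLM embedding space."""

    def __init__(self, vision_dim: int, llm_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.fc1 = init_layer(vision_dim, llm_dim, rng)
        self.fc2 = init_layer(llm_dim, llm_dim, rng)

    def __call__(self, visual_tokens):
        # Each visual token (one per image patch) is projected independently,
        # so the number of tokens is preserved and only the width changes.
        out = []
        for token in visual_tokens:
            hidden = [gelu(h) for h in linear(token, *self.fc1)]
            out.append(linear(hidden, *self.fc2))
        return out


connector = ProjectionConnector(vision_dim=8, llm_dim=16)
tokens = [[0.1 * i] * 8 for i in range(4)]  # 4 toy visual tokens of width 8
projected = connector(tokens)
print(len(projected), len(projected[0]))  # 4 16
```

Because each visual token is projected independently, this kind of connector changes only the width of the representation, not the number of visual tokens handed to the LLM; query-based connectors differ precisely in that they compress the token count.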

Standardized Training Pipeline

MLLMs are typically developed through a sequential, multi-stage training pipeline [1]:

- Pre-training: A multimodal connector learns to align visual and textual representations using large-scale image-text pairs.

- Instruction Tuning: The model is fine-tuned with diverse natural language instructions and multimodal inputs to reliably follow complex directives.

- Alignment Tuning: The model's outputs are optimized to better reflect human preferences, often through reinforcement learning from human feedback (RLHF), to improve response quality and reliability and reduce hallucinations [1].

Quantitative Performance in Brain MRI Sequence Classification

Evaluating the ability of MLLMs to recognize fundamental image characteristics, such as MRI sequences, is a critical first step before deploying them in complex clinical scenarios. A recent comparative analysis tested three advanced MLLMs—ChatGPT-4o, Claude 4 Opus, and Gemini 2.5 Pro—on a set of 130 brain MRI images without pathological findings, representing 13 standard MRI series [2]. The models were prompted in a zero-shot setting to identify the modality, anatomical region, imaging plane, contrast-enhancement status, and specific MRI sequence [2].

Table 1: Performance Accuracy of MLLMs on Basic Brain MRI Identification Tasks (n=130 images)

| Task | ChatGPT-4o | Claude 4 Opus | Gemini 2.5 Pro |
|---|---|---|---|
| Modality identification | 130/130 (100.00%) | 130/130 (100.00%) | 130/130 (100.00%) |
| Anatomical region recognition | 130/130 (100.00%) | 130/130 (100.00%) | 130/130 (100.00%) |
| Imaging plane classification | 130/130 (100.00%) | 129/130 (99.23%) | 130/130 (100.00%) |
| Contrast-enhancement status | 128/130 (98.46%) | 124/130 (95.38%) | 128/130 (98.46%) |
| MRI sequence classification | 127/130 (97.69%) | 95/130 (73.08%) | 121/130 (93.08%) |

The data reveal that while all models excelled at basic recognition tasks, performance varied significantly (p < 0.001) on the more complex task of specific MRI sequence classification, which was the study's primary outcome [2]. ChatGPT-4o achieved the highest accuracy (97.69%), followed by Gemini 2.5 Pro (93.08%) and Claude 4 Opus (73.08%) [2]. The most frequent misclassifications involved Fluid-attenuated Inversion Recovery (FLAIR) sequences, which were often confused with T1-weighted or diffusion-weighted sequences [2]. Claude 4 Opus showed particular difficulty with Susceptibility-Weighted Imaging (SWI) and Apparent Diffusion Coefficient (ADC) sequences [2]. Notably, Gemini 2.5 Pro exhibited occasional hallucinations, generating irrelevant clinical details such as "hypoglycemia" and "Susac syndrome," which underscores a significant risk for clinical use [2].

Other studies corroborate the potential of LLMs in this domain. A GPT-4-based classifier outperformed both convolutional neural network (CNN) and string-matching methods on 1490 brain MRI sequences, achieving an accuracy of 0.83 with high sensitivity and specificity [3]. Furthermore, addressing the challenge of domain shift—where models perform poorly on data that deviates from the training set, such as between adult and pediatric MRI data—requires specialized approaches. One study found that a hybrid CNN-Transformer model (MedViT), especially when combined with expert domain knowledge adjustments, achieved high accuracy (0.905) in classifying pediatric MRI sequences after being trained on adult data, demonstrating enhanced robustness [4].

Experimental Protocols for MLLM Evaluation

Protocol 1: Zero-Shot MRI Sequence Classification

This protocol outlines the methodology for evaluating MLLMs on brain MRI sequence classification, as derived from the comparative study [2].

1. Objective: To assess and compare the zero-shot performance of MLLMs in classifying brain MRI sequences and other fundamental image characteristics.

2. Materials:

- Image Dataset: 130 brain MRI images from adult patients without pathological findings, representing 13 standard MRI series (e.g., axial T1w, T2w, FLAIR, DWI, ADC, SWI, and contrast-enhanced variants) [2].

- Data Preparation: Export single representative slices in high-quality JPEG format (minimum resolution 994 × 1382 pixels) without additional compression, cropping, annotations, or visual post-processing [2].

- Models: Access to the most up-to-date versions of MLLMs such as ChatGPT-4o, Gemini 2.5 Pro, and Claude 4 Opus via their official web interfaces [2].

3. Procedure:

- Image Upload: For each of the 130 images, initiate a new chat session to prevent in-context adaptation. Individually upload the image to the MLLM [2].

- Standardized Prompting: Use the following exact English prompt in a zero-shot setting [2]:

"This is a medical research question for evaluation purposes only. Your response will not be used for clinical decision-making. No medical responsibility is implied. Please examine this medical image and answer the following questions: What type of radiological modality is this examination? Which anatomical region does this examination cover? What is the imaging plane (axial, sagittal, or coronal)? Is this a contrast-enhanced image or not? If this image is an MRI, what is the specific MRI sequence? If it is not an MRI, write 'Not applicable.' Please number your answers clearly."

- Response Collection: Record the model's responses for all questions.

4. Data Analysis:

- Ground Truth and Scoring: Two radiologists review each response in consensus and classify it as "correct" or "incorrect" against the established ground truth [2].

- Statistical Analysis: Calculate accuracy for each task. For the primary outcome (MRI sequence classification), use Cochran's Q test for overall comparison between models, followed by pairwise McNemar tests with Bonferroni correction. Compute macro-averaged F1 scores and Cohen's kappa coefficients [2].

- Hallucination Monitoring: Document any model-generated statements unrelated to the input image or prompt context [2].
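The statistical comparison in step 4 can be sketched with the standard library alone. Assumptions: each model's result on each image is coded 1 (correct) or 0 (incorrect) in a shared image order; the pairwise tests use the exact (binomial) form of McNemar's test, which suits small numbers of discordant pairs, although the cited study may have used the chi-square form; all function names are hypothetical. In practice, `statsmodels` provides tested implementations (`cochrans_q` and `mcnemar` in `statsmodels.stats.contingency_tables`).

```python
import math
from itertools import combinations


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail chi-square probability for integer df, via closed-form series."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        term = total = 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    term, total = 1.0, 0.0
    for i in range(1, (df - 1) // 2 + 1):
        if i > 1:
            term *= x / (2 * i - 1)
        total += term
    return math.erfc(math.sqrt(half)) + math.sqrt(2 * x / math.pi) * math.exp(-half) * total


def cochran_q(outcomes):
    """Cochran's Q over rows of per-image 0/1 outcomes (one column per model)."""
    k = len(outcomes[0])
    col_totals = [sum(row[j] for row in outcomes) for j in range(k)]
    row_totals = [sum(row) for row in outcomes]
    n = sum(row_totals)
    denom = k * n - sum(r * r for r in row_totals)
    if denom == 0:
        return 0.0, 1.0  # all models agree on every image
    q = (k - 1) * (k * sum(c * c for c in col_totals) - n * n) / denom
    return q, chi2_sf(q, k - 1)


def mcnemar_exact(a, b):
    """Two-sided exact McNemar test for two paired 0/1 outcome vectors."""
    only_a = sum(1 for x, y in zip(a, b) if x and not y)
    only_b = sum(1 for x, y in zip(a, b) if y and not x)
    n = only_a + only_b
    if n == 0:
        return 1.0
    tail = sum(math.comb(n, i) for i in range(min(only_a, only_b) + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def pairwise_mcnemar_bonferroni(outcomes, names):
    """Bonferroni-adjusted McNemar p-values for every pair of models."""
    pairs = list(combinations(range(len(names)), 2))
    adjusted = {}
    for i, j in pairs:
        p = mcnemar_exact([row[i] for row in outcomes], [row[j] for row in outcomes])
        adjusted[(names[i], names[j])] = min(1.0, p * len(pairs))
    return adjusted


# Toy example: 10 images, 3 models (1 = correct)
rows = [[1, 1, 1]] * 5 + [[1, 1, 0]] * 5
print(cochran_q(rows))  # Q = 10.0, p = exp(-5), about 0.0067
print(pairwise_mcnemar_bonferroni(rows, ["A", "B", "C"]))
```

With three models, Cochran's Q has 2 degrees of freedom, and the Bonferroni correction multiplies each pairwise p-value by the three comparisons.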

Protocol 2: Mitigating Domain Shift in Sequence Classification

This protocol addresses the challenge of applying a model trained on one dataset (e.g., adult MRIs) to another with different characteristics (e.g., pediatric MRIs) [4].

1. Objective: To enhance the robustness of a pre-trained MRI sequence classification model when applied to a new domain (e.g., pediatric data) using a hybrid architecture and expert domain knowledge.

2. Materials:

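- Datasets: A labeled source-domain dataset (e.g., adult glioblastoma cohorts) and a target-domain dataset with a held-out test set (e.g., pediatric CNS tumor data such as MNP 2.0) [4].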

- Models: A pre-trained hybrid CNN-Transformer model like MedViT, which is designed for medical images and expects 3-channel RGB input [4].

3. Procedure:

- Model Pre-training: Train the MedViT model on the source (adult) dataset across all available MRI sequence classes (e.g., T1, T2, CT1, FLAIR, ADC, SWI, DWI variants, T2*/DSC) [4].

- Baseline Testing: Evaluate the pre-trained model's performance directly on the target (pediatric) test dataset to establish a baseline performance under domain shift [4].

- Expert Domain Knowledge Adjustment: Analyze the target dataset to identify which sequence labels from the source are present or absent. Adjust the model's final classification layer or decision-making process to ignore labels absent from the target dataset, effectively re-aligning the classification task [4].

- Final Evaluation: Re-evaluate the adjusted model's performance on the target dataset [4].

4. Data Analysis:

- Report accuracy with 95% confidence intervals.

- Compare the performance of the hybrid model (MedViT) against benchmark models (e.g., ResNet-18) with and without expert adjustment to quantify improvement [4].
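One way to implement the expert-knowledge adjustment in the procedure above is to mask, at inference time, the logits of classes that an expert has confirmed are absent from the target domain, then renormalize over the remaining classes. The sketch below assumes that approach; the cited study may instead have modified the final layer itself. The label list follows the classes named in the procedure (with the DWI variants collapsed into one class for brevity), and which labels are absent in the example is hypothetical.

```python
import math

# Source-domain label set from the procedure above; order must match the logits.
SOURCE_LABELS = ("T1", "T2", "CT1", "FLAIR", "ADC", "SWI", "DWI", "T2*/DSC")


def restricted_softmax(logits, source_labels, target_labels):
    """Softmax over only the classes expected to occur in the target domain."""
    allowed = set(target_labels)
    unknown = allowed - set(source_labels)
    if unknown:
        raise ValueError(f"target labels not known to the model: {sorted(unknown)}")
    kept = [(label, s) for label, s in zip(source_labels, logits) if label in allowed]
    peak = max(s for _, s in kept)  # subtract the max for numerical stability
    exps = {label: math.exp(s - peak) for label, s in kept}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def predict_in_target(logits, target_labels, source_labels=SOURCE_LABELS):
    """Most probable class after excluding labels absent from the target data."""
    probs = restricted_softmax(logits, source_labels, target_labels)
    return max(probs, key=probs.get)


logits = [0.1, 0.5, 0.2, 1.0, 0.0, 3.0, 0.3, 2.0]
print(predict_in_target(logits, SOURCE_LABELS))  # SWI (unrestricted)
# Hypothetical target cohort without SWI or T2*/DSC series:
print(predict_in_target(logits, ["T1", "T2", "CT1", "FLAIR", "ADC", "DWI"]))  # FLAIR
```

Masking leaves the trained weights untouched, so the same model can be re-targeted to another cohort simply by changing the allowed label list.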

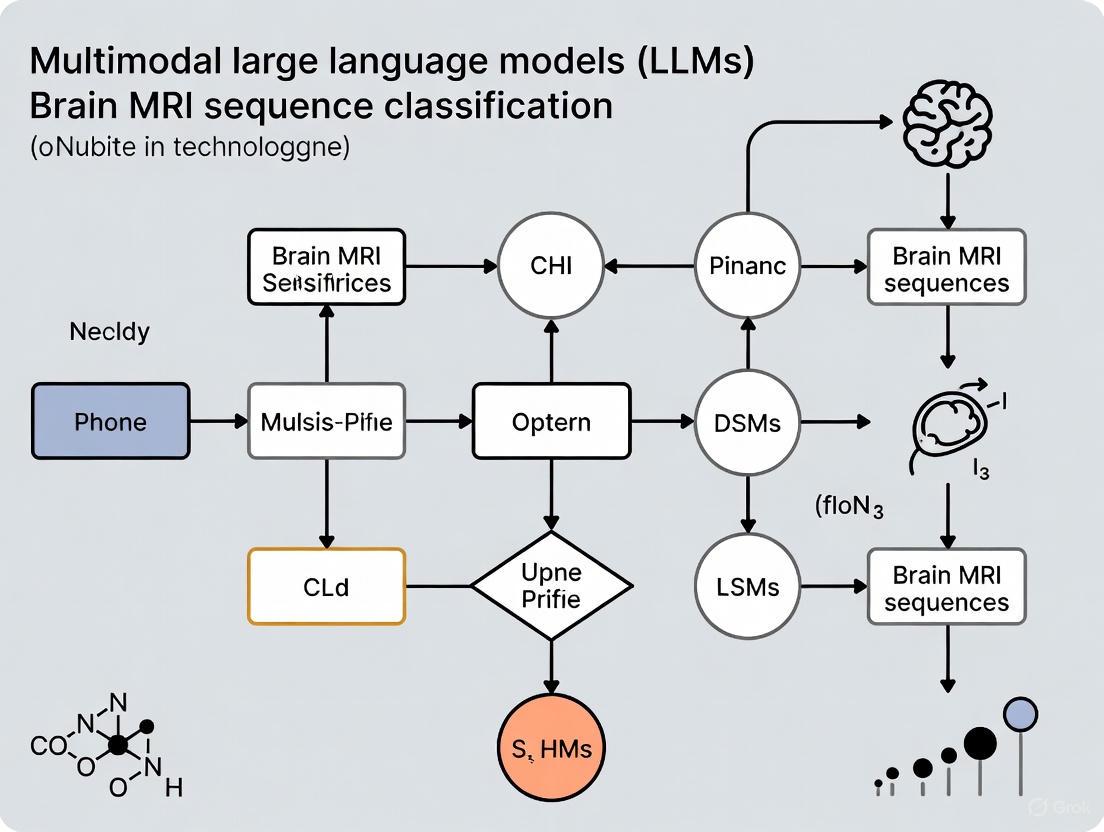

Workflow Visualization for MLLM Evaluation and Application

The following diagram illustrates the logical workflow for evaluating an MLLM on MRI sequence classification, as detailed in the experimental protocols.

The Scientist's Toolkit: Key Research Reagents and Materials

Table 2: Essential Materials for MLLM Research in Brain MRI Classification

| Item Name | Function/Description | Example/Reference |
|---|---|---|
| Multimodal LLMs | Core AI models capable of processing both images and text for classification and query-answering tasks. | ChatGPT-4o (OpenAI), Gemini 2.5 Pro (Google), Claude 4 Opus (Anthropic) [2]. |
| Curated Brain MRI Datasets | High-quality, labeled image sets for model training, testing, and benchmarking. Essential for evaluating domain shift. | Adult glioblastoma cohorts [4], pediatric CNS tumor datasets (e.g., MNP 2.0) [4], the Natural Scenes Dataset (NSD) for fundamental research [5]. |
| Expert Annotators | Radiologists who provide ground truth labels for images and evaluate model outputs, crucial for validation and identifying hallucinations. | Board-certified radiologists performing consensus review [2] [4]. |
| Hybrid Deep Learning Models | Specialized neural networks that combine architectural strengths (e.g., CNNs and Transformers) to handle medical image specifics and domain shift. | MedViT (CNN-Transformer hybrid) [4]. |
| Statistical Analysis Software | Tools for performing rigorous statistical comparisons of model performance and calculating reliability metrics. | SPSS, Python (with scikit-learn for stratified data splitting) [2] [4]. |
| Adherence to WCAG Contrast Guidelines | A framework for ensuring sufficient visual contrast in generated diagrams and outputs, promoting accessibility and clarity. | WCAG 2.1, contrast ratio of at least 4.5:1 for normal text [6] [7]. |
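The 4.5:1 threshold in the last row refers to the WCAG 2.x contrast ratio, which is computed from the relative luminance of the two colors. The sketch below follows those published definitions (sRGB linearization, luminance weights 0.2126/0.7152/0.0722, and ratio (L1 + 0.05) / (L2 + 0.05)); the function names are hypothetical, and only the level AA threshold for normal text is checked.

```python
def _linearize(channel_8bit: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb) -> float:
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg, bg) -> float:
    """Ratio of the lighter to the darker luminance, each offset by 0.05."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(fg, bg) -> bool:
    return contrast_ratio(fg, bg) >= 4.5


# Mid-grey diagram labels on a white background
print(round(contrast_ratio((0x76, 0x76, 0x76), (255, 255, 255)), 2))  # 4.54
print(passes_aa_normal_text((0x77, 0x77, 0x77), (255, 255, 255)))     # False
```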

The integration of vision and language processing represents a paradigm shift in medical image analysis, particularly for complex tasks such as brain MRI sequence classification. Modern Multimodal Large Language Models (MLLMs) architecturally unify visual information from medical scans with textual context for sophisticated diagnostic reasoning. These systems fundamentally rely on three core components: a vision encoder that processes pixel-level image data, a large language model (LLM) that handles textual understanding and generation, and a connector that creates a semantic bridge between these two modalities. The precision of this integration is especially critical in neuroimaging, where subtle variations in MRI sequences—such as T1-weighted, T2-weighted, FLAIR, and diffusion-weighted imaging—carry distinct clinical significance for diagnosing neurological conditions, brain tumors, and traumatic injuries [2] [8].

Architecturally, MLLMs face the significant challenge of overcoming the inherent modality gap between dense, high-dimensional image data and discrete textual tokens. Current research explores various fusion strategies—early, intermediate, and late fusion—to optimize alignment between visual features and linguistic concepts [9]. In specialized medical applications, these architectures are increasingly evaluated on comprehensive benchmarks like OmniBrainBench, which assesses model performance across 15 brain imaging modalities and 15 multi-stage clinical tasks, from anatomical identification to therapeutic planning [10]. The continuous refinement of these architectural blueprints is essential for developing clinically reliable AI systems that can assist researchers and clinicians in complex diagnostic workflows.

Core Architectural Components

Vision Encoders

Vision encoders serve as the foundational component for processing visual input, transforming raw image pixels into structured, high-dimensional feature representations. In medical MLLMs, vision encoders are typically built upon pre-trained models like Vision Transformers (ViTs) or Convolutional Neural Networks (CNNs), which extract hierarchically organized features from medical images [8] [9]. For brain MRI analysis, specialized encoders such as BioMedCLIP—a vision transformer pre-trained on 15 million biomedical image-text pairs from the PMC dataset—demonstrate enhanced performance by leveraging domain-specific pre-training. This specialized training allows the encoder to recognize clinically relevant patterns in structural MRI data, which is particularly valuable when working with limited annotated medical datasets [8].

The technical implementation often involves processing high-resolution medical images by dividing them into patches, which are then linearly embedded and processed through transformer blocks with self-attention mechanisms. Advanced architectures employ techniques like the AnyRes strategy, which handles variable image resolutions through tiled views with resolution-aware aggregation, crucial for analyzing medical images with diverse aspect ratios and resolutions [11]. For instance, a SigLIP2 vision encoder with patch-16 configuration can process a 384×384 pixel input to produce 576 visual tokens, effectively balancing computational efficiency with feature richness for complex MRI sequence recognition tasks [11].
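The 576-token figure follows directly from the patch arithmetic: a 384×384 input split into 16×16 patches gives a 24×24 grid. The sketch below shows that calculation and a naive patch extraction for a single-channel slice. It is an illustration only: a real encoder such as SigLIP2 would then apply a learned linear embedding and positional information to each flattened patch, and AnyRes-style tiling is not modeled; the function names are hypothetical.

```python
def num_patches(height: int, width: int, patch: int) -> int:
    """Number of non-overlapping patch tokens a ViT-style encoder produces."""
    if height % patch or width % patch:
        raise ValueError("image size must be a multiple of the patch size")
    return (height // patch) * (width // patch)


def patchify(image, patch: int):
    """Split a 2-D image (list of pixel rows) into row-major, flattened patches."""
    height, width = len(image), len(image[0])
    num_patches(height, width, patch)  # validate divisibility
    return [
        [image[r][c] for r in range(top, top + patch) for c in range(left, left + patch)]
        for top in range(0, height, patch)
        for left in range(0, width, patch)
    ]


print(num_patches(384, 384, 16))  # 576 = (384 // 16) ** 2
toy = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 toy "slice"
print(patchify(toy, 2)[0])  # [0, 1, 4, 5]
```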

Connector Modules

Connector modules function as the critical architectural bridge between visual and textual modalities, translating the high-dimensional output from vision encoders into a format comprehensible to language models. These components address the fundamental challenge of modality alignment, ensuring that visual features can effectively inform linguistic reasoning processes [9]. Common connector implementations include lightweight Multi-Layer Perceptrons (MLPs), cross-attention mechanisms, and more sophisticated query-based transformers like Q-Former, which uses learnable query embeddings to extract the most semantically relevant visual features for text generation [9].

The Q-Former architecture, as employed in models like BLIP-2, represents a particularly advanced connector approach, consisting of two transformer submodules: an image transformer for visual feature extraction and a text transformer serving as both encoder and decoder. This architecture employs self-attention layers that allow learnable queries to interact with each other and cross-attention layers that enable interaction with frozen image features, effectively creating a trainable bottleneck that distills the most text-relevant visual information [9]. With approximately 188 million parameters, Q-Former provides a balanced mechanism for modality fusion without requiring full retraining of the vision or language components, making it particularly suitable for medical applications where computational resources may be constrained [9].
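The bottleneck behaviour of a query-based connector can be illustrated with a single cross-attention step. The sketch below is deliberately simplified and is not the Q-Former implementation: it uses one head, no learned key/value/output projections, no self-attention among the queries, and no text transformer. It shows the key property that the number of output tokens equals the number of queries, regardless of how many image patches the encoder produces; the 32 queries and 8-dimensional toy features are assumptions.

```python
import math
import random


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def query_cross_attention(queries, image_features):
    """Each query takes a softmax-weighted average of the (frozen) image features."""
    dim = len(queries[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, f)) / math.sqrt(dim) for f in image_features]
        weights = softmax(scores)
        outputs.append([sum(w * f[i] for w, f in zip(weights, image_features)) for i in range(dim)])
    return outputs


rng = random.Random(0)
queries = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(32)]   # learnable in practice
patches = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(576)]  # e.g. a 24 x 24 patch grid
print(len(query_cross_attention(queries, patches)))  # 32, regardless of the patch count
```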

Large Language Models

Large Language Models form the reasoning core of multimodal architectures, processing the fused visual-textual representations to generate coherent, contextually appropriate responses. In medical MLLMs, LLMs like PubMedBERT, Qwen, and other transformer-based models provide the linguistic understanding and clinical reasoning capabilities necessary for tasks such as generating radiology reports, answering diagnostic questions, or classifying MRI sequences [11] [8]. These models, often pre-trained on extensive biomedical corpora, bring domain-specific knowledge that enhances their ability to handle specialized medical terminology and clinical concepts.

In unified architectures like SOLO, a single transformer model processes both visual patches and text tokens, eliminating the need for separate encoders and complex fusion mechanisms. This approach simplifies the overall architecture while maintaining competitive performance on medical vision-language tasks [12]. However, most current medical MLLMs maintain a heterogeneous architecture where the LLM component remains primarily frozen or lightly fine-tuned to preserve its linguistic capabilities while adapting to visual inputs through the connector module. This design allows researchers to leverage powerful pre-trained LLMs without the prohibitive computational cost of end-to-end training, making advanced multimodal AI more accessible for clinical research applications [9].

Quantitative Performance Analysis

Table 1: Performance Comparison of Multimodal LLMs on Brain MRI Classification Tasks

| Model | Modality Identification Accuracy | Anatomical Region Accuracy | Imaging Plane Classification | Contrast-Enhancement Status | MRI Sequence Classification |
|---|---|---|---|---|---|
| ChatGPT-4o | 100% | 100% | 100% | 98.46% | 97.69% |
| Gemini 2.5 Pro | 100% | 100% | 100% | 98.46% | 93.08% |
| Claude 4 Opus | 100% | 100% | 99.23% | 95.38% | 73.08% |

Recent comprehensive evaluations of multimodal LLMs on brain MRI analysis reveal significant performance variations across models and tasks. As shown in Table 1, all major proprietary models achieve perfect or near-perfect accuracy in basic recognition tasks including modality identification and anatomical region recognition. However, performance diverges markedly in more complex tasks such as MRI sequence classification, where ChatGPT-4o leads at 97.69% accuracy, followed by Gemini 2.5 Pro at 93.08%, with Claude 4 Opus trailing significantly at 73.08% [2]. This gradient shows that fine-grained sequence recognition remains uneven across general-purpose models even when basic image recognition is saturated, which motivates targeted optimization for fine-grained medical image interpretation.

Error analysis reveals consistent patterns in model limitations, with fluid-attenuated inversion recovery (FLAIR) sequences frequently misclassified as T1-weighted or diffusion-weighted sequences across all models. Claude 4 Opus demonstrates particular difficulties with susceptibility-weighted imaging (SWI) and apparent diffusion coefficient (ADC) sequences, suggesting specific weaknesses in its visual processing capabilities for these sequence types [2]. Additionally, Gemini 2.5 Pro exhibits occasional hallucinations, generating clinically irrelevant details such as "hypoglycemia" and "Susac syndrome" without prompt justification, highlighting ongoing challenges in maintaining clinical relevance and avoiding confabulation in diagnostic contexts [2].

Table 2: Domain-Specific vs. General MLLMs on Medical Benchmarks

| Model Category | Example Models | Strengths | Limitations |
|---|---|---|---|
| Medical-Specialized MLLMs | Glio-LLaMA-Vision, BiomedCLIP | Domain-specific pre-training, better clinical alignment | Narrower scope, limited general knowledge |
| General-Purpose MLLMs | GPT-4o, Gemini 2.5 Pro | Broad knowledge base, strong reasoning | Higher hallucination rates in specialized domains |
| Open-Source MLLMs | VARCO-VISION-2.0, SOLO | Customizability, transparency | Lower overall performance on complex clinical tasks |

Beyond sequence classification, specialized medical MLLMs demonstrate promising results on disease-specific diagnostic tasks. For instance, fine-tuned biomedical foundation models achieve high accuracy in headache disorder classification from structural MRI data, with models reaching 89.96% accuracy for migraine versus healthy controls, 88.13% for acute post-traumatic headache (APTH), and 83.13% for persistent post-traumatic headache (PPTH) [8]. Similarly, specialized models like Glio-LLaMA-Vision show robust performance in molecular prediction, radiology report generation, and visual question answering for adult-type diffuse gliomas, providing a practical paradigm for adapting general-domain LLMs to specific medical applications [13]. These results collectively indicate that while general-purpose MLLMs offer strong baseline performance, domain-specific adaptation remains essential for clinically reliable applications.

Experimental Protocols for MRI Sequence Classification

Dataset Preparation and Curation

The protocol for assembling a comprehensive brain MRI dataset begins with collecting images from diverse sources, including clinical PACS systems and public repositories such as the IXI dataset. A representative study used 130 brain MRI images from adult patients without pathological findings, covering 13 standard MRI series with 10 images per series [2]. Critical series should include axial T1-weighted (T1w), axial T2-weighted (T2w), axial fluid-attenuated inversion recovery (FLAIR), coronal FLAIR, sagittal FLAIR, coronal T2w, sagittal T1w, axial susceptibility-weighted imaging (SWI), axial diffusion-weighted imaging (DWI), axial apparent diffusion coefficient (ADC), and contrast-enhanced variants of T1w across multiple planes [2].

Each image must undergo rigorous preprocessing: export in high-quality JPEG format with a minimum resolution of 994×1382 pixels, without additional compression, cropping, or visual post-processing. All annotations, arrows, and textual markings should be removed so that the model cannot rely on overlaid labels instead of image content, while the original resolution and anatomical proportions are preserved [2]. For consistency, a single representative slice should be selected for each MRI series at an anatomical level where critical structures such as the lateral ventricles are clearly visible, so that each image reflects the typical visual characteristics of its sequence [2].
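The resolution criterion can be checked automatically before upload. The sketch below reads width and height from the JPEG start-of-frame header using only the standard library (Pillow's `Image.open(path).size` would serve the same purpose). It assumes that "994×1382" means a short side of at least 994 and a long side of at least 1382 pixels in either orientation, and it does not verify JPEG quality or the absence of annotations; the function names are hypothetical.

```python
import struct

# Start-of-frame markers (baseline, progressive, etc.) that carry image dimensions.
SOF_MARKERS = {0xC0, 0xC1, 0xC2, 0xC3, 0xC5, 0xC6, 0xC7, 0xC9, 0xCA, 0xCB, 0xCD, 0xCE, 0xCF}
STANDALONE = {0x01, *range(0xD0, 0xD8)}  # markers that have no length field


def jpeg_size(data: bytes):
    """Return (width, height) by scanning JPEG segments up to the SOF header."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG file (missing SOI marker)")
    i = 2
    while i + 4 <= len(data):
        if data[i] != 0xFF:
            raise ValueError("corrupt JPEG marker stream")
        marker = data[i + 1]
        if marker == 0xFF:  # padding byte before a marker
            i += 1
            continue
        if marker in STANDALONE:
            i += 2
            continue
        if marker in (0xD9, 0xDA):  # end of image or start of scan, no frame header seen
            break
        (length,) = struct.unpack(">H", data[i + 2:i + 4])
        if marker in SOF_MARKERS:
            # Segment layout: length (2), precision (1), height (2), width (2), ...
            height, width = struct.unpack(">HH", data[i + 5:i + 9])
            return width, height
        i += 2 + length  # the length field counts itself but not the marker
    raise ValueError("no start-of-frame segment found")


def meets_min_resolution(width, height, short_side=994, long_side=1382):
    """Orientation-agnostic check against the 994 x 1382 minimum (assumed reading)."""
    return min(width, height) >= short_side and max(width, height) >= long_side
```

For a file on disk, `jpeg_size(open(path, "rb").read())` returns the dimensions to pass to `meets_min_resolution`.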

Model Training and Fine-tuning Procedures

Effective training protocols for medical MLLMs typically employ multi-stage curricula that progressively build multimodal capabilities. The VARCO-VISION-2.0 training pipeline exemplifies this approach with four distinct stages [11]. Stage 1 involves feature alignment pre-training, where only the connector module (typically an MLP) is trained to project visual features into the language model's embedding space, while both vision encoder and LLM remain frozen. This stage uses filtered image-caption pairs to learn robust input-output alignment without explicit text prompts [11].

Stage 2 advances to basic supervised fine-tuning with all model components trained jointly in single-image settings at relatively low resolutions to reduce computational overhead. This stage focuses on building broad world knowledge and visual-textual understanding through curated captioning datasets covering real-world images, charts, and tables, often with in-house recaptioning to enhance accuracy and consistency [11]. Stage 3 implements advanced supervised fine-tuning with higher-resolution image processing and support for multi-image scenarios. This critical phase expands the dataset to include specialized tasks like document-based question answering with strategies to minimize hallucination, such as creating QA pairs from document text before generating corresponding synthetic images with different templates [11].
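The stage-wise freezing described above can be written down as a small, explicit schedule, which makes it easy to audit which components change at each step. The encoding below is hypothetical: the component names are assumptions, and because the text does not state which components Stage 3 updates, full training is assumed there for illustration only.

```python
from dataclasses import dataclass

COMPONENTS = ("vision_encoder", "connector", "llm")


@dataclass(frozen=True)
class Stage:
    name: str
    trainable: frozenset
    data_note: str


# Hypothetical encoding of Stages 1-3 as described in the text.
SCHEDULE = (
    Stage("feature_alignment", frozenset({"connector"}), "filtered image-caption pairs"),
    Stage("basic_sft", frozenset(COMPONENTS), "single image, low resolution"),
    # Assumption: the text does not say which components Stage 3 updates.
    Stage("advanced_sft", frozenset(COMPONENTS), "higher resolution, multi-image"),
)


def freeze_plan(stage: Stage) -> dict:
    """Map each component to True (weights updated) or False (kept frozen)."""
    unknown = stage.trainable - set(COMPONENTS)
    if unknown:
        raise ValueError(f"unknown components: {sorted(unknown)}")
    return {component: component in stage.trainable for component in COMPONENTS}


for stage in SCHEDULE:
    print(stage.name, freeze_plan(stage))
```

In a PyTorch training script, each flag would typically be applied with `module.requires_grad_(flag)` on the corresponding submodule before the optimizer is built.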

Evaluation Metrics and Validation Methods

Comprehensive evaluation protocols for MRI sequence classification employ multiple accuracy metrics across progressively challenging tasks. The primary evaluation should include five distinct classification tasks: imaging modality identification, anatomical region recognition, imaging plane classification, contrast-enhancement status determination, and specific MRI sequence classification [2]. Formal statistical comparisons using Cochran's Q test and pairwise McNemar tests with Bonferroni correction are essential for determining significant performance differences between models, particularly for the primary outcome of sequence classification accuracy [2].

Beyond basic accuracy calculations, robust evaluation should include macro-averaged F1 scores and Cohen's kappa coefficients to assess inter-class performance consistency and agreement with ground truth. For contrast-enhancement classification, binary classification metrics including sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV) with corresponding 95% confidence intervals provide a more nuanced performance picture [2]. To ensure evaluation stability, bootstrap resampling (1000 iterations) should be applied with 95% confidence intervals reported for each MRI sequence and model. Additionally, systematic analysis of misclassifications through confusion matrices and error heatmaps reveals consistent patterns of model confusion between specific sequence types [2].
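The metrics in the paragraph above have compact definitions. The sketch below implements macro-averaged F1, unweighted Cohen's kappa between predicted and ground-truth labels, sensitivity/specificity/PPV/NPV for the binary contrast-enhancement task, and a bootstrap interval over images with 1000 resamples. Function names are hypothetical, and the percentile bootstrap is an assumption since the interval method is not stated; in practice scikit-learn's `f1_score(average="macro")` and `cohen_kappa_score` provide tested equivalents for the first two.

```python
import random
from collections import Counter


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over all labels seen in either list."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        scores.append(2 * tp / (2 * tp + fp + fn))  # denominator > 0 for seen labels
    return sum(scores) / len(scores)


def cohens_kappa(y_true, y_pred):
    """Unweighted Cohen's kappa: observed agreement corrected for chance."""
    n = len(y_true)
    observed = sum(t == p for t, p in zip(y_true, y_pred)) / n
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    expected = sum(true_counts[c] * pred_counts[c] for c in true_counts) / n ** 2
    return 1.0 if expected == 1 else (observed - expected) / (1 - expected)


def binary_metrics(y_true, y_pred):
    """Sensitivity, specificity, PPV and NPV with True as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t and p for t, p in pairs)
    tn = sum(not t and not p for t, p in pairs)
    fp = sum(not t and p for t, p in pairs)
    fn = sum(t and not p for t, p in pairs)

    def ratio(num, den):
        return num / den if den else float("nan")

    return {"sensitivity": ratio(tp, tp + fn), "specificity": ratio(tn, tn + fp),
            "ppv": ratio(tp, tp + fp), "npv": ratio(tn, tn + fn)}


def bootstrap_ci(values, n_boot=1000, alpha=0.05, seed=0):
    """Mean of per-image 0/1 outcomes with a percentile-bootstrap interval."""
    rng = random.Random(seed)  # fixed seed keeps the interval reproducible
    n = len(values)
    # Resample images with replacement and recompute accuracy each time.
    stats = sorted(sum(rng.choice(values) for _ in range(n)) / n for _ in range(n_boot))
    lower = stats[int(alpha / 2 * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return sum(values) / n, lower, upper


print(bootstrap_ci([1] * 127 + [0] * 3))  # accuracy 127/130 with its 95% interval
```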

Architectural Workflows and Signaling Pathways

Diagram 1: End-to-End MRI Sequence Classification Workflow. This architecture illustrates the complete pipeline from medical image input to clinical report generation, highlighting the three core components and their interactions.

The architectural workflow for brain MRI sequence classification follows a structured pipeline that transforms raw image data into clinically actionable information. As shown in Diagram 1, the process begins with input images being partitioned into standardized patches, typically 512×512 pixels, which are processed through a vision encoder such as SigLIP2 or BioMedCLIP [11] [8]. These specialized encoders extract hierarchical visual features using transformer architectures pre-trained on biomedical datasets, enabling robust pattern recognition for medical imaging characteristics. The resulting high-dimensional visual feature vectors then pass to the connector module, which performs critical modality alignment functions.

The connector module, implemented as Q-Former or multi-layer perceptron, acts as a feature bottleneck that distills the most semantically relevant visual information for language processing [9]. Through cross-attention mechanisms, the connector creates fused representations in a joint embedding space where visual and textual concepts become aligned. These unified representations are then processed by the large language model component, which leverages its pre-trained linguistic capabilities and biomedical knowledge to perform the final sequence classification. The LLM generates specific sequence identifications (T1w, T2w, FLAIR, etc.) along with confidence assessments, ultimately producing a comprehensive text report that integrates visual findings with clinical context [2] [13].
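Because the LLM returns its sequence identification as free text, evaluation pipelines usually need a small parsing step before scoring. For the numbered five-question prompt used in the zero-shot protocol earlier in this article, a hypothetical parser might look like the sketch below. The field names and the accepted numbering styles (`1.`, `2)`, `3:`) are assumptions, and unanswered questions are kept as `None` so that they are scored explicitly rather than silently dropped.

```python
import re

# Hypothetical field names for the five questions in the zero-shot prompt.
FIELDS = ("modality", "region", "plane", "contrast", "sequence")

# Matches "1. MRI", "2) Brain" or "3: Axial" at the start of a line.
ITEM = re.compile(r"^\s*(\d+)\s*[.):]\s*(.+?)\s*$", re.MULTILINE)


def parse_numbered_answers(response: str) -> dict:
    """Map a numbered free-text reply onto the five prompt questions."""
    cleaned = response.replace("**", "")  # chat interfaces often bold the numbers
    answers = {}
    for number, text in ITEM.findall(cleaned):
        index = int(number) - 1
        # Keep the first answer per question; ignore numbers outside 1-5.
        if 0 <= index < len(FIELDS) and FIELDS[index] not in answers:
            answers[FIELDS[index]] = text
    for field in FIELDS:
        answers.setdefault(field, None)  # unanswered questions stay explicit
    return answers


reply = "**1.** MRI\n2) Brain\n3: Axial\n4. Not contrast-enhanced\n5. FLAIR"
print(parse_numbered_answers(reply)["sequence"])  # FLAIR
```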

Research Reagent Solutions

Table 3: Essential Research Tools for Multimodal MRI Research

| Research Tool | Function | Example Implementations |
|---|---|---|
| Vision Encoders | Extracts visual features from medical images | SigLIP2 (patch-16), BioMedCLIP, Vision Transformers (ViT) |
| Connector Modules | Bridges visual and linguistic modalities | Q-Former, MLP Adapters, Cross-Attention Layers |
| Large Language Models | Processes fused representations for reasoning | PubMedBERT, Qwen, LLaMA, GPT series |
| Training Frameworks | Provides infrastructure for model development | Hugging Face Transformers, vLLM, PyTorch |
| Medical Benchmarks | Evaluates model performance on clinical tasks | OmniBrainBench, Brain Tumor VQA, VQA-RAD |
| Data Augmentation Tools | Enhances dataset diversity and size | AnyRes strategy, synthetic data generation |

The development of effective multimodal architectures for brain MRI classification requires a specialized toolkit of research reagents. As detailed in Table 3, these essential components include vision encoders specifically pre-trained on biomedical imagery, such as BioMedCLIP, which provides significant advantages over general-purpose encoders by leveraging contrastive language-image pretraining on 15 million biomedical image-text pairs [8]. Connector modules like Q-Former with approximately 188 million parameters serve as critical bridges between visual and linguistic modalities, using learnable query embeddings to extract the most text-relevant visual information while keeping computational requirements manageable [9].

Specialized training frameworks including Hugging Face Transformers and vLLM provide essential infrastructure for developing and deploying medical MLLMs, ensuring compatibility with established ecosystems while enabling production-scale inference [11]. Comprehensive evaluation benchmarks like OmniBrainBench—covering 15 imaging modalities, 9,527 clinically verified VQA pairs, and 31,706 images—offer rigorous testing environments that simulate real clinical workflows across anatomical identification, disease diagnosis, lesion localization, prognostic assessment, and therapeutic management [10]. Additionally, advanced data augmentation strategies such as the AnyRes technique, which handles variable image resolutions through tiled views with resolution-aware aggregation, help address the data scarcity challenges common in medical imaging research [11].

The Critical Role of Accurate MRI Sequence Classification in Clinical and Research Workflows

Accurate Magnetic Resonance Imaging (MRI) sequence classification is a foundational prerequisite for both advanced clinical workflows and large-scale research. Different MRI sequences, such as T1-weighted (T1w), T2-weighted (T2w), and Fluid-Attenuated Inversion Recovery (FLAIR), provide unique and complementary tissue contrasts essential for diagnosis and quantitative analysis [14]. The absence of standardized naming conventions in DICOM headers, coupled with confounding annotations and institutional protocol variations, frequently renders metadata unreliable [14] [15]. This necessitates labor-intensive manual correction, creating a significant bottleneck. Artificial Intelligence (AI) methods, particularly deep learning and multimodal Large Language Models (LLMs), offer a route to precise, automated classification that can improve diagnostic reliability and accelerate research pipelines.
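To make the metadata problem concrete, the sketch below is a deliberately small, hypothetical rule-based classifier for the DICOM Series Description field (0008,103E), the kind of string-matching baseline that learned classifiers are often compared against. The patterns and example descriptions are illustrative assumptions: real protocols need site-specific rules, the rules ignore contrast status, and any description without sequence keywords falls through to `"unknown"`.

```python
import re

# Hypothetical rules, checked in order; more specific sequences come first
# because descriptions such as "ep2d_diff_ADC" match several patterns.
RULES = (
    ("FLAIR", re.compile(r"flair|dark[\s_-]?fluid", re.IGNORECASE)),
    ("SWI", re.compile(r"(?<![a-z])swi(?![a-z])|swan|susc", re.IGNORECASE)),
    ("ADC", re.compile(r"(?<![a-z])adc(?![a-z])|apparent", re.IGNORECASE)),
    ("DWI", re.compile(r"(?<![a-z])dwi(?![a-z])|diff|trace", re.IGNORECASE)),
    ("T1w", re.compile(r"t1|mprage|spgr|bravo", re.IGNORECASE)),
    ("T2w", re.compile(r"t2", re.IGNORECASE)),
)


def classify_series_description(description: str) -> str:
    """Return the first matching sequence label, or 'unknown'."""
    for label, pattern in RULES:
        if pattern.search(description):
            return label
    return "unknown"


for text in ("AX T2 FLAIR", "ep2d_diff_ADC", "SAG MPRAGE", "AX SWI", "3D SEQ 5"):
    print(f"{text!r:>18} -> {classify_series_description(text)}")
```

The last example shows the core weakness: once a site uses an uninformative description, no amount of rule tuning recovers the sequence, which is why image-based classifiers are attractive.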

The Clinical and Research Imperative for Accurate Classification

In clinical practice, erroneous sequence identification can directly affect patient care. The hanging protocol, which automatically arranges images for radiologist review, depends entirely on correct sequence labels [15]. Misclassification can also contribute to misdiagnosis, for instance when a pathology-sensitive sequence such as FLAIR is confused with another sequence [2]. In research, especially in large multicenter studies, consistent sequence grouping is critical for creating labeled datasets to train robust deep learning models [3] [4]. Inconsistent data confounds analysis and undermines the validity of findings.

A significant challenge is domain shift, where a model trained on data from one source (e.g., adult populations, specific scanner brands) experiences a performance drop when applied to another (e.g., pediatric data, different institutions) [4]. One study demonstrated that a model achieving high accuracy on adult MRI data saw reduced performance when tested on pediatric data, a deficit mitigated by using advanced hybrid architectures and expert domain knowledge to adjust for protocol differences [4].

Quantitative Performance of Modern Classification Approaches

Modern approaches for MRI sequence classification primarily involve Convolutional Neural Networks (CNNs) and Multimodal Large Language Models (MLLMs). The table below summarizes the performance of various state-of-the-art methods as reported in recent literature.

Table 1: Performance of Automated MRI Sequence Classification Models

| Model / Approach | Reported Performance | Key Strengths | Test Context |
|---|---|---|---|
| MRISeqClassifier (Deep Learning Toolkit) [14] | Accuracy: 99% | Highly efficient with small, unrefined datasets; uses lightweight models and ensemble voting. | Brain MRI |
| ChatGPT-4o (Multimodal LLM) [2] | Accuracy: 97.7% | High accuracy in sequence, plane, and contrast-status classification. | Brain MRI |
| Gemini 2.5 Pro (Multimodal LLM) [2] | Accuracy: 93.1% | Strong performance, but produced occasional clinical hallucinations. | Brain MRI |
| Claude 4 Opus (Multimodal LLM) [2] | Accuracy: 73.1% | Lower performance; struggled with SWI and ADC sequences. | Brain MRI |
| MedViT (CNN-Transformer Hybrid) [4] | Accuracy: 89.3% to 90.5% | Superior robustness against domain shift (e.g., adult to pediatric data). | Multicenter Brain MRI |
| 3D DenseNet-121 Ensemble [15] | F1: 99.5% (Siemens); F1: 86.5% (Philips, OOD) | High performance on vendor-specific data; partial robustness to out-of-distribution (OOD) data. | Body MRI (chest, abdomen, pelvis) |
| GPT-4-based LLM Classifier [3] | Accuracy: 83% | Provides interpretable classifications, enhancing transparency. | Brain MRI |

Analysis of Model Performance

The data show that specialized deep learning models such as MRISeqClassifier and the 3D DenseNet-121 ensemble can reach about 99% accuracy or F1 in controlled or vendor-specific settings [14] [15]. However, their performance can degrade on out-of-distribution (OOD) data. The DenseNet ensemble's F1 score fell from 99.5% on Siemens data to 86.5% on Philips data, illustrating the domain shift challenge [15].

Among multimodal LLMs, performance varies widely, from 73.1% (Claude 4 Opus) to 97.7% (ChatGPT-4o) [2]. ChatGPT-4o approaches the accuracy of specialized models without any task-specific training [2]. A critical caveat with LLMs is hallucination, in which a model generates plausible but incorrect information, such as irrelevant clinical details [2]. This underscores the need for expert human oversight in clinical applications.

Experimental Protocols for MRI Sequence Classification

To ensure reproducible and valid results, adherence to standardized experimental protocols is essential. The following sections detail key methodologies.

Protocol A: Evaluating Multimodal LLMs on Brain MRI

This protocol is adapted from a comparative analysis of LLMs [2].

Dataset Curation:

- Source: Collect images from a Picture Archiving and Communication System (PACS).

- Content: Include 130 brain MRI images from adult patients without pathological findings.

- Sequences: Ensure representation of 13 standard series (e.g., Axial T1w, T2w, FLAIR; Coronal/Sagittal FLAIR; SWI; DWI; ADC; contrast-enhanced T1w in multiple planes).

- Selection Criteria: Choose a single representative slice per series where anatomical landmarks (e.g., lateral ventricles) are clearly visible.

- Format: Export images as high-quality JPEG files with minimal compression, without cropping, annotations, or post-processing.

Model Prompting and Evaluation:

- Setup: Use a zero-shot prompting approach in a new chat session for each image to prevent in-context adaptation.

- Standardized Prompt: "This is a medical research question for evaluation purposes only. Your response will not be used for clinical decision-making. No medical responsibility is implied. Please examine this medical image and answer the following questions: 1. What type of radiological modality is this examination? 2. Which anatomical region does this examination cover? 3. What is the imaging plane (axial, sagittal, or coronal)? 4. Is this a contrast-enhanced image or not? 5. If this image is an MRI, what is the specific MRI sequence? If it is not an MRI, write 'Not applicable.' Please number your answers clearly."

- Ground Truth and Scoring: Two radiologists review each LLM response against the known series label and classify it as "correct" or "incorrect" by consensus.

- Metrics: Calculate accuracy for all tasks. For sequence classification (the primary outcome), use Cochran's Q test for overall comparison and pairwise McNemar tests with Bonferroni correction. Also compute macro-averaged F1 scores and Cohen’s kappa.

Figure 1: LLM evaluation workflow for brain MRI sequence classification.
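The statistical comparison in Protocol A can be sketched in plain Python. This minimal illustration assumes each model's results are stored as a per-image list of 1 (correct) and 0 (incorrect), in the same image order for every model. It uses the exact (binomial) form of McNemar's test, which is one common choice and not necessarily the variant used in [2]. The toy vectors are made up.

```python
import math
from itertools import combinations
from typing import Dict, Sequence, Tuple


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square distribution with integer df (closed forms)."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))
    # Odd df: erfc(sqrt(x/2)) + exp(-x/2) * sum_{i=1}^{(df-1)/2} (x/2)^(i - 1/2) / Gamma(i + 1/2)
    tail = sum(half ** (i - 0.5) / math.gamma(i + 0.5) for i in range(1, (df - 1) // 2 + 1))
    return math.erfc(math.sqrt(half)) + math.exp(-half) * tail


def cochrans_q(correct: Dict[str, Sequence[int]]) -> Tuple[float, float]:
    """Cochran's Q on per-image 0/1 correctness for k models; returns (Q, p-value)."""
    models = list(correct)
    k, n = len(models), len(correct[models[0]])
    col_totals = [sum(correct[m]) for m in models]
    row_totals = [sum(correct[m][i] for m in models) for i in range(n)]
    total = sum(col_totals)
    denominator = k * total - sum(r * r for r in row_totals)
    if denominator == 0:  # every image was answered identically by all models
        return 0.0, 1.0
    q = (k - 1) * (k * sum(c * c for c in col_totals) - total ** 2) / denominator
    return q, chi2_sf(q, k - 1)


def mcnemar_exact(a: Sequence[int], b: Sequence[int]) -> float:
    """Two-sided exact McNemar test using only the discordant image pairs."""
    only_a = sum(1 for x, y in zip(a, b) if x == 1 and y == 0)
    only_b = sum(1 for x, y in zip(a, b) if x == 0 and y == 1)
    n = only_a + only_b
    if n == 0:
        return 1.0
    tail = sum(math.comb(n, i) for i in range(min(only_a, only_b) + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def pairwise_mcnemar_bonferroni(correct: Dict[str, Sequence[int]]) -> Dict[Tuple[str, str], float]:
    """Pairwise exact McNemar p-values multiplied by the number of pairs (capped at 1)."""
    pairs = list(combinations(correct, 2))
    return {(m1, m2): min(1.0, mcnemar_exact(correct[m1], correct[m2]) * len(pairs))
            for m1, m2 in pairs}


if __name__ == "__main__":
    # Made-up correctness vectors for three models on six images (1 = correct).
    toy = {
        "model_a": [1, 1, 1, 1, 1, 1],
        "model_b": [1, 1, 1, 1, 1, 0],
        "model_c": [1, 0, 0, 1, 0, 0],
    }
    q, p = cochrans_q(toy)
    print(f"Cochran's Q = {q:.2f}, p = {p:.4f}")  # Q = 6.50 for this toy data
    for pair, p_adj in pairwise_mcnemar_bonferroni(toy).items():
        print(pair, round(p_adj, 3))
```

Libraries such as statsmodels also provide these tests. The stdlib version is shown only to make each computation visible.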

Protocol B: Training a Deep Learning Model for Multicenter Data

This protocol addresses the challenge of domain shift, as explored in recent studies [4].

Data Preparation and Preprocessing:

- Training Data: Utilize a large, retrospective multicenter brain MRI dataset (e.g., 63,327 sequences from 2179 patients across 249 hospitals).

- Classes: Define sequence classes for training (e.g., T1, T2, CT1, FLAIR, ADC, SWI, DWI variants, T2*/DSC).

- Test Data: Use a separate dataset introducing domain shift (e.g., a pediatric CNS tumor MRI dataset from 51 centers).

- Preprocessing: Resize images (e.g., to 200 × 200 pixels). Apply data augmentation (e.g., Gaussian noise). Normalize intensity. When a 3D volume is fed to an architecture that expects three-channel (RGB) 2D input, copy the volume's midslice into all three channels.
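The preprocessing steps above can be sketched on slices stored as nested lists. Several choices here are illustrative assumptions rather than the setup of [4]:

- nearest-neighbour resizing;
- per-slice z-score normalization;
- additive Gaussian noise with a hypothetical `sigma`;
- normalizing before adding noise, so that `sigma` is expressed in normalized units.

A real pipeline would typically use NumPy or MONAI transforms instead.

```python
import random
from statistics import mean, pstdev
from typing import List

Image = List[List[float]]


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resize of a 2D slice (e.g. to 200 x 200)."""
    in_h, in_w = len(img), len(img[0])
    return [[img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def zscore(img: Image) -> Image:
    """Normalize intensities to zero mean and unit variance over the slice."""
    values = [v for row in img for v in row]
    mu, sd = mean(values), pstdev(values)
    sd = sd if sd > 0 else 1.0  # a constant slice just becomes all zeros
    return [[(v - mu) / sd for v in row] for row in img]


def add_gaussian_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Augmentation: add zero-mean Gaussian noise with standard deviation sigma."""
    return [[v + rng.gauss(0.0, sigma) for v in row] for row in img]


def midslice_to_rgb(volume: List[Image]) -> List[Image]:
    """Take the middle slice of a 3D volume and copy it into three channels."""
    mid = volume[len(volume) // 2]
    return [[row[:] for row in mid] for _ in range(3)]


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy 5-slice volume of 4 x 4 slices standing in for a real MRI series.
    volume = [[[float(z + r + c) for c in range(4)] for r in range(4)] for z in range(5)]
    channels = [add_gaussian_noise(zscore(resize_nearest(ch, 8, 8)), 0.05, rng)
                for ch in midslice_to_rgb(volume)]
    print(len(channels), len(channels[0]), len(channels[0][0]))  # 3 8 8
```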

Model Training and Expert Adjustment:

- Architecture Selection: Compare a benchmark CNN (e.g., ResNet-18) against a hybrid CNN-Transformer model (e.g., MedViT).

- Training: Use stratified sampling for train/validation splits. Train with Adam optimizer, cross-entropy loss, and class weights to handle imbalance.

- Expert Domain Knowledge Integration: Analyze the target test dataset. If it contains fewer sequence classes than the training data, adjust the model's final classification layer or decision-making process to ignore unused classes, aligning the task with the new domain.
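Two of the steps above can be sketched as follows: inverse-frequency class weights for the cross-entropy loss, and restricting predictions to the classes present in the target domain. The weighting formula `n_samples / (n_classes * count)` is the "balanced" heuristic also used by scikit-learn. Masking unused classes at prediction time is one possible form of the adjustment. Both are assumptions; [4] may have implemented them differently.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence, Set


def balanced_class_weights(labels: Sequence[str]) -> Dict[str, float]:
    """Weight each class by n_samples / (n_classes * count) so rare classes count more."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}


def weighted_cross_entropy(logits: Sequence[float], target: int, weight: float) -> float:
    """Cross-entropy for one sample scaled by its class weight (log-sum-exp for stability)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return weight * (log_z - logits[target])


def predict_restricted(logits: Sequence[float], classes: List[str], allowed: Set[str]) -> str:
    """Argmax over only the classes that exist in the target dataset; others are ignored."""
    candidates = [(v, c) for v, c in zip(logits, classes) if c in allowed]
    return max(candidates, key=lambda pair: pair[0])[1]


if __name__ == "__main__":
    print(balanced_class_weights(["T1", "T1", "T1", "FLAIR"]))
    # SWI has the highest score but is absent from the target domain, so T1 is returned.
    print(predict_restricted([2.0, 5.0, 1.0], ["T1", "SWI", "FLAIR"], {"T1", "FLAIR"}))
```

Masking at prediction time leaves the trained weights untouched. Removing the unused output units and re-normalizing would be an alternative way to align the classifier with the new domain.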

Table 2: Key Research Reagents and Computational Tools

| Item / Resource | Function / Description | Application Example |
|---|---|---|
| MRISeqClassifier [14] | A deep learning toolkit tailored for small, unrefined MRI datasets. | Precise sequence classification with high data efficiency. |
| MedViT [4] | A hybrid CNN-Transformer architecture for medical image classification. | Handling domain shift in multicenter studies. |
| ResNet-18/50/101 [4] [15] | Convolutional Neural Networks for image feature extraction and classification. | Benchmark models for sequence classification tasks. |
| 3D DenseNet-121 [15] | A 3D convolutional network ensemble for volumetric data. | Body MRI sequence classification. |
| Multimodal LLMs (ChatGPT-4o, etc.) [2] | Pre-trained models capable of joint image-text understanding and zero-shot classification. | Direct image-based classification without task-specific training. |
| PyTorch / MONAI [4] | Open-source frameworks for deep learning in healthcare imaging. | Model development, training, and data augmentation. |

Figure 2: Deep learning training protocol to handle domain shift.

Accurate MRI sequence classification is a critical enabler for modern radiology and computational research. Specialized deep learning models offer high precision, but their vulnerability to domain shift requires strategic mitigation through advanced architectures such as MedViT and the incorporation of expert knowledge. Multimodal LLMs, particularly ChatGPT-4o, offer a flexible alternative with strong zero-shot performance. However, their potential for hallucination necessitates rigorous validation and clinical oversight. The future of this field likely lies in combining the two: pairing the robustness of purpose-built models with the adaptability of LLMs to build more reliable automated workflows that enhance diagnostic confidence and support scientific discovery.

Multimodal Large Language Models (MLLMs) represent a significant evolution in medical artificial intelligence, extending traditional text-based LLMs by integrating and processing diverse data modalities including medical images, clinical notes, and electronic health records [1]. In medical imaging, these models combine large language models with advanced computer vision modules, mapping heterogeneous data into a shared representational space to enable comprehensive understanding of clinical contexts [1]. This technological advancement is particularly transformative for visually intensive disciplines like radiology, where MLLMs demonstrate promising capabilities in tasks ranging from automatic radiology report generation to visual question answering and interactive diagnostic support [1] [2]. The rapid development of MLLMs reflects several converging technological innovations: the evolution of transformer-based LLMs, parallel advances in vision transformers (ViTs) for medical imaging modalities, sophisticated multimodal learning strategies, and the availability of high-performance computing infrastructure [1]. This review comprehensively examines the current state-of-the-art MLLMs in medical imaging, with particular focus on their application to brain MRI sequence classification research, providing structured analysis of quantitative performance, experimental methodologies, and practical implementation frameworks.

Technical Foundations of Medical MLLMs

Architectural Paradigms

MLLM architectures typically comprise four key components: modality-specific encoders, a multimodal connector, a pre-trained LLM backbone, and optional generative modules [1]. The encoders transform high-dimensional inputs (e.g., images) into compact feature representations. Contrastive language-image pre-training (CLIP) encoders are a popular choice for aligning visual data with textual descriptions [1]. The multimodal connector is a learnable interface that bridges the modality gap between non-text data and natural language. Connectors fall into four main types:

- Projection-based connectors employ multi-layer perceptrons (MLPs) to transform visual data into representations alignable with language [1].

- Query-based connectors utilize specialized trainable "query tokens" to extract salient visual details from images [1].

- Fusion-based connectors facilitate feature-level integration through cross-attention mechanisms, establishing direct interactions between visual and language representations [1].

- Expert-driven language transformations convert non-linguistic data directly into text through specialized models, though this approach risks information loss for complex data [1].

The pre-trained LLM serves as the "cognitive engine," maintaining its text-centric reasoning capabilities while processing the aligned multimodal inputs [1].
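The query-based connector idea can be illustrated with single-head cross-attention in plain Python. A small set of query vectors (learnable in a real model, fixed here) attends over visual patch embeddings. As a result, the LLM receives a fixed number of visual tokens regardless of how many patches the image produced. The dimensions and values are toy assumptions. Real connectors such as Q-Former add learned key, value and output projections, multiple heads, and several layers, all of which are omitted here.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x m) and an (m x p) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax_rows(m: Matrix) -> Matrix:
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def query_connector(queries: Matrix, patches: Matrix) -> Matrix:
    """Each query returns a softmax-weighted average of patch features.

    queries: n_queries x d, patches: n_patches x d -> output: n_queries x d.
    Keys and values are the raw patch features (no learned projections in this toy).
    """
    d = len(queries[0])
    patches_t = [list(col) for col in zip(*patches)]  # d x n_patches
    scores = [[s / math.sqrt(d) for s in row] for row in matmul(queries, patches_t)]
    weights = softmax_rows(scores)  # n_queries x n_patches
    return matmul(weights, patches)


if __name__ == "__main__":
    queries = [[1.0, 0.0], [0.0, 1.0]]                       # 2 query tokens
    patches = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]           # 3 patch embeddings
    print(query_connector(queries, patches))                 # always 2 x 2, whatever the patch count
```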

Training Strategies

Medical MLLMs are typically developed through three sequential stages [1]:

- Pre-training: The multimodal connector learns to align visual and textual representations, often using autoregressive captioning on image-text pairs. Selective fine-tuning of the vision encoder enables more precise cross-modal alignment [1].

- Instruction Tuning: The model is fine-tuned using datasets containing diverse natural language instructions and multimodal inputs, teaching it to follow complex clinical directives reliably. This stage often employs parameter-efficient methods like Low-Rank Adaptation (LoRA) [1] [16].

- Alignment Tuning: The model's outputs are optimized to better reflect human clinical preferences, typically through reinforcement learning from human feedback (RLHF), helping reduce hallucination risks and improve response quality [1].
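The parameter saving behind LoRA follows from its low-rank update, W' = W + (alpha / r) · B · A. The frozen weight W is d × k, and only B (d × r) and A (r × k) are trained. The sketch below applies that update in plain Python and counts the trainable parameters. The matrix sizes, rank, and alpha are illustrative assumptions, not values from [1] or [16].

```python
from typing import Dict, List

Matrix = List[List[float]]


def lora_merged_weight(w: Matrix, b: Matrix, a: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * B @ A, where B is d x r and A is r x k."""
    r = len(a)
    scale = alpha / r
    return [[w[i][j] + scale * sum(b[i][t] * a[t][j] for t in range(r))
             for j in range(len(w[0]))] for i in range(len(w))]


def trainable_params(d: int, k: int, r: int) -> Dict[str, float]:
    """Compare full fine-tuning of a d x k matrix with a rank-r LoRA adapter."""
    full = d * k
    lora = r * (d + k)
    return {"full": full, "lora": lora, "fraction": lora / full}


if __name__ == "__main__":
    # A hypothetical 4096 x 4096 projection adapted with rank 8:
    print(trainable_params(4096, 4096, 8))  # 65536 trainable vs 16777216 (about 0.39%)
```

In the original LoRA formulation, B is initialized to zero, so training starts from the unchanged pretrained weights. After training, the product can be merged into W so that inference adds no extra cost.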

Performance Benchmarking in Brain MRI Analysis

Quantitative Comparison of State-of-the-Art MLLMs

Recent comparative studies have evaluated the performance of advanced MLLMs on fundamental brain MRI interpretation tasks, with particular focus on sequence classification accuracy. The table below summarizes key performance metrics from a comprehensive evaluation using 130 brain MRI images across 13 standard sequences [2] [17].

Table 1: Performance Comparison of General-Purpose MLLMs on Brain MRI Classification Tasks

| Model | Modality Identification | Anatomical Region Recognition | Imaging Plane Classification | Contrast-Enhancement Status | MRI Sequence Classification |
|---|---|---|---|---|---|
| ChatGPT-4o | 130/130 (100%) | 130/130 (100%) | 130/130 (100%) | 128/130 (98.46%) | 127/130 (97.69%) |
| Gemini 2.5 Pro | 130/130 (100%) | 130/130 (100%) | 130/130 (100%) | 128/130 (98.46%) | 121/130 (93.08%) |
| Claude 4 Opus | 130/130 (100%) | 130/130 (100%) | 129/130 (99.23%) | 124/130 (95.38%) | 95/130 (73.08%) |

Statistical analysis revealed significant differences in MRI sequence classification accuracy (p < 0.001), with ChatGPT-4o demonstrating superior performance (97.69%) followed closely by Gemini 2.5 Pro (93.08%), while Claude 4 Opus trailed substantially (73.08%) [2]. The most frequent misclassifications involved fluid-attenuated inversion recovery (FLAIR) sequences, often confused with T1-weighted or diffusion-weighted sequences [2]. Claude 4 Opus showed particular difficulties with susceptibility-weighted imaging (SWI) and apparent diffusion coefficient (ADC) sequences [2].

Domain-Specific Medical MLLMs

Beyond general-purpose models, several specialized medical MLLMs have demonstrated advanced capabilities in brain image analysis:

Table 2: Performance of Domain-Specific MLLMs in Medical Imaging Tasks

| Model | Specialization | Key Innovation | Reported Performance |
|---|---|---|---|
| BrainGPT [18] | 3D Brain CT Report Generation | Clinical Visual Instruction Tuning (CVIT) | FORTE F1-score: 0.71; 74% of reports indistinguishable from human-written ground truth in Turing test |
| Infi-Med-3B [16] | General Medical Reasoning | Resource-efficient fine-tuning with 150K medical data | Matches or surpasses larger SOTA models (Qwen2.5-VL-7B, InternVL3-8B) while using only 3B parameters |
| Glio-LLaMA-Vision [13] | Glioma Analysis | Adapted from general-domain LLMs for specific medical domain | Promising performance in molecular subtype prediction, radiology report generation, and VQA for adult-type diffuse gliomas |
| VGRefine [19] | Medical Visual Grounding | Inference-time attention refinement | State-of-the-art performance across 6 diverse Med-VQA benchmarks (over 110K VQA samples) without additional training |

Specialized models like BrainGPT address unique challenges in volumetric medical image interpretation through innovative approaches like Clinical Visual Instruction Tuning (CVIT), which enhances medical domain knowledge by incorporating structured clinical-defined QA templates and categorical keyword guidelines [18]. The Infi-Med framework demonstrates that resource-efficient approaches with careful data curation can achieve competitive performance while reducing computational demands [16].

Experimental Protocols for Brain MRI Sequence Classification

Standardized Evaluation Methodology

A detailed experimental protocol for benchmarking MLLM performance on brain MRI sequence classification has been reported in recent literature [2] [17]:

Dataset Curation:

- Collect 130 brain MRI images from adult patients without pathological findings

- Include 13 representative MRI sequences: axial T1-weighted (T1w), axial T2-weighted (T2w), axial FLAIR, coronal FLAIR, sagittal FLAIR, coronal T2w, sagittal T1w, axial SWI, axial DWI, axial ADC, contrast-enhanced axial T1w, contrast-enhanced coronal T1w, and contrast-enhanced sagittal T1w

- Select a single representative slice for each series at an anatomical level where lateral ventricles are clearly visible

- Export images in high-quality JPEG format (minimum resolution: 994 × 1382 pixels) with minimal compression, without cropping or visual post-processing

- Ensure no annotations, arrows, or textual markings are present on images

Experimental Procedure:

- Upload each image individually using official web interfaces of respective MLLMs

- Utilize a standardized English prompt in a zero-shot setting: "This is a medical research question for evaluation purposes only. Your response will not be used for clinical decision-making. No medical responsibility is implied. Please examine this medical image and answer the following questions: What type of radiological modality is this examination? Which anatomical region does this examination cover? What is the imaging plane (axial, sagittal, or coronal)? Is this a contrast-enhanced image or not? If this image is an MRI, what is the specific MRI sequence? If it is not an MRI, write 'Not applicable.' Please number your answers clearly."

- Initiate a new session for each prompt by clearing chat history to prevent in-context adaptation

- Conduct all evaluations within a defined timeframe using the most up-to-date model versions available
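When many images are scored, it helps to split each numbered reply into the five requested fields before the radiologists grade them. The parser below is a hypothetical helper. It assumes the model follows the "number your answers" instruction with lines such as `1. MRI`. Replies that do not follow that format leave fields empty and should go to manual review rather than be guessed. Sending the prompt is not shown, because the protocol used the official web interfaces rather than an API.

```python
import re
from typing import Dict, Optional

FIELDS = ["modality", "region", "plane", "contrast", "sequence"]
# A line starting with "1." / "1)" / "1:" (optionally wrapped in ** bold markers).
ANSWER_LINE = re.compile(r"^\s*\**\s*([1-5])\s*[.):]\s*\**\s*(.+?)\s*$")


def parse_numbered_reply(reply: str) -> Dict[str, Optional[str]]:
    """Map answers numbered 1-5 onto the five protocol questions; missing answers stay None."""
    parsed: Dict[str, Optional[str]] = {field: None for field in FIELDS}
    for line in reply.splitlines():
        match = ANSWER_LINE.match(line)
        if match:
            field = FIELDS[int(match.group(1)) - 1]
            if parsed[field] is None:  # keep the first answer given for each number
                parsed[field] = match.group(2)
    return parsed


if __name__ == "__main__":
    reply = "1. MRI\n2. Brain\n3. Axial\n4. Not contrast-enhanced\n5. FLAIR"
    print(parse_numbered_reply(reply))
```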

Outcome Measures and Statistical Analysis:

- Primary outcome: MRI sequence classification accuracy

- Secondary outcomes: accuracy in modality identification, anatomical region recognition, imaging plane classification, and contrast-enhancement status determination

- Calculate accuracy as the proportion of correct responses

- Analyze differences in model performance for MRI sequence classification using Cochran's Q test for overall comparison, followed by pairwise McNemar tests with Bonferroni correction where appropriate

- Compute macro-averaged F1 scores and Cohen's kappa coefficients to evaluate inter-class performance consistency and agreement with ground truth

- Perform bootstrap resampling (1000 iterations) to provide stability estimates for sequence-specific accuracy
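The agreement and stability measures listed above can be sketched without external libraries. Under the following assumptions, the functions below compute three measures:

- macro-averaged F1 over every label seen in either the ground truth or the predictions;
- Cohen's kappa, computed as (p_o - p_e) / (1 - p_e);
- a percentile bootstrap interval for accuracy from per-image 0/1 correctness, using a fixed seed.

The seed, the 95% level, and the percentile method are assumptions, not details taken from [2].

```python
import random
from collections import Counter
from typing import List, Sequence, Tuple


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 over every label seen in either list."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def cohens_kappa(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Agreement beyond chance: (p_o - p_e) / (1 - p_e)."""
    n = len(y_true)
    p_o = sum(t == p for t, p in zip(y_true, y_pred)) / n
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    p_e = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    # p_e == 1 only when both lists use one identical label everywhere (perfect agreement).
    return 1.0 if p_e == 1 else (p_o - p_e) / (1 - p_e)


def bootstrap_accuracy_ci(correct: List[int], iterations: int = 1000,
                          level: float = 0.95, seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap interval for accuracy from per-image 0/1 correctness."""
    rng = random.Random(seed)
    n = len(correct)
    stats = sorted(sum(rng.choice(correct) for _ in range(n)) / n for _ in range(iterations))
    lo_idx = round((1 - level) / 2 * iterations)
    hi_idx = round((1 + level) / 2 * iterations) - 1
    return stats[lo_idx], stats[hi_idx]


if __name__ == "__main__":
    y_true = ["T1w", "T2w", "FLAIR", "FLAIR"]
    y_pred = ["T1w", "T2w", "T1w", "FLAIR"]
    print(macro_f1(y_true, y_pred), cohens_kappa(y_true, y_pred))
    print(bootstrap_accuracy_ci([1] * 127 + [0] * 3))  # made-up vector with 127/130 correct
```

Per-sequence stability estimates follow the same pattern, calling the bootstrap on each sequence's subset of images.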

Specialized Assessment Frameworks

For comprehensive evaluation of radiology report generation, the Feature-Oriented Radiology Task Evaluation (FORTE) framework provides a structured approach to assess clinical essence beyond traditional metrics [18]. FORTE evaluates four essential keyword components in diagnostic radiology sentences: degree, landmark, feature, and impression [18]. The protocol involves:

- Sentence Pairing: Decompose multi-sentence paragraphs into sentence-level units, relaxing the sequential constraints between the reference report and the generated output

- Negation Removal: Filter out irrelevant image descriptions to enhance alignment between generated content and evaluation scores

- Structured Keyword Extraction: Assess medical content through a categorized system that addresses multi-semantic context, recognizes synonyms, and enables transferability across modalities
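To show the general shape of a keyword-based evaluation like FORTE, the sketch below runs three steps:

1. It removes sentences containing negation cues (one reading of the negation-removal step).
2. It maps synonyms to canonical keywords per category.
3. It scores each of the four categories with F1.

The mini-lexicon, the negation cues, and the scoring details are hypothetical simplifications for illustration. They are not the published FORTE keyword lists or implementation [18].

```python
import re
from typing import Dict, Set

# Hypothetical mini-lexicon: category -> {canonical keyword: synonyms}.
LEXICON: Dict[str, Dict[str, Set[str]]] = {
    "degree": {"mild": {"mild", "slight"}, "severe": {"severe", "marked"}},
    "landmark": {"ventricle": {"ventricle", "ventricles"}, "basal ganglia": {"basal ganglia"}},
    "feature": {"hypodensity": {"hypodensity", "hypodense"}, "atrophy": {"atrophy", "atrophic"}},
    "impression": {"infarct": {"infarct", "infarction"}, "hemorrhage": {"hemorrhage", "bleed"}},
}
NEGATION = re.compile(r"\b(no|without|absent|negative for)\b", re.IGNORECASE)


def remove_negated(report: str) -> str:
    """Drop sentences that contain a negation cue (e.g. 'No hemorrhage.')."""
    sentences = re.split(r"(?<=[.!?])\s+", report.strip())
    return " ".join(s for s in sentences if not NEGATION.search(s))


def extract(report: str) -> Dict[str, Set[str]]:
    """Canonical keywords found per category, so synonyms count as the same concept."""
    text = report.lower()
    return {cat: {canon for canon, syns in terms.items()
                  if any(re.search(rf"\b{re.escape(s)}\b", text) for s in syns)}
            for cat, terms in LEXICON.items()}


def keyword_f1(generated: str, reference: str) -> Dict[str, float]:
    """Per-category F1 between keywords of the generated and reference reports."""
    gen, ref = extract(remove_negated(generated)), extract(remove_negated(reference))
    scores = {}
    for cat in LEXICON:
        tp = len(gen[cat] & ref[cat])
        denom = len(gen[cat]) + len(ref[cat])  # equals 2*TP + FP + FN
        scores[cat] = 2 * tp / denom if denom else 1.0  # both empty: nothing to disagree on
    return scores


if __name__ == "__main__":
    reference = "Mild atrophy with enlarged ventricles. No hemorrhage."
    generated = "Slight atrophic change of the ventricle. Hemorrhage is absent."
    print(keyword_f1(generated, reference))
```

Mapping synonyms to one canonical keyword is what lets "slight atrophic change" match "mild atrophy." Surface metrics such as BLEU would penalize this pair despite the same clinical content.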

Visualization of MLLM Architectures and Workflows

Figure: Typical MLLM architecture for medical imaging.

Figure: MLLM training pipeline.

Figure: Brain MRI sequence classification workflow.

Critical Datasets for Medical MLLM Research

Table 3: Essential Datasets for Medical MLLM Development and Evaluation

| Dataset | Modalities | Body Organ | Primary Use Cases | Sample Size |
|---|---|---|---|---|
| 3D-BrainCT [18] | 3D CT, Text Reports | Brain | 3D CT report generation, Visual instruction tuning | 18,885 text-scan pairs |
| BraTS [20] | MRI (T1, T2, T1c, FLAIR) | Brain | Brain tumor segmentation & classification | Yearly updates (2012-2023) |
| ADNI [20] | sMRI, fMRI, PET | Brain | Alzheimer's disease classification | Longitudinal data (2004-2027) |
| MIMIC-CXR [16] | Chest X-ray, Reports | Chest | Radiology report generation, VQA | Large-scale (varies) |
| VQA-RAD [16] | Medical Images, QA Pairs | Multiple | Visual question answering | 11,000+ questions |
| MultiMedBench [16] | Multimodal Clinical Data | Multiple | Multimodal data synthesis, Reasoning | Comprehensive |

Computational Frameworks and Model Architectures

Foundation Models:

- LLaVA-Med: Specialized for biomedical domains, demonstrating success in single-slice CT and X-ray report generation [18]

- Med-PaLM Multimodal: Google Research's model showing preliminary success in medical multimodal tasks [18]

- Otter: General foundation model that can be adapted for medical use through clinical visual instruction tuning [18]

Evaluation Frameworks:

- FORTE (Feature-Oriented Radiology Task Evaluation): Specialized evaluation system that captures clinical essence by assessing degree, landmark, feature, and impression components in generated reports [18]

- Traditional NLP Metrics: BLEU, METEOR, ROUGE-L, CIDEr - though these show limited correlation with clinical quality [18]

- Clinical Consistency Metrics: Turing-test like evaluations with physician raters, keyword recall rates, and negation removal analysis [18]

Implementation Tools:

- Low-Rank Adaptation (LoRA): Parameter-efficient fine-tuning method that reduces computational demands while maintaining performance [1] [16]

- Reinforcement Learning from Human Feedback (RLHF): Critical for aligning model outputs with clinical preferences and reducing hallucinations [1]

- Chain-of-Thought (CoT) Annotations: Enhance model reasoning capabilities through step-by-step reasoning processes [16]

Application Notes for Brain MRI Sequence Classification Research

Practical Implementation Considerations

Data Preprocessing Protocols:

- Ensure high-quality image exports (minimum 994 × 1382 pixels) without compression artifacts

- Maintain original resolution and anatomical proportions

- Implement rigorous anonymization procedures to remove patient identifiers

- Standardize image selection criteria (e.g., clear visualization of lateral ventricles for brain MRI)

- Establish ground truth labeling through consensus reading by multiple expert radiologists

Model Selection Guidelines:

- For sequence classification tasks: ChatGPT-4o achieved the highest accuracy (97.69%) in the comparative evaluation cited here [2]

- For resource-constrained environments: Consider specialized smaller models like Infi-Med-3B that maintain competitive performance [16]

- For 3D volume analysis: BrainGPT with Clinical Visual Instruction Tuning provides specialized capability for volumetric data [18]

- For visual grounding tasks: Implement attention refinement approaches like VGRefine to address inadequate visual grounding in medical images [19]

Limitations and Mitigation Strategies

Hallucination Management: Recent studies report concerning instances of model hallucinations, including Gemini 2.5 Pro generating irrelevant clinical details such as "hypoglycemia" and "Susac syndrome" without supporting image evidence [2]. Mitigation strategies include:

- Implementation of alignment tuning with clinical expert feedback

- Incorporation of uncertainty quantification in model outputs

- Development of hybrid systems that combine MLLMs with traditional computer vision approaches for verification

- Establishing rigorous human-in-the-loop validation protocols for clinical deployment
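A hybrid check of the kind listed above could route an MLLM answer to human review under two conditions: its sequence label disagrees with an independent image classifier, or its free-text reply mentions clinical terms that the task never asked for. Everything here is a hypothetical sketch. The label normalization table, the out-of-scope term list (seeded with the two hallucinated terms reported in [2]), and the routing rule are all assumptions.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical synonym table used to compare free-text labels with classifier output.
CANONICAL = {
    "flair": "FLAIR", "t2-flair": "FLAIR", "t1": "T1w", "t1-weighted": "T1w",
    "t2": "T2w", "t2-weighted": "T2w", "swi": "SWI", "dwi": "DWI", "adc": "ADC",
}
# Terms with no place in a sequence-identification answer; examples taken from [2].
OUT_OF_SCOPE = ["hypoglycemia", "susac syndrome"]


@dataclass
class Review:
    needs_human_review: bool
    reasons: List[str] = field(default_factory=list)


def verify(mllm_sequence: str, cnn_sequence: str, mllm_reply: str) -> Review:
    """Flag an MLLM answer if it disagrees with a CNN or mentions out-of-scope terms."""
    reasons: List[str] = []
    mllm_label = CANONICAL.get(mllm_sequence.strip().lower())
    if mllm_label is None:
        reasons.append(f"unrecognized sequence label: {mllm_sequence!r}")
    elif mllm_label != cnn_sequence:
        reasons.append(f"disagreement: MLLM={mllm_label}, CNN={cnn_sequence}")
    reply = mllm_reply.lower()
    reasons += [f"out-of-scope term: {term}" for term in OUT_OF_SCOPE if term in reply]
    return Review(needs_human_review=bool(reasons), reasons=reasons)


if __name__ == "__main__":
    print(verify("T1-weighted", "FLAIR", "5. T1-weighted"))
    print(verify("FLAIR", "FLAIR", "5. FLAIR. Findings may suggest Susac syndrome."))
```

The design errs toward review. Any disagreement, unknown label, or unexpected clinical term sends the case to a radiologist instead of trying to decide which model is right.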

Visual Grounding Enhancement: Systematic investigations reveal that medical MLLMs often fail to ground their predictions in clinically relevant image regions, in contrast to their behavior on natural images [19]. The VGRefine method addresses this by refining attention distributions at inference time, achieving state-of-the-art performance across diverse Med-VQA benchmarks without additional training [19].

Evaluation Methodologies: Traditional NLP metrics frequently fail to capture clinical essence and show poor correlation with diagnostic quality [18]. The FORTE framework provides a structured alternative focusing on clinical relevance through categorized keyword extraction that addresses multi-semantic context, recognizes synonyms, and enables transferability across imaging modalities [18].

Future Research Directions

The evolution of medical MLLMs for brain MRI analysis will likely focus on several critical frontiers [1]:

- developing robust foundation models pre-trained on large-scale medical datasets;
- incorporating region-grounded reasoning to link model outputs to specific image regions;
- establishing comprehensive evaluation frameworks that better capture clinical utility;
- creating strategies for safe and effective integration into clinical workflows.

Particular attention should go to overcoming current limitations in 3D medical image interpretation, enhancing visual grounding, and reducing hallucination risks through improved training methodologies and validation frameworks [18] [19]. As these technologies mature, rigorous clinical validation and thoughtful implementation will be essential to realizing their potential as trusted AI partners in medical imaging.

Implementing MLLMs: From Protocol Selection to Automated Report Generation

Multimodal large language models (MLLMs) represent a transformative advancement in artificial intelligence, capable of processing and interpreting both visual and textual data. Within the specialized domain of brain MRI sequence classification, two distinct methodological paradigms have emerged: zero-shot prompting of generalist foundation models and the deployment of fine-tuned specialist models. Zero-shot prompting leverages the broad capabilities of pre-trained models without additional task-specific training, while fine-tuning adapts these models to specialized domains through targeted training on curated datasets. This article examines both approaches within the context of brain MRI research, providing a comprehensive analysis of their comparative strengths, limitations, and optimal application scenarios.

Performance Comparison in Brain MRI Classification

Quantitative Performance Metrics

Recent comparative studies reveal significant performance differences between zero-shot and fine-tuned approaches across various brain MRI classification tasks. The table below summarizes key findings from empirical evaluations:

Table 1: Performance comparison of LLM approaches in brain MRI classification tasks

| Model Type | Specific Model | Task Description | Performance Metric | Result |
|---|---|---|---|---|
| Zero-Shot MLLM | ChatGPT-4o | MRI sequence classification | Accuracy | 97.69% [2] |
| Zero-Shot MLLM | Gemini 2.5 Pro | MRI sequence classification | Accuracy | 93.08% [2] |
| Zero-Shot MLLM | Claude 4 Opus | MRI sequence classification | Accuracy | 73.08% [2] |
| Fine-Tuned Specialist | Japanese BERT (fine-tuned) | Brain MRI report classification | Accuracy | 97.00% [21] |
| Fine-Tuned Specialist | BrainGPT | Automatic 3D brain CT report generation | FORTE F1-score | 0.71 [18] |
| Fine-Tuned Specialist | Brainfound | Multiple-choice questions | Accuracy advantage over GPT-4V | +47.68% [22] |
| Fine-Tuned Specialist | FG-PAN | Zero-shot brain tumor subtype classification | Comparison with prior methods | State-of-the-art (reported) [23] |

Task-Specific Performance Analysis

The performance gap between approaches varies with task complexity. For fundamental recognition tasks, including modality identification, anatomical region recognition, and imaging plane classification, zero-shot models achieve near-perfect accuracy (99.23% to 100%) [2]. For more specialized tasks such as specific MRI sequence classification, zero-shot accuracy diverges considerably between models (73.08% to 97.69%) [2]. Meanwhile, fine-tuned models report strong results on domain-specific tasks such as report classification and report generation, where capturing clinical terminology and domain-specific nuance matters [18] [21]. Because these results come from different tasks and datasets, they indicate relative strengths rather than a head-to-head comparison.

Notably, zero-shot models exhibit specific weakness patterns in brain MRI classification. The most frequent misclassifications involve distinguishing between fluid-attenuated inversion recovery (FLAIR) sequences and T1-weighted or diffusion-weighted sequences [2]. Furthermore, models like Claude 4 Opus show particular difficulties with susceptibility-weighted imaging (SWI) and apparent diffusion coefficient (ADC) sequences [2].

Experimental Protocols

Protocol 1: Zero-Shot Evaluation of Multimodal LLMs for MRI Sequence Classification

This protocol outlines the methodology for assessing pre-trained multimodal LLMs on brain MRI sequence classification without additional training [2].

Table 2: Research reagents and materials for zero-shot MRI classification

| Item | Specification | Purpose |
|---|---|---|
| Brain MRI Dataset | 130 images, 13 standard MRI series from adult patients without pathological findings | Evaluation benchmark |
| Model Interfaces | Official web interfaces of ChatGPT-4o, Claude 4 Opus, Gemini 2.5 Pro | Model access |
| Standardized Prompt | Predefined English text with specific questions about modality, anatomy, plane, contrast, sequence | Consistent evaluation |
| Statistical Analysis | Cochran's Q test, McNemar test with Bonferroni correction | Performance comparison |

Procedure:

Dataset Curation:

- Select 130 brain MRI images representing 13 standard MRI series (including axial T1-weighted, T2-weighted, FLAIR, SWI, DWI, ADC, and contrast-enhanced variants)

- Ensure images are from adult patients without pathological findings

- Export images in high-quality JPEG format (minimum resolution: 994 × 1382 pixels) with minimal compression, without cropping or annotations [2]

Model Setup:

- Access each model (ChatGPT-4o, Claude 4 Opus, Gemini 2.5 Pro) through their official web interfaces

- Initiate new sessions for each prompt to prevent in-context adaptation [2]

Prompting Strategy:

- Use standardized zero-shot prompt: "This is a medical research question for evaluation purposes only. Your response will not be used for clinical decision-making. No medical responsibility is implied. Please examine this medical image and answer the following questions: What type of radiological modality is this examination? Which anatomical region does this examination cover? What is the imaging plane (axial, sagittal, or coronal)? Is this a contrast-enhanced image or not? If this image is an MRI, what is the specific MRI sequence? If it is not an MRI, write 'Not applicable.' Please number your answers clearly." [2]

Evaluation:

- Two radiologists review each response and classify it as "correct" or "incorrect" by consensus

- Define hallucinations as statements unrelated to the input image or prompt context

- Calculate accuracy for each classification task

- Perform statistical analysis using Cochran's Q test for overall comparison and McNemar tests with Bonferroni correction for pairwise comparisons [2]

Figure 1: Zero-shot evaluation workflow for MRI sequence classification

Protocol 2: Fine-Tuning Specialist Models for Brain MRI Report Classification

This protocol details the methodology for creating specialist models through fine-tuning on domain-specific data, adapted from approaches used for brain MRI report classification [21] and foundation model development [18] [22].

Table 3: Research reagents and materials for fine-tuning specialist models

| Item | Specification | Purpose |
|---|---|---|
| Base Model | Pretrained Japanese BERT (110M parameters) or similar foundation model | Starting point for fine-tuning |
| Training Dataset | 759 brain MRI reports (nontumor, posttreatment, pretreatment tumor cases) | Task-specific training |
| Validation Dataset | 284 brain MRI reports | Hyperparameter tuning |
| Test Dataset | 164 brain MRI reports | Final evaluation |
| Computational Resources | Workstation with NVIDIA GeForce RTX 3090 GPU, 128 GB RAM | Model training |
| Fine-tuning Framework | Python 3.10.13, Transformers library 4.35.2 | Implementation environment |

Procedure:

Dataset Preparation:

- Collect brain MRI reports from Picture Archiving and Communication System (PACS) and teaching file systems

- Categorize reports into three groups: nontumor (group 1), posttreatment tumor (group 2), and pretreatment tumor (group 3)

- Divide data into training (759 reports), validation (284 reports), and test (164 reports) sets

- Ensure reports are anonymized and contain no personal data [21]
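A reproducible split into the three sets can be sketched as follows. The function name, the seed, and the use of a simple random shuffle are assumptions. The original study may have assigned reports to each set differently, for example by source system (PACS versus teaching files).

```python
import random

def split_reports(reports, sizes=(759, 284, 164), seed=0):
    """Shuffle reports reproducibly and cut them into train/validation/test sets.

    Assumes a simple random split; the seed makes the split repeatable.
    """
    if sum(sizes) != len(reports):
        raise ValueError(f"expected {sum(sizes)} reports, got {len(reports)}")
    shuffled = list(reports)
    random.Random(seed).shuffle(shuffled)  # local RNG, global state untouched
    n_train, n_val, _ = sizes
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])

# Hypothetical (report_id, group) pairs; groups 1-3 as defined above.
reports = [(f"r{i:04d}", 1 + i % 3) for i in range(1207)]
train, val, test = split_reports(reports)
print(len(train), len(val), len(test))  # 759 284 164
```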

Model Configuration:

- Initialize with pretrained base model (e.g., BERT-base-Japanese with 12 layers, 768 hidden dimensions, 12 attention heads)

- Configure model for sequence classification using AutoModelForSequenceClassification class

- Set hyperparameters empirically based on validation performance (e.g., 10 epochs) [21]

Fine-Tuning Process:

- Conduct multiple training sessions (e.g., 15 repetitions) with the same hyperparameters to account for run-to-run randomness

- Fine-tune model on training dataset, validating after each epoch

- Select final model based on highest performance on validation dataset [21]
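The repeat-and-select procedure can be sketched in plain Python. `run_training` is a hypothetical callable standing in for one complete fine-tuning run (for example, a Transformers training loop seeded differently each time) that returns validation accuracy after every epoch. In practice you would also save the model weights at each epoch so that the selected checkpoint can be reloaded.

```python
from typing import Callable, List, Tuple

def select_best_checkpoint(
    run_training: Callable[[int], List[float]], n_runs: int = 15
) -> Tuple[int, int, float]:
    """Repeat fine-tuning n_runs times; return (run, epoch, val_acc) of the best checkpoint.

    Ties keep the earliest checkpoint (strict '>' comparison).
    """
    best = (-1, -1, float("-inf"))
    for seed in range(n_runs):
        for epoch, val_acc in enumerate(run_training(seed), start=1):
            if val_acc > best[2]:
                best = (seed, epoch, val_acc)
    return best

# Made-up per-epoch validation accuracies for three runs, for illustration only.
fake_scores = {0: [0.70, 0.80], 1: [0.75, 0.90], 2: [0.90, 0.85]}
print(select_best_checkpoint(lambda seed: fake_scores[seed], n_runs=3))  # (1, 2, 0.9)
```

Selecting on validation data only, then evaluating once on the held-out test set, keeps the test estimate unbiased by the repeated runs.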

Evaluation:

- Assess selected model on independent test dataset

- Compare model performance against two human radiologists (with 6 years and 1 year of experience, respectively)

- Measure the time each reader (the model and each radiologist) requires to complete the classification task

- Use McNemar's test to compare sensitivity, specificity, and accuracy between the model and human readers [21]
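For a three-group task, sensitivity and specificity require a choice of positive class. The sketch below assumes a one-vs-rest definition (for example, pretreatment tumor, group 3, versus the other two groups), and the cited study's exact definition may differ. `one_vs_rest_metrics` is a hypothetical helper. Its accuracy counts correct positive/negative calls, which is not the same as overall three-class accuracy.

```python
def one_vs_rest_metrics(y_true, y_pred, positive):
    """Sensitivity, specificity, and accuracy with `positive` as the target group."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    return {
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
        "accuracy": (tp + tn) / len(pairs),
    }

# Made-up labels: 1 = nontumor, 2 = posttreatment tumor, 3 = pretreatment tumor.
truth = [1, 1, 2, 3, 3, 3]
model = [1, 2, 2, 3, 3, 1]
print(one_vs_rest_metrics(truth, model, positive=3))

# Per-case correctness (1 = correct) for one reader; McNemar's test compares
# such paired vectors between the model and each radiologist.
model_correct = [int(t == p) for t, p in zip(truth, model)]
```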

Figure 2: Fine-tuning protocol for specialist model development

The Scientist's Toolkit: Essential Research Reagents

Implementing effective brain MRI classification systems requires carefully selected resources and methodologies. The following table catalogs essential research reagents and their applications:

Table 4: Essential research reagents for brain MRI classification research

| Category | Item | Specification/Example | Application |
|---|---|---|---|
| Datasets | Brain MRI Images | 130 images, 13 sequences, normal findings [2] | Zero-shot evaluation |
| | Brain MRI Reports | 759 training, 284 validation, 164 test reports [21] | Fine-tuning specialist models |
| | BraTS 2020 | Multi-modal MRI scans with expert annotations [24] | Glioma classification benchmarks |
| | 3D-BrainCT | 18,885 text-scan pairs [18] | 3D report generation training |
| | BrainCT-3M & BrainMRI-7M | 3M CT and 7M MRI images with reports [22] | Large-scale foundation model training |
| Models | General MLLMs | ChatGPT-4o, Claude 4 Opus, Gemini 2.5 Pro [2] | Zero-shot classification |
| | Fine-tuned Specialists | Brainfound, BrainGPT, FG-PAN [18] [22] [23] | Domain-specific applications |
| | Vision-Language Models | CLIP, FLAVA, ALIGN [23] | Zero-shot classification backbone |
| Evaluation Metrics | Traditional NLP | BLEU, METEOR, ROUGE [18] | Report quality assessment |
| | Clinical Evaluation | FORTE (Feature-Oriented Radiology Task Evaluation) [18] | Clinical essence measurement |
| | Statistical Tests | Cochran's Q, McNemar tests [2] | Performance comparison |

Discussion and Implementation Guidelines

Approach Selection Framework

The choice between zero-shot and fine-tuned approaches depends on several factors, including task complexity, data availability, and performance requirements. The following diagram illustrates the decision process for selecting the appropriate methodological approach:

Figure 3: Decision framework for selecting methodological approaches

Performance and Resource Trade-offs

The selection between methodological approaches involves balancing multiple factors:

Zero-Shot Prompting Advantages:

- Immediate deployment without training requirements [2]

- Lower computational costs and infrastructure needs

- Broad generalization across diverse task types [2]

- Access to cutting-edge capabilities through API-based models

Fine-Tuned Specialist Advantages:

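- Superior performance on specialized, clinically complex tasks [21], where even general-purpose MLLMs vary widely in zero-shot accuracy (97.69% for ChatGPT-4o vs. 73.08% for Claude 4 Opus on sequence classification) [2]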

- Reduced hallucinations and improved clinical reliability [2] [18]

- Domain-specific optimization for particular use cases [22] [23]

- Data efficiency once fine-tuned, with potential for continuous improvement

Emerging Trends and Future Directions

Recent research indicates several promising developments in both approaches:

Advanced Fine-Tuning Techniques: Methods like Clinical Visual Instruction Tuning (CVIT) demonstrate significant improvements in generating clinically sensible reports, with BrainGPT achieving a 0.71 FORTE F1-score and 74% of reports being indistinguishable from human-written ground truth [18].

Hybrid Approaches: Frameworks like FG-PAN combine zero-shot classification with fine-grained patch-text alignment, achieving state-of-the-art performance in brain tumor subtype classification without extensive labeled data [23].

Foundation Model Scaling: Evidence suggests that scaling model size improves alignment with human brain activity more than instruction tuning does, indicating that model scale is a key design decision in model development [25].
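The zero-shot idea behind the hybrid approaches above, and behind vision-language backbones such as CLIP, is to score an image against text prompts in a shared embedding space. The sketch below illustrates only that general idea and is not FG-PAN's method. The max-over-patches aggregation is an assumed, simplified stand-in for fine-grained patch-text alignment, the temperature value is an illustrative choice, and the toy 3-D vectors replace real encoder outputs.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def zero_shot_classify(patch_embs, label_embs, temperature=0.01):
    """Score each label by its best-matching image patch, then softmax over labels.

    patch_embs: list of patch embeddings; label_embs: label -> text-prompt embedding.
    """
    names = list(label_embs)
    logits = [max(cosine(p, label_embs[name]) for p in patch_embs) / temperature
              for name in names]
    top = max(logits)  # subtract the max before exp for numerical stability
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return {name: e / total for name, e in zip(names, exps)}

# Toy vectors standing in for real image-patch and text-prompt embeddings.
labels = {"tumor subtype A": [1.0, 0.0, 0.0], "tumor subtype B": [0.0, 1.0, 0.0]}
patches = [[0.9, 0.1, 0.0], [0.2, 0.3, 0.9]]
probs = zero_shot_classify(patches, labels)
print(max(probs, key=probs.get))
```

No labeled training images are needed: adding a class only requires writing and embedding a new text prompt.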

The methodological divide between zero-shot prompting and fine-tuned specialist models represents a fundamental consideration in developing AI systems for brain MRI sequence classification. Zero-shot approaches offer practicality and broad applicability for fundamental recognition tasks, while fine-tuned specialists deliver superior performance for complex, clinically significant classification challenges. The optimal approach depends on specific use case requirements, with emerging hybrid methodologies offering promising pathways to leverage the strengths of both paradigms. As multimodal LLMs continue to evolve, the strategic selection and implementation of these methodological approaches will play a crucial role in advancing brain MRI research and clinical applications.

Automating MRI Protocol Selection and Design with Multi-Agent LLM Systems

The integration of Large Language Models into radiology represents a paradigm shift, moving beyond narrative report generation to tackle complex, procedural tasks. The automation of Magnetic Resonance Imaging protocol selection and design, a critical yet time-consuming process in the clinical workflow, stands as a prime candidate for this transformation. Traditional protocoling consumes a significant portion of radiologists' time—approximately 6.2% to 17% of their work shift—and is prone to human error, with studies indicating that over 37% of protocoling-related issues are amenable to automation [26] [27]. Early machine learning approaches demonstrated feasibility but often struggled with institutional specificity and the nuanced reasoning required for protocol selection. The advent of Multimodal LLMs and sophisticated AI architectures, particularly Multi-Agent LLM Systems, now offers a path toward more intelligent, context-aware, and autonomous solutions. These systems can process complex clinical indications, integrate institutional guidelines, and even generate pulse sequences, thereby promising to enhance efficiency, standardize protocols, reduce errors, and free up expert radiologists for higher-level diagnostic duties. This document outlines the application notes and experimental protocols for implementing such systems, with a specific focus on brain MRI within the broader context of multimodal LLM research for sequence classification.

Research into AI-driven MRI protocoling spans traditional machine learning, convolutional neural networks, and the latest large language models. The tables below summarize the performance of various approaches, providing a benchmark for the current state of the technology.

Table 1: Performance of Traditional Machine Learning and Deep Learning Models in Automated Protocoling

| Model Type | Modality | Task | Dataset Size | Number of Protocols/Sequences | Reported Accuracy | Citation |
|---|---|---|---|---|---|---|
| Support Vector Machine | MRI & CT | Protocol Selection | ~700,000 reports | 293 (MRI) | 86.9% (MRI) | [28] |
| Convolutional Neural Network | Prostate MRI | DCE Sequence Decision | 300 training, 100 validation | Binary (bpMRI vs. mpMRI) | AUC: 0.88 | [29] |
| ResNet-18 | Brain MRI | Sequence Classification | 10,771 exams, 43,601 MRIs | 9 sequence classes | Benchmark for domain shift | [4] |
| MedViT (CNN-Transformer) | Brain MRI | Sequence Classification under Domain Shift | 10,771 exams (Adult) → 2,383 (Pediatric) | 6 sequence classes | 0.905 (after expert adjustment) | [4] |

Table 2: Performance of Large Language Models in MRI Protocoling and Sequence Recognition

| Model | Task | Key Enhancement | Performance | Comparison | Citation |
|---|---|---|---|---|---|
| GPT-4o | Brain MRI Sequence Recognition | Zero-shot prompting | 97.7% sequence accuracy | Outperformed other MLLMs | [17] |
| Gemini 2.5 Pro | Brain MRI Sequence Recognition | Zero-shot prompting | 93.1% sequence accuracy | Occasional hallucinations | [17] |
| Claude 4 Opus | Brain MRI Sequence Recognition | Zero-shot prompting | 73.1% sequence accuracy | Lower accuracy on SWI/ADC | [17] |
| GPT-4o | Neuroradiology Protocol Selection | Retrieval-Augmented Generation (RAG) | 81% sequence prediction accuracy | Matched radiologists (81% ± 0.21, P=0.43) | [27] |
| LLaMA 3.1 405B | Neuroradiology Protocol Selection | Retrieval-Augmented Generation (RAG) | 70% sequence prediction accuracy | Lower than GPT-4o (P<0.001) | [27] |
| Multi-Agent LLM System | MR Exam Design | Multi-Agent Framework | Demonstrated feasibility | Automated protocol/sequence design from health record | [13] |

Experimental Protocols for Multi-Agent LLM Systems in MRI Protocoling

Protocol 1: Implementing a Multi-Agent LLM System with RAG for Protocol Selection

1. Objective: To establish and validate a multi-agent LLM system capable of accurately selecting institution-specific MRI brain protocols based on a patient's clinical presentation, leveraging Retrieval-Augmented Generation to ensure recommendations adhere to local guidelines.

2. Background: A primary challenge in automated protocoling is the lack of standardization across institutions. LLMs, in their base form, lack knowledge of local protocols and are prone to hallucination. A study by Wagner et al. demonstrated that a context-aware, RAG-based pipeline can streamline protocol selection, minimizing manual input and training needs [13].

3. Materials and Reagents:

- Computing Hardware: Workstation with high-speed internet connection and/or access to cloud computing services (e.g., AWS, Google Cloud) for running LLM APIs.

- Software & Libraries: Python 3.12+, LangChain framework, Replicate API (for open-source models like LLaMA), OpenAI API.

- LLMs: Proprietary model (e.g., GPT-4o) and/or a powerful open-source model (e.g., LLaMA 3.1 405B).

- Embedding Model: OpenAI's "text-embedding-ada-002" or an equivalent open-source model.

- Data: A curated, institution-specific protocol guideline document (PDF or text format) detailing all available brain MRI protocols and their corresponding sequences.

4. Workflow Procedure:

- Step 1: Data Preprocessing. Convert the institutional protocol guideline PDF into plain text. Use a recursive character text splitter (e.g., from LangChain) to segment the document into chunks of ~400 characters with no overlap, ensuring each protocol is a distinct unit [27].

- Step 2: Vector Database Creation. Use the chosen embedding model to convert each text chunk into a vector representation. Store all vectors in a vector database (e.g., FAISS, Chroma) to enable efficient similarity search [27].

- Step 3: Multi-Agent System Design.

  - Agent A: "Clinical Indication Interpreter": This agent's role is to extract key clinical entities from the free-text clinical question (e.g., "rule out multiple sclerosis," "evaluate for acute stroke"). It summarizes the patient's presentation into structured keywords.

  - Agent B: "Protocol Retriever": This agent takes the keywords from Agent A and queries the vector database. It retrieves the top K (e.g., 4) most relevant protocol guidelines based on semantic similarity [27].

  - Agent C: "Protocol Selector & Justifier": This final agent receives the retrieved protocol guidelines and the original clinical question. It is prompted to select the single most appropriate protocol from the retrieved options and output the MRI sequences exactly as listed. It can also be instructed to provide a brief justification for its selection.
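Steps 1 to 3 can be prototyped end to end with the standard library alone, which makes the control flow easy to inspect before wiring in LangChain, a real embedding model, and a vector database. In the sketch below, every component is an assumed stand-in:

- `split_text` is a simplified recursive character splitter, not LangChain's implementation.
- `embed` is a toy hashed bag-of-words embedding in place of text-embedding-ada-002.
- `VectorStore` replaces FAISS or Chroma.
- `canned_llm` is a hypothetical fixed-response function in place of GPT-4o or LLaMA.

The guideline text is invented. The demo uses a 120-character chunk size so that its three short protocols land in separate chunks. The verbatim check in `selector_agent` is one simple, assumed way to enforce the "exactly as listed" instruction and catch hallucinated sequences.

```python
import math
import re
import zlib
from collections import Counter
from typing import Callable, List

LLM = Callable[[str], str]  # any function mapping a prompt to a completion

def split_text(text: str, chunk_size: int = 400,
               separators=("\n\n", "\n", " ")) -> List[str]:
    """Simplified recursive character splitter with no overlap."""
    if len(text) <= chunk_size:
        return [text] if text.strip() else []
    if not separators:  # last resort: hard cut every chunk_size characters
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    sep, finer = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = current + sep + piece if current else piece
        if len(candidate) <= chunk_size:  # greedily merge small pieces
            current = candidate
            continue
        if current.strip():
            chunks.append(current)
        if len(piece) <= chunk_size:
            current = piece
        else:  # oversized piece: retry with the next, finer separator
            chunks.extend(split_text(piece, chunk_size, finer))
            current = ""
    if current.strip():
        chunks.append(current)
    return chunks

def embed(text: str, dim: int = 256) -> List[float]:
    """Toy hashed bag-of-words embedding, L2-normalized (crc32 is deterministic)."""
    vec = [0.0] * dim
    for token, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        vec[zlib.crc32(token.encode()) % dim] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

class VectorStore:
    """In-memory similarity index over text chunks."""

    def __init__(self, chunks: List[str]):
        self.chunks = chunks
        self.vectors = [embed(c) for c in chunks]

    def search(self, query: str, k: int = 4) -> List[str]:
        q = embed(query)  # unit vectors, so the dot product is cosine similarity
        scores = [sum(a * b for a, b in zip(q, v)) for v in self.vectors]
        ranked = sorted(range(len(scores)), key=scores.__getitem__, reverse=True)
        return [self.chunks[i] for i in ranked[:k]]

def interpreter_agent(llm: LLM, question: str) -> str:
    """Agent A: condense the free-text clinical question into keywords."""
    return llm("Extract the key clinical entities from this MRI request as "
               f"comma-separated keywords:\n{question}")

def selector_agent(llm: LLM, question: str, options: List[str]) -> str:
    """Agent C: choose one retrieved protocol and copy its sequences verbatim."""
    listing = "\n\n".join(f"Option {i + 1}:\n{o}" for i, o in enumerate(options))
    answer = llm("Choose the single most appropriate protocol below and output its "
                 f"MRI sequences exactly as listed.\nClinical question: {question}\n\n"
                 f"{listing}").strip()
    if not any(answer and answer in o for o in options):  # reject hallucinated output
        raise ValueError(f"answer not found in retrieved protocols: {answer!r}")
    return answer

def run_pipeline(llm: LLM, store: VectorStore, question: str, k: int = 4) -> str:
    keywords = interpreter_agent(llm, question)    # Agent A
    options = store.search(keywords, k=k)          # Agent B: retrieve top-k chunks
    return selector_agent(llm, question, options)  # Agent C

# Invented three-protocol guideline and a canned LLM, for illustration only.
guidelines = (
    "Protocol: MS / demyelination\n"
    "Sequences: Sag 3D FLAIR, Ax T2, Ax DWI, Ax T1 post-contrast\n\n"
    "Protocol: Acute stroke\nSequences: Ax DWI, Ax FLAIR, Ax SWI, 3D TOF MRA\n\n"
    "Protocol: Brain tumor\n"
    "Sequences: Ax T2, Ax FLAIR, Ax DWI, 3D T1 pre- and post-contrast"
)

def canned_llm(prompt: str) -> str:
    if prompt.startswith("Extract"):
        return "demyelination, MS"
    return "Sag 3D FLAIR, Ax T2, Ax DWI, Ax T1 post-contrast"

store = VectorStore(split_text(guidelines, chunk_size=120))
print(run_pipeline(canned_llm, store, "Rule out multiple sclerosis"))
```

In a real deployment, each agent function would wrap an API call, and a failed verbatim check could trigger a retry or escalation to a radiologist rather than an exception.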