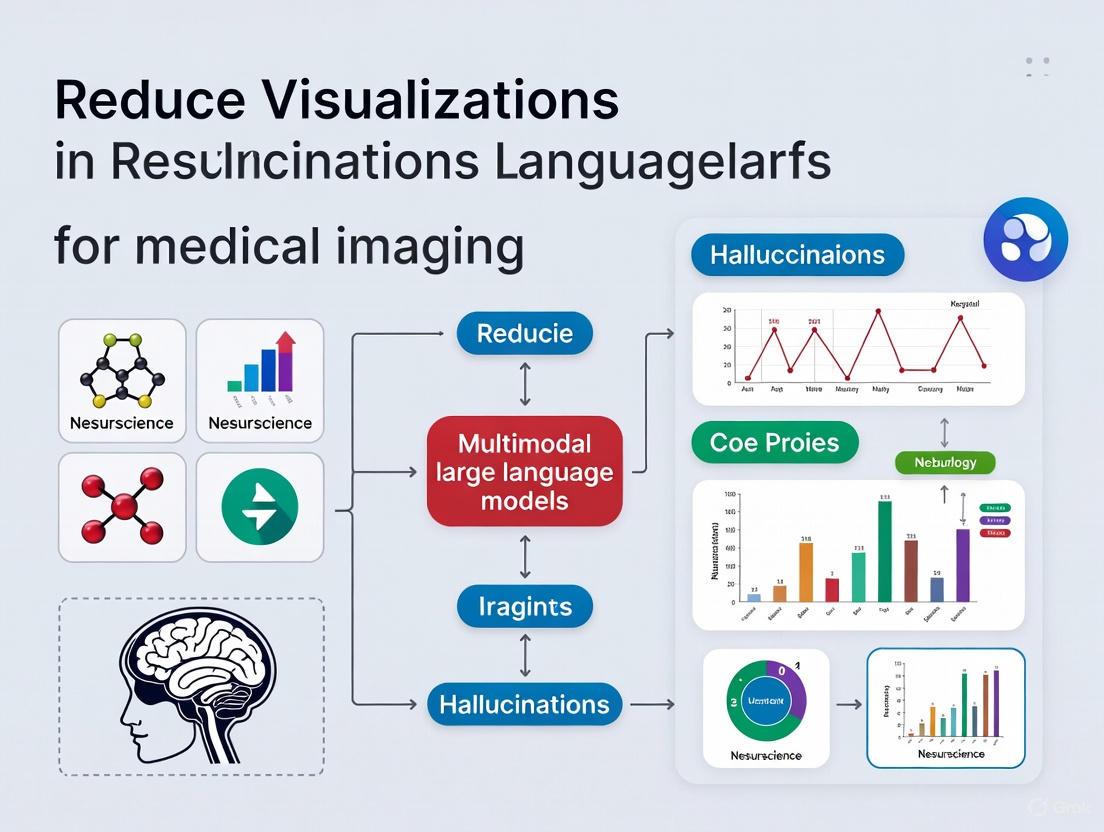

Mitigating Hallucinations in Medical Multimodal LLMs: Strategies for Reliable Clinical Imaging AI

Abstract

This article provides a comprehensive analysis of the challenge of hallucination in Multimodal Large Language Models (MLLMs) applied to medical imaging, a critical barrier to clinical adoption. Tailored for researchers and drug development professionals, it explores the foundational causes of these fabrications, from data heterogeneity to architectural misalignments. We review cutting-edge mitigation methodologies, including Visual Retrieval-Augmented Generation (V-RAG) and specialized training paradigms, and provide a rigorous framework for their evaluation and validation. The content further delves into troubleshooting persistent issues like adversarial vulnerabilities and outlines optimization strategies to balance accuracy with computational efficiency, concluding with synthesized key takeaways and future directions for building trustworthy AI in biomedicine.

Defining the Problem: Understanding Hallucinations in Medical MLLMs

What Are Hallucinations? Clarifying Definitions for Medical Imaging

FAQ 1: What is an AI hallucination in the context of medical imaging?

In medical imaging, an AI hallucination refers to artificial intelligence-generated content that is factually false or misleading but is presented as factual [1]. Specifically, for tasks like image enhancement or report generation, it is the generation of visually realistic and highly plausible abnormalities or artifacts that do not exist in reality and deviate from the anatomic or functional truth [2]. This is distinct from general inaccuracies and poses a significant risk to diagnostic reliability.

FAQ 2: How are hallucinations different from other AI errors?

Hallucinations are a specific category of error. The following table clarifies key distinctions:

| Error Type | Definition | Key Characteristic | Example in Medical Imaging |
|---|---|---|---|
| Hallucination [2] | AI fabricates a realistic-looking abnormality or structure that is not present. | Addition of plausible but false image features or text. | A denoising model adds a small, realistic-looking lesion that does not exist to a PET scan [2]. |
| Illusion [2] | AI misinterprets or misclassifies an existing structure. | Misinterpretation of something that is actually there. | A model incorrectly labels a benign cyst as a malignant tumor. |
| Delusion [2] | AI generates an implausible or dreamlike structure that is clearly not real. | Generation of anatomically impossible or fantastical content. | A model generates an image of an organ in the wrong location or with an impossible shape. |
| Omission [2] | AI removes, or fails to depict, a real structure or lesion. | Removal of true information. | A model removes a real lesion from a scan, replacing it with normal-looking tissue. |

FAQ 3: What are the root causes of hallucinations in Multimodal LLMs for medicine?

Hallucinations can stem from several sources, often interacting with each other:

- Data-Driven Causes: Models trained on low-quality, imbalanced, or noisy datasets can learn incorrect patterns [3]. If the training data contains inaccuracies or source-reference divergences (where the text description doesn't perfectly match the image), the model is encouraged to generate ungrounded content [1].

- Model Architecture & Training Causes: The next-token prediction objective during pre-training incentivizes models to "guess" the next word, even with insufficient information [1]. In vision-language models, poor visual encoding or misalignment between image and text features can lead the language model to ignore the visual evidence and "confabulate" [3].

- Alignment Issues: A failure to properly synchronize and align information from different modalities (e.g., image, text, clinical data) can create contradictory internal representations, leading to incoherent or fabricated outputs [3].

FAQ 4: What experimental methods can quantify hallucinations?

A robust experimental protocol to evaluate hallucinations involves "entity probing" and automated metrics [4].

Experimental Protocol: Entity Probing for Hallucination Evaluation

- Objective: To measure an MLLM's tendency to hallucinate by testing its ability to correctly ground medical entities in a given image.

- Dataset: Use a standardized medical imaging dataset with ground-truth reports (e.g., MIMIC-CXR for chest X-rays) [4].

- Method:

  - For a given medical image, present it to the MLLM alongside a series of yes/no questions about the presence or absence of specific medical entities (e.g., "Is there pleural effusion in this image?") [4].

  - Compare the model's predictions against the answers derived from the ground-truth report.

  - This method avoids sensitivity to phrasing and directly tests factual grounding [4].

- Quantification: Calculate the accuracy of the model's yes/no answers against the ground truth. This provides a clinical perspective on hallucination rates not captured by standard language generation metrics.
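The quantification step can be sketched in a few lines of Python. This is a minimal illustration, assuming the ground-truth yes/no labels have already been derived from the reference reports and the model's replies are short free text; the function names and probing records are hypothetical, and a real pipeline would obtain the replies by querying the MLLM.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple


def normalize_answer(text: str) -> Optional[bool]:
    """Map a free-text reply to True (yes), False (no), or None (unparseable)."""
    words = text.strip().lower().split()
    if not words:
        return None
    first = words[0].strip(".,!?;:")
    if first == "yes":
        return True
    if first == "no":
        return False
    return None


def entity_probing_accuracy(
    records: Iterable[Tuple[str, str, bool]]
) -> Tuple[float, Dict[str, float]]:
    """records: (entity, model_reply, ground_truth) triples, one per yes/no question.

    Unparseable replies count as wrong, so hedging cannot inflate accuracy.
    Returns overall accuracy and accuracy per entity.
    """
    correct, total = 0, 0
    per_entity = defaultdict(lambda: [0, 0])  # entity -> [correct, asked]
    for entity, reply, truth in records:
        pred = normalize_answer(reply)
        hit = int(pred is not None and pred == truth)
        correct += hit
        total += 1
        per_entity[entity][0] += hit
        per_entity[entity][1] += 1
    overall = correct / total if total else 0.0
    by_entity = {e: c / n for e, (c, n) in per_entity.items()}
    return overall, by_entity


# Hypothetical probing results pooled over several images; truth comes from each reference report.
records = [
    ("pleural effusion", "Yes", True),
    ("pleural effusion", "No.", True),
    ("pneumothorax", "no", False),
    ("cardiomegaly", "Not sure", True),
]
overall, by_entity = entity_probing_accuracy(records)
print(overall)    # 0.5
print(by_entity)  # {'pleural effusion': 0.5, 'pneumothorax': 1.0, 'cardiomegaly': 0.0}
```

Counting unparseable replies as errors is a design choice; an alternative is to report them separately as an abstention rate.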

The following workflow diagram illustrates this entity probing process:

FAQ 5: What quantitative data exists on hallucination rates?

Recent studies have quantified the susceptibility of LLMs to hallucinations in clinical contexts. The table below summarizes key findings from an adversarial attack study, where models were prompted with clinical vignettes containing one fabricated detail [5].

Table 1: Hallucination Rates Across LLMs in a Clinical Adversarial Setting

| Large Language Model (LLM) | Default Hallucination Rate (%) | Hallucination Rate with Mitigation Prompt (%) |
|---|---|---|
| GPT-4o | 53 | 23 |
| Claude 3 Opus | 57 | 39 |
| Llama 3 70B | 67 | 52 |
| Gemini 1.5 Pro | 68 | 48 |
| GPT-3.5 Turbo | 72 | 54 |
| Distilled-DeepSeek-R1 | 82 | 68 |
| Mean (Across all models) | 66 | 44 |

Source: Adapted from [5]. The mitigation prompt instructed the model to use only clinically validated information.

FAQ 6: What are effective strategies to mitigate hallucinations?

Multiple strategies can be employed to reduce hallucinations, with Visual Retrieval-Augmented Generation (V-RAG) showing significant promise [4].

Mitigation Strategy: Visual Retrieval-Augmented Generation (V-RAG)

- Principle: Instead of relying solely on the model's internal parameters, V-RAG grounds the generation process by retrieving visually similar images and their associated reports from a database. The model can then compare the query image to these references to determine what is truly important [4].

- Workflow:

- Multimodal Retrieval: For a query image, use a model like BiomedCLIP to extract its image embedding. Then, use a vector database (e.g., FAISS) to retrieve the top-k most similar images and their corresponding reports [4].

- Inference with Retrievals: The retrieved images and reports are prepended to the original prompt as references. The model is then instructed to answer the question based on both the query image and the reference materials, enhancing contextual understanding [4].
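The retrieval step can be illustrated without BiomedCLIP or FAISS. This sketch is an assumption for illustration only: it substitutes toy three-dimensional vectors for BiomedCLIP image embeddings and an exhaustive cosine-similarity scan for the FAISS index, and the database entries are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Entry = Tuple[str, Sequence[float], str]  # (image_id, embedding, report)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_top_k(query: Sequence[float], database: List[Entry], k: int = 2) -> List[Tuple[str, str]]:
    """Return (image_id, report) for the k entries most similar to the query, most similar first.

    Brute-force scoring stands in for an approximate nearest-neighbour index such as FAISS HNSW.
    """
    ranked = sorted(database, key=lambda entry: cosine(query, entry[1]), reverse=True)
    return [(image_id, report) for image_id, _embedding, report in ranked[:k]]


# Toy 3-dimensional vectors; BiomedCLIP image embeddings are 512-dimensional.
database: List[Entry] = [
    ("img_001", [0.9, 0.1, 0.0], "Small left pleural effusion."),
    ("img_002", [0.1, 0.9, 0.1], "No acute cardiopulmonary process."),
    ("img_003", [0.8, 0.2, 0.1], "Moderate left pleural effusion with basilar atelectasis."),
]
references = retrieve_top_k([0.85, 0.15, 0.05], database, k=2)
print([image_id for image_id, _ in references])  # ['img_001', 'img_003']
```

Exhaustive search is linear in the size of the reference collection, which is why an approximate index such as FAISS becomes worthwhile once the database grows large.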

The following diagram illustrates the V-RAG workflow for mitigating hallucinations:

Table 2: Essential Materials for Hallucination Research in Medical MLLMs

| Item | Function in Research | Example / Reference |
|---|---|---|
| BiomedCLIP | A vision-language model that provides robust image and text embeddings for the medical domain, crucial for retrieval tasks in V-RAG [4]. | [4] |
| FAISS Vector Database | A library for efficient similarity search and clustering of dense vectors, enabling fast retrieval of similar images from a large database [4]. | [4] |
| MIMIC-CXR Dataset | A large, publicly available dataset of chest X-rays with corresponding free-text reports, used for training and benchmarking report generation models [4]. | [4] |
| RadGraph Metric | An evaluation metric that extracts clinical entities and relations from generated reports, providing a measure of clinical accuracy (RadGraph-F1) beyond traditional text similarity [4]. | [4] |
| Entity Probing Framework | A methodology to test an MLLM's factual grounding by asking yes/no questions about medical entities in an image, providing a direct measure of hallucination [4]. | [4] |
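To make the RadGraph row concrete, here is a simplified, hypothetical entity-level F1. It assumes entities have already been extracted from both reports (RadGraph uses a trained extraction model for this, which is not shown) and it ignores the relations that the full metric also considers.

```python
from typing import Set, Tuple

Entity = Tuple[str, str]  # (normalized entity text, RadGraph-style label)


def entity_f1(predicted: Set[Entity], reference: Set[Entity]) -> float:
    """Entity-level F1: an entity matches only if both its text and its label agree."""
    if not predicted and not reference:
        return 1.0
    overlap = len(predicted & reference)
    if overlap == 0:
        return 0.0
    precision = overlap / len(predicted)
    recall = overlap / len(reference)
    return 2 * precision * recall / (precision + recall)


# Hypothetical extracted entities. Labels follow RadGraph's scheme
# (ANAT-DP = anatomy; OBS-DP / OBS-DA = observation definitely present / definitely absent).
reference = {("effusion", "OBS-DP"), ("left", "ANAT-DP"), ("pneumothorax", "OBS-DA")}
generated = {("effusion", "OBS-DP"), ("left", "ANAT-DP"), ("pneumothorax", "OBS-DP")}
print(round(entity_f1(generated, reference), 3))  # 0.667
```

Because a match requires both the text and the label, a generated report that asserts a finding the reference marks as absent loses credit, which is how this style of metric penalizes hallucinated findings.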

In the high-stakes field of medical research, fabricated findings and model hallucinations present significant risks that can compromise diagnostic accuracy, patient safety, and the validity of scientific discoveries. The integrity of medical research data faces substantial threats from various forms of deception, while emerging multimodal Large Language Models (MLLMs) introduce new challenges through their tendency to generate hallucinated content. This technical support center provides researchers, scientists, and drug development professionals with essential resources to identify, prevent, and address these critical issues in their experimental workflows, particularly within medical imaging research.

Quantifying the Problem: Data on Deception and Falsification

Frequency of Deceptive Practices in Health Research

Recent studies have quantified how frequently research subjects employ deception across various clinical trial contexts. The table below summarizes findings from subjects who admitted to using deceptive practices in health-related studies over a 12-month period [6].

Table 1: Frequency of Deceptive Practices in Health Research Studies

| Type of Deception | Specific Method | Average Frequency of Use |
|---|---|---|
| Concealment of Information | Mental health information | 58% of studies |
| Concealment of Information | Physical health information | 57% of studies |
| Concealment of Information | Overall concealment | 67% of studies |
| Fabrication to Qualify | Exaggerating health symptoms | 45% of studies |
| Fabrication to Qualify | Pretending to have a health condition | 39% of studies |
| Fabrication to Qualify | Overall fabrication | 53% of studies |
| Data Falsification After Enrollment | Falsely reporting improvement | 38% of studies |
| Data Falsification After Enrollment | Discarding medication to appear compliant | 32% of studies |
| Data Falsification After Enrollment | Overall data falsification | 40% of studies |

Global Impact of Substandard and Falsified Medical Products

The World Health Organization has documented the alarming scope of substandard and falsified medical products globally. These products represent a significant public health threat with both clinical and economic consequences [7].

Table 2: Impact of Substandard and Falsified Medical Products

| Impact Category | Statistical Measure | Global Impact |
|---|---|---|
| Prevalence | Medicines in low- and middle-income countries | At least 1 in 10 medicines |
| Economic Burden | Estimated annual spending in low- and middle-income countries | US$30.5 billion |
| Health Risks | Treatment failure, poisoning, drug-resistant infections | Significant contributor |
| Distribution Channels | Online and informal markets | Commonly sold |

Technical Solutions: Addressing Hallucinations in Medical MLLMs

Visual Retrieval-Augmented Generation (V-RAG)

Multimodal Large Language Models (MLLMs) have demonstrated impressive capabilities in medical imaging tasks but remain prone to hallucinations—generating content not grounded in input data. Visual Retrieval-Augmented Generation (V-RAG) addresses this by incorporating both visual and textual data from retrieved similar images during the generation process [8] [4].

Experimental Protocol: Implementing V-RAG for Medical Imaging

- Multimodal Retrieval: Utilize BiomedCLIP to extract robust image embeddings (dimension: 512) for medical images. Construct a FAISS vector store using the Hierarchical Navigable Small World (HNSW) algorithm for efficient approximate k-nearest-neighbor search [4].

- Reference Integration: For a query image, retrieve top-k similar images and corresponding reports. Structure the prompt to include each reference before the question: "This is the i-th similar image and its report for your reference. [Reference]i... Answer the question with only the word yes or no. Do not provide explanations. According to the last query image and the reference images and reports, [Question] [Query Image]" [4].

- Entity Probing Validation: Present images to the MLLM and ask yes/no questions about disease entities. Compare predictions against answers grounded in an LLM's interpretation of reference reports to quantify hallucination reduction [4].

- Fine-Tuning for Enhanced Comprehension: Implement general image-text fine-tuning tasks to strengthen Med-MLLMs' multimodal understanding when processing multiple retrieved images, enabling single-image-trained models to effectively utilize V-RAG [4].
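The prompt template from the Reference Integration step can be assembled programmatically. This sketch covers only the text; the `<image>` placeholder and the function names are assumptions, because how image inputs are interleaved with text depends on the specific Med-MLLM.

```python
from typing import List, Tuple

IMAGE_TOKEN = "<image>"  # hypothetical placeholder; real image tokens are model-specific


def ordinal(n: int) -> str:
    """1 -> '1st', 2 -> '2nd', 11 -> '11th', 23 -> '23rd'."""
    if 10 <= n % 100 <= 20:
        suffix = "th"
    else:
        suffix = {1: "st", 2: "nd", 3: "rd"}.get(n % 10, "th")
    return f"{n}{suffix}"


def build_vrag_prompt(references: List[Tuple[str, str]], question: str) -> str:
    """Place each retrieved (image, report) pair before the query, following the template above.

    references: (image_id, report) pairs, most similar first. image_id is only
    bookkeeping here; each reference image is represented by IMAGE_TOKEN.
    """
    parts = [
        f"This is the {ordinal(i)} similar image and its report for your reference. "
        f"{IMAGE_TOKEN} {report}"
        for i, (_image_id, report) in enumerate(references, start=1)
    ]
    parts.append(
        "Answer the question with only the word yes or no. Do not provide explanations. "
        "According to the last query image and the reference images and reports, "
        f"{question} {IMAGE_TOKEN}"
    )
    return "\n".join(parts)


prompt = build_vrag_prompt(
    [("img_001", "Small left pleural effusion."),
     ("img_003", "Moderate left pleural effusion with basilar atelectasis.")],
    "Is there pleural effusion in this image?",
)
print(prompt)
```

Keeping the query image last mirrors the template's wording ("According to the last query image..."), so the model can tell the query apart from the references.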

Diagram 1: V-RAG Implementation Workflow

Research Reagent Solutions for Integrity Assurance

Table 3: Essential Research Reagents and Tools for Data Integrity

| Research Tool | Primary Function | Application in Integrity Assurance |
|---|---|---|
| BiomedCLIP | Extracts robust image embeddings from diverse biomedical images | Creates representations for accurate similarity matching in retrieval systems [4] |
| FAISS with HNSW | Enables efficient vector similarity search at scale | Facilitates rapid identification of similar medical images for reference [4] |
| Identity Registries | Tracks research participants across studies | Prevents professional subjects from enrolling in multiple trials concurrently [6] |
| Medication Compliance Tech | Provides objective measures of medication adherence | Detects fraudulent reports of compliance through digital monitoring [6] |
| Entity Probing Framework | Tests model accuracy on specific medical entities | Quantifies hallucination frequency in Med-MLLMs for benchmarking [4] |

Troubleshooting Guides & FAQs

Detection and Prevention of Subject Deception

Q: What are the most effective strategies for identifying deceptive subjects in clinical trials? A: Implement research subject identity registries to track participation across studies. Utilize technological solutions for objective medication compliance monitoring. Focus screening efforts on mental and physical health concealment, which occur in 58% and 57% of studies respectively among deceptive subjects [6].

Q: How does subject deception impact study validity and sample size requirements? A: Deceptive subjects can dramatically increase sample size requirements. Modeling shows that if just 10% of a sample consists of subjects pretending to have a condition who then report improvement (making treatment "destined to succeed"), sample size requirements more than double. Even 10% of subjects reporting fraudulent medication compliance can increase sample size needs by 20% [6].

Experimental Protocol: Subject Deception Detection

- Comprehensive Screening: Implement cross-trial participation databases to identify professional subjects [6].

- Verification Methods: Use objective biometric measures, medication adherence technologies, and structured interviews to verify subject-reported data [6].

- Data Analytics: Apply statistical pattern recognition to identify suspicious response patterns and inconsistencies in subject-reported outcomes [6].

Diagram 2: Subject Deception Detection Protocol

Mitigating Hallucinations in Medical MLLMs

Q: What specific techniques reduce hallucinations in medical multimodal LLMs for image interpretation? A: Visual Retrieval-Augmented Generation (V-RAG) significantly reduces hallucinations by incorporating both visual and textual data from retrieved similar images. This approach improves accuracy for both frequent and rare medical entities, the latter of which typically have less positive training data [8] [4].

Q: How can researchers validate the reduction of hallucinations in medical MLLMs? A: Entity probing provides a clinical perspective on text generations by presenting images to MLLMs and asking yes/no questions about disease entities, then comparing predictions against answers grounded in LLM interpretations of reference reports. This approach avoids sensitivity to entity phrasing while providing clinically relevant metrics [4].

Experimental Protocol: Hallucination Reduction Validation

- Dataset Preparation: Utilize standardized medical imaging datasets (MIMIC-CXR for chest X-ray report generation, Multicare for medical image caption generation) [4].

- Entity Selection: Identify frequent and rare medical entities for probing, ensuring balanced evaluation across different prevalence categories [4].

- Benchmarking: Compare baseline MLLMs against V-RAG enhanced models using entity probing accuracy and RadGraph-F1 scores for clinical accuracy [4].

- Statistical Analysis: Measure significant differences in performance between traditional and V-RAG approaches using appropriate statistical tests [4].
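For the statistical-analysis step, one suitable choice (an assumption; the protocol does not name a test) is the exact McNemar test. The baseline and V-RAG models answer the same probing questions, so their correct/incorrect outcomes are paired, and only questions on which exactly one model is correct carry information about the difference. A standard-library sketch with hypothetical outcomes:

```python
from math import comb
from typing import Sequence


def mcnemar_exact(baseline_correct: Sequence[bool], vrag_correct: Sequence[bool]) -> float:
    """Two-sided exact McNemar p-value for paired binary outcomes.

    b = questions only the baseline answered correctly; c = questions only V-RAG answered
    correctly. Under the null hypothesis of no difference, b ~ Binomial(b + c, 0.5).
    """
    if len(baseline_correct) != len(vrag_correct):
        raise ValueError("outcome lists must be paired and of equal length")
    b = sum(1 for x, y in zip(baseline_correct, vrag_correct) if x and not y)
    c = sum(1 for x, y in zip(baseline_correct, vrag_correct) if y and not x)
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs: no evidence of a difference
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical paired outcomes on 20 probing questions: both correct on 10,
# only the baseline correct on 1, only V-RAG correct on 9.
baseline = [True] * 10 + [True] + [False] * 9
vrag = [True] * 10 + [False] + [True] * 9
p_value = mcnemar_exact(baseline, vrag)
print(p_value)  # 0.021484375
```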

Integrated Workflow for Research Integrity

Diagram 3: Integrated Framework for Research Integrity

Troubleshooting Guide: Common MLLM Architectural Flaws and Solutions

This guide addresses frequent architectural failure points in Multimodal Large Language Models (MLLMs) that lead to hallucinations in medical imaging, providing diagnostic steps and mitigation strategies.

Table: Troubleshooting MLLM Architectural Issues

| Architectural Component | Failure Symptoms | Root Cause Analysis | Recommended Solutions |
|---|---|---|---|
| Visual Attention Mechanism | Model describes objects/anatomy not present in image; inaccurate spatial relationships [9] | Limited localization capability; attention spread over uninformative visual tokens rather than critical regions [9] [10] | Implement Vision-Guided Attention (VGA) using Visual Semantic Confidence [9] |
| Token Interaction & Information Propagation | "Snowballing" hallucinations where initial error cascades; contradictions in generated report [10] | Attention collapse on outlier tokens; insufficient vision-text token interaction due to positional encoding decay [10] | Apply FarSight decoding with attention registers; use diminishing masking rate in causal masks [10] |
| Cross-Modal Alignment | Plausible but clinically unsupported descriptions; confusion between visually similar anatomical structures [11] [12] | Weak alignment between visual features and medical concepts; vision encoder outputs not properly grounded in clinical knowledge [11] [13] | Integrate Clinical Contrastive Decoding (CCD); use expert model signals to refine token logits [11] |
| Instruction Following & Prompt Sensitivity | Hallucinations triggered by specific prompt phrasing; over-sensitivity to clinical sections in instructions [11] [14] | Inadequate instruction tuning for medical ambiguity; failure to handle clinically implausible prompts robustly [11] | Employ dual prompting strategies (instruction-based + reverse prompting) for critical self-reflection [14] |
| Positional Encoding | Deteriorating accuracy in longer reports; poor tracking of anatomical relationships in 3D imaging [10] [15] | Rotary Positional Encoding (RoPE) long-term decay fails to maintain spatial relationships across lengthy contexts [10] | Enhance positional awareness with diminishing masking rates; explore absolute positional encoding supplements [10] |

Frequently Asked Questions

Q1: Why does my MLLM consistently hallucinate small anatomical structures even with high-quality training data?

This is frequently an attention mechanism failure, not a data quality issue. Standard visual attention often distributes weights across uninformative background tokens, neglecting subtle but critical anatomical features [9]. The solution is to implement Vision-Guided Attention (VGA), which uses Visual Semantic Confidence scores to identify and prioritize informative visual tokens, forcing the model to focus on clinically relevant regions [9].

Q2: How can I mitigate "snowballing hallucinations" where one error leads to cascading inaccuracies in the report?

Snowballing hallucinations stem from insufficient token interaction and attention collapse. As generation progresses, the model increasingly relies on its own previous (potentially erroneous) tokens rather than visual evidence [10]. The FarSight method addresses this by optimizing causal masks to maintain balanced information flow between visual and textual tokens throughout the generation process, preventing early errors from propagating [10].

Q3: What is the most lightweight approach to reduce hallucinations without retraining my model?

Clinical Contrastive Decoding (CCD) provides a training-free solution. CCD introduces a dual-stage contrastive mechanism during inference that leverages structured clinical signals from task-specific expert models (e.g., symptom classifiers) to refine token-level logits, enhancing clinical fidelity without modifying the base MLLM [11]. Similarly, VGA adds only 4.36% inference latency while significantly reducing hallucinations [9].

Q4: Why does my model perform well on visual question answering but hallucinate frequently in full report generation?

Radiology report generation (RRG) is substantially more complex and error-prone than visual question answering (VQA). While VQA addresses narrowly scoped queries, RRG requires holistic image understanding and precise, clinically grounded expression of findings [11]. This complexity exposes weaknesses in cross-modal alignment and long-range dependency handling. Solutions include template instruction tuning and keyword-focused training to maintain clinical precision across longer outputs [15].

Q5: How can I improve my model's handling of ambiguous cases where it should express uncertainty rather than hallucinate?

This requires enhancing the model's self-reflection capabilities. Implement reverse prompting strategies that explicitly question the model's reasoning process (e.g., "Are important details being captured?") to encourage continuous self-evaluation [14]. In multi-agent systems, incorporate uncertainty quantification that allows agents to communicate confidence levels, preventing overconfident but incorrect generations [12].
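The instruction-plus-reverse-prompt pattern can be sketched as a two-pass loop around any text-generation call. This is an illustrative assumption rather than the method of [14]: `generate` is a hypothetical stand-in for an MLLM call, and only the first reverse question is taken from the text above.

```python
from typing import Callable, List

# The first question is the example from the text; the second is a hypothetical addition.
REVERSE_QUESTIONS: List[str] = [
    "Are important details being captured?",
    "Is every finding in the draft visible in the image, or is it an assumption?",
]


def dual_prompt_report(generate: Callable[[str], str], instruction: str) -> str:
    """Instruction prompt produces a draft; a reverse prompt then asks the model to audit and revise it."""
    draft = generate(instruction)
    reverse_prompt = (
        f"Draft report:\n{draft}\n\n"
        "Before finalizing, answer each question about your own reasoning, then revise the draft. "
        "If the image does not support a finding, say it is uncertain instead of stating it.\n"
        + "\n".join(f"- {q}" for q in REVERSE_QUESTIONS)
    )
    return generate(reverse_prompt)


# Stand-in for an MLLM call (hypothetical): records each prompt and returns a counter.
calls: List[str] = []


def fake_generate(prompt: str) -> str:
    calls.append(prompt)
    return f"response {len(calls)}"


final = dual_prompt_report(fake_generate, "Describe the findings in this chest X-ray.")
print(final)  # response 2
```

The second pass sees the first draft verbatim, so the self-evaluation targets what was actually generated rather than the task in the abstract.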

Experimental Protocols for Hallucination Mitigation

Protocol 1: Implementing Clinical Contrastive Decoding (CCD)

CCD integrates structured clinical signals from radiology expert models to refine MLLM generation without retraining [11].

Methodology:

- Expert Model Integration: Employ a task-specific expert model (e.g., symptom classifier) to extract structured clinical labels and confidence probabilities from medical images

- Dual-Stage Contrastive Mechanism:

  - Stage 1: Inject predicted labels as descriptive prompts to enhance MLLM grounding

  - Stage 2: Use probability scores to perturb decoding logits toward clinical consistency

- Token-Level Logit Refinement: Apply contrastive decoding between original and expert-guided distributions at each generation step

Validation: On MIMIC-CXR dataset, CCD yielded up to 17% improvement in RadGraph-F1 score for state-of-the-art RRG models [11].
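The token-level refinement can be illustrated with a generic contrastive-decoding rule. The exact CCD equations are not given here, so this sketch assumes a common form: adjusted logits equal (1 + alpha) times the expert-guided logits minus alpha times the unguided logits, with a plausibility cutoff `beta` that masks tokens the guided distribution considers unlikely. The names `alpha` and `beta` and the toy vocabulary are hypothetical.

```python
import math
from typing import Dict


def softmax(logits: Dict[str, float]) -> Dict[str, float]:
    m = max(logits.values())
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    z = sum(exps.values())
    return {tok: e / z for tok, e in exps.items()}


def contrastive_logits(guided: Dict[str, float], base: Dict[str, float],
                       alpha: float = 1.0, beta: float = 0.1) -> Dict[str, float]:
    """One decoding step of a generic contrastive rule (an assumed form, not the published CCD equations).

    guided: next-token logits when the prompt carries the expert model's labels.
    base:   next-token logits for the unmodified prompt.
    Tokens whose guided probability is below beta * (max guided probability) are
    masked, so the contrast can only re-rank tokens the guided model finds plausible.
    """
    probs = softmax(guided)
    cutoff = beta * max(probs.values())
    return {
        tok: (1 + alpha) * guided[tok] - alpha * base[tok] if probs[tok] >= cutoff else float("-inf")
        for tok in guided
    }


# Hypothetical three-token vocabulary at a single generation step.
guided = {"effusion": 1.9, "normal": 2.0, "banana": -5.0}
base = {"effusion": 0.5, "normal": 2.6, "banana": -4.0}
adjusted = contrastive_logits(guided, base)
print(max(adjusted, key=adjusted.get))  # effusion
```

In this toy step the guided prompt alone still slightly prefers "normal", but the expert signal raised "effusion" far more than the unguided prompt did, so the contrast amplifies that shift and selects "effusion".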

Protocol 2: Vision-Guided Attention (VGA) Implementation

VGA directs visual attention by leveraging semantic features of visual tokens to identify the most informative regions [9].

Methodology:

- Visual Semantic Confidence Calculation: For each visual token $v_i$, compute the semantic confidence score $c_{v_i}(O)$ for object $O$ using $c_{v_i}(O) = \mathrm{softmax}\left[\mathrm{logit}_{v_i}(O)\right] \approx c_{v_i}(o_0)$, where $o_0$ is the first token of the tokenized object [9].

- Visual Grounding: Obtain the visual grounding of object $O$ using $\mathbf{G}_O = \mathrm{Norm}\left[\{c_{v_i}(o_0)\}_{i=1}^{m}\right] \in \mathbb{R}^m$, where $m$ is the number of visual tokens [9].

- Attention Guidance: Use $\mathbf{G}_O$ to guide attention weights toward tokens with high clinical relevance.

Performance: VGA introduces only 4.36% inference latency overhead and is compatible with FlashAttention optimization [9].
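The two grounding formulas can be sketched as follows. The sketch assumes each visual token's hidden state has already been projected to vocabulary logits, and it takes Norm to mean rescaling the confidences to sum to one; the source does not specify the normalization, and how VGA then injects the grounding vector G_O into the attention weights is not reproduced. Function names and logits are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def visual_grounding(token_logits: List[List[float]], first_token_id: int) -> List[float]:
    """Compute G_O for object O from per-visual-token vocabulary logits.

    token_logits[i] holds the vocabulary logits for visual token v_i.
    c_{v_i}(o_0) is the softmax probability of the object's first sub-token o_0.
    The m confidences are normalized to sum to one (an assumed choice of Norm).
    """
    conf = [softmax(row)[first_token_id] for row in token_logits]
    total = sum(conf)
    return [c / total for c in conf]


token_logits = [
    [0.0, 0.0, 3.0, 0.0],   # token that strongly "reads as" the object's first sub-token
    [0.0, 0.0, 0.0, 0.0],   # uninformative token
    [1.0, 1.0, -2.0, 1.0],  # token unlikely to depict the object
]
grounding = visual_grounding(token_logits, first_token_id=2)
print([round(g, 3) for g in grounding])
```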

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential Components for Hallucination-Resistant MLLM Architectures

| Component | Function | Implementation Example |
|---|---|---|
| Clinical Contrastive Decoding (CCD) | Training-free inference framework that reduces hallucinations by integrating expert model signals [11] | Dual-stage contrastive mechanism applied to token-level logits during generation [11] |
| Vision-Guided Attention (VGA) | Directs model focus to relevant visual regions using semantic features of visual tokens [9] | Visual Semantic Confidence scores used to compute visual grounding for attention guidance [9] |
| FarSight Decoding | Plug-and-play decoding strategy that reduces attention interference from outlier tokens [10] | Attention registers within causal mask upper triangular matrix; diminishing masking rate [10] |
| Adaptive Dynamic Masking | Filters irrelevant visual content by computing frame-level weights using self-attention [14] | Dynamic masking rate determined via normal distribution sampling [14] |
| Dual Prompting Strategy | Combines instruction-based and reverse prompting to reduce language biases [14] | Instruction prompts define tasks; reverse prompts question reasoning process [14] |
| Feature-Oriented Radiology Task Evaluation (FORTE) | Evaluation metric capturing clinical essence of generated reports [15] | Structured keyword extraction across four components: degree, landmark, feature, impression [15] |

Architectural Diagrams

MLLM Architecture Hallucination Sources & Mitigations

This diagram illustrates the core MLLM architecture for medical imaging, highlighting where hallucinations originate and corresponding mitigation strategies that can be implemented at each failure point.

Clinical Contrastive Decoding Workflow

This diagram shows the Clinical Contrastive Decoding process where expert model outputs are integrated with base MLLM generation to produce clinically grounded reports while mitigating hallucinations.

Frequently Asked Questions (FAQs)

FAQ 1: What is the connection between data scarcity and hallucinations in multimodal medical AI? Data scarcity directly limits a model's ability to learn robust and generalizable patterns. When trained on small or non-comprehensive datasets, models tend to overfit to the limited examples and, when confronted with unfamiliar data, may "guess" or generate fabrications, a phenomenon known as hallucination [16] [17]. In medical imaging, this can manifest as a model incorrectly identifying a tumor in a healthy tissue region or missing a rare pathology. Foundational models pretrained on large, diverse datasets have been shown to maintain high performance even when only 1% of the target dataset is used for training, significantly mitigating this risk [17].

FAQ 2: How does data heterogeneity negatively impact collaborative model training, like in Federated Learning? Data heterogeneity refers to variations in data distributions across different sources. In Federated Learning (FL), where models are trained across multiple hospitals, heterogeneity in features (e.g., different imaging equipment), labels (e.g., varying disease prevalence), or data quantity can cause local models to diverge significantly [18] [19]. This leads to an unstable global model that is difficult to optimize, resulting in slow convergence and subpar performance. Research has shown a notable performance decline in FL algorithms like FedAvg as data heterogeneity increases [18].

FAQ 3: What are some proven strategies to mitigate data heterogeneity in distributed learning for medical imaging? Advanced FL frameworks are being developed to address this. For instance, HeteroSync Learning (HSL) introduces a Shared Anchor Task (SAT)—a homogeneous reference task from a public dataset—that is co-trained with local tasks across all nodes. This aligns the feature representations learned by different institutions without sharing private patient data. In large-scale simulations, HSL outperformed 12 benchmark methods, matching the performance of a model trained on centrally pooled data [19].

FAQ 4: Beyond acquiring more data, how can we counteract data scarcity in biomedical imaging? Multi-task learning (MTL) is a powerful strategy that pools multiple small- and medium-sized datasets with different types of annotations (e.g., classification, segmentation) to train a single, universal model [17]. This approach allows the model to learn versatile and generalized image representations. The UMedPT foundational model, trained using MTL on 17 different tasks, demonstrated that it could match or exceed the performance of models pretrained on ImageNet, even with only a fraction of the target task's training data [17].

Troubleshooting Guides

Issue: Model Generates Inaccurate or Fabricated Image Annotations (Hallucinations)

| Potential Cause | Diagnostic Steps | Mitigation Strategies |
|---|---|---|
| Limited or Non-Diverse Training Data [16] [20] | Analyze dataset for class imbalance and lack of representation for rare conditions or demographic groups. | Employ multi-task learning to combine multiple smaller datasets [17]. Use data augmentation and foundational models pretrained on large-scale biomedical datasets [17]. |
| Poor Data Quality and Noisy Labels [3] | Review data preprocessing pipelines; check for inconsistencies in expert annotations. | Implement rigorous noise filtering and preprocessing. Use consistent labeling protocols and cross-verification by multiple experts to ensure annotation quality [3]. |
| Misalignment Across Modalities [3] | Check for temporal or spatial misalignment between paired data (e.g., an MRI scan and its corresponding radiology report). | Implement cross-modality verification techniques to ensure generated text accurately reflects the visual input [3]. Use synchronization protocols for temporal data. |

Issue: Performance Collapse in Federated Learning Due to Data Heterogeneity

| Potential Cause | Diagnostic Steps | Mitigation Strategies |
|---|---|---|
| Feature Distribution Skew [19] | Conduct statistical analysis (e.g., t-SNE, UMAP) to visualize feature drift between client data. | Adopt frameworks like HeteroSync Learning (HSL) that use a Shared Anchor Task to align representations across clients [19]. |
| Label Distribution Skew [19] | Audit label distributions (e.g., disease prevalence) across participating institutions. | Utilize algorithms designed for non-IID data, such as FedProx or FedBN, which handle client drift and batch normalization locally [18] [19]. |
| Data Quantity Skew [19] | Compare the number of data samples per client. Large disparities can bias the global model. | Implement weighted aggregation strategies in the FL server to balance the influence of clients with varying data volumes [19]. |
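The weighted-aggregation mitigation can be sketched as the server-side weighted average used by FedAvg, generalized with an exponent on client sample counts. The exponent `gamma` is a hypothetical knob added for illustration (gamma = 1 reproduces FedAvg's sample-size weighting, gamma = 0 a plain mean); parameters are flattened to lists and the client data are made up.

```python
from typing import Dict, List


def aggregate(client_params: Dict[str, List[float]],
              client_sizes: Dict[str, int], gamma: float = 1.0) -> List[float]:
    """Server-side weighted average of flattened client parameter vectors.

    Client k gets weight n_k ** gamma, normalized over clients.
    gamma = 1.0 is FedAvg's sample-size weighting; gamma = 0.0 is a plain mean;
    0 < gamma < 1 (a hypothetical knob) damps the pull of data-rich clients.
    """
    raw = {k: float(client_sizes[k]) ** gamma for k in client_params}
    total = sum(raw.values())
    dim = len(next(iter(client_params.values())))
    global_params = [0.0] * dim
    for k, params in client_params.items():
        weight = raw[k] / total
        for j, p in enumerate(params):
            global_params[j] += weight * p
    return global_params


params = {"hospital_a": [1.0, 0.0], "hospital_b": [0.0, 1.0]}  # hypothetical clients
sizes = {"hospital_a": 900, "hospital_b": 100}
print(aggregate(params, sizes, gamma=1.0))  # [0.9, 0.1]
print(aggregate(params, sizes, gamma=0.5))  # [0.75, 0.25]
print(aggregate(params, sizes, gamma=0.0))  # [0.5, 0.5]
```

Lowering `gamma` trades some statistical efficiency for fairness toward small sites; whether that helps depends on how representative the large clients are.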

Experimental Data & Protocols

Table 1: Performance of UMedPT Foundational Model Under Data Scarcity Conditions

This table summarizes the model's resilience when limited data is available for target tasks, demonstrating its value in data-scarce environments [17].

| Task Name | Task Type | Model | Training Data Used | Key Metric | Score |
|---|---|---|---|---|---|
| CRC-WSI (In-Domain) | Tissue Classification | UMedPT (Frozen) | 1% | F1 Score | 95.4% |
| CRC-WSI (In-Domain) | Tissue Classification | ImageNet (Fine-tuned) | 100% | F1 Score | 95.2% |
| Pneumo-CXR (In-Domain) | Pediatric Pneumonia Diagnosis | UMedPT (Frozen) | 5% | F1 Score | 93.5% |
| Pneumo-CXR (In-Domain) | Pediatric Pneumonia Diagnosis | ImageNet (Fine-tuned) | 100% | F1 Score | 90.3% |
| NucleiDet-WSI (In-Domain) | Nuclei Detection | UMedPT (Fine-tuned) | 100% | mAP | 0.792 |
| NucleiDet-WSI (In-Domain) | Nuclei Detection | ImageNet (Fine-tuned) | 100% | mAP | 0.710 |

Table 2: Impact of Data Heterogeneity on Federated Learning Performance (FedAvg)

This table shows how non-IID data negatively affects a standard FL algorithm, highlighting the need for advanced mitigation techniques [18].

| Data Distribution Setting | Dataset | Key Finding | Impact on Model Performance |
|---|---|---|---|
| IID (Identically Distributed) | COVIDx CXR-3 | Baseline performance under ideal, homogeneous conditions. | Stable convergence and higher final accuracy. |
| Non-IID (Heterogeneous) | COVIDx CXR-3 | Performance with realistic data skew across clients. | Notable performance decline; unstable and sluggish convergence. |

Experimental Protocol: Multi-Task Learning for a Foundational Model [17]

- Objective: Train a universal biomedical pretrained model (UMedPT) to overcome data scarcity across various imaging tasks.

- Materials:

- Datasets: A multi-task database comprising 17 different tasks, including tomographic, microscopic, and X-ray images.

- Annotation Types: A mix of classification, segmentation, and object detection labels.

- Methodology:

- Model Architecture: A neural network with shared blocks (an encoder, a segmentation decoder, a localization decoder) and task-specific heads for different label types.

- Training Strategy: A gradient accumulation-based multi-task training loop that is not constrained by the number of tasks. The shared encoder is trained on all tasks simultaneously to learn versatile representations.

- Evaluation: The model is evaluated on in-domain and out-of-domain tasks. Performance is assessed with the encoder kept frozen (using only the learned features) and with fine-tuning, while varying the amount of training data (1% to 100%) for the target task.
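The gradient-accumulation idea in the training strategy can be illustrated with a toy, framework-free sketch. Each hypothetical task returns a loss and a gradient with respect to the shared parameters; gradients are accumulated one task at a time and a single update is applied, so peak memory depends on one task's batch rather than on the number of tasks. The quadratic tasks stand in for real task-specific heads and losses; this is not UMedPT's actual implementation.

```python
from typing import Callable, Dict, List, Tuple

Params = List[float]
# A task maps shared parameters to (loss, gradient w.r.t. those parameters).
Task = Callable[[Params], Tuple[float, Params]]


def make_toy_task(target: Params) -> Task:
    """Hypothetical stand-in for a task head + loss: 0.5 * ||w - target||^2."""
    def task(w: Params) -> Tuple[float, Params]:
        diff = [wi - ti for wi, ti in zip(w, target)]
        loss = 0.5 * sum(d * d for d in diff)
        return loss, diff  # d/dw of 0.5*||w - t||^2 is (w - t)
    return task


def multitask_step(w: Params, tasks: Dict[str, Task], lr: float = 0.1) -> Tuple[Params, float]:
    """One optimizer step: process tasks sequentially, accumulate their
    gradients, then update the shared parameters once."""
    accum = [0.0] * len(w)
    total_loss = 0.0
    for task in tasks.values():
        loss, grad = task(w)  # only this task's computation is "live" here
        total_loss += loss
        accum = [a + g for a, g in zip(accum, grad)]
    n = len(tasks)
    # Averaging keeps the effective step size independent of the task count.
    new_w = [wi - lr * a / n for wi, a in zip(w, accum)]
    return new_w, total_loss / n


if __name__ == "__main__":
    tasks = {
        "classification": make_toy_task([1.0, 0.0]),
        "segmentation": make_toy_task([0.0, 1.0]),
        "detection": make_toy_task([1.0, 1.0]),
    }
    w = [0.0, 0.0]
    for _ in range(200):
        w, loss = multitask_step(w, tasks)
    print([round(x, 3) for x in w])  # → [0.667, 0.667], the mean of the task targets
```

Adding a task only adds one more iteration to the inner loop; the shared parameters still receive a single update per step.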

Experimental Protocol: Evaluating Federated Learning with Data Heterogeneity [18]

- Objective: Investigate the impact of data heterogeneity on the performance of the Federated Averaging (FedAvg) algorithm.

- Materials:

- Dataset: COVIDx CXR-3 and other chest X-ray datasets.

- Framework: TensorFlow Federated (TFF) or a similar FL simulation framework.

- Methodology:

- Data Partitioning: The dataset is partitioned across multiple virtual clients to simulate both IID and non-IID environments. For non-IID, data is split to create feature, label, or quantity skew.

- FL Simulation: The FedAvg algorithm is run for a set number of communication rounds. In each round, clients train locally on their data, and the server aggregates the model updates.

- Performance Analysis: The global model's accuracy and convergence speed are tracked and compared between the IID and non-IID settings.
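The FedAvg loop in this protocol can be sketched without an FL framework. The toy below assumes each client fits a single scalar parameter (the mean of its local values) with a few local gradient steps, and the server averages the returned models weighted by local sample count, which is the standard FedAvg aggregation rule. Client data, learning rate, and round counts are hypothetical, and TensorFlow Federated or a similar framework would replace this in a real study.

```python
from typing import List


def local_update(w: float, data: List[float], lr: float, local_steps: int) -> float:
    """Client-side training: gradient descent on 0.5 * mean((w - x)^2)."""
    mean_x = sum(data) / len(data)
    for _ in range(local_steps):
        grad = w - mean_x  # gradient of 0.5 * mean((w - x)^2) w.r.t. w
        w -= lr * grad
    return w


def fedavg(clients: List[List[float]], rounds: int = 20, lr: float = 0.5,
           local_steps: int = 5, w0: float = 0.0) -> float:
    """Server loop: broadcast w, collect local models, aggregate by sample count."""
    w = w0
    n_total = sum(len(c) for c in clients)
    for _ in range(rounds):
        local_models = [local_update(w, c, lr, local_steps) for c in clients]
        w = sum(len(c) * wk for c, wk in zip(clients, local_models)) / n_total
    return w


if __name__ == "__main__":
    # Quantity- and feature-skewed clients (hypothetical values).
    clients = [[1.0] * 80, [5.0] * 15, [9.0] * 5]
    print(round(fedavg(clients), 4))  # → 2.0, the pooled mean of all 100 values
```

Because this toy objective is convex and quadratic, FedAvg recovers the pooled optimum exactly; it illustrates the mechanics only and does not reproduce the non-IID degradation in Table 2, which arises with deep, non-convex models.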

Workflow and System Diagrams

Multi-Task Training for Foundational Models

HeteroSync Learning for Data Heterogeneity

The Scientist's Toolkit: Research Reagent Solutions

| Tool or Material | Function in Experiment |

|---|---|

| Foundational Model (UMedPT) [17] | A pretrained model that provides robust, general-purpose feature extraction for biomedical images, drastically reducing the amount of task-specific data required. |

| Shared Anchor Task (SAT) Dataset [19] | A homogeneous, public dataset (e.g., CIFAR-10, RSNA) used in distributed learning to align feature representations across heterogeneous nodes without sharing private data. |

| Multi-gate Mixture-of-Experts (MMoE) [19] | An auxiliary learning architecture that efficiently coordinates the training of a local primary task and the global Shared Anchor Task, enabling better model generalization. |

| Convex Analysis of Mixtures (CAM) [21] | A computational method used to decompose high-dimensional omics data (e.g., metabolomics) into discrete latent features, helping to uncover underlying biological manifolds. |

| Federated Averaging (FedAvg) [18] | The foundational algorithm for Federated Learning, which coordinates the aggregation of model updates from distributed clients while keeping raw data local. |

Cutting-Edge Solutions: Technical Strategies for Hallucination Mitigation

Frequently Asked Questions (FAQs)

Q1: What is Visual RAG (V-RAG) and how does it differ from traditional text-based RAG?

A1: Visual RAG (V-RAG) is a retrieval-augmented generation framework that incorporates both visual data (retrieved images) and their associated textual reports to enhance the response generation of Multimodal Large Language Models (MLLMs). Unlike traditional text-based RAG, which assumes retrieved images are perfectly interchangeable with the query image and uses only their associated text, V-RAG allows the model to directly compare the query image with retrieved images and reports. This enables the model to identify what is truly relevant for generation, leading to more accurate and contextually relevant answers, which is crucial in medical imaging to mitigate hallucinations [4].

Q2: What are the primary technical components of a V-RAG system?

A2: A V-RAG system typically consists of the following core components [22] [4]:

- Multimodal Retriever: Employs a model like BiomedCLIP to extract robust image embeddings and uses a vector database (e.g., FAISS) for efficient approximate nearest neighbor search to find the top-k most similar images and their reports [4].

- Vector Storage: A database (e.g., Chroma, Milvus) that stores the embedded representations of the visual and textual data for fast retrieval [22] [23].

- Retrieval Engine: Performs the similarity search between the query embedding and the stored embeddings [22].

- Multimodal Generator (MLLM): A model capable of processing both image and text inputs. The retrieved images and reports are formatted into a structured prompt, often with specific guidance, and presented to the MLLM alongside the original query image to generate a grounded response [4].
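To make the retriever concrete, here is a dependency-free sketch of exact top-k retrieval by cosine similarity over stored embeddings. It stands in for the approximate search FAISS provides; the embeddings are made-up three-dimensional vectors rather than BiomedCLIP outputs, and the `MemoryEntry` record and report strings are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class MemoryEntry:
    """One image-report pair in the retrieval memory (hypothetical schema)."""
    image_id: str
    embedding: List[float]
    report: str


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve_top_k(query: List[float], memory: List[MemoryEntry],
                   k: int = 3) -> List[Tuple[float, MemoryEntry]]:
    """Exact nearest-neighbour search: score every entry, keep the k best."""
    scored = [(cosine(query, m.embedding), m) for m in memory]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    memory = [
        MemoryEntry("img_001", [0.9, 0.1, 0.0], "Right lower lobe consolidation."),
        MemoryEntry("img_002", [0.1, 0.9, 0.1], "No acute cardiopulmonary process."),
        MemoryEntry("img_003", [0.8, 0.2, 0.1], "Patchy opacity at the right base."),
    ]
    for score, entry in retrieve_top_k([1.0, 0.0, 0.0], memory, k=2):
        print(f"{entry.image_id} ({score:.2f}): {entry.report}")
```

Exact search scans every entry, which is fine for small corpora; an approximate index such as FAISS with HNSW trades a little recall for much faster search on large memories.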

Q3: What are the key performance metrics for evaluating V-RAG in a medical context?

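A3: Standard natural language generation metrics like ROUGE are often insufficient because they reward word overlap with a reference rather than clinical correctness, so a report can score well while asserting a finding that is not in the image. Key domain-specific metrics include [4] [24]: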

- Entity Probing Accuracy: Measures the model's accuracy on yes/no questions about whether specific medical entities are grounded in the image. This is effective for both frequent and rare entities [4].

- RadGraph-F1 Score: Evaluates the factual accuracy of generated radiology reports by extracting clinical entities and their relations from the generated text and comparing them to a reference report [4] [24].

- FactScore: Decomposes generated answers into individual facts and verifies them against a knowledge source for factual consistency [24].

- MED-F1: A clinical relevance metric that assesses the medical validity of the generated content [24].
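Once clinical entities have been extracted, entity-level scores reduce to set comparisons. The sketch below assumes entities are already available as `(text, label)` tuples (RadGraph itself performs this extraction with a trained model) and computes a simplified precision/recall/F1 plus an entity-probing accuracy over yes/no answers. It illustrates the idea and is not the official RadGraph-F1 implementation.

```python
from typing import Dict, Hashable, Set, Tuple


def set_f1(predicted: Set[Hashable], reference: Set[Hashable]) -> Tuple[float, float, float]:
    """Precision, recall and F1 between two sets of extracted items."""
    if not predicted and not reference:
        return 1.0, 1.0, 1.0
    tp = len(predicted & reference)
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(reference) if reference else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def probing_accuracy(answers: Dict[str, str], grounded: Dict[str, str]) -> float:
    """Fraction of yes/no entity questions whose normalized model answer
    matches the grounded (lowercase) reference answer."""
    correct = sum(answers.get(e, "").strip().lower() == a for e, a in grounded.items())
    return correct / len(grounded)


if __name__ == "__main__":
    # (entity text, label) pairs; labels follow RadGraph-style entity tags.
    reference = {("effusion", "OBS-DP"), ("left", "ANAT-DP"), ("cardiomegaly", "OBS-DA")}
    generated = {("effusion", "OBS-DP"), ("left", "ANAT-DP"), ("pneumothorax", "OBS-DP")}
    p, r, f1 = set_f1(generated, reference)
    print(f"P={p:.2f} R={r:.2f} F1={f1:.2f}")  # the hallucinated pneumothorax lowers precision
    print(probing_accuracy({"effusion": "Yes", "pneumothorax": "yes"},
                           {"effusion": "yes", "pneumothorax": "no"}))  # → 0.5
```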

Q4: My V-RAG system retrieves relevant documents but still generates incorrect answers. What could be wrong?

A4: This is a common failure point known as "Not Extracted" [25]. The answer is in the retrieved context, but the MLLM fails to extract it correctly. To fix this [25]:

- Deduplicate and Clean Knowledge Base: Remove conflicting or redundant information from your retrieval corpus.

- Optimize Prompts: Engineer your prompts to encourage precise extraction from the provided context.

- Limit Retrieval Noise: Ensure only the most relevant context is passed to the generator by adjusting the top-K results or implementing re-ranking strategies.
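The first and third fixes can be sketched as a small pre-generation filter: normalize report text to drop exact duplicates from the knowledge base, then keep at most `top_k` retrieved passages whose similarity clears a floor. The normalization rule, the `min_score` threshold, and the `(score, text)` input format are assumptions to tune per corpus.

```python
import re
from typing import List, Tuple


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace/punctuation so trivial variants match."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def deduplicate(reports: List[str]) -> List[str]:
    """Keep the first occurrence of each normalized report, preserving order."""
    seen = set()
    unique = []
    for report in reports:
        key = normalize(report)
        if key not in seen:
            seen.add(key)
            unique.append(report)
    return unique


def limit_context(scored: List[Tuple[float, str]], top_k: int = 3,
                  min_score: float = 0.5) -> List[str]:
    """Drop low-similarity passages, then cap the context at top_k items."""
    kept = sorted((s for s in scored if s[0] >= min_score), key=lambda s: s[0], reverse=True)
    return [text for _, text in kept[:top_k]]


if __name__ == "__main__":
    kb = ["No acute findings.", "no acute findings", "Small left effusion."]
    print(deduplicate(kb))  # → ['No acute findings.', 'Small left effusion.']
    hits = [(0.91, "Small left effusion."), (0.42, "Normal study."),
            (0.77, "Blunted left costophrenic angle.")]
    print(limit_context(hits, top_k=2))
```

Exact-match deduplication does not catch conflicting reports that are worded differently; those still need curation or a semantic-similarity pass.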

Q5: How can I adapt a single-image-trained MLLM to work with the multi-image input required for V-RAG?

A5: A general fine-tuning technique can be used to boost a Med-MLLM's capability for V-RAG. This involves designing fine-tuning tasks that strengthen image-text comprehension and enable effective learning from multiple retrieved images presented during multimodal queries. This approach frees researchers from relying on specific pre-trained multi-image models and allows V-RAG to be applied to any model and dataset of interest [4].

Troubleshooting Guides

Problem: Hallucinations in Generated Medical Reports

Issue: The MLLM generates findings or descriptions not present in the retrieved images or reports.

Diagnosis and Solution Flowchart:

Problem: Irrelevant or Low-Quality Retrievals

Issue: The system retrieves images and reports that are not relevant to the query image, leading to poor context for generation.

Potential Causes and Fixes:

| Cause | Symptom | Solution |

|---|---|---|

| Weak Embedding Model | Poor performance across diverse query types. | Use a domain-specific embedding model (e.g., BiomedCLIP for medical images) to better capture semantic similarity [4]. |

| Ineffective Chunking | Retrieved text chunks are incoherent or lack context. | Refine text chunking strategies. Use smaller, overlapping chunks and preserve logical structure (e.g., section titles as metadata) [22] [23]. |

| Incorrect Top-K | Retrieving too much noise or missing key information. | Experiment with the number of retrieved documents (Top-K). Start with a low number (e.g., 3-5) and increase based on performance [25]. |

| Faulty Ranking | Correct information exists but is ranked too low. | Implement a re-ranking step after the initial retrieval to re-order passages by relevance to the query [25]. |
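The chunking fix can be sketched as a word-window splitter that keeps overlap between consecutive chunks and attaches the report section title as metadata. The window and overlap sizes are hypothetical defaults; token-based windows would be a natural variation.

```python
from typing import Dict, List


def chunk_section(title: str, text: str, size: int = 50, overlap: int = 10) -> List[Dict[str, str]]:
    """Split one report section into overlapping word windows.

    Each chunk carries its section title so retrieval can filter or boost
    by section (e.g. FINDINGS vs IMPRESSION)."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    chunks = []
    # Stop once the remaining words are already covered by the previous window.
    for start in range(0, max(len(words) - overlap, 1), step):
        chunks.append({"section": title, "text": " ".join(words[start:start + size])})
    return chunks


if __name__ == "__main__":
    report = {
        "FINDINGS": "Small left pleural effusion. No pneumothorax. Heart size normal.",
        "IMPRESSION": "Small left effusion.",
    }
    for section, body in report.items():
        for chunk in chunk_section(section, body, size=6, overlap=2):
            print(chunk)
```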

Problem: Incomplete or Poorly Formatted Responses

Issue: The generated response is partially correct but lacks full coverage, or is in the wrong format (e.g., free text instead of a structured report).

Solution Strategy:

- Break Down Complex Queries: For broad questions, use query pre-processing to reformulate them into well-scoped sub-questions. This ensures comprehensive retrieval and generation for each part [25].

- Implement Structured Outputs: Use JSON or other predefined schemas in your prompts. Leverage LLM features that enforce schema-based responses (e.g., OpenAI's function calling) to ensure consistent formatting [25].

- Separate Instructions: In the prompt, clearly separate formatting instructions from the content instructions to avoid confusion for the model [25].
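Whatever mechanism enforces the schema at generation time, it helps to validate the model's JSON on the application side before using it. A minimal standard-library sketch follows; the `REPORT_SCHEMA` fields describe a hypothetical report structure, not a standard.

```python
import json
from typing import Any, Dict, List

# Hypothetical structured-report schema: field name -> required Python type.
REPORT_SCHEMA = {"findings": list, "impression": str, "uncertain": bool}


def parse_report(raw: str) -> Dict[str, Any]:
    """Parse model output as JSON and check it against REPORT_SCHEMA.

    Raises ValueError with a readable message so the caller can re-prompt."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("top-level JSON value must be an object")
    errors: List[str] = []
    for field, expected in REPORT_SCHEMA.items():
        if field not in data:
            errors.append(f"missing field '{field}'")
        elif not isinstance(data[field], expected):
            errors.append(f"field '{field}' should be {expected.__name__}")
    extra = set(data) - set(REPORT_SCHEMA)
    if extra:
        errors.append(f"unexpected fields: {sorted(extra)}")
    if errors:
        raise ValueError("; ".join(errors))
    return data


if __name__ == "__main__":
    print(parse_report('{"findings": ["small left effusion"], '
                       '"impression": "Left effusion.", "uncertain": false}'))
```

Returning a specific error message, rather than silently repairing the output, makes it easy to feed the problem back to the model in a retry prompt.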

Experimental Protocols & Performance Data

Key Experiment: V-RAG for Chest X-Ray Report Generation

Objective: To evaluate the effectiveness of V-RAG in reducing hallucinations and improving the clinical accuracy of generated chest X-ray reports [4].

Methodology:

- Datasets: MIMIC-CXR (chest X-ray report generation) and Multicare (medical image caption generation) [4].

- Retrieval Setup:

- Embedding Model: BiomedCLIP for extracting image embeddings.

- Vector Database: FAISS with HNSW algorithm for approximate kNN search.

- Retrieval Count (k): Top-k similar images and their reports are retrieved (k=3 or 5 is common).

- Inference with V-RAG:

- The query image, along with the top-k retrieved images and their reports, is formatted into a structured prompt.

- The prompt includes explicit instructions, for example: "This is the i-th similar image and its report for your reference. [Reference Image Iᵢ] [Reference Report Rᵢ] ... Answer the question with only the word yes or no. Do not provide explanations. According to the last query image and the reference images and reports, [Question] [Query Image X_q]" [4].

- Evaluation:

- Entity Probing: The model is presented with an image and asked yes/no questions about disease entities. Answers are compared against grounded answers from a reference report [4].

- Report Generation & RadGraph-F1: The model generates a full report. The output is evaluated using RadGraph-F1 to measure clinical factual accuracy [4].
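The prompt layout quoted in this protocol can be assembled programmatically. The sketch below produces the interleaved text with placeholder tokens where images go; `<image>` is an assumed placeholder convention, so adapt it to the target model's input format and pass the images in the same order as the placeholders.

```python
from typing import List, Sequence

IMAGE_TOKEN = "<image>"  # assumed placeholder; the real token depends on the MLLM


def build_vrag_prompt(reference_reports: Sequence[str], question: str) -> str:
    """Interleave k reference image/report pairs, then the question and query image.

    Image placeholders appear in the order the images must be passed to the model:
    reference images 1..k first, the query image last."""
    parts: List[str] = []
    for i, report in enumerate(reference_reports, start=1):
        parts.append(f"This is the {i}-th similar image and its report for your reference. "
                     f"{IMAGE_TOKEN} {report}")
    parts.append("Answer the question with only the word yes or no. Do not provide explanations.")
    parts.append("According to the last query image and the reference images and reports, "
                 f"{question} {IMAGE_TOKEN}")
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_vrag_prompt(["Small left effusion.", "No acute findings."],
                            "Is there a pleural effusion?"))
```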

Quantitative Results: V-RAG vs. Baseline Performance

| Model / Approach | Entity Probing Accuracy (%) (Frequent) | Entity Probing Accuracy (%) (Rare) | RadGraph-F1 Score | Notes |

|---|---|---|---|---|

| Baseline Med-MLLM (No RAG) | 78.5 | 65.2 | 0.412 | Prone to hallucinations [4]. |

| Text-Based RAG | 82.1 | 72.8 | 0.445 | Assumes retrieved images are interchangeable with query [4]. |

| V-RAG (Proposed) | 85.7 | 79.4 | 0.481 | Incorporates both images and reports, outperforming baselines [4]. |

| VisRAG (on multi-modality docs) | - | - | - | Reports 20-40% end-to-end performance gain over text-based RAG [26]. |

V-RAG System Workflow

The following diagram illustrates the end-to-end process of a Visual RAG system, from data ingestion to response generation.

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in V-RAG Experiment |

|---|---|

| BiomedCLIP | A vision-language model pre-trained on a wide range of biomedical images. Used to generate robust image embeddings for the retrieval step, crucial for capturing medical semantic similarity [4]. |

| FAISS (Facebook AI Similarity Search) | A library for efficient similarity search and clustering of dense vectors. Used as the vector database to store embeddings and perform fast approximate nearest neighbor searches [4]. |

| Multimodal LLM (Med-MLLM) | The core generator model (e.g., LLaMA- or GPT-based architectures fine-tuned on medical data). It processes both image and text inputs to generate final reports or answers [4] [13]. |

| MIMIC-CXR Dataset | A large publicly available dataset of chest X-ray images and corresponding free-text radiology reports. Serves as a standard benchmark for training and evaluating models on radiology report generation tasks [4]. |

| RadGraph | A tool for annotating and evaluating radiology reports by extracting clinical entities and their relations. Used to compute the RadGraph-F1 score, a key metric for evaluating the factual accuracy of generated reports [4] [24]. |

Troubleshooting Guides and FAQs

FAQ: What are the primary data collection methods for medical instruction tuning, and how do they compare? Instruction-tuning datasets are constructed using three major paradigms, each with distinct trade-offs between quality, scalability, and resource cost [27].

- Expert Annotation: Involves manual creation by clinicians or domain experts. This method yields the highest quality data but is expensive and does not scale easily [27] [28] [29].

- Distillation from Larger Models: Uses a powerful teacher model (like GPT-4) to automatically generate instruction-output pairs. This is highly scalable but may not be perfectly aligned with nuanced clinical expertise [27] [30] [28].

- Self-Improvement Mechanisms: Employs techniques like Self-Instruct or Reinforcement Learning from AI Feedback (RLAIF) for iterative bootstrapping and refinement of the dataset [27].

FAQ: My fine-tuned model is hallucinating on rare medical entities. How can I mitigate this? Visual Retrieval-Augmented Generation (V-RAG) is a promising framework to ground your model's responses in retrieved visual and textual data, which has been shown to improve accuracy for both frequent and rare entities [4] [31]. The process involves:

- Building a Multimodal Memory: Create a vector database (e.g., using FAISS) of embeddings from a corpus of medical images and their corresponding reports. Using a domain-specific encoder like BiomedCLIP is recommended [4].

- Retrieval at Inference: For a query image, retrieve the top-k most similar images and their associated reports from the database [4].

- Augmented Generation: The model is prompted to answer the clinical question using both the original query image and the retrieved image-report pairs as reference, which provides broader context and reduces unfounded assertions [4].

FAQ: How can I incorporate clinician expertise into automated data generation pipelines? The BioMed-VITAL framework provides a data-centric approach to align instruction data with clinician preferences [28]. Its key stages are:

- Preference-Aligned Generation: Use a powerful generator (e.g., GPT-4V) with a diverse set of clinician-selected and annotated demonstrations in its prompt to guide the generation of clinically relevant instruction-response pairs [28].

- Preference-Distilled Selection: Train a separate data selection model that learns to rank and select the highest-quality generated data based on a mixture of human clinician preferences and model-based judgments using clinician-curated criteria [28].

FAQ: My model is highly susceptible to adversarial hallucination attacks. What mitigation strategies are effective? Research shows that LLMs can be highly vulnerable to elaborating on fabricated details in clinical prompts [5]. Tested mitigation strategies include:

- Mitigation Prompts: Using a prompt that explicitly instructs the model to "use only clinically validated information and acknowledge uncertainty instead of speculating" can significantly reduce hallucination rates. One study showed this reduced the overall rate from 66% to 44% across multiple models [5].

- Temperature Settings: Adjusting the model's temperature parameter to zero (deterministic output) did not yield a significant improvement in reducing adversarial hallucinations [5].

FAQ: What are the most efficient fine-tuning techniques for adapting large models to the medical domain? Parameter-Efficient Fine-Tuning (PEFT) methods are essential for adapting large models with reduced computational cost [27] [29] [13].

- Low-Rank Adaptation (LoRA): This is a dominant technique. It freezes the pre-trained model weights and injects trainable rank decomposition matrices into the transformer layers, dramatically reducing the number of parameters that need to be updated [27] [29]. The core update is represented as $W' = W_0 + BA$, where $W_0 \in \mathbb{R}^{d \times k}$ is the frozen pre-trained weight and $B \in \mathbb{R}^{d \times r}$ and $A \in \mathbb{R}^{r \times k}$ are trainable low-rank matrices with $r \ll \min(d, k)$ [27].

- Benefits: PEFT methods like LoRA make model adaptation computationally feasible, preserve general knowledge to avoid catastrophic forgetting, and enable model reusability for multiple tasks [27] [29].
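A small numeric sketch shows why LoRA is cheap: the frozen weight $W_0$ stays untouched, only $B$ and $A$ are trained, and with $B$ initialized to zero the adapted layer starts out identical to the pre-trained one. Pure-Python matrices keep it dependency-free; the dimensions are illustrative, and the $\alpha/r$ scaling factor used in the original LoRA paper is omitted to match the update formula $W' = W_0 + BA$.

```python
from typing import Dict, List

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain matrix product; zip(*y) iterates over the columns of y."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def add(x: Matrix, y: Matrix) -> Matrix:
    return [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(x, y)]


def lora_weight(w0: Matrix, b: Matrix, a: Matrix) -> Matrix:
    """Effective weight W' = W0 + B A (W0: d x k, B: d x r, A: r x k)."""
    return add(w0, matmul(b, a))


def trainable_params(d: int, k: int, r: int) -> Dict[str, int]:
    """Parameter counts for full fine-tuning versus a rank-r LoRA update."""
    return {"full": d * k, "lora": r * (d + k)}


if __name__ == "__main__":
    w0 = [[1.0, 2.0], [3.0, 4.0]]  # frozen pre-trained weight (d = k = 2)
    b = [[0.0], [0.0]]             # B starts at zero ...
    a = [[0.5, -0.5]]              # ... so W' == W0 before training (r = 1)
    print(lora_weight(w0, b, a))   # → [[1.0, 2.0], [3.0, 4.0]]
    print(trainable_params(4096, 4096, 8))  # → {'full': 16777216, 'lora': 65536}
```

For a 4096 × 4096 projection with rank 8, the update trains 65,536 parameters instead of about 16.8 million, roughly 0.4% of the full matrix.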

Table 1: Hallucination Rates and Mitigation Effectiveness Across LLMs (Adversarial Attack Study)

| Model | Default Hallucination Rate | Hallucination Rate with Mitigation Prompt |

|---|---|---|

| Overall Mean (All Models) | 66% | 44% |

| GPT-4o | 53% | 23% |

| Distilled-DeepSeek-R1 | 82% | Information Not Provided |

Source: [5] - Multi-model study with 300 physician-validated vignettes containing fabricated details.

Table 2: Performance Gains from Domain-Specific Instruction Tuning Frameworks

| Framework / Model | Domain | Notable Performance Gain | Key Feature |

|---|---|---|---|

| BioMed-VITAL [28] | Biomedical Vision | Win rate up to 81.73% on MedVQA | Clinician-aligned data generation/selection |

| LawInstruct [29] | Legal | +15 points (~50%) on LegalBench | 58 datasets, cross-jurisdiction |

| JMedLoRA [29] | Japanese Medical QA | Performance increase (various) | LoRA-based, cross-lingual transfer |

| Knowledge-aware Data Selection (KDS) [29] | Medical Benchmarks | +2.56% on Med Benchmarks | Filters data that causes knowledge conflicts |

Detailed Experimental Protocols

Protocol 1: Implementing Visual RAG (V-RAG) for Medical MLLMs

This methodology grounds model responses using retrieved images and reports to reduce hallucination [4].

Workflow Overview:

Key Steps:

- Multimodal Retrieval Database Construction:

- Inputs: A large corpus of medical images and their corresponding textual reports.

- Embedding Extraction: Use a domain-specific visual encoder (e.g., BiomedCLIP [4]) to extract image embeddings $\mathcal{E}_{\text{img}} \in \mathbb{R}^d$.

- Vector Storage: Construct a memory bank $\mathcal{M}$ using a vector database like FAISS. For efficient approximate search, use the Hierarchical Navigable Small World (HNSW) algorithm [4].

- Inference with V-RAG:

- Encode the query image $X_q$ to get its embedding.

- Retrieve the top-$k$ most similar images $(I_1, \dots, I_k)$ and their reports $(R_1, \dots, R_k)$ from $\mathcal{M}$.

- Construct a composite prompt that includes the query image and the retrieved image-report pairs as references.

- The prompt should explicitly instruct the model to base its answer on both the query and the references [4].

Protocol 2: Clinician-Aligned Data Generation and Selection (BioMed-VITAL)

This protocol ensures automatically generated data aligns with domain expert preferences [28].

Workflow Overview:

Key Steps:

- Data Generation with Expert Demonstrations:

- Diverse Sampling: From a seed dataset, use K-means clustering on image and text features (e.g., from BiomedCLIP) to create distinct categories. Uniformly sample from these clusters to ensure diversity [28].

- Clinician Annotation: Present the sampled data to clinicians. For each instruction, have them choose between candidate responses, select both if good, or reject both. Use majority voting to resolve disagreements [28].

- Guided Generation: Use a powerful multimodal generator (e.g., GPT-4V). The clinician-annotated pairs are used as few-shot demonstrations in the generator's prompt to produce preference-aligned instruction data at scale [28].

- Data Selection with Distilled Preferences:

- Mixed Preference Data: Combine a limited set of human clinician preferences with a larger set of model-annotated preferences. The model annotations are guided by clinician-curated criteria [28].

- Selection Model Training: Train a separate model (the data selector) on this mixed preference data to learn a rating function that distinguishes high-quality, clinically relevant instructions [28].

- Data Filtering: Use the trained selection model to rank the large pool of generated data. Select the top-ranked samples for the final instruction-tuning dataset [28].
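Two mechanical pieces of this protocol, uniform sampling across clusters and majority voting over clinician choices, can be sketched in a few lines. The sketch assumes cluster assignments have already been computed (e.g., by K-means on BiomedCLIP features) and represents each clinician vote as one of the hypothetical strings `"A"`, `"B"`, `"both"`, or `"neither"`; sending ties back for adjudication is an assumed policy.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def sample_uniform_across_clusters(assignments: Dict[str, int], n: int, seed: int = 0) -> List[str]:
    """Round-robin over clusters so each contributes about n / n_clusters items."""
    rng = random.Random(seed)
    pools: Dict[int, List[str]] = defaultdict(list)
    for item, cluster in assignments.items():
        pools[cluster].append(item)
    for pool in pools.values():
        rng.shuffle(pool)
    picked: List[str] = []
    while len(picked) < n and any(pools.values()):
        for cluster in sorted(pools):
            if pools[cluster] and len(picked) < n:
                picked.append(pools[cluster].pop())
    return picked


def majority_vote(votes: List[str]) -> Optional[str]:
    """Most common clinician choice, or None when votes are empty or tied
    (such items would go back for adjudication)."""
    if not votes:
        return None
    counts = Counter(votes).most_common()
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return None
    return counts[0][0]


if __name__ == "__main__":
    # Hypothetical clusters: cluster 0 dominates the seed data.
    clusters = {f"case_{i}": (0 if i < 10 else 1 if i < 12 else 2) for i in range(13)}
    print(sample_uniform_across_clusters(clusters, n=3))  # one case from each cluster
    print(majority_vote(["A", "A", "both"]))              # → A
```

Round-robin sampling keeps small clusters represented even when one cluster dominates the seed data, which is the point of the diversity step.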

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for Medical MLLM Instruction Tuning

| Item / Resource | Function / Purpose in Research |

|---|---|

| Parameter-Efficient Fine-Tuning (PEFT) [27] [29] | A class of methods, including LoRA, that adapts large models to new tasks by updating only a small subset of parameters, drastically reducing computational requirements. |

| Low-Rank Adaptation (LoRA) [27] [29] | A specific, widely-used PEFT technique that injects and trains low-rank matrices into transformer layers, making domain adaptation computationally feasible. |

| BiomedCLIP [4] [28] | A vision encoder pre-trained on a vast corpus of biomedical images and text. Used to extract powerful domain-specific visual features for tasks like retrieval. |

| Visual Retrieval-Augmented Generation (V-RAG) [4] [31] | A framework that augments an MLLM's knowledge by retrieving relevant images and reports from an external database during inference, reducing hallucinations. |

| FAISS Vector Database [4] | A library for efficient similarity search and clustering of dense vectors. Essential for building the retrieval component in a V-RAG system. |

| Clinician-in-the-Loop Annotation [28] [5] | The process of incorporating feedback from medical experts to ensure the quality, relevance, and safety of training data and model outputs. |

| Instruction-Tuning Datasets (e.g., PMC-Llama-Instructions [30], LLaVA-Med [28]) | Curated collections of instruction-response pairs, often amalgamated from multiple public datasets, used to teach models to follow instructions in a biomedical context. |

Frequently Asked Questions (FAQs)

Q1: What are the most effective connector architectures for reducing hallucinations in medical MLLMs? Projection-based connectors (MLP) provide strong baseline performance, while compression-based methods like spatial pooling and CNN-based abstractors better handle high-resolution medical images by reducing token sequence length. Query-based connectors using trainable tokens offer enhanced performance for lesion-specific grounding [32] [13]. The optimal choice depends on your specific medical imaging modality and computational constraints.

Q2: How can I improve my model's handling of rare medical entities? Visual Retrieval-Augmented Generation (V-RAG) significantly improves performance on both frequent and rare entities by incorporating similar images and their reports during inference. Fine-tuning with multi-image comprehension tasks enables models to leverage retrieved references effectively, with documented RadGraph-F1 score improvements [8] [4].

Q3: What training strategies best mitigate hallucination in medical report generation? A three-stage approach proves most effective: pre-training with image-text alignment, instruction tuning with both multimodal and text-only data, and alignment tuning using reinforcement learning from human feedback. LoRA fine-tuning efficiently adapts models to medical domains while preserving general knowledge [13] [33].

Q4: How do vision encoder choices impact medical report accuracy? Vision Transformers (ViTs) and their variants (DeiT, BEiT) often outperform CNN-based encoders by capturing global context through patch-based processing and self-attention mechanisms. BEiT is pre-trained with BERT-style masked image modeling, while DeiT improves data efficiency and robustness through knowledge distillation and strong data augmentation [34].

Q5: What evaluation metrics best detect hallucinations in medical imaging? Entity probing with yes/no questions about medical entities provides clinical grounding. RadGraph-F1 scores evaluate report quality, while dataset-wise statistical analysis and clinical task-based assessments offer comprehensive hallucination detection [8] [2].

Troubleshooting Guides

Issue: Poor Alignment Between Visual Features and Text Generation

Symptoms: Generated reports contain plausible but incorrect findings, descriptions contradict visual evidence, or missed abnormalities.

Solution:

- Verify connector architecture: Implement cross-attention mechanisms between vision transformer outputs and GPT-2 decoder layers [34]

- Enhance multimodal alignment:

- Use progressive training: start with image-text matching, advance to structured report generation

- Incorporate region-grounded reasoning with bounding box annotations

- Apply contrastive learning between image patches and text segments [13]
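The contrastive alignment step is typically an InfoNCE-style objective: each image-patch embedding should score highest against its own paired text segment among all segments in the batch. The pure-Python sketch below computes the image-to-text direction only; the temperature value and toy embeddings are assumptions, and a practical implementation would use a tensor library and usually symmetrize the loss.

```python
import math
from typing import List

Vector = List[float]


def _normalize(v: Vector) -> Vector:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def info_nce(image_emb: List[Vector], text_emb: List[Vector], temperature: float = 0.07) -> float:
    """Mean cross-entropy of matching image i to text i among all texts (cosine logits)."""
    imgs = [_normalize(v) for v in image_emb]
    txts = [_normalize(v) for v in text_emb]
    total = 0.0
    for i, img in enumerate(imgs):
        logits = [sum(a * b for a, b in zip(img, t)) / temperature for t in txts]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_denominator - logits[i]
    return total / len(imgs)


if __name__ == "__main__":
    patches = [[1.0, 0.0], [0.0, 1.0]]
    aligned = info_nce(patches, [[1.0, 0.1], [0.1, 1.0]])
    swapped = info_nce(patches, [[0.1, 1.0], [1.0, 0.1]])
    print(f"aligned={aligned:.4f} swapped={swapped:.4f}")  # aligned pairs give the lower loss
```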

Implementation Protocol:

Issue: Computational Limitations with High-Resolution Medical Images

Symptoms: Training crashes due to memory constraints, slow inference times, or truncated image features.

Solution:

- Implement token compression:

- Spatial relation compression via adaptive average pooling

- Semantic perception compression using attention pooling

- CNN-based abstractors with ResNet blocks [32]

- Optimize training efficiency:

- Use LoRA (Low-Rank Adaptation) for efficient fine-tuning

- Implement gradient checkpointing

- Apply mixed-precision training [33]
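Spatial token compression can be illustrated with 2D average pooling over the visual token grid: pooling a 24×24 grid of patch tokens with a 2×2 window yields 12×12 tokens, a 75% reduction in sequence length. The sketch assumes the grid side is divisible by the window; in that case adaptive average pooling to the target size reduces to this fixed-window pool.

```python
from typing import List

Token = List[float]


def pool_tokens(grid: List[List[Token]], window: int = 2) -> List[List[Token]]:
    """Average-pool an H x W grid of D-dim tokens with a non-overlapping window.

    Assumes H and W are divisible by `window` (a simplifying assumption)."""
    h, w = len(grid), len(grid[0])
    if h % window or w % window:
        raise ValueError("grid size must be divisible by window")
    dim = len(grid[0][0])
    pooled = []
    for i in range(0, h, window):
        row = []
        for j in range(0, w, window):
            block = [grid[i + di][j + dj] for di in range(window) for dj in range(window)]
            row.append([sum(tok[d] for tok in block) / len(block) for d in range(dim)])
        pooled.append(row)
    return pooled


if __name__ == "__main__":
    side = 24
    grid = [[[float(r * side + c)] for c in range(side)] for r in range(side)]
    out = pool_tokens(grid)
    before, after = side * side, len(out) * len(out[0])
    print(before, after, f"{1 - after / before:.0%} fewer tokens")  # → 576 144 75% fewer tokens
```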

Experimental Results:

Table 1: Compression Methods Performance Comparison

| Method | Token Reduction | Report Quality (RadGraph-F1) | Memory Usage |

|---|---|---|---|

| Linear Projection | 0% | 0.723 | 100% |

| Adaptive Pooling | 75% | 0.718 | 42% |

| CNN Abstractor | 75% | 0.741 | 45% |

| Semantic Attention | 80% | 0.752 | 38% |

Issue: Hallucinations of Rare Diseases or Uncommon Findings

Symptoms: Model fails to recognize rare pathologies, defaults to common patterns, or generates inconsistent descriptions for unusual cases.

Solution:

- Implement Visual RAG (V-RAG):

- Retrieve top-k similar images and reports using BiomedCLIP embeddings

- FAISS with HNSW algorithm for efficient similarity search

- Multi-image inference with reference prompts [4]

- Augment training strategy:

- Entity-specific data balancing

- Rare entity oversampling with label smoothing

- Progressive curriculum learning from common to rare findings
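The oversampling and label-smoothing items can be sketched together: per-sample weights proportional to the inverse frequency of each sample's rarest entity (raised to a tempering exponent), and smoothed multi-label targets. The tempering exponent, smoothing value, and rarest-entity rule are assumptions; the resulting weights could feed a weighted sampler in whatever training framework is used.

```python
from collections import Counter
from typing import List, Set


def oversampling_weights(samples: List[Set[str]], power: float = 0.5) -> List[float]:
    """Weight each sample by (1 / frequency of its rarest entity) ** power.

    power=1 fully inverts frequencies; power=0 disables oversampling.
    Samples with no entities get the weight of the most common entity.
    Returned weights are normalized to sum to 1 (a sampling distribution)."""
    freq = Counter(e for s in samples for e in s)
    max_count = max(freq.values()) if freq else 1
    weights = []
    for s in samples:
        rarest = min((freq[e] for e in s), default=max_count)
        weights.append((1.0 / rarest) ** power)
    total = sum(weights)
    return [w / total for w in weights]


def smooth_targets(targets: List[int], epsilon: float = 0.1) -> List[float]:
    """Binary label smoothing for multi-label targets: 1 -> 1 - eps/2, 0 -> eps/2."""
    return [t * (1 - epsilon) + epsilon / 2 for t in targets]


if __name__ == "__main__":
    studies = [{"effusion"}, {"effusion"}, {"effusion"}, {"pneumothorax", "effusion"}]
    print([round(w, 3) for w in oversampling_weights(studies, power=1.0)])
    print(smooth_targets([1, 0, 0]))
```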

V-RAG Implementation Workflow:

Issue: Inconsistent Performance Across Medical Imaging Modalities

Symptoms: Model performs well on X-rays but poorly on CT/MRI, modality-specific hallucinations, or failed cross-modal alignment.

Solution:

- Modality-specific adaptations:

- 3D patch processing for volumetric data (CT/MRI)

- Temporal modeling for video data (ultrasound, cardiac MRI)

- Multi-scale feature extraction for different resolution requirements

- Architecture modifications:

- Separate modality encoders with shared connectors

- Modality-specific fine-tuning with gradual fusion

- Contrastive learning across modalities

Experimental Protocol for Multi-Modality Evaluation:

Table 2: Modality-Specific Adaptation Performance

| Imaging Modality | Encoder Architecture | Connector Type | Hallucination Rate | Clinical Accuracy |

|---|---|---|---|---|

| Chest X-ray (2D) | ViT-B/16 | MLP + Cross-attention | 8.3% | 91.7% |

| CT (3D) | ViT-3D | Query-based + Compression | 12.1% | 87.9% |

| MRI | BEiT-3D | Fusion-based | 10.5% | 89.5% |

| Ultrasound | DeiT + Temporal | CNN Abstractor | 15.2% | 84.8% |

Experimental Protocols & Methodologies

Vision Encoder Comparative Analysis Protocol

Objective: Systematically evaluate vision encoder architectures for medical image interpretation and hallucination reduction.

Methodology:

- Encoder Selection: Compare ViT, DeiT, and BEiT architectures with matched parameter counts

- Training Regimen:

- Pre-training on MIMIC-CXR and Multicare datasets

- 100 epochs with cosine annealing learning rate schedule

- Batch size: 32 with gradient accumulation

- Evaluation Metrics:

- Entity probing accuracy (yes/no questions)

- RadGraph-F1 for report quality

- Hallucination rate per image
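For reference, the cosine annealing schedule in this regimen decays the learning rate from a peak to a floor over half a cosine period, $\eta_t = \eta_{\min} + \tfrac{1}{2}(\eta_{\max} - \eta_{\min})\left(1 + \cos(\pi t / T)\right)$. The sketch below evaluates it per epoch; `lr_max` and `lr_min` are placeholder values rather than settings from the protocol. PyTorch users would typically rely on `torch.optim.lr_scheduler.CosineAnnealingLR` instead.

```python
import math


def cosine_annealing_lr(epoch: int, total_epochs: int = 100,
                        lr_max: float = 1e-4, lr_min: float = 0.0) -> float:
    """Learning rate at `epoch` (0-based) under cosine annealing without restarts."""
    if not 0 <= epoch <= total_epochs:
        raise ValueError("epoch must lie in [0, total_epochs]")
    progress = epoch / total_epochs
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for e in (0, 25, 50, 75, 100):
        print(e, f"{cosine_annealing_lr(e):.2e}")
```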

Implementation Details:

V-RAG Fine-tuning Protocol for Hallucination Reduction

Objective: Adapt single-image MLLMs to effectively utilize multiple retrieved images and reports.

Methodology:

- Multi-image comprehension pretraining:

- Input: Query image + 3 retrieved similar images with reports

- Task: Identify consistent findings across images

- Output: Differentiated observations between query and retrieved images

- Entity grounding reinforcement:

- Contrastive learning between grounded and hallucinated entities

- Explicit training on rare entity recognition

- Cross-image consistency constraints

- Inference optimization:

- Dynamic retrieval count (k=3-5 based on similarity scores)

- Confidence-based integration of retrieved knowledge

- Fallback mechanisms for novel cases with low retrieval similarity
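The three inference-time ideas can be combined in one selection rule: keep between 3 and 5 references depending on how many clear a similarity threshold, and fall back to no-retrieval generation when even the best match is weak. The thresholds and the `RetrievalPlan` structure are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RetrievalPlan:
    references: List[str]  # report IDs to include in the prompt
    use_retrieval: bool    # False -> answer from the query image alone (and flag the case)


def plan_retrieval(scored: List[Tuple[float, str]], k_min: int = 3, k_max: int = 5,
                   keep_threshold: float = 0.80, fallback_threshold: float = 0.60) -> RetrievalPlan:
    """Choose how many retrieved references to use based on their similarity scores."""
    ranked = sorted(scored, key=lambda s: s[0], reverse=True)
    if not ranked or ranked[0][0] < fallback_threshold:
        # Novel case: no stored example is close enough to be trustworthy context.
        return RetrievalPlan(references=[], use_retrieval=False)
    confident = [rid for score, rid in ranked if score >= keep_threshold]
    k = max(k_min, min(k_max, len(confident)))
    return RetrievalPlan(references=[rid for _, rid in ranked[:k]], use_retrieval=True)


if __name__ == "__main__":
    hits = [(0.90, "r1"), (0.70, "r2"), (0.65, "r3"), (0.62, "r4")]
    print(plan_retrieval(hits))          # only one confident hit, so k falls back to k_min = 3
    print(plan_retrieval([(0.40, "x")]))  # best match too weak: retrieval disabled
```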

Visual RAG Architecture:

The Scientist's Toolkit

Table 3: Essential Research Reagents and Solutions

| Resource | Type | Function | Implementation Example |

|---|---|---|---|

| BiomedCLIP | Embedding Model | Medical image-text similarity for retrieval | Feature extraction for V-RAG memory construction [4] |

| MIMIC-CXR | Dataset | Chest X-ray images with reports | Training and evaluation of radiology MLLMs [8] |

| Multicare | Dataset | Diverse medical image captions | Generalization testing across modalities [8] |

| FAISS | Retrieval System | Efficient similarity search | V-RAG retrieval backend with HNSW indexing [4] |

| LoRA | Training Method | Parameter-efficient fine-tuning | Adapting LLMs to medical domains [33] |

| RadGraph | Evaluation Tool | Structured medical concept extraction | Hallucination detection and report quality assessment [8] |

| ViT/BEiT/DeiT | Vision Encoders | Medical image feature extraction | Comparing encoder architectures for optimal performance [34] |

Performance Optimization Guide

Connector Selection Decision Framework

For High-Resolution Images:

- Primary choice: Compression-based connectors (spatial or semantic)

- Fallback: Query-based with token reduction

- Avoid: Simple linear projection without compression

For Multi-Modality Integration:

- Primary choice: Fusion-based connectors with cross-attention

- Fallback: Projection-based with modality-specific adapters

- Avoid: Expert-driven language transformation (information loss)

For Computational Constraints:

- Primary choice: LoRA fine-tuning with linear connectors

- Fallback: MLP with aggressive token compression

- Avoid: Multi-encoder architectures without sharing

Quantitative Performance Benchmarks

Table 4: Connector Architecture Performance Comparison

| Connector Type | Training Speed | Inference Latency | Hallucination Rate | Best Use Case |

|---|---|---|---|---|

| Linear Projection | Fastest | Lowest | 12.5% | Baseline/prototyping |

| MLP (2-layer) | Fast | Low | 10.8% | Balanced performance |

| Query-based | Medium | Medium | 8.9% | Lesion-specific tasks |

| Compression (CNN) | Medium | Low | 7.5% | High-resolution images |

| Fusion-based | Slow | High | 6.8% | Multi-modal integration |

| V-RAG Enhanced | Slowest | Highest | 5.2% | Production clinical use |

This technical support framework provides guidance for researchers developing medical MLLMs with reduced hallucinations. The protocols and troubleshooting guides build on recent advances in vision encoders and multimodal connectors described in the current literature.

Frequently Asked Questions (FAQs)

1. What is cross-modal consistency and why is it critical for medical MLLMs? Cross-modal consistency refers to the ability of a Multimodal Large Language Model (MLLM) to produce semantically equivalent and clinically coherent information when the same underlying task or query is presented through different modalities, such as an image versus a text description [35]. In medical imaging, a lack of consistency means the model might correctly identify a finding in a chest X-ray image but fail to recognize the same finding when described in a textual report, or vice-versa. This inconsistency is a direct manifestation of hallucination, severely undermining the model's reliability for clinical tasks like diagnosis and report generation [4] [35] [36].

2. What are the primary technical causes of cross-modal inconsistency? The main technical roots of inconsistency include:

- Architectural Gaps: The separate encoders for vision and language may not be perfectly aligned in a shared semantic space, leading to misinterpretation when fusing information [13] [35].

- Training Data Disparities: Differences in the quality, quantity, and domain-specificity of image and text data used for pre-training can create capability gaps between the model's visual and linguistic understanding [35].

- Information Loss in Fusion: The "multimodal connector" (e.g., a simple MLP) may be insufficient to capture complex, fine-grained relationships between medical images and textual concepts, causing details to be lost or invented [13].

3. How can I quantitatively measure cross-modal consistency in my model? You can establish a quantitative framework by creating or using a dataset of parallel task instances [35]. For each clinical task (e.g., disease classification), create matched pairs where one instance uses the medical image as input, and the other uses a meticulously crafted text description of the same image. The model's outputs for both instances are compared against a gold standard. Consistency is measured as the agreement in performance (e.g., accuracy, F1 score) between the image-based and text-based pathways for the same set of underlying clinical questions [35].
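The comparison described above can be sketched as follows. The sketch scores the image pathway and the text pathway against the same gold labels and then reports the gap. It is a minimal sketch that assumes each pathway's output has already been mapped to a single label per question. The function names and label strings are hypothetical.

```python
# Sketch: score image-based and text-based answers to the same clinical questions
# against one gold standard and report the performance gap. Names are hypothetical.


def accuracy(preds, gold):
    """Fraction of predictions that match the gold label."""
    return sum(p == g for p, g in zip(preds, gold)) / len(gold)


def macro_f1(preds, gold):
    """Unweighted mean of per-label F1 over every label seen in preds or gold."""
    labels = sorted(set(gold) | set(preds))
    scores = []
    for label in labels:
        tp = sum(p == label and g == label for p, g in zip(preds, gold))
        fp = sum(p == label and g != label for p, g in zip(preds, gold))
        fn = sum(p != label and g == label for p, g in zip(preds, gold))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def pathway_consistency(image_preds, text_preds, gold):
    """Score both pathways on the same questions; a small gap means consistent performance."""
    if not (len(image_preds) == len(text_preds) == len(gold)):
        raise ValueError("each question needs an image answer, a text answer and a gold label")
    report = {}
    for name, metric in (("accuracy", accuracy), ("macro_f1", macro_f1)):
        img, txt = metric(image_preds, gold), metric(text_preds, gold)
        report[name] = {"image": img, "text": txt, "gap": abs(img - txt)}
    return report


if __name__ == "__main__":
    gold = ["effusion", "normal", "pneumonia", "normal"]
    image_preds = ["effusion", "normal", "normal", "normal"]
    text_preds = ["effusion", "normal", "pneumonia", "normal"]
    print(pathway_consistency(image_preds, text_preds, gold)["accuracy"])
    # {'image': 0.75, 'text': 1.0, 'gap': 0.25}
```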

4. What is Visual Retrieval-Augmented Generation (V-RAG) and how does it reduce hallucinations? V-RAG is a framework that grounds the MLLM's generation process in retrieved, relevant knowledge [4] [31]. When the model is queried with a medical image, it first retrieves the most visually and semantically similar images from a database, along with their corresponding accurate reports. The model is then prompted to answer the query or generate a report based on both the original image and the retrieved image-report pairs. This provides an external knowledge base for the model to reference, significantly reducing the generation of ungrounded or "hallucinated" findings by providing concrete, relevant examples [4].

Troubleshooting Guides

Issue 1: Model Generates Clinically Inaccurate Findings in Radiology Reports

Problem: Your MLLM generates radiology reports from images that contain plausible-sounding but clinically inaccurate statements (e.g., mentioning a "pneumothorax" that is not present in the X-ray).

Solution: Implement a Visual Retrieval-Augmented Generation (V-RAG) Pipeline.

Experimental Protocol:

- Database Construction: Build a curated database of medical images (e.g., chest X-rays) and their corresponding, validated radiology reports [4].

- Retriever Setup: Implement a robust multimodal retriever. A recommended approach is to use BiomedCLIP to extract image and text embeddings into a shared space, then index them using FAISS with the HNSW algorithm for efficient approximate nearest neighbor search [4].

- Inference with Retrieval: For a query image, retrieve the top-k most similar images and their reports. Structure your prompt as follows [4]:

"This is the 1st similar image and its report for your reference. [Image1] [Report1]. ... Answer the question/generate a report based on the last query image and the reference images and reports. [Query Image]"

Expected Outcome: The generated report will be more grounded in the visual evidence of the query image and the clinically accurate references, leading to a higher RadGraph-F1 score and reduced hallucination of entities [4].
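The retrieval and prompting steps of this protocol can be sketched as below. This is a minimal pure-Python sketch that rests on several assumptions. The embeddings are assumed to be precomputed, for example with BiomedCLIP. An exhaustive cosine-similarity scan stands in for the FAISS HNSW index; with FAISS, an index such as `faiss.IndexHNSWFlat` would replace `top_k`. Images are referenced by bracketed placeholder IDs, whereas a real MLLM would receive interleaved image inputs. The database entries and function names are hypothetical.

```python
import math

# Sketch of V-RAG retrieval and prompt assembly. Embeddings are assumed precomputed
# (e.g. by BiomedCLIP); the linear scan below stands in for an HNSW index.
# Bracketed IDs are placeholders for the interleaved image inputs of a real MLLM.


def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def top_k(query_emb, database, k):
    """Exhaustive search over (image_id, embedding, report) entries; HNSW approximates this."""
    ranked = sorted(database, key=lambda entry: cosine(query_emb, entry[1]), reverse=True)
    return ranked[:k]


def ordinal(n):
    """1 -> '1st', 2 -> '2nd', 11 -> '11th', 23 -> '23rd'."""
    if 10 <= n % 100 <= 20:
        suffix = "th"
    else:
        suffix = {1: "st", 2: "nd", 3: "rd"}.get(n % 10, "th")
    return f"{n}{suffix}"


def build_vrag_prompt(references, query_image_id):
    """Place retrieved image-report pairs ahead of the query image, following the template."""
    parts = [
        f"This is the {ordinal(i)} similar image and its report for your reference. "
        f"[{image_id}] [{report}]."
        for i, (image_id, _embedding, report) in enumerate(references, start=1)
    ]
    parts.append(
        "Answer the question/generate a report based on the last query image "
        f"and the reference images and reports. [{query_image_id}]"
    )
    return " ".join(parts)


if __name__ == "__main__":
    database = [
        ("cxr_001", [1.0, 0.0, 0.2], "No acute cardiopulmonary process."),
        ("cxr_002", [0.0, 1.0, 0.1], "Small right pleural effusion."),
        ("cxr_003", [0.9, 0.1, 0.3], "Lungs are clear."),
    ]
    refs = top_k([1.0, 0.0, 0.25], database, k=2)
    print(build_vrag_prompt(refs, "query_cxr"))
```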

Issue 2: Model Shows Poor Performance on Rare Diseases or Uncommon Findings

Problem: The MLLM performs adequately on common findings but fails to correctly identify or describe rare pathologies.

Solution: Enhance the model with targeted fine-tuning and leverage V-RAG's capability for rare entities.

Experimental Protocol:

- Entity Probing: Create a diagnostic test to evaluate the model's performance on specific medical entities. This involves presenting the model with an image and asking a series of yes/no questions (e.g., "Is there evidence of pneumothorax in this image?") [4].

- Stratified Analysis: Analyze the model's accuracy separately for frequent and rare entities (e.g., entities that appear in less than 1% of the training data) [4].

- Targeted Retrieval: Ensure your V-RAG database is enriched with examples of rare findings. The retrieval system will then be more likely to find relevant reference cases, even for uncommon queries, providing the model with the necessary context it lacks from its base training [4].

Expected Outcome: V-RAG has been shown to improve accuracy for both frequent and rare entities, as it bypasses the model's need to have these patterns deeply encoded in its parameters, instead relying on the external knowledge base [4].
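The stratified analysis from this protocol can be sketched as follows. The sketch assumes probing results have already been scored as correct or incorrect for each entity. It also assumes that an entity's frequency is the fraction of training reports that mention it, and it uses the protocol's 1% cut-off as the boundary between rare and frequent. Both the frequency definition and the function name are assumptions.

```python
# Sketch: split entity-probing accuracy into frequent vs. rare strata.
# Assumption: frequency = share of training reports mentioning the entity.


def stratified_accuracy(results, entity_counts, total_reports, rare_threshold=0.01):
    """Return accuracy per stratum.

    results: list of (entity, is_correct) pairs from entity probing
    entity_counts: number of training reports mentioning each entity
    total_reports: size of the training report set
    """
    buckets = {"frequent": [], "rare": []}
    for entity, is_correct in results:
        # Entities missing from entity_counts are treated as unseen, hence rare.
        share = entity_counts.get(entity, 0) / total_reports
        buckets["rare" if share < rare_threshold else "frequent"].append(is_correct)
    # None marks a stratum with no probes, rather than reporting a misleading 0.0.
    return {name: (sum(v) / len(v) if v else None) for name, v in buckets.items()}


if __name__ == "__main__":
    counts = {"effusion": 500, "pneumothorax": 5}
    results = [
        ("effusion", True), ("effusion", False),
        ("pneumothorax", False), ("pneumothorax", True), ("pneumothorax", False),
    ]
    print(stratified_accuracy(results, counts, total_reports=1000))
```

A large gap between the two strata, before and after adding rare cases to the retrieval database, is the signal this protocol looks for.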

Issue 3: Inconsistent Diagnoses Between Image and Text Inputs

Problem: The model provides different diagnoses or findings when the same clinical case is presented as an image versus when it is described in text.

Solution: Systematically evaluate and improve cross-modal consistency.

Experimental Protocol:

- Create a Parallel Consistency Evaluation Dataset: For a set of medical images, write complete textual descriptions that capture all clinically relevant information. Each description and its source image form a matched (image, text) pair for the same case [35].

- Define Consistency Metric: Run the model on both the image input and the text-description input for every case. Compute consistency as the proportion of cases for which the model's outputs (e.g., the primary finding or diagnosis) agree across the two modalities [35].

- Mitigation via Alignment Tuning: If a significant inconsistency is found, employ alignment tuning techniques on your model. This often involves Reinforcement Learning from Human Feedback (RLHF), where a reward model is trained to penalize outputs that are inconsistent or not faithful to the source input, and the main model is fine-tuned to maximize this reward [13].

Expected Outcome: A quantifiable measure of your model's cross-modal consistency. Applying mitigation strategies should lead to a higher consistency score, making the model more reliable and trustworthy [35].
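The case-level agreement metric from this protocol can be sketched as follows. The sketch assumes each output has already been reduced to a primary-finding string. Its light normalization (collapsing whitespace and lowercasing) stands in for mapping free text onto a controlled label vocabulary, which a real evaluation would need. The names are hypothetical.

```python
# Sketch: case-level cross-modal agreement between image-input and text-input outputs.
# Assumption: outputs are already reduced to a primary finding per case.


def normalize(finding):
    """Crude normalization; a real pipeline would map outputs to a fixed label set."""
    return " ".join(finding.lower().split())


def cross_modal_agreement(image_outputs, text_outputs):
    """Return (agreement rate, sorted IDs of disagreeing cases).

    image_outputs / text_outputs: dicts mapping case ID -> primary finding.
    """
    if image_outputs.keys() != text_outputs.keys():
        raise ValueError("both pathways must cover the same cases")
    disagreements = sorted(
        case for case in image_outputs
        if normalize(image_outputs[case]) != normalize(text_outputs[case])
    )
    rate = 1 - len(disagreements) / len(image_outputs)
    return rate, disagreements


if __name__ == "__main__":
    image_out = {"c1": "Pneumonia", "c2": "No finding", "c3": "Pleural  effusion"}
    text_out = {"c1": "pneumonia", "c2": "Cardiomegaly", "c3": "pleural effusion "}
    print(cross_modal_agreement(image_out, text_out))
```

The function returns the disagreeing case IDs along with the rate. This makes it easier to inspect which findings drive the inconsistency, both before and after alignment tuning.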

Experimental Data & Protocols

Table 1: Impact of Visual-RAG on Hallucination Reduction in Chest X-Ray Report Generation

| Metric | Baseline MLLM (No RAG) | MLLM with Text-Only RAG | MLLM with Visual-RAG (V-RAG) |
|---|---|---|---|
| Overall Entity Probing Accuracy | Baseline | Moderate Improvement | Highest Improvement |
| Accuracy on Frequent Entities | Baseline | Moderate Improvement | Highest Improvement |
| Accuracy on Rare Entities | Low | Slight Improvement | Significant Improvement |
| RadGraph-F1 Score | Baseline | Moderate Improvement | Highest Improvement |
| Hallucination Rate | Baseline | Reduced | Most Reduced |

Source: Adapted from experiments on the MIMIC-CXR dataset in [4].

Core Protocol: Entity Probing for Hallucination Measurement

- Objective: Quantify whether an MLLM correctly grounds specific medical entities in the image content.

- Setup: Use a dataset of medical images with a set of established ground-truth entities (e.g., from expert-annotated reports).

- Procedure: For each image and each entity, prompt the model with: "Does the image show [entity]? Answer with only 'yes' or 'no'." [4]

- Analysis: Compare the model's yes/no answers to the ground truth. Accuracy is calculated as the proportion of correct answers, providing a clear, clinical measure of hallucination separate from text generation fluency [4].
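The probing loop comes down to three steps: build the fixed prompt, parse a strict yes/no reply, and score it against ground truth. The sketch below is minimal and assumes the model's replies have already been collected for each (image, entity) pair. Counting any reply that does not start with "yes" or "no" as incorrect is a design choice, not part of the cited protocol. The names are hypothetical.

```python
# Sketch of entity probing: fixed prompt, strict yes/no parsing, accuracy.
# Assumption: replies were collected beforehand, keyed by (image_id, entity).

PROBE_TEMPLATE = "Does the image show {entity}? Answer with only 'yes' or 'no'."


def probe_prompt(entity):
    """Build the fixed yes/no probing prompt for one entity."""
    return PROBE_TEMPLATE.format(entity=entity)


def parse_yes_no(reply):
    """Map a reply to True/False if it starts with 'yes' or 'no'; otherwise None."""
    words = reply.strip().lower().split()
    if not words:
        return None
    first = words[0].strip(".,;:!\"'")
    if first == "yes":
        return True
    if first == "no":
        return False
    return None


def probing_accuracy(replies, ground_truth):
    """Accuracy over (image_id, entity) keys; unparseable or missing replies count as wrong."""
    correct = 0
    for key, present in ground_truth.items():
        answer = parse_yes_no(replies.get(key, ""))
        if answer is not None and answer == present:
            correct += 1
    return correct / len(ground_truth)
```

Scoring only the parsed yes/no answer keeps this measure separate from the fluency of the model's free-text output.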

The Scientist's Toolkit

Table 2: Key Research Reagents for Cross-Modality Verification Experiments

| Reagent / Solution | Function in Experimental Protocol |
|---|---|
| BiomedCLIP | A vision-language model pre-trained on a massive corpus of biomedical images and text. Used to generate high-quality, domain-specific embeddings for images and reports, which is crucial for building an effective retrieval database [4]. |
| FAISS (with HNSW) | A library for efficient similarity search and clustering of dense vectors. The Hierarchical Navigable Small World (HNSW) index allows for fast and accurate retrieval of the top-k most similar images/reports from a large database during V-RAG inference [4]. |
| MIMIC-CXR Dataset | A large, public dataset of chest radiographs with free-text radiology reports. Serves as a standard benchmark for training and evaluating models on tasks like report generation and entity probing [4]. |
| RadGraph | A tool and dataset for annotating entities and relations in radiology reports. The RadGraph-F1 score is a key metric for evaluating the factual accuracy of generated reports against expert annotations, directly measuring clinical correctness [4]. |
| LoRA (Low-Rank Adaptation) | A parameter-efficient fine-tuning (PEFT) method. Allows researchers to adapt large pre-trained MLLMs to specific medical tasks by only training a small number of parameters, making experimentation more computationally feasible [13] [37]. |
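To show what RadGraph-F1 rewards, the sketch below computes a set-based F1 over (entity, label) pairs already extracted from a generated report and a reference report. It is a simplified stand-in, not the official RadGraph metric. The real score runs the RadGraph extraction model over both reports and also matches the relations between entities. The example labels follow RadGraph's scheme of observation/anatomy combined with present/absent/uncertain, such as `OBS-DP` for an observation that is definitely present.

```python
# Simplified, set-based entity F1 for intuition only; NOT the official RadGraph metric,
# which also extracts entities with the RadGraph model and matches relations.


def entity_f1(pred_entities, ref_entities):
    """F1 between predicted and reference (entity text, label) pairs."""
    pred, ref = set(pred_entities), set(ref_entities)
    if not pred and not ref:
        return 1.0  # both reports assert nothing: treat as perfect agreement
    tp = len(pred & ref)
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(ref) if ref else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    generated = {("effusion", "OBS-DP"), ("pneumothorax", "OBS-DP")}  # pneumothorax is hallucinated
    reference = {("effusion", "OBS-DP"), ("lung", "ANAT-DP")}
    print(entity_f1(generated, reference))  # 0.5
```

The hallucinated pneumothorax lowers precision. This is how fabricated findings pull down an entity-level score.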

Workflow Diagrams

Visual-RAG (V-RAG) Workflow

Cross-Modal Consistency Evaluation

Refining Performance: Troubleshooting Pitfalls and Optimizing Workflows

This guide provides technical support for researchers confronting adversarial attacks against multimodal AI in medical and drug discovery contexts. These attacks exploit model vulnerabilities using fabricated inputs, leading to hallucinations and incorrect outputs. The following sections offer troubleshooting guides, experimental protocols, and defensive strategies to enhance model robustness.

Troubleshooting Guide: Identifying Adversarial Vulnerabilities

This section addresses common challenges and solutions when experimenting with multimodal models.

Q: My model is generating outputs that elaborate on fabricated details from the input. What is happening?

- A: This is a classic adversarial hallucination attack. Models can be overly confirmatory, treating every input token as truth. When a prompt contains a fabricated detail (e.g., a fake lab test or disease), the model may not only accept it but also generate additional, incorrect information about it [5].

- Troubleshooting Steps:

- Audit Inputs: Implement a pre-processing check to flag inputs containing terms not found in your verified knowledge bases (e.g., Drugs.com, PubMed) [38].

- Use Mitigation Prompts: Employ prompts that instruct the model to "use only clinically validated information and acknowledge uncertainty instead of speculating" [5].

- Quantify the Issue: Follow the protocol in the Experimental Protocols section to establish a baseline hallucination rate for your model.
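The input audit and the mitigation prompt can be combined into a small pre-processing step. This is a minimal sketch that assumes you already hold a set of verified clinical terms, exported for example from your curated knowledge base, and a general-vocabulary word list, both lowercase. Flagging every token that appears in neither set is deliberately crude. The function names and the wording of the appended warning are hypothetical.

```python
import re

# Sketch of an input audit plus mitigation prompt. Assumptions: verified_terms and
# general_vocab are lowercase sets you maintain; names and warning text are hypothetical.

MITIGATION_INSTRUCTION = (
    "Use only clinically validated information and acknowledge uncertainty "
    "instead of speculating."
)

# Lowercase alphanumeric tokens, allowing internal hyphens (e.g. "hba1c", "il-6").
TOKEN = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def unverified_terms(prompt, verified_terms, general_vocab):
    """Return sorted tokens found in neither the verified terms nor the general vocabulary."""
    tokens = set(TOKEN.findall(prompt.lower()))
    return sorted(tokens - verified_terms - general_vocab)


def harden_prompt(prompt, verified_terms, general_vocab):
    """Prepend the mitigation instruction and name any unverified terms for the model."""
    flagged = unverified_terms(prompt, verified_terms, general_vocab)
    parts = [MITIGATION_INSTRUCTION]
    if flagged:
        parts.append(
            "The following terms could not be verified: " + ", ".join(flagged)
            + ". If you do not recognize them, say so rather than describing them."
        )
    parts.append(prompt)
    return "\n".join(parts), flagged
```

Returning the flagged terms together with the hardened prompt lets you log them. The logged terms can then feed the baseline hallucination-rate measurement described in the protocol.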

Q: My multimodal model is being fooled by a slightly modified image, even though the text prompt is correct. Why?

- A: You are likely facing a cross-modal adversarial attack. Attackers add imperceptible perturbations to an image so that its embedding in the model aligns with a target concept different from its actual content [39]. A related threat is steganographic prompt injection, where malicious instructions are hidden within an image's pixels [40].

- Troubleshooting Steps:

- Test with Transformations: Apply image resizing, JPEG compression, or mild noise to the input. A sudden change in output after these transformations suggests adversarial manipulation [39].

- Analyze Embeddings: If possible, compare the image embedding produced by your vision encoder against a database of known, clean image embeddings to detect anomalies.

- Implement Detection: Explore neural steganalysis tools designed to detect minute alterations in image structures that indicate hidden data [40].
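The transformation test can be sketched as a wrapper that re-runs the model on lightly perturbed copies of the input and reports which perturbations change the answer. This is a minimal sketch with several assumptions. Images are grayscale lists of rows with values in [0, 1], and `predict` is a hypothetical wrapper around your model that returns a label. Intensity quantization and 2x2 average pooling stand in for JPEG compression and resizing, which would normally use an imaging library. A flip is a signal for review, not proof of an attack, because clean inputs near a decision boundary can also flip.

```python
import random

# Sketch: flag inputs whose prediction changes under mild transformations.
# Assumptions: grayscale images as lists of rows in [0, 1]; predict() is a
# hypothetical model wrapper; quantize/downsample stand in for JPEG/resizing.


def add_noise(image, sigma=0.02, seed=0):
    """Add clipped Gaussian noise with a fixed seed for reproducibility."""
    rng = random.Random(seed)
    return [[min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row] for row in image]


def quantize(image, levels=5):
    """Snap intensities to a coarse grid (a crude stand-in for lossy compression)."""
    step = 1.0 / (levels - 1)
    return [[round(p / step) * step for p in row] for row in image]


def downsample(image):
    """2x2 average pooling (a crude stand-in for resizing); assumes even dimensions."""
    return [
        [
            (image[i][j] + image[i][j + 1] + image[i + 1][j] + image[i + 1][j + 1]) / 4
            for j in range(0, len(image[0]) - 1, 2)
        ]
        for i in range(0, len(image) - 1, 2)
    ]


TRANSFORMS = {"noise": add_noise, "quantize": quantize, "downsample": downsample}


def transformation_check(image, predict, transforms=TRANSFORMS):
    """Return the baseline prediction and the names of transforms that change it."""
    baseline = predict(image)
    changed = [name for name, t in transforms.items() if predict(t(image)) != baseline]
    return baseline, changed
```

Using several unrelated transformations makes the check less dependent on any single one. A perturbation tuned to survive one of them is less likely to survive all three.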

Q: I've heard multimodal models are more robust. Is this true, and how can I leverage this?

- A: Evidence suggests that well-designed multimodal models can be more resilient than their single-modality counterparts. The integration of multiple data types (e.g., image and text) allows the model to cross-verify information, making it harder to deceive all modalities simultaneously [41].

- Troubleshooting Steps: