Improving fMRI Test-Retest Reliability: A Comprehensive Guide for Biomarker Development and Clinical Translation

Abstract

This article provides a comprehensive examination of test-retest reliability in functional magnetic resonance imaging (fMRI) for researchers and drug development professionals. Converging evidence indicates poor to moderate reliability for common fMRI measures, with meta-analyses reporting mean intraclass correlation coefficients (ICCs) of 0.397 for task-based activation and 0.29 for edge-level functional connectivity. We explore foundational concepts of reliability measurement, methodological factors influencing consistency, optimization strategies for improving reliability, and validation approaches across different contexts. The content synthesizes recent evidence on how analytical decisions, acquisition parameters, and processing pipelines impact reliability, providing practical guidance for enhancing fMRI's utility in clinical trials and biomarker development.

Understanding fMRI Reliability: Why It Matters for Clinical and Research Applications

The Critical Importance of Reliability in fMRI Biomarker Development

FAQs: Understanding fMRI Reliability

What does "test-retest reliability" mean in the context of fMRI? Test-retest reliability refers to the consistency of fMRI measurements when the same individual is scanned under the same conditions at different time points. It is essential for ensuring that observed brain activity patterns are stable traits of the individual rather than random noise. High reliability is particularly crucial for studies investigating individual differences in brain function, as it directly impacts the ability to detect brain-behavior relationships [1].

Why is the reliability of fMRI biomarkers a major concern? Poor reliability drastically reduces effect sizes and statistical power for detecting associations with behavioral measures or clinical conditions. This reduction can lead to failures in replicating findings and undermines the utility of fMRI for predictive and individual-differences research. Essentially, even with large sample sizes, a biomarker with poor reliability will struggle to detect true effects [1].
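Classical test theory makes this cost concrete. The correlation you can expect to observe between two measures is the true correlation multiplied by the square root of the product of their reliabilities (Spearman's correction for attenuation, run in reverse). The sketch below applies that formula. The true correlation and reliability values are illustrative assumptions, not estimates from [1].

```python
import math


def attenuated_correlation(r_true: float, rel_x: float, rel_y: float) -> float:
    """Expected observed correlation given the true-score correlation and the
    reliabilities of both measures (Spearman's attenuation formula)."""
    for rel in (rel_x, rel_y):
        if not 0.0 <= rel <= 1.0:
            raise ValueError("reliabilities must lie in [0, 1]")
    return r_true * math.sqrt(rel_x * rel_y)


# Illustrative assumptions: a true brain-behavior correlation of 0.5, a behavioral
# measure with reliability 0.8, and fMRI measures of decreasing reliability.
for fmri_icc in (0.9, 0.6, 0.4, 0.2):
    r_obs = attenuated_correlation(0.5, fmri_icc, 0.8)
    print(f"fMRI ICC = {fmri_icc:.1f} -> expected observed r = {r_obs:.2f}")
```

With these assumed inputs, an fMRI measure with ICC = 0.2 turns a true correlation of 0.5 into an expected observed correlation of 0.2, so a study would need far more participants to detect it.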

Can fMRI measures be highly reliable? Yes, but it depends heavily on what is being measured. While the average activity in individual brain regions often shows poor reliability, multivariate measures—which combine patterns of activity across multiple brain areas using machine learning—can demonstrate good to excellent reliability. For example, some multivariate neuromarkers for conditions like cardiovascular risk or pain have shown same-day test-retest reliabilities above 0.70 [2].

Do all biomarkers need high long-term test-retest reliability? No. This is a common misconception. The U.S. Food and Drug Administration identifies several biomarker categories. Some, like prognostic biomarkers, require long-term stability. Others, such as diagnostic, monitoring, or pharmacodynamic biomarkers, are designed to detect dynamic states and therefore require low within-person measurement error but not necessarily high stability across weeks or months [2].

Troubleshooting Guides: Addressing Common Reliability Challenges

Problem: Poor reliability in individual brain regions

Symptoms

- Low intraclass correlation coefficients (ICCs) for task-related activation in regions of interest.

- Inconsistent brain-behavior correlations across studies.

- Inability to replicate findings in longitudinal studies.

Solutions

- Shift to Multivariate Measures: Instead of relying on single regions, use machine learning to create patterns distributed across multiple brain areas. These neuromarkers can show significantly higher reliability [2].

- Increase Data Per Participant: Collect more data per individual. Studies have shown that reliability increases with scan duration. For resting-state functional connectivity, longer scans (e.g., 20-30 minutes) substantially improve phenotypic prediction accuracy [3].

- Optimize Task Design: Simpler tasks and block designs (compared to event-related designs) may yield more reliable activation, especially in regions with stronger overall activation [1].

Problem: High within-subject variability across scanning runs

Symptoms

- Large differences in activation patterns for the same individual within a single scanning session.

- Low split-half reliability estimates.

Solutions

- Minimize Motion: Subject movement has a pronounced negative effect on reliability. Implement rigorous motion correction protocols during preprocessing. One study found that participants with the lowest motion had reliability estimates 2.5 times higher than those with the highest motion [1].

- Employ Advanced Denoising: Use techniques such as multirun spatial ICA followed by FIX classification of noise components to reduce the impact of subject movement and other noise sources [1].

- Ensure Adequate Trial Count: For event-related designs, ensure a sufficient number of trials. For error-related brain activity, achieving stable estimates typically requires 6-8 error trials for fMRI measures and 4-6 trials for ERP measures [4].

Problem: Low reliability in multicenter studies

Symptoms

- Inconsistent results across different scanners and sites.

- Poor generalizability of biomarkers.

Solutions

- Account for Scanner and Protocol Effects: A 2025 multicenter study revealed hierarchical variations in functional connectivity, with significant contributions from scanner, protocol, and participant factors. Use statistical models that can account for these sources of variance [5].

- Utilize Ensemble Classifiers: Machine learning approaches like ensemble sparse classifiers can suppress site-related variances and prioritize disease-related signals, improving the reliability of diagnostic biomarkers in multicenter settings [5].

- Implement Harmonized Protocols: When possible, use standardized imaging protocols across sites to minimize protocol-related variability [5].
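As a deliberately simple illustration of modeling scanner variance, the sketch below removes each site's mean and rescales by that site's standard deviation (a per-site location-scale adjustment). This is an assumption-laden stand-in, not the method used in [5] and not ComBat, which additionally pools site estimates with empirical Bayes and can protect covariates of interest. Applied naively, it also removes genuine group differences whenever diagnosis is unevenly distributed across sites. The function name and example values are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


def site_standardize(values, sites):
    """Per-site location-scale adjustment: z-score each value within its own site.

    values: list of floats (e.g., one connectivity edge, one value per scan)
    sites:  list of site labels, same length as values
    """
    if len(values) != len(sites):
        raise ValueError("values and sites must have the same length")
    by_site = defaultdict(list)
    for v, s in zip(values, sites):
        by_site[s].append(v)
    stats = {}
    for s, vs in by_site.items():
        sd = pstdev(vs)
        stats[s] = (mean(vs), sd if sd > 0 else 1.0)  # guard against zero spread
    return [(v - stats[s][0]) / stats[s][1] for v, s in zip(values, sites)]


# Hypothetical edge strengths: site "B" reads systematically higher than site "A".
edges = [0.30, 0.35, 0.40, 0.60, 0.65, 0.70]
site_labels = ["A", "A", "A", "B", "B", "B"]
print([round(z, 2) for z in site_standardize(edges, site_labels)])
```

After adjustment, the same within-site ranking yields the same z-scores at both sites, so the systematic offset of site B no longer dominates pooled analyses.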

Quantitative Data on fMRI Reliability

Table 1: Summary of Reported fMRI Reliability Metrics

| Measure Type | Reported Reliability | Key Influencing Factors | Reference |
|---|---|---|---|
| Task-fMRI (Regional Activity) | ICC = 0.088 (short-term), 0.072 (long-term) | Motion, task design, developmental stage | [1] |
| Edge-Level Functional Connectivity | ICC = 0.29 (pooled mean) | Network type (within-network > between-network), scan length | [6] |
| Multivariate fMRI Biomarkers | 0.73 - 0.82 (examples) | Machine learning method, number of features | [2] |
| Error-Processing fMRI | Stable estimates with 6-8 error trials | Number of error trials, sample size (~40 participants) | [4] |

Table 2: Effect of Scan Duration on Phenotypic Prediction Accuracy

| Total Scan Duration (Sample Size × Scan Time) | Prediction Accuracy (Cognitive Factor Score) | Recommendation |
|---|---|---|
| Low (e.g., 200 participants × 14 min) | ~0.33 | Under-powered |
| Medium (e.g., 700 participants × 14 min) | ~0.45 | Improved, but not cost-optimal |
| High (e.g., 200 participants × 58 min) | ~0.40 | Improved, but less efficient than larger N |
| Optimal (Theoretical) | Highest for a fixed budget | ~30 min scan time per participant is most cost-effective [3] |

Experimental Protocols for Reliability Optimization

Protocol: Assessing and Improving Task-fMRI Reliability

This protocol is based on methodologies used to evaluate the Adolescent Brain Cognitive Development (ABCD) Study data [1].

Data Acquisition:

- Tasks: Use well-established paradigms such as the Stop Signal Task (SST) for response inhibition, Monetary Incentive Delay (MID) for reward processing, and nBack for working memory.

- Design: Include two runs per task within the same session to assess within-session reliability.

- Duration: Runs of approximately 5 minutes match the ABCD design; for new studies focused on individual differences, consider longer runs.

Reliability Analysis:

- Model: Use linear mixed-effects (LME) models to estimate reliability, controlling for confounds such as scanner site and family structure.

- Metric: Calculate reliability as the ratio of stable, non-scanner-related variance to total variance.

- Motion Quantification: Include rigorous motion quantification as a covariate, as it is a major source of unreliable variance.
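A common way to quantify motion for this covariate is framewise displacement (FD): the sum of the absolute volume-to-volume changes in the six rigid-body realignment parameters, with rotations converted to millimeters of arc on a sphere of conventional 50 mm radius. The sketch below is a minimal implementation under stated assumptions: each row holds three translations in mm followed by three rotations in radians (check your realignment tool's column order and units), and the 0.5 mm threshold is illustrative rather than a recommendation from [1].

```python
def framewise_displacement(params, radius_mm=50.0):
    """Framewise displacement per volume.

    params: list of 6-tuples per volume, assumed ordered as (dx, dy, dz) translations
            in mm followed by (rx, ry, rz) rotations in radians.
    radius_mm: sphere radius used to convert rotations to arc length.
    Returns a list of FD values in mm; the first volume has FD = 0 by definition.
    """
    fd = [0.0]
    for prev, cur in zip(params, params[1:]):
        trans = sum(abs(c - p) for p, c in zip(prev[:3], cur[:3]))
        rot = sum(abs(c - p) * radius_mm for p, c in zip(prev[3:], cur[3:]))
        fd.append(trans + rot)
    return fd


def summarize_motion(fd, threshold_mm=0.5):
    """Mean FD and fraction of volumes above an (assumed) censoring threshold."""
    mean_fd = sum(fd) / len(fd)
    frac_high = sum(v > threshold_mm for v in fd) / len(fd)
    return mean_fd, frac_high


# Hypothetical realignment parameters for four volumes.
rp = [
    (0.00, 0.00, 0.00, 0.000, 0.000, 0.000),
    (0.10, 0.00, 0.05, 0.001, 0.000, 0.000),
    (0.10, 0.02, 0.05, 0.001, 0.000, 0.000),
    (0.60, 0.02, 0.05, 0.010, 0.000, 0.000),
]
fd = framewise_displacement(rp)
print([round(v, 3) for v in fd])
print(summarize_motion(fd))
```

Per-participant mean FD, or the fraction of high-motion volumes, can then enter the LME model as the motion covariate.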

Denoising Pipeline:

- Apply advanced denoising techniques, such as multirun spatial ICA followed by FIX cleaning, to reduce the impact of motion and other artifacts.

Protocol: Developing a Reliable Multivariate Biomarker

This protocol is derived from commentaries and studies on multivariate neuromarkers [2] [5].

Feature Extraction: Do not rely on average activity in pre-defined ROIs. Instead, extract activation estimates from a whole-brain map or a large set of brain regions.

Machine Learning Training:

- Algorithm: Use sparse machine learning algorithms (e.g., ensemble sparse classifiers) or kernel ridge regression.

- Validation: Employ nested cross-validation to avoid overfitting. The outer loop assesses generalizability, while the inner loop optimizes model hyperparameters.

- Feature Selection: The algorithm should weight and select features (connections or voxels) that are most stable and diagnostic, effectively suppressing unreliable variance [5].
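The nested cross-validation described in the Validation step can be sketched with a deliberately tiny model. Here a single-feature ridge regression stands in for the kernel ridge regression or ensemble sparse classifiers named above (an assumption made to keep the example dependency-free). The inner loop picks the penalty `lam` using only the outer-training data, and the outer loop scores the tuned model on a fold that played no part in tuning. The simulated data, fold counts, and penalty grid are hypothetical.

```python
import random


def fit_ridge_1d(xs, ys, lam):
    """Ridge fit for one feature: penalized slope on centered data, plus intercept."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / (sxx + lam)  # lam = 0 gives ordinary least squares
    return slope, my - slope * mx


def mse(model, xs, ys):
    slope, intercept = model
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys)) / len(xs)


def k_folds(n, k):
    """Split indices 0..n-1 into k contiguous folds (shuffle the data beforehand)."""
    folds, start = [], 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def split(xs, ys, held_out):
    """Return (train_x, train_y, test_x, test_y) for a list of held-out indices."""
    held = set(held_out)
    train = [i for i in range(len(xs)) if i not in held]
    return ([xs[i] for i in train], [ys[i] for i in train],
            [xs[i] for i in held_out], [ys[i] for i in held_out])


def inner_cv_error(xs, ys, lam, k):
    """Mean validation MSE of one penalty value across the inner folds."""
    errors = []
    for val_idx in k_folds(len(xs), k):
        x_fit, y_fit, x_val, y_val = split(xs, ys, val_idx)
        errors.append(mse(fit_ridge_1d(x_fit, y_fit, lam), x_val, y_val))
    return sum(errors) / len(errors)


def nested_cv(xs, ys, lambdas, outer_k=5, inner_k=4):
    """Return (outer-fold test MSEs, penalty chosen in each outer fold)."""
    scores, chosen = [], []
    for test_idx in k_folds(len(xs), outer_k):
        x_tr, y_tr, x_te, y_te = split(xs, ys, test_idx)
        # Inner loop: tune the penalty on the outer-training data only.
        best = min(lambdas, key=lambda lam: inner_cv_error(x_tr, y_tr, lam, inner_k))
        chosen.append(best)
        # Outer loop: refit with the chosen penalty, score on the untouched fold.
        scores.append(mse(fit_ridge_1d(x_tr, y_tr, best), x_te, y_te))
    return scores, chosen


rng = random.Random(0)
xs = [rng.gauss(0, 1) for _ in range(60)]
ys = [0.5 * x + rng.gauss(0, 1) for x in xs]  # simulated feature-outcome relationship
scores, chosen = nested_cv(xs, ys, lambdas=[0.0, 1.0, 10.0, 100.0])
print("outer-fold MSE:", [round(s, 2) for s in scores])
print("penalty chosen per fold:", chosen)
```

For real analyses, scikit-learn supports the same pattern by passing a `GridSearchCV` estimator (inner loop) to `cross_val_score` (outer loop). Good outer-fold accuracy still says nothing about test-retest stability, which is why the protocol asks separately for the reliability of the biomarker score across sessions.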

Reliability Assessment:

- Calculate test-retest reliability (e.g., using intraclass correlation) of the resulting continuous biomarker score across separate sessions in a hold-out sample, not just its classification accuracy.

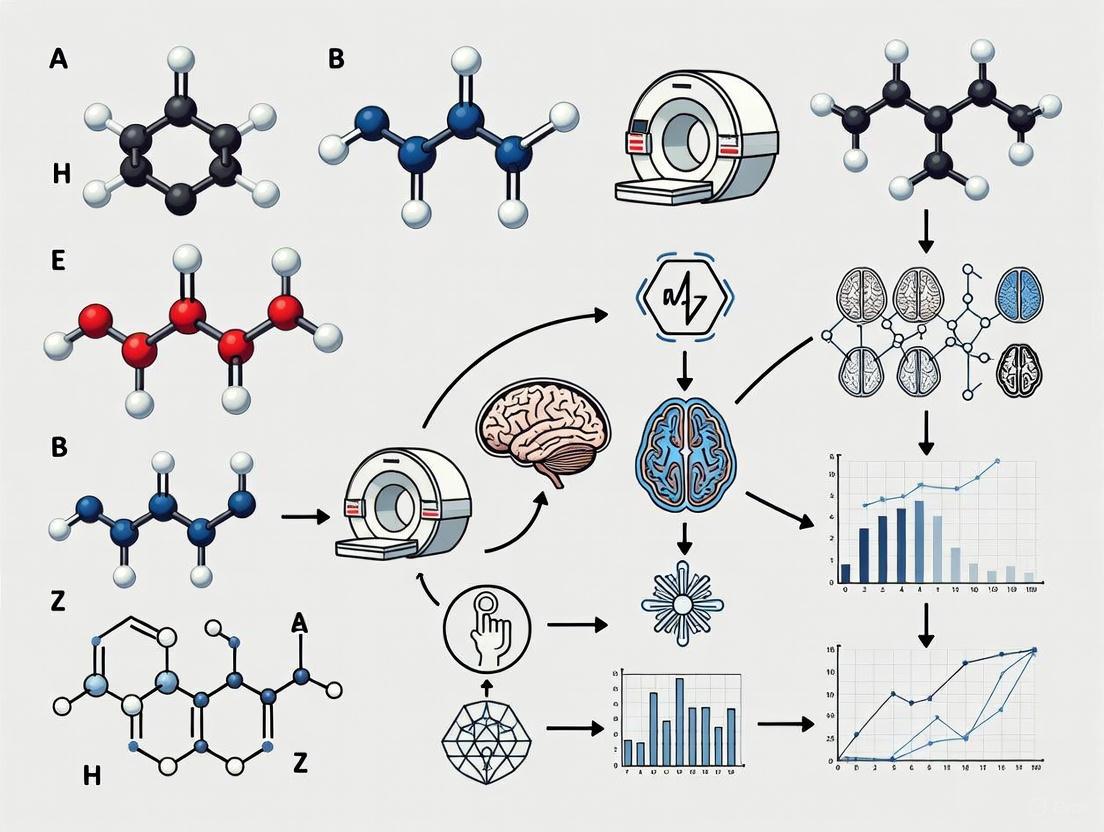

Workflow and Pathway Diagrams

The Scientist's Toolkit

Table 3: Essential Resources for fMRI Reliability Research

| Tool / Resource | Function | Example / Note |
|---|---|---|
| Linear Mixed-Effects (LME) Models | Statistical analysis that partitions variance and estimates reliability while controlling for confounds (site, family). | Preferred over simple ICC for complex, multi-site datasets like ABCD [1]. |
| Multivariate Machine Learning | Creates reliable biomarkers by integrating signals across many brain voxels or connections. | Kernel Ridge Regression, Ensemble Sparse Classifiers [2] [5]. |
| Advanced Denoising Pipelines | Removes non-neural noise from fMRI data to improve signal quality. | Multirun Spatial ICA combined with FIX [1]. |
| Traveling Subjects Dataset | A dataset where the same subjects are scanned across multiple sites and scanners. | Critical for quantifying and correcting for scanner-related variance in multicenter studies [5]. |
| Optimal Scan Time Calculator | A tool to help design cost-effective studies by balancing sample size and scan duration. | Available online based on findings from [3]. |

Troubleshooting Guide: Common fMRI Reliability Challenges

FAQ 1: How reliable are common task-fMRI and functional connectivity measures?

The Problem: Many researchers assume that widely used fMRI measures possess inherent reliability suitable for individual-differences research or clinical biomarker development. However, quantitative synthesis of the evidence reveals significant reliability challenges.

The Evidence: Meta-analytic evidence demonstrates that both task-fMRI and resting-state functional connectivity measures show concerningly low test-retest reliability in their current implementations:

Table: Meta-Analytic Evidence of fMRI Reliability

| fMRI Measure Type | Pooled Mean Reliability (ICC) | Quality of Reliability | Sample Size | Citation |
|---|---|---|---|---|
| Common Task-fMRI Measures | ICC = 0.397 | Poor | 90 experiments (N=1,008) | [7] |
| Resting-State Functional Connectivity (Edge-Level) | ICC = 0.29 | Poor | 25 studies | [8] |
| HCP Task-fMRI (11 common tasks) | ICC = 0.067 - 0.485 | Poor to fair | N=45 | [7] |

ICC = Intraclass Correlation Coefficient. ICC quality thresholds: <0.4 = Poor; 0.4-0.59 = Fair; 0.6-0.74 = Good; ≥0.75 = Excellent.

The Solution: Recognize that standard fMRI measures were primarily designed to detect robust within-subject effects and group-level averages, not to precisely measure individual differences [9]. When planning studies focused on individual variability, explicitly select and optimize paradigms for reliability rather than simply adopting traditional cognitive neuroscience tasks.

FAQ 2: What factors most significantly impact fMRI reliability, and how can I control for them?

The Problem: Uncontrolled methodological and physiological variables introduce noise, reducing the signal-to-noise ratio of reliable individual differences in the BOLD signal.

The Evidence: Research has identified several key factors that systematically influence reliability estimates:

Table: Factors Influencing fMRI Reliability and Practical Recommendations

| Factor Category | Impact on Reliability | Evidence-Based Recommendation |
|---|---|---|
| Data Quantity | Reliability increases with more data per subject [9]. | Implement extended aggregation: acquire more trials, more scans, or longer resting-state scans per participant. |
| Functional Networks | Reliability varies across brain systems [8]. | Focus analyses on networks with higher inherent reliability (e.g., Frontoparietal, Default Mode) or account for network-specific reliability in models. |
| Subject State | Eyes-open, awake, active recordings show higher reliability than resting states [8]. | Standardize and monitor participant state (e.g., alertness) during scanning. |
| Test-Retest Interval | Shorter intervals generally yield higher reliability [8]. | Minimize time between repeated scans for reliability assessments. |
| Physiological Confounds | The BOLD signal is influenced by vascular physiology, not just neural activity [10]. | Measure and control for heart rate, blood pressure, respiration, and caffeine intake. Consider multi-echo fMRI to better isolate BOLD from noise. |
| Preprocessing & Analysis | Specific pipelines can introduce bias or spurious correlations [11]. | Use validated pipelines (e.g., with surrogate data methods). For connectivity, consider full correlation-based measures with shrinkage. |

The Solution: Adopt a "precision fMRI" (pfMRI) framework that prioritizes data quality and quantity per individual. Proactively control for known sources of physiological and methodological variance through experimental design and advanced processing techniques [9] [12].

Diagram: An Integrated Workflow for Improving fMRI Reliability. The red path highlights a sample protocol prioritizing reliability at each stage.

FAQ 3: Can I use fMRI for clinical trials or drug development given these reliability concerns?

The Problem: The pharmaceutical industry seeks objective biomarkers for CNS drug development, but the unreliable nature of many fMRI measures hinders their regulatory acceptance and clinical utility.

The Evidence:

- Regulatory agencies like the FDA and EMA require demonstrated precision, reproducibility, and a clear context of use for any biomarker in drug development [13].

- While fMRI has potential roles in demonstrating target engagement, dose-response relationships, and disease modification, no fMRI biomarker has yet been fully qualified for regulatory decision-making [13] [14].

- The fundamental limitation is that poor test-retest reliability establishes a low upper limit for a measure's predictive validity [8] [9].

The Solution:

- For trials using fMRI, invest in extensive site standardization and operator training to minimize technical variability.

- Focus on fMRI paradigms that show modifiable and reproducible readouts in the context of the specific drug's mechanism.

- Collect data supporting the biomarker's validity within a specific context of use (e.g., patient stratification, pharmacodynamic response) [13].

The Scientist's Toolkit: Essential Reagents & Materials

Table: Key Methodological Solutions for Reliable fMRI

| Tool Category | Specific Example | Primary Function | Key Benefit |
|---|---|---|---|
| Acquisition Hardware | Ultra-High Field Scanners (7T+) | Increases BOLD contrast-to-noise ratio and spatial resolution [12]. | Enables single-subject mapping of fine-scale functional architecture. |
| Pulse Sequences | Multi-Echo fMRI | Acquires data at multiple echo times, allowing for better separation of BOLD signal from noise [9]. | Improves sensitivity and specificity through advanced denoising. |
| Processing Algorithms | Multi-Echo Independent Component Analysis (ME-ICA) | Identifies and removes non-BOLD noise components from fMRI data [9]. | Significantly enhances functional connectivity reliability. |
| Analysis Framework | Reliability Modeling | Statistically models known sources of measurement error to derive more reliable latent variables [9]. | Increases validity of individual differences measurements. |
| Experimental Design | Naturalistic Paradigms (e.g., movie-watching) | Presents dynamic, engaging stimuli that modulate brain states more consistently than rest [15]. | Can yield more robust and reliable individual difference measures. |
| Data Aggregation | Precision fMRI (pfMRI) Protocols | Involves collecting large amounts of data per individual (e.g., >100 mins) [9] [12]. | Allows random noise to average out, revealing stable individual patterns. |

Interpreting Intraclass Correlation Coefficients (ICCs) in Neuroimaging Contexts

Frequently Asked Questions

What does the ICC quantify in fMRI research? The Intraclass Correlation Coefficient (ICC) quantifies test-retest reliability by measuring the proportion of total variance in fMRI data that can be attributed to stable differences between individuals [16]. As a variance ratio it is dimensionless and bounded between 0 and 1 [17], although sample estimates computed from ANOVA mean squares can fall below 0 when within-subject variance exceeds between-subject variance.
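In its simplest (one-way) form, that definition is the ratio below, where the between term is the variance of participants' stable scores and the within term is the variance of repeated measurements around each participant's stable score. For example, a between-participant variance of 1 and a within-participant variance of 3 give an ICC of 0.25, which falls in the poor range. Absolute-agreement two-way ICCs additionally place systematic session variance in the denominator.

```latex
\mathrm{ICC} = \frac{\sigma^2_{\mathrm{between}}}{\sigma^2_{\mathrm{between}} + \sigma^2_{\mathrm{within}}}
\qquad \text{e.g.} \quad \frac{1}{1 + 3} = 0.25
```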

Why is my voxel-wise or edge-level ICC considered 'poor'? Meta-analyses have demonstrated that univariate fMRI measures typically exhibit low test-retest reliability at the voxel or individual-edge level. The pooled mean ICC for functional connectivity edges is 0.29, classified as 'poor' [6]. Similarly, task-based activation often shows poor reliability [16].

My data shows a strong group-level effect, but poor ICC. Is this possible? Yes. A strong group-level effect (high mean activation) indicates a consistent response across participants but does not guarantee that the measure can reliably differentiate between individuals, which is what ICC assesses [17].
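A small simulation illustrates the point. The sketch below assumes every participant has nearly the same true activation (mean 1.0, between-subject SD 0.1) measured with larger session noise (SD 0.5); all three values are illustrative assumptions. It uses the Pearson correlation between sessions as a simple stand-in for an ICC.

```python
import math
import random
from statistics import mean, stdev


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))


def one_sample_t(values):
    """t statistic for the group mean against zero."""
    return mean(values) / (stdev(values) / math.sqrt(len(values)))


rng = random.Random(42)
n = 200
group_mean, between_sd, noise_sd = 1.0, 0.1, 0.5  # illustrative assumptions
true_scores = [rng.gauss(group_mean, between_sd) for _ in range(n)]
session1 = [t + rng.gauss(0, noise_sd) for t in true_scores]
session2 = [t + rng.gauss(0, noise_sd) for t in true_scores]

print(f"group t (session 1):  {one_sample_t(session1):.1f}")
print(f"test-retest r:        {pearson(session1, session2):.2f}")
print(f"expected reliability: {between_sd**2 / (between_sd**2 + noise_sd**2):.2f}")
```

The one-sample t statistic is large because nearly every participant activates, yet the test-retest correlation stays low (its expected value is 0.01 / (0.01 + 0.25), about 0.04), because almost none of the observed variance reflects stable differences between people.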

How can I improve the ICC reliability of my fMRI measure? You can improve reliability by using shorter test-retest intervals, acquiring more data per subject, analyzing stronger and more robust BOLD signals (e.g., within-network cortical edges), and choosing appropriate analytical approaches such as using beta coefficients instead of contrast scores [16] [18] [6].

Should I use a 'consistency' or 'agreement' ICC model? This choice depends on your research question [19]. Use an absolute-agreement ICC (e.g., ICC(2,1)) when systematic differences between sessions, such as a shift in the mean from session 1 to session 2, should count as measurement error. Use a consistency ICC (e.g., ICC(3,1)) when you care only whether the relative ranking of subjects is preserved across sessions, so a uniform shift in the mean is ignored [16] [19].
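The difference between the two forms is easiest to see in the two-way ANOVA formulas of Shrout and Fleiss, implemented below for a complete subjects-by-sessions table. Both forms share the between-subject mean square and the residual mean square; ICC(2,1) additionally penalizes the between-session mean square. This is a minimal sketch without missing-data handling or confidence intervals.

```python
def icc_two_way(data):
    """ICC(2,1) and ICC(3,1) for an n-subjects x k-sessions table.

    data: list of rows, one per subject, each with k session values (no missing data).
    Returns (icc_2_1_absolute_agreement, icc_3_1_consistency).
    """
    n, k = len(data), len(data[0])
    if n < 2 or k < 2 or any(len(row) != k for row in data):
        raise ValueError("need a complete table with >= 2 subjects and >= 2 sessions")
    grand = sum(map(sum, data)) / (n * k)
    row_means = [sum(row) / k for row in data]
    col_means = [sum(row[j] for row in data) / n for j in range(k)]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between sessions
    ss_err = ss_total - ss_rows - ss_cols                    # residual

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))

    icc3 = (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err)
    icc2 = (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n)
    return icc2, icc3


# Session 2 equals session 1 plus a constant: ranks are preserved, absolute values are not.
scores = [[1.0, 2.0], [2.0, 3.0], [3.0, 4.0], [4.0, 5.0]]
icc2, icc3 = icc_two_way(scores)
print(f"ICC(2,1) = {icc2:.3f}, ICC(3,1) = {icc3:.3f}")
```

Because session 2 is session 1 shifted by a constant, the consistency form returns 1.0 while the absolute-agreement form drops to 10/13, about 0.77. Established implementations, such as `intraclass_corr` in the pingouin Python package, also report confidence intervals.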

Is reliability (ICC) the same as validity? No. Reliability places an upper bound on validity, but the two are distinct concepts [16]. A measure can be reliable but not valid (e.g., a thermometer that always reads 5°C too high gives reproducible readings that are consistently wrong). The ultimate goal is a measure that is both reliable and valid [16] [17].

Troubleshooting Low ICC Values

This guide helps you diagnose and address common causes of low reliability in fMRI studies.

| Troubleshooting Step | Description | Key References |
|---|---|---|
| 1. Inspect Your Measure | For task-based fMRI, calculate ICC using beta coefficients from your GLM, not difference scores (contrasts). A contrast subtracts two correlated conditions, which removes much of their shared between-subject variance while keeping the measurement error of both, so its ICC is lower [18]. | [18] |
| 2. Optimize Study Design | Use shorter test-retest intervals (e.g., days or weeks instead of months). Ensure participants are in similar states (e.g., both eyes-open awake). Collect more data per subject (longer scans/more runs) [16] [6]. | [16] [6] |
| 3. Enhance Signal Quality | Focus analyses on brain regions with stronger, more reliable signals (e.g., cortical regions vs. subcortical). Prioritize within-network connections over between-network connections for connectivity studies [18] [6]. | [18] [6] |
| 4. Check ICC Model Selection | Ensure you are using an ICC model that correctly accounts for the facets (sources of error) in your design. For most test-retest studies with sessions considered a random sample, ICC(2,1) is a robust starting point [16] [19]. | [16] [19] |
| 5. Consider Multivariate Methods | Univariate measures (single voxels/edges) are often unreliable. Explore multivariate approaches like brain-based predictive models or network-level analyses (e.g., ICA), which can aggregate signal and improve both reliability and validity [16]. | [16] |
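Step 1 follows from classical test theory: a difference score inherits measurement error from both conditions while subtracting away the variance they share. The sketch below implements the standard formula for the reliability of D = X - Y, assuming the errors of X and Y are uncorrelated. The input values are illustrative assumptions, not figures from [18].

```python
def difference_score_reliability(rel_x, rel_y, r_xy, sd_x=1.0, sd_y=1.0):
    """Reliability of D = X - Y from the reliabilities of X and Y, their observed
    correlation r_xy, and their standard deviations (classical test theory,
    assuming the measurement errors of X and Y are uncorrelated)."""
    true_var = rel_x * sd_x**2 + rel_y * sd_y**2 - 2 * r_xy * sd_x * sd_y
    total_var = sd_x**2 + sd_y**2 - 2 * r_xy * sd_x * sd_y
    if total_var <= 0:
        raise ValueError("X and Y are perfectly correlated; D has no variance")
    return true_var / total_var


# Two condition betas, each with reliability 0.7, that correlate 0.6 across subjects.
print(round(difference_score_reliability(0.7, 0.7, 0.6), 2))
```

With these assumed inputs, two moderately reliable betas produce a contrast with a reliability of only 0.25; if the conditions were uncorrelated, the contrast would keep the 0.7 reliability of its components.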

Quantitative Data and Methodologies

| fMRI Measure | Pooled Mean ICC | Reliability Classification | Key Context |
|---|---|---|---|
| Functional Connectivity (Edge-Level) | 0.29 (95% CI: 0.23 - 0.36) | Poor | Based on a meta-analysis of 25 studies [6]. |
| Task-Based Activation | Low (varies) | Poor | Multiple converging reports and a meta-analysis confirm generally poor reliability for univariate measures [16]. |
| Structural MRI | Relatively High | Fair/Good | Structural measures are generally more reliable than functional measures [16]. |

ICC Interpretation Guidelines

| ICC Range | Conventional Interpretation | Implication for fMRI |
|---|---|---|
| < 0.40 | Poor | Limited utility for discriminating between individuals at a single measurement level [16] [18]. |
| 0.40 - 0.59 | Fair | May be acceptable for group-level inferences, but caution is advised for individual-level applications [18]. |
| 0.60 - 0.74 | Good | Suitable for many research contexts, including some individual differences studies [16]. |
| ≥ 0.75 | Excellent | Indicates a measure stable enough for clinical applications or tracking individual changes over time [16]. |

Experimental Factors Influencing ICC

The following table summarizes factors that have been empirically shown to influence the test-retest reliability of fMRI measures [16] [6].

| Factor | Effect on Reliability | Practical Recommendation |
|---|---|---|
| Test-Retest Interval | Shorter intervals generally yield higher ICC. | Minimize the time between scanning sessions where possible [16] [6]. |
| Paradigm/State | Active tasks often show higher reliability than resting state. "Eyes open" rest is more reliable than "eyes closed" [6]. | Prefer active tasks for individual differences research. Standardize the awake state during rest [16] [6]. |
| Data Quantity | More within-subject data (longer scan times, more runs) improves reliability. | Acquire as much data as feasibly possible per subject [6]. |
| Signal Strength | Stronger BOLD responses and higher magnitude functional connections are more reliable. | Focus on robust, well-characterized signals and networks [18] [6]. |
| Analytical Choice | Using beta coefficients is more reliable than using contrast scores. Multivariate patterns can be more reliable than univariate ones [18]. | Use beta coefficients for task reliability; explore multivariate methods [16] [18]. |
| Brain Region | Cortical regions and within-network connections are typically more reliable than subcortical regions and between-network connections [18] [6]. | Be aware of regional limitations; cortical signals are generally more reliable [18]. |

The Scientist's Toolkit

Key Research Reagents and Computational Solutions

| Item Name | Function/Brief Explanation |
|---|---|
| ICC Analysis Toolbox | A MATLAB toolbox for calculating various reliability metrics, including different ICC models, Kendall's W, RMSD, and Dice coefficients [20]. |
| ISC Toolbox | A toolbox for performing Inter-Subject Correlation analysis, which can be used to assess reliability in naturalistic fMRI paradigms [21]. |
| Beta Coefficients (β) | The raw parameter estimates from a first-level GLM, representing the strength of task-related activation. Preferred over contrast scores for reliability analysis [18]. |
| Intra-Class Effect Decomposition (ICED) | A structural equation modeling framework that decomposes reliability into multiple orthogonal error sources (e.g., session, day, site), providing a nuanced view of measurement error [17]. |
| Independent Component Analysis (ICA) | A data-driven multivariate method to identify coherent functional networks. Network-level measures derived from ICA can show higher reliability than voxel-wise analyses [18]. |

Workflow for a Reliability-Focused fMRI Analysis

The following diagram outlines a recommended workflow for designing and analyzing a test-retest fMRI study with reliability in mind.

Logical Relationship Between Variance, Reliability, and Validity

This diagram illustrates the core statistical concepts behind the ICC and its relationship to validity, helping to clarify common points of confusion.

FAQs: Core Concepts and Definitions

Q1: What is the practical difference between test-retest and between-site reliability?

- Test-Retest Reliability assesses the consistency of measurements when the same individual is scanned repeatedly on the same scanner under similar conditions. It confirms that a protocol can produce stable results for an individual over time [22] [23].

- Between-Site Reliability assesses the consistency of measurements across different scanners and locations. It is essential for determining if data from multiple sites in a multicenter study can be meaningfully merged [22] [24]. High between-site reliability is often harder to achieve than test-retest reliability due to additional variance introduced by different hardware and software [22].

Q2: Why is multisession data acquisition often recommended?

Employing multiple scanning sessions or runs substantially improves reliability. This follows the logic of the Spearman-Brown prediction formula in classical test theory, under which the reliability of an average rises as more parallel measurements are added [22]. Averaging across runs cancels part of the random noise, yielding more stable and reproducible estimates of the brain's signal [22] [25].
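The Spearman-Brown formula predicts the reliability of the average of k parallel runs from the reliability rho of a single run, rho_k = k * rho / (1 + (k - 1) * rho), and can be inverted to estimate how much data a target reliability requires. It assumes runs are parallel measurements with independent errors, which fMRI runs only approximate (habituation and scanner drift violate it), so treat the outputs as optimistic planning figures. The single-run ICC of 0.4 below is an illustrative assumption.

```python
import math


def spearman_brown(rho_single, k):
    """Predicted reliability of the mean of k parallel measurements."""
    return k * rho_single / (1 + (k - 1) * rho_single)


def runs_needed(rho_single, rho_target):
    """Smallest whole number of parallel runs predicted to reach rho_target."""
    if not (0 < rho_single < 1 and 0 < rho_target < 1):
        raise ValueError("reliabilities must lie strictly between 0 and 1")
    k = rho_target * (1 - rho_single) / (rho_single * (1 - rho_target))
    return max(1, math.ceil(k - 1e-9))  # small tolerance for floating-point round-off


# Illustrative: one run with ICC = 0.4 (an assumed value).
for k in (1, 2, 4, 6):
    print(k, "runs ->", round(spearman_brown(0.4, k), 2))
print("runs for ICC >= 0.75:", runs_needed(0.4, 0.75))
```

Under these assumptions, two runs raise the predicted reliability from 0.40 to about 0.57, and five runs are needed to cross 0.75, which illustrates the diminishing returns behind the asymptotic gains described for longer scans.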

Q3: How does the choice of fMRI metric influence reliability?

The type of measure extracted from the fMRI data is a critical factor. Studies have found that:

- Percent Signal Change (PSC) often shows higher reliability than measures like contrast-to-noise ratio (CNR), which have an estimate of noise in the denominator [22].

- Multivariate patterns derived from machine learning can exhibit good-to-excellent reliability, whereas the average activation in a single brain region is often much less reliable [2].

- Median values from a Region of Interest (ROI) are typically more reliable than maximum values, as the latter can be unduly influenced by outliers [22].
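To see why a noise estimate in the denominator matters, the sketch below computes both metrics for one ROI time course in a block design. It uses one common pair of definitions, assumed here rather than taken from [22]: PSC = 100 × (mean task signal - mean baseline signal) / mean baseline signal, and CNR = (mean task signal - mean baseline signal) / SD of the baseline. Because the SD is itself estimated from noisy data, CNR fluctuates across sessions even when the response amplitude does not. The time course is hypothetical.

```python
from statistics import mean, stdev


def psc_and_cnr(signal, is_task):
    """Percent signal change and contrast-to-noise ratio for a block design.

    signal:  ROI time course (arbitrary scanner units)
    is_task: booleans marking task volumes; all other volumes are baseline
    """
    task = [s for s, t in zip(signal, is_task) if t]
    base = [s for s, t in zip(signal, is_task) if not t]
    diff = mean(task) - mean(base)
    psc = 100.0 * diff / mean(base)  # scaled by the baseline level
    cnr = diff / stdev(base)         # scaled by an estimated noise SD
    return psc, cnr


# Hypothetical block design: 4 baseline volumes followed by 4 task volumes.
ts = [1000, 1004, 996, 1000, 1010, 1014, 1006, 1010]
blocks = [False] * 4 + [True] * 4
print(psc_and_cnr(ts, blocks))
```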

Troubleshooting Guides

Guide 1: Addressing Poor Between-Site Reliability

Problem: Data collected from different MRI scanners are inconsistent, preventing effective data pooling in a multicenter study.

Solutions:

- Increase ROI Size: Using larger, functionally-derived Regions of Interest (ROIs) can help compensate for anatomical and functional misalignment across sites, markedly improving between-site reliability [22].

- Adjust for Smoothness Differences: Differences in image reconstruction filters can lead to varying image smoothness between scanners. Statistically adjusting for these smoothness differences has been shown to increase reliability for certain metrics [22].

- Harmonize Acquisition Protocols: Use standardized sequences and parameters across all sites. Ensure all sites undergo rigorous quality assurance testing. A study using Siemens scanners across sites demonstrated that a harmonized protocol could yield excellent reliability for quantitative metrics like R1 and MTR [26].

Guide 2: Improving Test-Retest Reliability of Functional Connectivity

Problem: Resting-state functional connectivity (RSFC) measures show poor consistency across scanning sessions.

Solutions:

- Increase Scan Duration: Reliability asymptotically increases with more data. Aim for longer scan durations (e.g., 10-15 minutes or more) per session to substantially improve reliability [23] [27] [25].

- Focus on Stronger Connections and Specific Networks: Reliability is positively associated with connection strength. Analyses focused on established, stronger connections within known brain subnetworks (e.g., the default-mode network) are generally more reliable than analyses of the whole brain or weak connections [28] [23].

- Control for Head Motion: Head motion is a major source of artefactual variance. Implement strict motion control and correction protocols during both data acquisition and preprocessing [29] [25].

- Consider Task-Based Paradigms: For brain networks engaged by a specific task, using that task during the fMRI scan (instead of rest) can enhance FC reliability within those specific networks by increasing the signal variability related to the function of interest [25].

Guide 3: Optimizing Acquisition Sequences for Reliability

Problem: An advanced, high-resolution multiband fMRI sequence is yielding low signal-to-noise ratio (SNR) and unreliable results.

Solutions:

- Prioritize SNR over Extreme Resolution: Small voxel sizes (e.g., 2 mm isotropic) drastically reduce SNR. For standard volumetric analyses that include spatial smoothing, a slightly larger voxel size (e.g., 2.5-3 mm) can provide a better SNR/reliability balance, especially if total scan time per subject is limited [30].

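- Optimize Repetition Time (TR): Very short TRs (e.g., < 1 second) leave less time for longitudinal magnetization to recover between excitations, which lowers the signal, and therefore the SNR, of each volume. A TR in the range of 1-1.5 seconds is often a good compromise for conventional BOLD fMRI studies [30].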

- Use Multiband Acceleration Judiciously: High multiband acceleration factors can introduce artefacts and signal dropout in medial temporal and subcortical regions. Use the minimum acceleration factor necessary to achieve your target TR and coverage to mitigate these issues [30].
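The voxel-size recommendation above can be made concrete with a back-of-the-envelope calculation. Assuming image SNR is dominated by thermal noise and therefore scales roughly linearly with voxel volume (physiological noise makes the real gain in temporal SNR smaller), moving from 2 mm to 2.5 mm or 3 mm isotropic voxels gives approximately:

```latex
\frac{\mathrm{SNR}_{2.5\,\mathrm{mm}}}{\mathrm{SNR}_{2\,\mathrm{mm}}} \approx \left(\frac{2.5}{2}\right)^{3} = \frac{15.625}{8} \approx 1.95,
\qquad
\frac{\mathrm{SNR}_{3\,\mathrm{mm}}}{\mathrm{SNR}_{2\,\mathrm{mm}}} \approx \left(\frac{3}{2}\right)^{3} = \frac{27}{8} \approx 3.4
```

Under the same thermal-noise assumption, averaging n independent small voxels raises SNR only by about the square root of n, which is one reason smoothing small voxels does not fully recover the loss.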

The following table summarizes reliability findings for various fMRI metrics and conditions from the cited literature.

Table 1: Reliability Benchmarks for Different fMRI Metrics

| Modality / Metric | Reliability Type | ICC / Correlation Value | Interpretation & Context |
|---|---|---|---|
| Task-fMRI (Sensorimotor) | Between-Site (Initial) | Low ICC | Strong site and site-by-subject variance [22] |
| Task-fMRI (Sensorimotor) | Between-Site (Optimized) | Increased by 123% | After ROI size increase, smoothness adjustment, and more runs [22] |
| Quantitative R1, MTR, DWI | Between-Site | ICC > 0.9 | Excellent reliability with a harmonized multisite protocol [26] |
| Resting-State FC | Between-Site | ICC ~ 0.4 | Fair reliability [26] |
| Structural Connectivity (SC) | Test-Retest | CoV: 2.7% | Highest reproducibility among connectivity estimates [28] |
| Functional Connectivity (FC) | Test-Retest | CoV: 5.1% | Lower reproducibility than structural connectivity [28] |
| Edge-Level RSFC (Aphasia) | Test-Retest | Median ICC in the fair range | With 10-12 min scans; better in subnetworks than whole brain [23] |
| ABCD Task-fMRI | Test-Retest | Avg: 0.088 | Poor reliability in children; motion had a pronounced negative effect [29] |
| Multivariate fMRI Biomarkers | Test-Retest | r = 0.73 - 0.82 | Good-to-excellent same-day reliability [2] |
| Brain-Network Temporal Variability | Test-Retest | ICC > 0.4 | At least fair reliability with optimized window parameters [27] |

Abbreviations: ICC (Intraclass Correlation Coefficient); CoV (Coefficient of Variation); R1 (Longitudinal Relaxation Rate); MTR (Magnetization Transfer Ratio); DWI (Diffusion-Weighted Imaging); FC (Functional Connectivity); RSFC (Resting-State Functional Connectivity).

Multicenter Reliability Study (fBIRN Phase 1) [22] [24]

- Objective: To estimate test-retest and between-site reliability of fMRI assessments.

- Subjects: Five healthy males scanned at 10 different MRI scanners on two separate occasions.

- Task: A simple block-design sensorimotor task.

- Analysis: FIR deconvolution with FMRISTAT to derive impulse response functions. Six functionally-derived ROIs covering visual, auditory, and motor cortices were used.

- Key Dependent Variables: Percent signal change (PSC) and contrast-to-noise ratio (CNR).

- Reliability Assessment: Intraclass correlation coefficients (ICCs) derived from a variance components analysis.
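The following is a minimal sketch of how variance components translate into reliability. It assumes a crossed subjects × sites × sessions random-effects model. The expressions are generic generalizability-theory ratios, not necessarily the exact definitions used in [22], and the variance values in the example are hypothetical. The point it illustrates is that site-related variance lowers between-site reliability without affecting within-site test-retest reliability.

```python
def variance_component_iccs(subject, site, subject_by_site, residual):
    """Reliability ratios from variance components (generic sketch).

    within_site:  stable subject differences at a given site (subject plus
                  subject-by-site variance) relative to session-to-session noise.
    between_site: absolute agreement across sites, so the site main effect and
                  the subject-by-site interaction are both counted as error.
    """
    within_site = (subject + subject_by_site) / (subject + subject_by_site + residual)
    between_site = subject / (subject + site + subject_by_site + residual)
    return within_site, between_site

# Hypothetical variance components (arbitrary units)
print(variance_component_iccs(subject=1.0, site=0.5, subject_by_site=0.5, residual=1.0))
# within-site 0.6, between-site about 0.33
```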

Visual Workflow: Pathway to Reliable fMRI

Diagram: Pathway to Reliable fMRI

The Scientist's Toolkit

Table 2: Essential Reagents and Solutions for fMRI Reliability Research

| Tool / Solution | Primary Function | Application Note |
|---|---|---|
| Intraclass Correlation Coefficient (ICC) | Quantifies measurement agreement and consistency [22]. | Use ICC(2,1) for absolute agreement between sites/scanners when merging data is the goal [22] [26]. |
| Functionally-Defined ROIs | Spatially constrained regions for signal extraction. | Larger ROIs improve between-site reliability by mitigating misalignment [22]. |
| Multiband fMRI Sequences | Accelerates data acquisition [26] [30]. | Use judiciously; high acceleration factors can reduce SNR and cause signal dropout [30]. |
| Variance Components Analysis | Decomposes sources of variability in data [22]. | Critical for identifying whether variance stems from subject, site, session, or their interactions. |
| Temporal Signal-to-Noise Ratio (tSNR) | Measures quality of BOLD time series [25]. | A key factor influencing functional connectivity reliability; varies across the brain and with tasks [25]. |
| Harmonized Acquisition Protocol | Standardizes scanning parameters across sites [26]. | Foundation for any successful multicenter study to ensure data comparability [26]. |
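tSNR is usually computed voxel-wise as the temporal mean divided by the temporal standard deviation. The minimal sketch below assumes a motion-corrected (time × voxel) NumPy array. Whether to remove a linear trend before taking the SD varies between tools, so it is exposed here as an option. The example data are synthetic.

```python
import numpy as np

def tsnr(data, detrend=True):
    """Voxel-wise temporal SNR of a (time x voxel) array: temporal mean divided
    by temporal SD. With detrend=True a linear trend is removed before the SD is
    taken, so slow scanner drift is not counted as noise."""
    data = np.asarray(data, dtype=float)
    mean = data.mean(axis=0)
    if detrend:
        t = np.arange(data.shape[0], dtype=float)
        design = np.column_stack([np.ones_like(t), t])
        beta, *_ = np.linalg.lstsq(design, data, rcond=None)
        resid = data - design @ beta
    else:
        resid = data - mean
    sd = resid.std(axis=0, ddof=1)
    # Voxels with zero variance (e.g. outside the brain) get tSNR = 0
    return np.divide(mean, sd, out=np.zeros_like(mean), where=sd > 0)

# Synthetic voxels: baseline 100, unit noise, plus a slow linear drift
rng = np.random.default_rng(0)
t = np.arange(500)
voxels = 100 + rng.standard_normal((500, 3)) + 0.1 * t[:, None]
print(tsnr(voxels), tsnr(voxels, detrend=False))
```

In this example, the drift dominates the non-detrended SD and makes tSNR look far worse than the underlying noise level justifies.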

FAQs: Addressing Researcher Questions on fMRI Reliability

What does "test-retest reliability" mean in the context of fMRI? Test-retest reliability refers to the extent to which an fMRI measurement produces a similar value when repeated under similar conditions. It is a numerical representation of the consistency of your measurement tool. High reliability is crucial for drawing meaningful conclusions about individual differences and for the development of clinical biomarkers [25] [18].

My task-based fMRI results have poor reliability at the individual level. Is this normal? This is a common challenge, but it is not an insurmountable flaw of fMRI as a whole. Reports of poor individual-level reliability are often tied to specific analytical choices, such as using contrast scores (difference between two task conditions) instead of beta coefficients (a direct measure of functional activation from a single condition). Contrasts can have low between-subject variance and introduce error, leading to suppressed reliability estimates. Switching to beta coefficients has been shown to yield higher Intraclass Correlation Coefficient (ICC) values [18].

I use resting-state fMRI. Is it immune to reliability issues? No. While resting-state fMRI (rsfMRI) is a powerful tool, its reliability can be compromised by statistical artifacts. Standard preprocessing steps, particularly band-pass filtering (e.g., 0.009–0.08 Hz), can introduce biases that inflate correlation estimates and increase false positives. The reliability of functional connectivity measurements is also fundamentally constrained by factors like scan length and head motion [25] [11].
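One mechanism behind the false positives is easy to see in simulation. Band-pass filtering discards most frequency bins, so the filtered series have far fewer effective degrees of freedom. As a result, correlations between truly independent signals spread more widely around zero. The sketch below uses an idealized FFT filter as a simplified stand-in for the Butterworth-type filters in common pipelines. TR = 2 s and 300 volumes are arbitrary choices.

```python
import numpy as np

def fft_bandpass(x, tr, low=0.009, high=0.08):
    """Ideal (brick-wall) band-pass along the last axis via the FFT."""
    freqs = np.fft.rfftfreq(x.shape[-1], d=tr)
    spec = np.fft.rfft(x, axis=-1)
    spec[..., (freqs < low) | (freqs > high)] = 0.0
    return np.fft.irfft(spec, n=x.shape[-1], axis=-1)

def null_r_sd(filtered, n_pairs=2000, n_vols=300, tr=2.0, seed=0):
    """SD of Pearson r between pairs of independent white-noise series."""
    rng = np.random.default_rng(seed)
    a = rng.standard_normal((n_pairs, n_vols))
    b = rng.standard_normal((n_pairs, n_vols))
    if filtered:
        a, b = fft_bandpass(a, tr), fft_bandpass(b, tr)
    a -= a.mean(axis=1, keepdims=True)
    b -= b.mean(axis=1, keepdims=True)
    r = (a * b).sum(axis=1) / np.sqrt((a ** 2).sum(axis=1) * (b ** 2).sum(axis=1))
    return r.std()

print("unfiltered:", null_r_sd(False), "band-passed:", null_r_sd(True))
```

Without filtering, the spread sits near 1/sqrt(300). After filtering, fewer than a third of the frequency bins survive, and the spread grows accordingly. A significance test that still assumes 300 independent samples will therefore be too liberal.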

Can I improve the reliability of my existing dataset? Yes, a primary method is through extended data aggregation. The reliability of fMRI measures asymptotically increases with a greater number of timepoints acquired. If your dataset includes multiple task or rest runs, concatenating them can boost your reliability estimates [25] [15].
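The classical Spearman-Brown prophecy formula gives a rough idea of how aggregation pays off. It assumes the added data behave as parallel measurements. That is an idealization for fMRI, and empirical reliability curves may rise more slowly or plateau below the predicted value. The starting ICC of 0.4 for a 10-minute scan is hypothetical.

```python
def spearman_brown(reliability, k):
    """Predicted reliability after multiplying the amount of data by k,
    treating the added data as parallel measurements (classical test theory)."""
    return k * reliability / (1.0 + (k - 1.0) * reliability)

def data_factor_needed(reliability, target):
    """Inverse of spearman_brown: how many times more data reaches `target`."""
    return target * (1.0 - reliability) / (reliability * (1.0 - target))

# Hypothetical example: a 10-minute scan with ICC = 0.4
print(spearman_brown(0.4, 2))              # two concatenated 10-minute runs
print(10 * data_factor_needed(0.4, 0.7))   # minutes needed for ICC = 0.7
```

Under these assumptions, doubling the data lifts the ICC from 0.4 to about 0.57, and reaching 0.7 takes about 35 minutes. The diminishing gain per added minute is the asymptotic behavior described above.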

Is fMRI reliable enough for use in drug development? It has significant potential but faces challenges. For fMRI to be widely adopted in clinical trials, its readouts must be both reproducible and modifiable by pharmacological agents. While no fMRI biomarker has yet been fully qualified by regulatory agencies like the FDA or EMA, consortia are actively working toward this goal. Its value is highest for providing objective data on central nervous system (CNS) penetration, target engagement, and pharmacodynamic effects in early-phase trials [13] [31].

Troubleshooting Guides: Diagnosing and Solving Reliability Problems

Problem: Low ICC in Task-Based fMRI Analysis

Symptoms: Low Intraclass Correlation Coefficient (ICC) values (e.g., below 0.4, which is considered "poor") when assessing test-retest reliability for individual brain regions [18].

Diagnosis Checklist:

- Are you using contrast scores? Check your first-level model. Using a contrast of Condition A vs. Condition B is common but problematic for reliability.

- Is your scan length too short? Reliability increases with more data. A typical 10-minute scan is often insufficient for robust individual differences research [25] [15].

- Is the task engaging the regions you are analyzing? Reliability is not uniform across the brain; it is highest in regions strongly activated by the task [25] [18].

Solutions:

- Use Beta Coefficients: For reliability analysis, extract β coefficients from your first-level General Linear Model (GLM) for a single condition of interest, rather than a contrast. One study found this simple switch significantly increased ICC values [18].

- Adopt Multivariate Measures: Instead of relying on the average signal from a single region of interest (ROI), use multivariate pattern analysis or brain-state classifiers. These measures, optimized with machine learning, can show good-to-excellent reliability, even when single-region measures fail [2].

- Aggregate More Data: Collect more data per participant. If possible, design studies with longer scan times or multiple sessions. "Precision fMRI" studies may use 5+ hours of data per person to achieve high-fidelity individual brain maps [15].

Problem: Inconsistent Resting-State Functional Connectivity Results

Symptoms: High variation in functional connectivity matrices between sessions for the same participant; connectivity strengths that are not reproducible.

Diagnosis Checklist:

- Check your band-pass filter settings. The standard frequency filters (e.g., 0.009–0.08 Hz) can artificially inflate correlation estimates and lead to false positives [11].

- Review data quality. High head motion is a primary confound that systematically alters the functional connectome and reduces measurement validity [25].

- Consider the signal-to-noise ratio. Regions with low temporal signal-to-noise ratio (tSNR), such as the orbitofrontal cortex, have inherently lower reliability [25].

Solutions:

- Statistical Correction: Adjust your preprocessing pipeline. Align your sampling rate with the analyzed frequency band and consider using surrogate data methods to account for autocorrelation, which helps control for false positives [11].

- Robust Denoising: Implement advanced denoising techniques, such as multi-echo ICA, which can help separate BOLD signal from non-BOLD noise more effectively [15].

- Increase Scan Duration: Just as with task-based fMRI, the reliability of functional connectivity measures improves with longer scan times. Aim for acquisitions longer than 10 minutes [25].
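Phase randomization is one widely used surrogate-data approach. It preserves a series' power spectrum, and therefore its autocorrelation, while destroying any specific temporal alignment with another series. The sketch below builds a null distribution for a single correlation this way. It illustrates the general idea and is not necessarily the exact correction used in [11]. The AR(1) example data are synthetic.

```python
import numpy as np

def phase_randomize(x, rng):
    """Surrogate series with the same power spectrum as x but random Fourier phases."""
    n = len(x)
    spec = np.fft.rfft(x)
    phases = np.exp(1j * rng.uniform(0.0, 2.0 * np.pi, len(spec)))
    surr = np.abs(spec) * phases
    surr[0] = spec[0]              # keep the mean
    if n % 2 == 0:
        surr[-1] = spec[-1]        # the Nyquist term of a real signal must stay real
    return np.fft.irfft(surr, n=n)

def surrogate_test(x, y, n_surr=1000, seed=0):
    """Observed r and a two-sided p-value against a null built from
    phase-randomised copies of x, which keep x's autocorrelation."""
    rng = np.random.default_rng(seed)
    r_obs = np.corrcoef(x, y)[0, 1]
    null = np.array([np.corrcoef(phase_randomize(x, rng), y)[0, 1]
                     for _ in range(n_surr)])
    p = (1 + np.sum(np.abs(null) >= abs(r_obs))) / (1 + n_surr)
    return r_obs, p

# Two independent, strongly autocorrelated AR(1) series (synthetic data)
rng = np.random.default_rng(1)
def ar1(n, phi=0.95):
    e = rng.standard_normal(n)
    out = np.zeros(n)
    for t in range(1, n):
        out[t] = phi * out[t - 1] + e[t]
    return out
print(surrogate_test(ar1(300), ar1(300)))
```

Smooth independent series often show sizeable raw correlations. Because the surrogate null shares their autocorrelation, it widens accordingly and guards against calling such correlations significant.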

Quantitative Data on fMRI Reliability

Table 1: Reliability Coefficients of Different fMRI Measures

This table summarizes the range of test-retest reliability (often measured by ICC) for common fMRI measures, highlighting how the choice of measure impacts reliability.

| fMRI Measure | Typical Reliability (ICC Range) | Key Influencing Factors |
|---|---|---|
| Univariate Task Activation (single ROI) | Poor to Fair (0.1 - 0.5) [2] [18] | Scan length, task design, head motion, analytical approach (contrasts vs. betas) |
| Multivariate Pattern Analysis | Good to Excellent (0.7 - 0.9+) [2] | Pattern stability, machine learning model, amount of training data |
| Resting-State Functional Connectivity | Variable (Poor to Good) [25] | Scan length, network location, head motion, preprocessing pipeline |
| Network-Level Analysis (ICA) | Generally higher than voxel-wise [18] | Data-driven approach, stability of large-scale networks |

Table 2: Impact of Experimental Factors on Reliability

This table outlines how specific design and methodological choices can either degrade or enhance the reliability of your fMRI data.

| Factor | Impact on Reliability | Evidence-Based Recommendation |
|---|---|---|
| Scan Length | Positive asymptotic relationship [25] [15] | Aggregate data across longer scans or multiple sessions (e.g., > 30 min total). |
| Task vs. Rest | Region-specific effect [25] | Use tasks that strongly engage your network of interest; reliability there will be higher than at rest. |
| Analytical Variable (Beta vs. Contrast) | Beta coefficients > Contrasts [18] | Use β coefficients from first-level GLM for reliability calculations on single conditions. |
| Signal Variability (tSD) | Strong positive driver [25] | Temporal standard deviation (tSD) of the BOLD signal is a key marker; higher tSD is often associated with higher reliability. |

Experimental Protocols for Reliability

Protocol: Assessing Test-Retest Reliability with ICC

Purpose: To quantitatively evaluate the consistency of an fMRI measure within the same individuals across multiple sessions.

Materials: fMRI dataset with at least two scanning sessions per participant, acquired under identical or very similar conditions (e.g., same scanner, sequence, and task).

Methodology:

- Data Acquisition: Acquire your fMRI data (task or rest) across two or more sessions. The time between sessions (e.g., same day, weeks apart) should be chosen based on the stability you wish to measure.

- Preprocessing: Process all data through a standardized, robust pipeline (e.g., including motion correction, normalization, and for rsfMRI, careful filtering).

- Feature Extraction: For the measure of interest (e.g., amygdala activation during an emotion task), extract the values for each participant at each session.

- Critical Step: Extract β coefficients for a specific condition, not contrast scores, to maximize between-subject variance [18].

- Statistical Analysis: Calculate the Intraclass Correlation Coefficient (ICC). The ICC(2,1) form (two-way random effects, absolute agreement, single measurement) is commonly used; it partitions variance into between-subject, between-session, and residual components. Values are interpreted as:

- Poor: < 0.4

- Fair: 0.4 - 0.59

- Good: 0.6 - 0.74

- Excellent: ≥ 0.75 [18]
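The ICC(2,1) calculation and the benchmark labels listed above can be sketched in NumPy. It assumes a complete (participants × sessions) matrix with no missing values, one row per participant and one column per session. The worked example reuses the classic 6 × 4 table from Shrout and Fleiss (1979), with the judges standing in for sessions.

```python
import numpy as np

def icc_2_1(data):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement
    (Shrout & Fleiss, 1979). `data` is (n_participants, k_sessions)."""
    x = np.asarray(data, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_subjects = k * np.sum((x.mean(axis=1) - grand) ** 2)   # between-subject
    ss_sessions = n * np.sum((x.mean(axis=0) - grand) ** 2)   # between-session
    ss_error = np.sum((x - grand) ** 2) - ss_subjects - ss_sessions  # residual
    ms_subjects = ss_subjects / (n - 1)
    ms_sessions = ss_sessions / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_subjects - ms_error) / (
        ms_subjects + (k - 1) * ms_error + k * (ms_sessions - ms_error) / n
    )

def interpret_icc(icc):
    """Map an ICC onto the poor / fair / good / excellent benchmarks."""
    if icc < 0.4:
        return "poor"
    if icc < 0.6:
        return "fair"
    if icc < 0.75:
        return "good"
    return "excellent"

# Worked example from Shrout & Fleiss (1979): 6 targets x 4 judges,
# with the columns playing the role of sessions.
sf = np.array([[9, 2, 5, 8], [6, 1, 3, 2], [8, 4, 6, 8],
               [7, 1, 2, 6], [10, 5, 6, 9], [6, 2, 4, 7]])
icc = icc_2_1(sf)
print(round(icc, 2), interpret_icc(icc))  # 0.29 poor
```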

Protocol: Precision fMRI for Individual Brain Mapping

Purpose: To create highly reliable functional brain maps for individual participants, suitable for clinical biomarker discovery or brain-behavior mapping.

Materials: Access to an MRI scanner; participants willing to undergo extended scanning.

Methodology:

- Study Design: Plan for densely sampled data. This involves collecting many hours of fMRI data per participant, spread across multiple sessions (e.g., 10 sessions of 1+ hour each) [15].

- Data Collection: Acquire a mixture of data types, including multiple task states (engaging different systems) and resting-state data. This allows for comprehensive mapping.

- Data Processing: Process data at the individual level, avoiding group-level averaging that obscures individual differences. Use surface-based analysis and custom registration for optimal alignment [15].

- Data Aggregation: Concatenate all fMRI time series (from both task and rest) for each participant. This massive aggregation drives reliability into the high asymptotic range.

- Validation: Use split-half reliability (e.g., correlating connectomes from sessions 1-5 with sessions 6-10) to confirm the high fidelity of the individual maps [25].
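The aggregation and split-half validation steps can be sketched as follows. Each run is a (time × ROI) array. Z-scoring each run before concatenation is a common convention and an assumption here: it stops run-to-run offsets from masquerading as connectivity. Similarity is the correlation of the unique edges of the two half-connectomes. The example data are synthetic, generated from a hypothetical shared network structure.

```python
import numpy as np

def zscore_and_concatenate(runs):
    """Z-score each (time x ROI) run per ROI, then stack the runs in time."""
    return np.vstack([(r - r.mean(axis=0)) / r.std(axis=0) for r in runs])

def connectome(ts):
    """ROI x ROI Pearson correlation matrix from a (time x ROI) array."""
    return np.corrcoef(ts, rowvar=False)

def split_half_similarity(runs_first_half, runs_second_half):
    """Correlate the unique edges of connectomes built from two disjoint halves."""
    a = connectome(zscore_and_concatenate(runs_first_half))
    b = connectome(zscore_and_concatenate(runs_second_half))
    iu = np.triu_indices_from(a, k=1)
    return np.corrcoef(a[iu], b[iu])[0, 1]

# Synthetic example: 10 sessions sharing one hypothetical "true" network structure
rng = np.random.default_rng(0)
mixing = rng.standard_normal((20, 20))
sessions = [rng.standard_normal((300, 20)) @ mixing for _ in range(10)]
print(split_half_similarity(sessions[:5], sessions[5:]))   # sessions 1-5 vs 6-10
```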

Visualizing the Pathways to Reliability

Diagram: Factors Determining fMRI Reliability

The Scientist's Toolkit: Essential Reagents & Materials

Table 3: Key Research Reagent Solutions for Reliability

| Tool / Material | Function | Role in Enhancing Reliability |
|---|---|---|
| Densely-Sampled Datasets (e.g., Midnight Scan Club) | Provides a benchmark and testbed for reliability methods. | Enables study of the asymptotic limits of reliability and of region-specific task effects [25]. |
| Multivariate Pattern Analysis | A class of machine learning algorithms applied to brain activity patterns. | Extracts more reliable, distributed brain signatures than univariate methods, improving biomarker potential [2]. |
| Multi-echo fMRI Sequence | An acquisition sequence that collects data at multiple echo times. | Allows for superior denoising (e.g., via multi-echo ICA), isolating BOLD signal from non-BOLD noise [15]. |
| Intraclass Correlation (ICC) | A statistical model quantifying test-retest reliability. | The standard metric for assessing measurement consistency; crucial for evaluating new methods [18]. |
| Independent Component Analysis (ICA) | A data-driven method to separate neural networks from noise. | Improves reliability by identifying coherent, large-scale networks that are stable across sessions [18]. |

Methodological Strategies for Enhancing fMRI Reliability in Experimental Designs

A guide to enhancing the reliability and power of your fMRI research

This resource addresses frequently asked questions on optimizing key fMRI acquisition parameters, framed within the broader goal of improving test-retest reliability in neuroimaging research. The guidance synthesizes recent empirical findings to help researchers, scientists, and drug development professionals design more powerful, reproducible, and cost-effective studies.

Frequently Asked Questions

Q1: How does scan duration impact prediction accuracy and reliability in brain-wide association studies (BWAS)?

Longer scan durations significantly improve phenotypic prediction accuracy and data reliability. A 2025 large-scale study in Nature demonstrated that prediction accuracy increases with the total scan duration (calculated as sample size × scan time per participant) [3].

The study, analyzing 76 phenotypes across nine datasets, found that for scans up to 20 minutes, accuracy increases linearly with the logarithm of the total scan duration [3].

- Cost-Benefit Analysis: When accounting for overhead costs per participant (e.g., recruitment), longer scans can be more economical than larger sample sizes for improving prediction performance. The research indicates that 10-minute scans are cost-inefficient, and 30-minute scans are, on average, the most cost-effective, yielding 22% savings over 10-minute scans [3].

- Practical Recommendation: The study recommends a scan time of at least 30 minutes for resting-state whole-brain BWAS. For task-fMRI, the most cost-effective scan time may be shorter, while for subcortical-to-whole-brain BWAS, it may be longer [3].
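Per-participant overhead is what makes longer scans economical. The sketch below compares how much total scan duration (N × T) a fixed budget buys at different scan lengths. All costs are made-up illustrative numbers, not values from [3]. Total duration is only a proxy for accuracy, and it tracks accuracy most closely in the log-linear regime up to about 20 minutes.

```python
def affordable_n(budget, scan_min, overhead_per_participant, cost_per_min):
    """Participants a fixed budget buys when each costs an overhead plus scan time."""
    return int(budget // (overhead_per_participant + scan_min * cost_per_min))

# Hypothetical costs (arbitrary currency): budget 500,000; 500 overhead per
# participant (recruitment, screening); 10 per scanner minute.
for scan_min in (10, 20, 30):
    n = affordable_n(500_000, scan_min, 500, 10)
    print(f"{scan_min} min: N = {n}, total scan duration = {n * scan_min} min")
```

With these numbers, moving from 10- to 30-minute scans shrinks N from 833 to 625 but more than doubles total scan duration (8,330 to 18,750 min). With zero overhead, total duration would stay roughly fixed at budget / cost per minute, whatever the scan length.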

Q2: What are the key temporal factors in session planning that can influence fMRI reliability?

The timing of your scan session is not arbitrary; several temporal factors can modulate resting-state brain connectivity and topology, potentially confounding results.

A study of over 4,100 adolescents found that the time of day, week, and year of scanning were correlated with topological properties of resting-state connectomes [32].

- Time of Day: Scanning later in the day was negatively correlated with multiple whole-brain and network-specific topological properties (with the exception of a positive correlation with modularity). These effects, while spatially extensive, were generally small [32].

- Time of Week and Year: Being scanned on a weekend (vs. a school day) or during summer vacation (vs. the school year) was associated with topological differences, particularly in visual, somatomotor, and temporoparietal networks. The effect sizes varied from small to large [32].

- Impact on Cognition: Including these scan time parameters in models eliminated some associations between connectome properties and performance on cognitive tasks, suggesting they should be treated as confounders in analyses relating brain function to behavior [32].
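Treating scan timing as a confounder can be as simple as residualizing both the brain measure and the behavioral score on the timing covariates before correlating them. This yields the partial correlation, equivalent to including the covariates in a regression model. The sketch below is minimal. Coding time of day linearly is an assumption (effects may be nonlinear), [32] may have modeled these variables differently, and all data are synthetic.

```python
import numpy as np

def residualize(y, covariates):
    """Remove the least-squares fit of the covariates (n x p) plus an intercept from y."""
    design = np.column_stack([np.ones(len(y)), covariates])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return y - design @ beta

# Synthetic data: one connectome metric and one cognitive score per participant
rng = np.random.default_rng(0)
n = 500
hour = rng.uniform(8, 18, n)        # scan time of day (hours)
weekend = rng.integers(0, 2, n)      # 1 = scanned on a weekend
summer = rng.integers(0, 2, n)       # 1 = scanned during summer vacation
cov = np.column_stack([hour, weekend, summer])
metric = 0.3 * hour + 0.3 * weekend + rng.standard_normal(n)
score = 0.3 * hour + rng.standard_normal(n)   # shares only the time-of-day effect

raw_r = np.corrcoef(metric, score)[0, 1]
adj_r = np.corrcoef(residualize(metric, cov), residualize(score, cov))[0, 1]
print(f"raw r = {raw_r:.3f}, scan-time-adjusted r = {adj_r:.3f}")
```

Here the brain-behavior association is driven entirely by time of day, so it largely disappears once timing is controlled.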

Q3: Which analytical measures provide better test-retest reliability for task-based fMRI?

The choice of which neural activation measure to use in reliability calculations has a substantial impact on the resulting Intraclass Correlation Coefficient (ICC).

Research indicates that using β coefficients from a first-level General Linear Model (GLM) provides higher test-retest reliability than using contrasts (difference scores between conditions) [18].

- Rationale: Contrasts often have low between-subject variance and the two measures being subtracted are highly correlated, introducing error and resulting in lower reliability estimates [18].

- Empirical Support: A 2022 study found that ICC values were higher when calculated using β coefficients for both voxel-wise and network-level (ICA) analyses. Using contrasts may underlie the low reliability estimates frequently reported in the literature [18].
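Classical test theory makes the rationale concrete. For a difference score D = A − B with uncorrelated measurement errors, reliability is (σA²ρAA′ + σB²ρBB′ − 2σAσBρAB) / (σA² + σB² − 2σAσBρAB). The sketch below evaluates this expression for hypothetical condition betas that each have reliability 0.7.

```python
def difference_score_reliability(rel_a, rel_b, r_ab, sd_a=1.0, sd_b=1.0):
    """Classical-test-theory reliability of D = A - B, assuming uncorrelated
    measurement errors in A and B."""
    num = sd_a**2 * rel_a + sd_b**2 * rel_b - 2 * sd_a * sd_b * r_ab
    den = sd_a**2 + sd_b**2 - 2 * sd_a * sd_b * r_ab
    return num / den

# Two condition betas, each with reliability 0.7 (hypothetical values)
for r_ab in (0.0, 0.5, 0.6):
    print(r_ab, round(difference_score_reliability(0.7, 0.7, r_ab), 2))
```

Even though each beta has reliability 0.7, the contrast falls to 0.4 when the betas correlate at 0.5 across participants, and to 0.25 at 0.6. The more strongly the two conditions covary, the lower the reliability of their difference.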

Q4: How should I approach the trade-off between sample size and scan duration per participant?

Sample size and scan time are initially interchangeable but with diminishing returns. Investigators must often choose between scanning more participants for a shorter time or fewer participants for a longer time [3].

Empirical models fitted to large datasets offer a data-driven way to plan these parameters.

A theoretical model shows that prediction accuracy increases with both sample size (N) and total scan duration. For a fixed total scanning time (e.g., 6,000 minutes), prediction accuracy decreases as scan time per participant increases, but the reduction is modest for shorter scan times [3].

- Key Insight: While longer scans can compensate for smaller sample sizes (and vice versa), the required increase in scan time becomes progressively larger as the duration extends. Sample size is ultimately more important for generalizability, but longer scans can be a cost-effective way to boost prediction power [3].

- Resource for Planning: An online reference is available for future study design based on this model: Optimal Scan Time Calculator [3].

The table below summarizes key quantitative relationships derived from empirical studies to aid in experimental design and parameter selection.

| Relationship | Empirical Finding | Practical Implication | Source |
|---|---|---|---|
| Scan Duration vs. Prediction Accuracy | Accuracy increases with the logarithm of total scan duration (Sample Size × Scan Time). Diminishing returns observed, especially beyond 20-30 min. | Up to about 20 min per participant, scan time and sample size are broadly interchangeable for boosting accuracy; beyond that, extra scan time yields smaller gains. | [3] |
| Optimal Cost-Effective Scan Time | On average, 30-minute scans are the most cost-effective, yielding 22% cost savings over 10-minute scans. | 10-minute scans are cost-inefficient. Overshooting the optimal scan time is cheaper than undershooting it. | [3] |
| Sample Size vs. Scan Time Trade-off | For a fixed 6,000 min of total scan time, prediction accuracy decreases as scan time per participant increases. | When total resource is fixed, a larger sample size with shorter scans generally yields higher accuracy than a small sample with very long scans. | [3] |

The Scientist's Toolkit: Essential Reagents & Materials

The following table details key methodological "reagents" and considerations for designing an fMRI study with high test-retest reliability.

| Item / Solution | Function / Role in Experiment | Technical Notes |
|---|---|---|
| Optimal Scan Time Calculator | An online tool to model the trade-off between sample size and scan duration for maximizing prediction power. | Based on empirical models from large datasets (ABCD, HCP). Use for study planning and power analysis [3]. |
| β Coefficients (GLM) | A direct measure of functional activation for a single task condition; used as a superior input for test-retest reliability (ICC) calculations. | Provides higher ICC values than contrast scores due to greater between-subject variance [18]. |
| ICC(2,1) | A specific intraclass correlation coefficient model used to assess test-retest reliability, reflecting absolute agreement with a random facet for raters (sessions). | A recommended starting point for estimating the reliability of single measurements in fMRI [16]. |
| Scan Time Covariates | Variables related to the timing of the scan session that should be included in statistical models to control for confounding variance. | Includes time of day, day of week (school vs. weekend), and time of year (school vs. vacation) [32]. |
| Phantom Scans | Regular quality assurance (QA) scans on a standardized object to monitor scanner stability and identify hardware-related artefacts over time. | Critical for longitudinal and multi-site studies to ensure measurement consistency [33]. |

Detailed Experimental Protocols

Protocol 1: Designing a BWAS with Optimal Scan Duration

This protocol is adapted from the large-scale analysis published in Nature (2025) [3].

- Define Phenotype and Dataset: Select the phenotypic trait for prediction and choose an appropriate dataset (e.g., resting-state or task-fMRI from HCP, ABCD, etc.).

- Calculate Functional Connectivity: For each participant, calculate a whole-brain functional connectivity matrix (e.g., 419 × 419) using the first T minutes of fMRI data. Vary T from short (e.g., 2 min) to the maximum available, in fixed steps (e.g., 2 min).

- Predict Phenotype: Use a machine learning model (e.g., Kernel Ridge Regression) with the connectivity matrices as features to predict the phenotype. Employ a nested cross-validation procedure.

- Systematically Vary Parameters: Repeat the prediction across different training sample sizes (N) and scan durations (T).

- Model the Relationship: Fit a logarithmic or theoretical model to the resulting prediction accuracies to characterize the relationship between N, T, and accuracy. Use this model or the provided online calculator to determine the optimal scan time and sample size for a new study.
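The prediction step can be sketched without external ML libraries. [3] used kernel ridge regression with nested cross-validation. The specifics below are assumptions for illustration, not the authors' code: a linear kernel, the penalty grid, the fold counts, and Pearson r as the accuracy metric. Features are the unique edges of each participant's FC matrix, and the example data are synthetic.

```python
import numpy as np

def upper_tri_features(fc_mats):
    """Vectorise the unique edges of each (R x R) FC matrix -> (n_subjects, R*(R-1)/2)."""
    iu = np.triu_indices(fc_mats.shape[1], k=1)
    return np.stack([m[iu] for m in fc_mats])

def krr_predict(x_tr, y_tr, x_te, lam):
    """Kernel ridge regression with a linear kernel, solved in closed form."""
    mu = y_tr.mean()
    alpha = np.linalg.solve(x_tr @ x_tr.T + lam * np.eye(len(y_tr)), y_tr - mu)
    return x_te @ x_tr.T @ alpha + mu

def folds(n, k, rng):
    return np.array_split(rng.permutation(n), k)

def nested_cv_accuracy(x, y, lams=(0.1, 1.0, 10.0, 100.0), k_outer=5, k_inner=3, seed=0):
    """Outer folds estimate accuracy (Pearson r, predicted vs observed);
    inner folds, using training participants only, choose the ridge penalty."""
    rng = np.random.default_rng(seed)
    idx = np.arange(len(y))
    preds = np.empty(len(y))
    for test in folds(len(y), k_outer, rng):
        train = np.setdiff1d(idx, test)
        xt, yt = x[train], y[train]
        inner = folds(len(train), k_inner, rng)
        scores = []
        for lam in lams:
            r = []
            for val in inner:
                fit = np.setdiff1d(np.arange(len(train)), val)
                p = krr_predict(xt[fit], yt[fit], xt[val], lam)
                r.append(np.corrcoef(p, yt[val])[0, 1])
            scores.append(np.mean(r))
        best = lams[int(np.argmax(scores))]
        preds[test] = krr_predict(xt, yt, x[test], best)
    return np.corrcoef(preds, y)[0, 1]

# Synthetic example: 120 participants, 10-ROI connectomes, phenotype tied to one edge
rng = np.random.default_rng(0)
fc = np.stack([np.corrcoef(rng.standard_normal((100, 10)), rowvar=False) for _ in range(120)])
x = upper_tri_features(fc)
y = 5 * x[:, 0] + 0.1 * rng.standard_normal(120)
print(nested_cv_accuracy(x, y))
```

Repeating this over subsamples of participants (N) and truncated time series (T) gives the accuracy grid to which the logarithmic model is fitted.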

Protocol 2: Assessing Test-Retest Reliability with ICC

This protocol guides the measurement of fMRI reliability, drawing from best practices in the field [16] [18].

- Data Acquisition: Collect fMRI data from participants at two separate time points (test and retest). The interval should be chosen based on the research question (e.g., short-term for scanner stability, long-term for developmental traits).

- Image Processing & First-Level Analysis: Preprocess the data (motion correction, normalization, etc.). For task-based data, run a first-level GLM for each participant and session to obtain β coefficient maps for conditions of interest.

- Define the Measure of Interest: Extract the neural measure for reliability assessment. For univariate analysis, this could be the mean β value from an ROI. For functional connectivity, it could be the correlation strength of a specific edge or network.

- Choose an ICC Model: Select an appropriate ICC model. ICC(2,1) is often a robust choice for assessing absolute agreement between sessions, modeling the session facet as random [16].

- Calculate and Interpret ICC: Compute the ICC for the measure across participants. Interpret values using established benchmarks: poor (<0.4), fair (0.4-0.59), good (0.6-0.74), excellent (≥0.75) [16].

Troubleshooting Guide: Frequently Asked Questions

Smoothing Kernel Selection

1. How does the size of the smoothing kernel affect my functional connectivity results?

Smoothing kernel size directly modifies functional connectivity networks and network parameters by altering node connections. Studies show different kernel sizes (0-10mm FWHM) produce varying effects on resting-state versus task-based fMRI analyses [34].

Table 1: Effects of Smoothing Kernel Size on Group-Level fMRI Metrics [34]

| Kernel Size (FWHM) | Effect on Functional Networks | Impact on Signal-to-Noise Ratio | Effect on Spatial Specificity |
|---|---|---|---|
| 0mm (no smoothing) | Maximum spatial specificity | Lowest SNR | No blurring |
| 2-4mm | Minimal network alteration | Moderate SNR improvement | Mild blurring |
| 6-8mm | Balanced network enhancement | Good SNR improvement | Moderate blurring |
| 10mm+ | Significant network modification | Highest SNR | Substantial blurring |

Research indicates that kernel selection represents a trade-off: larger kernels improve SNR and group-level analysis sensitivity but reduce spatial specificity and may obscure small activation regions, which is particularly problematic for clinical applications like presurgical planning [35] [36].
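Kernels are specified as FWHM in millimetres, but the Gaussian itself is parameterized by sigma, with FWHM = 2√(2 ln 2) σ ≈ 2.355 σ. The NumPy-only sketch below converts between the two and applies separable isotropic smoothing. It is meant for transparency. In practice a library routine such as `scipy.ndimage.gaussian_filter` would typically be used. Edges are zero-padded, and the 2 mm voxel size is an arbitrary example.

```python
import math
import numpy as np

FWHM_PER_SIGMA = 2.0 * math.sqrt(2.0 * math.log(2.0))   # about 2.355

def fwhm_mm_to_sigma_vox(fwhm_mm, voxel_mm):
    """Gaussian sigma in voxels for a kernel specified as FWHM in millimetres."""
    return fwhm_mm / FWHM_PER_SIGMA / voxel_mm

def gaussian_kernel_1d(sigma_vox, truncate=4.0):
    """Normalised 1-D Gaussian; sigma = 0 means no smoothing."""
    if sigma_vox <= 0:
        return np.array([1.0])
    radius = max(1, math.ceil(truncate * sigma_vox))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma_vox) ** 2)
    return k / k.sum()

def smooth_volume(vol, fwhm_mm, voxel_mm):
    """Separable isotropic Gaussian smoothing of a 3-D array (zero-padded edges)."""
    k = gaussian_kernel_1d(fwhm_mm_to_sigma_vox(fwhm_mm, voxel_mm))
    r = len(k) // 2
    out = np.asarray(vol, dtype=float)
    for axis in range(out.ndim):
        # full convolution, then keep the centred part so the length is unchanged
        out = np.apply_along_axis(lambda v: np.convolve(v, k)[r:r + len(v)], axis, out)
    return out

for fwhm in (0, 4, 8):
    print(fwhm, "mm FWHM ->", round(fwhm_mm_to_sigma_vox(fwhm, 2.0), 2), "voxels sigma")
```

The applied kernel adds to the data's intrinsic smoothness, so the effective smoothness of the final images is larger than the nominal FWHM.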

2. What is the recommended smoothing kernel for clinical applications requiring high spatial specificity?

For clinical applications where individual-level accuracy is critical (e.g., presurgical planning), use minimal smoothing (2-4mm FWHM) or adaptive spatial smoothing methods [36]. The standard 8mm kernel often used in neuroscience group studies is inappropriate for clinical applications where individual variability must be preserved [36]. Advanced methods like Deep Neural Networks (DNNs) can provide adaptive spatial smoothing that incorporates brain tissue properties for more accurate characterization of brain activation at the individual level [35].

Motion Correction Strategies

3. What are the main types of motion artifacts in fMRI, and how do they affect data quality?

Motion artifacts represent one of the greatest challenges in fMRI, causing two primary problems [37]:

- Spatial misalignment: Voxels represent different brain locations over time, complicating time-series analysis

- Signal artifacts: Motion disrupts EPI acquisition assumptions, creating large-magnitude signal changes that can dwarf biological signals of interest

Table 2: Motion Correction Strategies and Their Applications

| Strategy Type | Method Examples | Best Use Cases | Limitations |
|---|---|---|---|
| Prospective Correction | Real-time position updating, navigator echoes [37] | High-motion populations, children | Not widely available, requires specialized sequences |
| Retrospective Correction | Rigid-body registration, FSL MCFLIRT, SPM realign [38] | Standard research protocols | Cannot fully correct spin-history effects |
| Physiological Noise Correction | RETROICOR, CompCor [39] | Studies sensitive to cardiac/respiratory effects | RETROICOR requires cardiac and respiratory recordings; CompCor is data-driven and does not |
| Advanced Methods | Multi-echo fMRI, fieldmap correction [39] | High-quality data acquisition | Increased acquisition and processing complexity |

4. How can I improve test-retest reliability through motion correction?

Optimizing your preprocessing pipeline significantly impacts test-retest reliability. Key strategies include [40]:

- Implementing rigorous motion parameter regression (6-24 parameters)

- Incorporating noise regressors from white matter and CSF signals

- Applying global signal regression cautiously, as it impacts connectivity measures

- Using high-pass filtering to remove low-frequency drifts

Research shows that preprocessing optimization can improve intra-class correlation coefficients (ICC) from modest levels (0.5-0.6) to more reliable values, though the optimal pipeline depends on your specific research goals [40].
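The sketch below shows the motion-expansion and nuisance-regression steps in NumPy. The 24 regressors are the six parameters, their backward differences, and the squares of both. Another common 24-parameter variant uses each parameter's value at the previous volume instead of the derivative. White-matter and CSF signals are appended as extra confound columns. A linear trend stands in crudely for high-pass filtering, and global signal regression is deliberately left out. All inputs are synthetic.

```python
import numpy as np

def expand_motion_24(motion):
    """24 motion regressors from (T x 6) rigid-body parameters: the parameters,
    their backward differences (first row 0), and the squares of both."""
    deriv = np.vstack([np.zeros((1, motion.shape[1])), np.diff(motion, axis=0)])
    base = np.hstack([motion, deriv])
    return np.hstack([base, base ** 2])

def regress_confounds(data, confounds):
    """Residualise data (T x V) on confounds plus an intercept and a linear trend."""
    t = data.shape[0]
    design = np.column_stack([np.ones(t), np.linspace(-1.0, 1.0, t), confounds])
    beta, *_ = np.linalg.lstsq(design, data, rcond=None)
    return data - design @ beta

# Synthetic inputs: 200 volumes, 6 motion parameters, 1000 voxels,
# plus mean white-matter and CSF signals as two extra confound columns
rng = np.random.default_rng(0)
motion = np.cumsum(0.01 * rng.standard_normal((200, 6)), axis=0)
wm_csf = rng.standard_normal((200, 2))
data = rng.standard_normal((200, 1000))
confounds = np.hstack([expand_motion_24(motion), wm_csf])
clean = regress_confounds(data, confounds)
```

Each added regressor costs a degree of freedom. That cost is one reason the optimal pipeline depends on run length and research goals.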

Pipeline Optimization

5. How do I balance smoothing and motion correction in my preprocessing pipeline?

Create an optimized, standardized pipeline that maintains consistency across all subjects [34]:

- Perform quality assurance before any processing to identify fatal artifacts [41]

- Apply slice timing correction to account for acquisition time differences between slices [38]

- Implement rigid-body motion correction using a standardized reference volume [38]

- Choose smoothing parameters based on your specific research question and need for spatial specificity [36]

- Apply consistent parameters across all subjects in your study [34]

Experimental Protocols for Method Validation

Protocol 1: Evaluating Smoothing Kernel Impact

Objective: Systematically assess how kernel size affects your specific data and research question [34].

Methodology:

- Process identical dataset with multiple kernel sizes (0, 2, 4, 6, 8, 10mm FWHM)

- For each kernel size, calculate:

- Graph theory metrics (betweenness centrality, global/local efficiency, clustering coefficient)

- Principal Component Analysis parameters (kurtosis, skewness)

- Independent Component Analysis components

- Compare network structures and connectivity patterns across kernel sizes

- Select kernel that optimizes SNR while preserving spatial specificity needed for your hypothesis

Protocol 2: Motion Correction Quality Assessment

Objective: Validate motion correction efficacy and identify residual motion artifacts [37] [40].

Methodology:

- Extract six rigid-body motion parameters (3 translation, 3 rotation)

- Calculate framewise displacement (FD) and DVARS metrics

- Identify motion-contaminated volumes exceeding threshold (typically FD > 0.2-0.5mm)

- Implement censoring (scrubbing) or include motion parameters as regressors

- Verify correction efficacy by examining:

- Residual motion-related correlations in denoised data

- Relationship between motion and connectivity measures

- Group differences in motion parameters that may confound results
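The FD, DVARS, and censoring steps can be sketched as follows. FD here follows the widely used sum-of-absolute-differences definition, with rotations converted to millimetres of arc on a sphere of 50 mm radius. DVARS is the root-mean-square over voxels of the frame-to-frame signal change. The parameter order and units differ between tools (FSL's MCFLIRT and SPM's realignment, for instance, write rotations and translations in different orders), so the function takes translations in mm and rotations in radians separately. The 0.5 mm default threshold is one value within the range quoted above.

```python
import numpy as np

def framewise_displacement(trans_mm, rot_rad, radius_mm=50.0):
    """Sum of absolute frame-to-frame changes in the six rigid-body parameters,
    rotations converted to arc length on a sphere of `radius_mm`.
    Inputs are (T x 3) arrays; the first frame gets FD = 0."""
    params = np.hstack([trans_mm, rot_rad * radius_mm])
    fd = np.abs(np.diff(params, axis=0)).sum(axis=1)
    return np.concatenate([[0.0], fd])

def dvars(data):
    """RMS across voxels of the frame-to-frame signal change; data is (T x V).
    The first frame gets DVARS = 0 by convention here."""
    d = np.sqrt(np.mean(np.diff(data, axis=0) ** 2, axis=1))
    return np.concatenate([[0.0], d])

def censor_mask(fd, threshold_mm=0.5):
    """True for volumes to keep, False for volumes to scrub."""
    return fd <= threshold_mm

# Toy example: a 1 mm jump in x at frame 2, a 0.01 rad rotation at frame 3
trans = np.zeros((5, 3)); trans[2:, 0] = 1.0
rot = np.zeros((5, 3)); rot[3:, 2] = 0.01
fd = framewise_displacement(trans, rot)
print(fd, censor_mask(fd))
```

Some censoring schemes also drop the frames adjacent to a flagged volume. After censoring, the remaining motion-connectivity relationships can be checked as described above.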

Research Reagent Solutions

Table 3: Essential Tools for fMRI Preprocessing Optimization

| Tool Category | Specific Solutions | Function | Implementation Considerations |
|---|---|---|---|
| Preprocessing Pipelines | fMRIPrep, SPM, FSL, AFNI | Automated standardized preprocessing | fMRIPrep provides consistent, reproducible processing [42] |
| Spatial Smoothing Tools | Gaussian filtering, Adaptive DNN methods [35] | Noise reduction, SNR improvement | DNN methods preserve spatial specificity better than isotropic smoothing [35] |
| Motion Correction Methods | MCFLIRT (FSL), Realign (SPM), RETROICOR [39] | Head motion artifact reduction | RETROICOR specifically targets physiological noise from cardiac/respiratory cycles [39] |
| Quality Metrics | Framewise displacement, DVARS, tSNR | Quantify data quality and preprocessing efficacy | Critical for identifying problematic datasets and validating corrections |

Workflow Visualization

Diagram: fMRI Preprocessing Decision Pathway

Diagram: Smoothing Kernel Selection Guide

FAQs on Paradigm Selection and Optimization

FAQ 1: What is the fundamental difference between a blocked and an event-related design?

A blocked design presents a condition continuously for an extended time interval (a block) to maintain cognitive engagement, with different task conditions alternating over time [43]. In contrast, an event-related design presents discrete, short-duration events. These events can be presented with randomized timing and order, allowing for the analysis of individual trial responses and reducing a subject's expectation effects [43]. A "rapid" event-related design uses an inter-stimulus-interval (ISI) shorter than the duration of the hemodynamic response function (HRF), which is typically 10-12 seconds [43].

FAQ 2: Which design has higher statistical power for detecting brain activation?

Blocked designs are historically recognized for their high detection power and robustness [43]. They produce a relatively large blood-oxygen-level-dependent (BOLD) signal change compared to baseline [43]. However, some direct comparisons, particularly in patient populations like those with brain tumors, have found that rapid event-related designs can provide maps with more robust activations in key areas like language cortex, suggesting comparable or even higher detection power in certain clinical applications [43].

FAQ 3: How does paradigm choice impact test-retest reliability, a critical issue in fMRI research?

Converging evidence demonstrates that standard univariate fMRI measures, including voxel- and region-level task-based activation, show poor test-retest reliability as measured by intraclass correlation coefficients (ICC) [16]. This is a major challenge for the field. The reliability of task-based activation can be influenced by task type [16]. Optimizing task design is therefore cited as an urgent need to improve the utility of fMRI for studying individual differences, including in drug development [29].

FAQ 4: For a pre-surgical language mapping study, which design is more sensitive?

A study comparing designs for a vocalized antonym generation task in brain tumor patients found a relatively high degree of discordance between blocked and event-related activation maps [43]. In general, the event-related design provided more robust activations in putative language areas, especially for the patients. This suggests that event-related designs may generate more sensitive language maps for pre-surgical planning [43].

FAQ 5: What are the key trade-offs I should consider when choosing a design?

The table below summarizes the core trade-offs between the two design types.

Table 1: Key Characteristics of Blocked vs. Event-Related fMRI Designs

| Characteristic | Blocked Design | Event-Related Design |
|---|---|---|
| Detection Power | High, robust [43] | Can be comparable or higher in some contexts [43] |
| BOLD Signal Change | Large relative to baseline [43] | Smaller for individual events |
| Trial Analysis | Not suited for single-trial analysis | Allows analysis of individual trial responses [43] |
| Subject Expectancy | More susceptible to expectation effects | Less sensitive to habituation and expectation [43] |
| Head Motion | More sensitive to head motion [43] | Less sensitive to head motion [43] |
| HRF Estimation | Not designed for precise HRF estimation | Effective at estimating the hemodynamic impulse response [43] |
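Table 1 contrasts a large sustained block response with the smaller response to individual events. One way to illustrate this is to convolve each design's timing with a model HRF. The sketch below does so and samples the result at a TR of 2 s. The HRF is a difference of two gamma densities (shapes 6 and 16, undershoot ratio 1/6), which approximates the canonical HRF shape used in SPM. It is written from scratch here and is not any package's exact implementation. The 30 s blocks and 3 s trials mirror the protocol later in this section, and the event onsets are hypothetical.

```python
import numpy as np
from math import gamma


def double_gamma_hrf(dt: float = 0.1, length_s: float = 32.0) -> np.ndarray:
    """Double-gamma HRF sampled every dt seconds, scaled to unit sum."""
    t = np.arange(0.0, length_s, dt)
    peak = t ** 5 * np.exp(-t) / gamma(6)          # gamma density, shape 6
    undershoot = t ** 15 * np.exp(-t) / gamma(16)  # gamma density, shape 16
    h = peak - undershoot / 6.0
    return h / h.sum()


def predicted_bold(onsets, durations, n_vols, tr=2.0, dt=0.1):
    """Convolve a boxcar (1 during each event or block) with the HRF; sample at the start of each TR."""
    n_fine = int(round(n_vols * tr / dt))
    neural = np.zeros(n_fine)
    for onset, dur in zip(onsets, durations):
        start, stop = int(round(onset / dt)), int(round((onset + dur) / dt))
        neural[start:stop] = 1.0
    bold = np.convolve(neural, double_gamma_hrf(dt))[:n_fine]
    return bold[:: int(round(tr / dt))]


# Blocked: 30 s task / 30 s control, four cycles (240 s, 120 volumes at TR = 2 s).
blocked = predicted_bold([0, 60, 120, 180], [30] * 4, n_vols=120)
# Event-related: 3 s trials at hypothetical jittered onsets (seconds).
event = predicted_bold([10.0, 15.5, 21.0, 25.8], [3.0] * 4, n_vols=30)
```

Plotting `blocked` and `event` side by side shows the plateau-like block response and the smaller, overlapping responses to brief trials. Overlapping responses like these are what a jittered ISI helps the GLM separate.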

Troubleshooting Common Experimental Issues

Issue 1: My fMRI activation maps are unreliable across sessions.

- Potential Cause: Low test-retest reliability of univariate measures is a known, widespread challenge in fMRI [16] [29].

- Solutions:

  - Consider Multivariate Approaches: Some evidence suggests multivariate approaches may improve both reliability and validity [16].

  - Minimize Head Motion: Motion has a pronounced negative effect on reliability. Stabilize participants' heads with comfortable padding, and use real-time motion correction if available. Participants in the lowest motion quartile can have reliability estimates 2.5 times higher than those in the highest motion quartile [29].

  - Optimize Design Timing: For event-related designs, use a jittered ISI to help deconvolve overlapping hemodynamic responses and improve statistical efficiency [43].

  - Increase Session Length: Acquiring more data per subject can improve signal-to-noise ratio and reliability.
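The gain from acquiring more data can be approximated with the Spearman-Brown prophecy formula, ρ_k = kρ / (1 + (k - 1)ρ). Here ρ is the reliability at the current amount of data, and k is the factor by which the data are lengthened. The sketch assumes the added data behave as parallel measurements, meaning the same underlying signal with independent noise. Fatigue, habituation, and scanner drift can violate this in fMRI, so treat the result as an optimistic estimate rather than a guarantee.

```python
def spearman_brown(reliability: float, k: float) -> float:
    """Predicted reliability after lengthening the measurement by a factor k."""
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be in [0, 1]")
    if k <= 0:
        raise ValueError("k must be positive")
    return k * reliability / (1.0 + (k - 1.0) * reliability)


def lengthening_factor(current: float, target: float) -> float:
    """Factor k needed to move from current to target reliability (both in (0, 1))."""
    if not (0.0 < current < 1.0 and 0.0 < target < 1.0):
        raise ValueError("reliabilities must be in (0, 1)")
    return target * (1.0 - current) / (current * (1.0 - target))


# Example: doubling the data for a measure starting at ICC = 0.397.
doubled = spearman_brown(0.397, 2)
```

Under this formula, raising a reliability of 0.4 to 0.7 would require 3.5 times as much data. That is one reason session length alone is rarely a complete fix.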

Issue 2: I need to isolate the brain's response to a specific, brief cognitive event.

- Potential Cause: A blocked design averages activity over a long period, making it unsuitable for parsing discrete cognitive processes.

- Solution: Switch to an event-related design. This paradigm is specifically suited for detecting transient variations in the hemodynamic response to individual trials or trial types, allowing you to model the brain's response to isolated events [43] [16].

Issue 3: My participants are becoming habituated or predicting the task sequence.

- Potential Cause: The predictable nature of a standard blocked design can lead to habituation and anticipation effects, which may confound the neural signal of interest.

- Solution: Implement a rapid event-related design with a jittered ISI. The randomized order and timing of trials help to minimize these confounding effects [43].
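A randomized, jittered trial sequence can be generated in a few lines. The sketch below is a minimal illustration and does not attempt design-efficiency optimization. It shuffles a balanced set of condition labels and draws onset-to-onset intervals from a uniform distribution. The 4-7 s range (mean 5.5 s) loosely mirrors the protocol later in this section. The condition labels are hypothetical.

```python
import numpy as np


def jittered_schedule(conditions, n_per_condition, isi_min=4.0, isi_max=7.0, seed=None):
    """Return (trial_order, onsets_s) for a randomized, jittered event-related run.

    Intervals are measured onset-to-onset and drawn uniformly from [isi_min, isi_max).
    """
    rng = np.random.default_rng(seed)
    # Balanced trial list, then a random permutation to reduce predictability.
    order = rng.permutation(np.repeat(np.asarray(conditions), n_per_condition))
    # One interval between each pair of consecutive trials.
    intervals = rng.uniform(isi_min, isi_max, size=order.size - 1)
    onsets = np.concatenate([[0.0], np.cumsum(intervals)])
    return order, onsets


# Hypothetical labels: antonym generation vs. reading control, 20 trials each.
order, onsets = jittered_schedule(["antonym", "read"], n_per_condition=20, seed=1)
```

A uniform distribution keeps the example simple. Other jitter distributions, or searching over many candidate sequences, may give better statistical efficiency for a given run length.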

Issue 4: I am working with a clinical population that has difficulty performing tasks for long periods.

- Potential Cause: Blocked designs often require sustained cognitive engagement over 20-30 second periods, which can be challenging for some patient groups.

- Solution: An event-related design may be more tolerable. The presentation of brief, discrete trials can be easier for patients to handle, and the design is generally less sensitive to head motion, which is a common issue in clinical populations [43].

Experimental Protocols for Paradigm Comparison

Protocol: Direct Comparison of Blocked and Event-Related Designs for Language fMRI

This protocol is adapted from a study investigating design efficacy for pre-surgical planning [43].

1. Task Paradigm:

- Task: Vocalized antonym generation.

- Stimuli: Visually presented words.

- Control Condition: Vocalized reading of visually presented words.

2. Experimental Designs:

- Blocked Design: Alternating 30-second task blocks and 30-second control blocks.

- Event-Related Design: Rapid presentation of individual trial events (e.g., 3-second duration) with a jittered inter-stimulus interval (ISI) randomized around a mean of 5-6 seconds.

3. Image Acquisition:

- Scanner: 3.0 Tesla MRI system.

- Sequence: Single-shot gradient-echo echo-planar imaging (EPI) for BOLD fMRI.

- Parameters: TR=2000 ms, TE=40 ms, flip angle=90°, voxel size=2×2×4 mm³.

- Structural Scan: High-resolution T1-weighted 3D-SPGR sequence.

4. Data Analysis:

- Preprocessing: Standard pipeline including motion correction, spatial smoothing, and normalization.

- Statistical Modeling:

  - Blocked Design: Use a boxcar regressor convolved with a hemodynamic response function (HRF) to model task vs. control periods.

  - Event-Related Design: Model each trial as a discrete event convolved with the HRF.

- Comparison Metrics:

  - Visual Inspection: Qualitatively assess activation maps for robustness and coverage in putative language areas (e.g., Broca's and Wernicke's areas).

  - Laterality Index: Calculate a quantitative measure of language lateralization for each design.

  - Clinical Concordance: Compare fMRI results with gold-standard invasive measures, such as Wada testing or intra-operative cortical stimulation mapping, if available [43].
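The laterality index in step 4 is commonly defined as LI = (L - R) / (L + R). L and R summarize activation in homologous left and right regions of interest, often as counts of suprathreshold voxels. The sketch assumes t-values already extracted from hypothetical left and right language ROIs. The t threshold and the ±0.2 cutoff used to label a map as left, right, or bilateral are illustrative conventions, and LI values depend on the threshold chosen.

```python
import numpy as np


def laterality_index(left: float, right: float) -> float:
    """LI = (L - R) / (L + R); +1 = fully left-lateralized, -1 = fully right."""
    total = left + right
    if total == 0:
        raise ValueError("no suprathreshold activation in either ROI")
    return (left - right) / total


def li_from_tmaps(left_t, right_t, threshold: float = 3.0) -> float:
    """LI from counts of voxels exceeding a t threshold in left and right ROIs."""
    n_left = int((np.asarray(left_t) > threshold).sum())
    n_right = int((np.asarray(right_t) > threshold).sum())
    return laterality_index(n_left, n_right)


def classify_li(li: float, cutoff: float = 0.2) -> str:
    """Label lateralization using a symmetric (illustrative) cutoff."""
    if li > cutoff:
        return "left"
    if li < -cutoff:
        return "right"
    return "bilateral"
```

Computing the LI for both designs across a range of thresholds, and checking whether the label stays the same, is one simple way to make the design comparison less sensitive to a single arbitrary threshold.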

The Scientist's Toolkit

Table 2: Essential Research Reagents and Materials for fMRI Paradigm Development

| Item Name | Function / Description | Key Considerations |
|---|---|---|
| 3.0 Tesla MRI Scanner | High-field magnetic resonance imaging system for BOLD fMRI data acquisition. | Higher field strength provides improved signal-to-noise ratio [43]. |
| Echo-Planar Imaging (EPI) | Fast MRI sequence for capturing rapid whole-brain images during task performance. | Essential for measuring the temporal dynamics of the BOLD signal [43]. |
| Stimulus Presentation Software | Software to deliver visual, auditory, or other stimuli to the participant in the scanner. | Must be capable of precise timing synchronization with the scanner's TR and support jittered designs. |
| Response Recording Device | Apparatus (e.g., button box, fMRI-compatible microphone) to record participant behavior. | Critical for monitoring task performance and ensuring engagement; must be MRI-safe [43]. |
| High-Resolution Structural Sequence | T1-weighted 3D sequence (e.g., SPGR, MPRAGE) for anatomical reference. | Used for spatial normalization and localization of functional activations [43]. |
| Jittered ISI Protocol | A trial presentation sequence with randomized intervals between stimuli. | A key component of rapid event-related designs to improve efficiency and deconvolve HRFs [43]. |

Workflow and Signaling Diagrams

[Figure: Blocked vs. Event-Related Design Workflow]

[Figure: fMRI Reliability Optimization Pathway]

Contrast Selection and Parameterization Approaches for Improved Consistency

Frequently Asked Questions

Q1: Why is the test-retest reliability of my fMRI task activations poor, and how can contrast selection improve it?

Poor test-retest reliability can stem from several factors related to how brain activity is modeled and contrasted. To improve consistency, favor contrasts that capture stable, trait-like neural responses. Research indicates that both the magnitude and the type of contrast affect reliability. For instance, contrasts modeling the average BOLD response to a specific event (e.g., a simple decision vs. baseline) typically show greater reliability than those using parametrically modulated regressors (e.g., responses weighted by risk level) [44]. In addition, make sure your model handles the parameters known to drive analytical variability, such as appropriate motion regressors and hemodynamic response function (HRF) modeling [45].
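The difference between the two kinds of contrast is easiest to see in the regressors themselves. In the sketch below, one regressor gives every event the same height and models the average response. The other scales each event by a mean-centered modulator, such as trial-wise risk. To keep the example short, onsets are given in volume indices and HRF convolution is omitted. The inputs are hypothetical.

```python
import numpy as np


def event_regressors(onset_vols, modulator, n_vols):
    """Stick regressors (before HRF convolution) for the average response and a parametric modulator."""
    onset_vols = np.asarray(onset_vols, dtype=int)
    if np.unique(onset_vols).size != onset_vols.size:
        raise ValueError("onsets must fall in distinct volumes")
    mod = np.asarray(modulator, dtype=float)
    average = np.zeros(n_vols)
    average[onset_vols] = 1.0
    parametric = np.zeros(n_vols)
    # Mean-center so the modulated regressor carries only trial-to-trial variation.
    parametric[onset_vols] = mod - mod.mean()
    return average, parametric


# Hypothetical decision events at volumes 2, 5, 9 with risk levels 1, 2, 6.
avg, pmod = event_regressors([2, 5, 9], [1, 2, 6], n_vols=12)
```

Because the modulator is mean-centered, the two stick regressors are orthogonal before convolution. The average-response contrast captures the overall event response, while the parametric contrast captures only how the response varies around that average from trial to trial.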

Q2: What specific parameters in my subject-level GLM most impact the consistency of my results across sessions?

Your choices in the subject-level General Linear Model (GLM) are critical. Key parameters identified as major sources of analytical variability include [45]:

- Spatial Smoothing: The size of the smoothing kernel (FWHM) affects signal-to-noise ratio and spatial specificity.

- Motion Regressors: The number of motion parameters included as nuisance regressors (e.g., 6, 24, or none) can significantly alter results.

- HRF Modeling: Including HRF derivatives (temporal and/or dispersion) lets the model flexibly account for variations in the timing and shape of the hemodynamic response.

The combination of these choices, along with the software package used (e.g., FSL or SPM), can lead to substantially different group-level results, affecting the consistency of your findings [45].
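Because these choices multiply, even a small set of options yields many distinct pipelines. The sketch below enumerates a hypothetical grid built from the parameters discussed above: two software packages, two smoothing kernels, three motion models, and HRF derivatives on or off. It only builds the specifications. Running each configuration is left to your own processing tools. An explicit grid like this can be used to plan a sensitivity (multiverse) analysis or to document exactly which configuration was used.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class PipelineSpec:
    """One subject-level GLM configuration (hypothetical field names)."""
    software: str
    fwhm_mm: float
    n_motion_regressors: int
    hrf_derivatives: bool


def pipeline_grid(software=("FSL", "SPM"), fwhm_mm=(5.0, 8.0),
                  motion=(0, 6, 24), hrf_derivatives=(False, True)):
    """All combinations of the analysis choices, as immutable specs."""
    return [PipelineSpec(*combo)
            for combo in product(software, fwhm_mm, motion, hrf_derivatives)]


grid = pipeline_grid()
```

With the defaults, the grid contains 2 × 2 × 3 × 2 = 24 pipelines. The frozen dataclass makes each specification hashable, so results can be stored in a dictionary keyed by configuration.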

Q3: How can I design an fMRI task to maximize its long-term reliability for a longitudinal study or clinical trial?

To maximize long-term reliability, choose a task with minimal practice effects so that behavioral performance and its neural correlates remain stable over time. For example, a probabilistic classification learning task has shown high long-term (13-month) test-retest reliability in frontostriatal activation. The specific materials to be learned can be changed across sessions, which limits performance gains from practice [46]. Additionally, select task contrasts with empirically demonstrated reliability, such as the decision period in a risk-taking task (e.g., the Balloon Analogue Risk Task), which often shows higher reliability than the outcome evaluation period [44].

Troubleshooting Guides

Issue: Low Intra-class Correlation (ICC) in Key ROIs

Problem: The brain activation in your regions of interest (ROIs) is not stable across multiple scanning sessions for the same participants, leading to low ICC values.
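To diagnose the problem, compute ICC the same way across analyses. The sketch below implements two common Shrout and Fleiss forms from a two-way ANOVA decomposition of a subjects × sessions matrix. ICC(3,1) measures consistency and ignores systematic session offsets. ICC(2,1) measures absolute agreement and penalizes those offsets. Studies differ in which form they report, so check before comparing your values with published estimates. The input is assumed to be one ROI summary value (e.g., a mean contrast estimate) per subject and session, with no missing data.

```python
import numpy as np


def icc(y: np.ndarray) -> dict:
    """ICC(3,1) and ICC(2,1) for an (n_subjects, k_sessions) array with no missing values."""
    y = np.asarray(y, dtype=float)
    n, k = y.shape
    grand = y.mean()
    subj_means = y.mean(axis=1)
    sess_means = y.mean(axis=0)
    # Mean squares for subjects, sessions, and residual error.
    ms_subjects = k * ((subj_means - grand) ** 2).sum() / (n - 1)
    ms_sessions = n * ((sess_means - grand) ** 2).sum() / (k - 1)
    resid = y - subj_means[:, None] - sess_means[None, :] + grand
    ms_error = (resid ** 2).sum() / ((n - 1) * (k - 1))
    icc_3_1 = (ms_subjects - ms_error) / (ms_subjects + (k - 1) * ms_error)
    icc_2_1 = (ms_subjects - ms_error) / (
        ms_subjects + (k - 1) * ms_error + k * (ms_sessions - ms_error) / n
    )
    return {"ICC(3,1)": float(icc_3_1), "ICC(2,1)": float(icc_2_1)}


# Toy data: every subject shifts by +1 in session 2 (perfect consistency, imperfect agreement).
scores = icc(np.array([[1.0, 2.0], [2.0, 3.0], [3.0, 4.0]]))
```

In the toy example, ICC(3,1) is 1 while ICC(2,1) drops to 2/3 because of the uniform session shift. A large gap between the two forms on real data suggests a systematic session effect (e.g., practice or scanner drift) rather than unstable individual differences.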

Solution:

- Verify Contrast Design: Re-evaluate your primary contrast. Use a categorical "task vs. baseline" contrast instead of a parametrically modulated one if you are in the early stages of establishing reliability [44].

- Optimize ROI Definition:

  - Define your ROIs based on significant group-level activations from an independent localizer task or dataset, rather than using an entire anatomical parcel. Using only the significantly activated vertices within a parcel has been shown to improve reliability [44].

  - If using a pre-defined parcellation, ensure the regions are functionally relevant to your task.

- Control for Motion: Rigorously account for participant motion, as it has a documented moderate negative effect on test-retest reliability. Include a comprehensive set of motion parameters in your subject-level GLM (e.g., 24 regressors: the 6 rigid-body parameters, their temporal derivatives, and the squares of all 12) [45] [44].

- Check Analytical Pipeline: Ensure consistency in your processing pipeline across all sessions. Use containerized computing (e.g., Docker/Singularity) to guarantee identical software environments and versions [45].
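The 24-regressor motion model mentioned above, often called the Friston 24-parameter model, expands the six realignment parameters with their temporal differences and the squares of both sets. The sketch below assumes a (T, 6) parameter array in consistent units. It sets the first row of differences to zero, which is one common convention. Variants exist; some use the previous volume's parameters instead of differences. The random input is a placeholder for real realignment output.

```python
import numpy as np


def expand_motion_24(params: np.ndarray) -> np.ndarray:
    """(T, 6) realignment parameters -> (T, 24): params, differences, and their squares."""
    p = np.asarray(params, dtype=float)
    if p.ndim != 2 or p.shape[1] != 6:
        raise ValueError("expected a (T, 6) array")
    # Backward difference; the first volume has no predecessor, so use 0.
    d = np.vstack([np.zeros((1, 6)), np.diff(p, axis=0)])
    return np.hstack([p, d, p ** 2, d ** 2])


# Placeholder motion traces for a 100-volume run.
rng = np.random.default_rng(0)
confounds = expand_motion_24(rng.normal(scale=0.1, size=(100, 6)))
```

Keeping this expansion in a single function, run inside the same container for every session, is one way to follow the consistency advice above.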

Issue: High Analytical Variability Across Research Teams

Problem: Different research groups analyzing the same dataset arrive at different conclusions due to varying analytical pipelines.

Solution:

- Standardize Critical Parameters: Based on large-scale comparisons, explicitly define and report these parameters in your methods [45]:

- Software (FSL, SPM, AFNI)

- Spatial smoothing kernel size (e.g., 5 mm or 8 mm FWHM)

- Number of motion regressors (0, 6, or 24)

- HRF modeling (with or without derivatives)