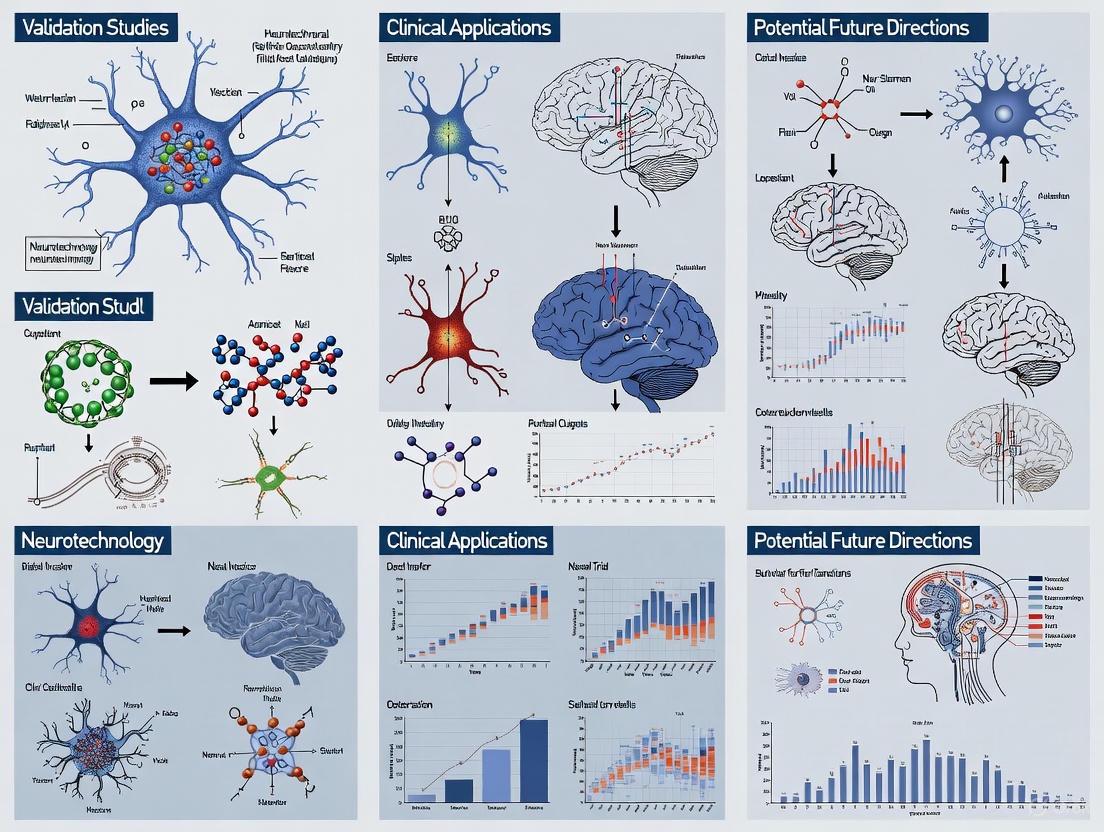

From Lab to Clinic: Validating Neurotechnology for Real-World Medical Applications

Abstract

This article provides a comprehensive roadmap for the clinical validation of neurotechnology, tailored for researchers, scientists, and drug development professionals. It explores the foundational principles of neurotechnology, including brain-computer interfaces (BCIs) and neuromodulation, and details the methodological approaches for their application in treating conditions from Parkinson's disease to paralysis. The content further addresses critical troubleshooting and optimization challenges, such as signal quality and data privacy, and concludes with robust frameworks for clinical validation and comparative analysis of emerging technologies, offering a holistic guide for translating innovative neurotechnologies into safe and effective clinical tools.

The Landscape of Modern Neurotechnology: Core Principles and Clinical Potential

Neurotechnology represents a rapidly advancing field dedicated to understanding the brain and developing treatments for neurological disorders. It encompasses a suite of tools for monitoring, interpreting, and modulating neural activity. This guide objectively compares the performance of key neurotechnology domains—neuroimaging, neuromodulation, and brain-computer interfaces (BCIs)—within the critical context of clinical validation research. For researchers and drug development professionals, validating the efficacy and reliability of these technologies is a foundational step in translating laboratory innovations into approved therapies. The following sections provide a structured comparison of their clinical applications, supported by experimental data and detailed methodologies, to inform robust validation study design.

Core Domains of Neurotechnology

Neurotechnology can be broadly categorized into three primary domains, each with distinct purposes, mechanisms, and clinical applications.

Neuroimaging: This domain involves technologies for visualizing brain structure and function. Its primary purpose is diagnosis and the provision of biofeedback. Modalities include Magnetic Resonance Imaging (MRI), functional MRI (fMRI), and Electroencephalography (EEG). A key clinical application is the AI-assisted detection of abnormalities from brain MRI scans for early diagnosis of tumors and other pathologies [1].

Neuromodulation: This involves technologies that alter neural activity through targeted stimulation. Its purpose is therapeutic treatment. Modalities include Transcranial Direct Current Stimulation (tDCS) and Functional Electrical Stimulation (FES). A prominent clinical application is upper limb motor recovery in stroke patients [2].

Brain-Computer Interfaces (BCIs): BCIs establish a direct communication pathway between the brain and an external device. Their purpose is to restore function and facilitate rehabilitation. They can be invasive (e.g., implanted chips) or non-invasive (e.g., EEG-based). BCIs are applied clinically to restore communication for individuals with severe paralysis and to drive neurorehabilitation after stroke [3] [4] [5].

The global market dynamics reflect the maturation of these fields. The broader neurotechnology sector is projected to grow from $15.77 billion in 2025 to nearly $30 billion by 2030. Within this, the BCI market specifically is projected to reach $1.27 billion in 2025 and grow to $2.11 billion by 2030, largely driven by demand in healthcare and rehabilitation [3].

Performance Comparison in Clinical Applications

Quantitative performance data is essential for evaluating the clinical viability of neurotechnologies. The following tables summarize key metrics from recent studies, focusing on two primary application areas: motor rehabilitation and diagnostic imaging.

Table 1: Performance in Post-Stroke Upper Limb Rehabilitation

This table compares the efficacy of various interventions, including BCIs, neuromodulation, and their combinations, as measured by the Fugl-Meyer Assessment for Upper Extremity (FMA-UE), a standard metric for motor function.

| Intervention | Comparison Intervention | Mean Difference (MD) in FMA-UE Score (95% CI) | Key Findings & Clinical Significance |
|---|---|---|---|
| BCI-FES [2] | Conventional Therapy (CT) | MD = 6.01 (2.19, 9.83) | Significantly superior to conventional therapy, indicating a clinically meaningful improvement in motor function. |
| BCI-FES [2] | FES alone | MD = 3.85 (2.17, 5.53) | Outperforms peripheral electrical stimulation alone, highlighting the value of central, intention-driven control. |
| BCI-FES [2] | tDCS alone | MD = 6.53 (5.57, 7.48) | Significantly more effective than non-invasive brain stimulation alone in this analysis. |
| BCI-FES + tDCS [2] | BCI-FES | MD = 3.25 (-1.05, 7.55) | Not statistically significant, but a positive trend suggests potential synergistic effects from combined modalities. |
| BCI-FES + tDCS [2] | tDCS | MD = 6.05 (-2.72, 14.82) | Not statistically significant, though the large MD suggests a potentially strong effect requiring further study. |

A network meta-analysis ranked these interventions by cumulative ranking probability for upper limb recovery: BCI-FES + tDCS came first (98.9%), followed by BCI-FES (73.4%), tDCS (33.3%), FES (32.4%), and Conventional Therapy (12.0%) [2]. The ranking suggests that integrated approaches are the most promising for neurorehabilitation, although the pairwise comparisons involving BCI-FES + tDCS in Table 1 did not reach statistical significance.
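To make the significance statements in Table 1 concrete, the sketch below checks whether each reported 95% interval excludes zero. The numbers are copied from Table 1; the helper names are illustrative, and using "interval excludes zero" as the significance criterion is an assumption about how the table's verdicts were reached, not a description of the cited analysis.

```python
# FMA-UE mean differences with their 95% interval bounds, copied from Table 1.
COMPARISONS = {
    "BCI-FES vs CT": (6.01, 2.19, 9.83),
    "BCI-FES vs FES": (3.85, 2.17, 5.53),
    "BCI-FES vs tDCS": (6.53, 5.57, 7.48),
    "BCI-FES + tDCS vs BCI-FES": (3.25, -1.05, 7.55),
    "BCI-FES + tDCS vs tDCS": (6.05, -2.72, 14.82),
}


def excludes_zero(lower: float, upper: float) -> bool:
    """True when the interval lies entirely above or entirely below zero."""
    return lower > 0 or upper < 0


def interval_width(lower: float, upper: float) -> float:
    """Width of the interval; wider intervals mean less precise estimates."""
    return upper - lower


for name, (md, lower, upper) in COMPARISONS.items():
    verdict = "excludes 0" if excludes_zero(lower, upper) else "includes 0"
    print(f"{name}: MD={md:.2f}, width={interval_width(lower, upper):.2f}, interval {verdict}")
```

The two combined-therapy rows have intervals that include zero and are also the widest, which is consistent with the table's note that those effects need further study.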

Table 2: Performance in Brain Abnormality Diagnosis via MRI

This table compares the performance of different AI/ML models in classifying normal versus abnormal brain MRI scans, a key application of neuroimaging.

| Model Type | Specific Model | Accuracy | Key Strengths & Limitations |
|---|---|---|---|
| Deep Learning (Transfer Learning) | ResNet-50 (with ImageNet weights) [1] | ~95% | Achieves high accuracy and F1-score; demonstrates the power of leveraging pre-trained models, especially with limited data. |
| Deep Learning (Custom) | Custom CNN [1] | High (exact % not specified) | Performs well and can be tailored to specific data characteristics, but may require more data than transfer learning. |
| Traditional Machine Learning | SVM (RBF kernel) [1] | Relatively Poor | Struggles to learn complex, high-dimensional features in image data compared to deep learning models. |
| Traditional Machine Learning | Random Forest [1] | Relatively Poor | Similar to SVM, insufficient for complex image characteristics without extensive feature engineering. |

It is crucial to interpret these results with caution. The cited study used a large, balanced synthetic dataset of 10,000 images to overcome the common challenge of limited and imbalanced real-world medical data [1]. Performance must be validated with real-world clinical MRI data before clinical application can be established.

Experimental Protocols for Validation

Robust experimental methodologies are the bedrock of clinical validation. Below are detailed protocols for key experiments cited in this guide.

Protocol 1: Validating BCI-FES for Stroke Rehabilitation

This protocol outlines a clinical trial framework for assessing the efficacy of a combined BCI-FES system [2].

- Participant Recruitment: Enroll adult stroke patients (≥1 month post-stroke) with upper limb motor dysfunction (Brunnstrom stage ≥ II). Exclude patients with severe cognitive impairment or complete paralysis.

- Study Design: Randomized Controlled Trial (RCT). Participants are randomly assigned to an intervention group (e.g., BCI-FES) or a control group (e.g., Conventional Therapy, FES alone, or tDCS alone).

- Intervention Protocol:

  - BCI-FES Setup: An EEG cap is placed on the patient's scalp to record brain signals. The BCI system is calibrated to detect movement intention from the motor cortex associated with the affected limb.

  - Training Sessions: During each session, when the system detects motor intention, it automatically triggers the FES to stimulate the paralyzed muscles, creating a closed-loop system.

  - Parameters: Typical sessions last 60-90 minutes, conducted 3-5 times per week for 4-8 weeks.

- Primary Outcome Measure: The Fugl-Meyer Assessment for Upper Extremity (FMA-UE) is administered before, immediately after, and at follow-up intervals after the intervention.

- Data Analysis: The mean difference (MD) in FMA-UE score change between groups is calculated and analyzed using a Bayesian framework for network meta-analysis to rank treatment efficacy.
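The between-group quantity named in the Data Analysis step can be sketched as follows. This is a minimal frequentist illustration, assuming a normal approximation for the 95% interval; it is not the Bayesian network meta-analysis used in [2]. All scores are hypothetical and the function names are illustrative.

```python
from math import sqrt
from statistics import mean, variance


def change_scores(pre, post):
    """Per-participant FMA-UE change (post minus pre)."""
    return [after - before for before, after in zip(pre, post)]


def mean_difference(treatment_change, control_change, z=1.96):
    """Mean difference in change scores with an approximate 95% interval.

    Uses unpooled sample variances and a normal critical value, which is
    crude for small groups; a t-based or Bayesian interval would be wider.
    """
    md = mean(treatment_change) - mean(control_change)
    se = sqrt(
        variance(treatment_change) / len(treatment_change)
        + variance(control_change) / len(control_change)
    )
    return md, md - z * se, md + z * se


# Hypothetical FMA-UE scores for five participants per arm.
bci_pre, bci_post = [20, 25, 18, 30, 22], [29, 33, 25, 38, 30]
ct_pre, ct_post = [21, 24, 19, 28, 23], [24, 27, 21, 32, 26]

md, lower, upper = mean_difference(
    change_scores(bci_pre, bci_post), change_scores(ct_pre, ct_post)
)
print(f"MD = {md:.2f} ({lower:.2f}, {upper:.2f})")
```

Analyzing change scores rather than post-treatment scores adjusts for baseline differences between arms, which is why the protocol administers the FMA-UE before and after the intervention.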

The following workflow diagram illustrates the closed-loop nature of this BCI-FES protocol:

Protocol 2: AI Model Development for MRI Classification

This protocol details the methodology for developing and validating a deep learning model to classify brain MRI images as normal or abnormal [1].

- Dataset Sourcing & Preprocessing:

  - Dataset: Use a large-scale dataset (e.g., 10,000 synthetic MRI images from the National Imaging System/AI Hub), with a balanced number of normal and abnormal cases.

  - Preprocessing: All images are normalized to a standard size. Data augmentation techniques (rotation, translation, flipping) are applied to the training set to increase diversity and reduce overfitting.

  - Data Splitting: The dataset is randomly split into training (80%), validation (10%), and test (10%) sets using stratified sampling to maintain class balance.

- Model Training & Comparison:

  - Deep Learning Models: A custom Convolutional Neural Network (CNN) is trained from scratch. A ResNet-50 model, pre-trained on ImageNet, is fine-tuned on the MRI dataset (transfer learning).

  - Traditional ML Models: Features are extracted from images, and classifiers like Support Vector Machine (SVM) with an RBF kernel and Random Forest are trained.

- Model Evaluation:

  - All models are evaluated on the held-out test set.

  - Performance metrics including accuracy, sensitivity, specificity, and F1-score are calculated.

- Validation: The top-performing model should undergo further validation using external, real-world clinical datasets to assess generalizability.
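Two steps of this protocol, the stratified 80/10/10 split and the evaluation metrics, can be sketched in plain Python. This is an illustration under stated assumptions, not the cited study's code: the function names, the fixed seed, and the toy predictions are hypothetical, and label 1 is assumed to mean "abnormal".

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions=(0.8, 0.1, 0.1), seed=0):
    """Split indices into train/val/test while preserving class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(fractions[0] * len(indices))
        n_val = round(fractions[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, sensitivity, specificity, and F1 from paired labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    denom = precision + sensitivity
    f1 = 2 * precision * sensitivity / denom if denom else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
    }


# Balanced toy labels mirroring the 10,000-image dataset described above.
labels = [0] * 5000 + [1] * 5000
train, val, test = stratified_split(labels)
print(len(train), len(val), len(test))

# Hypothetical predictions for eight test images.
metrics = binary_metrics([1, 1, 1, 0, 0, 0, 1, 0], [1, 1, 0, 0, 0, 1, 1, 0])
print(metrics)
```

Reporting sensitivity and specificity alongside accuracy matters more on real clinical data, where abnormal scans are usually the minority class and accuracy alone can look high for a model that misses them.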

The workflow for this AI model development process is shown below:

The Scientist's Toolkit: Research Reagent Solutions

Successful neurotechnology research relies on a suite of core tools and platforms. The following table details essential components for building and validating neurotechnology systems.

Table 3: Essential Research Tools and Platforms

| Tool Category | Specific Examples | Function in Research |
|---|---|---|
| Signal Acquisition Hardware | EEG systems with scalp caps [5], fMRI scanners [6], Implantable electrodes (e.g., from Paradromics, Synchron) [3] | Records raw neural data (electrical or hemodynamic) from the brain for subsequent analysis and decoding. |
| Stimulation Hardware | Functional Electrical Stimulation (FES) systems [2], Transcranial Direct Current Stimulation (tDCS) devices [2] | Applies targeted energy (electrical current) to modulate neural activity or directly activate muscles. |
| Computational & AI Platforms | Custom CNN architectures, Pre-trained models (ResNet-50) [1], SVM & Random Forest classifiers [1] | Processes and decodes complex neural signals; classifies data; generates control commands for external devices. |
| Data & Analysis Platforms | Public neuroimaging datasets (e.g., BraTS) [1], Bayesian analysis frameworks (e.g., gemtc in R) [2] | Provides standardized data for training and benchmarking; enables sophisticated statistical comparison of intervention efficacy. |
| Integrated BCI Software | Platforms from OpenBCI, Neurable [3] | Provides end-to-end software solutions for processing neural signals, implementing BCI paradigms, and connecting to output devices. |


Future Directions and Synthesis

The field of neurotechnology is moving toward multimodal integration, as evidenced by the superior ranking of combined BCI-FES and tDCS therapy [2]. The future of clinical validation will hinge on optimizing these synergistic protocols. Furthermore, artificial intelligence is now an indispensable component, driving advances from the analysis of neural signals in BCIs to the automated interpretation of medical images [7] [1] [8].

For researchers and drug development professionals, this signifies a strategic shift. Validating neurotechnologies requires a focus not only on standalone devices but also on how they combine to promote neuroplasticity. The integration of explainable AI (XAI) will be critical for building clinical trust [1]. As the market grows, successful translation will depend on rigorous, data-driven comparisons of these powerful tools, as outlined in this guide, to establish the evidence base required for regulatory approval and widespread clinical adoption.

The evolution of Brain-Computer Interfaces (BCIs) represents a transformative journey in neurotechnology, transitioning from fundamental observations of electrical activity in the brain to sophisticated systems that enable direct communication between the brain and external devices. This progression is characterized by critical milestones that have expanded our understanding of neural mechanisms while simultaneously advancing clinical applications for neurological disorders. The validation of these technologies within clinical research frameworks is paramount for translating laboratory innovations into tangible patient benefits, particularly for individuals with motor disabilities, speech impairments, and sensory deficits [4]. Modern BCI systems, whether non-invasive or invasive, operate on a core principle: establishing a direct pathway that converts neural signals into functional outputs, thereby changing the ongoing interactions between the brain and its external or internal environments [9]. This comparative guide objectively traces the historical trajectory of BCI development, with a specific focus on the technological and methodological shifts from early electroencephalography (EEG) to contemporary invasive neural interfaces, providing researchers and clinical professionals with a structured analysis of performance metrics, experimental protocols, and the essential toolkit driving this rapidly advancing field.

Historical Progression of BCI Technology

The development of brain-computer interfaces spans over a century, marked by foundational discoveries and technological breakthroughs that have progressively enhanced our ability to record and interpret neural signals.

Early Foundations and Non-Invasive EEG Beginnings

The conceptual origins of BCI technology are rooted in the 18th century with Luigi Galvani's pioneering experiments on bioelectricity, which demonstrated that electrical impulses could stimulate muscle contractions [10]. This foundational work paved the way for Richard Caton, who in 1875, first recorded electrical currents from the exposed cortical surfaces of rabbits and monkeys, providing the first evidence of brain electrical activity [10]. The single most significant milestone in non-invasive brain recording came in 1924 when German psychiatrist Hans Berger recorded the first human electroencephalogram (EEG), identifying the oscillating patterns known as "alpha waves" and establishing EEG as a viable tool for measuring brain activity [10]. The 1930s saw substantial refinements by Edgar Adrian and B.H.C. Matthews, who validated the correlation between rhythmic brain activity and function, while the 1950s and 1960s introduced critical standardization through Herbert Jasper's 10-20 system of electrode placement, which enhanced reproducibility and diagnostic accuracy in both clinical and research settings [11] [10]. The digital revolution of the 1970s and 1980s transformed EEG capabilities, enabling superior data storage, analysis, and signal processing, while the 1990s introduced high-density EEG (HD-EEG) systems that offered significantly improved spatial resolution for mapping brain functions [10].

The Shift to Invasive Neural Interfaces

While non-invasive EEG provided a safe and accessible method for monitoring brain activity, its limitations in signal resolution and specificity prompted the development of invasive interfaces for more sophisticated applications. The first major breakthrough in invasive BCIs was the development of the Utah array at the University of Utah in the 1980s [9] [12]. This device, a bed of 100 rigid needle-shaped electrodes, was first implanted in humans during clinical trials in the 1990s and became the gold standard for research, enabling individuals to control computers and robotic arms using their thoughts [12]. However, because the array penetrates brain tissue, it can provoke immune responses, scarring, and inflammation, resulting in a poor "butcher ratio", a term describing the number of neurons killed relative to the number recorded from [12]. These drawbacks catalyzed the next wave of innovation, leading to the formation of specialized companies like Blackrock Neurotech (2008) and Paradromics (2015), which sought to refine the invasive approach [9] [12]. The contemporary landscape, as of 2025, features a diverse ecosystem of companies pursuing distinct strategies to optimize the trade-offs between signal fidelity, safety, and invasiveness, including Neuralink, Synchron, Precision Neuroscience, and significant international efforts such as China's first-in-human clinical trial led by the Chinese Academy of Sciences [9] [13].

The following timeline visualizes the key technological and methodological shifts that have defined the evolution of BCI from its early foundations to the modern era:

Comparative Analysis of Modern BCI Approaches

Modern BCI systems can be broadly categorized into non-invasive and invasive approaches, each with distinct operational principles, performance characteristics, and clinical applications. The fundamental divide between these approaches represents a core trade-off between accessibility and signal quality [12].

Non-Invasive BCI Technologies

Non-invasive BCIs, primarily using electroencephalography (EEG), remain the most accessible form of brain-computer interfacing. These systems detect electrical activity from the scalp surface without any surgical intervention. Recent work has pushed their capabilities further; for instance, a 2025 study published in Nature Communications achieved real-time robotic hand control at the individual finger level using EEG-based motor imagery [14]. The study involved 21 able-bodied participants and achieved decoding accuracies of 80.56% for two-finger tasks and 60.61% for three-finger tasks using a deep neural network architecture, specifically EEGNet-8,2, with fine-tuning improving performance across sessions [14]. Other non-invasive modalities include functional near-infrared spectroscopy (fNIRS), which uses light to measure blood flow changes in the brain, and magnetoencephalography (MEG), which detects magnetic fields generated by neural activity [15] [12]. While these methods avoid the risks of surgery, they face inherent challenges such as signal attenuation from the skull and scalp, limited spatial resolution, and a lower signal-to-noise ratio compared to invasive methods [14].

Invasive BCI Technologies

Invasive BCIs involve the surgical implantation of electrode arrays directly into or onto the brain tissue, providing superior signal quality and spatial resolution by bypassing the signal-filtering effects of the skull [12]. As of mid-2025, multiple venture-backed companies are advancing diverse invasive approaches through clinical trials [9]:

- Neuralink: Develops a coin-sized implant with thousands of micro-electrodes threaded into the cortex by a robotic surgeon. As of June 2025, the company reported that five individuals with severe paralysis are using the device to control digital and physical devices with their thoughts [9].

- Synchron: Employs a minimally invasive endovascular approach with its Stentrode device, which is delivered through the jugular vein and lodged in a vein that drains the motor cortex. This method avoids craniotomy and has been tested in multiple patients who used it to control computers for texting and other functions [9] [12].

- Precision Neuroscience: Co-founded by a Neuralink alumnus, this company developed an ultra-thin electrode array called "Layer 7" that is designed to be inserted through a small slit in the dura mater, conforming to the cortical surface without penetrating brain tissue. In April 2025, it received FDA 510(k) clearance for commercial use with implantation durations of up to 30 days [9].

- Chinese BCI Initiative: In March 2025, a collaborative team from the Chinese Academy of Sciences and Huashan Hospital launched China's first-in-human clinical trial of an invasive BCI. The device features ultra-flexible neural electrodes "approximately 1% of the diameter of a human hair" and is about half the size of Neuralink's implant, according to the research team. The system completes the entire decoding process within tens of milliseconds, and the team aims to enable robotic arm control with the potential for market entry by 2028 [13].

The table below provides a structured comparison of the key performance metrics and characteristics of these modern BCI approaches:

Table 1: Performance Comparison of Modern BCI Technologies

| Technology / Company | Signal Type & Invasiveness | Key Performance Metrics | Primary Clinical Applications | Notable Advantages |
|---|---|---|---|---|
| EEG-based BCI [14] | Non-invasive (Scalp EEG) | 80.56% accuracy (2-finger), 60.61% (3-finger); Latency: Real-time | Motor rehabilitation, robotic control, communication | Completely non-invasive, portable, low-cost, established safety profile |
| Neuralink [9] | Invasive (Cortical microelectrodes) | High-bandwidth, thousands of recording channels; 5 human patients as of June 2025 | Severe paralysis, motor control, communication | Ultra-high channel count, high spatial and temporal resolution |
| Synchron [9] [12] | Minimally Invasive (Endovascular) | Stable long-term recordings; No serious adverse events in 4-patient trial over 12 months | Paralysis, computer control for texting and communication | Avoids open-brain surgery, lower surgical risk, zero "butcher ratio" |
| Precision Neuroscience [9] | Invasive (Epicortical surface array) | FDA 510(k) cleared for up to 30-day implantation (April 2025) | Communication for ALS patients, motor control | "Peel and stick" implantation, minimal tissue damage, high-resolution signals |
| Chinese BCI (CEBSIT) [13] | Invasive (Ultra-flexible electrodes) | Decoding completed within tens of milliseconds; Device about half the size of Neuralink's implant | Spinal cord injury, amputations, ALS | Minimal tissue damage, miniaturized form factor, rapid decoding |

Experimental Protocols and Methodologies

The validation of BCI technologies, particularly for clinical applications, relies on rigorous experimental protocols designed to assess both safety and functional efficacy. The methodologies vary significantly between non-invasive and invasive approaches but share common elements of signal acquisition, processing, and output generation.

Protocol for Non-Invasive EEG BCI (Robotic Hand Control)

A landmark 2025 study demonstrated real-time, individual finger control of a robotic hand using non-invasive EEG [14]. The experimental workflow involved multiple systematic stages:

- Participants and Task Design: The study involved 21 able-bodied participants with prior BCI experience. Each participant performed both Movement Execution (ME) and Motor Imagery (MI) of individual fingers (thumb, index, pinky) on their dominant right hand.

- Signal Acquisition: EEG signals were acquired using a high-density electrode cap following the standard 10-20 placement system. The recording parameters included appropriate sampling rates and bandpass filtering to capture relevant neural oscillatory activity.

- Signal Processing and Decoding: Decoding used the EEGNet-8,2 architecture, a compact convolutional neural network designed for EEG-based BCI systems. The model was first pre-trained on a base dataset and then fine-tuned using same-day data from the first half of each online session to address inter-session variability.

- Real-Time Control and Feedback: The decoded output was converted into control commands for a robotic hand. Participants received two forms of feedback: (1) visual feedback on a screen where the target finger changed color (green for correct, red for incorrect), and (2) physical feedback from the robotic hand, which moved the corresponding finger in real time. The feedback period began one second after trial onset.

- Performance Validation: Task performance was quantified using majority voting accuracy, calculated as the percentage of trials where the predicted class (based on the majority vote of classifier outputs over multiple segments) matched the true target class. Precision and recall for each finger class were also computed to evaluate decoding robustness [14].
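The majority voting accuracy described in the Performance Validation step can be sketched as below, along with per-class precision and recall. The segment predictions and targets are hypothetical, the function names are illustrative, and the tie-breaking rule (first label seen wins) is an assumption, since the text does not say how ties were resolved.

```python
from collections import Counter


def majority_vote(segment_predictions):
    """Most frequent predicted class across a trial's segments.

    Counter.most_common keeps insertion order among equal counts,
    so ties go to the label that appeared first.
    """
    return Counter(segment_predictions).most_common(1)[0][0]


def majority_voting_accuracy(trials, targets):
    """Fraction of trials whose majority-vote class matches the target."""
    hits = sum(majority_vote(segs) == target for segs, target in zip(trials, targets))
    return hits / len(targets)


def precision_recall(predicted, targets, cls):
    """Precision and recall for one class from trial-level predictions."""
    pairs = list(zip(predicted, targets))
    tp = sum(p == cls and t == cls for p, t in pairs)
    n_predicted = sum(p == cls for p in predicted)
    n_actual = sum(t == cls for t in targets)
    precision = tp / n_predicted if n_predicted else 0.0
    recall = tp / n_actual if n_actual else 0.0
    return precision, recall


# Hypothetical classifier outputs: three segments per trial, four trials.
trials = [
    ["thumb", "thumb", "index"],
    ["index", "pinky", "index"],
    ["pinky", "thumb", "thumb"],
    ["pinky", "pinky", "pinky"],
]
targets = ["thumb", "index", "pinky", "pinky"]

votes = [majority_vote(segs) for segs in trials]
print(votes)
print(majority_voting_accuracy(trials, targets))
print(precision_recall(votes, targets, "thumb"))
```

Voting across segments smooths out isolated misclassifications within a trial, so trial-level accuracy is typically higher than segment-level accuracy when errors are not systematic.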

Protocol for Invasive BCI Clinical Trials

Invasive BCI trials follow stringent clinical and regulatory protocols focused on patient safety and device functionality. The recent Chinese first-in-human trial provides a representative model of this process [13]:

- Patient Selection and Surgical Planning: The first participant was a male who lost all four limbs in an electrical accident 13 years prior. Preoperative planning involved multiple imaging and positioning methods to identify the precise target region in the motor cortex, with planning accuracy required to the millimeter.

- Minimally Invasive Implantation: The surgical team utilized minimally invasive neurosurgical techniques to reduce risks and shorten recovery time. The ultra-flexible neural electrodes (approximately 1% the diameter of a human hair) were implanted into the designated motor cortex area using high-precision navigation.

- Signal Acquisition and Device Operation: The coin-sized implant (26mm diameter, <6mm thick) was designed to acquire high-fidelity single-neuron signals stably. The system's core functionality is real-time decoding, completing the entire process of neural signal extraction, movement intent interpretation, and control command generation within tens of milliseconds.

- Safety and Efficacy Monitoring: Post-implantation, the patient's health and device function were continuously monitored. As of the report, the device had operated stably with no infection or electrode failure. The functional outcome was demonstrated by the patient's ability to play chess and racing games using only his mind.

- Functional Progression and Long-Term Goals: Following initial validation, the research team planned to progress to more complex tasks, including controlling a robotic arm to grasp objects and eventually exploring control of complex devices like robot dogs to expand the patient's life boundaries [13].

The following diagram illustrates the core signal processing workflow common to both invasive and non-invasive BCI systems, highlighting the closed-loop nature of modern BCIs:

The Scientist's Toolkit: Essential Research Reagents and Materials

The advancement and validation of BCI technologies rely on a sophisticated ecosystem of hardware, software, and analytical tools. The following table details key components of the modern BCI research toolkit, their specific functions, and their relevance to experimental protocols.

Table 2: Essential Research Tools and Reagents for BCI Development and Validation

| Tool/Reagent Category | Specific Examples | Function & Application in BCI Research |
|---|---|---|
| Electrode Technologies | Wet/Gel Electrodes [15], Dry Electrodes [15], Utah Array [9] [12], Ultra-Flexible Micro-Electrodes [13] | Signal acquisition; Dry electrodes improve usability for consumer applications, while flexible micro-electrodes minimize tissue damage in invasive BCIs. |
| Signal Acquisition Systems | High-Density EEG Systems [10], Neuroelectrics Starstim & Enobio [10], Blackrock Neurotech Acquisition Systems [9] | Amplification, digitization, and initial processing of raw neural signals; HD-EEG provides improved spatial resolution for non-invasive mapping. |
| Decoding Algorithms | EEGNet & Variants [14], Support Vector Machines (SVM) [16], Long Short-Term Memory (LSTM) Networks [16] | Feature extraction and classification of neural signals; Deep learning models (e.g., EEGNet) automatically learn features from raw data, boosting performance. |
| Validation Metrics | Classification Accuracy [16] [14], Precision & Recall [14], Latency (Milliseconds) [13], "Butcher Ratio" [12] | Quantifying BCI performance and safety; Accuracy and latency measure efficacy, while the "butcher ratio" assesses invasiveness and tissue damage. |
| Clinical Trial Platforms | FDA IDE (Investigational Device Exemption) [9], First-in-Human Trial Protocols [13], Integrated Human Brain Research Networks [17] | Regulatory frameworks for translating devices from lab to clinic; Ensure patient safety, ethical standards, and scientific rigor during clinical validation. |


The historical evolution from early EEG to modern invasive BCIs reveals a clear trajectory toward higher-fidelity neural interfaces with expanding clinical applications. The field stands in 2025 at a pivotal juncture, comparable to where gene therapies were in the 2010s, poised on the cusp of transitioning from experimental research to regulated clinical use [9]. Future validation efforts will be shaped by several key trends. The integration of artificial intelligence and deep learning will continue to enhance decoding algorithms, potentially bridging the performance gap between non-invasive and invasive methods [16] [14]. Furthermore, the development of personalized digital prescription systems that deliver customized therapeutic strategies via digital platforms represents a promising frontier for clinical neurotechnology [4]. Large-scale government initiatives, such as the NIH BRAIN Initiative, continue to play a crucial role in accelerating this progress by fostering interdisciplinary collaborations and addressing the ethical implications of neuroscience research [17]. As these technologies mature, the focus for researchers and clinical professionals will increasingly shift toward standardized validation protocols, long-term safety studies, and the development of robust regulatory pathways that ensure these revolutionary interfaces can safely and effectively improve the lives of patients with neurological disorders.

Neurotechnology encompasses a suite of methods and electronic devices that interface with the nervous system to monitor or modulate neural activity [18]. This field has evolved from foundational discoveries in the 18th century to sophisticated systems that now enable direct communication between the brain and external devices [18]. Brain-Computer Interfaces (BCIs), a remarkable technological advancement in neurology and neurosurgery, effectively convert central nervous system signals into commands for external devices, offering revolutionary benefits for patients with severe communication and motor impairments [19]. These systems create direct communication pathways that bypass normal neuromuscular pathways, allowing interaction through thought alone [18]. The architecture of any BCI consists of four sequential components: signal acquisition (measuring brain signals), feature extraction (distinguishing relevant signal characteristics), feature translation (converting features into device commands), and device output (executing functions like cursor movement or prosthetic control) [18].
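The four-stage architecture described above can be pictured as a chain of functions, one per stage. The sketch below is purely illustrative: the sample values, the mean-absolute-amplitude feature, the 0.5 threshold, and the command names are all hypothetical stand-ins, not parts of any real BCI system.

```python
from statistics import mean
from typing import Dict, List


def acquire_signal() -> List[float]:
    """Stage 1, signal acquisition: return raw samples (hypothetical values)."""
    return [0.1, 0.4, -0.2, 0.9, 1.1, 0.8]


def extract_features(samples: List[float]) -> Dict[str, float]:
    """Stage 2, feature extraction: reduce samples to one summary feature."""
    return {"mean_abs_amplitude": mean(abs(s) for s in samples)}


def translate_features(features: Dict[str, float], threshold: float = 0.5) -> str:
    """Stage 3, feature translation: map the feature to a device command."""
    return "move_cursor" if features["mean_abs_amplitude"] > threshold else "idle"


def device_output(command: str) -> str:
    """Stage 4, device output: stand-in for driving a cursor or prosthesis."""
    return f"device executes: {command}"


def run_pipeline(samples: List[float]) -> str:
    """Run stages 2 to 4 on already-acquired samples."""
    return device_output(translate_features(extract_features(samples)))


print(run_pipeline(acquire_signal()))
```

Keeping each stage behind its own function mirrors how real systems are validated: acquisition hardware, decoders, and output devices can be swapped or tested independently as long as the data passed between stages keeps the same shape.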

The classification of neural interfaces primarily revolves around their level of invasiveness and anatomical placement, which directly correlates with their signal quality, spatial resolution, and risk profile. Non-invasive systems are positioned on the scalp surface and represent the safest but lowest-fidelity approach. Partially invasive systems are implanted within the skull but rest on the brain surface without penetrating neural tissue. Fully invasive systems penetrate the brain parenchyma to record from individual neurons, offering the highest signal quality at the cost of greater surgical risk and potential for scar tissue formation [18]. This technological spectrum presents researchers and clinicians with critical trade-offs between signal fidelity, safety, and practical implementation that must be carefully balanced for specific applications.

Comparative Analysis of Neurotechnology Systems

The selection of an appropriate neural interface methodology requires careful consideration of technical specifications, performance characteristics, and implementation challenges. The tables below provide a comprehensive comparison of the three major categories of neurotechnology systems across multiple dimensions relevant to research and clinical applications.

Table 1: Technical Specifications and Performance Benchmarks

| Parameter | Non-Invasive Systems | Partially Invasive Systems | Fully Invasive Systems |
|---|---|---|---|
| Spatial Resolution | 1-10 cm (limited by skull/skin) [18] | 1-10 mm (higher than EEG) [18] | 50-500 μm (single neuron level) [18] |
| Temporal Resolution | Millisecond level (excellent) [18] | Millisecond level (excellent) | Millisecond level (excellent) |
| Signal Fidelity | Low (attenuated by skull/skin) [18] | Medium (superior signal-to-noise) [18] | High (direct neural recording) [18] |
| Primary Technologies | EEG, fNIRS, MEG [15] [18] | ECoG, Stentrode [9] [18] | Utah Array, Neuralace, Microelectrodes [15] [9] |
| Penetration Depth | Superficial (scalp surface) | Cortical surface (subdural) [18] | Brain parenchyma [18] |
| Risk Profile | Minimal risk [3] | Moderate risk (surgical implantation) [3] | High risk (tissue damage, scarring) [18] |

Table 2: Research and Clinical Implementation Considerations

| Consideration | Non-Invasive Systems | Partially Invasive Systems | Fully Invasive Systems |
|---|---|---|---|
| Target Applications | Research, neurofeedback, sleep monitoring, consumer applications [15] [3] | Speech decoding, motor control, epilepsy monitoring [9] [20] | Paralysis treatment, advanced motor control, neural decoding [19] [9] |
| Regulatory Status | Widely approved for clinical and consumer use [3] | FDA Breakthrough Designations (e.g., Synchron) [3] [9] | Experimental (human trials ongoing) [9] |
| Market Share (2024) | ~76.5% of BCI market [3] | Emerging segment | Niche (research-focused) |
| Longevity/Stability | Stable for short-term use | Months to years [9] | Years (but signal degradation possible) [18] |
| Key Advantages | Safety, accessibility, ease of use [3] | Balance of signal quality and safety [9] | Highest bandwidth and precision [9] |
| Key Limitations | Poor spatial resolution, noise susceptibility [18] | Limited to surface signals, surgical risk [9] | Tissue damage, scar formation, highest risk [18] |

Table 3: Representative Companies and Platforms by Interface Type

| Interface Category | Representative Companies/Platforms | Technology Specifics | Development Stage |
|---|---|---|---|
| Non-Invasive | OpenBCI, Neurable, NextMind (Snap Inc.) [3] | EEG-based headsets | Consumer/Research |
| Partially Invasive | Synchron (Stentrode) [9], Precision Neuroscience (Layer 7) [9] | Endovascular stent electrodes [9], cortical surface film [9] | Human trials [9], FDA clearance for temporary use [9] |
| Fully Invasive | Neuralink [15] [9], Blackrock Neurotech [15] [9], Paradromics [3] [9] | Utah array [9], neural threads [9], high-channel-count arrays [9] | Human trials [9] |

Experimental Protocols for Neural Signal Decoding

General BCI Workflow and Signal Processing

The fundamental experimental workflow for brain-computer interfaces follows a standardized sequence from signal acquisition to device output, with variations depending on the specific technology platform. The diagram below illustrates this core processing pipeline, which is consistent across invasive, partially invasive, and non-invasive systems, though implementation details differ significantly.

This closed-loop design forms the foundation for most modern BCI experiments. In the signal acquisition phase, different recording technologies capture neural activity: EEG systems use scalp electrodes (typically 1-128 channels), ECoG systems employ electrode grids or strips placed on the cortical surface (20-256 channels), and invasive microelectrode arrays record from dozens to thousands of individual neurons [18]. The preprocessing stage applies bandpass filtering (typically 0.5-300 Hz for EEG/ECoG, 300-7500 Hz for spike sorting), removes artifacts (e.g., ocular, muscular, or line noise), and segments data into analysis epochs. Feature extraction transforms raw signals into meaningful neural representations, which may include power spectral densities in standard frequency bands (theta: 4-8 Hz, alpha: 8-12 Hz, beta: 13-30 Hz, gamma: 30-200 Hz), spike rates and waveforms, or cross-channel coherence metrics [20].
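
The feature extraction step described above can be illustrated with a minimal sketch. It assumes a single preprocessed epoch held as a plain Python list and uses a naive discrete Fourier transform so that it has no dependencies; a real pipeline would more likely use a dedicated estimator such as Welch's method from a signal-processing library. The band edges are the ones listed in the text, while the sampling rate, the synthetic signal, and all function names are hypothetical.

```python
import cmath
import math

# Frequency bands (Hz) as listed in the text.
BANDS = {"theta": (4, 8), "alpha": (8, 12), "beta": (13, 30), "gamma": (30, 200)}


def power_spectrum(signal, fs):
    """Return (freqs, power) of the one-sided spectrum using a naive DFT."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # remove the DC offset
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(centered)
        )
        freqs.append(k * fs / n)
        power.append(abs(coeff) ** 2 / n)
    return freqs, power


def band_powers(signal, fs, bands=BANDS):
    """Sum spectral power inside each band (lower edge inclusive)."""
    freqs, power = power_spectrum(signal, fs)
    return {
        name: sum(p for f, p in zip(freqs, power) if lo <= f < hi)
        for name, (lo, hi) in bands.items()
    }


# Synthetic one-second epoch (assumed 500 Hz sampling): a strong 20 Hz
# component (beta) plus a weaker 6 Hz component (theta).
fs = 500
epoch = [
    math.sin(2 * math.pi * 20 * t / fs) + 0.3 * math.sin(2 * math.pi * 6 * t / fs)
    for t in range(fs)
]
features = band_powers(epoch, fs)
dominant_band = max(features, key=features.get)
print(dominant_band, features)
```

The resulting dictionary is one possible per-epoch feature vector; spike rates or coherence metrics would need separate estimators.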

Cross-Patient Decoding Protocol Using Connectomics

Recent advances in generalizable neural decoding have addressed a critical limitation of traditional BCI systems: their reliance on patient-specific training data. The py_neuromodulation platform represents a methodological innovation that enables cross-patient decoding through connectomic mapping [20]. The experimental protocol for this approach involves several sophisticated steps that integrate neuroimaging with electrophysiological signal processing.

The experimental methodology begins with electrode localization using pre- or post-operative magnetic resonance imaging (MRI) coupled with computed tomography (CT) scans to co-register recording contacts to standard Montreal Neurological Institute (MNI) space. This spatial normalization enables normative connectome mapping, where each electrode's location is enriched with structural connectivity data from diffusion tensor imaging (DTI) tractography and/or functional connectivity from resting-state fMRI databases. Researchers then perform performance-connectivity correlation analysis to identify network "fingerprints" predictive of successful decoding—for movement decoding, this typically reveals optimal connectivity to primary sensorimotor cortex, supplementary motor area, and thalamocortical pathways [20].

The core innovation lies in a priori channel selection, where individual recording channels are selected based on their network similarity to the optimal template rather than patient-specific calibration. Finally, feature embedding using contrastive learning approaches (e.g., 5-layer convolutional neural networks with InfoNCE loss function) transforms neural features into lower-dimensional representations that show exceptional consistency across participants [20]. This protocol has demonstrated significant above-chance decoding accuracy for movement detection (rest vs. movement) without patient-specific training across Parkinson's disease and epilepsy cohorts, achieving balanced accuracy of 0.8 and movement detection rate of 0.98 in the best channel per participant [20].
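
The idea of a priori channel selection can be sketched as a similarity ranking. The example below assumes each recording contact is described by a short connectivity "fingerprint" (connection strength to a handful of regions) and that an optimal template is already available from the performance-connectivity analysis. Pearson correlation is used here as one plausible similarity measure; the source does not state which measure the platform uses, and all channel names and values are invented for illustration.

```python
import math


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def select_channel(fingerprints, template):
    """Rank channels by similarity to the template and return the best one."""
    scores = {ch: pearson(fp, template) for ch, fp in fingerprints.items()}
    best = max(scores, key=scores.get)
    return best, scores


# Hypothetical template: connectivity to sensorimotor cortex, SMA,
# thalamus, and an unrelated control region.
template = [0.9, 0.7, 0.6, 0.1]

# Hypothetical fingerprints for three contacts in a new patient.
fingerprints = {
    "ECOG_1": [0.8, 0.6, 0.5, 0.2],
    "ECOG_2": [0.1, 0.2, 0.3, 0.9],
    "ECOG_3": [0.5, 0.5, 0.4, 0.4],
}

best_channel, similarity = select_channel(fingerprints, template)
print(best_channel, similarity)
```

Because the ranking depends only on imaging-derived connectivity, the channel can be chosen before any patient-specific electrophysiological calibration data are recorded.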

Key Experimental Considerations and Controls

When implementing BCI experiments, researchers must account for several critical factors that significantly impact decoding performance:

- Disease Severity Effects: In Parkinson's disease studies, movement decoding performance shows a significant negative correlation with clinical symptom severity (UPDRS-III; Spearman's rho = -0.36; P = 0.02), suggesting that neurodegeneration impacts neural encoding [20].

- Stimulation Artifacts: Therapeutic deep brain stimulation (130 Hz STN-DBS) can deteriorate sample-wise decoding performance, and models trained separately for OFF and ON stimulation conditions outperform models trained on either condition alone [20].

- Validation Methodologies: Rigorous cross-validation strategies are essential, including within-participant temporal validation, leave-one-movement-out validation, and, critically for clinical translation, leave-one-participant-out or even leave-one-cohort-out validation [20].

- Performance Metrics: Balanced accuracy is preferred over raw accuracy for imbalanced datasets (e.g., more rest than movement samples), complemented by movement detection rates defined as 300 ms of consecutive movement classification [20].
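
The two performance metrics in the last item can be sketched as follows. The example assumes binary sample-wise labels (0 = rest, 1 = movement) and a hypothetical feature rate of one sample every 100 ms; the helper names are illustrative and not taken from any library. The detection check is applied to a single movement segment, which simplifies how a per-movement detection rate would be aggregated over a session.

```python
import math


def balanced_accuracy(y_true, y_pred):
    """Mean of movement recall (sensitivity) and rest recall (specificity)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    return 0.5 * (tp / n_pos + tn / n_neg)


def movement_detected(y_pred, sample_period_ms, min_duration_ms=300):
    """True if predictions contain >= min_duration_ms of consecutive movement."""
    needed = math.ceil(min_duration_ms / sample_period_ms)
    run = 0
    for p in y_pred:
        run = run + 1 if p == 1 else 0
        if run >= needed:
            return True
    return False


# Imbalanced toy data: 8 rest samples, 2 movement samples.
y_true = [0] * 8 + [1] * 2

# A classifier that always predicts rest looks good on raw accuracy
# (8 of 10 correct) but is at chance on balanced accuracy.
always_rest = [0] * 10
raw_acc = sum(t == p for t, p in zip(y_true, always_rest)) / len(y_true)
bal_acc = balanced_accuracy(y_true, always_rest)
print(raw_acc, bal_acc)

# Detection within one movement segment, assuming 100 ms per sample.
print(movement_detected([0, 1, 1, 0, 1, 1, 1, 0], sample_period_ms=100))
print(movement_detected([1, 1, 0, 1, 1, 0], sample_period_ms=100))
```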

Research Reagent Solutions for Neural Interface Studies

The experimental workflows described above rely on specialized tools, platforms, and methodologies that constitute the essential "research reagent solutions" for neural interface studies. The table below details key resources available to researchers in this field.

Table 4: Essential Research Tools and Platforms for Neural Interface Studies

| Resource Category | Specific Tools/Platforms | Primary Function | Research Application |
|---|---|---|---|
| Signal Processing Platforms | py_neuromodulation [20] | Modular feature extraction for invasive neurophysiology | Machine learning-based brain signal decoding |
| Digital Brain Atlases | EBRAINS Research Infrastructure [21] | Multiscale computational modeling, digital brain twins | Personalizing virtual brain models for clinical applications |
| Electrode Technologies | Utah Array (Blackrock) [9], Neural Threads (Neuralink) [9], Stentrode (Synchron) [9] | Neural signal acquisition at various resolutions | Chronic recording, BCI control, clinical neuromodulation |
| Feature Extraction Methods | Oscillatory dynamics, waveform shape, aperiodic activity, Granger causality, phase amplitude coupling [20] | Quantifying diverse aspects of neural signaling | Identifying biomarkers for brain states and behaviors |
| Normative Connectomes | HCP, UK Biobank, local database derivatives [20] | Providing standardized structural/functional connectivity maps | Cross-patient decoding, target identification for neuromodulation |
| Clinical BCI Platforms | BrainGate [18], Neuralink Patient Registry [9] | Feasibility studies in human participants | Translational research for severe neurological conditions |

Clinical Applications and Validation Studies

Medical Applications with Strongest Evidence

The most clinically validated application of neurotechnology to date is Deep Brain Stimulation (DBS), which received FDA approval for essential tremor in 1997, Parkinson's disease in 2002, and dystonia in 2003 [18]. DBS employs surgically implanted electrodes that deliver electrical current to precise brain regions, effectively reducing tremors and other Parkinson's symptoms [18]. For individuals with paralysis, the BrainGate clinical trial—the largest and longest-running BCI trial—has reported positive safety results in patients with quadriparesis from spinal cord injury, brainstem stroke, and motor neuron disease [18]. Recent advances include speech BCIs that infer words from complex brain activity at 99% accuracy with <0.25 second latency, enabling communication for completely locked-in patients [9].

The clinical translation landscape has accelerated dramatically, with numerous companies conducting human trials as of 2025. Neuralink reports that five individuals with severe paralysis are now using its interface to control digital and physical devices [9]. Synchron has implanted its Stentrode device in patients who can control computers, including texting, using thought alone, with no serious adverse events at 12-month follow-up [9]. Blackrock Neurotech has the most extensive human implantation experience, with its Utah array helping patients with paralysis gain mobility and independence [18].

Emerging Clinical Applications

Beyond motor restoration, neurotechnology shows promise for several emerging clinical applications:

- Psychiatric Disorders: Connectomics-informed decoding has revealed network targets for emotion decoding in left prefrontal and cingulate circuits in deep brain stimulation patients with major depression [20].

- Epilepsy Management: Closed-loop neurostimulation systems can detect seizure precursors and deliver responsive stimulation, with decoding approaches showing opportunities to improve seizure detection in responsive neurostimulation [20].

- Stroke Rehabilitation: BCIs are being investigated to facilitate neural plasticity and recovery of motor function after stroke by creating artificial connections between brain activity and peripheral stimulation or robotic assistance.

- Sleep Disorders: Neurotechnology applications include deep brain stimulation for treatment-resistant sleep disorders and closed-loop systems that modulate neural circuits based on sleep-stage detection.

Validation Frameworks and Regulatory Considerations

The validation of neurotechnologies for clinical applications requires rigorous frameworks that address both efficacy and safety. The FDA Breakthrough Device designation has been granted to multiple BCI companies, including Paradromics, reflecting recognition of the potential for these technologies to address unmet needs in life-threatening or irreversibly debilitating conditions [3] [9]. Clinical validation typically proceeds through staged feasibility studies—first testing safety and basic functionality in small patient cohorts, then expanding to demonstrate clinical benefits in controlled trials.

For invasive technologies, long-term safety profiles are particularly important, as tissue response to chronic implantation can lead to signal degradation over time due to glial scarring [18]. The development of standardized performance metrics is essential for cross-technology comparisons, with parameters such as information transfer rate (bits per minute), accuracy, latency, and longevity serving as key benchmarks [19]. As the field progresses toward broader clinical adoption, regulatory science must evolve to address unique challenges in neural interfaces, including the ethics of neuroenhancement, brain data privacy, and appropriate use of brain data in various applications [3] [18].
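
As a worked example of the information transfer rate benchmark, the sketch below uses the Wolpaw formulation, which is a commonly used definition in the BCI literature; the source does not say which definition it has in mind, so this choice is an assumption. For N equally likely targets and selection accuracy P, bits per selection are log2(N) + P·log2(P) + (1 − P)·log2((1 − P)/(N − 1)), and multiplying by selections per minute gives bits per minute.

```python
import math


def bits_per_selection(n_targets, accuracy):
    """Wolpaw information per selection for n equally likely targets."""
    if n_targets < 2 or not 0.0 <= accuracy <= 1.0:
        raise ValueError("need n_targets >= 2 and 0 <= accuracy <= 1")
    bits = math.log2(n_targets)
    if accuracy > 0.0:  # the P*log2(P) term vanishes at P = 0
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:  # the error term vanishes at P = 1
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_targets - 1))
    return bits


def itr_bits_per_minute(n_targets, accuracy, selections_per_minute):
    """Information transfer rate in bits per minute."""
    return bits_per_selection(n_targets, accuracy) * selections_per_minute


# Hypothetical comparison: a 4-target interface at perfect accuracy versus
# a 2-target interface at chance level, both at 10 selections per minute.
print(itr_bits_per_minute(4, 1.0, 10))
print(itr_bits_per_minute(2, 0.5, 10))
```

The formula assumes equally probable targets and uniformly distributed errors, so values computed this way are best treated as a comparative benchmark rather than an exact channel capacity.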

The neurotechnology spectrum encompasses a diverse range of systems with complementary strengths and limitations. Non-invasive interfaces offer safety and accessibility but limited spatial resolution; partially invasive systems balance signal quality with reduced risk; and fully invasive technologies provide the highest fidelity signals at the cost of greater surgical risk and potential for tissue damage [18]. The choice between these approaches depends fundamentally on the specific clinical or research application, with non-invasive methods currently dominating the market (~76.5% share in 2024) while invasive platforms offer the most promise for advanced medical applications [3].

Recent methodological innovations, particularly in cross-patient decoding using connectomic approaches [20] and the development of standardized processing platforms like py_neuromodulation, are addressing critical barriers to clinical adoption. The integration of brain signal decoding with neuromodulation therapies represents a frontier in precision medicine, enabling dynamic adaptation of neurotherapies in response to individual patient needs [20]. As the field advances, key challenges remain in ensuring long-term stability of neural interfaces, developing robust decoding algorithms that generalize across patients and conditions, and establishing ethical frameworks for the use of these transformative technologies [3]. With continued progress in neurotechnology development and validation, these approaches hold immense potential to restore function for patients with neurological disorders and fundamentally expand our understanding of brain function.

Neurotechnology is rapidly transitioning from experimental research to validated clinical applications, offering novel therapeutic strategies for some of the most challenging neurological disorders. This evolution is marked by a shift from generalized symptomatic treatment toward precision neuromodulation approaches that adapt to real-time neural signals. The validation of these technologies through rigorous clinical experimentation is establishing a new paradigm for treating neurological conditions based on direct circuit manipulation and neural decoding. This comparison guide objectively analyzes the performance metrics, experimental protocols, and clinical validation data for emerging neurotechnologies across five core clinical domains: Parkinson's disease, epilepsy, chronic pain, paralysis, and mental health disorders, providing researchers with critical insights for guiding future development efforts.

Performance Comparison Tables

Table 1: Clinical Performance Metrics of Neurotechnologies by Disorder

| Clinical Target | Technology Type | Key Efficacy Metrics | Study Parameters | Reported Outcomes |
|---|---|---|---|---|
| Parkinson's Disease | Adaptive Deep Brain Stimulation (aDBS) [22] | Motor symptom reduction, Stimulation efficiency | AI-guided closed-loop system | ≈50% reduction in severe symptoms [22] |
| Parkinson's Disease | Wearable Sensors for Rehabilitation Quantification [23] | Body surface temperature change, Activity indices | Stretching and treadmill exercises | Significant temperature increase vs. other methods [23] |
| Parkinson's Disease | Digital Symptom Diaries [23] | Compliance rate, Accuracy vs. clinical examination | "MyParkinson's" app vs. paper tracking | Substantially better compliance & accuracy; 65% patient preference for digital [23] |
| Epilepsy | Closed-loop Neurostimulation (enCLS Device) [24] | Seizure prevention in drug-resistant epilepsy | Early network stimulation | Prototype development phase [24] |
| Epilepsy | Responsive Neurostimulation [25] | Seizure frequency reduction, Consciousness preservation | Thalamic stimulation during seizures | Restoration of consciousness during seizures [25] |
| Epilepsy | Cenobamate (Drug-Resistant Focal Epilepsy) [25] | Seizure freedom rate | Pharmacological intervention | Hope for drug-resistant cases, especially with early use [25] |
| Chronic Pain | Advanced Neuromodulation Therapies [26] | Pain reduction scores, Functional improvement | Targeted electrical nerve stimulation | Improved precision & remote monitoring capabilities [26] |
| Chronic Pain | Next-Generation Regenerative Medicine [26] | Tissue repair markers, Pain reduction | PRP, Stem cell injections | More potent formulations & expanded applications [26] |
| Paralysis | Intracortical Brain-Computer Interface (BCI) [22] | Movement accuracy, Task completion | Thought-controlled virtual drone | Successful navigation of 18 virtual rings in <3 minutes [22] |
| Paralysis | Brain-Spine 'Digital Bridge' [22] | Functional mobility restoration | Wireless motor cortex to spinal cord interface | Walking, stair climbing, standing via thought [22] |
| Paralysis | Endovascular BCI (Stentrode) [22] | Communication device control accuracy | Motor cortex recording via blood vessels | Text, email, smart home control by patients with paralysis [22] |
| Paralysis | Speech Restoration BCI [22] | Word decoding accuracy, Communication speed | Implant in speech-related cortex | 97% accuracy for ALS speech; 80 words/minute [22] |

Table 2: Technical Specifications and Implementation Status

| Technology Platform | Invasiveness Level | Key Technological Features | Clinical Trial Stage | Regulatory Status |
|---|---|---|---|---|
| Implantable DBS Systems [22] | Invasive (deep brain) | Closed-loop sensing, Adaptive stimulation | Expanded human trials | CE mark for aDBS in Europe; FDA approvals pending [22] |
| Fully Implanted BCIs (e.g., Neuralink N1) [27] [22] | Invasive (cortical) | 1024 electrodes, 64 threads, Wireless data/power | PRIME Study (early feasibility) | FDA approval for in-human trials [27] [22] |
| Endovascular BCIs (e.g., Stentrode) [22] | Minimally invasive (via blood vessels) | Motor cortex recording, No open-brain surgery | COMMAND Trial | FDA Breakthrough Device designation [22] |
| Cortical Surface Arrays (e.g., ECoG) [22] | Partially invasive (brain surface) | 253-electrode grid, High-resolution signal capture | Human clinical trials | Research use / investigational devices [22] |
| Wearable Sensors [23] | Non-invasive | Body temperature, Motion/activity tracking | Clinical validation studies | Commercially available for research [23] |
| Focused Ultrasound (fUS) [25] | Non-invasive / Minimally invasive | Blood-brain barrier opening, Tissue ablation | Animal models / early human | Pre-clinical / experimental stage [25] |

Experimental Protocols and Methodologies

Adaptive Deep Brain Stimulation for Parkinson's Disease

The validation of aDBS systems employs sophisticated protocols to establish causal links between neural activity and symptom expression. In recent trials, researchers implanted DBS systems with sensing capabilities in target structures such as the subthalamic nucleus. The experimental workflow involves simultaneous recording of local field potentials and quantitative assessment of motor symptoms (e.g., tremor, bradykinesia) using standardized clinical rating scales. Machine learning algorithms are trained to detect pathological beta-band oscillatory activity correlated with symptom severity. In the closed-loop condition, the system automatically adjusts stimulation parameters in response to these neural biomarkers, in contrast to conventional continuous stimulation. The validation protocol includes double-blind crossover assessments in which neither patients nor evaluators know the stimulation mode (adaptive versus conventional), with primary efficacy endpoints focusing on symptom reduction and battery consumption [22].
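
The closed-loop principle described above can be sketched as a simple threshold controller. This is a toy illustration under stated assumptions, not the algorithm used in the cited trials: it assumes a stream of normalized beta-band power values, a single fixed threshold, and a stimulation amplitude that ramps up or down in fixed steps. The threshold, step size, and amplitude limits are hypothetical numbers and are not clinical parameters.

```python
def adaptive_stimulation(beta_power, threshold, amp_min=0.0, amp_max=3.0, step=0.5):
    """Return the stimulation amplitude chosen after each beta-power sample.

    Amplitude ramps up by `step` while beta power exceeds `threshold`
    and ramps down otherwise, clamped to [amp_min, amp_max].
    All values are illustrative placeholders.
    """
    amplitude = amp_min
    trace = []
    for power in beta_power:
        if power > threshold:
            amplitude = min(amp_max, amplitude + step)
        else:
            amplitude = max(amp_min, amplitude - step)
        trace.append(amplitude)
    return trace


# Hypothetical normalized beta power: a burst in the middle of the trace.
beta = [0.2, 0.9, 1.1, 1.0, 0.3, 0.2]
amplitudes = adaptive_stimulation(beta, threshold=0.8)
print(amplitudes)

# Conventional continuous stimulation would hold amp_max throughout;
# the difference in delivered amplitude is one proxy for energy savings.
continuous_total = 3.0 * len(beta)
adaptive_total = sum(amplitudes)
print(adaptive_total, continuous_total)
```

Comparing the summed amplitude against the continuous case mirrors, in a very simplified way, why battery consumption appears alongside symptom reduction as a trial endpoint.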

Closed-Loop Seizure Detection and Prevention in Epilepsy

The Epileptic-Network Closed-loop Stimulation Device (enCLS) represents a next-generation approach to seizure control currently in development. The experimental methodology involves building and validating a working prototype system capable of early seizure network intervention. Researchers utilize large datasets of intracranial EEG recordings from patients undergoing monitoring for drug-resistant epilepsy, particularly from high-volume centers with extensive responsive neurostimulation experience. The protocol focuses on advanced brain network modeling to identify pre-seizure neural states that can be targeted for preventive stimulation. The system is being designed to apply stimulation at the earliest detected stages of seizure generation, aiming to prevent clinical manifestation rather than terminate established seizures. Validation metrics include accurate detection of pre-ictal states, stimulation efficacy in aborting seizure development in computational models, and system safety profiles. This research aims to translate findings from animal models into a clinically viable device for future human trials [24] [25].

Brain-Computer Interface Validation for Paralysis

BCI performance is validated through rigorously controlled experiments with defined functional outcomes. For motor restoration, intracortical implants (such as the N1 implant) are placed in the hand and arm region of the motor cortex. Participants with cervical spinal cord injury or ALS are asked to attempt specific movements while neural signals are recorded. The experimental protocol involves several phases: initial calibration to map neural activity patterns to movement intentions, supervised training with real-time feedback, and finally assessment of independent device control. For communication BCIs, validation involves measuring accuracy and speed of intended speech decoding or cursor control. Performance is quantified using information transfer rate (bits per minute) and accuracy compared to intended commands. Studies typically employ cross-validation techniques, where data from some sessions trains the algorithm and separate held-out sessions test generalization. For sensory restoration protocols, researchers deliver calibrated tactile or thermal stimuli to prosthetic limbs while recording corresponding neural stimulation through the BCI, measuring participants' ability to correctly identify stimulus location and type [27] [22].
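
The held-out session scheme mentioned above can be sketched as a leave-one-group-out split, where the group label is the recording session. The same generator covers leave-one-participant-out validation if participant identifiers are passed instead. The function name and the session labels are illustrative assumptions.

```python
def leave_one_group_out(groups):
    """Yield (held_out_group, train_indices, test_indices) for each group.

    `groups` holds one label per sample, e.g. a session or participant ID.
    Samples from the held-out group never appear in the training indices.
    """
    for held_out in sorted(set(groups)):
        train = [i for i, g in enumerate(groups) if g != held_out]
        test = [i for i, g in enumerate(groups) if g == held_out]
        yield held_out, train, test


# Hypothetical session labels for five trials.
sessions = ["s1", "s1", "s2", "s2", "s3"]
folds = list(leave_one_group_out(sessions))
for held_out, train_idx, test_idx in folds:
    print(held_out, train_idx, test_idx)
```

Splitting by session rather than by random sample avoids leaking within-session signal drift into the test set, which would otherwise inflate the accuracy estimate.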

Signaling Pathways and System Workflows

Closed-Loop Neuromodulation Pathway

Brain-Computer Interface Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Research Materials and Platforms

| Research Tool Category | Specific Examples | Research Application & Function |
|---|---|---|
| Implantable Neurostimulators | aDBS with sensing capability [22], Responsive Neurostimulation (RNS) System [25] | Closed-loop neuromodulation; delivers therapeutic stimulation in response to detected neural biomarkers. |
| Neural Signal Acquisition Systems | N1 Implant [22], ECoG Arrays [22], Stentrode [22] | High-fidelity recording of neural populations; provides data for decoding algorithms and biomarker discovery. |
| AI & Data Analysis Platforms | Machine Learning Decoders [22], Brain Network Modeling Tools [24] | Translates neural signals into commands; identifies pathological network states for intervention. |
| Wearable Motion Sensors | Inertial Measurement Units (IMUs) [23], Surface Temperature Sensors [23] | Quantifies motor symptoms and rehabilitation outcomes; provides objective movement metrics. |
| Digital Phenotyping Tools | "MyParkinson's" Digital Diary [23], Other Health Applications | Tracks symptom progression and medication response electronically; minimizes recall bias. |
| Minimally Invasive Delivery Systems | R1 Surgical Robot [27], Endovascular Catheters [22] | Precisely implants electrodes with minimal tissue disruption; enables safer implantation procedures. |

The global neurotechnology market is experiencing unprecedented growth, propelled by a convergence of technological innovation, increasing prevalence of neurological disorders, and substantial investment from both public and private sectors. This expansion represents a paradigm shift in how researchers, scientists, and drug development professionals approach the diagnosis, treatment, and management of conditions affecting the nervous system. Neurotechnology, defined as technical development that enables the investigation and treatment of neurological processes, has evolved from a niche field to a mainstream therapeutic and diagnostic domain [28]. The market's trajectory underscores its critical role in addressing some of the most challenging neurological conditions, including Alzheimer's disease, Parkinson's disease, epilepsy, and chronic pain syndromes.

The significance of this market expansion lies in its potential to transform patient outcomes through innovative solutions that either complement or surpass traditional pharmacological approaches. Current therapeutic approaches for neurological disorders primarily focus on managing symptoms rather than addressing underlying pathology, creating a substantial unmet medical need [29]. Neurotechnology offers promising alternatives through devices that can record, stimulate, or translate neural activity, providing new avenues for restoration of function and quality of life improvement. This growth is not merely quantitative but qualitative, with advancements in brain-computer interfaces, neurostimulation devices, and neuroprosthetics redefining the boundaries of neurological care [30].

Framing this expansion within the context of neurotechnology validation and clinical applications research provides crucial insights for professionals navigating this rapidly evolving landscape. The transition from experimental prototypes to clinically validated tools requires rigorous evaluation methodologies and standardized protocols. This comparison guide examines the key growth drivers, investment patterns, and clinical validation pathways that are shaping the neurotechnology ecosystem, with particular emphasis on quantitative metrics that enable objective assessment of technological and commercial trajectories.

Market Size and Growth Projections

The neurotechnology market demonstrates robust growth across multiple forecasting models, with consistent double-digit compound annual growth rates (CAGR) projected through the mid-2030s. This expansion reflects both increasing adoption of existing technologies and the emergence of novel platforms that address previously unmet clinical needs. The table below synthesizes market size estimates and growth projections from leading industry analyses:

| Source | 2024/2025 Market Size | 2034/2035 Projected Market Size | CAGR | Key Segments |
|---|---|---|---|---|
| Precedence Research | $15.30 billion (2024) | $52.86 billion (2034) | 13.19% (2025-2034) | Neurostimulation, neurosensing, neuroprostheses [31] [28] |
| Towards Healthcare | $15.35 billion (2024) | $53.18 billion (2034) | 13.23% (2025-2034) | Neurostimulation, neuroprostheses [32] |
| Future Market Insights | $17.8 billion (2025) | $65.0 billion (2035) | 13.8% (2025-2035) | Pain management, cognitive disorders, epilepsy [33] |
| IMARC Group | $12.6 billion (2024) | $31.1 billion (2033) | 10.01% (2025-2033) | Imaging modalities, neurostimulation [34] |

Regional analysis reveals distinct growth patterns and adoption rates. North America dominated the market with a 36-37% share in 2024, driven by advanced healthcare infrastructure, favorable regulatory frameworks, and significant investment in research and development [31] [32]. The United States neurotechnology market alone was valued at $3.86 billion in 2024 and is predicted to reach $13.60 billion by 2034, rising at a CAGR of 13.42% [28]. However, the Asia-Pacific region is projected to see the fastest growth over the forecast period, fueled by growing investments in medical technology, rising neurological disorder cases, and government initiatives supporting healthcare innovation [31]. China and India are particularly notable, with CAGRs of 18.6% and 17.3%, respectively, through 2035 [33].
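
The growth rates quoted above can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) − 1. The sketch below assumes ten compounding years between the 2024 valuation and the 2034 projection; the reports state their own forecast windows, so small differences from the published rates are to be expected.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.13 means 13%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures in billions of USD, taken from the text; the 10-year span
# is an assumption about how the compounding period is counted.
global_rate = cagr(15.30, 52.86, 10)  # Precedence Research, 2024 to 2034
us_rate = cagr(3.86, 13.60, 10)       # United States, 2024 to 2034

print(f"Global: {global_rate:.2%}")
print(f"US:     {us_rate:.2%}")
```

Both results land close to the reported 13.19% and 13.42%, which suggests those projections are internally consistent under this assumption.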

Segment-level analysis provides further insight into market dynamics. The neurostimulation segment held the largest market share in 2024, valued for its applications in treating conditions such as epilepsy, movement disorders, chronic pain, and Parkinson's disease [32] [28]. Meanwhile, the neuroprostheses segment is expected to grow at the fastest rate over the forecast period, representing the cutting edge of neural interface technology [32]. Among conditions, pain management currently commands the largest market share (22.8% in 2025), while Parkinson's disease treatment is expected to register the highest growth rate, supported by technological breakthroughs in deep brain stimulation [31] [33]. Among end users, hospitals accounted for the largest market share (47.3% in 2025), but homecare facilities are anticipated to grow at the fastest CAGR, signaling a shift toward decentralized care models [31] [33].

Key Growth Drivers and Investment Landscape

Primary Market Catalysts

The expansion of the neurotechnology market is underpinned by several interconnected factors that create a favorable environment for innovation and commercialization. Understanding these drivers is essential for researchers and drug development professionals seeking to identify promising areas for investment and development.

Rising Prevalence of Neurological Disorders: The global burden of neurological conditions continues to increase, with more than three billion individuals worldwide affected by neurological disorders in 2021, according to a study published in The Lancet Neurology [34]. This growing patient population creates substantial demand for effective diagnostic and therapeutic solutions. Age-related neurodegenerative diseases are particularly significant, with the Alzheimer's Association reporting that an estimated 6.9 million people in the United States aged 65 and older were living with Alzheimer's in 2024 [34]. An aging global population is expected to keep expanding this addressable market.

Technological Advancements: Breakthroughs in multiple domains are accelerating neurotechnology capabilities. Miniaturization of medical electronics has enabled the development of wearable and implantable devices with improved patient compliance and functionality [33]. Artificial intelligence and machine learning integration enhance diagnostic accuracy and enable predictive modeling for neurological diseases [32] [34]. Improvements in signal processing algorithms allow for more sophisticated interpretation of neural data. These technological innovations are collectively expanding the applications and effectiveness of neurotechnologies.

Substantial Investment and Funding: The neurotechnology sector has experienced a significant influx of capital from diverse sources. Between 2014 and 2021, investments in neurotechnology companies increased by 700%, totaling €29.20 billion [35]. This funding ecosystem includes venture capital, government grants, and strategic corporate investments. Notable recent funding rounds include Precision Neuroscience's $102 million Series C round in December 2024 and INBRAIN Neuroelectronics' $50 million Series B round in October 2024 [32]. Such substantial financial support enables extensive research and development activities and facilitates the translation of promising technologies from laboratory to clinical practice.

Regulatory Support and Policy Initiatives: Government agencies worldwide have implemented policies and programs to support neurotechnology development. The U.S. FDA's Breakthrough Devices Program has accelerated approvals for innovative neurotechnologies, including the world's first adaptive deep-brain stimulation system for Parkinson's patients [30]. Similarly, China's 2025–2030 action plan lists brain-computer interfaces among its strategic industries, backed by dedicated grant lines and commercialization incentives [30]. These regulatory frameworks create pathways for efficient translation of research innovations into clinically available tools.

Investment Distribution and Strategic Focus

Investment in neurotechnology is strategically distributed across multiple application areas and technology platforms, reflecting the diverse approaches to addressing neurological disorders. The table below outlines key investment areas and their respective focuses:

| Investment Area | Primary Focus | Notable Examples | Clinical Applications |
|---|---|---|---|
| Brain-Computer Interfaces (BCIs) | Developing direct communication pathways between the brain and external devices | Precision Neuroscience, Neuralink, Synchron | Restoring motor function, communication for paralyzed patients, cognitive enhancement [32] [30] |
| Neurostimulation Devices | Modulating neural activity through electrical stimulation | Medtronic's Inceptiv system, adaptive deep-brain stimulation | Parkinson's disease, chronic pain, depression, epilepsy [30] |
| Neuroprosthetics | Replacing or supporting damaged neurological functions | Cochlear implants, motor neuroprosthetics | Hearing loss, paralysis, limb loss [30] |
| Stem Cell Therapies | Regenerating damaged neural tissue through cell transplantation | Mesenchymal stem cells, neural stem cells, induced pluripotent stem cells | Parkinson's disease, Alzheimer's disease, spinal cord injury, stroke [29] |
| Digital Neurotherapeutics | Software-based interventions for neurological conditions | Cognitive training apps, digital biomarkers | Cognitive decline, mental health disorders, neurodevelopmental conditions [35] |

The investment landscape reflects a balanced approach between near-term clinical applications and longer-term transformative technologies. Neurostimulation devices, with their established clinical utility and reimbursement pathways, continue to attract substantial funding for iterative improvements and expansion into new indications [30]. Meanwhile, emerging areas such as BCIs and stem cell therapies receive significant venture capital backing despite longer regulatory pathways, reflecting investor confidence in their disruptive potential [29] [35].

Comparative Analysis of Key Neurotechnology Approaches

Device-Based Interventions

Device-based neurotechnologies represent the most mature segment of the market, with well-established clinical validation and commercialization pathways. The table below provides a comparative analysis of major device categories:

| Technology Category | Mechanism of Action | Primary Applications | Advantages | Limitations | Representative Clinical Evidence |
|---|---|---|---|---|---|
| Deep Brain Stimulation (DBS) | Implantation of electrodes that deliver electrical impulses to specific brain regions | Parkinson's disease, essential tremor, dystonia, OCD | Reversible, adjustable, proven long-term efficacy | Invasive surgical procedure, risk of infection, hardware complications, cost [36] | Significant improvement in motor symptoms in Parkinson's patients; reduction in medication-induced dyskinesias [36] |
| Spinal Cord Stimulation (SCS) | Delivery of electrical pulses to the spinal cord to modulate pain signals | Chronic pain syndromes, failed back surgery syndrome, complex regional pain syndrome | Minimally invasive, programmable, reduced opioid dependence | Lead migration, tolerance development, requires trial period [30] | Closed-loop systems enabled 84% of patients to achieve ≥50% pain reduction at 12 months [30] |
| Vagus Nerve Stimulation (VNS) | Electrical stimulation of the vagus nerve in the neck | Epilepsy, treatment-resistant depression, inflammatory conditions | Non-brain invasive, well-tolerated, complementary to medications | Hoarseness, cough, dyspnea, requires surgical implantation [30] | Reduced seizure frequency in refractory epilepsy; adjunctive benefit in depression [30] |
| Transcranial Magnetic Stimulation (TMS) | Non-invasive brain stimulation using magnetic fields | Depression, anxiety, migraine, neuropathic pain | Non-invasive, outpatient procedure, minimal side effects | Limited depth penetration, requires repeated sessions, cost [35] | FDA-cleared for major depression; emerging evidence for other neuropsychiatric conditions [35] |
| Brain-Computer Interfaces (BCIs) | Direct communication pathway between brain and external device | Paralysis, communication disorders, motor restoration | Direct neural interface, potential for functional restoration, non-invasive options available | Signal stability challenges, training required, limited real-world validation [31] [30] | Early demonstrations of thought-to-text communication; environmental control for paralyzed individuals [30] |
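The spinal cord stimulation evidence in the table is reported as a responder rate: the share of patients achieving at least a 50% reduction in pain at 12 months. A minimal sketch of how such a rate could be computed is shown below. The pain scores are hypothetical values on a 0-10 scale chosen only for illustration, and the function names are not taken from any cited study.

```python
def percent_reduction(baseline: float, follow_up: float) -> float:
    """Percent reduction in pain score relative to baseline."""
    if baseline <= 0:
        raise ValueError("baseline pain score must be positive")
    return (baseline - follow_up) / baseline * 100


def responder_rate(pairs, threshold: float = 50.0) -> float:
    """Fraction of patients whose reduction meets or exceeds the threshold."""
    responders = sum(
        1 for baseline, follow_up in pairs
        if percent_reduction(baseline, follow_up) >= threshold
    )
    return responders / len(pairs)


# Hypothetical (baseline, 12-month) pain scores; not real trial data.
scores = [(8, 3), (7, 4), (9, 4), (6, 3), (8, 5)]
rate = responder_rate(scores)
print(f"Responder rate at the 50% threshold: {rate:.0%}")
```

Responder definitions of this kind are sensitive to the chosen threshold and to how missing follow-up data are handled, so a validation study would normally fix both in advance.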

Biological and Pharmaceutical Approaches

Stem cell therapies represent a promising biological approach to neurological disorders, with distinct mechanisms and applications compared to device-based interventions. The table below compares major stem cell types used in neurological applications:

| Stem Cell Type | Source | Key Mechanisms | Advantages | Limitations | Clinical Applications |
|---|---|---|---|---|---|
| Embryonic Stem Cells (ESCs) | Inner cell mass of blastocysts | Cell replacement, paracrine signaling, immunomodulation | Pluripotency, extensive expansion capacity | Ethical concerns, tumorigenicity risk, immune rejection [29] | Preclinical models of Parkinson's, spinal cord injury; limited clinical translation [29] |
| Mesenchymal Stem Cells (MSCs) | Bone marrow, adipose tissue, umbilical cord | Paracrine signaling, immunomodulation, stimulation of endogenous repair | Multipotent, low immunogenicity, ethical acceptability | Limited differentiation potential, variability between sources [29] | Multiple sclerosis, stroke, ALS; clinical trials ongoing [29] |
| Neural Stem Cells (NSCs) | Fetal brain tissue, differentiated from ESCs/iPSCs | Cell replacement, trophic support, neural circuit integration | Committed neural lineage, site-appropriate integration | Limited sources, ethical concerns (fetal tissue), expansion challenges [29] | Huntington's disease, spinal cord injury, stroke; early clinical trials [29] |
| Induced Pluripotent Stem Cells (iPSCs) | Reprogrammed somatic cells | Cell replacement, disease modeling, drug screening | Patient-specific, no ethical concerns, pluripotent | Tumorigenicity risk, reprogramming efficiency, genomic instability [29] | Parkinson's disease modeling; autologous transplantation in early trials [29] |

Experimental Methodologies and Research Protocols

Clinical Validation Frameworks

Rigorous experimental methodologies are essential for validating neurotechnologies and establishing their clinical utility. The following protocols represent standardized approaches for evaluating key neurotechnology categories:

Protocol for Deep Brain Stimulation Efficacy Assessment in Parkinson's Disease:

- Objective: To evaluate the safety and efficacy of DBS in advanced Parkinson's disease using standardized rating scales and objective motor assessments.

- Patient Selection: Patients with idiopathic Parkinson's disease who have levodopa-responsive symptoms, disease duration >5 years, motor complications despite optimal medication, and no significant cognitive impairment or psychiatric comorbidities.

- Intervention: Bilateral implantation of DBS electrodes in the subthalamic nucleus or globus pallidus interna, connected to an implantable pulse generator.

- Outcome Measures: Primary: Unified Parkinson's Disease Rating Scale (UPDRS) Part III (motor examination) score in the medication-off state at 6 months post-implantation compared to baseline. Secondary: quality of life measures (PDQ-39), levodopa-equivalent daily dose reduction, dyskinesia rating scales, neuropsychological assessments.

- Assessment Timeline: Baseline (pre-operative), 3 months, 6 months, 12 months, and annually thereafter.

- Statistical Analysis: Intention-to-treat analysis with repeated measures ANOVA for primary outcome, with appropriate corrections for multiple comparisons.
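The protocol above calls for corrections for multiple comparisons without naming a method. As one illustration, the sketch below applies Holm's step-down procedure, which is a common choice but an assumption here rather than something the protocol specifies. The p-values attached to the secondary outcomes are hypothetical placeholders, not results.

```python
def holm_bonferroni(p_values, alpha: float = 0.05):
    """Holm step-down procedure.

    Returns a list of booleans in the original order: True where the
    null hypothesis is rejected while controlling the family-wise error rate.
    """
    m = len(p_values)
    # Indices of the p-values, sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        # The k-th smallest p-value (rank = k - 1) is compared to alpha / (m - k + 1).
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # Once one test fails, all larger p-values fail too.
    return reject


# Hypothetical p-values for the secondary outcomes listed in the protocol.
outcomes = ["PDQ-39", "LEDD reduction", "dyskinesia scale", "neuropsychological battery"]
p_values = [0.004, 0.012, 0.030, 0.400]

decisions = holm_bonferroni(p_values)
for name, p, rejected in zip(outcomes, p_values, decisions):
    print(f"{name}: p={p:.3f}, significant after Holm correction: {rejected}")
```

Holm's procedure is uniformly at least as powerful as a plain Bonferroni correction while giving the same family-wise error guarantee, which is why it is often preferred when several secondary endpoints are tested.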

Protocol for Stem Cell Therapy in Spinal Cord Injury:

- Objective: To assess the safety and preliminary efficacy of stem cell transplantation in traumatic spinal cord injury.

- Patient Population: Adults with stable traumatic spinal cord injury (ASIA impairment scale A-C), 3-12 months post-injury, completed standard rehabilitation.

- Intervention: Intraspinal injection of allogeneic mesenchymal stem cells or neural precursor cells at the injury site following laminectomy and durotomy.

- Control Group: Sham surgery (laminectomy without cell injection) or active comparator (standard care).

- Primary Outcomes: Safety (adverse events, serious adverse events), neurological status (ASIA impairment scale, International Standards for Neurological Classification of Spinal Cord Injury).

- Secondary Outcomes: Sensory and motor scores, electrophysiological measures (motor evoked potentials, somatosensory evoked potentials), imaging (MRI for lesion characteristics), quality of life measures.

- Follow-up Duration: 12-24 months with assessments at 1, 3, 6, 12, and 24 months post-procedure.

- Blinding: Double-blind design with independent outcome adjudication committee.
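A double-blind, sham-controlled design like the one above depends on an allocation sequence that is generated in advance and concealed from investigators. The protocol does not state how allocation is done; the sketch below uses permuted-block randomization as one plausible approach. The block size, arm labels, and seed are illustrative assumptions.

```python
import random


def permuted_block_allocation(n_participants: int,
                              block_size: int = 4,
                              arms=("cell injection", "sham surgery"),
                              seed: int = 2024):
    """Generate an allocation list using permuted blocks.

    Each complete block contains every arm equally often, so group sizes
    stay balanced throughout enrollment.
    """
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)  # Fixed seed so the sequence is reproducible.
    per_arm = block_size // len(arms)
    allocation = []
    while len(allocation) < n_participants:
        block = list(arms) * per_arm
        rng.shuffle(block)  # Random order within the block.
        allocation.extend(block)
    return allocation[:n_participants]


schedule = permuted_block_allocation(12)
for participant_id, arm in enumerate(schedule, start=1):
    print(f"Participant {participant_id:02d}: {arm}")
```

In practice the sequence would be held by an unblinded statistician or a central randomization service, and a fixed small block size can make the final assignment in each block predictable, so trials often vary the block size.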

Signaling Pathways and Mechanisms of Action

Neurotechnologies exert their effects through modulation of specific neural pathways and mechanisms. The diagram below illustrates the primary signaling pathways targeted by major neurotechnology approaches:

Figure: Pathway diagram illustrating the primary signaling and mechanistic pathways for major neurotechnology categories.

Research Reagents and Essential Materials

The following table details key research reagents and materials essential for neurotechnology development and validation:

| Reagent/Material | Function | Application Examples | Technical Considerations |
|---|---|---|---|
| Electroencephalography (EEG) Systems | Recording electrical activity of the brain | Brain-computer interfaces, seizure detection, cognitive state monitoring | Electrode type (wet/dry), channel count, sampling rate, portability [35] |
| Functional Magnetic Resonance Imaging (fMRI) | Measuring brain activity through blood flow changes | Localizing neural functions, treatment target identification, therapy monitoring | Spatial/temporal resolution, contrast mechanisms, analysis pipelines [34] |
| Neural Stem Cells | Differentiating into neuronal and glial lineages | Cell replacement therapy, disease modeling, drug screening | Source (fetal, iPSC-derived), expansion capacity, differentiation efficiency [29] |