Digital Twin Models for Predicting Neurological Disease Progression: From Foundations to Clinical Applications

Abstract

This article provides a comprehensive examination of digital twin (DT) technology for predicting neurological disease progression, tailored for researchers, scientists, and drug development professionals. It explores the foundational concepts defining DTs in neuroscience, contrasting them with digital models and shadows. The manuscript details cutting-edge methodological approaches, including AI and mechanistic model integration, and their specific applications in conditions like Alzheimer's disease, Parkinson's disease, and brain tumors, highlighting use cases in clinical trial optimization and personalized treatment planning. It critically addresses troubleshooting and optimization challenges, such as data integration, model validation, and computational scalability. Finally, the article assesses validation frameworks, regulatory acceptance, and performance metrics, offering a comparative analysis with traditional methods. The goal is to serve as a foundational resource for advancing the development and clinical translation of neurological digital twins.

Defining the Neurological Digital Twin: Core Concepts and Current Landscape

What is a Digital Twin? Distinguishing from Digital Models and Shadows

Digital models, digital shadows, and digital twins form a spectrum of virtual representations of physical entities, and for researchers in neurology and drug development the distinctions between them are critical. A digital twin is more than a simple model; it is a dynamic, virtual representation that is connected to its physical counterpart through a continuous, bidirectional flow of data [1] [2]. This allows the digital twin not only to mirror the current state of a physical system but also to simulate future states, predict outcomes, and even influence the physical system itself [3]. This capability is particularly relevant to predicting neurological disease progression: a virtual replica of a patient's brain or autonomic nervous system offers a pathway to personalized, predictive medicine [4] [5].

Core Definitions and Distinctions

Digital Model

A digital model is a static digital representation of a physical object, system, or process, such as a 3D computer-aided design (CAD) file or a mathematical algorithm [1] [6]. Its key characteristic is the absence of any automated data exchange with the physical entity it represents [3]. Any updates to the model based on changes in the physical world must be performed manually. In neuroscience, a digital model could be a static 3D reconstruction of a patient's brain from an MRI scan that is used for surgical planning but is not updated automatically [4].

Digital Shadow

A digital shadow represents a significant evolution from a digital model. It is a virtual representation that automatically receives and updates its state based on real-time data from its physical counterpart through sensors or IoT devices [1] [6]. However, this data flow is strictly one-way; the physical process influences the digital shadow, but the shadow cannot directly cause changes in the physical world [3]. For example, a digital shadow of a neuro-intensive care unit could monitor real-time intracranial pressure and EEG data from multiple patients, providing a live dashboard for clinicians to observe trends.

Digital Twin

A digital twin establishes a bidirectional, real-time connection between the physical and digital entities [1]. The physical entity provides operational data to the digital twin, and the twin, in turn, can send back commands, simulations, or insights to influence and optimize the physical entity [3]. This closed-loop, interactive relationship is the defining feature of a true digital twin. In neurological research, a digital twin of a patient's brain could use continuous data from wearables and clinical assessments to simulate the progression of a disease like Parkinson's and test the potential efficacy of different drug regimens in silico before recommending a specific therapy [5] [2].

Comparative Analysis

The table below summarizes the key differences:

| Feature | Digital Model | Digital Shadow | Digital Twin |
|---|---|---|---|
| Data Connection | None [3] | One-way (from physical to digital) [1] [3] | Two-way, bidirectional [1] [3] |
| Real-Time Updates | No [3] | Yes [3] | Yes [3] |
| Influence on Physical System | No [3] | No (monitoring only) [3] | Yes (can control/optimize) [3] |
| Primary Use Case | Design, prototyping, visualization [1] | Monitoring, reporting, predictive maintenance [1] [3] | Optimization, autonomous control, predictive analytics [1] [3] |
| Neurology Example | Static 3D brain model from MRI | Live dashboard of patient vitals from wearables | Simulating drug response & adapting therapy in real-time [5] |

Figure: Data Flow Relationships Among Digital Concepts
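The distinction drawn in the comparison above reduces to which automated data flows exist. The following is a minimal sketch of that rule in Python; the names `Representation` and `classify` are illustrative assumptions and do not come from any cited framework.

```python
from enum import Enum


class Representation(Enum):
    DIGITAL_MODEL = "digital model"
    DIGITAL_SHADOW = "digital shadow"
    DIGITAL_TWIN = "digital twin"


def classify(physical_to_digital: bool, digital_to_physical: bool) -> Representation:
    """Classify a virtual representation by its automated data flows.

    physical_to_digital: the physical entity automatically updates the virtual one.
    digital_to_physical: the virtual entity can feed commands or insights back.
    """
    if physical_to_digital and digital_to_physical:
        return Representation.DIGITAL_TWIN      # closed loop, bidirectional
    if physical_to_digital:
        return Representation.DIGITAL_SHADOW    # one-way, monitoring only
    if digital_to_physical:
        # Assumption: feedback without automated sensing is not covered by
        # the three categories, so it is rejected rather than guessed.
        raise ValueError("feedback without automated updates is not a defined category")
    return Representation.DIGITAL_MODEL         # no automated exchange


static_mri_reconstruction = classify(False, False)
icu_dashboard = classify(True, False)
adaptive_therapy_system = classify(True, True)
```

Under this rule, the static MRI reconstruction is a digital model, the neuro-intensive care dashboard is a digital shadow, and a system that adapts therapy from continuous data is a digital twin.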

The Digital Twin in Neurological Disease Research

The Conceptual Framework

In neurological research, a digital twin is a dynamic, virtual replica of a patient's brain or specific neural systems [4] [5]. It is built and maintained using multimodal data and is designed to simulate, predict, and optimize health outcomes in the physical patient. The core value lies in its ability to perform "in-silico" experiments—testing countless hypotheses and treatment strategies on the virtual twin to identify the most promising ones for real-world application, thereby accelerating research and personalizing therapy [7].

Key Application Areas

- Personalized Disease Modeling: Creating patient-specific computational models of brain network dynamics to understand how pathologies like tumors or neurodegenerative diseases disrupt normal function [4]. These models can incorporate individual neuroimaging, genetic, and clinical data to simulate disease trajectories.

- Treatment Optimization and Virtual Clinical Trials: A digital twin can simulate a patient's response to various medications (e.g., midodrine (ProAmatine) or pyridostigmine for POTS) or neuromodulation strategies, guiding personalized dosing and reducing trial-and-error [5]. Furthermore, aggregating thousands of digital twins could enable in-silico clinical trials, potentially reducing the time and cost of bringing new neurological drugs to market [2].

- Symptom Prediction and Prevention: For conditions like Postural Tachycardia Syndrome (POTS), a digital twin can continuously monitor physiological signals (e.g., heart rate, respiration, end-tidal CO2) [5]. By identifying precursors to symptomatic events like cerebral hypoperfusion, the system can alert the patient or clinician to intervene preemptively, potentially preventing symptoms before they occur [5].

Experimental Protocols for Neurological Digital Twins

Protocol: Building a Digital Twin for a Neuro-Oncology Study

This protocol outlines the methodology for creating a patient-specific digital twin to model brain tumor growth and predict the impact of surgical interventions [4].

1. Hypothesis and Objective Definition:

- Define the clinical/research question (e.g., "How will resection of tumor X impact the functional connectivity of network Y?").

- Establish the primary outcome measures (e.g., prediction of post-surgical cognitive deficit).

2. Multimodal Data Acquisition and Ingestion:

- Structural MRI: Provides high-resolution anatomical data for constructing the base model [4].

- Functional MRI (fMRI): Measures blood-oxygen-level-dependent (BOLD) signals to map brain activity and functional connectivity between regions [4].

- Diffusion MRI (dMRI): Traces the movement of water molecules to map the white matter structural connectome—the "wiring" of the brain [4].

- Clinical and Neuropsychological Data: Includes patient-reported outcomes, quality of life assessments, and standardized cognitive test scores [4].

- Genomic Data: Tumor genomics or patient genetic markers when relevant.

3. Data Harmonization and Fusion:

- Spatial co-registration of all neuroimaging data into a common coordinate system.

- Data cleaning and normalization to account for variability in acquisition protocols.

- Multimodal fusion techniques to integrate structural, functional, and clinical data into a unified model [4].

4. Model Building and Personalization:

- Use a platform like The Virtual Brain (TVB) to create a personalized mathematical model of brain dynamics [4].

- The structural connectome from dMRI serves as the scaffold upon which neural mass models simulate population-level neuronal activity.

- Model parameters are iteratively adjusted so that the simulated fMRI activity patterns closely match the empirical fMRI data from the individual patient.

5. Simulation and Intervention Testing:

- Run the personalized model to establish a baseline simulation.

- Introduce a virtual intervention, such as "lesioning" the model by removing nodes and connections corresponding to the planned tumor resection.

- Run the post-intervention simulation to predict new patterns of network dynamics and functional outcomes.

6. Validation and Clinical Translation:

- Compare the model's predictions with the patient's actual post-surgical outcome.

- Use this feedback to refine and validate the model's accuracy for future use.

Figure: Digital Twin Model Creation Workflow
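Steps 4 and 5 of the protocol above (fitting model parameters to empirical functional connectivity, then applying a virtual lesion) can be illustrated with a toy example. This sketch does not use The Virtual Brain or its API. It swaps the neural mass model for a linear noise-driven network whose stationary covariance has a closed form, and the "empirical" data are synthetic. All function names, the coupling parameter, and the 8-region connectome are assumptions made for illustration.

```python
import numpy as np


def simulated_fc(weights, coupling, noise_var=1.0):
    """Functional connectivity of a linear noise-driven network.

    Toy stand-in for a neural mass model: dx/dt = -(I - G*W) x + noise.
    For symmetric W the stationary covariance is (noise_var / 2) * (I - G*W)^-1.
    """
    n = weights.shape[0]
    cov = 0.5 * noise_var * np.linalg.inv(np.eye(n) - coupling * weights)
    std = np.sqrt(np.diag(cov))
    return cov / np.outer(std, std)


def fc_similarity(fc_a, fc_b):
    """Pearson correlation between the upper triangles of two FC matrices."""
    iu = np.triu_indices_from(fc_a, k=1)
    return np.corrcoef(fc_a[iu], fc_b[iu])[0, 1]


def fit_coupling(weights, empirical_fc, grid):
    """Grid search for the coupling that best reproduces the empirical FC."""
    scores = [fc_similarity(simulated_fc(weights, g), empirical_fc) for g in grid]
    best = int(np.argmax(scores))
    return grid[best], scores[best]


def virtual_lesion(weights, nodes):
    """Remove all connections of the given nodes (a virtual resection)."""
    lesioned = weights.copy()
    lesioned[nodes, :] = 0.0
    lesioned[:, nodes] = 0.0
    return lesioned


# Synthetic 8-region structural connectome (stands in for dMRI tractography).
rng = np.random.default_rng(0)
w = rng.random((8, 8))
w = (w + w.T) / 2.0
np.fill_diagonal(w, 0.0)
w /= np.max(np.linalg.eigvalsh(w))  # largest eigenvalue 1, so stable for G < 1

# Synthetic "empirical" FC generated with a known coupling of 0.6.
empirical_fc = simulated_fc(w, 0.6)

grid = np.linspace(0.1, 0.9, 17)
best_g, best_score = fit_coupling(w, empirical_fc, grid)

# Step 5: lesion region 0 and predict the post-intervention FC.
fc_post = simulated_fc(virtual_lesion(w, [0]), best_g)
fc_change = fc_post - simulated_fc(w, best_g)
```

In a real study the grid search would typically be replaced by a more efficient optimizer over several parameters, and the fit would be judged against held-out data rather than the data used for tuning.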

Protocol: Implementing a Digital Twin for Autonomic Disorder Management (POTS)

This protocol describes the framework for a continuous, adaptive digital twin for Postural Tachycardia Syndrome, focusing on real-time symptom prediction and intervention [5].

1. System Architecture and Component Setup:

- Monitoring Subsystem: Deploy wearable devices (e.g., chest patch for ECG, continuous blood pressure monitor, accelerometer) to stream heart rate, heart rate variability, and activity data. Optionally, include a capnometer for end-tidal CO2 monitoring [5].

- Modeling Subsystem: Implement a cloud-based analytics platform that integrates mechanistic models (e.g., of cardiovascular response to orthostatic stress) with AI/machine learning models trained on population and individual data.

- Simulation Subsystem: Develop a module capable of running short-term forecasts of physiological state based on current data trends.

- Interface Subsystem: Create a clinician dashboard integrated with Electronic Health Records (EHR) and a patient-facing mobile application for alerts and feedback.

2. Continuous Data Ingestion and Preprocessing:

- Establish secure, real-time data pipelines from wearables, EHR, and patient-reported outcome apps to the cloud platform.

- Implement data cleaning and filtering algorithms to handle artifacts and noise from sensor data.

- Perform data fusion to create a unified, time-synchronized view of the patient's state.

3. AI-Driven State Prediction and Alert Generation:

- The AI model continuously analyzes the incoming data stream. For instance, it detects patterns of inappropriate hyperventilation (a precursor to cerebral hypoperfusion and dizziness in POTS) [5].

- Upon detecting a pre-symptomatic pattern, the system triggers an alert hierarchy:

- Stage 1: A subtle haptic vibration from the patient's wearable or smartphone, intended to prompt slower breathing with minimal conscious effort.

- Stage 2: If the pattern persists, an auditory or visual alert through the mobile app with explicit instructions.

- Stage 3: An escalation alert sent to the clinician's dashboard for further investigation.

4. Intervention Simulation and Personalization:

- The clinician uses the dashboard to simulate the effect of lifestyle changes (e.g., a new exercise regimen) or medication adjustments on the digital twin before prescribing them to the patient [5].

- The digital twin's model is continuously updated based on the patient's response to actual interventions, creating a learning, adaptive system.
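The three-stage alert hierarchy in step 3 can be sketched as a small state machine. The escalation rule used here (counting consecutive analysis windows in which the pre-symptomatic pattern is detected) and the thresholds of 3 and 6 windows are hypothetical choices for illustration; the source describes the stages but not how persistence is measured.

```python
from dataclasses import dataclass
from enum import IntEnum


class AlertStage(IntEnum):
    NONE = 0
    HAPTIC = 1       # Stage 1: haptic vibration
    APP_ALERT = 2    # Stage 2: auditory or visual alert in the mobile app
    CLINICIAN = 3    # Stage 3: escalation to the clinician dashboard


@dataclass
class AlertEscalator:
    # Hypothetical thresholds: consecutive flagged windows before escalating.
    app_after: int = 3
    clinician_after: int = 6
    consecutive: int = 0

    def update(self, pattern_detected: bool) -> AlertStage:
        """Advance one analysis window and return the alert stage to trigger."""
        if not pattern_detected:
            self.consecutive = 0  # pattern resolved, reset the hierarchy
            return AlertStage.NONE
        self.consecutive += 1
        if self.consecutive >= self.clinician_after:
            return AlertStage.CLINICIAN
        if self.consecutive >= self.app_after:
            return AlertStage.APP_ALERT
        return AlertStage.HAPTIC


escalator = AlertEscalator()
flags = [False, True, True, True, True, True, True, False]
stages = [escalator.update(flag) for flag in flags]
```

Resetting the counter as soon as the pattern disappears keeps the patient at the least intrusive stage whenever the haptic cue works. A deployed system would likely add hysteresis so that brief gaps in detection do not restart the escalation.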

The Scientist's Toolkit: Key Research Reagents and Technologies

| Item | Function & Application in Neurological Digital Twins |
|---|---|
| The Virtual Brain (TVB) | An open-source neuroinformatics platform for constructing and simulating personalized brain network models based on individual connectome data [4]. |
| Multi-modal MRI Data | Structural MRI: For anatomy. fMRI: For functional connectivity. dMRI: For structural white matter connectivity. Forms the core imaging data for model building [4]. |
| Wearable Biometric Sensors | Devices (e.g., ECG patches, actigraphs) to collect continuous, real-time physiological and activity data for dynamic model updating, especially in outpatient settings [5]. |
| AI/ML Modeling Platforms (Python-based) | Platforms like TensorFlow or PyTorch, and ecosystem libraries, are used to develop AI-driven predictive models that learn from continuous patient data streams [5]. |
| Data Harmonization Tools | Software and pipelines (e.g., from the FMRIB Software Library - FSL) for co-registering and standardizing diverse neuroimaging data into a consistent format for analysis [4]. |
| IoT/Data Streaming Protocols | Communication protocols like MQTT and AMQP enable reliable, real-time transmission of sensor data from the physical patient to the cloud-based digital twin [8]. |

Technical and Ethical Considerations

Implementing digital twins in neurological research presents significant challenges. The data volume generated from continuous monitoring is immense, requiring robust infrastructure for storage, processing, and compression [5]. Model transparency is another hurdle; while AI models are powerful, their "black-box" nature can be a barrier to clinical trust and adoption. Efforts to develop explainable AI (XAI) are crucial [5]. Furthermore, data privacy and security are paramount when handling sensitive patient health information in real-time, necessitating stringent protocols and potentially decentralized data architectures like blockchain [5].

Ethically, the use of digital twins raises questions regarding patient consent for continuous data use, algorithmic bias, and the potential for a "responsibility gap" if autonomous actions taken by the system lead to adverse outcomes. A collaborative, interdisciplinary approach involving clinicians, data scientists, and ethicists is essential to navigate these challenges responsibly [4] [2].

The National Academies of Sciences, Engineering, and Medicine (NASEM) has established a rigorous definition for digital twins in healthcare that sets a crucial standard for research and clinical application. According to NASEM, a digital twin is "a set of virtual information constructs that mimics the structure, context, and behavior of a natural, engineered, or social system (or system-of-systems), is dynamically updated with data from its physical twin, has a predictive capability, and informs decisions that realize value" [9]. This definition emphasizes the bidirectional interaction between physical and virtual systems as central to the digital twin concept, distinguishing true digital twins from simpler digital models or shadows [10].

For researchers investigating neurological disease progression, the NASEM framework provides a structured approach for developing sophisticated computational models that can personalize treatments, dynamically adapt to patient changes, and generate accurate predictions of disease trajectories. The framework's emphasis on verification, validation, and uncertainty quantification (VVUQ) establishes critical standards for model reliability that are particularly important in the context of complex neurological disorders where treatment decisions have significant consequences [9].

Core Components of the NASEM Framework

Personalization: Creating Patient-Specific Computational Representations

Personalization requires developing virtual representations that reflect the unique characteristics of individual patients, moving beyond generalized population models. This involves integrating multi-scale data including genomics, clinical parameters, imaging findings, and lifestyle factors to create comprehensive patient profiles [11] [10].

In neurological applications, personalization has been demonstrated in multiple domains. For Alzheimer's disease research, conditional restricted Boltzmann machine (CRBM) models have been trained on harmonized datasets from 6,736 unique subjects, incorporating demographics, genetics, clinical severity measures, cognitive assessments, and laboratory measurements to create individualized predictions [12]. In Parkinson's disease management, digital twin-based healthcare systems have reported 97.95% prediction accuracy for early identification of the disease in remotely monitored patients by incorporating patient-specific motor and non-motor symptoms [11]. For brain tumor management, hybrid approaches combining a Semi-Supervised Support Vector Machine (S3VM) with an improved AlexNet CNN have reported a feature recognition accuracy of 92.52%, alongside strong segmentation metrics, by personalizing to individual tumor characteristics [11].

Table 1: Quantitative Outcomes of Personalized Digital Twin Approaches in Neurological Research

| Condition | Model Type | Personalization Data Sources | Achieved Accuracy | Clinical Application |
|---|---|---|---|---|
| Alzheimer's Disease | Conditional Restricted Boltzmann Machine (CRBM) | Demographics, genetics, clinical severity, cognitive measures, lab values | Partial correlation 0.30-0.46 with actual outcomes [12] | Prognostic covariate adjustment in clinical trials |
| Parkinson's Disease | Digital Twin Healthcare System | Motor symptoms, non-motor symptoms, remote monitoring data | 97.95% prediction accuracy [11] | Early disease identification and progression tracking |
| Brain Tumors | S3VM with AlexNet CNN | Imaging features, tumor characteristics, clinical parameters | 92.52% feature recognition accuracy [11] | Radiotherapy planning and treatment response prediction |
| Multiple Sclerosis | Physics-based Progression Model | Imaging, clinical assessments, biomarker data | Identified progression 5-6 years before clinical onset [11] | Disease progression forecasting and intervention timing |

Dynamic Updating: The Bidirectional Data Flow

Dynamic updating establishes a continuous feedback loop between the physical patient and their digital counterpart, enabling the model to evolve alongside the patient's changing health status. This bidirectional flow of information represents a fundamental distinction between true digital twins and static computational models [9] [10].

The implementation of dynamic updating in neurological disorders faces unique challenges, as updating a human system typically necessitates a "human-in-the-loop" approach rather than fully automated updates [9]. Successful implementations have overcome these challenges through various technological strategies. The Cardio Twin architecture demonstrates this capability in another domain with real-time electrocardiogram (ECG) monitoring achieving 85.77% classification accuracy and 95.53% precision through continuous data assimilation [11]. For respiratory monitoring, systems using ESP32 Wi-Fi Channel State Information sensors have achieved 92.3% accuracy in breathing rate estimation, while ML techniques demonstrated classification accuracies of 89.2% for binary-class and 83.7% for multi-class respiratory pattern recognition [11]. In diabetes management, the Exercise Decision Support System (exDSS) for type 1 diabetes provides personalized recommendations during exercise, increasing time in target glucose range from 80.2% to 92.3% through continuous monitoring and model adjustment [11].

Table 2: Dynamic Updating Methodologies and Performance Across Medical Domains

| Update Mechanism | Data Sources | Update Frequency | Performance Metrics | Relevance to Neurology |
|---|---|---|---|---|
| Wearable Sensor Networks | Movement patterns, vital signs, sleep metrics | Continuous real-time | 92.3% accuracy in breathing rate estimation [11] | Motor symptom tracking in Parkinson's, seizure detection in epilepsy |
| Periodic Clinical Assessment | Cognitive testing, imaging, laboratory values | Days to months | Correlation of 0.30-0.39 with 96-week cognitive changes [12] | Disease progression modeling in Alzheimer's and MS |
| Patient-Reported Outcomes | Symptom diaries, medication adherence, quality of life | Daily to weekly | 9-15% reduction in total residual variance in clinical trials [12] | Tracking subjective symptoms in migraine, multiple sclerosis |
| Automated Imaging Analysis | MRI, CT, PET scans | Weeks to months | 16.7% radiation dose reduction while maintaining equivalent outcomes [11] | Tumor response assessment in neuro-oncology |

Predictive Power: Forecasting Disease Trajectories and Treatment Responses

Predictive capability represents the third essential component of the NASEM framework, enabling digital twins to forecast future health states and intervention outcomes under various scenarios. This predictive power transforms digital twins from descriptive tools to proactive decision-support systems [9].

In neurological applications, predictive digital twins have demonstrated significant potential across multiple domains. For Alzheimer's disease trials, AI-generated digital twins have reduced total residual variance by 9-15% while maintaining statistical power, potentially reducing control arm sample size requirements by 17-26% in future trials [12]. In neurodegenerative disease management, physics-based models integrating the Fisher-Kolmogorov equation with anisotropic diffusion have successfully simulated the spread of misfolded proteins across the brain, capturing both spatial and temporal aspects of disease progression [11]. For personalized radiotherapy planning in high-grade gliomas, digital twin approaches have demonstrated either increased tumor control or significant reductions in radiation dose (16.7%) while maintaining equivalent outcomes through accurate prediction of treatment response [11].
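The physics-based approach mentioned above can be illustrated on a brain network. The sketch below is a simplified assumption rather than the cited model: it replaces anisotropic diffusion in continuous tissue with diffusion over a graph Laplacian, giving dc/dt = -kappa * L c + alpha * c * (1 - c), where c is the misfolded-protein concentration per region. The 5-region chain, the parameter values, and the function name are all hypothetical.

```python
import numpy as np


def simulate_network_fk(adjacency, seed_node, kappa=0.5, alpha=1.0,
                        dt=0.01, n_steps=3000, seed_level=0.1):
    """Explicit-Euler integration of a network Fisher-Kolmogorov model.

    dc/dt = -kappa * L c + alpha * c * (1 - c), with L the graph Laplacian.
    Returns an array of shape (n_steps + 1, n_regions).
    """
    laplacian = np.diag(adjacency.sum(axis=1)) - adjacency
    c = np.zeros(adjacency.shape[0])
    c[seed_node] = seed_level            # initial seeding of pathology
    history = np.empty((n_steps + 1, c.size))
    history[0] = c
    for step in range(1, n_steps + 1):
        spread = -kappa * (laplacian @ c)    # transport along connections
        growth = alpha * c * (1.0 - c)       # local logistic amplification
        c = c + dt * (spread + growth)
        history[step] = c
    return history


# Hypothetical 5-region chain: region 0 - 1 - 2 - 3 - 4.
adjacency = np.zeros((5, 5))
for i in range(4):
    adjacency[i, i + 1] = adjacency[i + 1, i] = 1.0

dt = 0.01
history = simulate_network_fk(adjacency, seed_node=0, dt=dt)

# Time at which each region first reaches half-maximal concentration.
half_time = (history >= 0.5).argmax(axis=0) * dt
```

The ordering of `half_time` along the chain gives the spatial sequence of involvement, and its magnitude gives the timing, which is how a model of this kind captures both spatial and temporal aspects of progression. Personalization would mean replacing the chain with a patient's connectome and fitting `kappa` and `alpha` to longitudinal imaging.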

Application Notes: Implementing the NASEM Framework in Neurological Research

Protocol 1: Creating NASEM-Compliant Digital Twins for Alzheimer's Disease Clinical Trials

Background and Purpose: This protocol outlines the methodology for developing prognostic digital twins for Alzheimer's disease clinical trials, based on validated approaches that have received regulatory acceptance [12]. The digital twins serve as prognostic covariates to reduce statistical variance and improve trial power.

Materials and Equipment:

- Historical clinical trial datasets (minimum n=5,000 recommended)

- Target trial baseline characteristics including cognitive assessments, demographic data, and biomarker profiles

- Conditional Restricted Boltzmann Machine (CRBM) or equivalent deep learning architecture

- High-performance computing infrastructure with GPU acceleration

- Data harmonization pipelines for multi-source data integration

Procedure:

1. Data Harmonization and Preprocessing:

- Collect and harmonize data from historical clinical trials and observational studies

- Perform quality control including assessment of missing data patterns and variable distributions

- Create a unified data schema encompassing demographics, genetics, clinical severity, cognitive measures, and functional assessments

2. Model Training and Validation:

- Train the CRBM model on the historical integrated dataset using leave-one-trial-out cross-validation

- Validate the model on independent clinical trial datasets not used in training

- Assess partial correlation between digital twin predictions and actual outcomes across multiple cognitive assessment scales

3. Prognostic Covariate Adjustment in Target Trial:

- Generate digital twins for all participants in the target trial using baseline data

- Incorporate digital twin predictions as covariates in mixed-effects models for repeated measures

- Calculate variance reduction and sample size optimization achieved through covariate adjustment

Quality Control and Validation:

- Establish benchmarking against traditional statistical methods

- Verify consistency of correlation coefficients across validation datasets (target range: 0.30-0.46)

- Ensure computational reproducibility through containerized implementation

Expected Outcomes: Published applications of this approach report a 9-15% reduction in total residual variance, corresponding to sample size reductions of 9-15% overall and 17-26% in control arms at unchanged statistical power [12].
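The link between the twin's correlation with the outcome and the variance reduction can be checked numerically. The sketch below is a simplified illustration on synthetic data: it uses a single-timepoint linear model (ANCOVA) instead of the mixed-effects model for repeated measures named in the protocol, and the correlation of 0.4, the treatment effect, and the sample size are assumed values. For a prognostic score with correlation rho to the outcome, the residual variance is expected to fall by roughly rho squared.

```python
import numpy as np


def residual_variance(y, design):
    """Residual variance of an ordinary least squares fit of y on design."""
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    return resid.var(ddof=design.shape[1])


rng = np.random.default_rng(42)
n = 20000     # synthetic participants (large, to keep sampling noise small)
rho = 0.4     # assumed correlation between twin prediction and outcome

treatment = rng.integers(0, 2, size=n).astype(float)
twin_prediction = rng.standard_normal(n)   # prognostic score from baseline data
noise = rng.standard_normal(n)
outcome = rho * twin_prediction + np.sqrt(1 - rho**2) * noise + 0.2 * treatment

ones = np.ones(n)
unadjusted = np.column_stack([ones, treatment])
adjusted = np.column_stack([ones, treatment, twin_prediction])

var_unadjusted = residual_variance(outcome, unadjusted)
var_adjusted = residual_variance(outcome, adjusted)
reduction = 1.0 - var_adjusted / var_unadjusted   # expected to be close to rho**2
```

Because the required sample size scales with the residual variance, a reduction of this size translates approximately one-to-one into a smaller trial at the same power, which is the mechanism behind the figures quoted above.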

Protocol 2: Dynamic Digital Twins for Progressive Neurological Conditions

Background and Purpose: This protocol describes the development of dynamically updated digital twins for progressive neurological conditions such as Parkinson's disease and multiple sclerosis, enabling continuous adaptation to disease progression and treatment response.

Materials and Equipment:

- Multi-modal data streams (clinical assessments, wearable sensor data, imaging, patient-reported outcomes)

- Cloud-based data assimilation infrastructure

- Ensemble modeling framework combining mechanistic and machine learning approaches

- Real-time data processing pipelines with automated quality checks

Procedure:

1. Initial Model Personalization:

- Develop baseline model using comprehensive initial assessment data

- Incorporate individual neuroanatomy, functional connectivity, and clinical phenotype

- Establish individual-specific parameters for disease progression models

2. Dynamic Updating Implementation:

- Implement automated data ingestion from continuous monitoring sources

- Establish model updating triggers based on data arrival and significance thresholds

- Deploy ensemble filtering techniques for state and parameter estimation

3. Predictive Forecasting and Intervention Planning:

- Generate short-term and long-term forecasts of disease progression

- Simulate intervention effects under multiple scenarios

- Update interventional recommendations based on model predictions and confidence intervals

Quality Control and Validation:

- Implement rigorous VVUQ (verification, validation, and uncertainty quantification) processes

- Establish benchmarking against static models using historical datasets

- Deploy real-time monitoring of model performance metrics with alerting systems

Expected Outcomes: Reported implementations have achieved a prediction accuracy of 97.95% for Parkinson's disease identification and have detected progressive brain tissue loss 5-6 years before clinical symptom onset in multiple sclerosis [11].
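The ensemble filtering step in the procedure above can be sketched with a stochastic ensemble Kalman filter that jointly estimates a state and a parameter. Everything specific in this example is an assumption for illustration: the linear progression model (a symptom score that increases at a constant patient-specific rate), the noise levels, the priors, and the function names.

```python
import numpy as np


def forecast(ensemble, dt):
    """Advance each member: score increases at that member's own rate."""
    out = ensemble.copy()
    out[:, 0] += out[:, 1] * dt
    return out


def enkf_update(ensemble, observation, obs_var, rng):
    """Stochastic EnKF update; columns are [score, rate], the score is observed."""
    n_members = len(ensemble)
    anomalies = ensemble - ensemble.mean(axis=0)
    # Covariance of each augmented-state component with the observed score.
    cov_with_obs = anomalies.T @ anomalies[:, 0] / (n_members - 1)
    gain = cov_with_obs / (cov_with_obs[0] + obs_var)   # Kalman gain, shape (2,)
    # Perturbed observations keep the posterior ensemble spread consistent.
    perturbed = observation + rng.normal(0.0, np.sqrt(obs_var), size=n_members)
    return ensemble + np.outer(perturbed - ensemble[:, 0], gain)


rng = np.random.default_rng(1)
true_rate, obs_var, dt = 2.0, 1.0, 1.0
true_score = 10.0

# Prior ensemble: uncertain baseline score and progression rate.
ensemble = np.column_stack([
    rng.normal(10.0, 2.0, size=200),
    rng.normal(1.0, 1.0, size=200),
])
prior_rate_spread = ensemble[:, 1].std()

for _ in range(20):                      # 20 clinic visits or sensor summaries
    true_score += true_rate * dt
    ensemble = forecast(ensemble, dt)
    measurement = true_score + rng.normal(0.0, np.sqrt(obs_var))
    ensemble = enkf_update(ensemble, measurement, obs_var, rng)

estimated_rate = ensemble[:, 1].mean()
posterior_rate_spread = ensemble[:, 1].std()
```

The progression rate is never observed directly; it is inferred through its covariance with the observed score, which is what makes the twin adapt to the individual patient. The remaining ensemble spread provides the confidence interval used in the forecasting step.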

Table 3: Essential Research Reagents and Computational Resources for Neurological Digital Twins

| Category | Specific Resources | Function/Application | Implementation Notes |
|---|---|---|---|
| Data Acquisition | Wearable sensors (actigraphy, physiological monitors) | Continuous real-world data collection for dynamic updating | Select devices with validated accuracy for target population [11] |
| Data Acquisition | Standardized cognitive assessment batteries | Longitudinal cognitive profiling for personalization and validation | Ensure cultural and linguistic appropriateness for diverse populations |
| Data Acquisition | Multimodal neuroimaging protocols (MRI, PET, fMRI) | Structural and functional characterization for model initialization | Implement standardized acquisition protocols across sites |
| Computational Infrastructure | High-performance computing clusters with GPU acceleration | Training of complex models (CRBM, deep neural networks) | Cloud-based solutions facilitate collaboration and scalability [12] |
| Computational Infrastructure | Federated learning frameworks | Multi-institutional collaboration while preserving data privacy | Essential for rare disease research with distributed patient populations |
| Computational Infrastructure | Containerized deployment platforms (Docker, Singularity) | Reproducible model implementation and validation | Critical for regulatory submission and peer verification |
| Modeling Approaches | Conditional Restricted Boltzmann Machines (CRBM) | Generation of prognostic digital twins for clinical trials | Particularly effective for Alzheimer's disease applications [12] |
| Modeling Approaches | Physics-based progression models (Fisher-Kolmogorov equations) | Simulation of neurodegenerative protein spread | Captures spatiotemporal aspects of disease progression [11] |
| Modeling Approaches | Hybrid mechanistic-machine learning frameworks | Combining domain knowledge with data-driven insights | Balances interpretability with predictive power |

Technical Considerations and Implementation Challenges

Verification, Validation, and Uncertainty Quantification (VVUQ)

The NASEM framework emphasizes VVUQ as a critical standard for ensuring digital twin reliability [9]. However, current implementations show significant gaps in this area, with only two studies in a recent comprehensive review mentioning VVUQ processes [9]. For neurological applications, rigorous VVUQ should include:

- Verification: Ensuring the computational model correctly implements the intended mathematical framework

- Validation: Assessing how accurately the model represents real-world neurological processes

- Uncertainty Quantification: Characterizing and communicating uncertainties in model predictions

Implementation of comprehensive VVUQ requires specialized expertise in computational neuroscience, statistics, and domain-specific clinical knowledge. Establishing standardized VVUQ protocols for neurological digital twins remains an urgent research priority.
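As one concrete form of the uncertainty quantification item above, parameter uncertainty can be propagated through a progression model by Monte Carlo sampling. The sketch below assumes a logistic progression curve and a normally distributed progression rate; the model, its parameter values, and the function name are illustrative assumptions, not a published protocol.

```python
import numpy as np


def logistic_progression(t, rate, baseline=0.1):
    """Closed-form logistic curve for a normalized disease burden in [0, 1]."""
    return 1.0 / (1.0 + (1.0 - baseline) / baseline * np.exp(-rate * t))


rng = np.random.default_rng(7)

# Assumed posterior for the patient's progression rate (per year).
rate_samples = rng.normal(loc=0.5, scale=0.05, size=5000)

# Propagate every sampled rate to a 5-year forecast.
predictions = logistic_progression(5.0, rate_samples)

# Report a median forecast with a 95% interval instead of a single number.
lower, median, upper = np.percentile(predictions, [2.5, 50.0, 97.5])
```

Reporting `lower` and `upper` alongside the median communicates how much of the forecast rests on uncertain parameters. This covers parameter uncertainty only; model-form and measurement uncertainty would need separate treatment.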

Computational and Infrastructure Requirements

The development of NASEM-compliant digital twins demands substantial computational resources. Successful implementations have leveraged:

- High-performance computing environments with parallel processing capabilities

- Secure data storage infrastructure for multimodal neurological data

- Automated pipelines for data preprocessing, quality control, and model updating

- Visualization platforms for interpreting complex model outputs for clinical decision support

Computational efficiency considerations are particularly important for real-time clinical applications, where model predictions must be available within clinically relevant timeframes.

The NASEM framework provides a rigorous foundation for developing digital twins that meet the complex challenges of neurological disease research. By emphasizing personalization, dynamic updating, and predictive power, the framework addresses critical needs in understanding and treating conditions such as Alzheimer's disease, Parkinson's disease, multiple sclerosis, and brain tumors.

Current research demonstrates the substantial potential of this approach, with documented improvements in prediction accuracy, clinical trial efficiency, and personalized treatment planning. However, full implementation across the NASEM criteria remains challenging, with only approximately 12% of current studies meeting all requirements [9]. Continued advancement in data integration, computational methods, and validation frameworks will be essential for realizing the full potential of digital twins in neurological research and clinical care.

The digital twin (DT) concept has undergone a significant transformation from its industrial origins to its current status as a tool in neurological research and clinical practice. The concept was first introduced by Grieves in 2002 as a "conceptual ideal" for product lifecycle management, and the term itself was later coined by NASA in 2010 for aerospace applications. The framework originally consisted of three core elements: a physical system, its virtual representation, and a bidirectional information flow linking them [9] [11] [13]. This technology has since moved beyond its engineering roots into healthcare, where it enables highly personalized medicine through patient-specific models that simulate disease progression and treatment response [11].

In neurological applications, digital twins represent a paradigm shift from population-based medicine to truly individualized patient care. By creating virtual replicas of patient brains, researchers and clinicians can now run simulations to predict disease trajectories, optimize therapeutic interventions, and understand individual brain dynamics without risking patient safety [14]. This review examines the historical trajectory of digital twin technology and its current applications in neurology, providing detailed protocols and analytical frameworks for researchers working at the intersection of computational modeling and neurological disease progression.

From Industry to Clinic: The Healthcare Transformation

The transition of digital twins from industrial to medical applications represents one of the most significant technological migrations in recent scientific history. In clinical applications, DTs facilitate personalized medicine by enabling the construction of patient-specific models that integrate data from electronic health records (EHR), imaging modalities, and Internet of Things (IoT) devices to account for individual physiological and historical nuances [11]. This fundamental shift required adapting industrial concepts to the complexity and variability of human physiology, particularly the challenges of modeling the most complex biological system—the human brain.

According to a comprehensive scoping review of Human Digital Twins (HDTs) in healthcare, the National Academies of Sciences, Engineering, and Medicine (NASEM) has established a precise definition requiring that a digital twin must be personalized, dynamically updated, and have predictive capabilities to inform clinical decision-making [9]. This definition provides a crucial framework for distinguishing true digital twins from related concepts such as digital models (no automatic data exchange) and digital shadows (one-way data flow only) [9].

Table 1: Digital Twin Classification in Healthcare Based on NASEM Criteria

| Model Type | Prevalence in Healthcare Literature | Key Characteristics | Neurological Examples |
|---|---|---|---|
| True Digital Twin | 12.08% (18 of 149 studies) | Personalized, dynamically updated, enables decision support | Virtual Brain Twins for epilepsy surgery planning |
| Digital Shadow | 9.40% (14 studies) | One-way data flow from physical to virtual | Brain activity monitoring systems |
| Digital Model | 37.58% (56 studies) | Personalized but not dynamically updated | Static computational brain models |
| Virtual Patient Cohorts | 10.07% (15 studies) | Generated populations for in-silico trials | Synthetic populations for Alzheimer's trial optimization |

The table above illustrates that only a minority of models labeled as "digital twins" in healthcare literature actually meet the full NASEM criteria, highlighting the importance of precise terminology and methodological rigor in this emerging field [9].
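
The prevalence figures in Table 1 can be checked directly from the study counts. The short sketch below recomputes each percentage against the 149 studies reported in the scoping review; the dictionary and function names are illustrative and do not come from [9].

```python
# Study counts as reported in Table 1 (149 studies in total in the scoping review).
TOTAL_STUDIES = 149
counts = {
    "True Digital Twin": 18,
    "Digital Shadow": 14,
    "Digital Model": 56,
    "Virtual Patient Cohorts": 15,
}


def prevalence_percent(count: int, total: int = TOTAL_STUDIES) -> float:
    """Return the share of studies in a category as a percentage (2 decimals)."""
    return round(100 * count / total, 2)


prevalence = {name: prevalence_percent(n) for name, n in counts.items()}
for name, pct in prevalence.items():
    print(f"{name}: {pct:.2f}%")

# The four categories listed in Table 1 do not cover all 149 studies.
listed_total = sum(counts.values())
print(f"Studies in listed categories: {listed_total} of {TOTAL_STUDIES}")
```

The four categories shown account for 103 of the 149 studies, so the table should be read as a partial breakdown rather than a complete partition of the reviewed literature.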

Neurological Applications: Current Landscape

Digital twin technology has demonstrated remarkable potential across various neurological conditions, enabling unprecedented insights into disease progression and treatment planning. The applications span from neurodegenerative disorders to epilepsy and brain tumors, each with distinct methodological approaches and clinical implications.

Virtual Brain Twins (VBTs)

Virtual Brain Twins represent one of the most advanced implementations of digital twin technology in neuroscience. A VBT combines a person's structural brain data (from MRI or diffusion imaging) with functional data measuring brain activity (such as EEG, MEG, or fMRI) to build a computational model of their brain's network [14]. This network model simulates how different brain regions are connected and communicate through neural pathways, creating a personalized digital replica that can be used to test "what-if" scenarios in a safe, virtual environment before applying treatments or interventions to the patient [14].

The creation of a VBT involves three key steps: (1) Structural mapping where brain scans are processed to identify regions (nodes) and the white-matter connections between them (the connectome); (2) Model integration where each node is assigned a mathematical model representing the average activity of large groups of neurons, while the connectome defines how signals travel between regions; and (3) Personalization through data where functional brain recordings fine-tune the model using statistical inference, adapting it to the individual's physiology and condition [14].

Specific Neurological Applications

Table 2: Digital Twin Applications in Neurology

| Condition | Digital Twin Application | Reported Efficacy/Performance |
|---|---|---|
| Epilepsy | Virtual Epileptic Patient model identifies seizure origins and evaluates tailored surgical strategies | Enables precise intervention planning for drug-resistant cases [14] |
| Alzheimer's Disease | Physics-based models integrate the Fisher-Kolmogorov equation with anisotropic diffusion to simulate misfolded protein spread | Captures spatial and temporal aspects of neurodegenerative disease progression [11] |
| Multiple Sclerosis | Modeling how lesions disrupt brain connectivity | Informs rehabilitation and therapy planning; reveals tissue loss begins 5-6 years before clinical onset [11] [14] |
| Parkinson's Disease | DT-based Healthcare Systems for remote monitoring and prediction | Achieves 97.95% prediction accuracy for earlier identification [11] |
| Brain Tumors | Hybrid approaches combining Semi-Supervised Support Vector Machine and improved AlexNet CNN | 92.52% feature recognition accuracy; personalized radiotherapy planning enables 16.7% radiation dose reduction while maintaining outcomes [11] |
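
For readers unfamiliar with the Alzheimer's disease entry in Table 2, the Fisher-Kolmogorov model is a reaction-diffusion equation. A commonly used form with anisotropic diffusion is sketched below. The notation is an assumption made for illustration and is not taken from [11]: c is the normalized misfolded protein concentration, α a local growth rate, n the local axonal fiber direction, and d^ext and d^axn the extracellular and axonal diffusion coefficients.

```latex
\[
\frac{\partial c}{\partial t}
  = \nabla \cdot \left( \mathbf{D}\, \nabla c \right) + \alpha\, c\, (1 - c),
\qquad
\mathbf{D} = d^{\mathrm{ext}}\, \mathbf{I} + d^{\mathrm{axn}}\, \mathbf{n} \otimes \mathbf{n}
\]
```

The diffusion term spreads the protein preferentially along fiber tracts when d^axn exceeds d^ext, while the logistic reaction term drives local concentration from the healthy state (c = 0) toward saturation (c = 1).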

Experimental Protocols and Methodologies

Protocol 1: Generating AI-Based Digital Twins for Alzheimer's Clinical Trials

This protocol outlines the methodology for creating and implementing AI-generated digital twins to enhance clinical trial efficiency in Alzheimer's disease research, based on the approach used in the AWARE trial analysis [12].

Materials and Data Requirements:

- Historical Training Data: Harmonized dataset combining control arms from 29 clinical trials (≥7,000 participants) and observational studies (1,728 participants) from sources like CODR-AD and ADNI [12]

- Baseline Variables: Demographics, genetics, clinical severity scores, component-level cognitive and functional measures, laboratory measurements [12]

- Target Trial Data: Baseline patient characteristics from the clinical trial being enhanced (e.g., AWARE trial with 453 MCI/mild AD patients) [12]

Methodological Steps:

Model Training:

- Train a Conditional Restricted Boltzmann Machine (CRBM) on the historical dataset after data harmonization

- A CRBM is an unsupervised, probabilistic neural network model

- Pre-train and validate the model prior to application to the target trial

Digital Twin Generation:

- Input baseline data from each trial participant into the trained CRBM

- Generate individualized predictions of each participant's clinical outcomes if they had received placebo

- Output includes comprehensive, longitudinal computationally generated clinical records

Statistical Integration:

- Incorporate DT predictions as prognostic covariates in statistical models using PROCOVA (prognostic covariate adjustment) or PROCOVA-Mixed-Effects Model for Repeated Measures (MMRM)

- Analyze treatment effects while accounting for expected placebo progression

- Assess variance reduction and potential sample size savings

Validation and Quality Control:

- Evaluate partial correlation coefficients between DTs and actual change scores from baseline (expected range: 0.30-0.46 based on validation studies) [12]

- Calculate total residual variance reduction (typically ~9% to 15% with DTs) [12]

- Determine potential sample size reductions while maintaining statistical power (total sample: ~9-15%; control arm: 17-26%) [12]
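
The link between the correlation and variance figures above can be made explicit with the standard approximation for linear covariate adjustment. This is a sketch under the assumption of a single prognostic covariate with correlation ρ to the outcome; it is not necessarily the exact calculation used in [12]. Here σ² is the unadjusted residual variance and n the unadjusted sample size.

```latex
\[
\sigma^2_{\mathrm{adj}} = (1 - \rho^2)\, \sigma^2
\quad \Longrightarrow \quad
\frac{n_{\mathrm{adj}}}{n} \approx 1 - \rho^2
\]
\[
\rho = 0.30 \;\Rightarrow\; \rho^2 = 0.09,
\qquad
\rho = 0.39 \;\Rightarrow\; \rho^2 \approx 0.15,
\qquad
\rho = 0.46 \;\Rightarrow\; \rho^2 \approx 0.21
\]
```

Under this approximation, correlations of 0.30 to 0.39 correspond to the reported variance reduction of roughly 9% to 15%, and the upper end of the validation range (0.46) would correspond to about 21%.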

Protocol 2: Virtual Brain Twin Development for Surgical Planning

This protocol details the creation of personalized Virtual Brain Twins for neurological conditions requiring surgical intervention, such as drug-resistant epilepsy [14].

Data Acquisition and Preprocessing:

- Structural Imaging: Acquire high-resolution T1-weighted MRI and diffusion-weighted imaging (DWI) for connectome reconstruction

- Functional Data: Collect resting-state fMRI, task-based fMRI, EEG, and/or MEG data

- Clinical Phenotyping: Document seizure semiology, frequency, medication history, and prior interventions

Computational Modeling Pipeline:

Structural Connectome Reconstruction:

- Process structural MRI using FreeSurfer or similar pipeline for cortical parcellation

- Reconstruct white matter pathways from DWI using tractography (e.g., probabilistic tractography in FSL or MRtrix)

- Create adjacency matrix representing connection strengths between brain regions

Neural Mass Model Implementation:

- Implement a system of differential equations representing average neuronal population activity

- Parameters include synaptic gains, time constants, and propagation speeds

- Use The Virtual Brain (TVB) platform or custom MATLAB/Python code

Model Personalization and Fitting:

- Adjust model parameters to minimize difference between simulated and empirical functional data

- Apply Bayesian inference or similar optimization approaches

- Validate model by comparing simulated activity with held-out empirical data

Intervention Simulation:

- Simulate surgical resections by removing corresponding nodes/connections

- Test neuromodulation parameters (e.g., DBS, responsive neurostimulation)

- Predict post-intervention network dynamics and clinical outcomes
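
The pipeline above can be illustrated with a deliberately small sketch. It assumes a three-region toy connectome and a simple rate model with a tanh transfer function as a stand-in for a full neural mass model; it does not use the TVB API, and every name and parameter value below is hypothetical.

```python
import math


def simulate(weights, drive, coupling=0.5, tau=0.01, dt=0.001, steps=1000):
    """Euler-integrate dx_i/dt = (-x_i + tanh(coupling * sum_j W_ij x_j + drive_i)) / tau.

    Returns the regional activity after the final step.
    """
    n = len(weights)
    x = [0.0] * n
    for _ in range(steps):
        net_input = [
            coupling * sum(weights[i][j] * x[j] for j in range(n)) + drive[i]
            for i in range(n)
        ]
        x = [x[i] + dt * (-x[i] + math.tanh(net_input[i])) / tau for i in range(n)]
    return x


def resect(weights, nodes):
    """Return a copy of the connectome with all connections of `nodes` set to zero."""
    removed = set(nodes)
    n = len(weights)
    return [
        [0.0 if i in removed or j in removed else weights[i][j] for j in range(n)]
        for i in range(n)
    ]


# Toy adjacency matrix: region 0 connects to region 1, which relays to region 2.
connectome = [
    [0.0, 1.0, 0.0],
    [1.0, 0.0, 0.5],
    [0.0, 0.5, 0.0],
]
drive = [1.0, 0.0, 0.0]  # external drive applied to region 0 only

before = simulate(connectome, drive)
after = simulate(resect(connectome, [1]), drive)  # virtual resection of the relay region

print("before resection:", [round(v, 3) for v in before])
print("after resection: ", [round(v, 3) for v in after])
```

In this toy network, removing the relay region stops activity from reaching region 2, which is the kind of what-if comparison the intervention simulation step is meant to support. A realistic VBT would replace the rate model with a fitted neural mass model and the toy matrix with a tractography-derived connectome.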

Validation Framework:

- Compare predicted seizure propagation pathways with intracranial EEG findings

- Assess accuracy of predicted clinical outcomes following intervention

- Evaluate model robustness through sensitivity analysis and uncertainty quantification

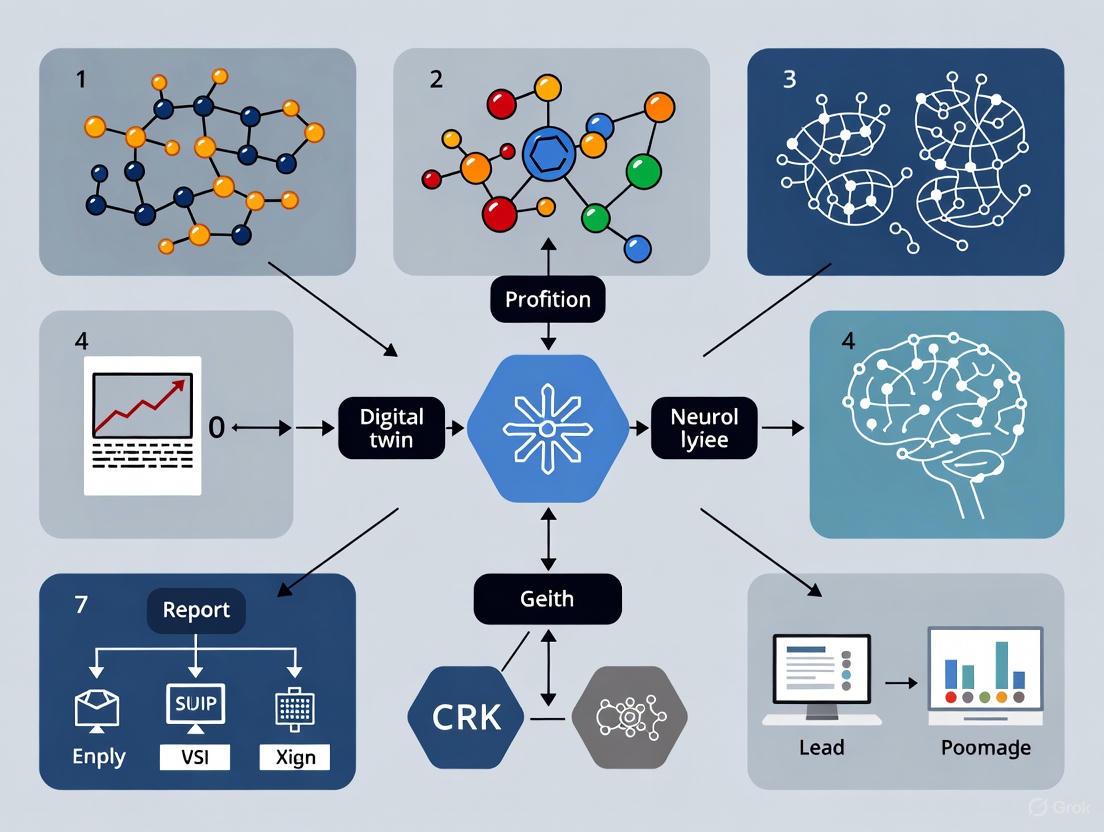

Visualization: Digital Twin Workflow in Neurological Research

Figure 1: Digital Twin Framework for Neurological Applications. This workflow diagram illustrates the bidirectional data flow between physical patient systems and their virtual counterparts, highlighting continuous data integration, computational modeling, and clinical application pathways.

Table 3: Essential Research Resources for Neurological Digital Twin Development

| Resource Category | Specific Tools/Platforms | Function/Purpose |
|---|---|---|
| Data Acquisition | MRI, diffusion MRI, EEG, MEG, fMRI | Capture structural and functional brain data for model personalization [14] |
| Computational Modeling | The Virtual Brain (TVB), Finite Element Analysis, Neural Mass Models | Implement mathematical representations of brain dynamics and physiology [14] |
| AI/ML Frameworks | Conditional Restricted Boltzmann Machines (CRBM), Deep Generative Models | Generate predictive digital twins and simulate disease progression [12] |
| Data Harmonization | CODR-AD, ADNI, Disease-specific Registries | Provide historical training data from clinical trials and observational studies [12] |
| Validation Frameworks | Verification, Validation, Uncertainty Quantification (VVUQ) | Ensure model reliability and predictive accuracy [9] |
| Clinical Integration | PROCOVA, PROCOVA-MMRM Statistical Methods | Incorporate digital twin predictions into clinical trial analysis [12] |

The migration of digital twin technology from industrial applications to neurological research represents a remarkable example of cross-disciplinary innovation. The historical trajectory demonstrates how core engineering concepts have been adapted to address the profound complexity of human brain function and neurological disease. Current applications in epilepsy, Alzheimer's disease, multiple sclerosis, Parkinson's disease, and brain tumors show consistent patterns of success in prediction accuracy, treatment optimization, and clinical decision support [11] [14].

Future development must address several critical challenges, including the need for more comprehensive validation frameworks, improved data integration across multiple scales, and solutions to computational scalability constraints. Furthermore, as noted in the scoping review [9], only 12.08% of current healthcare digital twin implementations fully meet the NASEM criteria, indicating substantial room for methodological refinement. The continued evolution of this technology promises to accelerate the transition from reactive to predictive and preventive neurology, ultimately enabling truly personalized therapeutic strategies based on individual brain dynamics and disease trajectories.

Application Note: Digital Twin Modeling of Neurological Systems

Core Concept and Rationale

Digital twins (DTs) represent a transformative approach in neurological research and drug development, creating dynamic, virtual replicas of patient-specific physiological systems. These computational models integrate multi-scale data, from molecular pathways to whole-organ systems, to predict disease progression and treatment responses in silico. Their fundamental value proposition is the ability to reduce clinical trial sample sizes by 9-15% while maintaining statistical power, and specifically to decrease control arm requirements by 17-26%, by generating artificial intelligence-powered prognostic covariates [12]. This technology has received positive qualification opinions from regulatory agencies including the European Medicines Agency and U.S. Food and Drug Administration for clinical trial applications [12].

For neurological disorders specifically, digital twins address critical challenges including disease heterogeneity, high variability in clinical outcomes, and the limited generalizability of traditional randomized controlled trials. By creating patient-specific computational models that forecast individual placebo outcomes based on baseline characteristics, researchers can reduce statistical uncertainty and improve trial efficiency without compromising validity [12] [15]. The adaptive nature of digital twins—continuously updating with real-time data—distinguishes them from conventional simulations, enabling truly personalized predictive modeling in neurological care [5].

Quantitative Validation in Neurological Disorders

Table 1: Digital Twin Performance Metrics in Alzheimer's Disease Clinical Trials

| Performance Metric | AWARE Trial Results (Week 96) | Independent Trial Validation Range | Impact on Trial Efficiency |
|---|---|---|---|
| Partial Correlation with Cognitive Change | 0.30-0.39 | 0.30-0.46 | Improved prognostic accuracy |
| Residual Variance Reduction | 9-15% | N/A | Enhanced statistical power |
| Total Sample Size Reduction | 9-15% | N/A | Reduced recruitment burden |
| Control Arm Size Reduction | 17-26% | N/A | Ethical improvement |
| Primary Assessment Scales | CDR-SB, ADAS-Cog 14, MMSE, FAQ | Consistent across multiple trials | Multiple endpoint validation |

Data derived from application of digital twin methodology to the AWARE Phase 2 clinical trial (NCT02880956) in Alzheimer's Disease [12]

Protocol: Implementing Digital Twin Framework for Neurological Disease Modeling

Data Acquisition and Integration Protocol

Multi-Modal Data Sourcing

The foundational step in digital twin creation involves comprehensive data aggregation from diverse sources. Implement a structured acquisition protocol covering these data streams:

Clinical and Demographic Data: Extract from electronic health records including age, education, medical history, medication history, and family history. For Alzheimer's applications, ensure capture of baseline MMSE (22.2 ± 4.6 typical in training cohorts), CDR-SB (2.7 ± 2.2), and ADAS-Cog 14 scores [12].

Genetic and Molecular Profiling: Collect APOE ε4 genotype status, amyloid status (where available), and other relevant biomarker data. Historical training datasets typically contain 20-30% complete genetic information [12].

Wearable and Continuous Monitoring: Implement devices for real-time physiological tracking including heart rate, blood pressure, end-tidal CO₂, electroencephalogram, and cerebral blood flow measurements. Sample at a minimum frequency of 1 Hz for autonomic function assessment [5].

Patient-Reported Outcomes: Standardize collection of symptom logs, activity levels, sleep patterns, stress measurements, and medication adherence using validated instruments.

Environmental Context: Document relevant environmental factors such as weather conditions, altitude, and temperature that may influence autonomic nervous system function [5].

Data Harmonization Procedure

- Execute ETL (Extract, Transform, Load) Pipeline to integrate heterogeneous data from clinical trials, observational studies, and real-world evidence sources.

- Apply syntactic normalization to standardize variable names, units, and formats across datasets.

- Implement semantic harmonization to resolve coding system differences (e.g., ICD-9 vs ICD-10, various cognitive assessment versions).

- Handle missing data using multiple imputation techniques validated for neurological datasets.
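
A minimal sketch of the normalization and harmonization steps is shown below, using plain dictionaries as records. The variable names, the alias table, and the sex coding scheme are all hypothetical examples chosen for illustration; a real pipeline would draw these mappings from each dataset's data dictionary, and missing values flagged here would then be passed to a multiple imputation procedure.

```python
LB_TO_KG = 0.45359237  # exact definition of the international pound in kilograms

# Hypothetical source-to-harmonized variable names (syntactic normalization).
ALIASES = {
    "MMSE_TOTAL": "mmse",
    "mmse_score": "mmse",
    "WEIGHT_LB": "weight_kg",
    "weight_kg": "weight_kg",
}
# Unit conversions keyed by the source variable name.
CONVERTERS = {"WEIGHT_LB": lambda pounds: pounds * LB_TO_KG}
# Hypothetical value recoding between coding systems (semantic harmonization).
SEX_CODES = {"M": "male", "F": "female", 1: "male", 2: "female"}


def harmonize(record):
    """Map one source record onto harmonized names, units, and codes."""
    harmonized = {}
    for name, value in record.items():
        if value is not None and name in CONVERTERS:
            value = CONVERTERS[name](value)
        if name.lower() == "sex" and value is not None:
            value = SEX_CODES.get(value, value)
        harmonized[ALIASES.get(name, name.lower())] = value
    return harmonized


trial_row = harmonize({"MMSE_TOTAL": 24, "WEIGHT_LB": 150.0, "SEX": "F"})
registry_row = harmonize({"mmse_score": None, "weight_kg": 70.0, "sex": 1})

# Flag variables that still need imputation after harmonization.
missing = [name for name, value in registry_row.items() if value is None]
print(trial_row)
print(registry_row, "missing:", missing)
```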

Model Training and Validation Protocol

Algorithm Selection and Configuration

For neurological applications, the Conditional Restricted Boltzmann Machine (CRBM) has demonstrated robust performance in generating digital twins:

Training Protocol:

- Pre-training Phase: Initialize CRBM model on harmonized historical dataset (6,736 unique subjects from CODR-AD and ADNI databases) [12].

- Feature Engineering: Incorporate 66+ harmonized variables spanning demographics, genetics, clinical severity, cognitive measures, and laboratory values.

- Transfer Learning: Fine-tune pre-trained weights on target trial population (e.g., 453 subjects for AWARE Alzheimer's trial).

- Hyperparameter Optimization: Conduct systematic search over learning rate (0.001-0.01), hidden units (50-500), and regularization parameters.
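
One simple way to explore the ranges quoted above is random search, sketched here as an illustrative alternative to an exhaustive grid. The scoring function is a placeholder: in practice it would train the CRBM with the given configuration and return a validation loss. The regularization range is an assumption, since the source does not specify one.

```python
import random


def sample_config(rng):
    """Draw one configuration from the search ranges."""
    return {
        # Learning rate sampled log-uniformly between 0.001 and 0.01.
        "learning_rate": 10 ** rng.uniform(-3.0, -2.0),
        "hidden_units": rng.randint(50, 500),
        # Assumed range for an L2 penalty; not given in the source.
        "l2_penalty": 10 ** rng.uniform(-6.0, -2.0),
    }


def random_search(score_fn, n_trials=20, seed=0):
    """Return the sampled configuration with the lowest score."""
    rng = random.Random(seed)
    configs = [sample_config(rng) for _ in range(n_trials)]
    return min(configs, key=score_fn)


def placeholder_validation_loss(config):
    """Hypothetical stand-in for training a model and measuring validation loss."""
    return abs(config["learning_rate"] - 0.003) + abs(config["hidden_units"] - 200) / 1000


best = random_search(placeholder_validation_loss)
print(best)
```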

Validation Framework

Implement rigorous multi-level validation to ensure model reliability:

- Internal Validation: Repeated hold-out cross-validation within the training dataset, using a random 80/20 split repeated for 100 iterations.

- External Validation: Application to three independent clinical trials with correlation benchmarks of 0.30-0.46 against actual clinical outcomes [12].

- Prospective Validation: Blind prediction of ongoing trial outcomes before unblinding.
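
The internal validation step can be sketched as repeated random 80/20 splits over subject indices. This is one reasonable reading of the procedure described above, and the function name and seed are illustrative. Splitting by subject rather than by visit keeps all longitudinal records for a person on the same side of the split.

```python
import random


def repeated_holdout_splits(n_subjects, test_fraction=0.2, iterations=100, seed=0):
    """Yield (train_indices, test_indices) pairs from repeated random splits."""
    rng = random.Random(seed)
    indices = list(range(n_subjects))
    n_test = round(n_subjects * test_fraction)
    for _ in range(iterations):
        shuffled = indices[:]
        rng.shuffle(shuffled)
        yield shuffled[n_test:], shuffled[:n_test]


splits = list(repeated_holdout_splits(n_subjects=50))
train, test = splits[0]
print(len(splits), len(train), len(test))
```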

Experimental Workflow for Therapeutic Testing

In Silico Clinical Trial Implementation

Table 2: Research Reagent Solutions for Digital Twin Experiments

| Reagent/Category | Specification/Platform | Primary Function | Example Sources |
|---|---|---|---|
| Data Integration | CODR-AD, ADNI Databases | Historical training data with control arms | CPAD Consortium [12] |
| Modeling Framework | CRBM, Simply, Open Digital Twin Project | Core AI algorithms for twin generation | Open-source Python platforms [5] |
| Simulation Environment | PyTwin, TumorTwin-derived platforms | Running virtual treatment scenarios | Academic/industry collaborations [5] |
| Validation Tools | SHAP, PROCOVA-MMRM | Model interpretability and statistical adjustment | Regulatory guidance [12] [15] |
| Clinical Endpoints | CDR-SB, ADAS-Cog 14, MMSE, FAQ | Standardized outcome measures for validation | NIH/consortium standards [12] |

Protocol Execution Steps

Baseline Digital Twin Generation:

- Input comprehensive baseline data for each participant

- Generate individualized placebo progression forecasts using validated CRBM

- Establish pre-treatment prognostic scores for covariate adjustment

Virtual Cohort Simulation:

- Create synthetic control arms mirroring real participant characteristics

- Simulate virtual treatment groups by applying investigational drug effects

- Run 10,000+ in silico trial iterations with parameter variations

Integrated Analysis:

- Implement PROCOVA-Mixed Effects Model for Repeated Measures (PROCOVA-MMRM)

- Adjust for digital twin prognostics as covariates in primary analysis

- Calculate variance reduction and power improvements
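
The principle behind prognostic covariate adjustment can be illustrated with a simulated two-arm trial and an ordinary ANCOVA with a common slope. This sketch is a simplification: it uses a single endpoint rather than the repeated-measures structure of PROCOVA-MMRM, and the prognostic score is synthetic rather than CRBM-generated. The effect size, correlation, and sample size are assumed values.

```python
import random
import statistics


def simulate_trial(n_per_arm=1000, effect=0.5, rho=0.35, seed=1):
    """Return (arm, prognostic_score, outcome) rows; outcome has unit variance per arm."""
    rng = random.Random(seed)
    rows = []
    for arm in (0, 1):
        for _ in range(n_per_arm):
            score = rng.gauss(0.0, 1.0)
            noise = rng.gauss(0.0, 1.0)
            outcome = effect * arm + rho * score + (1 - rho**2) ** 0.5 * noise
            rows.append((arm, score, outcome))
    return rows


def ancova(rows):
    """Fit outcome ~ arm + score and return effects and residual variances."""
    arms = (0, 1)
    mean_x = {a: statistics.fmean(r[1] for r in rows if r[0] == a) for a in arms}
    mean_y = {a: statistics.fmean(r[2] for r in rows if r[0] == a) for a in arms}
    # Within-arm sums of squares and cross-products give the common slope.
    sxx = sum((r[1] - mean_x[r[0]]) ** 2 for r in rows)
    sxy = sum((r[1] - mean_x[r[0]]) * (r[2] - mean_y[r[0]]) for r in rows)
    syy = sum((r[2] - mean_y[r[0]]) ** 2 for r in rows)
    slope = sxy / sxx
    n = len(rows)
    var_unadjusted = syy / (n - 2)
    var_adjusted = (syy - slope * sxy) / (n - 3)
    effect_unadjusted = mean_y[1] - mean_y[0]
    effect_adjusted = effect_unadjusted - slope * (mean_x[1] - mean_x[0])
    return effect_unadjusted, effect_adjusted, var_unadjusted, var_adjusted


rows = simulate_trial()
effect_unadj, effect_adj, var_unadj, var_adj = ancova(rows)
variance_reduction = 1 - var_adj / var_unadj
print(f"adjusted effect: {effect_adj:.3f}, variance reduction: {variance_reduction:.1%}")
```

With a simulated correlation of 0.35, the residual variance reduction should land near the square of that correlation, which is the mechanism by which a prognostic covariate increases power or allows a smaller sample.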

Application Note: Pathway-Specific Targeting in Neurological Digital Twins

Multi-Scale Physiological Targeting

Digital twin technology enables unprecedented resolution in modeling neurological systems across multiple biological scales. The most effective implementations target specific physiological subsystems with appropriate modeling techniques:

Cellular Pathway Modeling: Approach signaling pathways not as fixed circuits but as plastic, proto-cognitive systems capable of memory and adaptation. This perspective enables modeling of phenomena like pharmacodynamic tolerance and sensitization at the molecular level [16]. Digital twins can simulate how repeated drug exposures alter pathway responsiveness over time, addressing significant challenges in chronic neurological disease management.

Autonomic Nervous System Implementation: For disorders like Postural Tachycardia Syndrome (POTS), digital twins create virtual replicas of autonomic cardiovascular control. These models integrate real-time data from wearables with mechanistic knowledge of cerebral blood flow regulation, orthostatic tachycardia mechanisms, and neurotransmitter dynamics [5]. The twins can predict cerebral hypoperfusion events by detecting precursor signals like inappropriate hyperventilation before symptom onset.

Whole-Brain System Integration: At the highest level, digital twins model brain-body physiology through hierarchical control loops spanning local, reflex, and central regulation levels [17]. This enables researchers to simulate how investigational therapies might affect the integrated system rather than isolated components.

Protocol Implementation for Autonomic Disorders

The following specialized protocol extends the general digital twin framework for autonomic nervous system applications:

Specialized Data Acquisition:

- Implement continuous non-invasive cardiac monitoring (heart rate, HRV)

- Measure end-tidal CO₂ as surrogate for cerebral blood flow regulation

- Record transcranial Doppler measurements when available

- Track symptom triggers and environmental context

Autonomic-Specific Modeling:

- Mechanistic Component: Implement known physiology of baroreflex function, respiratory-cardiovascular coupling, and cerebral autoregulation

- AI Component: Train machine learning algorithms on autonomic testing results (deep breathing, Valsalva, head-up tilt)

- Integration Layer: Fuse mechanistic and AI approaches through weighted consensus forecasting
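
The source does not specify how the consensus weights are chosen. One common option, shown here purely as an assumption, is inverse-variance weighting, where each model's forecast is weighted by the reciprocal of its historical error variance. The example values are invented.

```python
def weighted_consensus(forecasts, error_variances):
    """Combine forecasts with weights proportional to inverse error variance."""
    raw = [1.0 / variance for variance in error_variances]
    total = sum(raw)
    weights = [w / total for w in raw]
    combined = sum(w * f for w, f in zip(weights, forecasts))
    return combined, weights


# Hypothetical standing heart rate forecasts (beats per minute):
# mechanistic model predicts 110 (error variance 4), ML model predicts 120 (variance 16).
combined, weights = weighted_consensus([110.0, 120.0], [4.0, 16.0])
print(f"consensus forecast: {combined:.1f} bpm, weights: {weights}")
```

Here the mechanistic forecast receives a weight of 0.8 and the learned forecast 0.2, giving a consensus of 112 bpm. Other fusion schemes, such as stacking or Bayesian model averaging, would fit the same integration layer.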

Intervention Simulation:

- Test medication responses (e.g., midodrine (ProAmatine), pyridostigmine) in silico before clinical use

- Simulate lifestyle interventions (exercise protocols, dietary modifications)

- Predict orthostatic tolerance under various environmental conditions

This specialized approach demonstrates how digital twin technology can be adapted to the unique challenges of specific neurological disease classes, from neurodegenerative conditions to autonomic disorders.

The convergence of systems medicine, the digital revolution, and social networking is catalyzing a profound shift in neurological care, embodied by the P4 medicine framework: Predictive, Preventive, Personalized, and Participatory. This approach represents a fundamental transformation from reactive to proactive medicine, examining patients through the lens of their interconnected biological parts and the dynamic functioning of their systems [18]. In neurological disciplines, disease emerges from the perturbation of informational networks that extend from the genome to molecules, cells, organs, the individual, and ultimately social networks [18]. The P4 approach leverages multidimensional data and machine-learning algorithms to develop interventions and monitor population health status with a focus on wellbeing and healthy ageing, making it particularly relevant to neurological disorders [19].

Digital twin technology serves as the foundational engine for implementing P4 neurology in clinical practice and research. A digital twin is a dynamic, virtual replica of a patient's physiological system—in this context, the brain and nervous system—that continuously updates with real-time data to mirror the life cycle of its physical counterpart [5] [4]. This technology enables researchers and clinicians to move beyond traditional static models to interactive, adaptive systems that simulate brain dynamics in relation to pathology, therapeutic interventions, or external stimuli [4]. The integrative and adaptive nature of digital twins distinguishes them from conventional simulations; whereas simulations are typically isolated and designed for specific scenarios, digital twins are dynamic, continuously evolving models that reflect and respond to changes in the real-world system they represent [5].

The application of P4 medicine through digital twins is particularly suited to neurology due to the brain's complex network dynamics and the multifactorial nature of neurological diseases. Neurological conditions such as Postural Tachycardia Syndrome (POTS), Alzheimer's disease, brain tumors, and epilepsy exhibit complex pathophysiology that involves structural, functional, and network-level disturbances [5] [20] [4]. Digital twins offer a computational framework to model these interactions, providing a window into disease mechanisms and potential therapeutic avenues that align with the core principles of P4 medicine.

Digital Twin Technology: Enabling P4 Neurology

System Architecture and Core Components

The architecture of a neurological digital twin consists of several integrated subsystems that work in concert to create a comprehensive virtual representation. These components include: (1) Monitoring modules for continuous data acquisition from multiple sources; (2) Modeling engines that incorporate both mechanistic and artificial intelligence models of neural function; (3) Simulation platforms for running experiments and treatment scenarios; and (4) Patient-Physician interaction interfaces that facilitate communication between the patient, digital twin, and clinician [5].

This infrastructure enables the digital twin to serve as both a diagnostic and therapeutic tool. For instance, in managing Postural Tachycardia Syndrome (POTS), a digital twin can continuously monitor physiological signals such as heart rate, blood pressure, respiratory rate, and metabolic state. When the system detects inappropriate hyperventilation, a precursor to cerebral hypoperfusion and dizziness, it can prompt the patient to slow their breathing through subconscious vibration signals or auditory cues, or it can escalate the alert to the patient's physician for further investigation [5]. This closed-loop feedback system exemplifies the predictive and preventive dimensions of P4 medicine in clinical practice.
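
The monitoring side of such a closed loop can be sketched as a moving-average check on an end-tidal CO₂ stream. The 30 mmHg threshold, the five-sample window, and the sample values are illustrative assumptions rather than clinical recommendations or values from [5]; a deployed system would calibrate them per patient.

```python
from collections import deque


def hyperventilation_alerts(etco2_stream, threshold_mmhg=30.0, window=5):
    """Yield sample indices where the moving-average end-tidal CO2 is below threshold."""
    recent = deque(maxlen=window)
    for index, value in enumerate(etco2_stream):
        recent.append(value)
        # Only evaluate once a full window of samples is available.
        if len(recent) == window and sum(recent) / window < threshold_mmhg:
            yield index


# Hypothetical end-tidal CO2 samples in mmHg, one per second.
samples = [38, 37, 36, 34, 31, 29, 27, 26, 25, 33, 38, 39]
alerts = list(hyperventilation_alerts(samples))
print("alert at sample indices:", alerts)
```

Averaging over a window rather than reacting to single samples reduces false alerts from momentary sensor noise, at the cost of a short detection delay.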

Data Integration and Personalization

The construction and maintenance of a high-fidelity digital twin requires continuous collection of patient data from diverse sources. Wearable devices provide real-time monitoring of vital signs like heart rate, blood pressure, end-tidal CO₂, electroencephalogram patterns, and cerebral blood flow [5]. Electronic health records contribute historical data, including previous diagnoses, medical history, laboratory results, and medication records. Patient-reported outcomes capture self-reported data on symptoms, activity levels, sleep patterns, stress levels, and medication adherence. Additionally, environmental factors such as weather conditions that might influence neurological symptoms are integrated into the model [5].

Advanced neuroimaging forms a crucial data source for neurological digital twins. Magnetic resonance imaging (MRI) data, composed of 3D volumetric pixels (voxels), provides structural information about the brain [4]. Functional MRI (fMRI) measures blood flow related to neural activity, enabling simulation of brain functions and detection of patterns associated with specific cognitive tasks or disorders. Diffusion MRI (dMRI) traces water molecule movements in brain tissue, offering insights into structural connectivity between brain regions [4]. These multimodal data streams are fused to create a personalized, mathematical, dynamic brain model based on established biological principles, enabling simulation of how brain regions interact and respond to various stimuli, diseases, or potential neurosurgical interventions [4].

Table 1: Data Sources for Neurological Digital Twins

| Data Category | Specific Data Types | Application in Digital Twins |
|---|---|---|
| Clinical Data | Electronic health records, medical history, laboratory results, medication records | Establishing baseline health status and historical trends |
| Physiological Monitoring | Heart rate, blood pressure, respiratory rate, end-tidal CO₂, cerebral blood flow | Real-time system status monitoring and anomaly detection |
| Neuroimaging | Structural MRI, functional MRI (fMRI), diffusion MRI (dMRI) | Mapping brain structure, function, and connectivity |
| Genetic & Molecular | Genome sequencing, proteomic data, biomarker profiles | Understanding individual susceptibility and molecular mechanisms |
| Patient-Reported Outcomes | Symptoms, activity levels, sleep patterns, medication adherence | Capturing subjective experience and quality of life metrics |
| Environmental Factors | Weather conditions, air quality, allergens | Contextualizing symptom triggers and exacerbating factors |

Application Notes: Implementing P4 Neurology

Predictive Applications: Modeling Disease Trajectories

The predictive power of P4 neurology leverages AI-driven digital twins to forecast individual patient disease progression and treatment responses. These models analyze vast amounts of research, clinical trials, and patient data to build evolving models that predict how a patient's condition might progress [5]. This capability empowers clinical teams to test hypotheses and develop new treatment plans more rapidly, potentially accelerating advances in both research and care [5].

In neuro-oncology, digital twins enable the modeling of tumor dynamics and their impact on brain function. By integrating neuroimaging data with genomic analyses and clinical outcomes, digital twins can simulate tumor effects on surrounding brain tissue and predict the efficacy of proposed treatments [4]. For example, platforms like The Virtual Brain (TVB) software integrate manifold data to construct personalized, mathematical, dynamic brain models based on established biological principles to simulate human-specific cognitive functions at the cellular and cortical level [4]. These simulations can elucidate topographical differences in neural activity responses, helping predict how brain tumors affect not only physical structures but also functional integrity.

Preventive Applications: Early Intervention Strategies

Prevention in P4 neurology operates through continuous monitoring and early detection of physiological deviations that precede clinical symptoms. Digital twins facilitate this by providing a platform for real-time physiological modeling and adaptive intervention simulations [5]. For instance, in managing autonomic disorders like POTS, digital twins can detect subtle warning signs, such as changes in heart rate or blood pressure variability, that precede acute episodes, thereby enabling preemptive interventions that reduce complications and hospitalizations and improve outcomes [5].

The preventive capacity of digital twins also extends to lifestyle interventions. Because digital twins are continuously updated with real-time patient data, they can provide immediate feedback on how lifestyle changes affect a patient's health [5]. If a patient with a neurological condition begins an exercise program, the digital twin could monitor vital signs like heart rate, blood pressure, and respiratory pattern in real time, allowing adjustments to the plan as needed [5]. This dynamic feedback loop represents a significant advancement over traditional static lifestyle recommendations.

Personalized Applications: Precision Therapeutics

Personalization in P4 neurology represents the move from population-based treatment protocols to individually tailored interventions. Digital twins enable this by simulating patient-specific responses to medications and other therapies. For example, POTS patients often respond differently to medications like midodrine (ProAmatine) or pyridostigmine due to their unique autonomic profiles [5]. A digital twin can guide personalized dosing, reducing trial-and-error and minimizing side effects [5].

In the drug development pipeline, digital twins create highly detailed and dynamic virtual models of patients that enable researchers to simulate and predict how new drugs interact with different biological systems [15]. This approach is particularly valuable for neurological conditions where blood-brain barrier penetration, target engagement, and neuropharmacokinetics present unique challenges. During clinical trial simulation, digital twins can generate virtual cohorts that resemble real-world populations to model various dosing regimens, treatment plans, and patient selection factors, thereby improving trial design and success probability [15].

Participatory Applications: Engaging Patients as Partners

The participatory dimension of P4 neurology acknowledges patients as active partners in their health management rather than passive recipients of care. Digital twins facilitate this participation through intuitive interfaces that allow patients to visualize their health status, understand the potential outcomes of different choices, and actively contribute to their care decisions [18]. Patient-driven social networks will demand access to data and better treatments, further reinforcing this participatory model [18].

Digital twin platforms can enhance patient engagement by providing educational simulations that help patients understand their conditions and treatment rationales. For example, a patient with epilepsy might interact with a digital twin that visualizes how their seizure activity propagates through brain networks and how a proposed medication or neurostimulation approach would modulate this activity. This demystification of neurological disease empowers patients to actively participate in therapeutic decisions and adhere to treatment plans.

Table 2: P4 Applications in Neurological Conditions

| Neurological Condition | Predictive Application | Preventive Application | Personalized Application | Participatory Application |
|---|---|---|---|---|
| Postural Tachycardia Syndrome (POTS) | Forecast cerebral hypoperfusion episodes from respiratory patterns [5] | Preemptive breathing alerts to prevent dizziness [5] | Tailored medication dosing based on autonomic profile [5] | Patient interaction with digital twin for lifestyle adjustments [5] |
| Alzheimer's Disease & Related Dementias | Model disease progression using multifactorial risk algorithms [20] | Early detection through biomarker monitoring and lifestyle intervention [21] [20] | Customized drug combinations targeting multiple pathways [20] | Brain Care Score tools for patient-driven risk reduction [21] |
| Brain Tumors | Simulate tumor growth and functional impact on brain networks [4] | Optimization of surgical approaches to preserve function [4] | Individualized resection plans based on functional mapping [4] | Visualized surgical outcomes for informed consent [4] |
| Epilepsy | Identify seizure precursors from neurophysiological patterns | Adjust neuromodulation parameters preemptively | Tailored antiepileptic drug regimens | Patient-reported seizure diaries integrated with digital twin |

Experimental Protocols for Digital Twin Implementation

Protocol 1: Constructing a Base Digital Twin Framework

Objective: To create a foundational digital twin architecture for neurological applications that integrates multimodal data sources and supports basic simulation capabilities.

Materials and Equipment:

- High-performance computing infrastructure with GPU acceleration

- Data integration platform (e.g., Open Digital Twin Project, PyTwin, SimPy) [5]

- Secure data storage solution compliant with healthcare regulations

- Wearable sensors for physiological monitoring (heart rate, blood pressure, activity)

- Neuroimaging capabilities (MRI, fMRI, dMRI)

- Laboratory information system access for genomic and biomarker data

Methodology:

- Data Acquisition and Harmonization: Collect multimodal patient data including structural neuroimaging (T1-weighted MRI), functional connectivity (resting-state fMRI), white matter tractography (dMRI), genetic profiling (APOE status for dementia risk), clinical history, and baseline cognitive assessments. Implement data harmonization protocols to manage variability across different imaging platforms and ensure consistency [4].

- Model Initialization: Utilize established computational frameworks like The Virtual Brain (TVB) to construct an initial base model [4]. Parameterize the model using the collected patient data to create a personalized brain network model. This involves mapping individual connectivity matrices derived from dMRI data and adjusting neural mass model parameters to fit individual fMRI dynamics.

- Model Validation and Refinement: Implement a validation pipeline where the digital twin's predictions are compared against actual patient outcomes. Use discrepancy measures to iteratively refine model parameters through machine learning approaches. Employ techniques such as SHapley Additive exPlanations (SHAP) to enhance model transparency and interpretability [15].

- Interface Development: Create clinician and patient-facing interfaces that display the digital twin's status, simulations, and recommendations in an intuitive format. Ensure these interfaces integrate with existing electronic health record systems for seamless clinical workflow integration [5].
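The model initialization step, in which model parameters are adjusted until simulated dynamics match a patient's functional data, can be sketched in a few lines. This example does not use the TVB API. It substitutes a much simpler linear stochastic network model for a neural mass model, and the function names, the three-region connectivity matrix, the decay constant, and the candidate coupling values are all hypothetical. The "empirical" functional connectivity is generated synthetically so that the fit can be checked.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def model_fc(weights, coupling, decay=0.4, n_iter=500):
    """Correlation matrix of x[t+1] = A x[t] + noise, with A = decay*I + coupling*W."""
    n = len(weights)
    a = [[(decay if i == j else 0.0) + coupling * weights[i][j]
          for j in range(n)] for i in range(n)]
    a_t = [list(row) for row in zip(*a)]
    cov = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(n_iter):
        # Stationary covariance by fixed-point iteration: cov = A cov A^T + I.
        cov = matmul(matmul(a, cov), a_t)
        for i in range(n):
            cov[i][i] += 1.0
    return [[cov[i][j] / math.sqrt(cov[i][i] * cov[j][j])
             for j in range(n)] for i in range(n)]


def fc_distance(fc_a, fc_b):
    """Sum of squared differences over the upper triangle of two FC matrices."""
    n = len(fc_a)
    return sum((fc_a[i][j] - fc_b[i][j]) ** 2
               for i in range(n) for j in range(i + 1, n))


def fit_coupling(weights, empirical_fc, candidates):
    """Grid search for the coupling whose simulated FC is closest to the target."""
    return min(candidates,
               key=lambda g: fc_distance(model_fc(weights, g), empirical_fc))


# Hypothetical structural connectivity for three regions (symmetric, zero diagonal).
weights = [
    [0.0, 0.6, 0.2],
    [0.6, 0.0, 0.4],
    [0.2, 0.4, 0.0],
]
# Synthetic stand-in for a patient's empirical FC, generated with a known coupling.
empirical_fc = model_fc(weights, 0.3)
best_coupling = fit_coupling(weights, empirical_fc, [0.0, 0.1, 0.2, 0.3, 0.4, 0.5])
```

Because the target here is produced by the same model, the grid search recovers the generating coupling exactly. With real fMRI data the match would be approximate, and the fit would typically involve more parameters and a more careful optimizer than a grid search.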

Quality Control Considerations:

- Implement rigorous data quality checks at each acquisition stage

- Establish protocols for handling missing or noisy data

- Conduct regular model performance assessments against holdout datasets

- Maintain audit trails of all model modifications and updates

Protocol 2: Clinical Trial Enhancement with Digital Twins

Objective: To employ digital twins as virtual control arms in neurological clinical trials, reducing recruitment needs while maintaining statistical power.

Materials and Equipment:

- Historical clinical trial data for model training

- AI-based digital twin platform capable of generating synthetic control arms

- Randomization and trial management system

- Statistical analysis software adapted for digital twin integration

Methodology:

- Digital Twin Development: Create digital twins for trial participants by training models on comprehensive historical control datasets derived from previous clinical trials, disease registries, and real-world evidence studies [15]. For each real participant enrolled in the experimental arm, generate a matched digital twin that simulates their expected disease progression under standard care.

- Trial Design Implementation: Implement an adaptive trial design where the digital twins serve as a virtual control cohort. In this approach, each real participant receiving the experimental treatment is paired with a digital twin whose progression is projected under standard care, offering comparator data without exposing additional patients to a placebo [15].

- Treatment Effect Estimation: Calculate treatment effects by comparing outcomes between the experimentally-treated patients and their digitally-modeled counterparts under control conditions. Employ Bayesian statistical methods that incorporate the uncertainty in digital twin predictions into the treatment effect estimates [22].

- Validation and Calibration: Continuously validate digital twin predictions against any actual control patients enrolled in the trial (if applicable). Calibrate models based on any discrepancies to improve accuracy throughout the trial period.
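One simple way to carry twin prediction uncertainty into a treatment effect estimate is a conjugate normal model, sketched below. This is an illustrative assumption rather than the method used in [22]: each participant's observed outcome minus the twin's predicted outcome is treated as a noisy measurement of a shared effect, with variance equal to the outcome noise variance plus that twin's predictive variance. The function name, the prior, the assumption of a known outcome standard deviation, and all numbers are hypothetical.

```python
import math


def posterior_treatment_effect(observed, twin_mean, twin_sd, outcome_sd,
                               prior_mean=0.0, prior_sd=10.0):
    """Posterior mean and SD of a shared treatment effect under a normal prior."""
    precision = 1.0 / prior_sd ** 2
    weighted_sum = prior_mean / prior_sd ** 2
    for y, m, s in zip(observed, twin_mean, twin_sd):
        # Less certain twin predictions contribute less to the estimate.
        variance = outcome_sd ** 2 + s ** 2
        precision += 1.0 / variance
        weighted_sum += (y - m) / variance
    return weighted_sum / precision, math.sqrt(1.0 / precision)


# Hypothetical outcomes for four treated participants and their twins' predictions
# of the same outcome under standard care.
observed = [11.0, 14.0, 12.5, 13.0]
twin_mean = [10.0, 11.0, 10.5, 11.0]
twin_sd = [0.8, 0.8, 0.8, 0.8]

effect_mean, effect_sd = posterior_treatment_effect(
    observed, twin_mean, twin_sd, outcome_sd=0.6)

# Doubling the twins' predictive uncertainty widens the posterior.
_, effect_sd_wide = posterior_treatment_effect(
    observed, twin_mean, [1.6] * 4, outcome_sd=0.6)
```

The effect is defined here as observed minus predicted, so whether a positive value indicates benefit depends on the direction of the outcome scale. A full analysis would also need to account for systematic bias in the twin predictions, which this sketch does not model.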

Statistical Considerations:

- Conduct power analyses that account for the precision added by digital twins

- Implement bias detection algorithms to identify systematic prediction errors

- Pre-specify statistical analysis plans that define how digital twin data will be incorporated

- Conduct sensitivity analyses to assess robustness of findings to model assumptions
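The first consideration, a power analysis that accounts for the precision added by digital twins, can be illustrated with a standard normal-approximation formula. The sketch assumes a randomized two-arm comparison of means in which each participant's twin prediction is used as a prognostic covariate, so that the residual outcome variance shrinks by a factor of one minus the squared correlation between prediction and outcome. That design differs from the fully virtual control cohort described in the methodology, and the function name and input values are hypothetical.

```python
import math
from statistics import NormalDist


def per_arm_sample_size(effect, outcome_sd, alpha=0.05, power=0.80,
                        twin_correlation=0.0):
    """Approximate participants per arm for a two-sided, two-arm comparison of means."""
    z_alpha = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    z_power = NormalDist().inv_cdf(power)
    # Adjusting for the twin prediction leaves only the unexplained variance.
    residual_variance = outcome_sd ** 2 * (1.0 - twin_correlation ** 2)
    n = 2.0 * (z_alpha + z_power) ** 2 * residual_variance / effect ** 2
    return math.ceil(n)


# Hypothetical trial: detect a 0.5-point difference on an outcome with SD 1.0.
n_unadjusted = per_arm_sample_size(effect=0.5, outcome_sd=1.0)
n_adjusted = per_arm_sample_size(effect=0.5, outcome_sd=1.0, twin_correlation=0.6)
```

Under these assumptions the requirement falls from 63 to 41 participants per arm. The saving depends entirely on how well twin predictions correlate with actual outcomes, so the assumed correlation should come from validation data rather than from the model's training performance.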

Figure: Fundamental workflow for creating and utilizing a digital twin in neurological research and care.

Protocol 3: Personalized Therapeutic Optimization for Neurological Disorders

Objective: To utilize digital twins for simulating individual patient responses to neurological therapies and optimizing treatment parameters.

Materials and Equipment:

- Validated digital twin platform with therapeutic simulation capabilities

- Drug databases with pharmacokinetic and pharmacodynamic parameters

- Neuromodulation equipment interfaces (for DBS, TMS, etc.)

- High-fidelity physiological monitoring systems

Methodology:

- Baseline Characterization: Conduct comprehensive phenotyping of the patient's neurological status using quantitative assessments, including cognitive testing, motor evaluation, autonomic function tests, and quality of life measures. Integrate these data with the patient's digital twin.

- Intervention Simulation: Simulate the effects of potential therapeutic interventions on the digital twin. For pharmacological approaches, this involves modeling drug pharmacokinetics and pharmacodynamics based on the patient's metabolic profile and blood-brain barrier characteristics. For neuromodulation approaches, simulate the electrical field distributions and their effects on neural circuitry.

- Response Prediction: Use the digital twin to forecast acute and long-term responses to each potential intervention, including both therapeutic effects and adverse events. For example, in Parkinson's disease, simulate how different deep brain stimulation parameters would affect network dynamics across the basal ganglia-thalamocortical circuit.

- Optimization and Personalization: Employ optimization algorithms to identify intervention parameters that maximize predicted therapeutic benefit while minimizing adverse effects. This may include dose titration schedules for medications or parameter settings for neuromodulation devices.

- Closed-Loop Refinement: Implement a closed-loop system where the digital twin is continuously updated with real-world patient response data, allowing for dynamic refinement of therapeutic recommendations over time.
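The intervention simulation and optimization steps can be sketched for the pharmacological case with a one-compartment model of repeated bolus dosing at steady state. All of this is a simplified illustration: the function names, the patient parameters, and the concentration window are hypothetical, the window stands in for a full pharmacodynamic model, and blood-brain barrier transport is not represented.

```python
import math


def steady_state_peak_trough(dose_mg, volume_l, k_elim_per_h, interval_h):
    """Steady-state peak and trough concentration (mg/L) for repeated bolus doses."""
    decay = math.exp(-k_elim_per_h * interval_h)
    peak = (dose_mg / volume_l) / (1.0 - decay)
    return peak, peak * decay


def select_dose(candidate_doses_mg, volume_l, k_elim_per_h, interval_h,
                min_trough, max_peak):
    """Return the lowest candidate dose that stays inside the window, or None."""
    for dose in sorted(candidate_doses_mg):
        peak, trough = steady_state_peak_trough(
            dose, volume_l, k_elim_per_h, interval_h)
        if trough >= min_trough and peak <= max_peak:
            return dose
    return None


doses = [50, 100, 150, 200, 250]

# Hypothetical patient with typical clearance, dosed every 12 hours.
typical_dose = select_dose(doses, volume_l=50.0, k_elim_per_h=0.1,
                           interval_h=12.0, min_trough=1.0, max_peak=5.0)

# Hypothetical patient who eliminates the drug twice as fast.
fast_dose = select_dose(doses, volume_l=50.0, k_elim_per_h=0.2,
                        interval_h=12.0, min_trough=1.0, max_peak=5.0)
```

For the first patient the search returns 150 mg. For the second it returns `None`, because no candidate dose keeps both peak and trough inside the window at a 12-hour interval. In that situation a twin-guided workflow would need to explore other dosing intervals or formulations, not only the dose.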

Clinical Validation:

- Compare digital twin predictions with actual patient responses

- Conduct n-of-1 trials to validate personalized recommendations

- Assess clinical outcomes compared to standard care approaches

- Evaluate patient engagement and satisfaction with personalized recommendations

The Scientist's Toolkit: Essential Research Reagents and Solutions

Table 3: Essential Research Reagents and Computational Tools for Neurological Digital Twins

| Tool Category | Specific Examples | Function/Application | Key Characteristics |
|---|---|---|---|
| Computational Platforms | The Virtual Brain (TVB) [4] | Whole-brain simulation platform | Personalized brain network models, biologically realistic neural mass models |
| Computational Platforms | Open Digital Twin Project, PyTwin, SimPy [5] | General digital twin development | Python-based, open-source, modular architecture for physical systems |
| Data Acquisition Tools | Multimodal MRI (fMRI, dMRI) [4] | Brain structure and function mapping | Voxel-based data on brain structure, functional connectivity, and structural connectivity |
| Data Acquisition Tools | Wearable physiological monitors [5] | Continuous vital sign monitoring | Real-time heart rate, blood pressure, respiratory rate, activity levels |
| AI/Modeling Frameworks | Deep generative models [15] | Synthetic patient cohort generation | Replicate underlying structure of real-world populations for clinical trials |
| AI/Modeling Frameworks | SHapley Additive exPlanations (SHAP) [15] | Model interpretability and transparency | Explains output of complex machine learning models |
| Therapeutic Simulation | TumorTwin software [5] | Cancer care applications | Integrates medical imaging with mathematical modeling to tailor interventions |
| Therapeutic Simulation | Pharmacokinetic/pharmacodynamic models [15] | Drug response prediction | Simulates medication absorption, distribution, metabolism, and effect |

The integration of P4 medicine principles with digital twin technology represents a paradigm shift in neurology, transitioning the field from reactive disease management to proactive health optimization. This approach leverages predictive modeling to forecast disease trajectories, preventive strategies to intercept pathological processes, personalized interventions tailored to individual biology and circumstances, and participatory engagement that empowers patients as active partners in their care.