Decoding Silence: How Speech BCIs Are Restoring Communication for ALS Patients

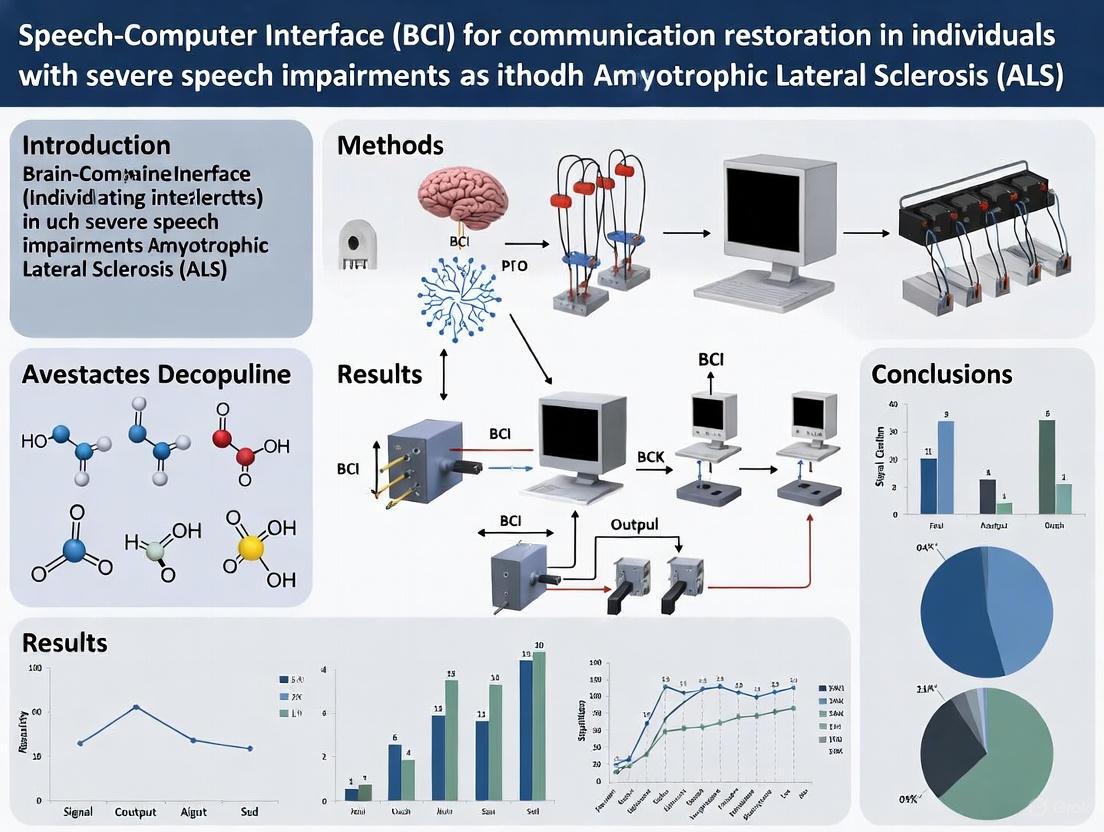

Abstract

This article synthesizes the latest advancements in speech-decoding Brain-Computer Interfaces (BCIs) for restoring communication in individuals with Amyotrophic Lateral Sclerosis (ALS). It explores the foundational neuroscience of inner and attempted speech, details the methodological progress in invasive and non-invasive signal acquisition and AI-driven decoding, and addresses critical challenges in system optimization and long-term use. Furthermore, it provides a comparative analysis of current technologies from leading clinical trials and neurotech companies, validating the transition of these systems from laboratory proof-of-concept to viable, long-term assistive communication devices. The findings highlight a rapidly evolving field where high-accuracy, real-time speech synthesis is becoming a clinical reality, offering profound implications for researchers and clinicians in neuroscience and biomedical engineering.

The Neuroscience of Lost Speech: From Motor Commands to Inner Monologue

The mapping of neural correlates for speech within the motor cortex represents a critical frontier in neuroscience, with profound implications for developing brain-computer interfaces (BCIs) to restore communication in patients with neurological disorders such as amyotrophic lateral sclerosis (ALS). ALS progressively damages both upper and lower motor neurons, leading to severe speech impairment and eventual loss of communication [1] [2]. Understanding the precise functional organization of speech-related cortical regions enables the development of targeted neuroprosthetics that can decode articulation commands directly from neural signals. This document provides detailed application notes and experimental protocols for mapping articulatory functions in the motor cortex, framed within the context of speech-decoding BCI research for ALS communication restoration.

Functional Neuroanatomy of Speech Motor Control

The human motor cortex contains specialized regions responsible for planning, executing, and monitoring articulatory movements. Key areas include the primary motor cortex (M1), ventral sensorimotor cortex, premotor cortex, and supplementary motor area, which collectively coordinate the complex musculature involved in speech production.

Probabilistic Functional Mapping

Recent meta-analyses of direct electrical stimulation (DES) studies have generated probabilistic maps of speech-related functions. DES creates transient, focal disruptions in cortical processing, allowing researchers to identify critical sites for specific articulatory processes [3]. The table below summarizes key speech-related regions identified through DES mapping:

Table 1: Cortical Sites Critical for Speech Production Identified via DES

| Cortical Region | Brodmann Area | Function Disrupted | Probability Score |
|---|---|---|---|
| Ventral Precentral Gyrus | BA4 | Speech arrest, phonation | 0.82 |
| Dorsal Precentral Gyrus | BA4 | Lip/tongue movement | 0.76 |
| Posterior Superior Temporal Gyrus | BA22 | Naming, auditory processing | 0.71 |
| Ventral Premotor Cortex | BA6 | Articulatory planning | 0.68 |
| Insular Cortex | BA13 | Speech perseveration | 0.45 |

Laminar Organization and Pathological Markers

In ALS patients, the motor cortex exhibits distinctive pathological changes that can serve as biomarkers for upper motor neuron involvement. The motor band sign (MBS) appears on susceptibility-weighted imaging (SWI) as a hypointense band along the primary motor cortex, reflecting iron accumulation within activated microglia [2]. Quantitative assessment using the motor band hypointensity ratio (MBHR) has demonstrated diagnostic value, with a cutoff of ≤54.6% distinguishing ALS patients from controls with 90.0% sensitivity and 100% specificity [2].
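To show how a single cutoff translates into the reported sensitivity and specificity, here is a minimal sketch that applies the rule "MBHR ≤ 54.6% means ALS" to a labeled cohort. The MBHR values are made-up illustrative numbers, not data from [2], and the function name `cutoff_performance` is hypothetical.

```python
def cutoff_performance(values, is_als, cutoff=54.6):
    """Sensitivity and specificity of the rule 'MBHR <= cutoff indicates ALS'.

    values: MBHR percentages; is_als: matching booleans (True = ALS patient).
    """
    tp = fn = tn = fp = 0
    for value, als in zip(values, is_als):
        positive = value <= cutoff
        if als and positive:
            tp += 1          # ALS correctly flagged
        elif als:
            fn += 1          # ALS missed
        elif positive:
            fp += 1          # control wrongly flagged
        else:
            tn += 1          # control correctly cleared
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return sensitivity, specificity


# Hypothetical cohort: 4 ALS patients followed by 3 controls (illustrative values only).
mbhr_values = [50.1, 52.3, 54.6, 58.0, 61.2, 63.5, 59.9]
labels = [True, True, True, True, False, False, False]
sens, spec = cutoff_performance(mbhr_values, labels)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

In this toy cohort one ALS case lies above the cutoff, so sensitivity drops to 0.75 while no control is flagged and specificity stays at 1.00; the same counting underlies the 90.0% and 100% figures reported in [2].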

Ultra-high-field 7T MRI has revealed a stratified "Oreo-fashion" layered pattern of the MBS in ALS patients, with signal intensity decreases in both superficial and deep cortical layers. This laminar-specific pattern likely reflects the cytoarchitectonic organization of M1, where ferritin-rich microglia predominate in middle and deep layers [2].

Quantitative Biomarkers of Cortical Dysfunction in ALS

Cortical Excitability Measures

Transcranial magnetic stimulation (TMS) provides non-invasive assessment of cortical excitability, with short-interval intracortical inhibition (SICI) emerging as the most sensitive parameter for detecting upper motor neuron dysfunction in ALS [1]. The table below summarizes key biomarkers and their diagnostic characteristics:

Table 2: Quantitative Biomarkers of Cortical Dysfunction in ALS

| Biomarker | Assessment Method | ALS Abnormality | Diagnostic Performance | Longitudinal Change |
|---|---|---|---|---|
| Averaged SICI | Threshold-tracking TMS | Reduction (<5.5%) | Sensitivity ~70%, Specificity ~70% [1] | Progressive decline over 12 months (p=0.004) [1] |
| MBHR | 7T SWI MRI | Decrease (≤54.6%) | Sensitivity 90.0%, Specificity 100% for definite ALS [2] | Correlates with disease progression rate (r=-0.51, p=0.0006) [2] |
| Motor Band Sign | Visual assessment on SWI | Presence of hypointense band | Prevalence: 90% definite ALS, 42.9% possible ALS [2] | Associated with faster progression (p=0.015) [2] |

SICI specifically reflects the integrity of GABAergic inhibitory interneurons in the motor cortex. In ALS, degeneration of parvalbumin-positive inhibitory interneurons and reduced GABA-A receptor expression contribute to cortical hyperexcitability, which can be quantified through SICI measurements [1]. Longitudinal studies demonstrate that SICI values progressively decline over time, with the proportion of patients exhibiting clinically abnormal SICI (<5.5%) increasing by 50% in the dominant hemisphere over 12 months [1].

Experimental Protocols for Motor Cortex Mapping

Protocol 1: Threshold-Tracking TMS for SICI Assessment

Application: Quantification of cortical excitability in ALS diagnostic workup and therapeutic monitoring.

Materials:

- Magstim TMS unit with 90-mm circular coil

- EMG recording equipment for abductor pollicis brevis (APB)

- Magxite software with Sydney protocol implementation [1]

Procedure:

- Subject Preparation: Position subject comfortably with APB muscle in relaxed state. Apply EMG electrodes in belly-tendon configuration.

- Resting Motor Threshold (RMT) Determination: Determine stimulus intensity required to produce motor evoked potential (MEP) of 0.2 mV peak-to-peak amplitude in 50% of trials.

- SICI Protocol: Set conditioning stimulus to 70% RMT. Apply paired pulses at interstimulus intervals (ISI) of 1, 1.5, 2, 2.5, 3, 3.5, 4, 5, and 7 ms.

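- Data Acquisition: For each ISI, record the test-stimulus intensity required to evoke the target MEP when the test pulse is preceded by the conditioning stimulus; SICI at that ISI is the percentage increase of this intensity over RMT.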

- Calculation: Compute averaged SICI as mean of SICI values across all ISIs (1-7 ms).

- Analysis: Compare averaged SICI to normative value (>5.5%). Values below threshold indicate cortical hyperexcitability [1].

Validation Notes: SICI has demonstrated sensitivity of ~70% for distinguishing ALS from mimic disorders. Longitudinal assessments should be performed at 3-6 month intervals to track disease progression [1].
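The calculation and analysis steps above can be sketched in a few lines. This assumes the common threshold-tracking convention in which SICI at each ISI is the percentage increase of the conditioned test-stimulus threshold over RMT, with both intensities expressed in % of maximum stimulator output; the function names and example thresholds are hypothetical.

```python
from statistics import mean

# Interstimulus intervals (ms) listed in the protocol above.
ISIS_MS = [1, 1.5, 2, 2.5, 3, 3.5, 4, 5, 7]
NORMATIVE_CUTOFF = 5.5  # averaged SICI below this value (%) suggests hyperexcitability


def sici_percent(conditioned_threshold, rmt):
    """SICI at one ISI: % increase in test-stimulus intensity needed to reach
    the target MEP when preceded by the conditioning stimulus."""
    return (conditioned_threshold - rmt) * 100.0 / rmt


def averaged_sici(conditioned_thresholds, rmt):
    """Mean SICI across all ISIs (1-7 ms); expects one threshold per ISI."""
    if len(conditioned_thresholds) != len(ISIS_MS):
        raise ValueError("expected one conditioned threshold per ISI")
    return mean(sici_percent(t, rmt) for t in conditioned_thresholds)


rmt = 50.0  # hypothetical resting motor threshold (% stimulator output)
thresholds = [52, 53, 54, 54, 53, 53, 52, 52, 51]  # illustrative, one per ISI
avg = averaged_sici(thresholds, rmt)
print(f"averaged SICI = {avg:.2f}%, abnormal = {avg < NORMATIVE_CUTOFF}")
```

With these illustrative thresholds the averaged SICI is about 5.33%, just below the 5.5% normative cutoff, so the sketch would flag the recording as consistent with cortical hyperexcitability.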

Protocol 2: 7T SWI for Motor Band Sign Quantification

Application: Detection of upper motor neuron degeneration in ALS via iron-sensitive imaging.

Materials:

- 7T MRI scanner with SWI sequence

- Image processing software (e.g., SPM, FSL)

- Custom MATLAB scripts for MBHR calculation

Procedure:

- Subject Positioning: Position patient in head coil with immobilization to minimize motion.

- Sequence Acquisition: Acquire high-resolution SWI sequences with parameters: TR/TE = 35/20 ms, flip angle = 15°, slice thickness = 1.5 mm, matrix size = 384×384.

- ROI Definition: Manually delineate primary motor cortex (M1) on consecutive axial slices.

- Reference Selection: Identify adjacent subcortical white matter region as reference standard.

- Signal Intensity Measurement: Calculate mean signal intensity within M1 and reference region.

- MBHR Calculation: Compute MBHR as (mean signal intensity M1 / mean signal intensity reference) × 100%.

- Interpretation: MBHR ≤54.6% indicates positive MBS, suggestive of UMN involvement in ALS [2].

Validation Notes: 7T SWI demonstrates superior MBS detection rates compared to 3T SWI (7/8 vs. 4/8 patients). The protocol shows high interobserver consistency and correlates with clinical measures of UMN dysfunction [2].
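The MBHR arithmetic in the procedure can be sketched as follows, assuming the voxel intensities inside the M1 ROI and the white-matter reference ROI have already been extracted (for example, with SPM or FSL) into plain lists. The original protocol uses custom MATLAB scripts whose details are not given; the Python function names here are hypothetical.

```python
from statistics import mean

MBHR_CUTOFF = 54.6  # % ; at or below this value the motor band sign is considered positive


def mbhr(m1_intensities, reference_intensities):
    """Motor band hypointensity ratio: mean M1 signal / mean reference signal x 100%."""
    reference = mean(reference_intensities)
    if reference <= 0:
        raise ValueError("reference signal intensity must be positive")
    return mean(m1_intensities) / reference * 100.0


def motor_band_sign_positive(ratio):
    """Apply the published cutoff: MBHR <= 54.6% suggests UMN involvement."""
    return ratio <= MBHR_CUTOFF


ratio = mbhr([210, 190, 200], [400, 380, 420])  # illustrative intensities
print(ratio, motor_band_sign_positive(ratio))
```

Because the ratio is normalized to the adjacent white matter, it is less sensitive to global intensity differences between scans than the raw M1 signal would be.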

Diagram 1: SICI Assessment Protocol

BCI Applications for Speech Restoration

Electrocorticographic Speech Synthesis

Recent advances in chronically implanted BCIs have demonstrated the feasibility of synthesizing intelligible speech directly from cortical signals in individuals with ALS. The following protocol outlines the methodology for online speech synthesis using electrocorticography (ECoG):

Materials:

- 64-channel ECoG grids (4 mm center-to-center spacing) implanted over speech motor cortex

- NeuroPort recording system (Blackrock Neurotech)

- Real-time signal processing pipeline with recurrent neural networks

- LPCNet vocoder for speech synthesis [4]

Procedure:

- Surgical Planning: Implant two ECoG arrays covering ventral sensorimotor cortex and dorsal laryngeal areas using anatomical landmarks and pre-operative fMRI.

- Signal Acquisition: Record ECoG signals at 1 kHz sampling rate with common average referencing.

- Feature Extraction: Compute high-gamma (70-170 Hz) power features using 50 ms windows with 10 ms frame shift.

- Voice Activity Detection: Implement neural voice activity detection (nVAD) using a recurrent neural network to identify speech segments.

- Acoustic Mapping: Transform high-gamma features to Bark-scale cepstral coefficients and pitch parameters using a bidirectional RNN.

- Speech Synthesis: Generate acoustic waveform using LPCNet vocoder with delayed auditory feedback [4].

Performance Metrics: This approach has achieved 80% intelligibility for synthesized words from a 6-word vocabulary, preserving the participant's original voice profile [4].
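The signal-acquisition and feature-extraction steps can be illustrated with a short NumPy sketch, assuming a (channels × samples) array recorded at 1 kHz. It applies common average referencing, then computes log power in the 70-170 Hz band for Hann-tapered 50 ms windows advanced in 10 ms steps. The FFT-based band power is an assumed stand-in; the pipeline in [4] may use a different filter design, and the function names are hypothetical.

```python
import numpy as np

FS = 1000          # sampling rate (Hz), as in the protocol
WIN = 50           # window length in samples (50 ms at 1 kHz)
HOP = 10           # frame shift in samples (10 ms at 1 kHz)
BAND = (70, 170)   # high-gamma band (Hz)


def common_average_reference(ecog):
    """Subtract the across-channel mean at each time point. ecog: (channels, samples)."""
    return ecog - ecog.mean(axis=0, keepdims=True)


def high_gamma_power(ecog):
    """Log high-gamma power per channel for sliding windows.

    Returns an array of shape (frames, channels).
    """
    x = common_average_reference(ecog)
    _, n_samples = x.shape
    freqs = np.fft.rfftfreq(WIN, d=1.0 / FS)
    in_band = (freqs >= BAND[0]) & (freqs <= BAND[1])
    taper = np.hanning(WIN)
    frames = []
    for start in range(0, n_samples - WIN + 1, HOP):
        segment = x[:, start:start + WIN] * taper            # taper every channel
        power = np.abs(np.fft.rfft(segment, axis=1)) ** 2    # per-channel power spectrum
        frames.append(np.log(power[:, in_band].mean(axis=1) + 1e-12))
    return np.array(frames)


rng = np.random.default_rng(0)
ecog = rng.standard_normal((64, 1000))  # 1 s of synthetic 64-channel data
features = high_gamma_power(ecog)
print(features.shape)
```

With 50 ms windows the frequency resolution is 20 Hz, so the band is sampled at 80, 100, 120, 140 and 160 Hz; one second of data yields 96 feature frames per channel.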

Inner Speech Decoding

Beyond attempted speech, recent research has successfully decoded inner speech (imagined speech without articulation) from motor cortex activity, offering a less physically demanding communication channel for severely paralyzed individuals.

Materials:

- Microelectrode arrays (Blackrock Neurotech) implanted in speech motor cortex

- Custom decoding software based on phoneme recognition algorithms

- Privacy protection modules for unintended thought detection

Procedure:

- Neural Recording: Implant microelectrode arrays in ventral precentral gyrus to record single-unit and multi-unit activity.

- Training Data Collection: Record neural activity during both attempted and imagined speech of predefined words and sentences.

- Decoder Training: Train machine learning algorithms to recognize repeatable patterns associated with phonemes, then stitch phonemes into sentences.

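- Privacy Safeguards: Implement one of two privacy protection strategies: train an attempted-speech decoder to ignore inner-speech activity, or keep an inner-speech decoder inactive until the user imagines a predefined unlock passphrase [5] [6].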

- Real-time Implementation: Deploy trained model in real-time BCI system with text or speech output.
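The decoder-training step says phonemes are recognized and then stitched into sentences, but does not specify how. One widely used way to turn per-time-bin phoneme predictions into a phoneme sequence is CTC-style greedy decoding: take the most probable symbol in each bin, merge consecutive repeats, and drop a special blank symbol. The sketch below assumes that scheme (the source does not confirm it was used), with a hypothetical blank symbol `_` and ARPAbet-like labels; mapping phonemes to words would additionally need a pronunciation lexicon and language model, which are not shown.

```python
BLANK = "_"  # hypothetical blank symbol that separates genuinely repeated phonemes


def argmax_labels(frame_probs, symbols):
    """Pick the most probable symbol in each time bin. frame_probs: list of lists."""
    return [symbols[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]


def greedy_collapse(frame_labels):
    """Merge consecutive repeated labels, then drop blanks."""
    phonemes = []
    previous = None
    for label in frame_labels:
        if label != previous and label != BLANK:
            phonemes.append(label)
        previous = label
    return phonemes


frames = ["HH", "HH", "_", "EH", "EH", "L", "_", "L", "OW", "OW"]
print(greedy_collapse(frames))
```

Here the blank between the two `L` frames is what preserves the double consonant, giving `HH EH L L OW`; without it the repeats would merge into a single `L`.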

Performance Metrics: Inner speech decoding achieves error rates of 14-33% for 50-word vocabulary and 26-54% for 125,000-word vocabulary. Participants with severe weakness prefer imagined speech over attempted speech due to lower physical effort [5].
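The error rates quoted above are word error rates: the word-level edit distance (substitutions, deletions, and insertions) between the decoded sentence and the intended sentence, divided by the number of intended words. A minimal, self-contained sketch (the function name is hypothetical) is:

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j]: edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(prev[j] + 1,         # deletion of a reference word
                          curr[j - 1] + 1,     # insertion of an extra word
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("i would like some water", "i would like sum water please"))
```

In the example, one substitution and one insertion against a five-word reference give a WER of 0.4 (40%). Because insertions count as errors, WER can exceed 100%.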

Diagram 2: ECoG Speech Synthesis Pipeline

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Motor Cortex Mapping and Speech BCI Research

| Research Tool | Specifications | Application | Key Features |
|---|---|---|---|
| TMS with Circular Coil | 90-mm circular coil, Magstim unit | Cortical excitability assessment | Compatible with Magxite software, Sydney protocol implementation [1] |
| High-Density ECoG Grids | 64 electrodes, 4 mm spacing, 2 mm diameter | Cortical surface (subdural) signal acquisition | Covers speech motor areas, compatible with NeuroPort system [7] [4] |
| Microelectrode Arrays | Blackrock Neurotech, <1mm size | Single-neuron recording for speech decoding | 256 electrodes, chronic implantation capability [8] |
| 7T MRI with SWI | Susceptibility-weighted imaging | Motor band sign detection | Identifies iron deposition in M1, quantitative MBHR measurement [2] |
| LPCNet Vocoder | Neural speech synthesis | Acoustic waveform generation | Real-time operation, preserves voice profile [4] |
| NeuroPort System | Blackrock Neurotech, 1 kHz sampling | Neural data acquisition | 64-channel recording, real-time processing capability [7] |

The precise mapping of neural correlates for speech in the motor cortex provides the foundation for developing increasingly sophisticated BCIs to restore communication in ALS patients. The protocols and applications detailed herein highlight the rapid advancement in both diagnostic biomarkers of cortical dysfunction and therapeutic approaches for speech restoration. Future directions include the development of fully implantable, wireless BCI systems; improved decoding algorithms capable of handling larger vocabularies with higher accuracy; and the integration of real-time excitability modulation with speech decoding. As these technologies mature, they promise to transform the communicative capacity of individuals with severe speech impairments, ultimately restoring natural and fluent communication.

Brain-computer interfaces (BCIs) for speech restoration represent a transformative technology for individuals with paralysis resulting from conditions such as amyotrophic lateral sclerosis (ALS) or brainstem stroke. These systems traditionally rely on decoding attempted speech—the weakened neural commands sent to speech muscles. Recently, however, research has explored decoding inner speech (also called inner monologue), which is the imagination of speech without any physical movement [5] [6] [9].

Understanding the distinct neural patterns underlying these two processes is crucial for developing next-generation neuroprostheses. This application note synthesizes recent findings, provides structured quantitative comparisons, and outlines detailed experimental protocols to guide researchers in decoding both speech paradigms for communication restoration.

Comparative Neural Signatures and Decoding Performance

Neural Activity Patterns

Research indicates that both attempted and inner speech evoke detectable neural activity in the motor cortex, but with key distinctions. A Stanford University study found that inner speech produces patterns that are "a similar, but smaller, version of the activity patterns evoked by attempted speech" [6] [9]. The neural signals for attempted speech are generally stronger and more robust, making them easier to decode with higher accuracy [5].

Table 1: Comparative Analysis of Attempted vs. Inner Speech Neural Patterns

| Feature | Attempted Speech | Inner Speech |
|---|---|---|
| Neural Signal Strength | Stronger signals | Smaller, similar patterns [6] [9] |
| Primary Brain Regions | Motor cortex [6] [9] | Motor cortex (with potential additional regions) [6] [9] |
| Physical Effort Required | Higher, can be fatiguing [6] [9] | Lower, less physically demanding [6] [9] |
| Involuntary Vocalizations | Possible in partial paralysis [6] [9] | None |
| User Preference | Can be taxing for severely paralyzed users | Preferred for comfort and lower effort [5] |

Quantitative Decoding Performance

Decoding performance varies significantly between speech paradigms and vocabulary sizes. Error rates for inner speech decoding, while higher than for attempted speech, demonstrate the feasibility of this approach.

Table 2: Inner Speech Decoding Performance Metrics (50-word vocabulary) [5]

| Performance Metric | Value Range |
|---|---|
| Word Error Rate | 14% - 33% |
| Decoding Capability | Demonstrated for words and sentences |

Table 3: Inner Speech Decoding Performance Metrics (Large vocabulary) [5]

| Performance Metric | Value Range |
|---|---|
| Word Error Rate | 26% - 54% |
| Vocabulary Size | 125,000 words |

For attempted speech with real-time voice synthesis, a UC Davis study found that listeners correctly understood almost 60% of synthesized words, compared with only 4% without the BCI [10]. The system achieved very low latency, converting neural signals into audible speech in approximately one-fortieth of a second (about 25 ms) [10].

Experimental Protocols for Neural Data Acquisition and Decoding

Participant Selection and Surgical Implantation

Protocol Objective: To establish a participant cohort and implement the necessary neural recording hardware.

- Participant Criteria: Recruit individuals with severe speech and motor impairments due to ALS, brainstem stroke, or other neurological conditions [5] [6]. All participants should be enrolled under an approved clinical trial protocol (e.g., BrainGate2) [10].

- Surgical Implantation: Under sterile conditions, implant multiple microelectrode arrays (each smaller than a baby aspirin) into the speech motor cortex [6] [9]. These arrays typically contain dozens to hundreds of microelectrodes to record single and multi-unit activity.

- Signal Connection: Connect implanted arrays to a percutaneous pedestal, which interfaces with external amplification and recording systems via cables [6].

Data Collection Paradigms

Protocol Objective: To elicit and record neural signals associated with both speech paradigms.

- Attempted Speech Tasks: Present participants with text prompts on a screen and instruct them to attempt to speak the words or sentences aloud, even if no sound is produced [10]. Record simultaneous neural data.

- Inner Speech Tasks: Present the same text prompts but instruct participants to imagine saying the words without any attempted movement [5] [6]. Monitor participants to confirm that imagined speech is not accompanied by subtle muscle activation.

- Control Tasks: Include non-verbal cognitive tasks (e.g., sequence recall, mental counting) to assess the specificity of decoding algorithms and potential for unintentional thought decoding [5].

Signal Processing and Decoding Workflow

The following diagram illustrates the comprehensive workflow from neural signal acquisition to speech output, highlighting the parallel processing paths for attempted and inner speech.

Privacy Protection Protocols

Protocol Objective: To prevent unintended decoding of private inner thoughts.

- Selective Attention Training: For attempted speech BCIs, train the decoder to distinguish and ignore inner speech signals, effectively silencing unintended output [5] [6].

- Password-Protected Decoding: For inner speech BCIs, implement a trigger mechanism where the system only becomes active after detecting a specific, rarely-used passphrase (e.g., "as above, so below") imagined by the user [5] [6] [9]. This system has demonstrated >98% recognition accuracy for the keyword [5].
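The password-protected strategy amounts to a gate on the decoder output: everything is suppressed until the unlock phrase is decoded. The sketch below is hypothetical (the class name, normalization rule, and sentence-level matching are assumptions, not the published system) and uses the example passphrase from the text.

```python
class KeywordGate:
    """Suppress decoded output until an imagined unlock phrase is detected.

    Hypothetical sketch: matching is exact after lower-casing and stripping punctuation.
    """

    def __init__(self, unlock_phrase="as above, so below"):
        self.unlock = self._normalize(unlock_phrase)
        self.active = False

    @staticmethod
    def _normalize(text):
        kept = "".join(c for c in text.lower() if c.isalnum() or c.isspace())
        return " ".join(kept.split())

    def process(self, decoded_sentence):
        """Return the sentence once unlocked; return None while locked."""
        if not self.active:
            if self._normalize(decoded_sentence) == self.unlock:
                self.active = True
            return None  # nothing is emitted while locked, including the passphrase
        return decoded_sentence


gate = KeywordGate()
for sentence in ["i am hungry", "As above, so below", "i am hungry"]:
    print(gate.process(sentence))
```

The first "i am hungry" is suppressed because the gate is still locked; only the sentence decoded after the passphrase is passed through. A real system would match the keyword against decoder probabilities rather than exact text.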

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Materials and Tools for Speech BCI Research

| Tool/Technology | Function/Purpose | Example Implementation |
|---|---|---|
| Microelectrode Arrays | Record neural activity from the motor cortex | Utah arrays or similar multi-electrode implants [5] [6] |
| Signal Processing Algorithms | Extract meaningful features from raw neural data | Machine learning models trained to recognize phoneme-level patterns [6] [9] |
| ERPCCA Toolbox | Decode event-related potentials for BCI applications | Open-source toolbox for MATLAB that implements Canonical Correlation Analysis [11] |
| Real-time Voice Synthesis | Convert decoded neural signals into audible speech | Algorithms that map neural activity to vocal tract parameters [10] |
| Privacy Protection Algorithms | Prevent unintended decoding of private thoughts | Keyword unlocking systems or selective attention training [5] [6] |

The distinct neural patterns of attempted and inner speech offer complementary pathways for restoring communication in severely paralyzed individuals. While attempted speech provides stronger, more decodable signals, inner speech represents a less fatiguing alternative that users may prefer.

Future research directions should focus on: (1) exploring brain regions beyond the motor cortex for higher-fidelity inner speech decoding; (2) developing fully implantable, wireless hardware systems; and (3) validating these technologies across larger and more diverse participant populations, including those with different etiologies of speech loss [5] [6] [9]. As these technologies mature, they hold the promise of restoring fluent, natural, and comfortable communication, a fundamental human capacity, to those who have lost it.

Application Notes: Speech Neuroprosthetics for ALS

Current Landscape of Speech BCIs

Brain-computer interfaces represent a revolutionary approach for restoring communication in patients with amyotrophic lateral sclerosis (ALS) by bypassing compromised neuromuscular pathways. These systems translate neural signals directly into speech output, offering a vital communication channel for individuals who have lost the ability to speak.

Table 1: Performance Metrics of Recent Speech BCI Technologies

| Technology Type | Research Institution | Vocabulary Size | Word Error Rate | Output Speed | Key Features |
|---|---|---|---|---|---|
| Inner Speech Decoding | Stanford University [5] [6] | 50 words; 125,000 words | 14-33% (50 words); 26-54% (125,000 words) | Not specified | Decodes attempted and inner speech; privacy protection features |
| Real-time Voice Synthesis | UC Davis [10] [12] | Continuous speech | Not reported as WER; listeners identified ~60% of words (~40% misidentified) | Real-time (~25 ms delay) | Instant voice synthesis; intonation control; singing capability |

Neural Signal Acquisition and Processing

Speech BCIs utilize microelectrode arrays implanted in speech-related regions of the motor cortex to record neural activity patterns [5] [6]. These signals are processed through machine learning algorithms that identify repeatable patterns associated with speech attempts or imagination. The UC Davis system employs advanced AI algorithms that align neural firing patterns with speech sounds the participant attempts to produce, enabling accurate voice reconstruction from neural signals alone [10] [12].

Signal Interpretation and Output Generation

The translation of neural signals to speech output occurs through two primary approaches: text-based systems that display transcribed speech and voice synthesis systems that generate audible speech. The real-time voice synthesis system developed at UC Davis creates a digital vocal tract with minimal latency (approximately 25ms), allowing patients to participate in natural conversations with the ability to interrupt and emphasize words [10]. This represents a significant advancement over previous text-based systems, which created disruptive delays in conversation flow.

Experimental Protocols

Protocol 1: Inner Speech Decoding for BCI

Objective: To decode and translate inner speech (imagined speech without physical movement) into communicative output using intracortical signals [5] [6].

Procedure:

- Participant Preparation: Implant microelectrode arrays (Blackrock Neurotech) in speech motor cortex regions. For patients with ALS, ensure proper informed consent procedures are followed given the progressive nature of their condition [5] [6].

- Neural Signal Acquisition: Record extracellular potentials from approximately 200 neurons at a 30 kHz sampling rate. Use hardware filters (0.3-7,500 Hz bandpass) to remove noise and artifacts [5].

- Task Paradigm Execution:

- Present visual or auditory cues of target words/sentences

- Instruct participants to either attempt speech or imagine speaking (inner speech)

- Utilize a 50-word core vocabulary with expansion to 125,000 words

- Collect data across multiple sessions (5-10 sessions recommended) [5]

- Signal Processing:

- Extract spike rates in 20 ms bins

- Perform common average referencing to reduce noise

- Apply principal component analysis for dimensionality reduction

- Machine Learning Decoding:

- Output Generation:

- Convert decoded phonemes to text or synthesized speech

- For inner speech, implement privacy safeguards (password protection) [6]

- Performance Validation:

- Calculate word error rates using standardized metrics

- Assess communication rate (words per minute)

- Compare attempted vs. inner speech decoding accuracy [5]
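The signal-processing steps of Protocol 1 (20 ms spike-rate bins followed by principal component analysis) can be sketched with NumPy, assuming spike times have already been detected per channel and are given in seconds. The function names, the SVD-based PCA, and the synthetic spike trains are illustrative assumptions rather than the study's code.

```python
import numpy as np

BIN_S = 0.020  # 20 ms bins


def bin_spike_rates(spike_times, n_channels, duration_s, bin_s=BIN_S):
    """Count spikes per channel in fixed bins and convert counts to rates (Hz).

    spike_times: list of per-channel arrays of spike times (s).
    Returns an array of shape (n_bins, n_channels).
    """
    n_bins = int(round(duration_s / bin_s))
    edges = np.arange(n_bins + 1) * bin_s
    counts = np.zeros((n_bins, n_channels))
    for ch, times in enumerate(spike_times):
        hist, _ = np.histogram(times, bins=edges)
        counts[:, ch] = hist
    return counts / bin_s


def pca_reduce(rates, n_components):
    """Project mean-centered rates onto the top principal components (via SVD)."""
    centered = rates - rates.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


rng = np.random.default_rng(0)
spikes = [np.sort(rng.uniform(0.0, 1.0, 30)) for _ in range(8)]  # synthetic spike trains
rates = bin_spike_rates(spikes, n_channels=8, duration_s=1.0)
print(rates.shape, pca_reduce(rates, 3).shape)
```

One second of data produces 50 bins; PCA then compresses the channel dimension so that the decoder sees a smaller set of population-activity components.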

Protocol 2: Real-time Voice Synthesis BCI

Objective: To instantaneously translate attempted speech neural signals into synthesized voice output with naturalistic intonation and timing [10] [12].

Procedure:

- Electrode Implantation: Surgically implant four microelectrode arrays in speech-related regions of the motor cortex using standard neurosurgical procedures [10] [12].

- Neural Data Collection:

- Record from hundreds of neurons simultaneously during attempted speech

- Present sentences on screen for participant to attempt speaking

- Synchronize neural data with known speech targets

- Collect a minimum of 20 hours of training data per participant [10]

- Algorithm Training:

- Align neural patterns with corresponding speech sounds

- Train deep learning models to map neural activity to vocal tract parameters

- Include prosodic features (pitch, emphasis) in training targets [10]

- Real-time Processing:

- Voice Synthesis:

- Use parametric speech synthesis (vocoder) approach

- Incorporate real-time pitch and timing modulation

- Allow participant control over speech cadence and intonation [10]

- Intelligibility Assessment:

Research Reagent Solutions

Table 2: Essential Materials for Speech BCI Research

| Category | Specific Product/Technology | Manufacturer/Developer | Primary Function |
|---|---|---|---|
| Neural Interfaces | Microelectrode Arrays | Blackrock Neurotech | High-density neural signal recording from cortex |
| | Stentrode | Synchron | Minimally invasive electrode delivery via blood vessels [13] |
| | Graphene-based Electrodes | InBrain Neuroelectronics | High-resolution neural interface with improved signal quality [13] |
| | Fleuron Material Arrays | Axoft | Ultrasoft implants for reduced tissue scarring [13] |
| Signal Processing | OpenNeuro Software Suite | Stanford University | Neural data preprocessing and feature extraction |
| | Real-time BCI Decoding Software | UC Davis Neuroprosthetics Lab | Neural signal to speech conversion [10] |
| | BCI HID Profile | Apple | Native input protocol for BCI devices [13] |
| Experimental Materials | Phoneme-balanced Word Lists | Modified IEEE Harvard Sentences | Standardized speech stimuli for training |
| | Audio Recording Equipment | Professional studio microphones | High-fidelity speech recording for training data |
| Surgical Components | StereoEEG Implantation Kit | Ad-Tech Medical | Standard equipment for intracranial electrode placement |

Emerging Technologies and Future Directions

The field of speech BCI is rapidly evolving with several promising technological developments. Flexible neural interfaces using novel materials like graphene and Fleuron polymer are showing potential for improved long-term signal stability and reduced tissue response [13] [14]. Companies including Synchron and Paradromics are advancing toward clinical trials of fully implantable, wireless BCI systems, with Paradromics expecting to launch its Connexus BCI study in late 2025 [13].

Integration with consumer technology platforms represents another significant advancement, with Apple's BCI Human Interface Device profile enabling native control of iPhones and iPads through neural interfaces [13]. This development could significantly accelerate the adoption and usability of speech BCIs in daily life for ALS patients.

Artificial intelligence continues to drive improvements in decoding algorithms, with modern systems employing sophisticated neural networks that can adapt to individual users' neural patterns and improve performance over time [10] [14]. These advances in both hardware and software components are paving the way for more natural, efficient, and accessible communication solutions for individuals with ALS.

Brain-computer interfaces (BCIs) for speech decoding represent a transformative technology for restoring communication in individuals with neurological conditions such as amyotrophic lateral sclerosis (ALS) [15] [16]. The core of any BCI system is its signal acquisition modality, which determines the quality, spatial resolution, and temporal resolution of the recorded neural data. Electrocorticography (ECoG), microelectrode arrays, and non-invasive electroencephalography (EEG) constitute the primary signal acquisition platforms, each offering distinct trade-offs between signal fidelity, invasiveness, and clinical applicability [17]. For speech neuroprosthetics research, selecting the appropriate acquisition foundation is paramount, as it directly impacts the ability to decode the complex, rapid neural patterns underlying speech production and perception. This application note provides a structured comparison of these modalities, detailed experimental protocols, and essential resource guidance to inform research and development in speech-decoding BCIs, with a specific focus on applications for ALS communication restoration.

Technical Comparison of Acquisition Modalities

The choice of signal acquisition technology involves balancing multiple engineering and clinical factors. The table below provides a quantitative comparison of these key parameters across the three primary modalities.

Table 1: Technical Comparison of Neural Signal Acquisition Modalities for Speech BCI

| Parameter | ECoG | Microelectrode Arrays | Non-Invasive EEG |
|---|---|---|---|
| Spatial Resolution | ~3 mm spatial spread [18] | Sub-millimeter to 400 µm pitch [19] | Centimetre-scale |
| Temporal Resolution | Millisecond | Millisecond | Millisecond |
| Signal-to-Noise Ratio | High | Very High | Low |
| Invasiveness | Invasive (subdural) | Invasive (penetrating or surface µECoG) | Non-invasive |
| Typical Electrode Count | 16-128 (clinical ECoG) | 256-1024+ [19] | 32-256 |
| Key Signals Recorded | Local field potentials, high-frequency activity [18] | Single/multi-unit activity, high-resolution LFP [19] [20] | Evoked potentials (P300), sensorimotor rhythms (SMR) [16] |
| Surgical Risk Profile | Medium (craniotomy required) | High (penetrating) to Medium (µECoG) [19] [20] | None |
| Long-Term Stability | High (>36 months) [21] | Variable; surface µECoG shows good stability [19] | Low (subject to daily variability) |
| Best Decoding Performance | Up to 97% word accuracy [22] | Under active investigation for speech [19] | Lower than invasive methods; requires extensive training [16] |

Experimental Protocols for Speech Decoding

ECoG-Based Speech Decoding Protocol

ECoG has demonstrated the most advanced performance in clinical speech decoding trials [22]. The following protocol outlines the key steps for acquiring and validating ECoG signals for a speech BCI.

Pre-Surgical Planning:

- Target Localization: Identify implantation targets based on the functional anatomy of speech. The left precentral gyrus (motor cortex) is a primary target for speech motor execution [22]. Other critical regions may include the superior temporal gyrus and inferior frontal regions.

- Electrode Selection: Standard clinical ECoG grid electrodes typically have a 2-3 mm diameter exposed disc contact [18]. For higher-density mapping, micro-electrocorticography (µECoG) arrays with 50-200 µm diameter electrodes and 300-400 µm pitch can be used [19].

Surgical Implantation:

- Procedure: Perform a craniotomy and durotomy to expose the cortical surface. Gently place the ECoG grid or strip electrodes subdurally onto the pial surface [18].

- Validation: Use intraoperative neurophysiological monitoring (e.g., somatosensory evoked potentials) to confirm grid placement relative to key anatomical landmarks.

Data Acquisition:

- Equipment: Use a high-resolution, clinically certified neural data acquisition system.

- Signals: Sample signals at 1000-3000 Hz. Apply a band-pass filter (e.g., 0.5-300 Hz) for local field potentials and a wider band for high-frequency activity [18].

- Reference: Choose a reference carefully (e.g., a quiet electrode outside the active region or a common average reference) to minimize noise.
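The referencing and filtering steps above can be sketched in Python with NumPy and SciPy. This is a minimal offline illustration under a few assumptions: the data arrive as a channels-by-samples array, the reference is a common average, and the filter is a 4th-order Butterworth. The function names and the filter order are illustrative choices and are not parameters taken from the cited studies.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def common_average_reference(ecog: np.ndarray) -> np.ndarray:
    """Subtract the across-channel mean at every sample (ecog: channels x samples)."""
    return ecog - ecog.mean(axis=0, keepdims=True)


def bandpass(ecog: np.ndarray, fs: float, low: float = 0.5, high: float = 300.0,
             order: int = 4) -> np.ndarray:
    """Zero-phase Butterworth band-pass along the time axis (illustrative order)."""
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, ecog, axis=-1)


if __name__ == "__main__":
    fs = 1000.0
    rng = np.random.default_rng(0)
    raw = rng.standard_normal((64, 5 * int(fs)))   # 64 channels, 5 s of synthetic data
    clean = bandpass(common_average_reference(raw), fs)
    print(clean.shape)  # (64, 5000)
```

`sosfiltfilt` runs the filter forward and then backward, so it introduces no phase distortion, which suits offline analysis. A closed-loop system cannot look ahead in time and would need a causal filter such as `scipy.signal.sosfilt` instead.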

Experimental Paradigm for Calibration:

- Prompted Speech: Present text or audio prompts (words, phonemes, or sentences) that the participant attempts to speak aloud. For individuals with severe dysarthria, the attempt to speak is sufficient [22].

- Data Collection: Record neural activity during hundreds to thousands of speech trials. The initial calibration may require only 30 minutes for a small vocabulary but expands to several hours for a >100,000-word vocabulary [22].

Signal Processing & Decoding:

- Feature Extraction: Common features include the amplitude of low-frequency signals and power in high-frequency bands (70-150 Hz) [22].

- Model Training: Train a recurrent neural network (RNN) or a similar sequence-to-sequence model to map the temporal evolution of ECoG features to the intended speech output (text or phonemes) [22].

- Real-Time Implementation: Implement the trained model in a real-time BCI system that provides continuous, closed-loop feedback to the user.
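The high-frequency feature step can be sketched as log band power in sliding windows. This sketch assumes a 70-150 Hz band, 50 ms windows advanced in 10 ms steps, and a 4th-order Butterworth filter. The window, step, and filter values are illustrative assumptions, and `high_gamma_features` is a hypothetical helper rather than the pipeline used in [22].

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def high_gamma_features(ecog, fs, band=(70.0, 150.0), win_s=0.050, step_s=0.010):
    """Log mean band power per sliding window; returns (n_windows, n_channels).

    ecog: channels x samples. Window and step sizes are illustrative assumptions.
    """
    sos = butter(4, band, btype="bandpass", fs=fs, output="sos")
    power = sosfiltfilt(sos, ecog, axis=-1) ** 2          # instantaneous band power
    win, step = int(round(win_s * fs)), int(round(step_s * fs))
    starts = range(0, power.shape[-1] - win + 1, step)
    feats = np.stack([power[:, s:s + win].mean(axis=-1) for s in starts])
    return np.log(feats + 1e-12)                          # offset avoids log(0)
```

With 1 s of data at 1000 Hz, this produces (1000 - 50) / 10 + 1 = 96 feature frames per channel. A sequence model such as the RNN described above would consume these frames in order.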

Microelectrode Array-Based Protocol for High-Density Mapping

High-density microelectrode arrays are used to investigate the fine-grained spatial organization of speech cortex [19]. The protocol below describes a minimally invasive approach using thin-film µECoG.

Array Design and Fabrication:

- Configuration: Use a modular, thin-film microelectrode array. A 1,024-channel array with 50 µm recording electrodes and a 400 µm inter-electrode pitch is a validated configuration [19].

- Materials: Fabricate arrays from flexible, biocompatible materials like polyimide or parylene-C to ensure conformability to the cortical surface.

Minimally Invasive Implantation:

- Cranial Micro-Slit Technique: Instead of a full craniotomy, use precision sagittal saw blades to create 500-900 µm-wide slits in the skull. The array is inserted through the slit tangentially to the cortical surface, which minimizes invasiveness [19].

- Image Guidance: Use fluoroscopic or computed tomography (CT) guidance for trajectory planning and array insertion, and monitor the insertion with neuroendoscopy.

- Placement: Insert the array subdurally and position it to cover regions of interest for speech processing. The entire procedure can be completed in under 20 minutes [19].

Multimodal Data Collection:

- Simultaneous Recording & Stimulation: The system should support both high-fidelity recording from all channels and focal cortical stimulation on designated channels for functional mapping [19].

- Task Design: Participants perform attempted speech and inner speech (imagined speaking) tasks [5]. The high spatial density allows for mapping the neural representation of phonemes and articulatory features.

Data Analysis for High-Density Data:

- Spatial Mapping: Analyze the data to characterize the spatial scales of sensorimotor and speech activity on the cortical surface. Decoding accuracy improves with both increased area coverage and spatial density [19].

- Decoding Models: Employ neural decoding models tailored to high-dimensional data to predict speech from the distributed pattern of neural activity.
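One simple way to characterize spatial scale is to measure how quickly the correlation between electrode pairs falls off with distance. If neighbors 400 µm apart are only weakly correlated, denser sampling adds independent information. The sketch below bins pairwise Pearson correlations by inter-electrode distance. It assumes a channels-by-samples array and electrode coordinates in micrometers, and it is an illustration rather than the analysis used in [19].

```python
import numpy as np


def correlation_vs_distance(signals, coords_um, bin_edges_um):
    """Mean pairwise Pearson correlation between channels, binned by distance.

    signals: (n_channels, n_samples); coords_um: (n_channels, 2) positions in µm.
    Returns one mean correlation per distance bin (NaN where a bin has no pairs).
    """
    corr = np.corrcoef(signals)                           # channels x channels
    diff = coords_um[:, None, :] - coords_um[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))              # pairwise distances in µm
    iu = np.triu_indices(len(coords_um), k=1)             # each pair once, no self-pairs
    d, r = dist[iu], corr[iu]
    which = np.digitize(d, bin_edges_um) - 1              # bin index for each pair
    return np.array([r[which == b].mean() if np.any(which == b) else np.nan
                     for b in range(len(bin_edges_um) - 1)])
```

For an 8 x 8 patch at 400 µm pitch (a hypothetical subset of a larger array), the coordinates could be built with `np.stack(np.meshgrid(np.arange(8), np.arange(8)), -1).reshape(-1, 2) * 400.0`.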

The Scientist's Toolkit: Research Reagents & Materials

Successful execution of the protocols above requires a suite of specialized materials and tools. The following table details the essential components of a speech BCI research toolkit.

Table 2: Essential Research Materials for Speech BCI Development

| Item Name | Specifications / Examples | Primary Function | Key Considerations |
|---|---|---|---|
| ECoG Grid Electrode | Platinum disc contacts, 2.3 mm diameter, center-to-center spacing of 10 mm [18] [22] | Recording local field potentials from the cortical surface. | Standard for clinical epilepsy monitoring and speech BCI trials. |
| Thin-Film µECoG Array | 50 µm Pt electrodes, 400 µm pitch, 1024 channels on flexible polyimide substrate [19] | High-density mapping of cortical surface potentials. | Enables minimally invasive implantation; high spatial resolution. |
| Hybrid Electrode Array | Custom array integrating both microelectrodes and ECoG electrodes on the same platform [18] | Simultaneous recording of MUA, LFP, and ECoG from the same cortical region. | Allows direct comparison of signal spreads across modalities. |
| Fully Implantable BCI System | Wireless implantable device (e.g., WIMAGINE) with cortical surface electrodes [15] [21] | Chronic, long-term neural recording for at-home BCI use. | Provides stability over years; essential for real-world adoption [21]. |
| High-Channel Count Amplifier | 256 to 1024+ channel headstage, low-noise design | Amplifying and digitizing weak neural signals from high-density arrays. | System must scale with electrode count and maintain signal fidelity. |
| Cranial Micro-Slit Kit | Precision sagittal saw blades (<900 µm width) [19] | Minimally invasive surgical insertion of µECoG arrays. | Reduces surgical risk and procedural time compared to craniotomy. |

System Workflow and Signal Pathways

The following diagram illustrates the logical flow and data pathway from signal acquisition to decoded speech in an implanted BCI system.

The foundation of a successful speech-restoring BCI is its signal acquisition strategy. ECoG currently offers the most compelling balance of high signal quality and clinical feasibility, having demonstrated transformative results in individuals with ALS [22]. Microelectrode arrays, particularly minimally invasive µECoG, provide unparalleled spatial resolution for fundamental research into the neural code of speech and are a critical technology for next-generation BCIs [19]. Non-invasive EEG, while safe and accessible, faces significant challenges in signal quality that currently limit its efficacy for decoding the rapid, complex patterns of attempted speech, especially for users in the completely locked-in state [16]. The choice of modality must align with the research or clinical objective, whether it is achieving immediate clinical impact with current technology or pushing the boundaries of decoding performance and minimally invasive implantation with advanced engineering.

From Brain Signals to Synthesized Voice: AI Algorithms and Real-World Implementation

Restoring communication for individuals with advanced Amyotrophic Lateral Sclerosis (ALS) represents one of the most pressing applications for brain-computer interfaces (BCIs). Successful speech decoding requires high-fidelity signal acquisition from neural tissues, a capability that depends critically on the engineering and materials science behind invasive implants. The primary challenge involves creating biocompatible interfaces that can record high-bandwidth neural data over chronic timescales while minimizing tissue trauma [23]. Three companies—Paradromics, Neuralink, and Precision Neuroscience—have developed distinct technological approaches to address these challenges, each with different implications for signal acquisition quality, surgical scalability, and long-term reliability in speech decoding applications.

Comparative Technical Specifications

Table 1: Technical Comparison of High-Fidelity Neural Implants

| Feature | Paradromics Connexus | Neuralink N1 | Precision Layer 7 |
|---|---|---|---|
| Form Factor | Dime-sized titanium module with 421 microwires [24] [25] | Quarter-sized chip with 1024 electrodes on 64 flexible polymer threads [24] [26] | Ultra-thin flexible film ("brain film") [26] |
| Electrode Count | 421 electrodes [27] [25] | 1024 electrodes [24] | Not specified (high-density surface array) |
| Signal Acquisition Target | Individual neuron firing [24] [28] | Individual neuron firing [24] | Cortical surface signals (ECoG) [26] |
| Insertion Mechanism | EpiPen-like inserter; all electrodes placed in <1 second [24] | Proprietary robotic surgeon [24] | Minimally invasive; slit in dura [26] |
| Key Materials | Platinum-iridium microwires; hermetically sealed titanium body [24] | Flexible polymer threads [24] | Flexible biocompatible polymer [26] |
| Data Rate (Reported) | 200+ bits per second (preclinical) [24] [25] | 4-10 bits per second (human trial) [24] | Not specified (surface recording) |
| Surgical Integration | Compatible with routine neurosurgery [24] | Requires specialized robotic surgery [24] | Fits between skull & brain [26] |
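The data rates above are reported in bits per second, but the sources do not always state how they were computed. One widely used definition is the Wolpaw information transfer rate. For N equally likely targets selected with accuracy P, it gives B = log2 N + P log2 P + (1 - P) log2((1 - P) / (N - 1)) bits per selection, and multiplying by the number of selections per second gives bits per second. The sketch below implements that formula. Whether the cited figures use this definition is an assumption that the sources do not confirm.

```python
import math


def wolpaw_bits_per_selection(n_classes: int, accuracy: float) -> float:
    """Wolpaw information transfer rate per selection, in bits.

    Assumes equiprobable targets and errors spread evenly over the other N - 1 classes.
    """
    n, p = n_classes, accuracy
    if n < 2 or not 0.0 <= p <= 1.0:
        raise ValueError("need n_classes >= 2 and 0 <= accuracy <= 1")
    bits = math.log2(n)
    if p > 0.0:
        bits += p * math.log2(p)                      # term vanishes as p -> 0
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n - 1))
    return bits


def bits_per_second(n_classes: int, accuracy: float, selections_per_s: float) -> float:
    """Scale the per-selection rate by how many selections are made each second."""
    return wolpaw_bits_per_selection(n_classes, accuracy) * selections_per_s
```

For example, a 50-word vocabulary decoded at 90% accuracy gives about 4.6 bits per selection, so one selection per second corresponds to about 4.6 bits per second. Accuracy at chance level (P = 1/N) gives 0 bits.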

Table 2: Performance Metrics for Speech Decoding Applications

| Metric | Paradromics Connexus | Neuralink N1 | Precision Layer 7 |
|---|---|---|---|
| Human Trial Status | First human implant (May 2025); FDA trial approved [27] [25] | Multiple human implants (5+ reported) [26] | FDA 510(k) cleared for up to 30 days [26] |
| Target Speech Performance | ~60 words per minute (planned) [25] | Basic cursor control demonstrated [24] | Not specifically reported |
| Longevity Evidence | >2.5 years in sheep models [28] | Limited long-term human data | 30-day authorized implantation [26] |
| Neural Signal Resolution | Single-neuron recording [24] [28] | Single-neuron recording [24] | Population-level activity [26] |

Experimental Protocols for Speech Decoding

Protocol: Acute Intraoperative Validation of BCI Signal Acquisition

Objective: To validate the functionality and signal acquisition quality of a BCI device during temporary implantation in a human patient undergoing related neurosurgery [27].

Materials: Sterile BCI device (Connexus array), EpiPen-like insertion tool, neural signal amplification system, sterile field equipment, institutional review board (IRB) approval, patient informed consent.

Procedure:

- Patient Selection & Consent: Identify a patient already scheduled for a relevant neurosurgical procedure (e.g., epilepsy resection) [27]. Obtain explicit informed consent for the temporary BCI implantation study.

- Device Preparation: Sterilize and initialize the BCI device and insertion instrument. Verify system functionality prior to implantation.

- Surgical Exposure: Utilize the standard clinical craniotomy procedure to access the brain region of interest.

- Device Implantation: Position the insertion tool perpendicular to the cortical surface. Deploy the electrode array using a rapid insertion mechanism (complete in <1 second for Connexus) [24].

- Signal Acquisition: Record neural activity for a predetermined period (e.g., 20 minutes) while the patient performs simple cognitive or motor tasks [27].

- Device Removal & Analysis: Carefully extract the device. Analyze signal-to-noise ratio, single-unit yield, and high-frequency content to validate performance.
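The signal-quality analysis in the last step can be illustrated for a single band-pass-filtered channel. The sketch assumes several common conventions, chosen for illustration rather than taken from [27]:

- a robust noise estimate, computed as the median absolute value divided by 0.6745;
- a detection threshold of -4.5 sigma;
- a 1 ms refractory period;
- an SNR defined as the peak-to-peak amplitude of the mean waveform divided by twice the noise sigma.

```python
import numpy as np


def detect_threshold_crossings(x, fs, k=4.5, refractory_s=0.001):
    """Indices where one band-pass-filtered channel first drops below -k * sigma.

    sigma is a robust noise estimate, median(|x|) / 0.6745, which spikes inflate
    less than the plain standard deviation. Returns (indices, sigma).
    """
    sigma = np.median(np.abs(x)) / 0.6745
    below = x < -k * sigma
    onsets = np.flatnonzero(below[1:] & ~below[:-1]) + 1  # first sample below threshold
    refractory = int(round(refractory_s * fs))
    kept, last = [], -refractory - 1
    for i in onsets:
        if i - last > refractory:          # ignore re-crossings within the refractory period
            kept.append(int(i))
            last = i
    return np.array(kept, dtype=int), sigma


def spike_snr(x, spike_idx, sigma, half_window=15):
    """Peak-to-peak amplitude of the mean spike waveform divided by 2 * sigma."""
    waves = [x[i - half_window:i + half_window] for i in spike_idx
             if i - half_window >= 0 and i + half_window <= len(x)]
    if not waves:
        return 0.0
    mean_wave = np.mean(waves, axis=0)
    return float((mean_wave.max() - mean_wave.min()) / (2.0 * sigma))
```

The number of channels with a usable SNR then gives one rough proxy for single-unit yield.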

Protocol: Chronic Speech Decoding for Communication Restoration

Objective: To decode attempted or imagined speech from chronically implanted BCI users for real-time communication [10] [6].

Materials: Implantable BCI system authorized for clinical investigation (e.g., under an FDA Investigational Device Exemption), recording hardware, computing system with decoding algorithms, personalized voice model (if available).

Procedure:

- Surgical Implantation: Perform the BCI implantation procedure according to the device-specific surgical protocol [24] [26].

- Post-operative Recovery: Allow appropriate healing time (weeks) before initiating recordings.

- Calibration Data Collection: Present words or phonemes on a screen. Instruct the participant to attempt to speak them aloud or imagine speaking them. Record simultaneous neural data [10] [6].

- Decoder Training: Use machine learning (e.g., recurrent neural networks) to map neural features to speech elements (phonemes or words) [10] [5].

- Real-Time Testing: Implement the trained decoder for real-time synthesis. Present novel sentences for the participant to attempt. Play the synthesized speech output through a speaker [10].

- Performance Quantification: Calculate intelligibility metrics (e.g., word error rate) and communication rate (words per minute) [10] [25].
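Word error rate (WER) is the word-level edit distance between the decoded sentence and the reference sentence, divided by the number of reference words. The edit distance counts substitutions, insertions, and deletions. Below is a minimal pure-Python sketch. Its text normalization (lowercasing and whitespace splitting only) is an assumption, and published studies may normalize punctuation differently. Passing phoneme lists to `edit_distance` gives the phoneme error rate in the same way.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(prev[j] + 1,             # deletion
                          curr[j - 1] + 1,         # insertion
                          prev[j - 1] + (r != h))  # substitution (0 if tokens match)
        prev = curr
    return prev[-1]


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Edit distance over words, normalized by the reference length."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref, hyp) / len(ref)


def words_per_minute(n_words: int, duration_s: float) -> float:
    """Communication rate from a word count and the elapsed time in seconds."""
    return 60.0 * n_words / duration_s
```

For example, decoding "i feel tire today" for the reference "I feel tired today" is one substitution in four words, a WER of 0.25.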

Protocol: Differentiating Attempted Speech from Inner Speech

Objective: To train a BCI decoder to distinguish between attempted speech (for output) and private inner speech (for privacy protection) [6] [5].

Materials: Implanted BCI with multi-electrode arrays, stimulus presentation software, decoder with gating capability.

Procedure:

- Task-Blocked Data Collection: Present cues for either "Attempted Speech" (try to speak aloud) or "Inner Speech" (imagine speaking without attempt). Use identical word sets for both conditions [6].

- Neural Feature Extraction: Analyze neural signals from motor cortex to identify differential activation patterns between conditions [5].

- Classifier Training: Train a binary classifier to distinguish between the two speech states based on neural population activity.

- Gating Implementation: Implement the classifier to only activate the speech decoder when "Attempted Speech" is detected [6] [5].

- Validation: Test the system with alternating tasks to quantify unintended "leakage" of inner speech.
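The gating and validation steps can be expressed as a few small functions. They assume the binary classifier already outputs, for each trial, a probability that the trial is attempted speech. The 0.5 threshold and the function names are illustrative. Raising the threshold lowers leakage but blocks more intended output, which is why the sketch reports both rates.

```python
import numpy as np


def gate_outputs(p_attempted, decoded, threshold=0.5):
    """Release decoded text only for trials classified as attempted speech; None otherwise."""
    return [text if p > threshold else None for p, text in zip(p_attempted, decoded)]


def leakage_rate(p_attempted, is_inner, threshold=0.5):
    """Fraction of inner-speech trials that would wrongly pass the gate."""
    p, inner = np.asarray(p_attempted, float), np.asarray(is_inner, bool)
    if not inner.any():
        raise ValueError("no inner-speech trials to evaluate")
    return float(np.mean(p[inner] > threshold))


def blocked_attempt_rate(p_attempted, is_inner, threshold=0.5):
    """Fraction of attempted-speech trials that the gate would wrongly block."""
    p, inner = np.asarray(p_attempted, float), np.asarray(is_inner, bool)
    if inner.all():
        raise ValueError("no attempted-speech trials to evaluate")
    return float(np.mean(p[~inner] <= threshold))
```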

The Scientist's Toolkit: Research Reagents & Materials

Table 3: Essential Research Materials for High-Fidelity Neural Interfaces

| Material/Component | Function in BCI Research | Representative Examples |
|---|---|---|
| Platinum-Iridium Microwires | Chronic neural recording electrodes; balance conductivity and biocompatibility [24] | Paradromics Connexus BCI [24] |
| Flexible Polymer Substrates | Conform to brain tissue; reduce mechanical mismatch [24] [23] | Neuralink's electrode threads [24] |
| Hermetically Sealed Titanium | Protects electronics from body fluids; enables chronic implantation [24] [23] | Paradromics device housing [24] |
| Microelectrode Arrays | Record extracellular action potentials and local field potentials [10] [6] | Utah arrays, Blackrock Neurotech [26] |
| Graphene-Based Electrodes | Ultra-thin, high-conductivity neural interfaces [23] [13] | InBrain Neuroelectronics platform [13] |
| Ultrasoft Implant Materials | Minimize foreign body response; improve chronic stability [23] [13] | Axoft Fleuron material [13] |

Signaling Pathways & Neural Processing Workflows

Discussion & Future Directions

The race to develop optimal invasive implants for speech decoding reveals significant engineering trade-offs. Paradromics emphasizes data bandwidth and surgical practicality with its high-electrode-count, microwire-based approach that leverages existing surgical workflows [24] [27]. Neuralink prioritizes electrode density and miniaturization but depends on complex robotic implantation that may limit scalability [24]. Precision Neuroscience offers a minimally invasive compromise with its surface-layer approach, though with potentially lower signal resolution for fine speech motor commands [26].

Future developments will likely focus on improved biocompatibility through novel nanomaterials like graphene and ultrasoft polymers to enhance chronic stability [23] [13]. Closed-loop systems that combine recording with stimulation capabilities represent another frontier, potentially enabling bidirectional communication [23]. As these technologies mature, the integration of privacy-preserving decoding algorithms that distinguish intended speech from private thoughts will become increasingly critical for ethical implementation [6] [5]. The convergence of materials science, neural engineering, and machine learning continues to drive rapid innovation in this transformative field, offering hope for restoring natural communication to those silenced by neurological disorders.

The restoration of communication for individuals with paralysis due to conditions like amyotrophic lateral sclerosis (ALS) represents one of the most urgent and transformative applications of brain-computer interface (BCI) technology. Recent advances at the intersection of neuroscience and artificial intelligence have catalyzed the development of sophisticated speech decoding pipelines. These systems translate neural signals associated with speech into intelligible text or synthetic voice output, offering a potential pathway to restore fluent, natural communication. This document details the application notes and experimental protocols for implementing a modern decoding pipeline, with a specific focus on leveraging deep learning and large language models (LLMs) for phoneme and word recognition. The content is framed within the broader context of a thesis dedicated to advancing speech-decoding BCIs for ALS communication restoration, providing researchers and scientists with the methodologies and tools necessary to replicate and build upon these groundbreaking techniques.

Technical Foundations

Neural Basis of Speech Decoding

The successful decoding of speech from neural signals is predicated on the alignment between artificial intelligence models and the brain's own processing of language. When processing natural language, artificial neural networks exhibit patterns of functional specialization similar to those of cortical language networks [29]. Research shows that representations in models, particularly Transformers and LLMs, account for a significant portion of the variance observed in the human brain [29]. This alignment is crucial for building effective brain decoding systems.

The brain's motor cortex contains regions that control the muscular movements that produce speech [6]. In both attempted speech (where a person tries to articulate words but may produce no sound) and inner speech (the imagination of speech in one's mind), the motor cortex generates repeatable patterns of neural activity [6] [5]. These patterns are similar across the two modes but are typically stronger for attempted speech. The weaker inner-speech signal is a decoding challenge that recent algorithms have begun to address [5].

Core Components of a Speech BCI Pipeline

A complete speech BCI pipeline consists of several integrated components:

- Signal Acquisition: The recording of neural activity from implanted microelectrode arrays or non-invasive sensors.

- Neural Feature Extraction: The processing of raw signals to extract relevant features like spike rates or local field potentials.

- Phoneme/Word Decoding: The core translation of neural features into linguistic units using deep learning models.

- Language Model Integration: The application of LLMs to constrain and refine the raw decoded output into coherent language.

- Output Synthesis: The conversion of the decoded text into synthetic speech or visual feedback.
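The five components above can be chained as a sequence of functions. The sketch below is a hypothetical skeleton, not a published architecture. Each stage is a user-supplied callable, and the data shapes noted in the comments are assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List

import numpy as np


@dataclass
class SpeechBCIPipeline:
    """Hypothetical skeleton chaining the five stages; each stage is a user-supplied callable."""
    acquire: Callable[[], np.ndarray]              # signal acquisition: channels x samples
    extract: Callable[[np.ndarray], np.ndarray]    # feature extraction: time bins x features
    decode: Callable[[np.ndarray], List[str]]      # phoneme/word decoding: candidate units
    refine: Callable[[List[str]], str]             # language-model integration: fluent text
    synthesize: Callable[[str], None]              # output synthesis: speech or on-screen text

    def step(self) -> str:
        """Run one pass through the pipeline and return the text that was output."""
        raw = self.acquire()
        features = self.extract(raw)
        units = self.decode(features)
        text = self.refine(units)
        self.synthesize(text)
        return text
```

Keeping each stage behind a plain callable lets a decoder or language model be swapped out without touching the acquisition or synthesis code.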

Quantitative Performance Benchmarks

State-of-the-Art Performance Metrics

Table 1: Key Performance Metrics for Invasive Speech BCIs (2024-2025)

| Study / Institution | Vocabulary Size | Word Error Rate (WER) | Decoding Modality | Key Innovation |
|---|---|---|---|---|
| Stanford University [6] [5] | 50 words | 14% - 33% | Inner Speech | High-accuracy inner speech decoding from motor cortex |
| Stanford University [6] [5] | 125,000 words | 26% - 54% | Inner Speech | Large-vocabulary inner speech decoding |
| UC Davis Health [10] | N/A (Real-time) | ~40% (Intelligibility) | Attempted Speech | Real-time voice synthesis with 25ms latency |
| Neuralink (Preclinical) [30] | 50 words | ~25% (Estimated) | Attempted Speech | High-channel-count implant & custom ASIC |

Table 2: Non-Invasive Speech Decoding Performance (PNPL Competition 2025) [31]

| Task | Modality | Dataset Scale | Key Metric | Reported Performance |
|---|---|---|---|---|
| Speech Detection | MEG | 50 hrs, 1 subject | Binary Classification Accuracy | Foundation for future benchmarks |
| Phoneme Classification | MEG | 1,523,920 examples, 39 classes | Phoneme Error Rate (PER) | Foundation for future benchmarks |

Analysis of Performance Data

The quantitative data reveal several critical trends. First, invasive approaches currently achieve the lowest reported word error rates (WER). Even so, every invasive result tabulated above still exceeds the 10% WER often cited as the threshold for widespread adoption of automatic speech recognition systems [31]. Second, there is a clear trade-off between vocabulary size and accuracy: smaller, closed vocabularies yield higher precision, whereas open-vocabulary decoding remains more challenging [29] [5]. Finally, the emergence of real-time voice synthesis, as demonstrated by UC Davis, marks a shift from text-based communication to more natural spoken output, with a latency of just 25 milliseconds enabling fluid conversation [10].

Experimental Protocols

Protocol 1: Implant-Based Phoneme Decoding for Inner Speech

Objective: To decode phonemes and words from inner speech using intracortical recordings from the speech motor cortex for individuals with ALS.

Background: Inner speech (imagined speech without movement) evokes clear, robust patterns of activity in the motor cortex, though these signals are typically smaller than those for attempted speech [6] [5]. This protocol outlines a real-time decoding approach.

Materials & Reagents:

- Microelectrode Arrays (e.g., 96-channel arrays, Blackrock Neurotech) [6] [30]: Surgically implanted in the speech motor cortex to record neural activity.

- Neural Signal Processor: A computer system for amplifying, filtering, and digitizing neural signals.

- Stimulus Presentation Software: To display visual or auditory cues to the participant.

- Calibration Dataset: A predefined set of words or phonemes for the participant to imagine speaking.

Procedure:

- Participant Preparation & Calibration:

- The participant is presented with a visual cue of a word or phoneme to imagine speaking.

- Simultaneously, neural activity is recorded from the implanted microelectrode arrays.

- This process is repeated for hundreds to thousands of trials to build a robust labeled dataset pairing neural signals with intended phonemes/words [30].

Feature Extraction:

- Extract neural features from the recorded signals. For invasive recordings, this typically involves:

- Spike Sorting: Isolating and counting action potentials from individual neurons.

- Local Field Potential (LFP) Analysis: Analyzing low-frequency signals from populations of neurons.

- These features are calculated in short, sequential time bins (e.g., 10-50 ms) to capture the temporal dynamics of speech [30].

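Spike counts in fixed time bins are one common input representation for the decoder. The sketch below builds a bins-by-channels count matrix with `np.histogram`. It assumes per-channel event times in seconds (from spike sorting or threshold crossings) and a 20 ms bin, one choice from the 10-50 ms range above. `bin_spike_counts` is a hypothetical helper.

```python
import numpy as np


def bin_spike_counts(spike_times_s, duration_s, bin_s=0.020):
    """Count events per channel in consecutive, non-overlapping bins.

    spike_times_s: sequence with one array of event times (in seconds) per channel.
    Returns an integer array of shape (n_bins, n_channels); events beyond the last
    full bin are dropped.
    """
    n_bins = int(np.floor(duration_s / bin_s + 1e-9))   # tolerance for float division
    edges = np.arange(n_bins + 1) * bin_s
    counts = np.zeros((n_bins, len(spike_times_s)), dtype=int)
    for ch, times in enumerate(spike_times_s):
        counts[:, ch], _ = np.histogram(times, bins=edges)
    return counts
```

The small tolerance guards against floating-point division returning slightly less than a whole number of bins. For example, 0.3 / 0.02 evaluates to 14.999999999999998 rather than 15.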

Model Training:

- Train a deep learning model (e.g., a convolutional or recurrent neural network) to map the extracted neural features to the target phonemes or words.

- The model is trained to output a probability distribution over the vocabulary for each time bin.
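The per-bin probability distributions still have to be turned into a discrete sequence. One common approach, assumed here because the protocol does not specify one, is to train with a connectionist temporal classification (CTC) loss that reserves one label for a "blank" symbol. The simplest decoder for such a model takes the most probable label in each bin, merges consecutive repeats, and drops blanks. The sketch below implements that greedy best-path decoder.

```python
import numpy as np


def ctc_greedy_decode(probs, labels, blank=0):
    """Best-path CTC decoding: argmax per time bin, merge repeats, drop blanks.

    probs: (n_bins, n_labels) per-bin probabilities; labels: symbol for each column.
    """
    best = np.argmax(probs, axis=1)
    out, prev = [], None
    for k in best:
        if k != prev and k != blank:   # a blank between repeats allows a doubled symbol
            out.append(labels[k])
        prev = k
    return out
```

In practice, beam search combined with a language model often replaces this greedy decoder.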

Real-Time Decoding & Evaluation:

- In a closed-loop setting, the trained model decodes neural activity in real-time as the participant engages in inner speech.

- The output is a sequence of words or phonemes, which can be stitched into sentences.

- Performance is quantitatively evaluated using Word Error Rate (WER) for a predefined vocabulary [5].

Troubleshooting:

- Low Decoding Accuracy: Retrain the model with more calibration data or adjust the model architecture (e.g., increase layers or units).

- Signal Quality Degradation: Check impedance of electrode contacts and ensure integrity of connections.

Protocol 2: Non-Invasive Phoneme Classification from MEG

Objective: To classify heard or perceived phonemes from non-invasive Magnetoencephalography (MEG) recordings as a foundational step towards a non-invasive speech BCI.

Background: MEG provides millisecond temporal resolution and superior spatial localization compared to EEG, making it suitable for tracking the rapid dynamics of speech processing [31]. This protocol is based on the 2025 PNPL Competition framework.

Materials & Reagents:

- MEG System (306-sensor whole-head system): To record magnetic fields generated by neural activity.

- Stimulus Delivery System: High-fidelity headphones for presenting auditory speech stimuli.

- LibriBrain Dataset [31]: A large-scale public dataset of MEG recordings from participants listening to audiobooks.

Procedure:

- Data Acquisition:

- Record MEG data from a participant listening to hours of narrated audiobooks (e.g., from LibriVox).

- Simultaneously record the audio stimulus to provide precise timing alignment.

Data Preprocessing:

- Apply minimal filtering to the MEG data to remove line noise and drift.

- Downsample the neural data to a manageable rate (e.g., 250 Hz) [31].

- Segment the continuous MEG data and audio stream into short epochs aligned to each phoneme instance.
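The preprocessing steps can be sketched with SciPy. The sketch assumes:

- a sensors-by-samples array at an integer sampling rate;
- a 50 Hz mains frequency (use 60 Hz where applicable) and a notch quality factor of 30;
- linear detrending to remove drift;
- epochs running from 100 ms before to 400 ms after each phoneme onset.

These values are illustrative and are not the LibriBrain reference pipeline [31].

```python
import numpy as np
from scipy.signal import detrend, filtfilt, iirnotch, resample_poly


def preprocess_meg(meg, fs, line_hz=50.0, target_fs=250, q=30.0):
    """Notch line noise, remove linear drift, and downsample; meg is (sensors, samples)."""
    b, a = iirnotch(line_hz, q, fs=fs)
    x = filtfilt(b, a, meg, axis=-1)                 # zero-phase notch at the mains frequency
    x = detrend(x, axis=-1, type="linear")           # remove slow linear drift per sensor
    return resample_poly(x, up=int(target_fs), down=int(fs), axis=-1)  # anti-aliased resampling


def epoch_at_onsets(x, fs, onsets_s, tmin_s=-0.1, tmax_s=0.4):
    """Cut windows around phoneme onsets; returns (n_epochs, sensors, samples).

    Onsets whose window would run past either end of the recording are skipped.
    """
    start, stop = int(round(tmin_s * fs)), int(round(tmax_s * fs))
    epochs = []
    for t in onsets_s:
        c = int(round(t * fs))
        if c + start >= 0 and c + stop <= x.shape[-1]:
            epochs.append(x[:, c + start:c + stop])
    if not epochs:
        return np.empty((0, x.shape[0], stop - start))
    return np.stack(epochs)
```

Each epoch is then paired with its phoneme label (one of the 39 classes) to form a training example.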

Model Training for Phoneme Classification:

- Train a model (e.g., a transformer or CNN) to take the neural data segment as input and predict the corresponding phoneme class from a set of 39 standard phonemes.

- The LibriBrain dataset provides over 1.5 million phoneme examples for training [31].

Evaluation:

- Evaluate model performance on a held-out test set using Phoneme Error Rate (PER) or classification accuracy.

- Compare results against the public leaderboard to benchmark performance.

Protocol 3: Privacy-Preserving Decoding with LLM Integration

Objective: To integrate a Large Language Model (LLM) to refine raw BCI outputs while implementing safeguards against the decoding of private inner thoughts.

Background: LLMs powerfully constrain and improve the fluency of decoded text. However, BCIs can potentially decode unintentional inner speech, raising privacy concerns [6] [5]. This protocol addresses both enhancement and safety.

Materials & Reagents:

- Trained Phoneme/Word Decoder: The output from Protocol 1 or a similar BCI decoder.

- Pre-trained Large Language Model (e.g., GPT-style model): For text generation and refinement.

- Privacy Protection Algorithm: A software-based mechanism for access control.

Procedure:

- Sequence Decoding:

- The initial BCI decoder produces a sequence of candidate words or phonemes from neural signals.

- This sequence may be fragmented or contain errors (e.g., "I | feel | tire | d").

LLM-Based Refinement:

- The raw sequence is fed into an LLM with a prompt to generate a coherent, grammatically correct version.

- The LLM leverages its internal statistical model of language to output a fluent sentence (e.g., "I feel tired.") [32] [33]. This step significantly improves intelligibility and semantic consistency.
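The refinement step above prompts an LLM to rewrite the sequence. A simpler, related technique, shown here instead, is n-best rescoring. The decoder proposes candidate sentences with neural log-probabilities, and a language-model score is added with a tunable weight. Because the output is always one of the decoder's own candidates, this scheme cannot introduce words the decoder never proposed. `lm_logprob` is a hypothetical callable that stands in for any language model.

```python
def rescore(candidates, lm_logprob, lm_weight=0.5):
    """Pick the candidate maximizing neural log-prob + lm_weight * LM log-prob.

    candidates: list of (sentence, neural_logprob) pairs from the BCI decoder.
    lm_logprob: hypothetical callable returning a language-model log-probability.
    """
    return max(candidates, key=lambda c: c[1] + lm_weight * lm_logprob(c[0]))[0]
```

The weight trades off fidelity to the neural evidence against fluency. Setting it to zero returns the decoder's top candidate unchanged.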

Privacy Safeguard Implementation (Select One):

- A) Modality-Based Filtering: Train the decoder to distinguish between neural patterns of attempted speech and inner speech. Configure the system to only decode and output the former, silencing unintended inner monologue [5].

- B) Password-Protected Decoding: Implement a system where the BCI only becomes active for decoding after detecting a specific, rare imagined passphrase (e.g., "as above, so below") from the user. This prevents accidental "leaking" of private thoughts [6] [5].
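Option B amounts to a small state machine. The sketch below assumes a separate detector that reports, for each decoding window, the probability that the passphrase was imagined. The 0.95 threshold and the class name are hypothetical. Output stays blocked until the detector fires, and the window that contains the passphrase is never released.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PassphraseGate:
    """Hypothetical gate that blocks decoded output until a passphrase is detected."""
    threshold: float = 0.95
    unlocked: bool = False

    def update(self, passphrase_prob: float, decoded_text: str) -> Optional[str]:
        if not self.unlocked:
            if passphrase_prob >= self.threshold:
                self.unlocked = True   # unlock, but do not output the passphrase window
            return None
        return decoded_text

    def lock(self) -> None:
        """Return to the private (blocked) state, e.g. at the end of a session."""
        self.unlocked = False
```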

Troubleshooting:

- LLM Introduces Hallucinations: Adjust the decoding parameters of the LLM (e.g., use lower temperature sampling) to make its output more conservative and faithful to the BCI's raw output [32].

- Privacy Lock Fails to Activate: Retrain the password/phrase detection model with more user data to improve its recall.
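Temperature rescales a model's logits before the softmax. Dividing by a value below 1 sharpens the distribution toward the most likely token, which makes sampling more conservative. The NumPy illustration below is independent of any particular LLM API.

```python
import numpy as np


def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities; temperature < 1 sharpens, > 1 flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    z = np.asarray(logits, dtype=float) / temperature
    z -= z.max()                      # subtract the max for numerical stability
    p = np.exp(z)
    return p / p.sum()
```

As the temperature approaches zero, sampling approaches greedy (argmax) decoding.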

Visualization of Workflows

Speech BCI Decoding Pipeline Architecture

Privacy Protection Mechanisms for Inner Speech

The Scientist's Toolkit

Table 3: Essential Research Reagents & Materials for Speech BCI Development

| Item | Function / Application | Example Specifications / Notes |
|---|---|---|
| Microelectrode Arrays [6] [30] | Invasive recording of neural spiking activity and local field potentials from within the cortex. | 96+ channels; flexible polyimide threads; platinum-tungsten or iridium oxide contacts. |
| MEG System [31] | Non-invasive recording of magnetic fields from neural activity with high temporal resolution. | 306-sensor whole-head system; requires magnetically shielded room. |
| LibriBrain Dataset [31] | Large-scale, public benchmark for training and evaluating non-invasive speech decoding models. | Over 50 hours of MEG data from a single subject; aligned to audiobook stimuli. |
| Deep Learning Models (CNNs/RNNs/Transformers) [29] [30] | Core model architectures for mapping neural features to phonemes or words. | Trained on paired neural data and speech labels; can be subject-specific. |
| Large Language Models (LLMs) [29] [32] [33] | Refines raw, errorful BCI output into fluent, coherent text; improves semantic consistency. | Used in decoding phase; can be integrated via APIs or locally hosted. |
| Robotic Surgical Implantation System [30] | Ensures precise, minimally invasive placement of electrode arrays in brain tissue. | Provides sub-100-micron accuracy; reduces tissue damage and inflammation. |
| Differentially Private Decoding Algorithms [34] | Protects user privacy by preventing memorization and leakage of sensitive training data. | Perturbation mechanisms applied at the decoding stage; provides theoretical privacy guarantees. |

Real-time speech synthesis via brain-computer interfaces (BCIs) represents a transformative frontier in neuroprosthetics, aiming to restore natural communication for individuals with severe speech impairments due to conditions such as amyotrophic lateral sclerosis (ALS), brainstem stroke, or locked-in syndrome. Traditional augmentative and alternative communication (AAC) devices often rely on slow, sequential selection processes, leading to delayed and effortful interactions that fall short of fluid conversation. The emerging paradigm of near-zero latency speech synthesis directly translates neural signals into audible speech, potentially revolutionizing assistive communication technologies. This application note details the experimental protocols, performance data, and technical architectures underpinning recent breakthroughs in instantaneous voice-synthesis neuroprostheses, framed within the broader research context of speech decoding BCIs for ALS communication restoration.

Performance Benchmarks and Quantitative Analysis

Recent clinical trials have demonstrated significant advances in the performance of real-time speech synthesis systems. The quantitative benchmarks below highlight the progression from text-based to voice-synthesized outputs.

Table 1: Comparative Performance Metrics of Recent Speech Neuroprostheses

| Study / System | Participant Condition | Output Modality | Latency | Speed (words/min) | Intelligibility/Accuracy |
|---|---|---|---|---|---|
| UC Davis Instantaneous Voice Synthesis [10] [35] | ALS with severe dysarthria | Real-time voice synthesis | ~10 ms | Not specified | 94.34% sentence identification (multiple choice); 34% phoneme error rate (open transcription) |
| UC Berkeley/UCSF Neuroprosthesis [36] | Brainstem stroke (anarthria) | Text & synthesized audio | 1.12 seconds | 47.5 | Word error rate of 23.8% from a 125,000-word vocabulary |
| ECoG-based Synthesis in ALS [4] | ALS with dysarthria | Synthesized words (closed vocabulary) | Not specified | Self-paced | 80% word recognition accuracy (6-keyword vocabulary) |
| Stanford Inner Speech BCI [5] | ALS/Stroke with impaired speech | Decoded inner speech | Real-time | Not specified | 14-33% error rate (50-word vocab); 26-54% error rate (125,000-word vocab) |

Table 2: Analysis of Expressive Speech Capabilities

| Feature | Experimental Measure | Performance Result | Study |
|---|---|---|---|
| Intonation Control | Question vs. statement differentiation | 90.5% accuracy | UC Davis [10] [35] |
| Word Emphasis | Stressing specific words in sentences | 95.7% accuracy | UC Davis [10] [35] |
| Vocal Identity | Voice similarity to pre-injury voice | Successfully matched using pre-injury recording | UC Berkeley/UCSF [36] |
| Emotional Expression | Singing simple melodies | 73% pitch identification accuracy | UC Davis [10] [35] |
| Spontaneous Communication | Ability to interrupt conversations | Enabled by near-zero latency system | UC Davis [10] [35] |

Experimental Protocols for Real-Time Speech Synthesis

Protocol 1: Implantable ECoG-Based Speech Synthesis for ALS

This protocol outlines the methodology for implementing a real-time speech synthesis BCI using electrocorticography (ECoG) in individuals with ALS, based on published research [4].

Objective: To enable a participant with ALS-induced dysarthria to produce intelligible synthesized words, in a self-paced manner and in a voice that preserves their vocal identity, using a chronically implanted BCI.

Materials:

- Two 64-electrode ECoG grids (4 mm center-to-center spacing)

- NeuroPort Neural Signal Processing System (Blackrock Neurotech)

- High-fidelity microphone for acoustic recording

- BCI2000 software for stimulus presentation and data alignment

- Custom signal processing and machine learning pipeline

Procedure:

- Surgical Implantation: Position two ECoG grids over speech-related cortical regions, including ventral sensorimotor cortex and dorsal laryngeal area, guided by pre-operative MRI and anatomical landmarks.

- Signal Acquisition: Record ECoG signals at a 1 kHz sampling rate with common average referencing. Simultaneously record audio at 48 kHz to align acoustic and neural training data.

- Feature Extraction: Extract high-gamma (70-170 Hz) power features using bandpass filtering (IIR Butterworth, 4th order) and compute logarithmic power with 50 ms windows at 10 ms intervals.

- Neural Voice Activity Detection (nVAD): Implement a unidirectional RNN to identify and buffer speech segments from continuous high-gamma activity, incorporating a 0.5 s context window for smooth transitions.

- Acoustic Feature Mapping: Use a bidirectional RNN to map buffered high-gamma features onto the 20 LPCNet acoustic features: 18 Bark-scale cepstral coefficients and 2 pitch parameters (pitch period and pitch correlation).

- Speech Synthesis: Convert these acoustic features into waveforms for audio playback using the LPCNet vocoder, which derives the linear prediction (LPC) coefficients internally from the cepstral features.

- System Validation: Conduct closed-loop sessions with the participant, providing delayed auditory feedback of synthesized words. Evaluate intelligibility through human listener tests with a closed vocabulary of 6 keywords.
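The acquisition and feature-extraction steps of Protocol 1 can be sketched as follows. This is a minimal offline illustration, assuming raw ECoG arrives as a NumPy array sampled at 1 kHz; the function name, the small log floor, and the use of SciPy are choices made here, not details of the published pipeline [4].

```python
import numpy as np
from scipy.signal import butter, sosfilt

FS = 1000  # ECoG sampling rate in Hz (from the protocol)
WIN = 50   # 50 ms analysis window = 50 samples at 1 kHz
HOP = 10   # 10 ms frame shift = 10 samples at 1 kHz


def high_gamma_features(ecog: np.ndarray) -> np.ndarray:
    """Log high-gamma power per channel.

    ecog: shape (n_samples, n_channels), with n_samples >= WIN.
    Returns shape (n_frames, n_channels), one row per 10 ms frame.
    """
    # Common average reference: subtract the instantaneous mean across channels.
    car = ecog - ecog.mean(axis=1, keepdims=True)
    # Butterworth band-pass, 70-170 Hz. N=4 is passed here; SciPy doubles the
    # order for band-pass designs, and the source does not say which convention it uses.
    sos = butter(4, [70, 170], btype="bandpass", fs=FS, output="sos")
    # sosfilt is causal (unlike sosfiltfilt), which a closed-loop system requires.
    hg = sosfilt(sos, car, axis=0)
    n_frames = 1 + (hg.shape[0] - WIN) // HOP
    power = np.stack([
        np.mean(hg[i * HOP:i * HOP + WIN] ** 2, axis=0)  # mean power in one window
        for i in range(n_frames)
    ])
    return np.log(power + 1e-12)  # small floor avoids log(0)
```

With one second of input, this yields 96 frames per channel: one per 10 ms shift for which a full 50 ms window is available.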

Key Considerations: This approach requires preserved but dysarthric speech for initial training data alignment. The system demonstrated stability over 5.5 months between training and testing [4].
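The nVAD step converts a frame-wise speech estimate into discrete segments that are buffered and passed to the bidirectional mapping model. The source does not describe the segmentation rule, so the sketch below assumes a fixed probability threshold on the nVAD output (one value per 10 ms frame) and interprets the 0.5 s context window as padding added on both sides of each segment. Overlapping padded segments are merged; the function name and threshold are hypothetical.

```python
import numpy as np

FRAME_MS = 10
CONTEXT_FRAMES = 500 // FRAME_MS  # 0.5 s of context = 50 frames


def speech_segments(p_speech: np.ndarray, threshold: float = 0.5,
                    context: int = CONTEXT_FRAMES) -> list[tuple[int, int]]:
    """Return (start, stop) frame indices (stop exclusive) of padded speech segments."""
    active = p_speech >= threshold
    raw, start = [], None
    for i, is_speech in enumerate(active):
        if is_speech and start is None:
            start = i                      # speech onset
        elif not is_speech and start is not None:
            raw.append((start, i))         # speech offset
            start = None
    if start is not None:                  # speech continues to the end of the buffer
        raw.append((start, len(active)))

    n = len(p_speech)
    merged: list[tuple[int, int]] = []
    for s, e in raw:
        s, e = max(0, s - context), min(n, e + context)  # add context on both sides
        if merged and s <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], e))
        else:
            merged.append((s, e))
    return merged
```

The returned indices can slice the buffered high-gamma feature matrix, for example `features[start:stop]`, before it is passed to the bidirectional model. Padding after a segment means synthesis waits until that context has been collected, which is consistent with the delayed feedback described in the validation step.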

Protocol 2: Instantaneous Voice Synthesis Using Microelectrode Arrays

This protocol describes the methodology for achieving near-zero latency speech synthesis using intracortical microelectrode arrays, enabling real-time conversational speech [10] [35].

Objective: To create a real-time voice synthesis neuroprosthesis that translates neural activity into synthesized speech with minimal latency, allowing for natural conversation patterns.

Materials:

- Four microelectrode arrays (256 total electrodes)

- Real-time neural signal processing hardware

- Transformer-based deep learning model for neural decoding

- Voice-cloning pipeline for personalized voice synthesis (e.g., built around a HiFi-GAN neural vocoder)

- Text-to-speech synthesis pipeline for target generation

Procedure:

- Array Implantation: Surgically implant four microelectrode arrays in the ventral precentral gyrus to capture detailed neural population activity.

- Neural Signal Processing: Extract neural features within 1 ms of signal acquisition, focusing on action potentials and local field potentials.

- Decoder Training: Collect training data by presenting text cues and recording corresponding neural activity as the participant attempts to speak. Generate synthetic target speech waveforms from text and time-align with neural signals.

- Real-Time Decoding: Implement a multilayer Transformer-based model to predict acoustic speech features from neural signals every 10 ms.

- Voice Personalization: Apply voice-cloning technology to pre-illness voice recordings, using models like HiFi-GAN to create a synthetic voice that approximates the participant's original voice.

- Latency Optimization: Design the complete processing pipeline, from signal acquisition to audio output, to operate within 10 ms, comparable to the delay with which speakers normally hear their own voice.

- Performance Assessment: Evaluate through transcript-matching tests, open transcription tasks, and quantification of prosodic control (question intonation, word emphasis, and melody production).
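A 10 ms end-to-end budget leaves essentially no room for lookahead, so each output frame should depend only on neural bins already received. The sketch below shows that causal streaming structure. `decode` is a hypothetical stand-in for the trained Transformer, and the 50-bin (500 ms) history and the 256-input, 20-output feature sizes are assumptions for illustration.

```python
from collections import deque
from typing import Callable, Iterable, Iterator

import numpy as np


def stream_decode(
    bins: Iterable[np.ndarray],
    decode: Callable[[np.ndarray], np.ndarray],
    history: int = 50,
) -> Iterator[np.ndarray]:
    """Yield one acoustic-feature frame per incoming 10 ms neural bin.

    `decode` receives a (history, n_features) window ending at the current bin,
    zero-padded at the start of a session, and returns features for that bin only.
    """
    buf: deque = deque(maxlen=history)  # oldest bins drop out automatically
    for x in bins:
        buf.append(x)
        window = np.zeros((history, x.shape[-1]))
        window[history - len(buf):] = np.stack(list(buf))  # most recent bin is last
        yield decode(window)


# Hypothetical toy decoder: average the window, then apply a fixed linear map.
rng = np.random.default_rng(0)
W = rng.standard_normal((256, 20))


def toy_decode(window: np.ndarray) -> np.ndarray:
    return window.mean(axis=0) @ W
```

In a deployed system, each call to `decode` would also need to be timed against the 10 ms budget.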

Key Considerations: This approach addresses the absence of ground-truth speech data in severely affected participants by using text-derived synthetic targets. The system also captures paralinguistic features such as pitch modulation and emphasis from precentral gyrus activity [35].
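The protocol time-aligns text-derived target speech with neural activity but does not state how. Dynamic time warping (DTW) is one standard technique for aligning two sequences of different lengths; the sketch below uses it purely as an illustration, not as the published method [35]. It assumes both sequences are already expressed in a comparable feature space (for example, the target's acoustic features and a provisional acoustic estimate decoded from the neural frames), and the function names are hypothetical.

```python
import numpy as np


def dtw_path(a: np.ndarray, b: np.ndarray) -> list[tuple[int, int]]:
    """Classic DTW between a (n, d) and b (m, d) using Euclidean frame distance."""
    n, m = len(a), len(b)
    cost = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            acc[i, j] = cost[i - 1, j - 1] + min(
                acc[i - 1, j - 1], acc[i - 1, j], acc[i, j - 1])
    # Backtrack from the end, always stepping to the cheapest predecessor.
    i, j, path = n, m, []
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        step = int(np.argmin([acc[i - 1, j - 1], acc[i - 1, j], acc[i, j - 1]]))
        if step == 0:
            i, j = i - 1, j - 1
        elif step == 1:
            i -= 1
        else:
            j -= 1
    return path[::-1]


def align_targets(neural_est: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Give every neural frame the mean of the target frames DTW maps onto it."""
    out = np.zeros((len(neural_est), target.shape[1]))
    counts = np.zeros(len(neural_est))
    for i, j in dtw_path(neural_est, target):
        out[i] += target[j]
        counts[i] += 1  # every neural frame appears at least once on a DTW path
    return out / counts[:, None]
```

The quadratic cost matrix is acceptable for sentence-length trials; longer recordings would call for a banded (Sakoe-Chiba) constraint.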

Protocol 3: EEG-Based Inner Speech Decoding with Privacy Protection

This protocol outlines methods for decoding inner speech from non-invasive EEG recordings while implementing safeguards against decoding private thoughts [5] [37].

Objective: To develop a non-invasive BCI that decodes inner speech in real-time while preventing the unintended decoding of private thoughts.

Materials:

- 64-channel EEG system with specialized electrodes for artifact detection

- Spatial filtering and independent component analysis (ICA) software

- Short-Time Fourier Transform (STFT) and Hilbert-Huang Transform processing tools

- Real-time phoneme classification pipeline

- FastPitch-based speech synthesis system

Procedure:

- EEG Setup: Position EEG electrodes over motor cortex, Broca's area, Wernicke's area, and auditory cortex. Include dedicated sensors for ocular and muscular artifact detection.

- Artifact Removal: Implement a multi-stage preprocessing pipeline with spatially constrained ICA to suppress artifacts while preserving phoneme-relevant oscillations.

- Feature Extraction: Combine STFT, Hilbert-Huang Transform, and Phase-Amplitude Coupling features, then reduce dimensionality.

- Phoneme-Level Decoding: Train self-supervised learning models to extract phoneme-discriminative embeddings from unlabeled EEG data, enabling open-vocabulary generalization.

- Imagined Speech Discrimination: Implement a module to differentiate word-related cognition from non-linguistic mental noise, improving decoding reliability.

- Privacy Protection Mechanisms:

  - Train decoders to distinguish attempted speech from inner speech and silence the latter.

  - Implement a keyword "unlocking" system that only decodes inner speech after detecting a specific intentional command.

- Edge Deployment: Optimize the pipeline for IoT-compatible edge architecture with low-latency processing.
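The two privacy mechanisms can be combined into a single output gate: attempted speech passes through, while inner speech is discarded unless the user has first produced the unlock phrase. The sketch below assumes an upstream classifier has already labelled each decoded utterance as `"attempted"` or `"inner"`; the class name and the example phrase are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PrivacyGate:
    unlock_phrase: str     # user-chosen command (hypothetical)
    unlocked: bool = False

    def process(self, decoded: str, mode: str) -> Optional[str]:
        """Return text to output, or None if the utterance must be suppressed.

        mode: "attempted" or "inner", from an assumed upstream speech-mode classifier.
        """
        if mode == "attempted":
            return decoded  # attempted speech is always intended for output
        if not self.unlocked:
            # Inner speech stays silent; only the unlock phrase changes state.
            if decoded.strip().lower() == self.unlock_phrase.lower():
                self.unlocked = True
            return None
        return decoded      # inner speech is output only after unlocking
```

Utterances decoded before unlocking are dropped rather than stored, so nothing private accumulates in a buffer.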

Key Considerations: This approach addresses the critical ethical concern of thought privacy while enabling communication. The phoneme-focused design supports open-vocabulary communication rather than being limited to a fixed word set [5] [37].
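Of the three feature families named in Protocol 3 (STFT, Hilbert-Huang Transform, and phase-amplitude coupling), the STFT component is the simplest to illustrate. The sketch below computes log band power per channel; the 256 Hz sampling rate, the 0.5 s window with 0.1 s hop, and the band edges are assumptions, not values from [5] [37].

```python
import numpy as np
from scipy.signal import stft

# Assumed frequency bands in Hz, [low, high)
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 45)}


def stft_band_power(eeg: np.ndarray, fs: float = 256.0,
                    win_s: float = 0.5, hop_s: float = 0.1) -> np.ndarray:
    """eeg: (n_channels, n_samples). Returns (n_frames, n_channels * n_bands) log power."""
    nperseg = int(win_s * fs)
    noverlap = nperseg - int(hop_s * fs)
    f, _, Z = stft(eeg, fs=fs, nperseg=nperseg, noverlap=noverlap, axis=-1)
    power = np.abs(Z) ** 2  # (n_channels, n_freqs, n_frames)
    per_band = [power[:, (f >= lo) & (f < hi), :].mean(axis=1)
                for lo, hi in BANDS.values()]
    stacked = np.stack(per_band, axis=1)  # (n_channels, n_bands, n_frames)
    # Flatten channel-major (channel 0's bands first), one row per time frame.
    return np.log(stacked + 1e-12).reshape(-1, stacked.shape[-1]).T
```

The resulting matrix is the kind of input the dimensionality-reduction step would then compress.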

System Architectures and Technical Implementation

Real-Time Speech Synthesis System Architecture

The following diagram illustrates the complete signal processing pipeline for real-time speech synthesis, from neural signal acquisition to audio output:

Neural Decoding and Voice Synthesis Dataflow

This diagram details the neural decoding process and how it integrates with voice synthesis components:

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 3: Key Research Reagents and Materials for Speech Neuroprosthetics

| Category | Item | Specifications | Research Application |
|---|---|---|---|
| Neural Recording | ECoG Grids | 64-253 electrodes, 2-4 mm spacing | Capture population-level neural activity from speech motor cortex [36] [4] |
| Neural Recording | Microelectrode Arrays | 256 channels, Utah arrays | Record single-neuron and multi-unit activity for fine-grained decoding [10] [35] |
| Signal Processing | Real-Time Processors | NeuroPort System (Blackrock) | Acquire and preprocess neural signals with minimal latency [7] [4] |
| Machine Learning | RNN Architectures | Unidirectional & bidirectional RNNs with GRU/LSTM | Neural voice activity detection and acoustic feature mapping [4] |
| Machine Learning | Transformer Models | Multi-layer attention networks | Neural-to-acoustic decoding with contextual understanding [35] |
| Speech Synthesis | Vocoders | LPCNet, HiFi-GAN | High-fidelity speech synthesis from acoustic parameters [4] [38] |
| Voice Banking | Voice Cloning Systems | HiFi-GAN with fine-tuning | Create personalized synthetic voices from limited speech samples [38] |
| Experimental Software | BCI2000 | Open-source platform | Stimulus presentation, data acquisition, and system coordination [7] [4] |

Real-time speech synthesis with near-zero latency represents a paradigm shift in communication restoration for individuals with severe speech impairments. The protocols and architectures detailed herein demonstrate the feasibility of translating neural signals directly into intelligible, expressive speech with latencies comparable to natural auditory feedback. Current systems have achieved remarkable milestones in personalized voice output, prosodic control, and real-time interaction capabilities.

Future research directions should focus on expanding vocabulary sizes, improving intelligibility in open-vocabulary settings, enhancing system adaptability to individual neurophysiological differences, and developing less invasive recording methods. Additionally, addressing the ethical implications of inner speech decoding and ensuring equitable access to these technologies remain critical considerations. As these neuroprosthetic systems evolve from laboratory demonstrations to clinical applications, they hold the potential to fundamentally restore the natural human experience of conversation for those who have lost the ability to speak.

Brain-computer interfaces (BCIs) represent a transformative technology for restoring communication in patients with severe neurological conditions such as amyotrophic lateral sclerosis (ALS). These systems translate brain signals into commands for external devices, bypassing damaged neural pathways. Two distinct approaches have emerged: minimally invasive endovascular systems that record signals from within blood vessels, and non-invasive systems that measure brain activity from the scalp. This article provides detailed application notes and experimental protocols for these approaches, with specific focus on speech decoding for ALS communication restoration.

Table: Comparison of BCI Approaches for Speech Decoding

| Feature | Endovascular (Stentrode) | Non-Invasive (EEG-Based) |
|---|---|---|
| Primary Paradigms | Motor intent decoding, attempted speech, inner speech | Motor imagery (MI), P300, SSVEP, hybrid paradigms |
| Invasiveness | Minimally invasive (implanted via the jugular vein) | Non-invasive (scalp electrodes) |
| Spatial Resolution | Moderate (recorded from the superior sagittal sinus) | Low (skull attenuates signals) |
| Temporal Resolution | High (direct neural signals) | High (millisecond-level) [39] |
| Key Hardware | Stentrode electrode array, subcutaneous telemetry unit | EEG cap, amplifiers, signal processing units |
| Primary Signal Type | Cortical neural signals (high-gamma activity) | EEG rhythms (mu, beta) and event-related potentials |
| Typical Applications | Real-time device control, speech decoding [6] [5] | Assistive technology, neurorehabilitation, basic communication [40] |
| Risk Profile | Surgical risks (thrombosis, infection), though lower than open-brain surgery [41] | No surgical risks; comfort and artifact issues |

Synchron's Stentrode Endovascular BCI

The Stentrode system (Synchron) utilizes a minimally invasive endovascular approach to place recording electrodes near the motor cortex. The system comprises three main components: (1) a self-expanding nitinol stent scaffold (40 mm length, 8 mm diameter) embedded with 16 platinum-iridium electrodes coated with iridium oxide; (2) a flexible, insulated lead that traverses the venous system via the internal jugular vein; and (3) a subcutaneous implantable receiver–transmitter unit (IRTU) housed in a subclavicular pocket that digitizes and wirelessly transmits neural data [42].

The Stentrode is deployed via catheter through the jugular vein and positioned within the superior sagittal sinus, adjacent to the motor cortex. Following implantation, the device undergoes natural endothelialization over approximately four weeks, becoming incorporated into the vessel wall. This process stabilizes the electrode-vessel interface while preserving venous patency. Patients typically receive dual antiplatelet therapy (aspirin and clopidogrel) for 90 days post-implantation to mitigate thromboembolic risk [41] [42].