Coupling Disturbance Strategy in NPDOA: A Brain-Inspired Metaheuristic for Complex Optimization

Abstract

This article provides a comprehensive analysis of the Coupling Disturbance Strategy, a core component of the novel Neural Population Dynamics Optimization Algorithm (NPDOA). Tailored for researchers and drug development professionals, we explore the neuroscientific foundations of this strategy, its algorithmic implementation for balancing exploration and exploitation in optimization, and its practical application to the complex, non-convex problems prevalent in biomedical research. The content covers theoretical underpinnings, methodological details, and performance validation against state-of-the-art algorithms, and it discusses the strategy's implications for enhancing global search capabilities in computational biology and clinical data analysis.

The Neuroscience Behind Coupling Disturbance: From Neural Populations to Optimization Theory

Neural population dynamics investigates how collectives of neurons encode, maintain, and compute information to generate perception, cognition, and behavior. Unlike single-neuron analyses, this approach recognizes that cognitive functions emerge from collective interactions across neuronal ensembles [1]. Research in posterior cortices reveals that neural populations represent correlated task variables using less-correlated population modes, implementing a form of partial whitening that enables efficient information coding [1]. This coding geometry allows multiple interrelated variables to be represented together without interference while being coherently maintained and updated through time.

A fundamental finding is that population codes enable sample-efficient learning by shaping the inductive biases of downstream readout neurons. The similarity structure of population responses, formalized through neural kernels, determines how readily a readout neuron can learn specific stimulus-response mappings from limited examples [2]. This efficiency stems from a built-in bias toward explaining observed data with simple stimulus-response maps, allowing organisms to generalize effectively from few experiences—a crucial capability in dynamic environments.

Fundamental Principles of Neural Population Coding

Encoding Formats and Task Dependence

Neural populations employ distinct encoding formats depending on cognitive demands. During delayed match-to-category (DMC) tasks requiring working memory, parietal cortex neurons exhibit binary-like category encoding with nearly identical firing rates to all stimuli within a category. Conversely, during one-interval categorization (OIC) tasks with immediate saccadic responses, the same neurons show more graded, mixed selectivity that preserves sensory feature information alongside category signals [3].

This task-dependent flexibility suggests that encoding formats are not fixed but dynamically adapt to computational requirements. Binary-like representations emerge when cognitive demands include maintaining or manipulating information in working memory, potentially through attractor dynamics that compress graded feature information into discrete categorical representations [3].

Efficient Coding and Representational Geometry

Neural populations implement efficient coding principles by matching representational geometry to behaviorally relevant variables. Highly correlated task variables are represented by less-correlated neural modes, reducing redundancy while maintaining discriminability [1]. This population-level whitening differs from traditional efficient coding theories by utilizing neural population modes rather than individual neurons as the fundamental encoding unit.

The resulting representational geometry creates an inductive bias that determines which tasks can be learned sample-efficiently. The kernel function \( K(\theta,\theta') = \frac{1}{N}\sum_{i=1}^{N} r_i(\theta)\, r_i(\theta') \), which quantifies population response similarity between stimuli θ and θ′, completely determines generalization performance of a linear readout [2]. Spectral properties of this kernel bias learning toward functions that align with its principal components.
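As a minimal sketch of the kernel described above, the following Python snippet computes K(θ, θ′) as the averaged inner product of population responses and then inspects its eigenvalue spectrum. The tuning curves, the population size, and the function names `population_kernel` and `kernel_spectrum` are illustrative assumptions, not taken from [2].

```python
import numpy as np

def population_kernel(responses):
    """Return K[a, b] = (1/N) * sum_i r_i(theta_a) * r_i(theta_b).

    responses: array of shape (P stimuli, N neurons).
    """
    responses = np.asarray(responses, dtype=float)
    n_neurons = responses.shape[1]
    return responses @ responses.T / n_neurons

def kernel_spectrum(kernel):
    """Eigenvalues (descending) and matching eigenvectors of a symmetric kernel."""
    eigvals, eigvecs = np.linalg.eigh(kernel)
    order = np.argsort(eigvals)[::-1]
    return eigvals[order], eigvecs[:, order]

# Hypothetical population: 100 neurons with bell-shaped tuning over a circular stimulus.
thetas = np.linspace(0.0, 2.0 * np.pi, 32, endpoint=False)
centers = np.linspace(0.0, 2.0 * np.pi, 100, endpoint=False)
responses = np.exp(2.0 * (np.cos(thetas[:, None] - centers[None, :]) - 1.0))

K = population_kernel(responses)
eigvals, eigvecs = kernel_spectrum(K)
print("fraction of kernel power in top 5 modes:", eigvals[:5].sum() / eigvals.sum())
```

Target functions that load mainly on the leading eigenvectors are the ones a linear readout of this hypothetical population would be expected to learn from the fewest examples.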

Sequential Dynamics and Multiplexing

Neural populations multiplex sequential dynamics with encoding functions through multiplicative sequences that provide a temporal scaffold for time-dependent computations [1]. These dynamics reliably follow changes in task-variable correlations throughout behavioral trials, enabling a single population to support multiple related computations across time. The embedding of coding geometry within sequential dynamics allows populations to maintain temporal continuity while updating representations in response to changing task demands.

Table 1: Key Properties of Neural Population Codes

| Property | Description | Functional Significance |
|---|---|---|
| Encoding Geometry | Correlated task variables represented by less-correlated neural modes | Enables efficient information coding without interference [1] |
| Task-Dependent Encoding | Binary-like encoding in memory tasks vs. graded encoding in immediate response tasks | Adapts representation format to computational demands [3] |
| Kernel Structure | Similarity structure defined by population response inner products | Determines sample-efficient learning capabilities [2] |
| Sequential Multiplexing | Time-varying representations embedded in neural sequences | Supports temporal maintenance and updating of information [1] |

Experimental Methodologies and Protocols

Neurophysiological Recording During Behavioral Tasks

To investigate how neural populations encode task variables, researchers record from neural ensembles while subjects perform structured behavioral tasks:

Behavioral Paradigm Design: Animals (typically non-human primates or mice) are trained on tasks such as delayed match-to-category (DMC) or one-interval categorization (OIC) [3]. In DMC, subjects report whether sequentially presented stimuli belong to the same category, requiring working memory maintenance and comparison. In OIC, subjects immediately report category membership with a saccadic eye movement.

Neural Recording Techniques: Modern experiments employ high-density electrode arrays or two-photon calcium imaging to simultaneously monitor hundreds of neurons in regions such as posterior parietal cortex [1] [3]. Recording from identified excitatory neuronal populations provides cell-type-specific insights.

Data Analysis Pipeline: Population responses are analyzed using dimensionality reduction techniques (PCA, demixed PCA) to visualize neural trajectories across the task. Representational similarity analysis examines the relationship between stimulus relationships and neural response patterns [3].
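The dimensionality-reduction step of such a pipeline can be sketched with plain PCA via the singular value decomposition. The synthetic recording below (a two-dimensional rotating latent signal mixed into 50 hypothetical neurons) and the function name `pca_project` are assumptions made for illustration; demixed PCA and representational similarity analysis are not covered here.

```python
import numpy as np

def pca_project(activity, n_components=2):
    """Project a (time points, neurons) activity matrix onto its top principal components.

    Returns the low-dimensional trajectory and the fraction of variance per component.
    """
    activity = np.asarray(activity, dtype=float)
    centered = activity - activity.mean(axis=0, keepdims=True)
    _, singular_values, components = np.linalg.svd(centered, full_matrices=False)
    trajectory = centered @ components[:n_components].T
    variance = singular_values ** 2
    return trajectory, variance[:n_components] / variance.sum()

# Synthetic stand-in for a recording: 2 latent signals mixed into 50 noisy neurons.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 4.0 * np.pi, 200)
latent = np.column_stack([np.sin(t), np.cos(t)])
mixing = rng.normal(size=(2, 50))
activity = latent @ mixing + 0.05 * rng.normal(size=(200, 50))

trajectory, explained = pca_project(activity, n_components=2)
print("variance explained by two components:", explained.sum())
```

Plotting the two columns of `trajectory` against each other would show the neural trajectory across the simulated trial.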

Recurrent Neural Network Modeling

To test hypotheses about mechanisms underlying neural population dynamics, researchers train recurrent neural networks (RNNs) on analogous tasks:

Network Architecture: Continuous-time RNNs with nonlinear activation functions are commonly used, as they capture the temporal dynamics of biological neural circuits [3]. The networks typically receive time-varying inputs representing stimuli and produce decision-related outputs.

Training Procedure: Networks are trained using backpropagation through time or evolutionary algorithms to perform categorization tasks identical to those used in animal experiments. Successful training produces networks that replicate key aspects of animal behavior and neural dynamics [3].

Dynamics Analysis: Trained networks are analyzed through fixed-point analysis, which identifies stable states (attractors) in the network's state space. This reveals how categorical representations are maintained as attractors during memory delays [3].
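A toy version of this analysis is sketched below for a two-unit rate network of the form dx/dt = -x + W tanh(x). The weight matrix, the grid of initial states, and the helper names are hypothetical. The sketch only locates stable fixed points by letting the dynamics relax; finding unstable fixed points and saddles would require a root-finding or speed-minimization step that is omitted here.

```python
import numpy as np

def rnn_velocity(x, W):
    """Right-hand side of the rate model dx/dt = -x + W tanh(x)."""
    return -x + W @ np.tanh(x)

def relax_to_attractor(x0, W, dt=0.05, n_steps=2000):
    """Integrate with forward Euler long enough for the state to settle."""
    x = np.asarray(x0, dtype=float).copy()
    for _ in range(n_steps):
        x = x + dt * rnn_velocity(x, W)
    return x

def is_stable(x, W):
    """A fixed point is stable if every Jacobian eigenvalue has negative real part."""
    jacobian = -np.eye(len(x)) + W * (1.0 - np.tanh(x) ** 2)[None, :]
    return bool(np.all(np.linalg.eigvals(jacobian).real < 0))

# Hypothetical network: two self-exciting units, which yields four attractors.
W = np.array([[2.0, 0.0], [0.0, 2.0]])
grid = np.linspace(-2.0, 2.0, 4)
attractors = set()
for a in grid:
    for b in grid:
        x_star = relax_to_attractor([a, b], W)
        if np.linalg.norm(rnn_velocity(x_star, W)) < 1e-8 and is_stable(x_star, W):
            attractors.add(tuple(np.round(x_star, 3)))

print(sorted(attractors))
```

Each recovered point plays the role of a discrete memory state: nearby initial conditions collapse onto it, which is the mechanism the text associates with categorical representations during delays.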

Data-Driven Control of Neural Populations

Recent advances enable direct manipulation of neural population dynamics using data-driven approaches:

Control Objective Specification: Researchers define an objective function that quantifies the desired synchronization pattern (e.g., synchrony or desynchrony) in terms of measurable network outputs [4].

Iterative Learning Algorithm: Control parameters are iteratively refined by perturbing the network, observing effects on the objective function, and updating parameters to minimize this function [4]. This model-free approach leverages local linear approximations of network dynamics without requiring global models.

Physical Constraint Incorporation: The framework accommodates biological constraints such as charge-balanced inputs in electrical stimulation, ensuring practical applicability to real neural systems [4].
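The perturb, observe, and update loop described above can be sketched with a simultaneous-perturbation gradient estimate. This is an assumed stand-in rather than the specific algorithm of [4]: the quadratic `objective` replaces a measured synchrony index, `target` is a made-up optimum, and physical constraints such as charge balancing are left out.

```python
import numpy as np

def perturbation_descent(objective, params, n_iters=200, step=0.1, delta=0.05, seed=0):
    """Model-free minimization: probe the objective along random +/-1 directions
    and step against the estimated local slope."""
    rng = np.random.default_rng(seed)
    params = np.asarray(params, dtype=float).copy()
    history = [objective(params)]
    for _ in range(n_iters):
        direction = rng.choice([-1.0, 1.0], size=params.shape)
        j_plus = objective(params + delta * direction)
        j_minus = objective(params - delta * direction)
        # Two-sided finite difference gives a local linear approximation.
        grad_estimate = (j_plus - j_minus) / (2.0 * delta) * direction
        params = params - step * grad_estimate
        history.append(objective(params))
    return params, history

# Hypothetical black-box objective standing in for a measured synchrony index.
target = np.array([1.0, -2.0, 0.5])

def objective(p):
    return float(np.sum((p - target) ** 2))

best_params, history = perturbation_descent(objective, np.zeros(3))
print("initial objective:", history[0], "final objective:", history[-1])
```

Only objective evaluations are used, so the same loop applies when the network's equations are unknown and the objective is read from recordings.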

Table 2: Experimental Protocols in Neural Population Research

| Method | Key Procedures | Output Measurements | Applications |
|---|---|---|---|
| Neurophysiological Recording | Behavioral training, multi-electrode recording, population vector analysis | Neural firing rates, local field potentials, population trajectories | Identify coding principles across task conditions [1] [3] |
| RNN Modeling | Network training on cognitive tasks, fixed-point analysis, connectivity analysis | Network decisions, hidden state dynamics, attractor landscapes | Test mechanistic hypotheses about neural computations [3] |
| Data-Driven Control | Objective function specification, iterative parameter perturbation, constraint incorporation | Population synchrony measures, firing pattern consistency | Regulate pathological synchronization patterns [4] |

Bio-Inspired Optimization Algorithms

Principles of Bio-Inspired Optimization

Bio-inspired optimization algorithms adapt principles from biological systems to solve complex computational problems. These methods are particularly valuable for high-dimensional, nonlinear optimization landscapes where traditional gradient-based methods struggle [5]. The fundamental insight is that biological systems have evolved efficient mechanisms for exploration and exploitation in complex environments.

These algorithms excel in handling sparse, noisy data and can effectively navigate high-dimensional parameter spaces common in medical and biological applications [6] [5]. Unlike gradient-based optimizers that can become trapped in local minima, population-based approaches maintain diversity in solution candidates, enabling more thorough exploration of the solution space [6].

Major Algorithm Classes

Genetic Algorithms (GAs): Inspired by natural selection, GAs maintain a population of candidate solutions that undergo selection, crossover, and mutation operations across generations [5]. This evolutionary approach effectively explores complex fitness landscapes without requiring gradient information.

Particle Swarm Optimization (PSO): Based on social behavior of bird flocking or fish schooling, PSO updates candidate solutions based on their own experience and that of neighboring solutions [5]. Particles move through the search space with velocities dynamically adjusted according to historical behaviors.

Artificial Immune Systems: These algorithms emulate the vertebrate immune system's characteristics of learning and memory to solve optimization problems [7]. They feature mechanisms for pattern recognition, noise tolerance, and adaptive response.

Ant Colony Optimization: Inspired by pheromone-based communication of ants, this approach uses simulated pheromone trails to probabilistically build solutions to optimization problems [5]. The pheromone evaporation mechanism prevents premature convergence.
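To make one of these algorithm classes concrete, here is a minimal particle swarm sketch. The inertia and acceleration coefficients, the swarm size, and the Rastrigin test function are common textbook choices assumed for illustration; they are not values taken from [5].

```python
import numpy as np

def pso_minimize(f, bounds, n_particles=20, n_iters=100, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Basic global-best PSO. bounds is a list of (low, high) pairs, one per dimension."""
    rng = np.random.default_rng(seed)
    low, high = np.asarray(bounds, dtype=float).T
    pos = rng.uniform(low, high, size=(n_particles, low.size))
    vel = np.zeros_like(pos)
    pbest = pos.copy()
    pbest_val = np.array([f(p) for p in pos])
    gbest = pbest[np.argmin(pbest_val)].copy()
    gbest_val = float(pbest_val.min())
    for _ in range(n_iters):
        r1 = rng.random(pos.shape)
        r2 = rng.random(pos.shape)
        # Velocity blends inertia, each particle's own best, and the swarm's best.
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
        pos = np.clip(pos + vel, low, high)
        vals = np.array([f(p) for p in pos])
        improved = vals < pbest_val
        pbest[improved] = pos[improved]
        pbest_val[improved] = vals[improved]
        if pbest_val.min() < gbest_val:
            gbest = pbest[np.argmin(pbest_val)].copy()
            gbest_val = float(pbest_val.min())
    return gbest, gbest_val

def sphere(x):
    return float(np.sum(x ** 2))

def rastrigin(x):
    """Standard multimodal benchmark with many local minima."""
    return float(10.0 * x.size + np.sum(x ** 2 - 10.0 * np.cos(2.0 * np.pi * x)))

bounds = [(-5.0, 5.0), (-5.0, 5.0)]
sphere_best, sphere_val = pso_minimize(sphere, bounds)
rastrigin_best, rastrigin_val = pso_minimize(rastrigin, bounds)
print("sphere:", sphere_val, "rastrigin:", rastrigin_val)
```

No gradient of `f` is ever evaluated, which is the property that makes this family usable on the non-differentiable or noisy objectives discussed above.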

Applications in Biomedical Domains

Bio-inspired optimization has demonstrated particular utility in biomedical applications:

Medical Diagnosis: Optimization algorithms enhance disease detection systems by selecting optimal feature subsets and tuning classifier parameters [6] [5]. For chronic kidney disease prediction, population-based optimization of deep neural networks achieved superior performance compared to traditional models [6].

Drug Discovery: In pharmaceutical development, bio-inspired algorithms optimize molecular structures for desired properties while minimizing side effects [7]. They efficiently search vast chemical space to identify promising candidate compounds.

Neural Network Optimization: Beyond direct biomedical applications, these algorithms optimize neural network architectures and hyperparameters [8] [5]. The BioLogicalNeuron framework incorporates homeostatic regulation and adaptive repair mechanisms inspired by biological neural systems [8].

Integration for NPDOA Coupling Disturbance Strategies

The integration of neural population dynamics (NPD) and bio-inspired optimization algorithms (OA) creates a powerful framework for developing coupling disturbance strategies to regulate pathological neural synchrony.

Theoretical Foundation

The NPDOA framework leverages the fact that neural population dynamics can be characterized by low-dimensional manifolds [1] [3] and that bio-inspired optimization can efficiently identify control policies to shift these dynamics toward healthy patterns [4]. This approach is particularly valuable when precise dynamical models are unavailable or when neural circuits exhibit non-stationary properties.

The kernel structure of population codes [2] provides a mathematical foundation for predicting how perturbations will affect population-wide activity patterns. By understanding how similarity relationships in neural responses shape learning, optimization algorithms can be designed to exploit these inductive biases for more efficient control policy discovery.

Implementation Framework

Control Objective Specification: Define an objective function that quantifies the desired disturbance to pathological coupling, typically aiming to minimize excessive synchrony while maintaining information coding capacity [4].

Population-Based Policy Search: Use bio-inspired optimization to search for stimulation policies that effectively disrupt pathological coupling. The optimization maintains a population of candidate stimulation patterns evaluated against the control objective [5] [4].

Adaptive Policy Refinement: As neural dynamics evolve in response to stimulation, continuously adapt control policies using iterative learning based on measured outcomes [4]. This closed-loop approach compensates for non-stationarities in neural circuits.
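The three steps above can be illustrated on a toy problem. The sketch below assumes a mean-field Kuramoto network as a stand-in for a synchronized neural population, treats a stimulation policy as a bounded constant drive per oscillator, and searches policies with a simple elitist evolutionary loop. The model, the energy penalty weight, and all parameter values are illustrative assumptions rather than the method of [4] or [5].

```python
import numpy as np

def mean_synchrony(stim, omega, theta0, coupling=2.0, dt=0.05, n_steps=300):
    """Average Kuramoto order parameter over the second half of a simulation."""
    theta = theta0.copy()
    order = []
    for _ in range(n_steps):
        z = np.mean(np.exp(1j * theta))  # complex order parameter r * exp(i * psi)
        theta = theta + dt * (omega + stim + coupling * np.imag(z * np.exp(-1j * theta)))
        order.append(abs(z))
    return float(np.mean(order[n_steps // 2:]))

def evolve_policy(objective, dim, pop_size=12, n_gens=15, sigma=0.3, max_amp=1.0, seed=0):
    """Elitist evolutionary search; the zero (no stimulation) policy seeds the population."""
    rng = np.random.default_rng(seed)
    population = [np.zeros(dim)]
    population += [rng.uniform(-max_amp, max_amp, dim) for _ in range(pop_size - 1)]
    scores = [objective(p) for p in population]
    for _ in range(n_gens):
        children = [np.clip(p + sigma * rng.normal(size=dim), -max_amp, max_amp)
                    for p in population]
        candidates = population + children
        all_scores = np.array(scores + [objective(c) for c in children])
        keep = np.argsort(all_scores)[:pop_size]  # parents compete with children
        population = [candidates[i] for i in keep]
        scores = [float(all_scores[i]) for i in keep]
    return population[0], scores[0]

rng = np.random.default_rng(1)
n_osc = 20
omega = rng.normal(0.0, 0.5, n_osc)             # hypothetical natural frequencies
theta0 = rng.uniform(0.0, 2.0 * np.pi, n_osc)   # hypothetical initial phases

def objective(stim):
    # Control objective: synchrony plus a small penalty on stimulation energy.
    return mean_synchrony(stim, omega, theta0) + 0.05 * float(np.mean(stim ** 2))

baseline = objective(np.zeros(n_osc))
best_stim, best_score = evolve_policy(objective, n_osc)
print("baseline objective:", baseline, "best objective:", best_score)
```

Because parents survive into the next generation and the unstimulated policy is in the initial population, the returned policy can never score worse than doing nothing. A closed-loop version would re-run the search as new measurements arrive.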

Application to Neurological Disorders

The NPDOA approach has significant potential for treating neurological conditions characterized by pathological neural synchronization:

Parkinson's Disease: Excessive beta-band synchronization in basal ganglia-cortical circuits could be disrupted through optimized stimulation patterns that shift population dynamics toward healthier states [4].

Epilepsy: Preictal network synchronization could be detected through population activity analysis and prevented through optimally-timed disturbances identified through evolutionary algorithms [4].

Neuropsychiatric Disorders: Conditions like schizophrenia involve disrupted neural coordination that might be normalized through precisely-targeted coupling modulation [4].

Table 3: Research Reagent Solutions for Neural Population Studies

| Reagent/Resource | Function | Example Applications |
|---|---|---|
| High-Density Electrode Arrays | Simultaneous recording from hundreds of neurons | Monitoring population dynamics during cognitive tasks [1] [3] |
| Calcium Indicators (e.g., GCaMP) | Optical monitoring of neural activity via fluorescence | Large-scale population imaging in rodent models [2] |
| Optogenetic Actuators | Precise manipulation of specific neural populations | Testing causal roles of population activity patterns [4] |
| Recurrent Neural Network Models | Computational simulation of neural population dynamics | Testing mechanistic hypotheses about neural computations [3] |
| Hodgkin-Huxley Neuron Models | Biophysically realistic simulation of neuronal activity | Studying synchronization in controlled networks [4] |

Emerging Research Frontiers

The integration of neural population dynamics and bio-inspired optimization is advancing several frontiers:

Bio-Plausible Deep Learning: Incorporating biological mechanisms like homeostasis and adaptive repair into artificial neural networks creates more robust and efficient learning systems [8]. The BioLogicalNeuron framework demonstrates how calcium-driven homeostasis can maintain network stability during learning [8].

Personalized Neuromodulation: As recording technologies provide richer measurements of individual neural population dynamics, optimization algorithms can tailor stimulation policies to patient-specific circuit abnormalities [4].

Multi-Scale Optimization: Future frameworks will optimize across molecular, cellular, and circuit scales simultaneously, requiring novel optimization approaches that can handle extreme multi-modality and cross-scale interactions.

Neural population dynamics and bio-inspired optimization represent complementary approaches to understanding and manipulating complex biological systems. Neural populations implement efficient coding strategies through their representational geometry [1] [2] and dynamically adapt encoding formats to task demands [3]. Bio-inspired optimization provides powerful tools for navigating high-dimensional parameter spaces in biomedical applications [6] [5] [7].

Their integration in the NPDOA framework offers a promising path toward developing effective coupling disturbance strategies for neurological disorders. By combining principles from neuroscience and optimization theory, this approach enables model-free control of neural population dynamics [4], potentially leading to novel therapies for conditions characterized by pathological neural synchronization.

As measurement technologies provide increasingly detailed views of neural population activity, and as optimization algorithms become more sophisticated at exploiting biological principles, this synergistic relationship will likely yield further insights into both natural and artificial intelligence.

Defining the Coupling Disturbance Strategy in NPDOA's Framework

The Coupling Disturbance Strategy represents a foundational component within the Novel Pharmacological Dynamics and Optimization Approach (NPDOA) framework. This strategy systematically investigates and exploits the interconnected nature of biological systems to optimize therapeutic interventions. In complex pharmacological systems, coupling describes the functional dependencies between different biological scales—from molecular interactions to tissue-level responses. The disturbance strategy intentionally modulates these couplings to redirect pathological signaling networks toward therapeutic states.

Within the NPDOA framework, coupling disturbance moves beyond single-target paradigms to embrace a systems-level understanding of drug action. This approach recognizes that therapeutic efficacy emerges not from isolated receptor binding alone, but from the coordinated rewiring of biological networks that span multiple scales and subsystems. The strategy integrates computational prediction, experimental validation, and clinical translation to develop interventions with enhanced precision and reduced off-target effects.

Theoretical Foundations and Conceptual Framework

The conceptual framework for coupling disturbance rests on three interconnected theoretical pillars: system connectivity mapping, disturbance propagation modeling, and therapeutic window optimization.

System Connectivity Mapping

Biological systems exhibit multi-layered connectivity across molecular, cellular, and tissue levels. Understanding these connections enables targeted disturbances that create cascading therapeutic effects. Connectivity mapping involves:

- Node Identification: Cataloging key biological entities (proteins, metabolites, cell types)

- Edge Characterization: Quantifying interaction strengths and directions

- Pathway Integration: Understanding how signals flow across traditional pathway boundaries

Disturbance Propagation Modeling

Theoretical models predict how intentional disturbances at one system node propagate through connected networks. These models incorporate:

- Signal Amplification: Identifying points where small disturbances create large effects

- Network Buffering: Recognizing system properties that resist change

- Feedback Integration: Accounting for compensatory mechanisms that modulate disturbance effects

Therapeutic Window Optimization

Coupling disturbance aims to maximize the separation between therapeutic effects and adverse responses through:

- Selective Network Targeting: Identifying disturbances that differentially affect pathological versus physiological states

- Dynamic Dosing: Timing interventions to align with biological rhythms and states

- Combinatorial Modulation: Using multiple, smaller disturbances to achieve efficacy while minimizing individual toxicities

Table 1: Core Components of the Coupling Disturbance Theoretical Framework

| Component | Key Elements | Mathematical Representation | Biological Interpretation |
|---|---|---|---|
| System Connectivity | Nodes, Edges, Pathways | Graph G = (V, E) where V represents biological entities and E represents interactions | Map of potential disturbance propagation routes through biological system |
| Disturbance Propagation | Signal transfer, Network topology, Dynamics | Differential equations describing state changes: dx/dt = f(x) + Bu(t) where u(t) represents external disturbances | Prediction of how targeted interventions will affect overall system behavior over time |
| Therapeutic Optimization | Efficacy-toxicity separation, Dynamic control | Objective function J(u) = ∫[Q(x) + R(u)]dt with constraints g(x) ≤ x_max | Quantitative framework for designing interventions that maximize benefit while minimizing harm |
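The propagation model dx/dt = f(x) + Bu(t) and the objective J(u) = ∫[Q(x) + R(u)]dt from the table can be evaluated numerically with a forward Euler loop. The two-node linear network, the pulse-shaped disturbance, and the quadratic costs below are hypothetical choices for illustration, and the constraint g(x) ≤ x_max is not enforced in this sketch.

```python
import numpy as np

def simulate(f, B, u, x0, Q, R, t_end=5.0, dt=1e-3):
    """Integrate dx/dt = f(x) + B u(t) and accumulate J = integral of Q(x) + R(u)."""
    x = np.asarray(x0, dtype=float).copy()
    n_steps = int(round(t_end / dt))
    trajectory = np.empty((n_steps + 1, x.size))
    trajectory[0] = x
    cost = 0.0
    for k in range(n_steps):
        u_t = np.atleast_1d(u(k * dt))
        cost += (Q(x) + R(u_t)) * dt        # left Riemann sum of the running cost
        x = x + dt * (f(x) + B @ u_t)       # forward Euler step
        trajectory[k + 1] = x
        
    return trajectory, cost

# Hypothetical two-node cascade: node 0 is disturbed directly and drives node 1.
A = np.array([[-1.0, 0.0], [0.8, -0.5]])
B = np.array([[1.0], [0.0]])

def f(x):
    return A @ x

def pulse(t):
    return 1.0 if t < 1.0 else 0.0          # unit disturbance for the first time unit

def Q(x):
    return float(x @ x)                     # penalize deviation from the rest state

def R(u_t):
    return 0.1 * float(u_t @ u_t)           # penalize disturbance effort

trajectory, cost = simulate(f, B, pulse, x0=[0.0, 0.0], Q=Q, R=R)
print("peak response of downstream node:", trajectory[:, 1].max(), "cost J:", cost)
```

Comparing `cost` across candidate disturbance profiles u(t) is one simple way to rank interventions under this kind of objective.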

Computational Methodologies and Implementation

Implementing the coupling disturbance strategy requires advanced computational approaches that integrate diverse data types and modeling paradigms.

Multi-Omics Data Integration

The foundation of effective coupling disturbance begins with comprehensive data integration. Current methodologies combine:

- Transcriptomic Profiling: RNA-seq data capturing gene expression states across conditions

- Proteomic Mapping: Mass spectrometry-based protein quantification and post-translational modifications

- Metabolomic Characterization: LC-MS measurements of metabolic fluxes and concentrations

Advanced machine learning methods facilitate the integration of these multi-omics layers to generate mechanistic hypotheses about the overall state of cell signaling [9]. Specifically, the outputs of dimensionality reduction (e.g., principal component analysis) and of enrichment analysis for genes, proteins, and metabolites are overlaid on pre-built signaling networks or used to construct models of relevant pathways.

Hybrid QSP-ML Modeling Framework

Quantitative Systems Pharmacology (QSP) modeling has become a powerful tool in the drug development landscape, and its integration with machine learning represents a cornerstone of the NPDOA coupling disturbance strategy [9].

The symbiotic QSP-ML/AI approach follows two primary implementation patterns:

- Consecutive Application: One approach tackles a specific stage, and partial results feed into the other methodology

- Simultaneous Application: Both approaches work together on the same data, leveraging their complementary strengths

For coupling disturbance, consecutive application often involves using ML/AI first to identify potential disturbance points from high-dimensional data, followed by QSP modeling to mechanistically validate these candidates and predict system-wide consequences.

Table 2: Hybrid QSP-ML Implementation Patterns for Coupling Disturbance

| Implementation Pattern | Workflow Sequence | Advantages for Coupling Disturbance | Application Examples |
|---|---|---|---|
| ML → QSP | ML identifies candidate disturbances from high-dimensional data; QSP models validate and refine predictions | Unbiased discovery of novel coupling points; Mechanistic validation of data-driven findings | ML analysis of single-cell RNA-seq data identifies differentiation regulators; QSP models test disturbance strategies [9] |
| QSP → ML | QSP generates synthetic training data; ML models learn from this enhanced dataset | Augments limited experimental data; Improves ML model generalizability | QSP models of signaling networks generate perturbation responses; ML predicts optimal disturbance combinations |
| Simultaneous QSP-ML | Both approaches work concurrently on the same problem | Handles diverse data types; Leverages full potential of rich data landscape | Combined analysis of imaging, omics, and clinical data for multi-scale coupling identification |

Feature Selection Strategies for Predictive Modeling

Selecting features with the highest predictive power critically affects model performance and biological interpretability in coupling disturbance strategies [10].

Comparative studies reveal that:

- Data-Driven Feature Selection: Recursive Feature Elimination (RFE) with Support Vector Regression (SVR) outperforms other computational methods

- Biologically Informed Features: Pathway-based gene sets derived from knowledge bases (KEGG, CTD) provide interpretability but may show lower predictive performance

- Hybrid Approaches: Integrating computational and biologically informed gene sets consistently improves prediction accuracy across several anticancer drugs [10]

The optimal feature selection strategy for coupling disturbance combines:

- Initial Biological Filtering: Selection of genes/proteins within relevant pathways

- Computational Refinement: RFE-SVR or similar methods to identify most predictive features

- Mechanistic Validation: QSP modeling to ensure biological plausibility
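The computational refinement step can be sketched as a recursive elimination loop. To keep the example dependency-free, a ridge regression fitted with NumPy is swapped in for the Support Vector Regression used in [10]; the elimination logic is the same, but the ranking model is an assumption. The data set, feature indices, and function names are synthetic and hypothetical.

```python
import numpy as np

def ridge_weights(X, y, alpha=1.0):
    """Closed-form ridge regression coefficients."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

def recursive_feature_elimination(X, y, n_keep, alpha=1.0):
    """Repeatedly refit and drop the feature with the smallest absolute weight."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    X = (X - X.mean(axis=0)) / X.std(axis=0)   # standardize so weights are comparable
    y = y - y.mean()
    remaining = list(range(X.shape[1]))
    while len(remaining) > n_keep:
        weights = ridge_weights(X[:, remaining], y, alpha)
        remaining.pop(int(np.argmin(np.abs(weights))))
    return remaining

# Synthetic drug-response data: only features 2, 7, and 11 carry signal.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 20))
y = 3.0 * X[:, 2] - 2.0 * X[:, 7] + 1.5 * X[:, 11] + 0.1 * rng.normal(size=200)

selected = recursive_feature_elimination(X, y, n_keep=3)
print("selected feature indices:", sorted(selected))
```

In the hybrid strategy described above, the columns of `X` would first be restricted to genes or proteins from relevant pathways before this loop is run.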

Binding Affinity Prediction with Holistic Descriptors

Accurate drug-target affinity prediction is essential for designing effective coupling disturbances. Recent advances incorporate molecular descriptors based on molecular vibrations and treat molecule-target pairs as integrated systems [11].

Key innovations include:

- Vibration-Based Descriptors: E-state molecular descriptors associated with molecular vibrations show higher importance in quantifying drug-target interactions

- Protein Sequence Descriptors: Normalized Moreau-Broto autocorrelation (G3), Moran autocorrelation (G4), and transition-distribution (G7) descriptors

- Whole-System Modeling: Simultaneous consideration of both receptors and ligands rather than fragmented approaches

Random Forest models built on these principles demonstrate exceptional performance with coefficients of determination (R²) greater than 0.94, providing reliable affinity predictions for coupling disturbance design [11].

Experimental Protocols and Validation

Protocol 1: Multi-Omics Integration for Coupling Identification

This protocol identifies potential coupling points through integrated analysis of multiple molecular layers.

Materials and Reagents:

- Cell lines or tissue samples representing disease and control states

- RNA extraction kit (e.g., Qiagen RNeasy)

- Protein lysis and extraction buffer

- LC-MS grade solvents for metabolomics

- Single-cell RNA-seq platform (10X Genomics)

- Mass spectrometry system (Orbitrap series)

Procedure:

- Sample Preparation:
  - Culture cells under standardized conditions
  - Apply perturbations (compound treatment, genetic modification)
  - Harvest cells at multiple time points (0, 2, 6, 12, 24 hours)

- Multi-Omics Data Generation:
  - Transcriptomics: Perform single-cell RNA sequencing using 10X Genomics platform
  - Proteomics: Extract proteins, digest with trypsin, analyze by LC-MS/MS
  - Metabolomics: Quench metabolism, extract metabolites, analyze by LC-MS

- Data Integration:
  - Apply dimensionality reduction (PCA, UMAP) to each data layer
  - Use multi-omics integration algorithms (MOFA+) to identify shared factors
  - Identify concordant changes across molecular layers

- Coupling Point Identification:
  - Construct association networks between features across omics layers
  - Calculate disturbance propagation scores using random walk algorithms
  - Prioritize nodes with high cross-omics connectivity and propagation potential

- Validation:
  - CRISPR-based perturbation of identified coupling points
  - Measurement of downstream effects across molecular layers
  - Assessment of functional consequences (cell viability, differentiation)
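The propagation-scoring step in the procedure above does not name a specific random walk variant. One common choice, assumed here, is a random walk with restart from the perturbed node. The five-node chain and the restart probability are hypothetical, and the function assumes every node has at least one edge.

```python
import numpy as np

def random_walk_with_restart(adjacency, seeds, restart=0.3, tol=1e-10, max_iter=1000):
    """Stationary visiting probabilities of a walker that restarts at the seed nodes."""
    A = np.asarray(adjacency, dtype=float)
    col_sums = A.sum(axis=0)
    col_sums[col_sums == 0] = 1.0           # guard against division by zero
    W = A / col_sums                        # column-stochastic transition matrix
    p0 = np.zeros(A.shape[0])
    p0[list(seeds)] = 1.0 / len(seeds)
    p = p0.copy()
    for _ in range(max_iter):
        p_next = (1.0 - restart) * (W @ p) + restart * p0
        converged = np.abs(p_next - p).sum() < tol
        p = p_next
        if converged:
            break
    return p

# Hypothetical association network: a chain 0 - 1 - 2 - 3 - 4 across omics layers.
adjacency = np.zeros((5, 5))
for i in range(4):
    adjacency[i, i + 1] = adjacency[i + 1, i] = 1.0

scores = random_walk_with_restart(adjacency, seeds=[0])
print("propagation scores:", np.round(scores, 4))
```

Nodes with high scores are those most strongly reached from the disturbed seed, which gives one possible ranking for the prioritization step.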

Protocol 2: Hybrid QSP-ML Model Development

This protocol develops and validates integrated QSP-ML models for predicting coupling disturbance effects.

Materials:

- High-performance computing cluster

- Python/R programming environments

- QSP modeling software (MATLAB, COPASI)

- ML libraries (scikit-learn, TensorFlow)

- Experimental validation dataset (dose-response measurements)

Procedure:

- Data Curation:
  - Collect drug response data (IC₅₀, AUC) from public databases (GDSC, CTRP)
  - Obtain corresponding molecular data (gene expression, mutations)
  - Curate literature knowledge on pathway connectivity

- Model Architecture Design:
  - QSP Component: Develop mechanistic models of key signaling pathways
  - ML Component: Design neural networks for pattern recognition in high-dimensional data
  - Interface Layer: Create bidirectional communication between model components

- Model Training:
  - Train ML component on experimental data to predict pathway activation states
  - Calibrate QSP parameters using ML-predicted states as constraints
  - Implement iterative refinement until convergence

- Disturbance Simulation:
  - Simulate targeted disturbances across different network nodes
  - Predict system-wide effects using the integrated model
  - Identify optimal disturbance combinations that maximize therapeutic index

- Validation:
  - Prospective prediction of drug combination effects
  - Comparison with experimental results
  - Assessment of model accuracy using defined metrics (RMSE, AUC-ROC)
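The two accuracy metrics named in the validation step can be computed directly from their definitions, as in the sketch below. The AUC-ROC here is the pairwise-ranking form (the probability that a positive case is scored above a negative one, ties counted as one half); the example values are made up.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error between measured and predicted responses."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def auc_roc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))

# Made-up example values for illustration only.
print(rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
print(auc_roc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

The pairwise form is quadratic in the number of samples, which is fine for small validation sets; a rank-based implementation would be preferable for large ones.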

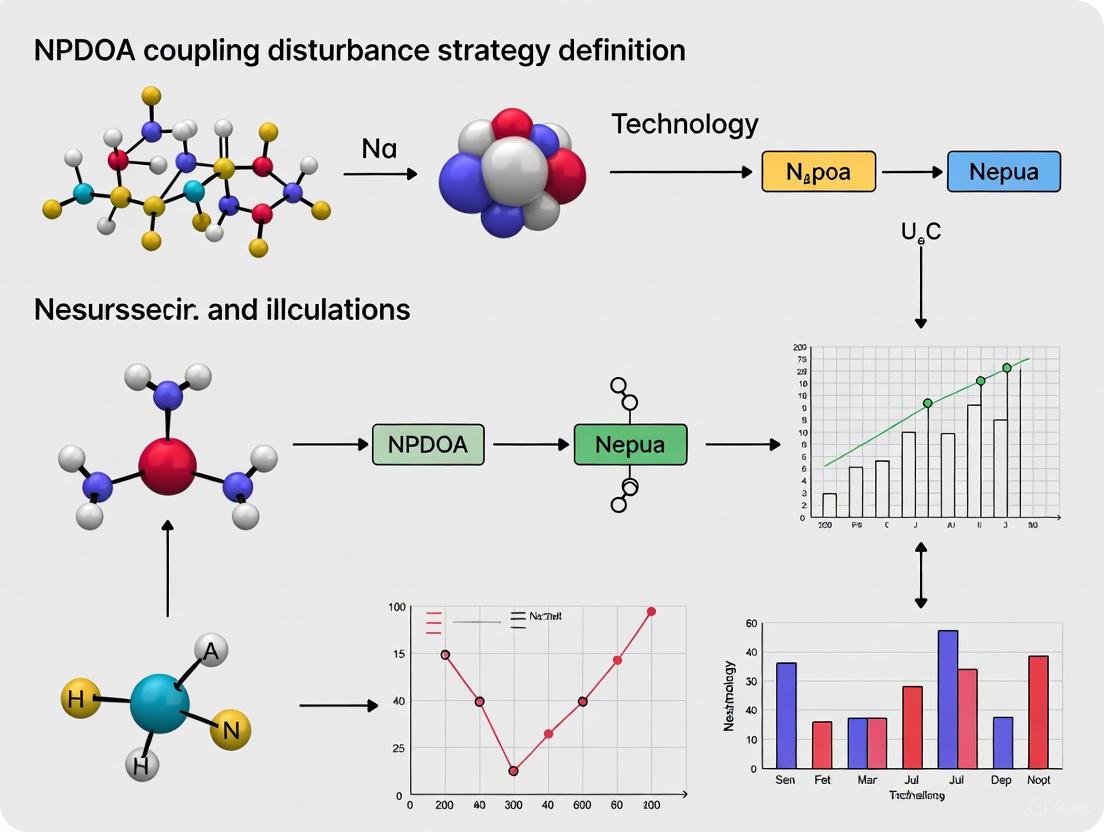

Visualization of Coupling Disturbance Framework

[Figure: NPDOA Coupling Disturbance Workflow]

[Figure: Hybrid QSP-ML Integration Architecture]

Research Reagent Solutions and Computational Tools

Table 3: Essential Research Reagents and Computational Tools for Coupling Disturbance Implementation

| Category | Item/Resource | Specification/Provider | Application in Coupling Disturbance |
|---|---|---|---|
| Omics Technologies | Single-cell RNA-seq Platform | 10X Genomics Chromium System | Cellular heterogeneity analysis and identification of rare cell states affected by disturbances |
| Omics Technologies | Mass Spectrometry System | Orbitrap Exploris Series | High-resolution proteomic and metabolomic profiling for multi-layer coupling analysis |
| Omics Technologies | Multiplex Imaging Platform | CODEX/GeoMX Systems | Spatial context preservation for understanding tissue-level coupling |
| Computational Resources | QSP Modeling Software | MATLAB, COPASI, SimBiology | Mechanistic modeling of biological systems and disturbance simulation |
| Computational Resources | Machine Learning Libraries | TensorFlow, PyTorch, scikit-learn | Pattern recognition in high-dimensional data and predictive modeling |
| Computational Resources | Molecular Descriptor Tools | PaDEL-Descriptor, RDKit | Calculation of vibration-based and structural descriptors for affinity prediction [11] |
| Experimental Validation | CRISPR Screening Tools | Pooled library screens (Brunello) | High-throughput validation of identified coupling points |
| Experimental Validation | Organoid Culture Systems | Patient-derived organoids | Physiological context maintenance during disturbance testing |
| Experimental Validation | High-Content Imaging | ImageXpress Micro Confocal | Multiparameter assessment of disturbance effects across cellular features |

Applications and Case Studies

The coupling disturbance strategy has demonstrated significant utility across multiple therapeutic areas, with particularly promising applications in oncology and precision medicine.

Anticancer Drug Response Prediction

Implementation of coupling disturbance principles has substantially improved prediction of anticancer drug responses. Research demonstrates that integrating computational and biologically informed gene sets consistently improves prediction accuracy across several anticancer drugs, including Afatinib (EGFR/ERBB2 inhibitor) and Capivasertib (AKT inhibitor) [10].

Key findings include:

- Feature Selection Impact: Recursive Feature Elimination with Support Vector Regression (RFE-SVR) outperformed other computational methods

- Biological Integration Value: Pathway-based gene sets, while sometimes showing lower standalone performance, enhanced interpretability and provided biological validation

- Multi-Omics Transferability: Predictive models trained on transcriptomic features showed significantly higher performance than models trained on the corresponding proteomic features

Drug-Target Affinity Optimization

The holistic approach to drug-target interaction modeling, where molecule-target pairs are treated as integrated systems, has yielded substantial improvements in affinity prediction accuracy [11].

Critical advances include:

- Vibration-Based Descriptors: E-state molecular descriptors associated with molecular vibrations demonstrated higher importance in quantifying drug-target interactions

- Random Forest Superiority: RF models achieved exceptional performance with coefficients of determination (R²) greater than 0.94

- Wide Applicability: The whole-system approach enabled accurate predictions across diverse target classes, including membrane proteins that pose challenges for structure-based methods

Cellular Network Reprogramming

Coupling disturbance strategies have enabled targeted reprogramming of cellular networks for therapeutic purposes, particularly in:

- Differentiation Control: Modulating regulatory networks to direct cell fate decisions

- Metabolic Reprogramming: Rewiring metabolic networks to disrupt pathological states

- Signaling Pathway Redirection: Altering information flow through signaling networks to achieve therapeutic outcomes

Future Directions and Implementation Challenges

While the coupling disturbance strategy within the NPDOA framework shows significant promise, several challenges must be addressed for broader implementation.

Technical and Methodological Challenges

- Data Heterogeneity: Integrating disparate data types (imaging, omics, clinical) with different scales and noise characteristics

- Model Scalability: Developing computationally efficient approaches for whole-cell and multi-scale modeling

- Validation Complexity: Establishing standardized experimental protocols for validating computational predictions of coupling disturbances

Analytical and Interpretative Challenges

- Causality Determination: Distinguishing correlation from causation in high-dimensional biological data

- Network Context Dependency: Accounting for how coupling strengths change across biological contexts and states

- Feedback Incorporation: Modeling multi-layer feedback loops that modulate disturbance effects

Translational Challenges

- Clinical Implementation: Adapting complex computational strategies for clinical decision support

- Regulatory Acceptance: Establishing standards for validating computational predictions in therapeutic development

- Interdisciplinary Collaboration: Bridging computational and experimental domains to advance coupling disturbance applications

The continued development of the coupling disturbance strategy will require advances in computational methods, experimental technologies, and interdisciplinary collaboration. As these challenges are addressed, coupling disturbance is poised to become an increasingly powerful approach for developing targeted, effective, and safe therapeutic interventions within the NPDOA framework.

The Role of Coupling in Disrupting Attractor Convergence to Prevent Premature Stagnation

In defining the NPDOA coupling disturbance strategy, controlling the dynamics of attractor states is central. In neural systems and biological networks, attractor states represent stable, self-sustaining patterns of activity. While convergence to an attractor enables stable function, premature stagnation in a single attractor can be pathological, preventing adaptive responses and information processing. This technical guide explores how strategic coupling disturbance can disrupt attractor convergence to maintain system flexibility, with significant implications for therapeutic intervention in neurological diseases and drug development.

The dynamics of perceptual bistability provide a foundational model for understanding these principles. When presented with an ambiguous stimulus, perception alternates irregularly between distinct interpretations because no single percept remains dominant indefinitely [12]. This demonstrates a core principle: a healthy, adaptive system must resist premature stagnation in any one stable state. This guide details the quantitative parameters, experimental protocols, and theoretical models for defining coupling disturbance strategies that prevent such pathological stability.

Theoretical Foundations of Attractor Dynamics and Coupling

Defining Attractor States and Convergence

An attractor in a dynamical system is a set of states toward which the system tends to evolve. In computational neuroscience, attractor networks are models where memories or percepts are represented as stable, persistent activity patterns [12]. Attractor convergence is the process by which the system's state evolves toward one of these stable patterns.

- Stable Fixed Points: In the context of perceptual bistability, each distinct interpretation (e.g., Percept A or Percept B) is a stable fixed point or attractor. The system's activity, represented by the firing rates (rA, rB) of competing neural populations, will flow toward one of these states (Aon or Bon) and remain there in the absence of perturbation [12].

- Premature Stagnation: This occurs when a network becomes trapped in a single attractor state for a pathologically long duration, disrupting normal alternation dynamics and impairing information processing. This is a target for therapeutic intervention.
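Convergence to one of two stable fixed points can be illustrated with a minimal firing-rate sketch of two mutually inhibiting populations. This is not the specific model of [12]: the sigmoid gain function, the parameter values (`drive`, `w_inh`, `theta`, `k`), and the function names are all assumptions chosen so that the system is bistable, and the sketch omits the adaptation and noise that produce alternation.

```python
import numpy as np

def sigmoid(x, theta=0.2, k=0.1):
    """Firing-rate gain function with threshold theta and slope parameter k."""
    return 1.0 / (1.0 + np.exp(-(x - theta) / k))

def simulate_competition(r_a0, r_b0, drive=1.0, w_inh=2.0, dt=0.01, steps=2000):
    """Euler-integrate two populations coupled by mutual inhibition.

    dr_A/dt = -r_A + f(drive - w_inh * r_B), and symmetrically for r_B.
    Returns the final firing rates (r_A, r_B).
    """
    r_a, r_b = float(r_a0), float(r_b0)
    for _ in range(steps):
        dr_a = -r_a + sigmoid(drive - w_inh * r_b)
        dr_b = -r_b + sigmoid(drive - w_inh * r_a)
        r_a += dt * dr_a
        r_b += dt * dr_b
    return r_a, r_b

# A small initial advantage decides which attractor captures the state.
final_a_wins = simulate_competition(0.6, 0.4)
final_b_wins = simulate_competition(0.4, 0.6)
```

Without any disturbance the state stays in whichever attractor it reaches, which is the stagnation scenario that coupling disturbance is meant to counteract.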

The Role of Coupling in Network Dynamics

Coupling refers to the strength and nature of interactions between different elements of a network, such as synaptic connections between neural populations. Coupling parameters directly determine the stability and mobility of attractor states:

- Stabilizing Coupling: Strong, homogeneous excitatory coupling within a neural population can reinforce an attractor state, increasing its basin of attraction and making it more resistant to escape.

- Destabilizing Coupling: Strategic inhibitory coupling or the introduction of specific noise can destabilize an attractor, facilitating transitions.

Recent research on Continuous-Attractor Neural Networks (CANNs) with short-term synaptic depression reveals that the distribution of synaptic release probabilities (a key coupling parameter) directly modulates attractor state stability and mobility. Narrowing the variation of release probabilities stabilizes attractor states and reduces the network's sensitivity to noise, effectively promoting stagnation. Conversely, widening this variation can destabilize states and increase network mobility [13].

Quantitative Data on Coupling Parameters and Attractor Stability

The following tables synthesize key quantitative relationships between coupling parameters, network properties, and resulting attractor dynamics, as established in computational and experimental studies.

Table 1: Impact of Synaptic Release Probability Distribution on Network Dynamics [13]

| Release Probability Variation | Attractor State Stability | Network Response Speed | Noise Sensitivity |

|---|---|---|---|

| Narrowing (Less Variable) | Increases | Slows | Reduces |

| Widening (More Variable) | Decreases | Accelerates | Increases |

Table 2: WCAG 2 Contrast Ratios as a Model for Stimulus Salience and Percept Stability [14]

| Content Type | Minimum Ratio (AA) | Enhanced Ratio (AAA) | Analogy to Percept Strength |

|---|---|---|---|

| Body Text | 4.5:1 | 7:1 | Standard stimulus salience |

| Large Text | 3:1 | 4.5:1 | High stimulus salience (weakened convergence) |

| UI Components | 3:1 | Not defined | Non-perceptual structural elements |

Table 3: Effects of Stimulus Strength Manipulation on Dominance Durations [12]

| Experimental Manipulation | Effect on Dominance Duration of Manipulated Percept | Effect on Dominance Duration of Unmanipulated Percept | Net Effect on Alternation Rate |

|---|---|---|---|

| Increase one stimulus | Little to no change | Decreases | Increases |

| Increase both stimuli | Decreases | Decreases | Increases |

| Decrease one stimulus | Little to no change | Increases | Decreases |

Experimental Protocols for Investigating Coupling Disturbance

Protocol 1: Modulating Synaptic Release Probability in CANNs

This in silico protocol is designed to test how manipulating a core coupling parameter influences attractor dynamics.

- Network Construction: Implement a Continuous-Attractor Neural Network (CANN) model incorporating short-term synaptic depression. The model should feature a ring-like architecture representing a continuous stimulus feature space [13].

- Parameter Definition:

- Define the synaptic release probability (Prelease) for each connection, initially drawing these values from a gamma distribution.

- The key independent variable is the variation (e.g., standard deviation) of this gamma distribution.

- Stimulation and Measurement:

- Apply a localized input stimulus to the network to establish a persistent activity bump (the attractor state).

- Apply a constant, low-level noise perturbation to the entire network.

- Measure the diffusion constant of the activity bump's position over time, which quantifies attractor mobility.

- Measure the threshold of a competing stimulus required to dislodge the primary attractor.

- Experimental Manipulation: Systematically narrow and widen the variation of the Prelease distribution across simulation trials while holding the mean constant.

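- Data Analysis: Correlate the Prelease variation with the measured diffusion constant and with the competing-stimulus threshold needed to dislodge the attractor. Consistent with Table 1, a positive correlation between variation and mobility (and a negative correlation between variation and the dislodging threshold) supports the coupling disturbance hypothesis [13].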
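Two building blocks of this protocol can be sketched as follows: drawing release probabilities from a gamma distribution whose mean is held constant while its variation changes, and estimating a diffusion constant from a recorded bump trajectory. The function names, the use of the coefficient of variation as the "variation" measure, and the clipping to (0, 1] are assumptions made for illustration; the CANN simulation itself is not shown.

```python
import numpy as np

def sample_release_probabilities(n, mean, cv, rng):
    """Draw n release probabilities from a gamma distribution.

    mean: target mean of the distribution (held constant across conditions)
    cv:   coefficient of variation (std / mean), the manipulated variable
    Values are clipped to (0, 1] so they remain valid probabilities, which
    slightly lowers the mean when the distribution is very wide.
    """
    shape = 1.0 / cv ** 2        # gamma shape parameter k
    scale = mean / shape         # gamma scale so that k * scale == mean
    p = rng.gamma(shape, scale, size=n)
    return np.clip(p, 1e-6, 1.0)

def diffusion_constant(positions, dt):
    """Estimate a 1-D diffusion constant D from <dx^2> = 2 * D * dt.

    positions: bump position sampled every dt (unwrap ring coordinates first).
    """
    steps = np.diff(np.asarray(positions, dtype=float))
    return float(np.mean(steps ** 2) / (2.0 * dt))

rng = np.random.default_rng(0)
narrow = sample_release_probabilities(1000, mean=0.3, cv=0.2, rng=rng)
wide = sample_release_probabilities(1000, mean=0.3, cv=0.6, rng=rng)
```

Repeating the simulation across a grid of `cv` values and recording `diffusion_constant` for each gives the paired data needed for the correlation analysis in the final step.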

Protocol 2: CRISPR/Cas9 Engineering of Chromosome Bridges for Mechanical Coupling Analysis

This cell biology protocol investigates how physical coupling, via chromosome bridges, influences mitotic resolution—a process analogous to escaping a stagnant state. The controlled creation of physical links (bridges) allows for the study of force-based disruption mechanisms [15].

- Cell Line Preparation: Culture telomerase-immortalized RPE-1 cells expressing Cas9 under a doxycycline-inducible promoter. Use cells with stable integration of lentiviral vectors encoding single guide RNAs (sgRNAs) that target defined chromosomal locations.

- sgRNA Design and Validation:

- Design sgRNAs to target intergenic, subtelomeric regions on specific chromosome arms (e.g., Chr2q, Chr1p).

- Select targets to achieve a linear distribution of intercentromeric distances (e.g., from 0.8 to 240 Mbp), which defines the bridging chromatin length [15].

- Validate sgRNA plasmids using Sanger sequencing.

- Bridge Induction and Synchronization:

- Add doxycycline to the culture medium to induce Cas9 expression, generating simultaneous double-strand breaks at the two target loci. This produces a dicentric chromosome with a defined bridge length.

- Synchronize cells at the G2/M transition using a CDK1 inhibitor to ensure precise temporal control.

- Live-Cell Imaging and Quantification:

- Use time-lapse microscopy to track the resolution of chromosome bridges from anaphase through telophase.

- Quantify the frequency of bridges at each mitotic stage.

- Measure the maximum separation between the bridge kinetochores immediately prior to breakage.

- Data Analysis: Correlate the bridging chromatin length with the kinetochore separation distance required for breakage. A positive correlation would indicate that the separation needed to disrupt a stable, coupled state (the bridge) depends on the extent of the coupling (bridge length) [15].

Visualization of Concepts and Workflows

The following diagrams, generated with Graphviz, illustrate the core logical relationships and experimental workflows described in this guide.

Attractor Dynamics and Coupling Disturbance

Chromosome Bridge Resolution Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Reagents and Materials for Coupling Disturbance Research

| Item Name | Function/Application | Example Use Case |

|---|---|---|

| CRISPR/Cas9 System (Doxycycline-Inducible) | Precise genomic engineering to create defined physical couplings. | Engineering chromosome bridges with specific intercentromeric distances to study force-based resolution [15]. |

| Lentiviral Vectors (e.g., psPAX2, pMD2.G) | Efficient delivery and stable integration of genetic constructs (e.g., sgRNAs) into cell lines. | Creating stable cell lines for inducible bridge formation [15]. |

| CDK1 Inhibitor (e.g., RO-3306) | Chemical synchronization of cells at the G2/M transition. | Achieving precise temporal control over mitosis for studying bridge dynamics [15]. |

| Gamma Distribution Model | A statistical model for generating a specified mean and variance in synaptic parameters. | Implementing distributions of synaptic release probabilities in computational models of CANNs [13]. |

| Short-Term Synaptic Depression | A biological mechanism causing synaptic strength to transiently decrease after activity. | Modeling dynamic, activity-dependent changes in coupling strength within attractor networks [13]. |

Comparative Analysis of NPDOA within the Metaheuristic Algorithm Landscape

Metaheuristic algorithms have emerged as powerful tools for solving complex optimization problems that are difficult to address using traditional gradient-based methods. These algorithms are typically inspired by natural phenomena, biological systems, or physical principles [16]. The Neural Population Dynamics Optimization Algorithm (NPDOA) represents a recent innovation in this field, drawing inspiration from the cognitive processes and dynamics of neural populations in the brain [17]. This section provides a technical analysis of NPDOA within the broader landscape of metaheuristic optimization algorithms, with specific focus on the definition of its coupling disturbance strategy and its applications in scientific domains including drug development.

The development of NPDOA responds to the No Free Lunch (NFL) theorem, which states that no single algorithm can perform optimally across all optimization problems [17]. This theoretical foundation has driven continued innovation in the metaheuristic domain, with researchers developing specialized algorithms tailored to specific problem characteristics. NPDOA contributes to this landscape by modeling the sophisticated information processing capabilities of neural systems, potentially offering advantages in problems requiring complex decision-making and adaptation.

Theoretical Foundations of NPDOA

Core Principles and Biological Inspiration

NPDOA is grounded in the computational principles of neural population dynamics observed in cognitive systems. The algorithm models how neural populations in the brain, particularly in the prefrontal cortex (PFC), coordinate to implement cognitive control during complex decision-making tasks [18]. The prefrontal cortex is recognized as the main structure supporting cognitive control of behavior, integrating multiple information streams to generate adaptive behavioral responses in changing environments [18].

The algorithm specifically mimics several key neurophysiological processes:

- Attractor dynamics: Neural populations trend toward attractor states representing optimal decisions

- Population coupling: Divergence from attractors through coupling with other neural populations enhances exploration

- Information projection: Controlled communication between neural populations facilitates transition from exploration to exploitation [19]

These processes are mathematically formalized to create a robust optimization framework that maintains a balance between exploration (searching new areas of the solution space) and exploitation (refining known good solutions).

Algorithmic Framework and Coupling Disturbance Strategy

The NPDOA framework implements a coupling disturbance strategy that regulates information transfer between neural populations. This strategy is fundamental to the algorithm's performance and represents its key innovation within the metaheuristic landscape.

The coupling disturbance mechanism operates through three primary components:

Neural Population Initialization: Multiple neural populations are initialized with random positions within the solution space, representing different potential solutions to the optimization problem.

Attractor Trend Strategy: Each population experiences a force pulling it toward the current best solution (the attractor), ensuring exploitation capability.

Disturbance Injection: Controlled disturbances are introduced through inter-population coupling, preventing premature convergence and maintaining diversity in the search process.

The mathematical formulation of the coupling disturbance strategy can be represented as:

$$X_i(t+1) = X_i(t) + \alpha\,\bigl(A(t) - X_i(t)\bigr) + \beta \sum_{j} C_{ij}\,\bigl(X_j(t) - X_i(t)\bigr)$$

Where:

- $X_i(t)$ is the position of neural population $i$ at iteration $t$

- $A(t)$ is the position of the attractor (best solution found) at iteration $t$

- $\alpha$ is the attraction coefficient controlling exploitation intensity

- $\beta$ is the coupling coefficient regulating exploration

- $C_{ij}$ is the coupling strength between populations $i$ and $j$

This formulation allows NPDOA to dynamically adjust its search behavior based on problem characteristics and convergence progress.
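The update rule above can be written as a single vectorized step. The following is a minimal sketch, assuming positions are stored as a NumPy array of shape (p, d), the attractor as a vector of length d, and the coupling strengths as a (p, p) matrix; the function name `coupling_disturbance_step` is illustrative and how the coupling matrix is chosen is left open.

```python
import numpy as np

def coupling_disturbance_step(X, A, C, alpha, beta):
    """One iteration of X_i <- X_i + alpha*(A - X_i) + beta*sum_j C_ij*(X_j - X_i).

    X: (p, d) array of population positions
    A: (d,) attractor position (best solution found so far)
    C: (p, p) coupling strengths C_ij
    """
    X = np.asarray(X, dtype=float)
    A = np.asarray(A, dtype=float)
    C = np.asarray(C, dtype=float)
    attraction = alpha * (A - X)  # pull of every population toward the attractor
    # sum_j C_ij * (X_j - X_i) = (C @ X)_i - (sum_j C_ij) * X_i
    coupling = beta * (C @ X - C.sum(axis=1, keepdims=True) * X)
    return X + attraction + coupling
```

Setting `beta=0` reduces the step to pure attraction toward `A`, while `alpha=0` leaves only the inter-population coupling term, which makes it easy to inspect the two contributions separately.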

Comparative Analysis with Contemporary Metaheuristics

Algorithm Taxonomy and Classification

The metaheuristic algorithm landscape can be categorized based on sources of inspiration and operational mechanisms. NPDOA occupies a unique position within mathematics-based algorithms while incorporating elements from swarm intelligence approaches.

Table 1: Classification of Metaheuristic Algorithms

| Category | Representative Algorithms | Inspiration Source |

|---|---|---|

| Evolution-based | Genetic Algorithm (GA) [17] | Biological evolution |

| Swarm Intelligence | Particle Swarm Optimization (PSO) [19], Secretary Bird Optimization (SBOA) [17] | Collective animal behavior |

| Physics-based | Simulated Annealing [20] | Thermodynamic processes |

| Human Behavior-based | Hiking Optimization Algorithm [17] | Human problem-solving |

| Mathematics-based | Newton-Raphson-Based Optimization (NRBO) [17], NPDOA [17] | Mathematical principles |

Performance Comparison on Standard Benchmarks

Quantitative evaluation of metaheuristic algorithms typically employs standardized test suites such as CEC2017 and CEC2022, which provide diverse optimization landscapes with varying characteristics [17] [20]. These benchmarks assess algorithm performance across multiple dimensions including convergence speed, solution accuracy, and robustness.

Table 2: Performance Comparison on CEC2017 Benchmark Functions (100 Dimensions)

| Algorithm | Average Rank | Convergence Speed | Solution Accuracy | Robustness |

|---|---|---|---|---|

| NPDOA [17] | 2.69 | High | High | High |

| PMA [17] | 2.71 | High | High | High |

| CSBOA [20] | 3.12 | Medium-High | High | Medium-High |

| SBOA [17] | 3.45 | Medium | Medium-High | Medium |

| RTH [19] | 3.78 | Medium | Medium | Medium |

The tabulated data reveals that NPDOA demonstrates highly competitive performance, particularly in high-dimensional optimization spaces. Its balanced exploration-exploitation mechanism enables effective navigation of complex solution landscapes while maintaining convergence efficiency.

Statistical validation through Wilcoxon rank-sum tests and Friedman tests indicates that NPDOA's performance advantages are statistically significant (p < 0.05) when compared to most other metaheuristic algorithms [17]. These tests make it unlikely that the observed performance differences are attributable to random chance alone.
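The "Average Rank" column in such comparisons is typically obtained by ranking the algorithms on each benchmark function and averaging those ranks, as in a Friedman-style analysis. The sketch below shows that computation in plain Python; the function name and the small input matrix are placeholders for illustration, not results from [17]. For the significance tests themselves, SciPy provides `scipy.stats.friedmanchisquare` and `scipy.stats.ranksums`.

```python
def average_ranks(errors):
    """Average rank of each algorithm across benchmark functions.

    errors[f][a] is the error of algorithm a on function f (lower is better).
    Tied algorithms on a function share the average of their ranks.
    """
    n_funcs = len(errors)
    n_algs = len(errors[0])
    totals = [0.0] * n_algs
    for row in errors:
        order = sorted(range(n_algs), key=lambda a: row[a])
        i = 0
        while i < n_algs:
            j = i
            # Extend j over a run of tied error values.
            while j + 1 < n_algs and row[order[j + 1]] == row[order[i]]:
                j += 1
            shared_rank = 0.5 * (i + j) + 1.0  # average 1-based rank of the tie
            for k in range(i, j + 1):
                totals[order[k]] += shared_rank
            i = j + 1
    return [total / n_funcs for total in totals]

# Placeholder error values for three hypothetical algorithms on three functions.
example = [[1.0, 2.0, 3.0],
           [2.0, 1.0, 3.0],
           [1.0, 1.0, 2.0]]
ranks = average_ranks(example)
```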

NPDOA Coupling Disturbance Strategy: Experimental Protocols

Methodology for Coupling Disturbance Analysis

The investigation of NPDOA's coupling disturbance strategy requires carefully designed experimental protocols to isolate and quantify its effects on optimization performance. The following methodology provides a framework for systematic evaluation:

Phase 1: Baseline Establishment

- Execute standard NPDOA on selected benchmark functions from CEC2017 suite

- Record convergence curves, solution quality, and population diversity metrics

- Establish performance baseline without specialized coupling disturbance modifications

Phase 2: Disturbance Parameter Sensitivity Analysis

- Systematically vary coupling coefficients (β) from 0.1 to 1.0 in increments of 0.1

- For each coefficient value, execute 30 independent runs to account for stochasticity

- Measure performance metrics including final solution quality, convergence iteration, and population diversity

Phase 3: Comparative Analysis

- Implement alternative disturbance strategies including random resetting, opposition-based learning, and chaotic maps

- Maintain identical experimental conditions and parameter settings

- Quantify performance differentials across disturbance strategies

Phase 4: Real-world Validation

- Apply optimized coupling disturbance parameters to engineering design problems

- Validate performance in practical applications including UAV path planning and drug development optimization

This protocol enables comprehensive characterization of the coupling disturbance strategy's contribution to NPDOA's overall performance profile.
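The sensitivity analysis in Phase 2 can be sketched as a small experiment harness. Several simplifications are assumed here and should be replaced in a real study: a sphere function stands in for the CEC2017 suite, the coupling matrix is redrawn at random and row-normalized at every iteration, and the population size, dimension, and iteration budget are kept small. All function names are illustrative.

```python
import numpy as np

def sphere(X):
    """Stand-in objective (not a CEC2017 function): sum of squares per row."""
    return np.sum(X ** 2, axis=1)

def run_once(beta, rng, alpha=0.5, n_pop=20, dim=5, iters=50, bound=5.0):
    """One independent run; returns the best objective value found."""
    X = rng.uniform(-bound, bound, size=(n_pop, dim))
    fitness = sphere(X)
    idx = int(np.argmin(fitness))
    best_x, best_f = X[idx].copy(), float(fitness[idx])
    for _ in range(iters):
        # Assumed coupling: random non-negative weights, no self-coupling,
        # each row normalized to sum to 1.
        C = rng.uniform(0.0, 1.0, size=(n_pop, n_pop))
        np.fill_diagonal(C, 0.0)
        C /= C.sum(axis=1, keepdims=True)
        # Rows of C sum to 1, so sum_j C_ij (X_j - X_i) = (C @ X)_i - X_i.
        X = X + alpha * (best_x - X) + beta * (C @ X - X)
        X = np.clip(X, -bound, bound)
        fitness = sphere(X)
        idx = int(np.argmin(fitness))
        if fitness[idx] < best_f:
            best_x, best_f = X[idx].copy(), float(fitness[idx])
    return best_f

def beta_sweep(n_runs=30, seed=0):
    """Vary beta from 0.1 to 1.0 in steps of 0.1 with n_runs runs per value."""
    rng = np.random.default_rng(seed)
    betas = np.round(np.linspace(0.1, 1.0, 10), 1)
    return {float(b): [run_once(float(b), rng) for _ in range(n_runs)] for b in betas}

results = beta_sweep()
summary = {b: (float(np.mean(v)), float(np.std(v))) for b, v in results.items()}
```

The per-coefficient mean and standard deviation in `summary` correspond to the solution-quality metric of Phase 2; convergence iteration and population diversity would need to be logged inside `run_once` in the same way.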

Workflow Visualization

Figure 1: NPDOA Coupling Disturbance Experimental Workflow

Application in Drug Development and Pharmaceutical Research

Optimization Challenges in Pharmaceutical Development

The drug development pipeline presents numerous complex optimization challenges that align well with NPDOA's capabilities. Key application areas include:

Clinical Trial Optimization: Designing efficient clinical trial protocols requires balancing multiple constraints including patient recruitment, treatment scheduling, and regulatory compliance. NPDOA's ability to handle high-dimensional, constrained optimization makes it suitable for generating optimal trial designs.

Drug Formulation Optimization: Pharmaceutical formulation involves identifying optimal component ratios and processing parameters to achieve desired drug properties. NPDOA can efficiently navigate these complex mixture spaces while satisfying multiple performance constraints.

Pharmacokinetic Modeling: Parameter estimation in complex pharmacokinetic/pharmacodynamic (PK/PD) models represents a challenging optimization problem. NPDOA's robustness to local optima enables more accurate model calibration.

The Pharmaceuticals and Medical Devices Agency (PMDA) in Japan has highlighted the pressing issue of "Drug Loss," where new drugs approved overseas experience significant delays or failures in reaching the Japanese market [21]. Advanced optimization approaches like NPDOA could help address this challenge by streamlining development pathways and improving resource allocation.

Real-World Evidence and Multi-Regional Clinical Trial Optimization

The growing importance of Real-World Data (RWD) and Real-World Evidence (RWE) in regulatory decision-making creates new optimization challenges [21]. NPDOA can optimize the integration of RWD into drug development pipelines by identifying optimal data collection strategies and evidence generation frameworks.

For Multi-Regional Clinical Trials (MRCTs), NPDOA's coupling disturbance strategy offers advantages in balancing regional requirements while maintaining global trial efficiency. This capability is particularly valuable for emerging bio-pharmaceutical companies (EBPs), which face resource constraints when expanding into new markets [21].

Table 3: Research Reagent Solutions for Neuro-Inspired Algorithm Validation

| Reagent/Resource | Function in NPDOA Research | Application Context |

|---|---|---|

| CEC2017 Benchmark Suite [17] | Standardized performance evaluation | Algorithm validation |

| CEC2022 Test Functions [20] | Contemporary problem landscapes | Modern optimization challenges |

| Wilcoxon Rank-Sum Test [17] | Statistical significance testing | Performance validation |

| Friedman Test Framework [17] | Multiple algorithm comparison | Competitive benchmarking |

| UAV Path Planning Simulator [19] | Real-world application testbed | Practical performance assessment |

Implementation Framework and Technical Considerations

Parameter Configuration and Tuning Guidelines

Successful implementation of NPDOA requires careful attention to parameter configuration. Based on experimental results across multiple problem domains, the following parameter ranges provide robust performance:

- Population Size: 50-100 neural populations for most problems

- Attraction Coefficient (α): 0.3-0.7, with higher values emphasizing exploitation

- Coupling Coefficient (β): 0.1-0.5, with higher values increasing exploration

- Disturbance Frequency: Adaptive adjustment based on convergence detection

The coupling disturbance strategy particularly benefits from adaptive parameter control, where β values decrease gradually as the algorithm progresses to shift emphasis from exploration to exploitation.
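One simple way to realize this gradual decrease is a linear schedule over the iteration budget. The sketch below is an assumption rather than the schedule used by the algorithm's authors; the default endpoints are taken from the 0.1-0.5 range suggested above, and the function name is hypothetical.

```python
def beta_schedule(t, t_max, beta_start=0.5, beta_end=0.1):
    """Linearly decay the coupling coefficient from beta_start to beta_end.

    t:     current iteration (values outside 0..t_max are clamped)
    t_max: total number of iterations
    """
    frac = min(max(t / t_max, 0.0), 1.0)
    return beta_start + (beta_end - beta_start) * frac

# Example: coupling coefficient at the start, midpoint, and end of a 200-iteration run.
schedule = [beta_schedule(t, 200) for t in (0, 100, 200)]
```

An exponential or convergence-triggered schedule could be substituted without changing how the value is consumed by the update rule.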

Computational Complexity Analysis

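The computational complexity of NPDOA is primarily determined by three factors: fitness evaluation, attractor calculation, and coupling operations. For a problem with d dimensions and p neural populations, the per-iteration complexity is stated as O(p² + p·d). This figure assumes that each pairwise coupling term costs constant time; if the coupling sum in the update rule is evaluated densely over all population pairs and all d dimensions, the coupling step alone costs O(p²·d). Either way, the complexity profile is comparable to that of other population-based metaheuristics that use pairwise interactions, and it scales reasonably to high-dimensional problems when p is moderate.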

This comparative analysis establishes NPDOA as a competitive contributor to the contemporary metaheuristic landscape, with particular strengths in problems requiring balanced exploration-exploitation and robustness to local optima. The algorithm's coupling disturbance strategy represents a sophisticated mechanism for maintaining population diversity while preserving convergence efficiency.

Future research should focus on several promising directions:

- Adaptive Disturbance Strategies: Developing self-tuning mechanisms for coupling parameters based on convergence metrics

- Hybrid Approaches: Integrating NPDOA with local search techniques and gradient-based methods for improved refinement

- Large-Scale Optimization: Extending NPDOA to very high-dimensional problems (>1000 dimensions) through dimension reduction and cooperative coevolution

- Pharmaceutical Applications: Specializing NPDOA for drug development pipelines, clinical trial optimization, and RWE generation

The continued refinement of NPDOA and its coupling disturbance strategy holds significant potential for advancing optimization capabilities across scientific domains, including the critically important field of pharmaceutical development where efficient optimization can accelerate patient access to novel therapies.

Theoretical Advantages of Brain-Inspired Dynamics for Complex Search Spaces

The exploration of complex search spaces, particularly in fields like drug discovery and protein design, presents significant computational challenges. This technical guide delineates the theoretical advantages of leveraging brain-inspired dynamical systems to navigate these high-dimensional, non-convex landscapes. Drawing on principles from neuroscience, such as dynamic sparsity, oscillatory networks, and multi-timescale processing, we frame these computational strategies within the context of defining the coupling disturbance strategy of the Neural Population Dynamics Optimization Algorithm (NPDOA). The integration of these bio-inspired paradigms is intended to support a more efficient, robust, and context-aware exploration of search spaces, with the aim of accelerating the identification of novel therapeutic candidates and the optimization of molecular structures.

In drug development and molecular design, researchers are confronted with search spaces of immense complexity and dimensionality. These spaces are characterized by non-linear interactions, a plethora of local optima, and expensive-to-evaluate objective functions (e.g., binding affinity, solubility, synthetic accessibility). Traditional optimization algorithms often struggle with such landscapes, necessitating innovative approaches.

Research on defining the NPDOA coupling disturbance strategy is predicated on the hypothesis that the brain's inherent algorithms for processing information and navigating perceptual and cognitive spaces can be abstracted and applied to computational search problems. The brain excels at processing noisy, high-dimensional data in real time, adapting to new contexts, and focusing resources on salient information, all hallmarks of an efficient search strategy. This guide explores the core brain-inspired principles that can be translated into a competitive advantage for tackling complex search tasks in scientific research.

Core Brain-Inspired Principles and Their Theoretical Advantages

The following principles, derived from computational neuroscience, offer distinct advantages for managing complexity and enhancing search efficiency.

Dynamic Sparsity

Concept: Unlike static network pruning, dynamic sparsity leverages data-dependent redundancy to activate only relevant computational pathways during inference. This is inspired by the sparse firing patterns observed in biological neural networks, where only a small fraction of neurons are active at any given time, drastically reducing energy consumption [22].

- Advantage for Search Spaces: In complex search, not all parameters or dimensions are equally relevant for every evaluation step. Dynamic sparsity allows the search algorithm to selectively focus on promising subspaces or feature combinations, ignoring redundant or non-informative dimensions. This leads to a significant reduction in computational cost per iteration and can prevent over-exploration of barren regions.

- NPDOA Context: A disturbance strategy can be defined to dynamically "silence" certain search dimensions or model parameters based on ongoing performance feedback, mimicking the brain's context-aware resource allocation.
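The idea of silencing search dimensions can be sketched as a mask applied to the disturbance term. This is a hypothetical illustration, not part of the published algorithm: the per-dimension `relevance` score (for example, recent improvement attributed to each dimension), the `keep_fraction` parameter, and the function name are all assumptions.

```python
import numpy as np

def sparse_disturbance(disturbance, relevance, keep_fraction=0.25):
    """Keep the disturbance only on the most relevant dimensions.

    disturbance: (p, d) or (d,) array of proposed perturbations
    relevance:   (d,) non-negative score per dimension (higher = more relevant)
    Returns the masked disturbance and the boolean mask of active dimensions.
    """
    disturbance = np.asarray(disturbance, dtype=float)
    relevance = np.asarray(relevance, dtype=float)
    k = max(1, int(round(keep_fraction * relevance.size)))
    active = np.argsort(relevance)[-k:]       # indices of the k highest scores
    mask = np.zeros(relevance.size, dtype=bool)
    mask[active] = True
    return disturbance * mask, mask           # mask broadcasts over populations

masked, mask = sparse_disturbance(np.ones((3, 4)), [0.1, 0.9, 0.3, 0.7], keep_fraction=0.5)
```

Recomputing `relevance` from ongoing performance feedback at each iteration is what would make the sparsity dynamic rather than a fixed pruning of dimensions.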

Stable Oscillatory Dynamics

Concept: The brain leverages neural oscillations across various frequency bands to coordinate information processing and maintain temporal stability. The Linear Oscillatory State-Space Model (LinOSS) is an AI model inspired by these dynamics, using principles of forced harmonic oscillators to ensure stable and efficient processing of long-range dependencies in data sequences [23].

- Advantage for Search Spaces: Oscillatory dynamics can prevent convergence to sharp, sub-optimal local minima by maintaining a healthy exploration-exploitation balance. The rhythmic, wave-like nature of the search process can help in "tunneling" through barriers in the fitness landscape. Furthermore, models like LinOSS demonstrate superior performance in handling long-sequence data, which is analogous to navigating search spaces where the fitness of a candidate depends on long-range interactions within its structure (e.g., protein folding).

- NPDOA Context: The oscillatory dynamics provide a natural mechanism for a coupling disturbance, periodically perturbing the search state to escape local optima without resorting to random, destabilizing jumps.
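One way to picture a periodic, non-random disturbance is a coupling gain that oscillates and slowly decays over iterations. The sketch below is a minimal example under that assumption; the sinusoidal gain, the decay schedule, and the `partner` solution are hypothetical choices rather than the NPDOA update rule.

```python
import math

def oscillatory_disturbance(x, partner, t, amplitude=0.5, period=20, decay=0.01):
    """Move x toward or away from a partner solution with a sinusoidal gain.

    The gain changes sign every half period, so the coupling alternates
    between attraction (gain > 0) and repulsion (gain < 0).
    """
    gain = amplitude * math.exp(-decay * t) * math.sin(2.0 * math.pi * t / period)
    return [xi + gain * (pj - xi) for xi, pj in zip(x, partner)]

unchanged = oscillatory_disturbance([0.0], [1.0], t=0)   # gain is zero at t = 0
attracted = oscillatory_disturbance([0.0], [1.0], t=5)   # peak of the first cycle
repelled = oscillatory_disturbance([0.0], [1.0], t=15)   # trough of the first cycle
```

Because the perturbation is a deterministic function of the iteration counter, its size is bounded by `amplitude` at every step, which is the sense in which it avoids destabilizing jumps.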

Multi-Timescale Processing and Stateful Computation

Concept: The brain maintains localized states at synapses and neurons, allowing it to integrate information over time and form context-aware models of the environment [22]. Coarse-grained brain modeling techniques capture these macroscopic dynamics, and their acceleration on brain-inspired hardware relies on dynamics-aware quantization and multi-timescale simulation strategies [24].

- Advantage for Search Spaces: Search processes can benefit from operating on multiple timescales simultaneously. A fast-timescale process can perform local exploitation, while a slow-timescale process can guide the overall search direction and adapt strategy based on accumulated knowledge. This stateful, "memory-augmented" search avoids recalculating everything from scratch for each new candidate solution.

- NPDOA Context: The strategy definition in NPDOA can incorporate a hierarchical framework where a meta-controller (slow timescale) observes the performance of a base searcher (fast timescale) and adapts its parameters or even its fundamental strategy based on long-term trends.
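The hierarchical idea can be sketched with a two-timescale loop: a base searcher proposes one local move per iteration, and a meta-controller adjusts the step size once per window based on the recent success rate. The success-rate threshold and the multipliers below are assumptions borrowed from classic step-size adaptation heuristics, not values taken from NPDOA.

```python
import random

def sphere(x):
    """Simple convex test objective."""
    return sum(v * v for v in x)

def two_timescale_search(f, x0, iters=400, window=20, step=1.0, seed=0):
    rng = random.Random(seed)
    x, fx = list(x0), f(x0)
    successes = 0
    for t in range(1, iters + 1):
        # Fast timescale: one local perturbation per iteration, kept if it improves.
        cand = [v + step * rng.gauss(0.0, 1.0) for v in x]
        fc = f(cand)
        if fc < fx:
            x, fx = cand, fc
            successes += 1
        # Slow timescale: adapt the step size once per window of iterations.
        if t % window == 0:
            rate = successes / window
            step *= 1.5 if rate > 0.2 else 0.7
            successes = 0
    return x, fx, step

x0 = [3.0, -2.0, 1.0]
best_x, best_f, final_step = two_timescale_search(sphere, x0)
```

The only state carried across windows is the step size and the success counter, which keeps the "memory" of the slow process cheap. A richer meta-controller could switch between whole strategies instead of rescaling one parameter.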

Inhibitory Microcircuits for Local Error Computation

Concept: Biological neural networks feature diverse inhibitory interneurons (e.g., Parvalbumin (PV), Somatostatin (SOM), and Vasoactive Intestinal Peptide (VIP) interneurons) that form microcircuits for local error computation and credit assignment [25]. The VIP-SOM-Pyramidal cell circuit, for instance, creates a disinhibitory pathway that can gate learning and plasticity based on behavioral relevance.

- Advantage for Search Spaces: This provides a blueprint for sophisticated, local credit assignment within a complex model. Instead of relying solely on a global error signal (e.g., overall binding affinity), a brain-inspired search algorithm can compute local errors for different components of a candidate solution (e.g., functional groups in a molecule). This allows for more precise and efficient updates, directing resources specifically to the parts of the solution that require improvement.

- NPDOA Context: The coupling between different search modules can be gated by a similar disinhibitory logic, where a top-down "relevance" signal (akin to a neuromodulator) determines which module is allowed to learn and adapt based on local errors.
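A minimal sketch of gated, local updates follows. Each component of a candidate has its own local error, and a relevance signal decides whether that component is allowed to change. The per-component `targets`, the hard threshold, and the learning rate are hypothetical stand-ins for whatever local error and neuromodulatory signal a real system would define.

```python
def gated_local_update(components, targets, relevance, threshold=0.5, lr=0.5):
    """Update each component from its own local error, only where the gate is open."""
    updated = []
    for value, target, r in zip(components, targets, relevance):
        local_error = target - value          # per-component error, not a global score
        gate = 1.0 if r > threshold else 0.0  # gate opens only for relevant components
        updated.append(value + gate * lr * local_error)
    return updated

result = gated_local_update([0.0, 0.0], [1.0, 1.0], relevance=[0.9, 0.1])
```

Here only the first component moves, because its relevance exceeds the threshold; the second is held fixed even though its local error is just as large.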

Table 1: Summary of Brain-Inspired Principles and Their Search Space Advantages

| Brain-Inspired Principle | Neuroscience Basis | Theoretical Advantage in Complex Search |
|---|---|---|
| Dynamic Sparsity [22] | Sparse neural coding; energy efficiency | Focused resource allocation; reduced computational cost per evaluation |
| Stable Oscillatory Dynamics [23] | Neural oscillations for stable computation | Stable navigation of landscapes; escape from local optima; handling long-range dependencies |
| Multi-Timescale Processing [24] | Localized neural states; coarse-grained modeling | Balanced exploration/exploitation; context-aware strategy adaptation |
| Inhibitory Microcircuits [25] | PV, SOM, VIP interneuron networks | Precise, local credit assignment; gated learning for efficient updates |

Experimental Protocols and Methodologies

Translating these theoretical advantages into practical algorithms requires specific methodological approaches.

Protocol: Implementing Dynamic Sparsity in Molecular Optimization

Objective: To reduce the computational cost of evaluating candidate molecules in a generative model by implementing a dynamic sparsity mechanism.

- Model Architecture: Use a deep generative model (e.g., Graph Neural Network) for molecular generation. The final layers should be over-parameterized.

- Sparsity Mechanism: Incorporate a gating function (e.g., Gumbel-Softmax) preceding the weights of the final layers. This gate produces a sparse, binary mask that is a function of the input molecule's latent representation.

- Training: Jointly train the model weights and the gating function parameters. Apply an L0-style regularization loss on the gate activations to encourage sparsity.

- Evaluation: Compare the computational cost (FLOPs), wall-clock time, and quality (e.g., drug-likeness, target affinity) of generated molecules against a dense baseline model.
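The gating and regularization steps can be illustrated without a deep learning framework. The sketch below samples binary gates with a two-class Gumbel-Softmax (equivalently, logistic noise added to a logit) and computes the expected number of open gates as an L0-style penalty. It covers only the forward pass; how the logits are produced from a molecule's latent representation, and how gradients flow through the relaxed gates during training, are left out and would depend on the chosen framework.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sample_gates(logits, tau=0.5, rng=None, hard=True):
    """Sample one gate per logit using a binary Gumbel-Softmax relaxation."""
    rng = rng or random.Random()
    gates = []
    for a in logits:
        u = min(max(rng.random(), 1e-9), 1.0 - 1e-9)
        # Logistic noise equals the difference of two Gumbel samples.
        noise = math.log(u) - math.log(1.0 - u)
        g = sigmoid((a + noise) / tau)
        gates.append(float(g > 0.5) if hard else g)
    return gates

def expected_l0(logits):
    """Expected number of open gates: P(gate open) = sigmoid(logit)."""
    return sum(sigmoid(a) for a in logits)

logits = [50.0, -50.0]  # assumed gate logits; in the protocol they depend on the input
mask = sample_gates(logits, rng=random.Random(0))
penalty = expected_l0([0.0, 0.0])
```

Adding `expected_l0` (scaled by a regularization weight) to the training loss pushes logits downward, so fewer gates open on average. The weight itself is a hyperparameter that trades sparsity against the quality of generated molecules.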

Protocol: Leveraging Oscillatory Dynamics for Protein Folding Search

Objective: To utilize an oscillatory state-space model to explore the conformational landscape of a protein more effectively.

- Problem Formulation: Frame protein folding as a sequence modeling problem, where the state is the evolving 3D conformation.

- Oscillatory Model: Implement a LinOSS-based model [23] to update the conformational state. The oscillatory dynamics are inherently integrated into the state transition matrix.

- Search Process: The model generates a sequence of conformational states. The "energy" of each state is evaluated using a physics-based or statistical potential function.

- Analysis: Monitor the search trajectory for its ability to find low-energy states and avoid becoming trapped in recurrent, high-energy local minima. Compare the diversity and quality of discovered folds against those found by traditional molecular dynamics simulations or Markov Chain Monte Carlo methods.
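To make the search loop concrete, the toy sketch below drives a forced harmonic oscillator over a one-dimensional double-well energy and records the lowest-energy state visited. It is not the LinOSS discretization of [23] and not a protein model: the energy function, the use of the negative energy gradient as the forcing term, and the semi-implicit Euler integrator are all simplifying assumptions chosen to show how oscillatory state updates can carry a search across an energy barrier.

```python
def oscillator_step(x, v, force, omega=1.0, dt=0.1):
    """One semi-implicit Euler step of x'' = -omega^2 * x + force."""
    v_new = v + dt * (-omega * omega * x + force)
    x_new = x + dt * v_new
    return x_new, v_new

def toy_energy(x):
    # Hypothetical 1D stand-in for a conformational energy:
    # two wells near x = -1 and x = +1, with the left well lower.
    return (x * x - 1.0) ** 2 + 0.3 * x

def toy_energy_grad(x):
    return 4.0 * x * (x * x - 1.0) + 0.3

def oscillatory_search(x0=1.0, steps=500, gain=0.5, dt=0.1):
    x, v = x0, 0.0
    best_x, best_e = x, toy_energy(x)
    for _ in range(steps):
        # Forcing term: push the oscillator down the energy gradient.
        x, v = oscillator_step(x, v, force=-gain * toy_energy_grad(x), dt=dt)
        e = toy_energy(x)
        if e < best_e:
            best_x, best_e = x, e
    return best_x, best_e

best_x, best_e = oscillatory_search()
```

Starting in the shallower right-hand well, the undamped oscillation carries the state over the barrier and through the deeper left-hand well, so the best recorded state lies at negative x. A realistic protocol would replace the toy energy with the potential function named in the Search Process step and operate on full 3D conformations.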

Visualization of Core Concepts

The following diagrams illustrate the key brain-inspired concepts and their proposed implementation in search algorithms.

[Figure: Dynamic Sparsity in a Neural Network]

[Figure: Cortical Microcircuit for Gated Learning]

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Computational Tools and Frameworks for Brain-Inspired Search

| Tool/Reagent | Function / Role | Relevance to NPDOA Research |
|---|---|---|
| State-Space Models (e.g., LinOSS) [23] | Provides a framework for building stable, oscillatory dynamics into sequence models. | Core engine for implementing oscillatory search dynamics and handling long-range dependencies in candidate solutions. |
| Brain-Inspired Computing Chips (e.g., Tianjic) [24] | Specialized hardware that offers high parallelism and efficiency for running sparse, neural algorithms. | Platform for ultra-fast evaluation of brain-inspired search algorithms, potentially offering orders-of-magnitude acceleration. |
| Dynamics-Aware Quantization Framework [24] | A method for converting high-precision models to low-precision (integer) without losing key dynamical characteristics. | Enables efficient deployment of complex search models on resource-constrained hardware, crucial for large-scale simulations. |
| Inhibitory Microcircuit Models (PV, SOM, VIP) [25] | Computational models of cortical interneurons that can be integrated into ANNs. | Building blocks for creating sophisticated credit assignment and gating mechanisms within a search algorithm's architecture. |
| Metaheuristic Optimization Algorithms [26] | A class of high-level search procedures (e.g., population-based methods). | Provides the outer loop for the NPDOA strategy, managing a population of candidates and integrating brain-inspired principles for candidate evaluation and update. |

The theoretical framework outlined here posits that brain-inspired dynamics offer a versatile set of mechanisms for confronting the inherent difficulties of complex search spaces. The principles of dynamic sparsity, oscillatory stability, multi-timescale statefulness, and microcircuit-based credit assignment collectively address the critical bottlenecks of computational cost, convergence to local optima, and inefficient resource allocation. Framing these advances within research on defining the NPDOA coupling disturbance strategy provides a cohesive narrative for the next generation of optimization algorithms in scientific domains such as drug discovery. The experimental protocols and tools detailed above offer a concrete pathway for researchers to begin validating these theoretical advantages and to explore more efficiently the vast combinatorial landscapes found in these domains.

Implementing the Coupling Disturbance Strategy: Algorithm Design and Practical Applications

In computational science, coupling disturbance refers to the phenomenon where the state or output of one system component interferes with, disrupts, or modifies the behavior of another connected component. This concept is fundamental to understanding complex systems across domains ranging from neural dynamics to engineering systems. The modeling of coupling disturbance enables researchers to simulate how interconnected systems respond to internal and external perturbations, providing critical insights for system optimization, control, and prediction. Within the broader context of Neural Population Dynamics Optimization Algorithm (NPDOA) research, understanding coupling disturbance is particularly valuable for developing robust optimization strategies that can navigate complex, non-stationary solution spaces. The NPDOA itself, which models the dynamics of neural populations during cognitive activities, provides a bio-inspired framework for solving optimization problems, where coupling mechanisms between neural elements directly influence the algorithm's performance and convergence properties [17].

Contemporary research has demonstrated that properly managed coupling disturbance can be harnessed for beneficial purposes. For instance, in stochastic resonance systems, introducing controlled coupling between system components can enhance weak signal detection in noisy environments—a principle successfully applied in ship radiated noise detection systems. These hybrid multistable coupled asymmetric stochastic resonance (HMCASR) systems leverage coupling disturbance to improve signal-to-noise ratio gains through synergistic effects between connected components [27]. Similarly, in neural systems, the coupling between oscillatory phases and behavioral outcomes represents a fundamental mechanism underlying cognitive processes, with different coupling modes (1:1, 2:1, etc.) offering distinct computational advantages for information processing [28].

Table 1: Key Applications of Coupling Disturbance Modeling

| Application Domain | Type of Coupling | Computational Purpose |
|---|---|---|
| Neural Oscillations & Behavior | Phase-outcome coupling | Relating brain rhythm phases to behavioral decisions [28] |
| Ship Radiated Noise Detection | Multistable potential coupling | Enhancing weak signal detection in noisy environments [27] |
| Metaheuristic Optimization | Neural population coupling | Balancing exploration/exploitation in NPDOA [17] |
| Quadcopter Dynamics | Physical parameter coupling | Identifying nonlinear system interactions [29] |

Mathematical Frameworks for Coupling Disturbance

Langevin Equation with Coupled Potentials

Many coupling disturbance models in physical systems start from the Langevin equation, which describes the motion of particles under both deterministic and random forces. For coupled systems, this equation is extended with interactive potential functions and a coupling term C(x, x_j) between the primary system variable x and the other system variables x_j.

In hybrid multistable coupled asymmetric stochastic resonance (HMCASR) systems, researchers have developed coupling models that combine multiple potential functions to improve signal detection. These systems introduce coupling between a control system and a controlled system, creating a network structure whose dynamics facilitate two-dimensional transitions of particles between potential wells [27].
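A minimal numerical sketch of a coupled Langevin system is given below. The specific form is an assumption and not the HMCASR model of [27]: two overdamped particles, each in the classical symmetric bistable potential U(x) = -a x^2/2 + b x^4/4, linked by a linear coupling term C(x, y) = k (y - x), driven by independent Gaussian white noise of intensity D, and integrated with the Euler-Maruyama scheme.

```python
import math
import random

def simulate_coupled_langevin(steps=2000, dt=0.01, a=1.0, b=1.0, k=0.5, D=0.1,
                              x0=1.0, y0=-1.0, seed=0):
    """Euler-Maruyama integration of two linearly coupled overdamped particles.

    Assumed dynamics (illustrative only):
        dx/dt = -U'(x) + k * (y - x) + sqrt(2 D) * xi_x(t)
        dy/dt = -U'(y) + k * (x - y) + sqrt(2 D) * xi_y(t)
    with U(x) = -a x^2 / 2 + b x^4 / 4.
    """
    rng = random.Random(seed)
    x, y = x0, y0
    noise_scale = math.sqrt(2.0 * D * dt)
    trajectory = []
    for _ in range(steps):
        # Deterministic drift: bistable restoring force plus the coupling term.
        drift_x = a * x - b * x ** 3 + k * (y - x)
        drift_y = a * y - b * y ** 3 + k * (x - y)
        x, y = (x + drift_x * dt + noise_scale * rng.gauss(0.0, 1.0),
                y + drift_y * dt + noise_scale * rng.gauss(0.0, 1.0))
        trajectory.append((x, y))
    return trajectory

noisy = simulate_coupled_langevin()
uncoupled_noiseless = simulate_coupled_langevin(steps=100, k=0.0, D=0.0)
```

With `k = 0` and `D = 0` each particle stays at the bottom of its well, which is a quick sanity check on the drift term. Increasing `k` makes a transition of one particle pull the other toward the same well, which is the kind of cooperative well-hopping the coupled systems described above rely on.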

The potential function U(x) itself can be engineered to create specific coupling behaviors. Recent work has moved beyond classical symmetric bistable potentials to multistable asymmetric potential functions that better represent real-world system interactions. For instance, multi-parameter adjustable coefficient terms and Gaussian potential models make it possible to build potential landscapes with precisely controlled coupling disturbances between states.

In the multistable asymmetric potential function of [27], the parameters a, b, c, d, and e are tuned to create the desired coupling behavior between system states, and the cubic and Gaussian terms introduce controlled asymmetries that influence how disturbance propagates through coupled components [27].

Neural Phase-Outcome Coupling Models

In neural systems, coupling disturbance is frequently modeled through phase-outcome relationships, where the phase of neural oscillations couples with behavioral outcomes. Four primary statistical approaches have emerged for quantifying this form of coupling disturbance:

- Phase Opposition Sum (POS): Measures the extent to which phases of different outcome classes cluster at opposing portions of a cycle, based on inter-trial phase coherence [28].