Breaking the Channel Barrier: Advanced Strategies for High-Accuracy BCI Classification with Limited Electrodes

Abstract

This article explores the critical challenge of improving Brain-Computer Interface (BCI) classification accuracy while using a limited number of EEG channels—a key objective for developing portable, efficient, and clinically viable systems. We synthesize the latest research, covering foundational principles of channel selection, innovative methodological approaches like hybrid statistical-AI frameworks and the novel integration of EOG signals, and strategies for troubleshooting computational and generalization issues. A dedicated comparative analysis validates these techniques against state-of-the-art machine learning models, providing researchers and drug development professionals with a comprehensive roadmap for optimizing BCI performance in biomedical applications, from neurorehabilitation to assistive communication.

The Core Challenge: Why Channel Reduction is Critical for Next-Generation BCI

This technical support center provides troubleshooting guides and FAQs for researchers working on channel reduction in Brain-Computer Interface (BCI) systems for motor imagery (MI) classification. The content is designed to help you navigate specific challenges in optimizing system performance with limited channels.

Frequently Asked Questions

What is the core trade-off in reducing EEG channels in a BCI system? Reducing the number of EEG channels decreases system computational complexity, setup time, and potential for overfitting, which enhances practicality for real-world use. However, removing too many channels or the wrong channels can also discard valuable neural information, potentially leading to a decline in classification accuracy. The key is to identify and retain the most informative, non-redundant channels for the specific MI task [1] [2].

Why does my model's performance drop significantly after I reduce channels? A significant performance drop often indicates that channels critical for classifying the specific motor imagery task were removed. This can happen if the channel selection method is not tailored to the subject or the specific MI paradigms (e.g., hand vs. foot movement). To address this, implement subject-specific channel selection algorithms and validate that the selected channel subset retains discriminative information by checking its performance on a validation set [1] [3].

Can EOG channels really improve my MI classification accuracy? Yes. Although Electrooculogram (EOG) channels are often treated only as a source of noise, they can provide useful information for MI signal classification. One study demonstrated that combining just 3 EEG channels with 3 EOG channels (6 total) achieved 83% accuracy on a 4-class MI dataset, showcasing the effectiveness of a hybrid approach [3].

How can I select the most relevant channels without a brute-force approach? Filter-based methods (e.g., statistical tests, divergence measures) and wrapper-based methods are common. A novel hybrid approach combines statistical t-tests with a Bonferroni correction to identify statistically significant channels, discarding those with correlation coefficients below 0.5 to minimize redundancy. This method has been shown to achieve accuracies above 90% across subjects [1] [4].
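The filter-based selection described above can be sketched as follows. The sketch assumes a two-class problem in which each channel has already been reduced to one scalar feature per trial (for example, log-variance of the band-passed signal). The text does not say what each channel's correlation coefficient is computed against. This sketch assumes the absolute point-biserial correlation between the channel feature and the class label, which is an assumption rather than the cited method. The function name `select_channels` is hypothetical.

```python
import numpy as np
from scipy.stats import ttest_ind


def select_channels(features, labels, alpha=0.05, corr_threshold=0.5):
    """Filter-based channel selection (illustrative sketch, hypothetical helper).

    features: (n_trials, n_channels), one scalar feature per channel per trial
    labels:   (n_trials,) array of 0/1 class labels
    Returns indices of channels passing both the Bonferroni-corrected t-test
    and the correlation threshold.
    """
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    n_channels = features.shape[1]

    # Two-sample t-test for every channel (columns are tested independently).
    _, p_values = ttest_ind(features[labels == 0], features[labels == 1], axis=0)

    # Bonferroni correction: scale by the number of tests, capped at 1.
    p_corrected = np.minimum(p_values * n_channels, 1.0)
    significant = p_corrected < alpha

    # ASSUMPTION: correlation is computed between each channel's feature and
    # the class label (point-biserial r); the source does not name the reference.
    y = labels - labels.mean()
    x = features - features.mean(axis=0)
    r = (x * y[:, None]).sum(axis=0) / (
        np.sqrt((x ** 2).sum(axis=0)) * np.sqrt((y ** 2).sum())
    )
    strong = np.abs(r) >= corr_threshold

    return np.flatnonzero(significant & strong)


# Synthetic example: only channel 3 carries class information.
rng = np.random.default_rng(0)
labels = np.repeat([0, 1], 50)
features = rng.standard_normal((100, 10))
features[:, 3] += 2.0 * labels
print(select_channels(features, labels))
```

Requiring both conditions is a deliberately conservative choice. A channel must show a corrected significant class difference and a sizeable effect.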

Troubleshooting Guides

Issue: Inconsistent Classification Accuracy Across Subjects

Problem: Your channel reduction method works well for one subject but fails for another, leading to inconsistent results.

Solution: This is often due to high subject-specific variability in EEG signals.

- Recommended Action: Move from a one-size-fits-all channel set to a subject-dependent channel selection protocol.

- Methodology: For each subject, use a filtering technique like the Rayleigh coefficient map or a statistical t-test with Bonferroni correction to identify the most reactive channels for that individual before training the classifier [1] [3].

Issue: System Becomes Impractical Due to High Computational Load

Problem: The computational cost of feature extraction and model training remains too high even after channel reduction, slowing down system performance.

Solution: Optimize the entire pipeline by integrating efficient channel selection with a lightweight deep learning model.

- Recommended Action: Implement a channel reduction technique that is computationally inexpensive itself, such as a filter-based method, and pair it with a deep learning model designed for efficiency, like one using depthwise-separable convolutions [1] [3].

- Methodology: The Deep Learning Regularized Common Spatial Pattern with Neural Network (DLRCSPNN) framework is one such approach. It uses a statistical-based channel reduction followed by a regularized CSP for feature extraction and a neural network for classification, demonstrating high accuracy with reduced channels [1] [4].

Experimental Protocols & Performance Data

Protocol 1: Hybrid Statistical Channel Selection with DLRCSPNN

This methodology is designed for high-accuracy MI classification with a reduced channel set [1] [4].

- Data Acquisition: Use publicly available datasets like BCI Competition III Dataset IVa or BCI Competition IV Dataset IIa.

- Channel Selection:

- Perform a statistical t-test on each channel's data between the different MI classes.

- Apply a Bonferroni correction to adjust the p-values and control for false positives.

- Calculate correlation coefficients and exclude channels with coefficients below 0.5, retaining only statistically significant and non-redundant channels.

- Feature Extraction: Apply a Deep Learning Regularized Common Spatial Pattern (DLRCSP) to the selected channels. This technique shrinks the covariance matrix toward the identity matrix, with the γ regularization parameter automatically determined using Ledoit and Wolf’s method for robustness.

- Classification: Feed the extracted features into a Neural Network (NN) or Recurrent Neural Network (RNN) for classification.

- Accuracy: Achieved above 90% for every subject across all tested datasets.

- Improvement: Outperformed seven existing machine learning algorithms, with accuracy gains of 3.27% to 42.53% on individual subjects.
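The feature-extraction step can be illustrated with a minimal regularized CSP sketch. This is not the authors' DLRCSP implementation. The sketch makes four choices:

- It estimates a Ledoit-Wolf shrinkage covariance for each class. The shrinkage target is the scaled identity μI with μ = tr(S)/p, the standard Ledoit-Wolf target.
- It solves the CSP generalized eigenproblem with `scipy.linalg.eigh`.
- It returns normalized log-variance features.
- It pools all trials of a class before estimating the covariance, which is a simplification.

The function names are hypothetical.

```python
import numpy as np
from scipy.linalg import eigh


def ledoit_wolf_cov(x):
    """Ledoit-Wolf shrinkage covariance for x of shape (n_samples, n_channels).

    Shrinks the sample covariance S toward mu * I (mu = trace(S) / p) with a
    data-driven weight gamma. Returns (shrunk_covariance, gamma).
    """
    x = x - x.mean(axis=0)
    n, p = x.shape
    s = x.T @ x / n
    target = (np.trace(s) / p) * np.eye(p)
    d2 = np.sum((s - target) ** 2)
    # Mean squared distance between each rank-one term x_k x_k^T and S,
    # using ||x x^T - S||^2 = (x^T x)^2 - 2 x^T S x + ||S||^2.
    sq_norms = np.sum(x ** 2, axis=1)
    quad = np.einsum("ij,jk,ik->i", x, s, x)
    b2_bar = np.sum(sq_norms ** 2 - 2.0 * quad + np.sum(s ** 2)) / n ** 2
    gamma = 0.0 if d2 == 0 else min(b2_bar, d2) / d2
    return gamma * target + (1.0 - gamma) * s, gamma


def regularized_csp(trials_a, trials_b, n_pairs=2):
    """CSP filters from two classes of trials, each (n_trials, n_channels, n_samples)."""

    def class_cov(trials):
        # Simplification: pool every time sample of the class before shrinkage.
        pooled = np.concatenate([t.T for t in trials], axis=0)
        return ledoit_wolf_cov(pooled)[0]

    cov_a, cov_b = class_cov(trials_a), class_cov(trials_b)
    # Solve cov_a w = lambda (cov_a + cov_b) w; eigenvalues come back ascending.
    _, vecs = eigh(cov_a, cov_a + cov_b)
    # Keep filters from both ends of the spectrum (most discriminative).
    return np.hstack([vecs[:, :n_pairs], vecs[:, -n_pairs:]])


def csp_features(trials, filters):
    """Normalized log-variance of spatially filtered trials."""
    projected = np.einsum("ck,tcs->tks", filters, trials)
    var = projected.var(axis=2)
    return np.log(var / var.sum(axis=1, keepdims=True))


# Synthetic example: class A is strong on channel 0, class B on channel 1.
rng = np.random.default_rng(1)
a = rng.standard_normal((20, 6, 200))
a[:, 0] *= 3.0
b = rng.standard_normal((20, 6, 200))
b[:, 1] *= 3.0
W = regularized_csp(a, b, n_pairs=2)
print(csp_features(a, W).shape)
```

Shrinkage matters most when the number of samples is small relative to the number of channels. In that regime the raw sample covariance is poorly conditioned, and the eigenproblem becomes unstable.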

Protocol 2: Leveraging EOG Channels for Multi-Class MI

This protocol is particularly useful for multi-class MI problems where performance typically drops with a low number of EEG channels [3].

- Data Acquisition: Use a multi-class dataset such as BCI Competition IV Dataset IIa (4-class) or the Weibo dataset (7-class).

- Channel Strategy: Deliberately use a very small set of EEG channels (e.g., 3) and combine them with EOG channels (e.g., 2-3).

- Model Architecture: Employ a deep learning model built with multiple 1D convolution blocks and depthwise-separable convolutions (e.g., an EEGNet variant) to optimize classification from few channels.

- Training & Validation: Train the model end-to-end and validate on a separate test set.
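The efficiency argument for depthwise-separable convolutions can be made concrete with a single NumPy layer. The layer first filters each input channel with its own temporal kernel, then mixes channels with a 1×1 (pointwise) convolution. This is a generic, MobileNet-style 1D separable layer, not the exact EEGNet layer layout. The 6-channel input (3 EEG + 3 EOG) and the layer sizes are hypothetical.

```python
import numpy as np


def depthwise_separable_conv1d(x, depthwise, pointwise):
    """Depthwise-separable 1D convolution ('valid', stride 1, no bias).

    x:         (in_channels, n_samples), e.g. 3 EEG + 3 EOG rows
    depthwise: (in_channels, kernel_len), one temporal kernel per input channel
    pointwise: (out_channels, in_channels), 1x1 convolution mixing channels
    """
    # Depthwise step: each channel is filtered only by its own kernel.
    # np.correlate matches the cross-correlation used by deep-learning "conv" layers.
    filtered = np.stack(
        [np.correlate(x[i], depthwise[i], mode="valid") for i in range(x.shape[0])]
    )
    # Pointwise step: weighted sum across channels at every time step.
    return pointwise @ filtered


def conv1d_param_counts(in_channels, out_channels, kernel_len):
    """Weight counts (no bias): standard conv vs. depthwise-separable conv."""
    standard = out_channels * in_channels * kernel_len
    separable = in_channels * kernel_len + out_channels * in_channels
    return standard, separable


# Hypothetical input: 6 channels, 4 s at 250 Hz, 16 output feature maps, kernel of 64.
rng = np.random.default_rng(0)
x = rng.standard_normal((6, 1000))
y = depthwise_separable_conv1d(x, rng.standard_normal((6, 64)), rng.standard_normal((16, 6)))
print(y.shape)                         # (16, 937)
print(conv1d_param_counts(6, 16, 64))  # (6144, 480)
```

The separable layer is a constrained standard convolution. Its effective kernel for output o and input i is `pointwise[o, i] * depthwise[i]`. That constraint gives roughly a 13× reduction in weights here, which helps when training data are scarce.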

Reported Performance [3]:

- BCI Competition IV IIa (4-class): 83% accuracy using 3 EEG + 3 EOG channels (6 total).

- Weibo Dataset (7-class): 61% accuracy using 3 EEG + 2 EOG channels (5 total).

Performance Comparison Table

The following table summarizes the quantitative results from different channel reduction approaches as reported in the literature.

| Method / Study | Channel Selection / Reduction Approach | Dataset(s) | Key Result |
|---|---|---|---|
| Hybrid Statistical (DLRCSPNN) [1] [4] | t-test with Bonferroni correction | BCI Competition III IVa, BCI Competition IV | Accuracy >90% per subject; 3.27% to 42.53% improvement over baselines. |
| EOG Hybrid Model [3] | Fixed small set of EEG and EOG channels | BCI Competition IV IIa (4-class) | 83% accuracy with 6 total channels. |
| EOG Hybrid Model [3] | Fixed small set of EEG and EOG channels | Weibo (7-class) | 61% accuracy with 5 total channels. |
| Filter-Based (ReliefF) & CSP [2] | ReliefF algorithm | BCI Competition III IVa | Major reduction from 118 to 10 electrodes while maintaining performance. |

| Filter-Based (ReliefF) & CSP [2] | ReliefF algorithm | BCI Competition III IVa | Major reduction from 118 to 10 electrodes while maintaining performance. |

The Scientist's Toolkit

This table details key reagents, datasets, and algorithms essential for experimenting in EEG channel reduction for BCI.

| Item Name | Type | Function / Application |
|---|---|---|
| BCI Competition Datasets (e.g., III-IVa, IV-IIa) [1] [3] | Data | Publicly available benchmark datasets for validating and comparing MI-BCI algorithms. |
| Statistical t-test with Bonferroni Correction [1] [4] | Algorithm | A filter-based channel selection method to identify statistically significant channels while controlling for multiple comparisons. |
| Deep Learning Regularized CSP (DLRCSP) [1] [4] | Algorithm | A robust feature extraction technique that regularizes the covariance matrix to improve generalization from limited channels. |
| EEGNet [3] | Algorithm | A compact deep learning architecture using depthwise-separable convolutions, well suited to training with a low number of channels. |
| ReliefF Algorithm [2] | Algorithm | A filter-based feature (channel) selection method that estimates the quality of features based on how well their values distinguish between instances that are near to each other. |

Troubleshooting Guides

Troubleshooting Guide: Managing Data Redundancy and Noise

Problem: My high-density EEG data contains excessive noise and redundant channels, which seems to be harming my BCI classifier's performance instead of improving it.

Explanation: A higher number of EEG electrodes does not always guarantee better classification performance. Irrelevant channels can introduce noise and redundant information, which reduces accuracy and slows system performance [1] [4]. There is often an optimal "sweet spot" for electrode count that provides sufficient spatial information without introducing detrimental redundancy [5].

Solution: Implement a statistical channel selection method to identify and retain only the most task-relevant EEG channels.

Steps:

- Perform Initial Preprocessing: Apply a band-pass filter (e.g., 8–13 Hz for mu rhythm relevant to motor imagery) to your raw EEG data [5].

- Calculate Channel Significance: Conduct a statistical test (e.g., t-test) to compare signal amplitudes between different task conditions (e.g., right hand vs. right foot motor imagery) for each channel [4].

- Apply Multiple Comparison Correction: Use a Bonferroni correction to adjust significance levels, controlling for false positives due to the large number of channels [1] [4].

- Set a Correlation Threshold: Calculate correlation coefficients between channels. Exclude channels with correlation coefficients below 0.5 to ensure you retain only statistically significant, non-redundant channels [4].

- Validate Selected Channels: Train your BCI classifier (e.g., SVM or Neural Network) using only the selected channel subset and evaluate accuracy on a validation set [5] [1].
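The band-pass step at the start of this list can be sketched with SciPy. The sketch uses a zero-phase Butterworth filter in second-order-section form. The filter order (4) and the function name are assumptions, not values from the cited studies.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def bandpass_mu(eeg, fs, low=8.0, high=13.0, order=4):
    """Zero-phase Butterworth band-pass along the last (time) axis.

    eeg: (..., n_samples), e.g. (n_channels, n_samples) or (n_trials, n_channels, n_samples)
    fs:  sampling rate in Hz
    """
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    # Forward-backward filtering removes phase distortion (and squares the gain).
    return sosfiltfilt(sos, eeg, axis=-1)


fs = 250.0
t = np.arange(0, 4.0, 1.0 / fs)
# Two synthetic "channels": a 10 Hz (mu-band) tone and a 40 Hz tone.
eeg = np.vstack([np.sin(2 * np.pi * 10 * t), np.sin(2 * np.pi * 40 * t)])
filtered = bandpass_mu(eeg, fs)
mid = slice(250, 750)  # skip the first and last second to avoid edge transients
print(np.sqrt(np.mean(filtered[:, mid] ** 2, axis=1)))
```

The 10 Hz tone keeps an RMS close to that of the unfiltered sine (about 0.707). The 40 Hz tone is almost entirely removed.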

Troubleshooting Guide: Addressing Computational Bottlenecks

Problem: Processing my high-density EEG dataset (e.g., 118 channels) is computationally intensive, slowing down my analysis and model development cycle.

Explanation: High-density EEG systems generate large volumes of data that are computationally demanding to process, especially for source localization and advanced machine learning algorithms [5].

Solution: Optimize your computational workflow by using efficient feature extraction and by leveraging source imaging to reduce dimensionality.

Steps:

- Utilize Source Imaging: Instead of working with all scalp-level signals, map EEG signals to cortical source space. This can help mitigate the low spatial resolution of scalp EEG and provide a more direct measure of brain activity with fewer virtual sources [5].

- Implement Regularized CSP: For motor imagery tasks, use Regularized Common Spatial Patterns (RCSP) for feature extraction. The regularization parameter can be determined automatically using Ledoit and Wolf's method to improve stability and efficiency [1].

- Adopt a Hybrid Deep Learning Framework: Employ a framework like Deep Learning Regularized Common Spatial Pattern with Neural Network (DLRCSPNN), which is designed to handle high-dimensional EEG data efficiently [4].

- Benchmark Performance: Compare the processing time and classification accuracy of your optimized pipeline against the full-channel approach. The goal is to achieve comparable or better accuracy with significantly reduced computation [1].

Frequently Asked Questions (FAQs)

What is the optimal number of EEG electrodes for a motor imagery BCI study?

The optimal number is task-dependent and not simply "the more, the better." Experimental evidence from motor imagery studies indicates a point of diminishing returns.

Evidence: One study systematically tested configurations of 19, 30, 61, and 118 electrodes. It found that 61 channels yielded the best classification accuracy (84.73%), outperforming the 118-channel setup (83.95%) [5]. This suggests that, for MI classification, an intermediate electrode count can outperform the densest montage.

The table below summarizes key findings on electrode count versus performance:

| Number of Electrodes | Key Finding | Research Context |
|---|---|---|
| 19 | Lower classification accuracy compared to higher-density setups [5]. | Motor Imagery BCI |
| 30–61 | Optimal range for best classification accuracy (e.g., 84.70%–84.73%) [5]. | Motor Imagery BCI |
| 118 | Results better than 19 channels but worse than 30/61 channels; potential redundancy [5]. | Motor Imagery BCI |
| < 32 | Source localization success decreases significantly [5]. | Epileptic Foci Localization |
| 31–63 | Global-level network analyses can be reasonably accurate [6]. | Infant Cortical Networks |
| 124+ | Essential for accurate characterization of phase correlations at higher frequencies [6]. | Infant Cortical Networks |

How can I select the most relevant channels from a high-density array to improve accuracy?

Strategic channel reduction can enhance BCI performance by removing noise and redundancy.

Solution: A hybrid approach combining statistical testing with a Bonferroni correction has been shown effective [4]. This method excludes channels with low correlation coefficients (<0.5), retaining only statistically significant, non-redundant channels. Research demonstrates this approach can achieve classification accuracies above 90% across subjects by focusing on the most informative signals [4].

My high-density EEG setup is prone to artifacts. How does this impact BCI classification?

Artifacts pose a significant challenge because EEG is susceptible to physiological artifacts (e.g., eye blinks, muscle activity) that reduce signal quality [5]. In high-density systems, artifacts can spread across multiple channels, leading to misinterpretation of brain activity and degraded classifier performance.

Mitigation Strategies:

- Preprocessing: Implement advanced preprocessing pipelines using techniques like Empirical Mode Decomposition (EMD) and Continuous Wavelet Transform (CWT) for effective noise isolation [7].

- Source Imaging: Converting scalp EEG to cortical sources can help mitigate the impact of certain artifacts [5].

- Real-Time Monitoring: Use acquisition software to monitor per-channel impedance and signal quality in real-time to identify problematic channels during setup [8].

Experimental Protocols & Workflows

Detailed Protocol: Channel Reduction for Enhanced MI Classification

This protocol outlines the methodology for applying a statistical channel reduction technique to improve motor imagery (MI) task classification [1] [4].

1. EEG Data Acquisition:

- Utilize a publicly available dataset (e.g., BCI Competition III Dataset IVa) containing MI data (e.g., right hand vs. right foot movements) recorded with high-density electrodes (e.g., 118 channels) [5] [4].

- Ensure data is partitioned into training and testing sets according to the standard dataset specifications.

2. Pre-processing:

- Apply a band-pass filter to the raw EEG data, typically in the 8–13 Hz (mu rhythm) range, as significant changes occur in these rhythms during motor imagery [5].

3. Channel Selection (Statistical t-test with Bonferroni Correction):

- For each EEG channel and each subject, perform a statistical test (t-test) to calculate the significance of the difference in signal features between the two MI task conditions.

- Apply the Bonferroni correction to the obtained p-values to account for multiple comparisons across all channels.

- Reject the null hypothesis for channels with corrected p-values below the significance level (e.g., p < 0.05).

- Additionally, discard channels with correlation coefficients below 0.5 to ensure only statistically significant, non-redundant channels are retained [4].

4. Feature Extraction:

- On the selected subset of channels, perform feature extraction using a method such as Regularized Common Spatial Patterns (RCSP) [1].

5. Classification:

- Train a classifier, such as a Neural Network (NN) or Support Vector Machine (SVM), using the extracted features from the reduced channel set [5] [4].

- Evaluate classification performance (e.g., accuracy, precision, recall) on the held-out test set.
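Written out, the Bonferroni rule in step 3 compares each channel's p-value p_c against the significance level α divided by the number of channels tested, m:

```latex
\tilde{p}_c = \min\left(1,\; m\,p_c\right), \qquad
\text{retain channel } c \iff \tilde{p}_c < \alpha \iff p_c < \frac{\alpha}{m}
```

For example, suppose all 118 channels of BCI Competition III IVa are tested at α = 0.05. A channel then passes this step only if p_c < 0.05/118 ≈ 4.24 × 10⁻⁴, before the correlation filter is applied.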

Detailed Protocol: Assessing Electrode Density on Source Estimation

This protocol describes the methodology for evaluating how different electrode counts affect source estimation accuracy in MI-BCI studies [5].

1. Data Preparation:

- Start with a high-density EEG dataset (e.g., 118 channels) recorded during a motor imagery task.

2. Electrode Sub-sampling:

- Create several lower-density configurations by sub-sampling electrodes from the original high-density setup. Typical configurations to simulate are 19, 30, and 61 electrodes [5].

3. Cortical Source Signal Calculation:

- For each electrode configuration, use software like Brainstorm to compute cortical source signals. This involves solving the EEG inverse problem to map scalp potentials to activity on the cortical surface [5].

4. Feature Extraction and Classification:

- Use a method like Common Spatial Patterns (CSP) as a spatial filter to extract features from the cortical signals that are relevant to the MI task.

- Train an SVM classifier to distinguish between different MI tasks (e.g., right hand vs. right foot) for each electrode configuration [5].

5. Performance Comparison:

- Compare the classification accuracy across the different electrode configurations to identify the optimal number of electrodes for the specific MI paradigm [5].

Research Reagent Solutions

The following table details key materials and computational tools used in advanced high-density EEG research for BCI applications.

| Item Name | Function / Application | Explanation |
|---|---|---|
| BCI Competition Datasets | Benchmark Data | Publicly available, well-validated EEG datasets (e.g., BCI Comp III IVa, BCI Comp IV) for method development and comparison [1] [4]. |
| Brainstorm Software | EEG Source Imaging | Open-source software tool used for cortical source signal calculation and solving the EEG inverse problem [5]. |
| Common Spatial Patterns (CSP) | Feature Extraction | A spatial filtering algorithm used to enhance discriminability between two classes of EEG signals (e.g., different motor imagery tasks) [5] [7]. |
| Deep Learning Regularized CSP (DLRCSP) | Advanced Feature Extraction | A regularized version of CSP integrated with deep learning frameworks to improve robustness and feature quality [1]. |
| Support Vector Machine (SVM) | Classification | A traditional machine learning algorithm often used to classify extracted EEG features due to its effectiveness with high-dimensional data [5]. |
| Adaptive Deep Belief Network (ADBN) | Classification | A deep learning model that can be optimized with algorithms like Far and Near Optimization (FNO) for high-precision EEG signal classification [7]. |
| Empirical Mode Decomposition (EMD) | Preprocessing / Denoising | A technique for decomposing signals into intrinsic mode functions, useful for isolating noise from neural data in non-stationary signals like EEG [7]. |

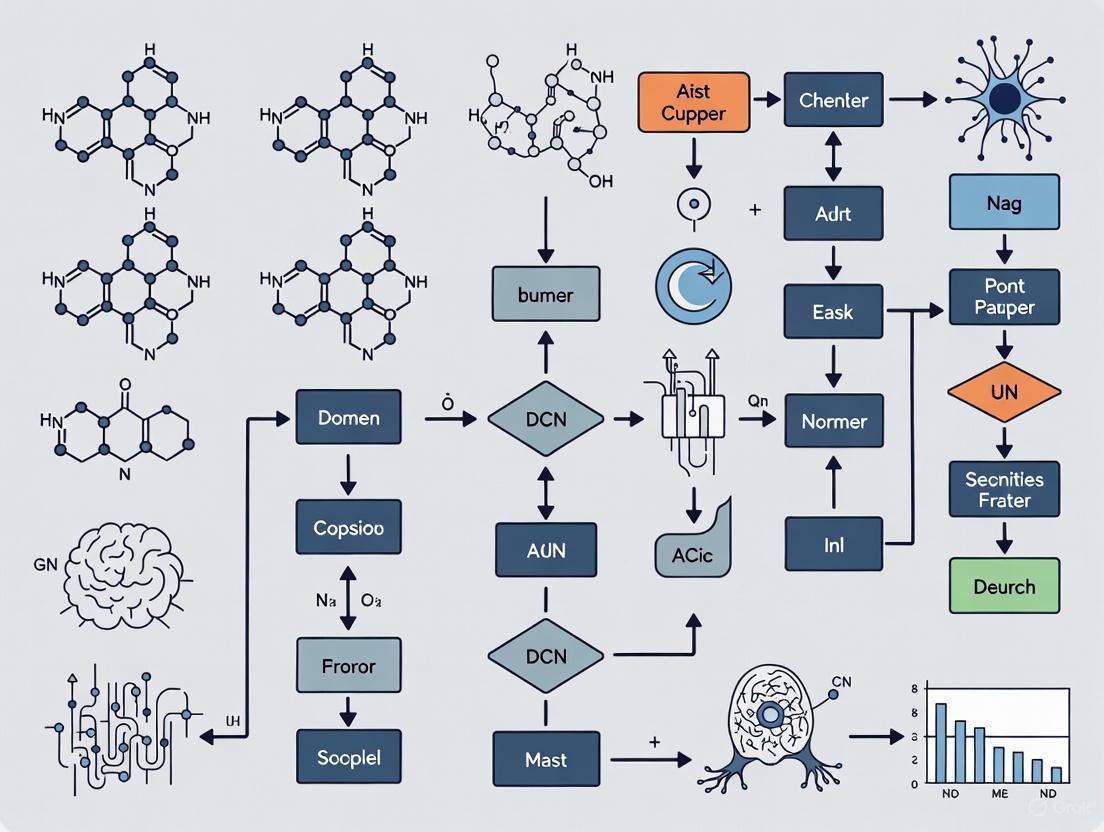

Workflow and Signaling Diagrams

Channel Selection and Classification Workflow

Experimental Design Relationships

Frequently Asked Questions

Q: Why should I reduce the number of EEG channels in my Motor Imagery BCI system?

Q: My classification accuracy drops when I use fewer channels. What can I do?

Q: What is a typical performance benchmark for a limited-channel system?

- A: Performance varies with the number of classes. For a 4-class MI task (e.g., BCI Competition IV IIa), an accuracy of 83% using only 3 EEG and 3 EOG channels (6 total) has been achieved. For a more complex 7-class task, an accuracy of 61% using 3 EEG and 2 EOG channels (5 total) has been reported [9] [3]. Other studies on binary classification have achieved accuracies above 90% with aggressive channel reduction [1] [4].

Q: My system is picking up strong 50 Hz line noise. What is the likely cause?

- A: A high R² value at 50 Hz (or 60 Hz, depending on the local mains frequency) frequently indicates a poor ground or reference connection. Ensure your ground electrode (e.g., at FPz) and reference electrodes (e.g., ear clips) are properly applied with good skin contact [10].
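As a quick offline complement to the acquisition software's R² display, you can estimate each channel's power spectrum with Welch's method. Then compare the power near the mains frequency with the power in neighbouring bands. This is a simpler, separate check, not the R² statistic itself. The function name, band widths, and the threshold of 10 are hypothetical choices.

```python
import numpy as np
from scipy.signal import welch


def line_noise_ratio(eeg, fs, line_freq=50.0, half_width=1.0, flank=(5.0, 10.0)):
    """Per-channel ratio of mean PSD near the mains frequency to mean PSD in flanking bands.

    eeg: (n_channels, n_samples). Ratios far above 1 suggest strong line interference.
    """
    freqs, psd = welch(eeg, fs=fs, nperseg=int(2 * fs), axis=-1)  # 0.5 Hz resolution
    dist = np.abs(freqs - line_freq)
    peak = dist <= half_width                         # e.g. 49-51 Hz
    side = (dist >= flank[0]) & (dist <= flank[1])    # e.g. 40-45 Hz and 55-60 Hz
    return psd[:, peak].mean(axis=1) / psd[:, side].mean(axis=1)


fs = 250.0
rng = np.random.default_rng(0)
t = np.arange(0, 10.0, 1.0 / fs)
eeg = rng.standard_normal((3, t.size))
eeg[2] += 5.0 * np.sin(2 * np.pi * 50.0 * t)  # simulate a poorly grounded channel
ratios = line_noise_ratio(eeg, fs)
print(np.flatnonzero(ratios > 10.0))  # hypothetical threshold
```

Set `line_freq=60.0` where the mains frequency is 60 Hz.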

Performance Metrics for Limited-Channel BCIs

The following table summarizes key quantitative benchmarks from recent research, providing goals for system performance.

Table 1: Key Performance Metrics from Recent Studies

| Study Focus | Dataset & Task Complexity | Number of Channels (EEG+EOG) | Reported Accuracy | Key Methodology |
|---|---|---|---|---|
| Multi-class MI Classification [9] | BCI Competition IV IIa (4-class) | 3 EEG + 3 EOG (6 total) | 83% | Deep Learning (1D convolutions & depthwise-separable convolutions) |
| Multi-class MI Classification [9] | Weibo Dataset (7-class) | 3 EEG + 2 EOG (5 total) | 61% | Deep Learning (1D convolutions & depthwise-separable convolutions) |
| Binary MI Classification [1] [4] | BCI Competition III IVa (2-class) | Significantly reduced (exact number varies by subject) | >90% (all subjects) | Hybrid statistical test (t-test with Bonferroni correction) & DLRCSPNN framework |

Experimental Protocols for Channel Reduction and Classification

Here are detailed methodologies for three successful approaches to limited-channel BCI systems.

Protocol 1: Hybrid EEG-EOG Approach for Multi-class MI

This protocol leverages the informational value of EOG signals to boost performance with very few channels [9] [3].

- Data Acquisition: Use a dataset with both EEG and EOG recordings, such as BCI Competition IV IIa (4-class) or the Weibo dataset (7-class).

- Channel Selection: Identify a minimal set of EEG channels (e.g., 3) covering motor cortex areas. Retain the available EOG channels.

- Deep Learning Model Architecture:

- Input: Process the time-series data from the selected channels.

- Feature Extraction: Use multiple 1D convolution blocks to extract temporal features.

- Spatial Feature Learning: Employ depthwise-separable convolutions to efficiently combine features across channels and create a compact representation.

- Classification: Use a fully connected layer with a softmax activation for final class prediction.

- Validation: Evaluate using cross-validation to assess how well the model generalizes across subjects and sessions.
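Selecting the hybrid channel set is mostly bookkeeping. It can be done by name so that the choice stays explicit. This sketch rests on two assumptions:

- Trials are stored as a 25-row array with 22 EEG channels followed by 3 EOG channels, using the 10-20 labels that common loaders report for BCI Competition IV IIa. Verify this order against your own loader.
- The EEG channels are C3, Cz, and C4. This is a hypothetical choice: the protocol only says the channels should cover motor cortex areas.

```python
import numpy as np

# ASSUMED channel order for BCI Competition IV IIa (22 EEG + 3 EOG);
# check it against the loader you actually use before relying on it.
CHANNELS_2A = [
    "Fz", "FC3", "FC1", "FCz", "FC2", "FC4",
    "C5", "C3", "C1", "Cz", "C2", "C4", "C6",
    "CP3", "CP1", "CPz", "CP2", "CP4",
    "P1", "Pz", "P2", "POz",
    "EOG1", "EOG2", "EOG3",
]


def pick_channels(trials, ch_names, wanted):
    """Restrict trials (n_trials, n_channels, n_samples) to `wanted`, in that order."""
    missing = [name for name in wanted if name not in ch_names]
    if missing:
        raise ValueError(f"channels not found: {missing}")
    idx = [ch_names.index(name) for name in wanted]
    return trials[:, idx, :]


# Hypothetical hybrid set: three motor-cortex EEG channels plus all three EOG channels.
hybrid = ["C3", "Cz", "C4", "EOG1", "EOG2", "EOG3"]
trials = np.zeros((10, len(CHANNELS_2A), 1000))  # placeholder data
print(pick_channels(trials, CHANNELS_2A, hybrid).shape)  # (10, 6, 1000)
```

Failing loudly on a missing label helps when the same code is applied to a dataset with different channel names, such as the Weibo data.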

The workflow for this protocol is outlined below.

Protocol 2: Statistical Channel Reduction for Binary MI

This protocol uses a rigorous statistical method to select the most relevant EEG channels for high-accuracy binary classification [1] [4].

- Data Acquisition: Start with a high-density EEG dataset for a binary MI task (e.g., left hand vs. right hand).

- Channel Selection & Reduction:

- Perform a statistical t-test between classes for each channel.

- Apply a Bonferroni correction to the obtained p-values to control for false positives due to multiple comparisons.

- Calculate inter-channel correlations and exclude channels with correlation coefficients below a threshold (e.g., 0.5) to minimize redundancy.

- Retain only the channels that are both statistically significant and non-redundant.

- Feature Extraction: Use a Regularized Common Spatial Pattern (DLRCSP) algorithm on the selected channels. The regularization automatically optimizes the covariance matrix estimation for robust feature extraction.

- Classification: Feed the extracted features into a Neural Network (NN) or Recurrent Neural Network (RNN) for final task classification.

The workflow for this statistical channel reduction approach is detailed in the following diagram.

Protocol 3: Generating Virtual Channels

For scenarios where physical channels are limited, this protocol uses a deep learning model to create "virtual" channels, augmenting the data available for analysis [11].

- Input: A small set of actual EEG acquisition channels (e.g., 4 channels).

- Model: Use an EEG-Completion-Informer (EC-informer) model, which is based on the Informer architecture, known for its performance on time-series problems.

- Training: Train the model to learn the relationship between the input channels and the signals from other target channels. This requires only a small number of training samples (e.g., 288 samples).

- Output: The model generates several virtual acquisition channels, providing additional EEG information without requiring physical electrodes. These virtual channels can then be used with existing classification models.
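The EC-informer is a transformer model and is beyond the scope of a short example. A much simpler stand-in, useful as a baseline against which to judge any learned virtual-channel model, is a least-squares linear map from the physical channels to each target channel. This is explicitly not the EC-informer method. The helper names and the synthetic data are hypothetical.

```python
import numpy as np


def fit_linear_virtual_channels(inputs, targets):
    """Least-squares map from recorded channels to target ("virtual") channels.

    inputs:  (n_samples, n_in) time samples from the physical channels
    targets: (n_samples, n_out) the same time samples from the channels to synthesize
    Returns weights of shape (n_in + 1, n_out); the last row is the intercept.
    """
    design = np.hstack([inputs, np.ones((inputs.shape[0], 1))])
    weights, *_ = np.linalg.lstsq(design, targets, rcond=None)
    return weights


def predict_virtual_channels(inputs, weights):
    design = np.hstack([inputs, np.ones((inputs.shape[0], 1))])
    return design @ weights


# Synthetic example: 4 recorded channels, 2 target channels that are noisy linear mixtures.
rng = np.random.default_rng(0)
physical = rng.standard_normal((2000, 4))
mixing = rng.standard_normal((4, 2))
virtual_true = physical @ mixing + 0.1 * rng.standard_normal((2000, 2))
w = fit_linear_virtual_channels(physical[:1500], virtual_true[:1500])
pred = predict_virtual_channels(physical[1500:], w)
print([np.corrcoef(pred[:, k], virtual_true[1500:, k])[0, 1] for k in range(2)])
```

A learned sequence model is only worth its cost if it clearly beats this baseline on held-out data.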

The Scientist's Toolkit

Table 2: Essential Research Reagents and Materials

| Item Name | Function / Explanation |
|---|---|
| BCI Competition Datasets | Publicly available, benchmark datasets (e.g., BCI Competition IV IIa) used for training and validating algorithms in a standardized manner [9] [1]. |
| g.USBamp Amplifier | A commonly used research-grade biosignal amplifier for acquiring high-quality EEG data [10]. |
| EC-informer Model | A deep learning model based on the Informer architecture that generates virtual EEG channels from a limited number of physical inputs, enhancing data richness [11]. |
| EEGNet | A compact convolutional neural network architecture specifically designed for EEG-based BCI paradigms, effective for MI classification [9] [1]. |
| Regularized CSP (DLRCSP) | A feature extraction algorithm that improves upon traditional Common Spatial Patterns by regularizing the covariance matrix, leading to more robust features, especially with limited data [1] [4]. |
| 10-20 System Electrode Cap | A standard cap for positioning EEG electrodes consistently across subjects, ensuring data comparability and reproducibility [12] [10]. |

The transition of Brain-Computer Interface (BCI) technology from laboratory prototypes to clinically viable and commercially sustainable assistive technology hinges on solving a critical challenge: optimizing classification accuracy while minimizing the number of EEG channels. Systems requiring numerous electrodes are cumbersome, time-consuming to set up, and impractical for daily use, creating a significant barrier to adoption for individuals with motor disabilities [3] [13]. Consequently, research into channel reduction has become a central focus, driven by the dual goals of enhancing user convenience and system performance [4]. This technical support center addresses the key methodological and practical issues researchers encounter in this endeavor, providing troubleshooting guides and experimental protocols to accelerate the development of robust, real-world BCI systems.

FAQs: Addressing Core Research Challenges

Q1: Why should I reduce EEG channels in my motor imagery BCI experiment? What are the primary benefits?

Reducing the number of EEG channels is not merely a convenience; it is a strategic imperative for developing practical BCIs. The key benefits include:

- Enhanced System Portability and Usability: Fewer channels lead to faster setup times, improved comfort, and greater potential for use outside controlled laboratory environments [3]. This is crucial for daily assistive technology.

- Improved Computational Efficiency: Channel reduction decreases the data dimensionality, which lowers the computational cost and enables faster processing. This is vital for developing real-time BCI systems with low latency [4].

- Mitigation of Overfitting: High-dimensional data with limited training samples increases the risk of models overfitting to noise or subject-specific artifacts rather than generalizable neural patterns. Selecting a relevant subset of channels helps create more robust and generalizable classifiers [4] [14].

- Increased Signal-to-Noise Ratio: By eliminating channels that provide redundant or irrelevant information for the specific motor imagery task, you effectively reduce the overall noise in your dataset, which can lead to higher classification accuracy [4].

Q2: Beyond standard EEG electrodes, are there other channel types that can improve a reduced-channel system?

Yes, emerging research indicates that incorporating Electrooculogram (EOG) channels can significantly enhance the performance of a system with a reduced number of EEG channels. EOG signals are traditionally treated as eye-movement artifacts to be removed, but studies suggest they carry information that is useful for motor imagery classification. One study demonstrated that combining just 3 EEG channels with 3 EOG channels achieved 83% accuracy in a 4-class motor imagery task, challenging the notion that EOG channels only introduce noise [3]. This hybrid approach presents a promising path for boosting accuracy in channel-limited configurations.

Q3: How does the choice of BCI paradigm (e.g., with vs. without feedback) influence my channel selection strategy?

The optimal number of channels is not universal; it depends on your experimental paradigm. Research shows that the brain's activity and the involved neural networks differ between simple cue-based motor imagery and paradigms involving real-time feedback for control.

- MI Task Paradigm (Without Feedback): Requires the fewest channels to reach optimal accuracy, as the cognitive process is focused on the imagination itself [14].

- Control Paradigms (With Real-Time Feedback): Require more channels. As the task complexity increases from a two-class control to a four-class control paradigm, the number of channels needed to achieve optimal classification accuracy also increases. The introduction of visual feedback engages additional brain areas involved in visual processing and real-time cognitive adjustment [14].

Troubleshooting Guides: Overcoming Common Experimental Hurdles

Issue: Poor Classification Accuracy After Channel Reduction

Problem: After implementing a channel reduction strategy, your model's classification accuracy has dropped significantly.

Solution: Systematically verify your channel selection and processing pipeline.

| Possible Cause | Diagnostic Steps | Recommended Solution |
|---|---|---|
| Suboptimal Channel Selection | Check if selected channels align with sensorimotor cortex (e.g., C3, C4, Cz). Use a spatial map of feature importance. | Employ a robust hybrid channel selection method (e.g., combining statistical tests with a Bonferroni correction) rather than relying on a fixed set [4]. |
| Insufficient Feature Information | Analyze whether features from the reduced set still discriminate between classes. | Combine features from multiple domains (e.g., temporal, spectral, and spatial). Use advanced spatial filtering techniques like Regularized Common Spatial Patterns (RCSP) to enhance signal quality from few channels [4]. |
| Model Overfitting | Evaluate performance on a held-out test set. Check for a large gap between training and test accuracy. | Simplify the model or increase regularization. Ensure the feature dimensionality is appropriate for the number of training trials after channel reduction. |

Issue: Inconsistent Performance Across Subjects (Lack of Generalizability)

Problem: Your channel reduction and classification pipeline works well for some subjects but fails for others.

Solution: Implement strategies that account for high subject-to-subject variability.

| Possible Cause | Diagnostic Steps | Recommended Solution |
|---|---|---|
| Subject-Dependent Optimal Channels | Perform channel selection on a per-subject basis and compare results. | Move from a one-size-fits-all channel set to a subject-dependent channel selection process. Use algorithms that can identify a personalized optimal channel set for each user [14]. |
| Inadequate Model Adaptation | Train a single model on data pooled from multiple subjects and evaluate per-subject performance. | Utilize transfer learning or domain adaptation techniques to calibrate a base model to a new subject with minimal data. Avoid relying solely on subject-independent models. |

Experimental Protocols: Detailed Methodologies for Key Studies

Protocol 1: A Hybrid EEG/EOG Channel Reduction Framework

This protocol is based on a study that demonstrated high performance using a combination of a reduced EEG channel set and EOG channels [3].

1. Objective: To achieve high classification accuracy in a multi-class Motor Imagery (MI) task using a minimal number of channels by leveraging the synergistic information from EEG and EOG signals.

2. Datasets:

- BCI Competition IV Dataset IIa: 4-class MI (left hand, right hand, both feet, tongue), 22 EEG + 3 EOG channels, 250 Hz [3].

- Weibo Dataset: 7-class MI, includes EOG channels [3].

3. Methodology:

- Channel Configuration: For the 4-class dataset, use 3 EEG channels and 3 EOG channels (6 total). For the 7-class dataset, use 3 EEG and 2 EOG channels (5 total).

- Deep Learning Architecture: A model composed of multiple 1D convolution blocks is used for feature extraction and classification. The architecture incorporates depthwise-separable convolutions to efficiently model spatial and temporal features while reducing the number of trainable parameters.

- Signal Processing: The EOG channels are not treated as pure artifacts. The deep learning model is trained to automatically discern and utilize the MI-related neural components within the EOG signals.

4. Outcome Metrics:

- The study achieved an average accuracy of 83% on the 4-class dataset and 61% on the more complex 7-class dataset, demonstrating the effectiveness of the hybrid channel approach [3].
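The parameter saving from depthwise-separable convolutions in the methodology above can be seen in a small NumPy sketch of one such 1D layer. It runs on a 6-channel (3 EEG + 3 EOG) input and counts the weights of a standard layer and a separable layer. This illustrates the operation only and is not the study's architecture. The function names, the 64-sample kernel and the 16 output filters are hypothetical choices.

```python
import numpy as np


def depthwise_separable_conv1d(x, depthwise_kernels, pointwise_weights):
    """One depthwise-separable 1D convolution ('valid' padding, stride 1, no bias).

    x:                 (in_channels, n_samples), e.g. 3 EEG + 3 EOG channels
    depthwise_kernels: (in_channels, kernel_size), one temporal filter per channel
    pointwise_weights: (out_channels, in_channels), 1x1 mixing across channels
    """
    # Depthwise step: filter every channel independently in time. np.correlate
    # computes cross-correlation, which is what deep learning "conv" layers do.
    depthwise = np.stack([
        np.correlate(x[c], depthwise_kernels[c], mode="valid")
        for c in range(x.shape[0])
    ])
    # Pointwise step: a 1x1 convolution mixes the filtered channels.
    return pointwise_weights @ depthwise


def weight_counts(in_channels, out_channels, kernel_size):
    """Weights (biases ignored) of a standard vs. a depthwise-separable conv layer."""
    standard = out_channels * in_channels * kernel_size
    separable = in_channels * kernel_size + out_channels * in_channels
    return standard, separable


rng = np.random.default_rng(0)
x = rng.standard_normal((6, 1000))        # 6 channels, 4 s at 250 Hz
y = depthwise_separable_conv1d(x, rng.standard_normal((6, 64)),
                               rng.standard_normal((16, 6)))
print(y.shape)                    # (16, 937)
print(weight_counts(6, 16, 64))   # (6144, 480)
```

With these hypothetical sizes, the separable layer needs 480 weights instead of 6,144. Deep learning frameworks such as PyTorch or TensorFlow provide the same operation as a grouped convolution followed by a 1x1 convolution.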

Protocol 2: A Statistical and Machine Learning-Based Channel Selection Framework

This protocol outlines a novel method that combines statistical testing with an advanced deep learning framework for channel reduction [4].

1. Objective: To develop a channel selection method that efficiently identifies the most relevant, non-redundant EEG channels for MI classification, maximizing accuracy with a minimal channel set.

2. Datasets: BCI Competition III (Dataset IVa) and BCI Competition IV (Datasets 1 and 2a) [4].

3. Methodology:

- Channel Selection (Hybrid Approach):

- Apply a statistical t-test between classes for each channel to evaluate its discriminative power.

- Use the Bonferroni correction to adjust p-values and control for false positives due to multiple comparisons.

- Calculate correlation coefficients between channels and exclude channels with coefficients below 0.5. The study applies this threshold to keep only non-redundant, task-related channels.

- Retain only the statistically significant and non-redundant channels for further processing.

- Feature Extraction (DLRCSP):

- Employ Regularized Common Spatial Patterns (RCSP) for feature extraction. The regularization (e.g., using Ledoit and Wolf's method to shrink the covariance matrix) helps to overcome the instability of standard CSP, especially when dealing with a small number of channels or limited training data.

- Classification:

- Use a Neural Network (NN) or Recurrent Neural Network (RNN) classifier to map the extracted features to MI task labels.

4. Outcome Metrics:

- This method achieved classification accuracy above 90% for every subject across all three datasets used in the study. It showed improvements of 3.27% to 42.53% over existing machine learning algorithms for individual subjects [4].
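The statistical part of the channel selection step in this protocol can be sketched with SciPy. The sketch makes several assumptions. The per-channel feature is the log-variance of each trial, and the data are synthetic. The function name is hypothetical. The correlation filter is left out because the text does not say which signals the 0.5 coefficient is computed between, so any implementation of it would be a guess.

```python
import numpy as np
from scipy.stats import ttest_ind


def select_channels_bonferroni(features_a, features_b, alpha=0.05):
    """Keep channels whose class difference survives a Bonferroni correction.

    features_a, features_b: (n_trials, n_channels) arrays holding one feature per
    channel and trial for each MI class (assumed here to be log-variance).
    """
    n_channels = features_a.shape[1]
    # Two-sample t-test on every channel at once (one test per column).
    p_values = ttest_ind(features_a, features_b, axis=0).pvalue
    alpha_b = alpha / n_channels          # Bonferroni-adjusted threshold
    selected = np.flatnonzero(p_values < alpha_b)
    return selected, p_values, alpha_b


# Synthetic example: 10 channels, only channels 2 and 7 differ between classes.
rng = np.random.default_rng(0)
trials_a = rng.standard_normal((40, 10, 250))
trials_b = rng.standard_normal((40, 10, 250))
trials_b[:, [2, 7]] *= 1.5                # more variance on two channels
feat_a = np.log(trials_a.var(axis=2))
feat_b = np.log(trials_b.var(axis=2))
selected, p_values, alpha_b = select_channels_bonferroni(feat_a, feat_b)
print(alpha_b, selected)
```

In a real pipeline, the features would come from band-pass-filtered trials. The selection should be fitted on training data only.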

Comparative Analysis of Channel Reduction Performance

The table below summarizes quantitative results from key studies, providing a benchmark for evaluating your own channel reduction experiments.

Table 1: Performance Comparison of Different Channel Reduction Approaches in MI-BCI

| Study & Method | Dataset | Number of Classes | Final Channel Count | Reported Accuracy | Key Insight |
|---|---|---|---|---|---|
| Hybrid EEG/EOG with Deep Learning [3] | BCI Competition IV IIa | 4 | 6 (3 EEG + 3 EOG) | 83.0% | EOG channels provide valuable neural information, not just noise. |
| Statistical + Bonferroni (DLRCSPNN) [4] | BCI Competition III IVa | 2 | Significantly Reduced | >90.0% (all subjects) | A hybrid statistical-ML selection method can achieve very high accuracy. |
| IterRelCen Channel Selection [14] | Custom Two-Class Control | 2 | Optimal Set Selected | 94.1% (avg) | Paradigms with feedback require different channel sets than cue-based tasks. |
| IterRelCen Channel Selection [14] | Custom Four-Class Control | 4 | Optimal Set Selected | 83.2% (avg) | More complex control tasks require a greater number of channels for optimal performance. |

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Resources for BCI Channel Reduction Research

| Item / Technique | Specific Example / Product | Function in Research |
|---|---|---|
| Public BCI Datasets | BCI Competition IV IIa, BCI Competition III IVa, Weibo Dataset [3] [4] | Provides standardized, high-quality EEG data for developing and benchmarking new algorithms without the need for new data collection. |
| Deep Learning Frameworks | TensorFlow, PyTorch | Enables the implementation and training of custom architectures like 1D CNNs and Depthwise-Separable Convolutions for feature learning and classification [3]. |
| Spatial Filtering Algorithms | Common Spatial Patterns (CSP), Regularized CSP (RCSP) [4] | Enhances the signal-to-noise ratio of MI tasks by maximizing the variance for one class while minimizing it for the other, crucial when working with few channels. |
| Channel Selection Algorithms | IterRelCen [14], T-test with Bonferroni Correction [4] | Automates the process of identifying the most discriminative and non-redundant channels for a given task or subject. |
| Statistical Analysis Tools | Bonferroni Correction, t-test, Correlation Analysis [4] | Provides a robust statistical foundation for feature and channel selection, helping to avoid false discoveries. |

Cutting-Edge Methodologies for Intelligent Channel Selection and Feature Extraction

Troubleshooting Guides and FAQs

Frequently Asked Questions (FAQs)

Q1: Why should I use a Bonferroni correction in my channel selection analysis? When you perform multiple statistical tests (like t-tests on many EEG channels), the chance of incorrectly finding a significant result (Type I error) increases. The Bonferroni correction controls this "familywise error rate" by using a more stringent significance level. It adjusts the alpha level by dividing it by the number of tests performed (α/N). While it is simple to implement, it can be conservative and reduce statistical power. The related Holm-Bonferroni method is a sequential procedure that is more powerful while still controlling the error rate [15].
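The two corrections can be contrasted in plain Python on four hypothetical p-values, one per channel. The function names are illustrative. For production use, statsmodels' `multipletests` (methods `"bonferroni"` and `"holm"`) covers both procedures.

```python
def bonferroni_reject(p_values, alpha=0.05):
    """Reject H0 for every p-value below alpha / m."""
    m = len(p_values)
    return [p < alpha / m for p in p_values]


def holm_reject(p_values, alpha=0.05):
    """Holm-Bonferroni step-down procedure (controls the familywise error rate)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # smallest p first
    reject = [False] * m
    for rank, i in enumerate(order):
        # The rank-th smallest p-value (0-based) is compared with alpha / (m - rank).
        if p_values[i] < alpha / (m - rank):
            reject[i] = True
        else:
            break  # stop at the first non-rejection; larger p-values are kept
    return reject


p = [0.01, 0.04, 0.02, 0.005]   # hypothetical per-channel p-values
print(bonferroni_reject(p))     # [True, False, False, True]
print(holm_reject(p))           # [True, True, True, True]
```

In this example, Bonferroni keeps two channels. Holm keeps all four because each successive threshold (0.0125, 0.0167, 0.025, 0.05) is looser than the last. This is the extra power the answer above refers to.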

Q2: My classification accuracy dropped after channel reduction. What might be the cause? A drop in accuracy can occur if the channel reduction process is too aggressive and removes informative channels along with redundant ones. Re-evaluate your correlation coefficient threshold; the 0.5 value is a starting point, but the optimal threshold may be subject-specific [4]. Also, consider that some channels traditionally considered noisy, like EOG channels, may contain valuable neural information related to your task. Incorporating them alongside a reduced set of EEG channels can sometimes improve performance [3].

Q3: What is the practical benefit of reducing the number of channels in a BCI system? Reducing channels enhances the practicality of BCI systems by:

- Improving Computational Efficiency: Processing fewer channels reduces computational load and can speed up system performance [4].

- Increasing User Comfort: Systems become more flexible and portable with fewer electrodes, reducing setup time and discomfort [3] [16].

- Mitigating Overfitting: By eliminating redundant or noisy channels, you reduce the risk of the model overfitting to training data, which can improve generalization [4].

Q4: Can I use deep learning models for channel reduction? Yes, deep learning offers alternative approaches. One method involves using models like the EEG-Completion-Informer (EC-informer) to create "virtual channels" based on a small number of physical channels. This can supplement information without increasing the number of physical electrodes [11]. Other deep learning models can also automatically learn to weigh the importance of different channels during the classification process.

Troubleshooting Common Experimental Issues

Issue: Inconsistent or Low Classification Accuracy Across Subjects

| Potential Cause | Diagnostic Steps | Solution |
|---|---|---|
| Overly conservative correction | Compare results with and without the Bonferroni correction. Check whether the p-value threshold is so strict that it eliminates too many channels. | Use a less conservative correction method such as the Holm-Bonferroni procedure or False Discovery Rate (FDR) control [15]. |
| Non-optimal channel set | Analyze the spatial pattern of selected channels: are they clustered in motor areas? Validate with a simple CSP-NN framework. | Implement a subject-dependent channel selection approach rather than a one-size-fits-all set [3]. |
| Insufficient features | Compare how well the features separate the classes before and after reduction; the reduction may have removed too much task-related signal variability. | Combine the reduced EEG channels with data from other modalities, such as EOG [3] or fNIRS [16], to provide complementary information. |
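The FDR option in the table is most commonly implemented with the Benjamini-Hochberg step-up procedure. The choice of that specific procedure is an assumption, since the table names FDR only in general. Below is a plain-Python sketch with hypothetical p-values and an illustrative function name.

```python
def benjamini_hochberg_reject(p_values, q=0.05):
    """Benjamini-Hochberg step-up procedure controlling the false discovery rate."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # smallest p first
    # Find the largest 1-based rank k with p_(k) <= k * q / m.
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank * q / m:
            k_max = rank
    # Reject the k_max hypotheses with the smallest p-values.
    reject = [False] * m
    for i in order[:k_max]:
        reject[i] = True
    return reject


p = [0.004, 0.01, 0.03, 0.2]           # hypothetical per-channel p-values
print(benjamini_hochberg_reject(p))    # [True, True, True, False]
```

With the same four p-values, Bonferroni (threshold 0.05 / 4 = 0.0125) would keep only the first two channels. FDR control keeps three at the cost of a weaker error guarantee.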

Issue: Challenges in Reproducing a Published Channel Reduction Protocol

| Potential Cause | Diagnostic Steps | Solution |
|---|---|---|
| Unclear statistical parameters | Check the original paper's methodology section for specifics on correlation measures and alpha levels. | For the method in [4], use a two-sample t-test for channel selection, retain channels with correlation >0.5, and apply Bonferroni-adjusted alpha. |
| Dataset differences | Compare the number of initial channels and the task (e.g., 2-class vs. 4-class MI) with your dataset. | Note that performance can vary with the number of classes. Adjust reduction expectations for multi-class problems [3]. |
| Classifier performance variance | Replicate the baseline (all-channel) performance first to ensure your feature extraction and classification pipeline is correct. | Use the DLRCSPNN framework as described, ensuring proper regularization of the CSP covariance matrix [4]. |

Experimental Protocols and Data

Detailed Methodology: Hybrid Channel Reduction and Classification

The following workflow is synthesized from recent research on enhancing Motor Imagery (MI) classification [4].

1. EEG Data Acquisition

- Description: Begin with multi-channel EEG data recorded from subjects performing MI tasks.

- Example Datasets: BCI Competition III (Dataset IVa) and BCI Competition IV (Datasets 1 and 2a) are commonly used benchmarks [4].

2. Channel Selection via Statistical Testing

- Objective: Identify and retain the most statistically significant, non-redundant EEG channels.

- Procedure:

a. Perform a statistical test (e.g., a two-sample t-test) for each channel to assess its ability to discriminate between MI tasks.

b. Obtain a p-value for each channel.

c. Apply the Bonferroni correction to account for multiple comparisons. The adjusted significance level is αB = α / N, where α is the original significance level (e.g., 0.05) and N is the total number of channels tested [15] [17].

d. Retain only channels with p-values less than αB.

e. Correlation filter: further filter the channels by calculating correlation coefficients, excluding any channel with a correlation coefficient below 0.5 so that only non-redundant, task-related channels are kept [4].

3. Feature Extraction using Deep Learning Regularized CSP (DLRCSP)

- Objective: Extract discriminative spatial patterns from the reduced channel set.

- Procedure: The standard Common Spatial Pattern (CSP) algorithm is enhanced with regularization. The covariance matrix is shrunk toward an identity matrix, and the regularization parameter (γ) is automatically determined using Ledoit and Wolf’s method to improve generalization and stability [4].

4. Classification with a Neural Network (NN)

- Objective: Classify the extracted features into the respective MI tasks.

- Procedure: The features from the DLRCSP step are fed into a Neural Network classifier. A comparative framework using a standard CSP with a Neural Network (CSPNN) is often used to benchmark the performance improvement of the DLRCSPNN framework [4].
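Steps 3 and 4 can be illustrated with a minimal NumPy/SciPy sketch of regularized CSP for a two-class problem. It assumes trace-normalised trial covariances and a fixed shrinkage weight `gamma=0.1`. That value is hypothetical; the described method sets γ automatically with Ledoit and Wolf's method. The "deep learning" part of DLRCSP and the neural-network classifier are not reproduced. The log-variance features returned here are what would be passed to such a classifier. All function names are illustrative.

```python
import numpy as np
from scipy.linalg import eigh


def shrink(cov, gamma):
    """Shrink a covariance toward a scaled identity: (1-g)*C + g*(tr(C)/p)*I."""
    p = cov.shape[0]
    return (1 - gamma) * cov + gamma * (np.trace(cov) / p) * np.eye(p)


def class_covariance(trials, gamma):
    """Average trace-normalised, shrunk covariance of (n_trials, p, n_samples) data."""
    covs = [np.cov(x) for x in trials]
    return np.mean([shrink(c / np.trace(c), gamma) for c in covs], axis=0)


def rcsp_filters(trials_a, trials_b, gamma=0.1, n_pairs=1):
    """Regularized CSP spatial filters as columns of a (p, 2*n_pairs) matrix."""
    c_a = class_covariance(trials_a, gamma)
    c_b = class_covariance(trials_b, gamma)
    # Generalized eigenproblem C_a w = lambda (C_a + C_b) w; eigh sorts lambda ascending.
    _, vecs = eigh(c_a, c_a + c_b)
    p = vecs.shape[1]
    # First columns favour class-b variance, last columns favour class-a variance.
    return vecs[:, np.r_[0:n_pairs, p - n_pairs:p]]


def log_variance_features(trial, filters):
    """Normalised log-variance of the spatially filtered trial (the usual CSP feature)."""
    var = (filters.T @ trial).var(axis=1)
    return np.log(var / var.sum())


# Synthetic data: class a has extra power on channel 0, class b on channel 1.
rng = np.random.default_rng(0)
trials_a = rng.standard_normal((30, 4, 250))
trials_b = rng.standard_normal((30, 4, 250))
trials_a[:, 0] *= 3.0
trials_b[:, 1] *= 3.0
w = rcsp_filters(trials_a, trials_b)
feats_a = np.array([log_variance_features(x, w) for x in trials_a])
feats_b = np.array([log_variance_features(x, w) for x in trials_b])
print(feats_a.mean(axis=0), feats_b.mean(axis=0))
```

Shrinking toward a scaled identity keeps the generalized eigenproblem well conditioned when few trials are available. That is the stability argument the procedure above makes for regularization.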

The table below summarizes quantitative results from key studies implementing channel reduction for MI-BCI classification.

| Study / Method | Number of Channels (EEG/Other) | Dataset | Key Performance Result |
|---|---|---|---|
| Hybrid t-test + Bonferroni + DLRCSPNN [4] | Reduced (number not specified) | BCI Competition III, IVa | Highest accuracy for every subject >90%; improvement of 3.27% to 42.53% over baselines. |
| EEG + EOG with Deep Learning [3] | 3 EEG + 3 EOG (6 total) | BCI Competition IV IIa (4-class) | Accuracy: 83% (with only 6 total channels). |
| EEG + EOG with Deep Learning [3] | 3 EEG + 2 EOG (5 total) | Weibo (7-class) | Accuracy: 61% (demonstrating effectiveness in complex, multi-class setting). |
| Compact Hybrid EEG-fNIRS [16] | 2 EEG + 2 fNIRS pairs | Proprietary (3-class: MA, MI, Idle) | Accuracy: 77.6% ± 12.1% (feasibility of a compact hybrid system). |

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential Materials and Computational Tools for BCI Channel Reduction Research.

| Item | Function in Research |
|---|---|
| Public BCI Datasets (e.g., BCI Competition III Dataset IVa, BCI Competition IV Dataset IIa) | Provide standardized, real EEG data for developing and fairly comparing different channel reduction and classification algorithms [4] [3]. |
| Statistical Software/Libraries (e.g., Python SciPy, R Stats) | Used to perform the foundational statistical tests (t-tests) and multiple comparison corrections (Bonferroni, Holm-Bonferroni) during the channel selection phase [4] [15]. |
| Deep Learning Frameworks (e.g., TensorFlow, PyTorch) | Enable the implementation of advanced feature extraction models (like DLRCSP) and neural network classifiers for achieving high classification accuracy after channel reduction [4] [3]. |
| Regularized Common Spatial Patterns (RCSP) | A feature extraction algorithm that is enhanced with regularization to improve the stability and generalization of spatial filters, especially important when working with a reduced set of channels [4]. |
| EC-informer Model | A deep learning model based on the Informer architecture that can generate virtual EEG channels from a small number of physical inputs, offering an alternative to physical channel reduction [11]. |

This technical support center is designed for researchers and scientists working on Brain-Computer Interface (BCI) systems, specifically those implementing the Deep Learning Regularized Common Spatial Pattern with Neural Network (DLRCSPNN) framework for motor imagery (MI) task classification. The content focuses on troubleshooting experimental protocols aimed at improving classification accuracy with limited EEG channels, a key challenge in BCI research. The DLRCSPNN framework integrates a novel channel reduction concept with advanced deep learning architectures to achieve high-accuracy classification while reducing computational complexity [4] [1].

Frequently Asked Questions (FAQs)

Q1: What is the primary advantage of the DLRCSPNN framework over traditional methods? A1: The DLRCSPNN framework significantly enhances classification accuracy while reducing the number of EEG channels required. Experimental results across three BCI competition datasets show accuracy improvements ranging from 3.27% to 42.53% for individual subjects compared to seven existing machine learning algorithms. The framework achieves these results by combining statistical channel selection with regularized feature extraction [4] [1].

Q2: How does channel reduction improve BCI system performance? A2: Channel reduction addresses several critical challenges in BCI systems: (1) reduces redundant information and noise from irrelevant channels, (2) decreases computational complexity and processing time, (3) minimizes setup time for practical applications, and (4) helps prevent overfitting while maintaining or improving classification accuracy [4] [9].

Q3: Can EOG channels provide valuable information for MI classification? A3: Contrary to traditional views that consider EOG channels primarily as sources of ocular artifacts, recent research demonstrates that EOG channels can contain valuable neural activity information related to motor imagery. One study achieved 83% accuracy on a 4-class MI task using only 3 EEG and 3 EOG channels, suggesting EOG channels may capture complementary information for classification [9].

Q4: What are the common causes of overfitting in DLRCSPNN models? A4: Overfitting in DLRCSPNN models typically results from: (1) insufficient training data relative to model complexity, (2) inadequate regularization in the CSP covariance matrix estimation, (3) channel selection that is too specific to training subjects, and (4) neural network architectures with excessive parameters for the available data [4] [1].

Troubleshooting Guides

Poor Classification Accuracy

Problem: Model fails to achieve expected classification accuracy (>90%) on motor imagery tasks.

Solution Steps:

- Verify Channel Selection: Ensure the statistical t-test with Bonferroni correction properly selects task-relevant channels. Check that channels with correlation coefficients below 0.5 are excluded [4] [1].

- Inspect DLRCSP Implementation: Confirm the regularized Common Spatial Pattern correctly applies Ledoit and Wolf's method for automatic γ regularization parameter determination to shrink the covariance matrix toward the identity matrix [4].

- Evaluate Training Data Sufficiency: Ensure adequate trial numbers per subject. The original DLRCSPNN validation used datasets with 28-224 training trials per subject [1].

- Consider Multi-Domain Features: If problem persists, implement feature fusion from spatial, temporal, and spectral domains as demonstrated in complementary research achieving 90.77% accuracy [18].
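The automatic choice of γ mentioned in the checklist above can be sketched directly from the Ledoit-Wolf shrinkage formula. This minimal NumPy version assumes one trial shaped (channels, samples). It also assumes a scaled-identity target μI, which is the standard Ledoit-Wolf target; the text speaks of shrinking toward the identity matrix. The function name is hypothetical. scikit-learn's `sklearn.covariance.ledoit_wolf`, applied to `trial.T`, implements the same estimator and can serve as a cross-check.

```python
import numpy as np


def ledoit_wolf_covariance(trial):
    """Ledoit-Wolf shrinkage covariance of one trial shaped (n_channels, n_samples).

    Returns (shrunk_cov, gamma), with shrunk_cov = (1 - gamma) * S + gamma * mu * I,
    where S is the sample covariance and mu = trace(S) / n_channels.
    """
    x = trial.T - trial.T.mean(axis=0)     # (n_samples, n_channels), centred
    n, p = x.shape
    s = x.T @ x / n                        # sample covariance (1/n normalisation)
    mu = np.trace(s) / p
    target = mu * np.eye(p)                # scaled-identity shrinkage target
    # d^2: squared (normalised Frobenius) distance between S and the target.
    d2 = np.sum((s - target) ** 2) / p
    # b^2: sum over samples of ||x_k x_k^T - S||^2, divided by n^2 (normalised).
    b2 = (np.sum(np.sum(x ** 2, axis=1) ** 2) - n * np.sum(s ** 2)) / (n ** 2 * p)
    gamma = 0.0 if d2 == 0 else min(b2, d2) / d2
    return (1 - gamma) * s + gamma * target, gamma


rng = np.random.default_rng(0)
few_samples = rng.standard_normal((20, 30))   # 20 channels, only 30 samples
cov, gamma = ledoit_wolf_covariance(few_samples)
print(round(gamma, 2))
```

Because the target μI has the same trace as S, shrinkage changes the shape of the covariance but not its total variance. The estimated γ grows when there are few samples relative to channels, which is the regime in which this kind of regularization is meant to help.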

Computational Performance Issues

Problem: Experiment runtime excessively long despite channel reduction.

Solution Steps:

- Audit Channel Reduction Rate: Verify that the hybrid statistical approach removes enough channels. As a reference point, a configuration of 3 EEG channels plus 3 EOG channels has been reported to reach 83% accuracy on a 4-class MI task [9].

- Profile Processing Stages: Identify whether bottlenecks occur during (a) channel selection, (b) DLRCSP feature extraction, or (c) neural network classification.

- Optimize Neural Network Architecture: Implement the validated DLRCSPNN structure rather than larger, unproven architectures [4] [1].

- Consider Hardware Acceleration: Utilize GPU acceleration for both CSP computation and neural network training, which is particularly beneficial for the DLRCSPNN framework.

Inconsistent Cross-Subject Performance

Problem: Model works well for some subjects but poorly for others.

Solution Steps:

- Implement Subject-Specific Channel Selection: Rather than using fixed channels across all subjects, apply the statistical t-test with Bonferroni correction individually for each subject [4].

- Validate Data Quality: Check EEG signal quality for underperforming subjects, focusing on signal-to-noise ratio and artifact contamination.

- Adjust Regularization Parameters: Modify the γ regularization parameter in DLRCSP to address subject-specific covariance matrix characteristics [4].

- Consider Hybrid Approaches: Investigate incorporating EOG channels alongside reduced EEG channels, as this has shown improved generalizability in multi-class scenarios [9].

Experimental Protocols & Methodologies

DLRCSPNN Framework Implementation

The complete DLRCSPNN experimental workflow comprises five critical phases that transform raw EEG data into classified motor imagery tasks:

Phase 1: EEG Data Acquisition

- Utilize standard BCI competition datasets (BCI Competition III Dataset IVa, BCI Competition IV Datasets 1 and 2a) [4] [1]

- Ensure proper electrode placement according to the international 10-20 system

- Maintain consistent sampling rates and recording parameters across subjects

Phase 2: Channel Selection Protocol

- Apply statistical t-test to identify channels with significant differences between motor imagery classes

- Implement Bonferroni correction to address multiple comparison problem

- Exclude channels with correlation coefficients below 0.5 to minimize redundancy

- Retain only statistically significant, non-redundant channels for further processing [4] [1]

Phase 3: Pre-processing Standards

- Apply bandpass filtering appropriate for motor imagery (typically 8-30 Hz)

- Implement artifact removal techniques (ocular, muscular)

- Segment data into epochs time-locked to motor imagery cues

- Apply baseline correction if necessary
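The filtering, epoching and optional baseline steps of Phase 3 can be sketched with SciPy. The sketch makes several assumptions. The 4th-order zero-phase Butterworth filter and the 0.5 to 2.5 s post-cue window are hypothetical choices. Cue onsets are given as sample indices. Artifact removal is not covered. Function names are illustrative.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def bandpass(eeg, fs, low=8.0, high=30.0, order=4):
    """Zero-phase Butterworth band-pass along time for (n_channels, n_samples)."""
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)


def epoch(eeg, cue_samples, fs, tmin=0.5, tmax=2.5, baseline=None):
    """Cut cue-locked epochs -> (n_trials, n_channels, n_window_samples).

    baseline: optional (start, stop) in seconds relative to the cue; its
    per-channel mean is subtracted from each epoch.
    """
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    epochs = np.stack([eeg[:, c + start:c + stop] for c in cue_samples])
    if baseline is not None:
        b0, b1 = int(round(baseline[0] * fs)), int(round(baseline[1] * fs))
        means = np.stack([eeg[:, c + b0:c + b1].mean(axis=1) for c in cue_samples])
        epochs = epochs - means[:, :, None]
    return epochs


fs = 250
t = np.arange(2500) / fs                  # 10 s of synthetic data
raw = np.vstack([np.sin(2 * np.pi * 2 * t) + np.sin(2 * np.pi * 15 * t)] * 2)
filtered = bandpass(raw, fs)              # 2 Hz drift removed, 15 Hz kept
trials = epoch(filtered, cue_samples=[500, 1000], fs=fs)
print(trials.shape)                       # (2, 2, 500)
```

Using second-order sections (`output="sos"`) with `sosfiltfilt` is numerically safer than the transfer-function form for narrow bands. The forward-backward pass avoids shifting the MI rhythms in time.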

Phase 4: DLRCSP Feature Extraction

- Implement Regularized Common Spatial Patterns using Ledoit and Wolf's method for covariance matrix regularization [4]

- Extract spatial features optimized for class discrimination

- Transform high-dimensional EEG data into lower-dimensional feature space

Phase 5: Neural Network Classification

- Implement feedforward neural network with optimized architecture

- Train using backpropagation with appropriate loss function

- Validate using k-fold cross-validation (typically 5-fold)

- Test alternative architectures (RNN, LSTM) for comparative analysis [4] [1]

Performance Validation Protocol

To ensure comparable and reproducible results, implement this standardized validation protocol:

- Data Partitioning: Apply 5-fold cross-validation, training on k-1 subsets and testing on the held-out subset [4] [1]

- Performance Metrics: Calculate accuracy, precision, recall, and F1-score for each fold

- Statistical Testing: Perform significance testing (e.g., paired t-tests) to compare against baseline methods

- Comparative Analysis: Benchmark against traditional CSP with Neural Network (CSPNN) and other established algorithms [4]
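Below is a minimal NumPy sketch of the 5-fold partitioning. Stratification by class and the fixed seed are assumptions added here. The protocol only specifies 5-fold cross-validation. scikit-learn's `StratifiedKFold` is an off-the-shelf alternative, and the function name below is illustrative.

```python
import numpy as np


def stratified_kfold(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs; each fold keeps the class proportions."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    fold_of = np.empty(len(labels), dtype=int)
    for cls in np.unique(labels):
        members = rng.permutation(np.flatnonzero(labels == cls))
        # Deal each class's trials round-robin across the k folds.
        fold_of[members] = np.arange(len(members)) % k
    for f in range(k):
        yield np.flatnonzero(fold_of != f), np.flatnonzero(fold_of == f)


labels = np.array([0] * 50 + [1] * 50)   # e.g. 100 trials of a binary MI task
for train_idx, test_idx in stratified_kfold(labels):
    # Fit channel selection, (R)CSP and the classifier on train_idx only,
    # then score on test_idx, so that no test trial leaks into any fitted step.
    print(len(train_idx), np.bincount(labels[test_idx]))  # 80 [10 10]
```

The comment in the loop matters for channel reduction studies in particular. Running the t-test or the CSP fit on all trials before splitting inflates the reported accuracy.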

Experimental Results & Performance Data

DLRCSPNN Classification Accuracy

Table 1: Classification Performance of DLRCSPNN Framework on BCI Competition Datasets

| Dataset | Subjects | Accuracy Range | Improvement vs. Baselines (range) | Key Experimental Conditions |
|---|---|---|---|---|
| BCI Competition III Dataset IVa | 5 | >90% for all subjects | 3.27% to 42.53% | Binary MI (right hand vs. right foot); 118 channels reduced via statistical selection [4] [1] |
| BCI Competition IV Dataset 1 | 7 | >90% for all subjects | 5% to 45% | Binary MI (hand vs. foot); 59 channels reduced via proposed method [4] [1] |
| BCI Competition IV Dataset 2a | 9 | >90% for all subjects | 1% to 17.47% | 4-class MI; channel reduction applied [4] |

Comparative Algorithm Performance

Table 2: Performance Comparison of MI Classification Algorithms

| Algorithm | Reported Accuracy | Channels Used | Computational Complexity | Limitations |
|---|---|---|---|---|
| DLRCSPNN (Proposed) | 90-100% [4] [1] | Reduced set (statistically selected) | Medium | Requires parameter tuning |
| CSP-R-MF [4] | 77.75% | Multiple | High | Frequency band dependency |
| TSCNN with DGAFF [4] | 73.41-97.82% | Subject-wise selection | Very High | Model complexity issues |
| DB-EEGNET with MPJS [4] | 83.9% | Optimized set | High | Performance inconsistencies |
| CDCS with CSP/LDA [4] | 66.06-77.57% | Cross-domain selection | Medium | Limited trial data |

Research Reagent Solutions

Table 3: Essential Materials and Computational Tools for DLRCSPNN Research

| Research Component | Function/Purpose | Implementation Notes |
|---|---|---|
| EEG Datasets (BCI Competition III/IV) [4] [1] | Benchmark data for method validation | Publicly available from bbci.de; binary and multi-class MI tasks |
| Statistical Channel Selection | Identifies task-relevant channels while reducing dimensionality | Combines t-test with Bonferroni correction; excludes channels with correlation <0.5 [4] [1] |
| DLRCSP Algorithm | Regularized feature extraction for enhanced discrimination | Applies Ledoit and Wolf's method for automatic γ parameter determination [4] |
| Neural Network Classifier | Classification of extracted features | Feedforward architecture; compared with RNN/LSTM variants [4] [1] |
| EOG Channels (Alternative approach) [9] | Provides complementary information for MI classification | 3 EEG + 3 EOG channels achieved 83% accuracy in 4-class MI |

This comprehensive technical support resource provides researchers with the necessary tools to successfully implement, troubleshoot, and optimize the DLRCSPNN framework for enhanced motor imagery classification in brain-computer interface systems.

FAQs: Integrating EOG Signals into Your BCI Research

1. Why should I consider using EOG channels instead of just removing them as artifacts?

Traditional BCI systems view EOG signals as noise that must be eliminated. However, recent research demonstrates that EOG channels capture valuable neural information related to motor imagery tasks, not just ocular artifacts. By incorporating these channels alongside a reduced set of EEG electrodes, you can improve classification accuracy while decreasing the total number of channels required. This enhances system portability and computational efficiency without sacrificing performance [3].

2. What is the experimental evidence supporting EOG channels as informative signals?

A 2024 study tested this paradigm on two public datasets. For the BCI Competition IV Dataset IIa (4-class MI), using 3 EEG and 3 EOG channels (6 total) achieved 83% accuracy. For the Weibo dataset (7-class MI), using 3 EEG and 2 EOG channels (5 total) achieved 61% accuracy. This demonstrates that combining a reduced EEG set with EOG channels can be more effective than using a larger number of EEG channels alone [3].

3. How do I set up my experiment to capture useful EOG signals?

The methodology involves placing EOG electrodes to capture both vertical and horizontal eye movements. The recorded EOG channel consists of a mixture of neural activities and eye movement artifacts. Advanced deep learning techniques, including multiple 1D convolution blocks and depthwise-separable convolutions, can then be employed to optimize classification accuracy by extracting the relevant motor imagery information from these combined signals [3].

4. What are the main challenges when using EOG channels informatively, and how can I address them?

A primary challenge is the bidirectional contamination problem, where EOG recordings capture underlying neural activity from the prefrontal cortex, while EEG recordings in the prefrontal region pick up ocular patterns. Simple regression techniques may remove brain signals along with artifacts. To counter this, consider using Blind Source Separation (BSS) techniques like Stationary Subspace Analysis (SSA) combined with adaptive signal decomposition methods like Empirical Mode Decomposition (EMD) to separate cerebral activity from artifacts more effectively [19].

5. Can I implement this approach for online BCI systems?

Yes, though it requires careful design. One reported approach combines Blind Source Separation/Independent Component Analysis (BSS/ICA) with automatic classification using Support Vector Machines (SVMs). This setup isolates artifactual components and removes them while preserving the informative EOG signals, making it suitable for online environments with continuous data streams [20].

Troubleshooting Guides

Problem: Poor Classification Performance After Incorporating EOG Channels

Potential Causes and Solutions:

Cause 1: Inadequate separation of neural signals from ocular artifacts.

- Solution: Implement a hybrid artifact correction method. First apply Stationary Subspace Analysis (SSA) to concentrate artifacts in fewer components, then use Empirical Mode Decomposition (EMD) to denoise these components and recover neural information that leaked into artifactual components [19].

Cause 2: Suboptimal channel selection for your specific paradigm.

- Solution: Use meta-heuristic optimization algorithms for channel selection. Studies show that methods like the Dual-Front Sorting Algorithm (DFGA) can find optimal channel sets for each user, significantly improving accuracy while reducing channel count [21].

Cause 3: Incorrect deep learning model configuration.

- Solution: Employ specialized architectures like EEGNet, which uses temporal convolutional layers and depthwise separable convolutions to efficiently capture both temporal and spatial patterns in EEG/EOG data [3].

Problem: Online System Performance Degradation with EOG Signals

Potential Causes and Solutions:

Cause 1: Latency in artifact processing affecting real-time performance.

- Solution: Optimize your BSS algorithm selection. Research indicates that AMUSE and JADE algorithms offer favorable trade-offs between artifact isolation capability and computational efficiency for online implementation [20].

Cause 2: Inconsistent EOG signal quality across sessions.

- Solution: Implement an adaptive classification system that continuously optimizes the classifier through error-related potential (ErrP) detection. This allows the system to automatically expand training samples and adjust to changing signal conditions [22].

Protocol: Validating EOG as Informative Signals in MI-BCI

Objective: To determine if EOG channels improve motor imagery classification accuracy with reduced channel counts.

Materials:

- EEG/EOG recording system with a minimum of 16 channels

- Standard electrode placement (including EOG electrodes for vertical and horizontal eye movements)

- BCI Competition IV Dataset IIa or Weibo dataset for validation

- Deep learning framework (Python with TensorFlow/PyTorch)

Procedure:

- Record data from both EEG and EOG channels during motor imagery tasks.

- Preprocess signals: bandpass filter (0.5-40 Hz), remove line noise.

- Extract segments time-locked to motor imagery cues.

- Train a deep learning model with multiple 1D convolution blocks and depthwise-separable convolutions.

- Compare performance between EEG-only vs. EEG+EOG channel sets.

- Use permutation importance or channel attention mechanisms to validate EOG channel contributions.
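The permutation-importance step in this procedure can be sketched model-agnostically. Shuffle one channel across trials, then record how much accuracy drops. The `predict` callable stands in for a trained EEG+EOG model. The toy predictor, the synthetic data (channel 3 playing the role of an informative "EOG" channel) and the function name are all hypothetical.

```python
import numpy as np


def channel_permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean accuracy drop when one channel is shuffled across trials.

    predict: callable mapping X of shape (n_trials, n_channels, n_samples)
             to predicted labels (e.g. a trained model's predict method).
    """
    rng = np.random.default_rng(seed)
    baseline = np.mean(predict(X) == y)
    drops = np.zeros(X.shape[1])
    for ch in range(X.shape[1]):
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Break the link between this channel and the labels, keep the rest intact.
            X_perm[:, ch] = X[rng.permutation(len(X)), ch]
            drops[ch] += baseline - np.mean(predict(X_perm) == y)
    return drops / n_repeats


def toy_predict(data):
    """Hypothetical stand-in model: thresholds the variance of channel 3."""
    return (data[:, 3].var(axis=1) > 1.5).astype(int)


rng = np.random.default_rng(1)
y = rng.integers(0, 2, size=200)
X = rng.standard_normal((200, 4, 100))    # channels 0-2 "EEG", channel 3 "EOG"
X[y == 1, 3] *= 2.0                       # only channel 3 carries class information
importance = channel_permutation_importance(toy_predict, X, y)
print(importance.round(2))
```

Importances should be computed on held-out trials. A large drop for an EOG channel would support the claim that it carries task information, whereas a drop near zero would not.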

Analysis:

- Calculate classification accuracy for different channel configurations.

- Perform statistical testing to confirm significant differences (e.g., paired t-tests).

- Visualize feature importance to identify which EOG channels contribute most.
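The paired t-test in the analysis step compares the two channel configurations within each subject. Below is a sketch using `scipy.stats.ttest_rel`. The accuracies are hypothetical placeholders for nine subjects, not results from any cited study.

```python
import numpy as np
from scipy.stats import ttest_rel

# Hypothetical per-subject accuracies (NOT results from any cited study).
acc_eeg_only = np.array([0.60, 0.55, 0.70, 0.65, 0.58, 0.62, 0.66, 0.59, 0.61])
acc_eeg_eog = acc_eeg_only + np.array([0.05, 0.07, 0.04, 0.06, 0.08,
                                       0.05, 0.03, 0.06, 0.07])

# Paired test: each subject contributes one accuracy per configuration.
result = ttest_rel(acc_eeg_eog, acc_eeg_only)
print(f"mean gain = {np.mean(acc_eeg_eog - acc_eeg_only):.3f}, "
      f"t = {result.statistic:.2f}, p = {result.pvalue:.2g}")
```

With only a handful of subjects, the t-test relies on the per-subject differences being roughly normal. When that is doubtful, the Wilcoxon signed-rank test (`scipy.stats.wilcoxon`) is the usual nonparametric alternative.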

Quantitative Performance Data

Table 1: Classification Accuracy with Reduced Channels Using EOG Signals

| Dataset | Paradigm | EEG Channels | EOG Channels | Total Channels | Accuracy | Comparison to EEG-Only |
|---|---|---|---|---|---|---|
| BCI Competition IV IIa | 4-class MI | 3 | 3 | 6 | 83% | Higher than full EEG set (22 channels) |
| Weibo Dataset | 7-class MI | 3 | 2 | 5 | 61% | Higher than conventional approaches |
| P300 Speller | P300 ERP | Optimized subset (avg. 4.66) | Included in optimization | Variable | +3.9% improvement | Over common 8-channel set [21] |

Table 2: Research Reagent Solutions for EOG-Informed BCI Experiments

| Material/Algorithm | Function | Application Context |
|---|---|---|
| EEGNet Architecture | Deep learning classification | Optimized for EEG/EOG spatial-temporal patterns [3] |
| Stationary Subspace Analysis (SSA) | Artifact concentration | Separates non-stationary EOG artifacts from stationary EEG [19] |
| Empirical Mode Decomposition (EMD) | Signal denoising | Recovers neural information from artifactual components [19] |
| Dual-Front Sorting Algorithm (DFGA) | Channel selection optimization | Finds optimal user-specific channel sets [21] |
| Support Vector Machines (SVMs) | Automated component classification | Identifies and removes artifacts in online systems [20] |
| Error-Related Potential (ErrP) Detection | System adaptation | Enables continuous classifier optimization [22] |
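Depthwise-separable convolutions appear both in EEGNet-style models and in the protocol step above. They factor a standard convolution into two parts: a per-channel (depthwise) filter, followed by a 1×1 (pointwise) mixing across channels. The NumPy sketch below illustrates that factorization. It uses hypothetical function names and 'valid' padding, and checks the result against the equivalent full convolution whose kernel is the product of the two factors. EEGNet itself applies its depthwise step spatially and its separable step temporally, so treat this 1D temporal version as an illustration of the factorization, not of EEGNet's exact layer layout.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def depthwise_conv1d(x, dw):
    """x: (n_channels, n_times); dw: (n_channels, k). 'Valid' cross-correlation per channel."""
    windows = sliding_window_view(x, dw.shape[1], axis=1)  # (C, T-k+1, k)
    return np.einsum("ctj,cj->ct", windows, dw)


def pointwise_conv1d(h, pw):
    """h: (n_channels, n_times); pw: (n_out, n_channels) 1x1 channel mixing."""
    return pw @ h


def full_conv1d(x, w):
    """Standard 'valid' multi-channel convolution; w: (n_out, n_channels, k)."""
    windows = sliding_window_view(x, w.shape[2], axis=1)
    return np.einsum("ctj,ocj->ot", windows, w)


rng = np.random.default_rng(0)
C, T, K, F = 6, 128, 16, 8  # input channels, samples, kernel length, output maps
x = rng.standard_normal((C, T))
dw = rng.standard_normal((C, K))
pw = rng.standard_normal((F, C))

y_sep = pointwise_conv1d(depthwise_conv1d(x, dw), pw)
# Equivalent full kernel: w[o, c, j] = pw[o, c] * dw[c, j]
y_full = full_conv1d(x, pw[:, :, None] * dw[None, :, :])
print(y_sep.shape, np.allclose(y_sep, y_full))
print("parameters:", dw.size + pw.size, "vs", F * C * K)
```

With these sizes, the separable version uses 6·16 + 8·6 = 144 weights instead of 8·6·16 = 768. This parameter saving is the main reason such layers suit small EEG/EOG datasets.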

Signaling Pathways and Experimental Workflows

Troubleshooting Guide: Common RCSP Issues & Solutions

1. Problem: High Classification Variance or Overfitting

- Question: My CSP model performs well on training data but generalizes poorly to new subject data. What causes this and how can it be fixed?

- Diagnosis: This indicates overfitting, often caused by insufficient training data or noisy covariance matrix estimation.

- Solution: Implement Regularized CSP (R-CSP), which shrinks the sample covariance matrix toward a (scaled) identity matrix. The γ regularization parameter can be determined automatically using Ledoit and Wolf's method to stabilize covariance estimates [1] [4].

- Prevention: Use DLRCSP frameworks that integrate deep learning with automatic regularization parameter selection [1] [4].
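Regularized CSP with Ledoit-Wolf shrinkage can be sketched with NumPy and SciPy. This is a minimal illustration, not the DLRCSP framework of [1] [4]. The sketch works in three steps:

- Shrink each trial's covariance toward a scaled identity, using the analytic Ledoit-Wolf intensity γ. This is the same estimator that scikit-learn exposes as `sklearn.covariance.ledoit_wolf`.
- Average the shrunk covariances per class.
- Solve the usual CSP generalized eigenproblem.

Function names and the synthetic data are assumptions.

```python
import numpy as np
from scipy.linalg import eigh


def ledoit_wolf_cov(X):
    """Ledoit-Wolf shrunk covariance of X (n_samples, n_features); returns (cov, gamma)."""
    X = X - X.mean(axis=0)
    n, p = X.shape
    S = X.T @ X / n                                   # sample covariance
    mu = np.trace(S) / p                              # shrinkage target is mu * I
    delta = np.sum((S - mu * np.eye(p)) ** 2) / p
    X2 = X ** 2
    beta = (np.sum(X2.T @ X2) / n - np.sum(S ** 2)) / (p * n)
    gamma = 0.0 if delta == 0 else min(beta, delta) / delta
    return (1 - gamma) * S + gamma * mu * np.eye(p), gamma


def rcsp(trials_a, trials_b, n_pairs=2):
    """Trials: (n_trials, n_channels, n_times). Returns filters (2*n_pairs, n_channels)."""
    Ca = np.mean([ledoit_wolf_cov(tr.T)[0] for tr in trials_a], axis=0)
    Cb = np.mean([ledoit_wolf_cov(tr.T)[0] for tr in trials_b], axis=0)
    # Generalized eigenproblem Ca w = lambda (Ca + Cb) w; eigenvalues ascending.
    _, vecs = eigh(Ca, Ca + Cb)
    n = vecs.shape[1]
    picks = list(range(n_pairs)) + list(range(n - n_pairs, n))
    return vecs[:, picks].T


def csp_features(W, trials):
    """Normalized log-variance of the spatially filtered trials."""
    Z = np.einsum("fc,nct->nft", W, trials)
    v = Z.var(axis=-1)
    return np.log(v / v.sum(axis=1, keepdims=True))


rng = np.random.default_rng(0)
n_tr, n_ch, n_t = 30, 8, 250
A = rng.standard_normal((n_tr, n_ch, n_t))
B = rng.standard_normal((n_tr, n_ch, n_t))
A[:, 0] *= 3.0  # class A: more power on channel 0
B[:, 1] *= 3.0  # class B: more power on channel 1
W = rcsp(A, B)
fa, fb = csp_features(W, A), csp_features(W, B)
print(W.shape, fa[:, -1].mean() > fb[:, -1].mean())
```

The last filter maximizes class-A variance relative to class B, and the first filter does the opposite. With few or short trials, γ grows automatically, pulling the estimates toward the identity and damping noisy directions.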

2. Problem: Suboptimal Frequency Band Selection

- Question: How can I determine the best frequency bands for CSP filtering for my specific subjects?

- Diagnosis: Traditional CSP performance depends heavily on frequency band selection, and event-related desynchronization/synchronization (ERD/ERS) patterns vary considerably among subjects [23].

- Solution: Implement transformed CSP (tCSP) which selects subject-specific frequency bands after CSP filtering rather than before. Consider filter bank approaches (FBCSP) that decompose signals into multiple frequency bands [23].

- Validation: Testing shows tCSP can improve accuracy by ~8% over standard CSP and ~4.5% over FBCSP [23].
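The idea of choosing subject-specific bands after spatial filtering can be illustrated with a simplified sketch. It takes signals that have already been CSP-filtered, splits them into a filter bank, and ranks the bands by a Fisher score on log-variance features. This is not the published tCSP algorithm [23]. The band list, the Fisher criterion, and the synthetic class-dependent 10 Hz rhythm are assumptions made for illustration.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

BANDS = [(4, 8), (8, 12), (12, 16), (16, 20), (20, 24), (24, 28)]


def band_log_var(Z, fs, band):
    """Z: CSP-filtered trials (n_trials, n_filters, n_times) -> log-variance per filter."""
    sos = butter(4, band, btype="bandpass", fs=fs, output="sos")
    return np.log(sosfiltfilt(sos, Z, axis=-1).var(axis=-1))


def rank_bands(Z, y, fs, bands=BANDS):
    """Fisher score (between-class over within-class variance) per band, best first."""
    scores = []
    for band in bands:
        f = band_log_var(Z, fs, band)
        f0, f1 = f[y == 0], f[y == 1]
        fisher = (f0.mean(0) - f1.mean(0)) ** 2 / (f0.var(0) + f1.var(0))
        scores.append(fisher.max())  # best spatial filter within this band
    order = np.argsort(scores)[::-1]
    return [bands[i] for i in order], np.asarray(scores)[order]


fs, n_t = 250, 500
rng = np.random.default_rng(0)
t = np.arange(n_t) / fs
y = np.repeat([0, 1], 30)
amp = np.where(y == 1, 2.0, 0.5)[:, None]
phase = rng.uniform(0, 2 * np.pi, (y.size, 1))
# One synthetic "CSP component" per trial: white noise plus a class-dependent 10 Hz rhythm.
Z = (rng.standard_normal((y.size, n_t)) + amp * np.sin(2 * np.pi * 10 * t + phase))[:, None, :]
ranked, scores = rank_bands(Z, y, fs)
print(ranked[0])
```

Because the spatial filter is fixed before the band search, each subject's bands are chosen on signals where ERD/ERS is already concentrated. This is the intuition behind selecting bands after CSP rather than before.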

3. Problem: Excessive Computational Demand with High Channel Counts

- Question: My spatial filtering process is computationally intensive with many EEG channels. How can I optimize this?

- Diagnosis: High-dimensional EEG data with irrelevant channels introduces noise and slows processing [1] [4].

- Solution: Apply hybrid channel selection combining statistical t-tests with Bonferroni correction, discarding channels with correlation coefficients below 0.5. This retains only statistically significant, non-redundant channels [1] [4].

- Optimization: Multi-objective evolutionary algorithms can simultaneously optimize channel selection and spatial filters, providing Pareto-optimal solutions for channel count vs. accuracy trade-offs [24].
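A minimal sketch of the hybrid statistical selection described above follows. The source does not say which correlation the 0.5 threshold applies to. Here we assume it is the absolute Pearson correlation between each channel's log-variance feature and the binary class label. We also use Welch's t-test (`scipy.stats.ttest_ind` with `equal_var=False`). Treat both choices, and the helper name `select_channels`, as assumptions.

```python
import numpy as np
from scipy.stats import ttest_ind


def select_channels(X, y, alpha=0.05, min_corr=0.5):
    """X: (n_trials, n_channels, n_times); y: binary labels (0/1).

    Keep channels whose log-variance differs between classes after Bonferroni
    correction AND whose |Pearson r| with the label is at least min_corr.
    """
    feats = np.log(X.var(axis=-1))                               # (n_trials, n_channels)
    p = ttest_ind(feats[y == 0], feats[y == 1], axis=0, equal_var=False).pvalue
    p_bonf = np.minimum(p * feats.shape[1], 1.0)                 # Bonferroni correction
    r = np.array([np.corrcoef(feats[:, c], y)[0, 1] for c in range(feats.shape[1])])
    keep = (p_bonf < alpha) & (np.abs(r) >= min_corr)
    return np.flatnonzero(keep), p_bonf, r


rng = np.random.default_rng(0)
X = rng.standard_normal((80, 8, 250))
y = np.repeat([0, 1], 40)
X[y == 1, 1] *= 2.5  # channel 1: more power in class 1
X[y == 1, 4] *= 0.4  # channel 4: less power in class 1
selected, p_bonf, r = select_channels(X, y)
print(selected)
```

The Bonferroni step multiplies each p-value by the number of channels tested. This matters because testing 22 or 118 channels at α = 0.05 would otherwise pass several uninformative electrodes by chance.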

4. Problem: Inconsistent Spatial Filter Outputs

- Question: My spatial filter produces seemingly random channel assignments during offline analysis. What could be wrong?

- Diagnosis: This may indicate improper filter configuration or channel mapping issues in the processing pipeline [25].

- Solution: Verify the spatial filter parameters and channel lists in your analysis software. For BCI2000 implementations, check that SpatialFilterType and the SpatialFilter matrix are correctly configured with proper input-output channel mappings [25].

- Debugging: Test with synthetic data (sine waves on known channels) to validate filter behavior before applying the filter to experimental data [25].
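The synthetic-data check can be sketched outside BCI2000. Give every input channel a sine at its own frequency, pass the signals through the spatial-filtering step, and read off which inputs reach each output. This sketch does not use any BCI2000 API. It assumes that rows of the filter matrix index output channels and columns index input channels. The function name, frequencies, and example matrix are hypothetical. In a real check, `pipeline` would wrap your actual processing chain.

```python
import numpy as np


def infer_channel_mapping(pipeline, n_in, fs=250, n_samples=1000, base_freq=5.0, tol=1e-6):
    """Drive a spatial-filtering step with known sines and recover its weights.

    Input channel c carries a sine at base_freq * (c + 1) Hz (keep this below fs / 2).
    `pipeline` maps an (n_in, n_samples) array to an (n_out, n_samples) array.
    Returns the contributing input channels per output and the estimated weights.
    """
    t = np.arange(n_samples) / fs
    X = np.vstack([np.sin(2 * np.pi * base_freq * (c + 1) * t) for c in range(n_in)])
    Y = pipeline(X)
    # Least-squares fit of each output onto the input sines: Y ~ W_est @ X.
    W_est = np.linalg.lstsq(X.T, Y.T, rcond=None)[0].T
    mapping = [np.flatnonzero(np.abs(row) > tol).tolist() for row in W_est]
    return mapping, W_est


# Hypothetical 4-input, 3-output filter: output 0 = ch 2, output 1 = ch 0,
# output 2 = bipolar derivation ch 1 - ch 3.
W = np.array([[0, 0, 1, 0],
              [1, 0, 0, 0],
              [0, 1, 0, -1]], dtype=float)
mapping, W_est = infer_channel_mapping(lambda X: W @ X, n_in=4)
print(mapping)
```

If the recovered mapping differs from the intended one, the usual culprits are a transposed matrix or a channel list ordered differently from the recording montage.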

RCSP Performance Comparison Table

Table 1: Performance comparison of different CSP variants in MI-BCI classification

| Method | Average Accuracy | Key Improvement | Computational Load | Best Use Case |
|---|---|---|---|---|
| Standard CSP [23] | ~76% | Baseline | Low | Initial prototyping |
| Filter Bank CSP (FBCSP) [23] | ~80% | Multiple frequency bands | Medium | General purpose MI-BCI |
| Transformed CSP (tCSP) [23] | ~84% | Frequency selection after CSP | Medium | Subject-specific optimization |
| Regularized CSP (R-CSP) [23] | 82-85% | Stabilized covariance matrix | Medium-High | Small datasets, noisy data |
| DLRCSPNN with Channel Selection [1] [4] | >90% | Channel reduction + regularization | High | High-accuracy applications |
| Multi-objective Optimization [24] | Varies by channel count | Optimal channel-filter combinations | High | Resource-constrained environments |

Table 2: Channel reduction impact on classification performance

| Channel Selection Method | Channels Retained | Accuracy Improvement | Key Advantage |
|---|---|---|---|
| Statistical t-test + Bonferroni [1] [4] | Significant channels only | 3.27% to 42.53% vs. baselines | Statistical significance |
| Evolutionary Multi-objective [24] | Flexible trade-off | Optimal for channel count | Pareto front solutions |
| Hybrid AI-based [1] | Task-relevant only | 5% to 45% across datasets | Automatic relevance detection |

Experimental Protocols

Protocol 1: DLRCSPNN with Channel Selection Framework

Purpose: To implement a complete pipeline combining channel selection with Regularized CSP for improved MI task classification [1] [4].

Workflow:

- EEG Data Acquisition: Utilize public datasets (BCI Competition III IVa, BCI Competition IV) with appropriate ethical approvals [1].

- Channel Selection: Apply statistical t-tests with Bonferroni correction, excluding channels with correlation coefficients <0.5 [1] [4].

- Pre-processing: Band-pass filter (e.g., 0.5-100 Hz), notch filter (50/60 Hz), artifact removal [23].

- Feature Extraction: Implement DLRCSP with automatic regularization parameter selection [1] [4].

- Classification: Utilize Neural Networks (NN) or Recurrent Neural Networks (RNN) for final classification [1].

Validation: Test on multiple subjects, comparing against a baseline CSP+NN framework [1].

Protocol 2: Multi-objective Channel and Filter Optimization

Purpose: To simultaneously optimize electrode selection and spatial filters using evolutionary algorithms [24].

Workflow:

- Initialization: Start with the full electrode set (e.g., 32 channels from BCI Competition III datasets) [24].

- Encoding: Represent each solution as a spatial filter matrix with a selection threshold [24].

- Multi-objective Optimization: Apply the NSGA-II algorithm to jointly minimize classification error and the number of electrodes [24].

- Pareto Front Analysis: Identify optimal trade-off solutions between channel count and accuracy [24].

- Validation: Compare against approaches that optimize channels while keeping the spatial filter fixed [24].
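NSGA-II ranks candidate solutions by Pareto dominance. Its core step, extracting the non-dominated set from (number of electrodes, classification error) pairs, can be sketched as below. This is only the dominance filter, not NSGA-II's full loop of non-dominated sorting, crowding distance, and variation operators. The candidate values are illustrative placeholders, not results from [24].

```python
import numpy as np


def pareto_front(points):
    """Indices of non-dominated rows; every column is an objective to minimize."""
    pts = np.asarray(points, dtype=float)
    keep = []
    for i, p in enumerate(pts):
        # q dominates p if q <= p in every objective and q < p in at least one.
        dominated = np.any(np.all(pts <= p, axis=1) & np.any(pts < p, axis=1))
        if not dominated:
            keep.append(i)
    return keep


# Illustrative (n_electrodes, error) candidates, not results from [24].
cands = [(32, 0.10), (16, 0.12), (16, 0.15), (8, 0.18), (8, 0.14), (4, 0.25), (12, 0.14)]
front = pareto_front(cands)
print([cands[i] for i in front])
```

Here (12, 0.14) drops out because (8, 0.14) matches its error with fewer electrodes. Every remaining point is a legitimate operating point, and the choice among them depends on how many electrodes the target hardware can afford.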

Visualization: RCSP with Channel Selection Workflow


The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential components for RCSP experiments

| Component | Function | Implementation Example |
|---|---|---|
| EEG Datasets | Algorithm validation | BCI Competition III IVa, BCI Competition IV [1] [23] |
| Spatial Filtering | Signal separation | Regularized CSP with covariance shrinkage [1] [4] |
| Regularization Methods | Prevent overfitting | Ledoit-Wolf covariance estimation [1] [4] |
| Channel Selection | Dimensionality reduction | Statistical t-test with Bonferroni correction [1] [4] |
| Frequency Optimization | Band selection | tCSP for post-filtering band selection [23] |
| Classification Algorithms | Pattern recognition | Neural Networks, RNN, SVM [1] [26] |
| Multi-objective Optimization | Parameter tuning | NSGA-II for channel-filter optimization [24] |
| Performance Metrics | Validation | Classification accuracy, computational efficiency [1] [24] |

Frequently Asked Questions (FAQs)

Q1: How does Regularized CSP specifically address the small sample size problem? Regularized CSP (R-CSP) stabilizes covariance matrix estimation by shrinking sample covariance matrices toward a target matrix (often the identity matrix). This shrinkage reduces variance in estimates when training data is limited, preventing overfitting to noise in small datasets [1] [4].
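In symbols, one common form of this shrinkage is shown below; it is the form used with the Ledoit-Wolf estimator, which shrinks toward a scaled identity. Here $\hat{\Sigma}_c$ is the sample covariance of class $c$, $C$ is the number of channels, $\gamma$ is the regularization parameter, and $w$ is a spatial filter; the notation is ours. Setting $\gamma = 0$ recovers standard CSP, and larger $\gamma$ trades a little bias for lower estimation variance.

```latex
\tilde{\Sigma}_c = (1-\gamma)\,\hat{\Sigma}_c + \gamma\,\frac{\operatorname{tr}(\hat{\Sigma}_c)}{C}\,I_C,
\qquad 0 \le \gamma \le 1,
\qquad
\tilde{\Sigma}_1\, w = \lambda\,\bigl(\tilde{\Sigma}_1 + \tilde{\Sigma}_2\bigr)\, w
```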

Q2: What is the practical advantage of selecting frequency bands after CSP filtering as in tCSP? Traditional approaches select frequency bands before CSP, which may preserve irrelevant frequency components. tCSP applies CSP first, then selects discriminant frequency bands from the spatially filtered signals, better capturing subject-specific ERD/ERS patterns and improving accuracy by ~8% over standard CSP [23].

Q3: How many channels are typically sufficient for effective MI classification after optimization? While optimal counts vary by subject, multi-objective optimization typically identifies solutions with significantly reduced channels (often 8-15) while maintaining 85-95% of the accuracy achieved with full channel sets (32-118 electrodes) [24].

Q4: What are the key differences between DLRCSP and traditional CSP approaches? DLRCSP integrates deep learning with regularized CSP, automatically determining optimal regularization parameters and learning complex feature representations. This achieves more robust spatial filters and higher accuracy (>90% in multiple datasets) compared to traditional CSP [1] [4] [27].

Q5: How can researchers balance computational efficiency with classification accuracy? Implement hybrid approaches: use fast statistical methods for initial channel selection, then apply RCSP on reduced channel sets. Multi-objective optimization provides a Pareto front of solutions showing the explicit trade-offs, allowing researchers to choose appropriate operating points based on their specific requirements [24].

Navigating Practical Obstacles: From Data Quality to Model Generalization

FAQs: Addressing Core Challenges in Cross-Subject BCI

What is the primary source of subject-specific variability in EEG-based BCIs? Subject-specific variability in EEG signals stems from individual differences in brain anatomy, neural dynamics, electrode placement, and cognitive strategies during motor imagery. These factors cause significant distribution shifts across subjects, so models trained on pooled data often underperform when applied to new individuals [28].

How can we build accurate models with limited calibration data from a new user? Few-shot learning and meta-learning frameworks are designed specifically for this challenge. For instance, Task-Conditioned Prompt Learning (TCPL) generates subject-specific prompts from a few calibration samples, enabling rapid adaptation to new subjects with minimal data by modulating a stable, shared backbone network [28].

Are there methods to maintain performance while reducing the number of EEG channels? Yes, channel selection and optimization are key strategies. Methods include:

- Statistical Selection: A hybrid approach using t-tests with Bonferroni correction can exclude channels with low correlation coefficients (below 0.5), retaining only significant, non-redundant channels [1] [4].

- Optimization Algorithms: Particle Swarm Optimization (PSO) can identify a compact, optimal channel montage. One study achieved robust performance using only eight EEG channels [29].
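A minimal binary PSO sketch for channel selection follows. It assumes the sigmoid-transfer variant of binary PSO and uses a hypothetical fitness function; it is not the configuration used in [29]. In a real study, the fitness would be cross-validated accuracy on the selected montage, optionally penalized by channel count. The toy fitness here rewards three "informative" channels and charges a small cost per channel, so the best montage is known in advance.

```python
import numpy as np


def binary_pso(fitness, n_channels, n_particles=20, n_iter=50, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Maximize fitness(mask) over boolean channel masks with sigmoid-transfer binary PSO."""
    rng = np.random.default_rng(seed)
    pos = rng.random((n_particles, n_channels)) < 0.5
    vel = rng.uniform(-1, 1, (n_particles, n_channels))
    pbest = pos.copy()
    pbest_fit = np.array([fitness(m) for m in pos])
    g = pbest_fit.argmax()
    gbest, gbest_fit = pbest[g].copy(), pbest_fit[g]
    for _ in range(n_iter):
        r1, r2 = rng.random(vel.shape), rng.random(vel.shape)
        vel = (w * vel
               + c1 * r1 * (pbest.astype(float) - pos)
               + c2 * r2 * (gbest.astype(float) - pos))
        vel = np.clip(vel, -4.0, 4.0)                              # velocity clamping
        pos = rng.random(vel.shape) < 1.0 / (1.0 + np.exp(-vel))   # sigmoid transfer
        fit = np.array([fitness(m) for m in pos])
        improved = fit > pbest_fit
        pbest[improved], pbest_fit[improved] = pos[improved], fit[improved]
        if pbest_fit.max() > gbest_fit:
            g = pbest_fit.argmax()
            gbest, gbest_fit = pbest[g].copy(), pbest_fit[g]
    return gbest, gbest_fit


informative = [1, 3, 5]
weights = np.zeros(10)
weights[informative] = 1.0


def toy_fitness(mask):
    # Hypothetical stand-in for cross-validated accuracy minus a per-channel cost.
    return weights[mask].sum() - 0.1 * mask.sum()


best_mask, best_fit = binary_pso(toy_fitness, n_channels=10)
print(np.flatnonzero(best_mask), round(best_fit, 2))
```

The per-channel penalty is what pushes the swarm toward compact montages. Without it, adding an uninformative channel would cost nothing, and masks would drift toward the full set.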

Can we achieve high accuracy without subject-specific calibration? While full zero-shot performance is challenging, some approaches significantly reduce calibration needs. Using Large Language Models (LLMs) as denoising agents can help extract subject-independent semantic features from EEG signals, improving model generalization to unseen subjects [30].

Which deep learning architectures best handle cross-subject variability? Hybrid architectures that combine different strengths are often most effective:

- CNN-Transformer Models: Convolutional Neural Networks (CNNs) extract spatial features, while Transformers capture global dependencies and long-range interactions [28] [31].

- CNN-LSTM with Attention: CNNs extract spatial features, Long Short-Term Memory (LSTM) networks model temporal dynamics, and attention mechanisms selectively weight the most informative spatial and temporal features [31].
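The "selectively weight" step of an attention layer on top of CNN or LSTM features can be illustrated with additive attention pooling over time. The NumPy sketch below uses random, untrained parameters (`W_a` and `v` are hypothetical names) purely to show the shapes and the softmax weighting. In a real model, these parameters are learned jointly with the CNN-LSTM.

```python
import numpy as np


def softmax(z):
    z = z - z.max()  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()


def attention_pool(H, W_a, v):
    """H: (n_steps, d) hidden states -> (context (d,), weights (n_steps,)).

    Additive attention: score_t = v . tanh(W_a h_t); weights = softmax(scores).
    """
    scores = np.tanh(H @ W_a.T) @ v
    alpha = softmax(scores)
    return alpha @ H, alpha


rng = np.random.default_rng(0)
n_steps, d, d_att = 50, 32, 16  # e.g. 50 LSTM time steps, 32 hidden units
H = rng.standard_normal((n_steps, d))
W_a = rng.standard_normal((d_att, d)) / np.sqrt(d)
v = rng.standard_normal(d_att)
context, alpha = attention_pool(H, W_a, v)
print(context.shape, alpha.sum().round(6))
```

The weights `alpha` are non-negative and sum to one, so the context vector is a weighted average of time steps. Inspecting `alpha` after training shows which part of the imagery window the model relied on.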

Troubleshooting Guides: Solving Experimental Roadblocks

Problem: Poor Model Generalization to New Subjects

Symptoms: Your model performs well on the training subjects but shows a significant drop in accuracy when tested on new, unseen subjects.

Solutions: