Brain Neuroscience Metaheuristic Algorithms: Foundations and Breakthroughs in Biomedical Research

This article provides a comprehensive exploration of brain neuroscience metaheuristic algorithms, a class of optimization techniques inspired by the computational principles of the brain.


Abstract

This article provides a comprehensive exploration of brain neuroscience metaheuristic algorithms, a class of optimization techniques inspired by the computational principles of the brain. Aimed at researchers, scientists, and drug development professionals, it covers the foundational theories of neural population dynamics and their translation into powerful optimization algorithms. The scope extends to methodological applications in critical areas such as neuropharmacology, medical image analysis for brain tumors, and central nervous system drug discovery. The article further addresses troubleshooting and optimization strategies to enhance algorithm performance and provides a validation framework through comparative analysis with established methods. By synthesizing insights from foundational concepts to clinical implications, this review serves as a roadmap for leveraging these advanced computational tools to overcome complex challenges in biomedical research.

The Neural Blueprint: How Brain-Inspired Computation is Shaping Next-Generation Metaheuristics

Theoretical Foundations of Neural Population Dynamics

Neural Population Dynamics refers to the study of how the coordinated activity of populations of neurons evolves over time to implement computations. This framework posits that the brain's functions—ranging from motor control to decision-making—emerge from the temporal evolution of patterns of neural activity, conceptualized as trajectories within a high-dimensional state space [1]. The mathematical core of this framework is the dynamical system, described as:

\[ \frac{dx}{dt} = f(x(t), u(t)) \]

Here, \( x \) is an N-dimensional vector representing the firing rates of N neurons (the neural population state), and \( u \) represents external inputs to the network. The function \( f \), which captures the influence of the network's connectivity and intrinsic neuronal properties, defines a flow field that governs the possible paths (neural trajectories) the population state can take [2] [1]. A key prediction of this view is that these trajectories are not arbitrary; they are structured and constrained by the underlying network, making them difficult to violate or reverse voluntarily [2] [3]. This robustness of neural trajectories against perturbation is the fundamental property from which optimization inspiration can be drawn.
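To make the notion of a flow field concrete, the following minimal sketch integrates a dynamical system of the form dx/dt = f(x, u) with forward-Euler steps. The specific choice of f (a leaky rate network with a tanh nonlinearity), the weight matrix `W`, the time constant `tau`, and the step size are all illustrative assumptions and are not taken from the cited studies.

```python
import math

def f(x, u, W, tau=1.0):
    """Hypothetical flow field: dx/dt = (-x + tanh(W x + u)) / tau (an assumption)."""
    n = len(x)
    return [
        (-x[i] + math.tanh(sum(W[i][j] * x[j] for j in range(n)) + u[i])) / tau
        for i in range(n)
    ]

def simulate(x0, u, W, dt=0.01, steps=1000):
    """Forward-Euler integration; returns the trajectory as a list of states."""
    x = list(x0)
    traj = [list(x)]
    for _ in range(steps):
        dx = f(x, u, W)
        x = [xi + dt * dxi for xi, dxi in zip(x, dx)]
        traj.append(list(x))
    return traj

# Two-unit example with weak rotational coupling and no external input.
W = [[0.0, -0.5], [0.5, 0.0]]
traj = simulate([1.0, 0.0], [0.0, 0.0], W)
```

With this weak coupling the origin is the only fixed point, so every trajectory spirals into it along a path fixed by `W`: the state cannot take an arbitrary route, which is the sense in which the flow field constrains trajectories.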

Core Principles for Optimization Inspiration

The constrained nature of neural population dynamics offers several core principles that can inform the design of robust optimization algorithms, contrasting with traditional bio-inspired metaheuristics.

Table 1: Core Dynamical Principles and Their Optimization Analogues

| Neural Dynamical Principle | Description | Potential Optimization Insight |
|---|---|---|
| Constrained Trajectories | Neural activity follows stereotyped, "one-way" paths through state space that are difficult to reverse or alter [2] [3]. | Algorithms could embed solution paths that efficiently guide the search process, preventing oscillations and unproductive explorations. |
| Underlying Flow Field | The temporal evolution of population activity is shaped by a latent structure (the flow field) defined by network connectivity [2] [1]. | The search process in an optimizer can be governed by a learned or predefined global structure, rather than purely local, stochastic moves. |
| Computation through Dynamics | The transformation of inputs (sensation) into outputs (action) occurs as the natural evolution of the system's state along its dynamical landscape [1]. | Optimization can be framed as the evolution of a solution state within a dynamical system, where the objective function shapes the landscape. |
| Robustness to Perturbation | Neural trajectories maintain their characteristic paths even when the system is challenged to do otherwise [2]. | Optimization algorithms can be designed to be inherently resistant to noise and to avoid being trapped by local optima. |

Unlike many existing nature-inspired algorithms that simulate the surface-level behavior of animals (e.g., hunting, foraging) [4], an inspiration drawn from neural dynamics focuses on a deeper, mechanistic principle of computation. This principle emphasizes how a system's internal structure naturally gives rise to a constrained, efficient, and robust search process in a high-dimensional space.

Key Experimental Evidence and Methodologies

Empirical support for the constrained nature of neural trajectories comes from pioneering brain-computer interface (BCI) experiments, which provide a causal test of these principles.

Experimental Paradigm for Probing Dynamics

A key study challenged non-human primates to volitionally alter the natural time courses of their neural population activity in the motor cortex [2] [3]. The experimental workflow is detailed below.

Detailed Experimental Protocol

1. Subject Preparation and Neural Recording:

- Implant: A multi-electrode array is surgically implanted in the primary motor cortex (M1) of a rhesus monkey.

- Neural Signals: Extracellular action potentials are recorded from approximately 90 neural units (a mix of single-unit and multi-unit recordings) [2].

2. Neural Latent State Extraction:

- Preprocessing: Spike counts are binned in small time windows (e.g., 10-50 ms).

- Dimensionality Reduction: A causal variant of Gaussian Process Factor Analysis (GPFA) is applied to the binned spike counts from all recorded units to extract a smooth, 10-dimensional (10D) latent state in real-time [2]. This low-dimensional state is believed to capture the behaviorally relevant dynamics of the population.

3. Brain-Computer Interface (BCI) Mapping:

- Mapping Function: A linear mapping transforms the 10D latent state into the 2D position of a computer cursor. This "position mapping" is critical as it makes the temporal structure of the neural activity directly visible to the subject [2].

- Initial Mapping (MoveInt): The initial mapping is calibrated so the cursor movement is intuitive and aligns with the subject's movement intention.

4. Identifying Natural Neural Trajectories:

- Task: The subject performs a standard two-target center-out BCI task, moving the cursor from a center target to peripheral targets (A and B).

- Trajectory Analysis: The 10D neural trajectories for movements from A→B and B→A are analyzed. Although these movements may appear similar in the intuitive "MoveInt" 2D projection, they are often distinct in the full 10D space [2].

- SepMax Projection: A separate 2D projection (the "SepMax" view) is mathematically identified to maximize the visual separation between the A→B and B→A neural trajectories [2].

5. Challenging the Neural Dynamics:

- The subject is then challenged in a series of phases. In the critical test, they are given a reward-based incentive to traverse the natural neural trajectory in a time-reversed direction. The finding is that subjects are unable to produce these reversed or otherwise violated trajectories, indicating that the paths are fundamental constraints of the network [2] [3].
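Two of the simpler steps in the protocol above, binning spike counts and applying a linear position mapping, can be sketched as follows. This is only an illustration: the function names, the example spike times, and the mapping matrix `M` are hypothetical, and the latent-state extraction itself (causal GPFA) is not implemented here.

```python
def bin_spike_counts(spike_times, t_start, t_stop, bin_width):
    """Count spikes per unit in consecutive bins.

    spike_times: one list of spike times (seconds) per recorded unit.
    Returns counts[unit][bin].
    """
    n_bins = int(round((t_stop - t_start) / bin_width))
    counts = [[0] * n_bins for _ in spike_times]
    for unit, times in enumerate(spike_times):
        for t in times:
            b = int((t - t_start) // bin_width)
            if 0 <= b < n_bins:
                counts[unit][b] += 1
    return counts

def position_mapping(latent, M, offset):
    """Linear position mapping: cursor = M @ latent + offset.

    In the experiment the latent state is 10-dimensional and the cursor
    position is 2-dimensional, so M would be a 2 x 10 matrix.
    """
    return [sum(m * z for m, z in zip(row, latent)) + o
            for row, o in zip(M, offset)]

# Two units, 20 ms bins over a 60 ms window (made-up spike times).
counts = bin_spike_counts([[0.005, 0.015, 0.045], [0.031]], 0.0, 0.06, 0.02)
```

Because the mapping is a fixed linear projection of the latent state to cursor position, the time course of the neural trajectory is directly visible on screen, which is what lets the experiment test whether that time course can be altered.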

The Scientist's Toolkit: Essential Research Reagents

Table 2: Key Reagents and Tools for Neural Population Dynamics Research

| Item | Function in Experiment |
|---|---|
| Multi-electrode Array | A chronic neural implant (e.g., Utah Array) for long-term, stable recording of action potentials from dozens to hundreds of neurons simultaneously [2]. |
| Causal Gaussian Process Factor Analysis (GPFA) | A dimensionality reduction technique used in real-time to extract smooth, low-dimensional latent states from high-dimensional, noisy spike count data [2]. |
| Brain-Computer Interface (BCI) Decoder | The mapping function (e.g., linear transformation) that converts the neural latent state into a control signal, such as cursor velocity or position [2] [3]. |
| Behavioral Task & Reward System | Software and hardware to present visual targets and cues to the subject, and to deliver liquid or food rewards as incentive for correct task performance [2]. |
| Privileged Knowledge Distillation (BLEND Framework) | A computational framework that uses behavior as "privileged information" during training to improve models that predict neural dynamics from neural data alone [5]. |

A Conceptual Framework for Dynamics-Inspired Optimization

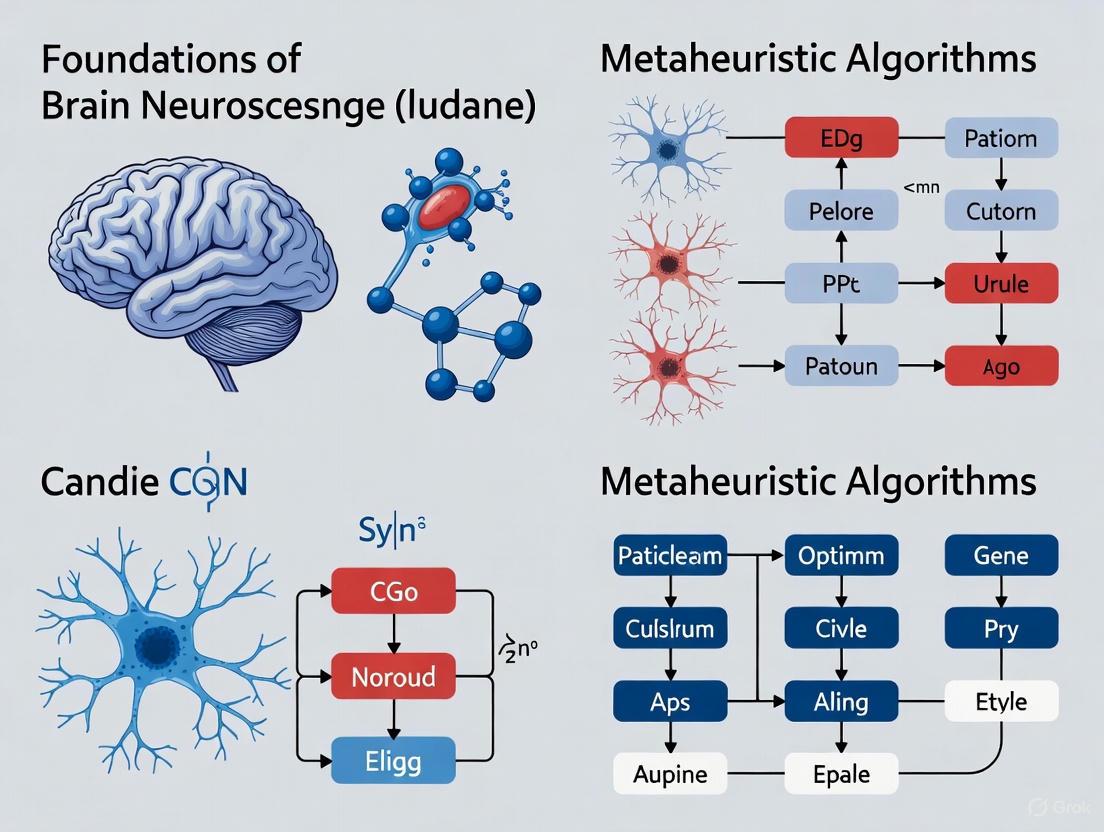

The following diagram illustrates how the principles of constrained neural dynamics can be abstracted into a general framework for optimization.

This framework suggests that an optimization algorithm can be built around a Dynamical Core, analogous to the neural network function \( f \). This core, informed by the problem's objective, creates a structured flow field within the search space. Potential solutions are not generated randomly but evolve as trajectories within this field. These trajectories are inherently constrained, preventing off-path exploration and efficiently guiding the search toward a robust solution, much like neural activity is guided to produce specific motor outputs. This stands in contrast to algorithms that rely on extensive stochastic exploration and may be more prone to getting sidetracked in complex landscapes. Future research at this intersection will involve formalizing the mathematics of such a dynamical core and benchmarking its performance against established metaheuristics.
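One very simple way to picture such a dynamical core, offered purely as an assumption since the text leaves the formalization to future work, is to let the flow field be the negative gradient of the objective and let a candidate solution evolve along it. The function names and the finite-difference gradient below are illustrative choices.

```python
def numerical_gradient(objective, x, h=1e-6):
    """Central-difference estimate of the gradient of objective at x."""
    grad = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        grad.append((objective(xp) - objective(xm)) / (2 * h))
    return grad

def follow_flow(objective, x0, dt=0.05, steps=500):
    """Evolve a solution state along the flow field f(x) = -grad objective(x)."""
    x = list(x0)
    for _ in range(steps):
        g = numerical_gradient(objective, x)
        x = [xi - dt * gi for xi, gi in zip(x, g)]
    return x

def sphere(x):
    """Convex test objective with its minimum at the origin."""
    return sum(v * v for v in x)

solution = follow_flow(sphere, [3.0, -2.0])
```

A pure gradient flow like this is fully constrained and has no exploration, so on a multimodal objective it would stop at the nearest minimum; a practical design would need a richer flow field or an added perturbation mechanism.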

The study of neural population dynamics represents a paradigm shift in neuroscience, moving beyond the characterization of single neurons to understanding how collective neural activity gives rise to perception, cognition, and action. This perspective has revealed that the brain does not rely on highly specialized, categorical neurons but rather on dynamic networks in which task parameters and temporal response features are distributed randomly across neurons [6]. Such configurations form a flexible computational substrate that can be decoded in multiple ways according to behavioral demands.

Concurrently, these discoveries in theoretical neuroscience have inspired a new class of metaheuristic algorithms that mimic the brain's efficient decision-making processes. The Neural Population Dynamics Optimization Algorithm (NPDOA) exemplifies this translation, implementing computational strategies directly informed by the attractor dynamics and population coding observed in biological neural systems [7]. This whitepaper examines the core principles governing neural population dynamics and their application to algorithm development, providing researchers with both theoretical foundations and practical experimental methodologies.

Theoretical Foundations of Neural Population Coding

Category-Free Coding and Mixed Selectivity

Contrary to traditional views of specialized neural categories, emerging evidence indicates that neurons in association cortices exhibit mixed selectivity, where individual neurons are modulated by multiple task parameters simultaneously [6]. In the posterior parietal cortex (PPC), for instance, task parameters and temporal response features are distributed randomly across neurons without evidence of distinct categories. This organization creates a dynamic network that can be decoded according to the animal's current needs, providing remarkable computational flexibility.

The category-free hypothesis suggests that when parameters are distributed randomly across neurons, an arbitrary group can be linearly combined to estimate whatever parameter is needed at a given moment [6]. This configuration obviates the need for precisely pre-patterned connections between neurons and their downstream targets, allowing the same network to participate in multiple behaviors through different readout mechanisms. Theoretical work indicates this architecture provides major advantages for flexible information processing.
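The linear-readout argument can be illustrated with a toy population. In this sketch each neuron's rate is a random linear mixture of two task parameters, and a least-squares decoder recovers both parameters from any sufficiently large subgroup. The Gaussian mixing weights, the noise-free linear responses, and the restriction to two parameters are simplifying assumptions made for the example.

```python
import random

def make_population(n_neurons, n_params, rng):
    """Random mixing weights: every neuron is tuned to every task parameter."""
    return [[rng.gauss(0.0, 1.0) for _ in range(n_params)]
            for _ in range(n_neurons)]

def population_response(W, params):
    """Noise-free firing rates as linear mixtures of the task parameters."""
    return [sum(w * p for w, p in zip(row, params)) for row in W]

def linear_readout(W, rates):
    """Least-squares decoder for two parameters: solves (W^T W) p = W^T r."""
    a = sum(r[0] * r[0] for r in W)
    b = sum(r[0] * r[1] for r in W)
    d = sum(r[1] * r[1] for r in W)
    y0 = sum(r[0] * x for r, x in zip(W, rates))
    y1 = sum(r[1] * x for r, x in zip(W, rates))
    det = a * d - b * b
    return [(d * y0 - b * y1) / det, (a * y1 - b * y0) / det]

rng = random.Random(0)
W = make_population(20, 2, rng)
rates = population_response(W, [0.7, -1.2])
full_estimate = linear_readout(W, rates)           # all 20 neurons
subset_estimate = linear_readout(W[:8], rates[:8])  # an arbitrary subgroup
```

Both estimates recover the same parameters, which is the point of the hypothesis: no particular neuron is required, only a weighted combination of whichever neurons the downstream area reads from.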

Population Geometry and Dynamics

Neural populations encode cognitive variables through coordinated activity patterns where tuning curves of single neurons define the geometry of the population code [8]. This principle, well-established in sensory areas, also applies to dynamic cognitive variables. The geometry of population activity refers to the shape of trajectories in the high-dimensional state space of neural activity, which reflects the underlying computational processes.

Recent research reveals that neural population dynamics during decision-making explore different dimensions during distinct behavioral phases (decision formation versus movement) [6]. This dynamic reconfiguration allows the same neural population to support evolving behavioral demands. The latent dynamics approach models neural activity on single trials as arising from a dynamic latent variable, with each neuron having a unique tuning function to this variable, analogous to sensory tuning curves [8].

Neural Dynamics of Decision-Making

Attractor Dynamics in Decision Circuits

Decision-making neural circuits often implement attractor dynamics where population activity evolves toward stable states corresponding to particular choices [8]. These dynamics can be described by potential functions that define deterministic forces in latent dynamical systems:

\[ \frac{dx}{dt} = -D \frac{d\Phi(x)}{dx} + \sqrt{2D}\, \xi(t) \]

where Φ(x) is the potential function defining attractor states, D is the noise magnitude, and ξ(t) represents Gaussian white noise accounting for stochasticity in latent trajectories [8].
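A latent trajectory of this kind can be simulated with the Euler-Maruyama method. The sketch below assumes the common overdamped Langevin form, in which the drift is -D dΦ/dx and the noise increment has standard deviation sqrt(2 D dt). The double-well potential, which gives two attractors loosely corresponding to two choices, is a hypothetical example rather than a potential fitted to data.

```python
import math
import random

def dphi(x):
    """Derivative of a hypothetical double-well potential Phi(x) = x**4/4 - x**2/2."""
    return x ** 3 - x

def simulate_latent(x0, D, dt, steps, rng):
    """Euler-Maruyama integration of dx = -D * Phi'(x) dt + sqrt(2 D dt) * N(0, 1)."""
    x = x0
    path = [x]
    for _ in range(steps):
        x += -D * dphi(x) * dt + math.sqrt(2.0 * D * dt) * rng.gauss(0.0, 1.0)
        path.append(x)
    return path

# One stochastic single-trial trajectory starting between the two wells.
path = simulate_latent(0.0, 1.0, 0.001, 5000, random.Random(0))
```

The minima of Φ at x = -1 and x = +1 act as the attractors. Each trial is pulled toward one of them by the deterministic force while the noise term decides which basin it enters and lets it occasionally cross between them.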

In the primate dorsal premotor cortex (PMd) during perceptual decision-making, neural population dynamics encode a one-dimensional decision variable, with heterogeneous neural responses arising from diverse tuning of single neurons to this shared decision variable [8]. This architecture allows complex-appearing neural responses to implement relatively simple computational principles at the population level.

Cross-Area Communication and Network Flexibility

Neural populations demonstrate remarkable flexibility, reconfiguring their communication patterns based on behavioral demands. During multisensory decision tasks, the posterior parietal cortex (PPC) shows differential functional connectivity with sensory and motor areas depending on whether the animal is forming decisions or executing movements [6]. This dynamic network reconfiguration enables the same neural population to support multiple cognitive operations through different readout mechanisms.

The information projection strategy observed in biological neural systems regulates information transmission between neural populations, enabling transitions between exploration and exploitation phases [7]. This principle has been directly implemented in metaheuristic algorithms where information projection controls communication between computational neural populations.

Experimental Methods and Protocols

Neural Recording During Decision-Making Tasks

Behavioral Paradigm: Researchers typically employ multisensory decision tasks where animals report judgments about auditory clicks and/or visual flashes presented over a 1-second decision formation period [6]. Stimulus difficulty is manipulated by varying event rates relative to an experimenter-imposed category boundary, and animals report decisions via directional movements to choice ports.

Neural Recording Techniques: Linear multi-electrode arrays are commonly used to record spiking activity from cortical areas such as the posterior parietal cortex (PPC) or dorsal premotor cortex (PMd) during decision-making behavior [6] [8]. These techniques enable simultaneous monitoring of dozens to hundreds of individual neurons across multiple cortical layers or regions.

Table 1: Key Experimental Components for Neural Population Studies

| Component | Specification | Function |
|---|---|---|
| Multi-electrode arrays | 32-128 channels, linear or tetrode configurations | Simultaneous recording from multiple single neurons |
| Behavioral task apparatus | Ports for stimulus presentation and response collection | Controlled presentation of sensory stimuli and measurement of behavioral choices |
| Muscimol | GABA-A receptor agonist, 1-5 mM in saline | Temporary pharmacological inactivation of specific brain regions |
| DREADD | Designer Receptors Exclusively Activated by Designer Drugs | Chemogenetic inactivation with precise spatial targeting |
| Data acquisition system | 30 kHz sampling rate per channel | High-resolution capture of neural signals |
| Spike sorting software | Kilosort, MountainSort, or similar | Identification of single-neuron activity from raw recordings |

Neural Population Analysis Framework

Latent Dynamical Systems Modeling: This approach involves modeling neural activity on single trials as arising from a dynamic latent variable x(t), with each neuron i having a unique tuning function f_i(x) to this latent variable [8]. The dynamics are governed by a potential function Φ(x) that defines deterministic forces, along with stochastic components.

Inference Procedure: Models are fit to spike data by maximizing the likelihood of observed spiking activity given the latent dynamics model. This involves simultaneously inferring the functions Φ(x), p_0(x) (initial state distribution), f_i(x) (tuning functions), and noise magnitude D [8]. The flexible inference framework dissociates the dynamics and geometry of neural representations.

State-Space Analysis: Novel state-space approaches reveal how neural networks explore different dimensions during distinct behavioral demands like decision formation and movement execution [6]. This analysis tracks the evolution of population activity through a reduced-dimensional state space.

From Neural Principles to Metaheuristic Algorithms

Neural Population Dynamics Optimization Algorithm

The Neural Population Dynamics Optimization Algorithm (NPDOA) represents a direct translation of neural population principles to computational optimization [7]. This brain-inspired metaheuristic implements three core strategies derived from neuroscience:

Attractor Trending Strategy: Drives neural populations toward optimal decisions, ensuring exploitation capability by converging toward stable states associated with favorable decisions [7].

Coupling Disturbance Strategy: Deviates neural populations from attractors by coupling with other neural populations, improving exploration ability through controlled disruption of convergence [7].

Information Projection Strategy: Controls communication between neural populations, enabling transition from exploration to exploitation by regulating information flow [7].

In NPDOA, each decision variable in a solution represents a neuron, and its value represents the firing rate. The algorithm simulates activities of interconnected neural populations during cognition and decision-making, with neural states transferred according to neural population dynamics [7].
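The interplay of the three strategies can be sketched as a small population-based optimizer. The update rules below are illustrative stand-ins written for this example and are not the published NPDOA equations from [7]: attractor trending is modeled as a random step toward the current best population, coupling disturbance as a random step relative to a randomly chosen partner, and information projection as a weight `w` that shifts linearly from disturbance to trending. The function name `npdoa_like`, the greedy acceptance rule, and all parameter defaults are assumptions.

```python
import random

def sphere(x):
    """Simple test objective with its minimum at the origin."""
    return sum(v * v for v in x)

def npdoa_like(objective, dim, n_pop=20, iters=200, lo=-5.0, hi=5.0, seed=0):
    """Illustrative optimizer loosely following the three NPDOA strategies."""
    rng = random.Random(seed)
    pops = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_pop)]
    fits = [objective(p) for p in pops]
    history = [min(fits)]
    for t in range(iters):
        # Information projection (assumed form): weight moves from 0 to 1,
        # shifting influence from disturbance (exploration) to trending.
        w = (t + 1) / iters
        best = pops[fits.index(min(fits))]
        for i in range(n_pop):
            partner = pops[rng.randrange(n_pop)]
            candidate = []
            for d in range(dim):
                # Attractor trending: pull toward the best state found so far.
                trend = rng.random() * (best[d] - pops[i][d])
                # Coupling disturbance: push relative to another population.
                disturb = rng.uniform(-1.0, 1.0) * (partner[d] - pops[i][d])
                value = pops[i][d] + w * trend + (1.0 - w) * disturb
                candidate.append(min(hi, max(lo, value)))
            f_candidate = objective(candidate)
            if f_candidate < fits[i]:  # greedy acceptance (an assumption)
                pops[i], fits[i] = candidate, f_candidate
        history.append(min(fits))
    k = fits.index(min(fits))
    return pops[k], fits[k], history

best_x, best_f, history = npdoa_like(sphere, dim=5)
```

Here each entry of a population's vector plays the role of one neuron's firing rate, matching the encoding described above. Because acceptance is greedy, the best objective value in `history` never increases from one iteration to the next.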

Comparative Performance of Brain-Inspired Algorithms

Table 2: Comparison of Neural Population Dynamics Optimization with Traditional Metaheuristics

| Algorithm | Inspiration Source | Exploration Mechanism | Exploitation Mechanism | Application Domains |
|---|---|---|---|---|
| NPDOA | Neural population dynamics | Coupling disturbance strategy | Attractor trending strategy | Benchmark problems, engineering design [7] |
| Genetic Algorithm | Biological evolution | Mutation, crossover | Selection, survival of fittest | Discrete optimization problems [7] |
| Particle Swarm Optimization | Bird flocking | Individual and social cognition | Following local/global best particles | Continuous optimization [7] |
| Differential Evolution | Evolutionary strategy | Mutation based on vector differences | Crossover and selection | Deceptive problems, image processing [9] |
| Simulated Annealing | Thermodynamics | Probabilistic acceptance of worse solutions | Gradual temperature reduction | Combinatorial optimization [7] |

Research Reagents and Computational Tools

Essential Research Reagents

Table 3: Key Research Reagent Solutions for Neural Population Studies

| Reagent/Tool | Composition/Specification | Experimental Function |
|---|---|---|
| Muscimol solution | GABA-A receptor agonist, 1-5 mM in sterile saline | Reversible pharmacological inactivation of brain regions to establish causal involvement [6] |
| DREADD ligands | Designer drugs (e.g., CNO) specific to engineered receptors | Chemogenetic manipulation of neural activity with temporal control [6] |
| Multi-electrode arrays | 32-128 channels, various configurations | Large-scale recording of neural population activity with single-neuron resolution [6] [8] |
| Spike sorting algorithms | Software for isolating single-neuron activity | Identification of individual neurons from extracellular recordings [8] |
| Latent dynamics modeling framework | Custom MATLAB/Python code | Inference of latent decision variables from population spiking activity [8] |

Visualization of Neural Population Principles

Neural Decision Dynamics Workflow

[Diagram not reproduced: the integrated experimental and computational workflow for studying neural population dynamics during decision-making.]

Neural Population Optimization Architecture

[Diagram not reproduced: the three core strategies of the Neural Population Dynamics Optimization Algorithm.]

The study of neural population dynamics has revealed fundamental principles of collective neural computation that bridge single neuron properties and system-level cognitive functions. The category-free organization of neural representations, combined with attractor dynamics and flexible population coding, provides a powerful framework for understanding how brains implement complex computations.

These neuroscientific principles have already inspired novel metaheuristic algorithms such as NPDOA, which is reported to perform competitively with, and on some benchmark problems better than, traditional approaches [7]. Future research directions include developing more detailed models of cross-area neural communication, understanding how multiple parallel neural populations interact, and translating these insights into more efficient and adaptive artificial intelligence systems.

The continued dialogue between neuroscience and algorithm development promises mutual benefits: neuroscientists can develop better models of neural computation, while computer scientists can create more powerful brain-inspired algorithms. This interdisciplinary approach represents a frontier in understanding both natural and artificial intelligence.

The attractor trending strategy is a foundational component of brain-inspired metaheuristic algorithms, modeling the brain's inherent ability to converge toward optimal decisions. This computational framework treats cognitive processes as trajectories on a high-dimensional energy landscape, where neural activity evolves toward stable, low-energy configurations known as attractor states. Grounded in theoretical neuroscience and supported by empirical validation across multiple domains, this strategy provides a biologically plausible mechanism for balancing exploration and exploitation in complex optimization problems. This technical whitepaper examines the mathematical foundations, neuroscientific evidence, and practical implementations of attractor trending strategies, with particular emphasis on their potential in drug development and pharmaceutical research, where optimal decision-making under uncertainty is paramount.

The human brain demonstrates remarkable efficiency in processing diverse information types and converging toward favorable decisions across varying contexts [10]. This cognitive capability finds its computational analog in attractor dynamics, where neural network activity evolves toward stable states that represent resolved decisions, memories, or perceptual interpretations. In theoretical neuroscience, attractor states constitute low-energy configurations in the neural state space toward which nearby activity patterns naturally converge [11]. This convergence property provides a mechanistic explanation for various cognitive functions, including memory recall, pattern completion, and decision-making.

The attractor trending strategy formalizes this biological principle into a computational mechanism for optimization. Within metaheuristic algorithms, this strategy drives candidate solutions toward promising regions of the search space, analogous to neural populations converging toward states associated with optimal decisions [10]. This process is governed by the underlying network architecture, whether biological neural circuits or artificial optimization frameworks, which defines an energy landscape whose minima correspond to optimal solutions. The attractor trending strategy effectively performs a descent on this landscape, guiding the system toward increasingly optimal configurations. The flexibility to escape shallow local minima does not come from the descent itself but from complementary perturbation mechanisms, such as the coupling disturbance strategy in NPDOA.

Mathematical Foundations of Attractor Trending

Fundamental Equations and Energy Formulation

The mathematical foundation of attractor trending derives from dynamical systems theory, particularly Hopfield network models. In these frameworks, a network of \( n \) neural units exhibits an energy function that decreases as the system evolves toward attractor states. For a functional connectome-based Hopfield Neural Network (fcHNN), the energy function takes the form:

\[ E = -\frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} w_{ij} \alpha_i \alpha_j + \sum_{i=1}^{n} b_i \alpha_i \]

where \( w_{ij} \) represents the connection strength between regions \( i \) and \( j \), \( \alpha_i \) denotes the activity level of region \( i \), and \( b_i \) represents bias terms [11]. The system dynamics follow an update rule that minimizes this energy function:

\[ \alpha_i' = S\left(\beta \sum_{j=1}^{n} w_{ij} \alpha_j + b_i\right) \]

where \( S(\alpha) = \tanh(\alpha) \) serves as a sigmoidal activation function constraining activity values to the range \( [-1, 1] \), and \( \beta \) represents a temperature parameter controlling the stochasticity of the dynamics [11].
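The energy function and update rule above translate directly into code. The sketch below implements both as written and adds a simple relaxation loop that iterates the update until the state stops changing. The two-region weight matrix, the zero biases, the synchronous update order, and the stopping tolerance are illustrative assumptions; a real fcHNN would take `W` from empirical functional connectivity.

```python
import math

def energy(alpha, W, b):
    """E = -1/2 * sum_ij w_ij a_i a_j + sum_i b_i a_i (sign convention as in the text)."""
    n = len(alpha)
    pair = sum(W[i][j] * alpha[i] * alpha[j] for i in range(n) for j in range(n))
    return -0.5 * pair + sum(bi * ai for bi, ai in zip(b, alpha))

def update(alpha, W, b, beta=1.0):
    """One synchronous step: a_i' = tanh(beta * sum_j w_ij a_j + b_i)."""
    n = len(alpha)
    return [math.tanh(beta * sum(W[i][j] * alpha[j] for j in range(n)) + b[i])
            for i in range(n)]

def relax(alpha, W, b, beta=1.0, tol=1e-9, max_iter=1000):
    """Iterate the update rule until the state changes by less than tol."""
    for _ in range(max_iter):
        new = update(alpha, W, b, beta)
        if max(abs(x - y) for x, y in zip(new, alpha)) < tol:
            return new
        alpha = new
    return alpha

# Two mutually excitatory regions with no bias (hypothetical values).
W = [[0.0, 1.0], [1.0, 0.0]]
b = [0.0, 0.0]
start = [0.5, 0.2]
attractor = relax(start, W, b, beta=2.0)
```

In this small example the state settles where both activities satisfy a = tanh(2a), and the energy of the final state is lower than that of the starting state. For general weights and synchronous updates a monotonic energy decrease is not guaranteed, so the loop checks for convergence of the state rather than of the energy.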

Convergence Properties and Stability Analysis

The convergence behavior of attractor trending strategies exhibits distinct mathematical characteristics that can be categorized by their asymptotic properties:

Table: Mathematical Characterization of Attractor Convergence Patterns

| Convergence Type | Mathematical Definition | Biological Interpretation | Optimization Analogy |
|---|---|---|---|
| Fad Dynamics | Finite-time extinction (\( I(t) = 0 \) for \( t > T \)) | Short-lived neural assemblies for transient tasks | Rapid convergence to local optima |
| Fashion Dynamics | Exponential decay (\( I(t) \sim e^{-\lambda t} \)) | Balanced persistence for medium-term cognitive tasks | Balanced exploration-exploitation |
| Classic Dynamics | Polynomial decay (\( I(t) \sim t^{-\gamma} \)) | Stable attractors for long-term memory | Convergence to global optima |

These convergence patterns emerge from the structure of the underlying dynamics, particularly the nonlinear rejection rates in the governing equations [12]. In the context of metaheuristic optimization, these mathematical properties enable algorithm designers to tailor the convergence characteristics to specific problem domains, balancing the tradeoff between solution quality and computational efficiency.

Neuroscientific Evidence and Experimental Validation

Empirical Support from Neuroimaging Studies

Recent advances in neuroimaging have provided compelling evidence for attractor dynamics in large-scale brain networks. Research utilizing functional connectome-based Hopfield Neural Networks (fcHNNs) demonstrates that empirical functional connectivity data successfully predicts brain activity across diverse conditions, including resting states, task performance, and pathological states [11]. These models accurately reconstruct characteristic patterns of brain dynamics by conceptualizing neural activity as trajectories on an energy landscape defined by the connectome architecture.

In one comprehensive analysis of seven distinct neuroimaging datasets, fcHNNs initialized with functional connectivity weights accurately reproduced resting-state dynamics and predicted task-induced activity changes [11]. This approach establishes a direct mechanistic link between connectivity and activity, positioning attractor states as fundamental organizing principles of brain function. The accuracy of these reconstructions across multiple experimental conditions underscores the biological validity of attractor-based models.

Cellular-Level Evidence from Neural Cultures

At the microscale, studies of cultured cortical networks provide direct evidence for discrete attractor states in neural dynamics. Research has identified reproducible spatiotemporal patterns during spontaneous network bursts that function as discrete transient attractors [13]. These patterns demonstrate key properties of attractor dynamics:

- Basins of attraction: Similar initial conditions converge toward identical spatiotemporal patterns

- Discreteness: A finite repertoire of patterns repeats across time

- Stability: Patterns persist despite minor perturbations

Experimental manipulation of these networks through electrical stimulation reveals that attractor landscapes exhibit experience-dependent plasticity. Stimulating specific attractor patterns paradoxically reduces their spontaneous occurrence while preserving their evoked expression, indicating targeted modification of the underlying energy landscape [13]. This plasticity mechanism operates through Hebbian-like strengthening of specific pathways into attractors, accompanied by weakening of alternative routes to the same states.

Implementation in Metaheuristic Optimization

Neural Population Dynamics Optimization Algorithm (NPDOA)

The Neural Population Dynamics Optimization Algorithm (NPDOA) represents a direct implementation of attractor principles in optimization frameworks. This brain-inspired metaheuristic incorporates three core strategies governing population dynamics [10]:

- Attractor Trending Strategy: Drives neural populations toward optimal decisions, ensuring exploitation capability

- Coupling Disturbance Strategy: Deviates neural populations from attractors through coupling with other populations, improving exploration ability

- Information Projection Strategy: Controls communication between neural populations, enabling transition from exploration to exploitation

In NPDOA, each candidate solution corresponds to a neural population, with decision variables representing neuronal firing rates. The algorithm simulates activities of interconnected neural populations during cognition and decision-making, with neural states evolving according to population dynamics derived from neuroscience principles [10].

Performance Benchmarks and Comparative Analysis

Empirical evaluations demonstrate that NPDOA achieves competitive performance across diverse benchmark problems and practical engineering applications. Systematic comparisons with nine established metaheuristic algorithms on standard test suites reveal NPDOA's particular effectiveness on problems with complex landscapes and multiple local optima [10].

Table: NPDOA Performance on Engineering Design Problems

| Problem Domain | Key Performance Metrics | Comparative Advantage |

|---|---|---|

| Compression Spring Design | Convergence rate, Solution quality | Balanced exploration-exploitation |

| Cantilever Beam Design | Constraint satisfaction, Optimal weight | Effective handling of design constraints |

| Pressure Vessel Design | Manufacturing feasibility, Cost minimization | Superior avoidance of local optima |

| Welded Beam Design | Structural efficiency, Resource utilization | Robustness across parameter variations |

The algorithm's effectiveness stems from its biologically plausible balance between convergence toward promising solutions (exploitation) and maintenance of population diversity (exploration), mirroring the brain's ability to navigate complex decision spaces.

Experimental Protocols and Methodologies

Protocol 1: Mapping Attractor Landscapes in Neural Data

Objective: Characterize attractor dynamics in empirical neural activity data.

Materials:

- Multi-electrode array (MEA) system with 120 electrodes

- Cultured cortical neurons (18-21 days in vitro)

- Extracellular recording equipment

- Clustering and dimensionality reduction software

Procedure:

- Record spontaneous network bursts (occurring at roughly 8±4 bursts per minute)

- Define network bursts based on threshold-crossing of summed activity

- Represent each burst as spatiotemporal pattern in 120-dimensional space

- Project patterns to 2D space using physical center of mass

- Cluster bursts based on similarity measures (2D correlation, latency, participation)

- Identify attractors as clusters with high internal consistency

- Validate basins of attraction by correlating initial conditions with final patterns

Analysis: Quantify attractor stability through convergence metrics and perturbation responses [13].
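The first four procedure steps can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the protocol's actual analysis code: it assumes spike counts already binned into a matrix of time bins by 120 electrodes, a hypothetical 12 x 10 electrode grid, and a simplified burst representation that collapses each burst over time into one count per electrode. All function names and the threshold value are illustrative.

```python
N_ELECTRODES = 120
GRID_COLS = 12  # hypothetical 12 x 10 layout; a real MEA geometry will differ


def detect_bursts(counts, threshold):
    """Return (start, end) bin indices where summed activity >= threshold (end exclusive)."""
    summed = [sum(row) for row in counts]
    bursts, start = [], None
    for t, total in enumerate(summed):
        if total >= threshold and start is None:
            start = t                      # burst onset: threshold crossed upward
        elif total < threshold and start is not None:
            bursts.append((start, t))      # burst offset: activity fell below threshold
            start = None
    if start is not None:
        bursts.append((start, len(summed)))
    return bursts


def burst_pattern(counts, start, end):
    """Collapse a burst into a 120-dimensional vector of per-electrode spike counts."""
    return [sum(counts[t][e] for t in range(start, end)) for e in range(N_ELECTRODES)]


def center_of_mass(pattern):
    """Project a pattern to 2D as the activity-weighted mean electrode position."""
    total = sum(pattern)
    x = sum(w * (e % GRID_COLS) for e, w in enumerate(pattern)) / total
    y = sum(w * (e // GRID_COLS) for e, w in enumerate(pattern)) / total
    return x, y


# Synthetic recording: silence, with one burst in bins 5..7 on electrodes 0..11 (top row).
counts = [[0] * N_ELECTRODES for _ in range(20)]
for t in range(5, 8):
    for e in range(12):
        counts[t][e] = 2

bursts = detect_bursts(counts, threshold=10)
patterns = [burst_pattern(counts, s, e) for s, e in bursts]
coms = [center_of_mass(p) for p in patterns]
```

The resulting 2D points (or the full 120-dimensional patterns) would then be passed to whatever clustering method is chosen for the remaining steps.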

Protocol 2: Validating Metaheuristic Performance

Objective: Evaluate attractor trending strategy in optimization algorithms.

Materials:

- Benchmark problem sets (CEC2020, CEC2022)

- Performance metrics (convergence rate, solution quality)

- Statistical testing framework (Wilcoxon rank sum test)

Procedure:

- Implement NPDOA with three core strategies

- Configure algorithm parameters based on problem dimensionality

- Execute optimization runs on benchmark problems

- Record performance metrics across multiple independent trials

- Compare with established metaheuristics (PSO, DE, GA, etc.)

- Apply statistical tests to validate significance of performance differences

- Apply to practical engineering problems (spring design, vessel design)

Analysis: Friedman ranking across multiple problem instances with post-hoc analysis [10] [14].
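The Friedman ranking used in the analysis can be sketched as follows. The error values are made up purely for illustration, the helper names are hypothetical, and the statistic is computed without a tie correction; a production analysis would more likely rely on an established statistics library.

```python
def average_ranks(values):
    """Rank values ascending (1 = lowest error); tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def friedman_mean_ranks(errors):
    """errors[p][a] is the final error of algorithm a on problem p (lower is better)."""
    n_algs = len(errors[0])
    totals = [0.0] * n_algs
    for row in errors:
        for a, r in enumerate(average_ranks(row)):
            totals[a] += r
    return [t / len(errors) for t in totals]


def friedman_statistic(errors):
    """Friedman chi-square statistic, without tie correction."""
    n, k = len(errors), len(errors[0])
    mean_ranks = friedman_mean_ranks(errors)
    return 12 * n / (k * (k + 1)) * sum((r - (k + 1) / 2) ** 2 for r in mean_ranks)


# Illustrative (made-up) errors: 4 problems x 3 algorithms.
errors = [
    [0.10, 0.30, 0.20],
    [0.20, 0.50, 0.40],
    [0.05, 0.05, 0.30],
    [1.00, 2.00, 3.00],
]
mean_ranks = friedman_mean_ranks(errors)   # lower mean rank = better overall
chi2 = friedman_statistic(errors)
```

A lower mean rank indicates better overall performance, which is how Friedman rankings are usually read.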

Visualization of Core Concepts

Attractor Dynamics in Neural State Space

[Figure: Attractor Dynamics Conceptual Model]

NPDOA Algorithm Architecture

[Figure: NPDOA Algorithm Architecture]

Applications in Pharmaceutical R&D and Drug Development

Optimizing Clinical Trial Design through Scenario Modeling

The pharmaceutical industry faces escalating challenges in clinical development, with nearly half (45%) of sponsors reporting extended timelines and 49% identifying rising costs as primary constraints [15]. Attractor-based optimization offers transformative potential through AI-driven scenario modeling that simulates trial outcomes under varying conditions.

By conceptualizing different trial designs as points in an optimization landscape, attractor trending strategies can identify optimal configurations balancing timeline, resource allocation, and patient recruitment constraints. This approach enables sponsors to:

- Proactively adjust staffing and monitoring based on predicted high-activity periods

- Refine eligibility criteria and endpoint selection through simulated impact analysis

- Balance traditional endpoints with real-world evidence generation

- Optimize portfolio prioritization across therapeutic areas

Leading pharmaceutical organizations are increasingly adopting these approaches, with 66% of large sponsors and 44% of small/mid-sized sponsors prioritizing AI technologies to enhance development efficiency [15].

Target Prioritization and Portfolio Optimization

Attractor dynamics provide a mathematical framework for portfolio strategy in pharmaceutical R&D, where companies must navigate complex decision landscapes with multiple competing constraints. The industry demonstrates clear prioritization patterns, with 64% of organizations focusing on oncology, 41% on immunology/rheumatology, and 31% on rare diseases [15].

Implementation of attractor-based optimization enables:

- Strategic resource allocation to high-ROI therapeutic areas

- Dynamic reprioritization based on evolving clinical and commercial landscapes

- Balanced portfolio construction across development stages

- Optimized licensing and acquisition decisions

The trend toward later-stage assets in dealmaking (with clinical-stage deals growing while preclinical deals return to 2009 levels) reflects an industry-wide shift that can be systematically optimized through attractor-based decision frameworks [16].

Research Reagent Solutions

Table: Essential Research Materials for Attractor Dynamics Investigation

| Research Reagent | Specification | Experimental Function |

|---|---|---|

| Multi-Electrode Array (MEA) | 120 electrodes, cortical neuron compatibility | Extracellular recording of network activity patterns |

| Cortical Neurons | 18-21 days in vitro (DIV) | Biological substrate exhibiting spontaneous burst dynamics |

| Clustering Algorithm | Similarity graph-based with multiple metrics | Identification of recurrent spatiotemporal patterns |

| Hopfield Network Framework | Continuous-state, sigmoidal activation | Computational modeling of attractor dynamics |

| Functional Connectivity Data | fMRI BOLD timeseries, regularized partial correlation | Empirical initialization of network weights |

| Stimulation Electrodes | Localized, precise timing control | Targeted perturbation of network dynamics |

Future Directions and Research Challenges

While attractor trending strategies show significant promise across computational and biological domains, several challenges merit continued investigation. Future research should address:

- Multi-scale integration linking microscale neuronal dynamics to macroscale network phenomena

- Dynamic landscape modeling capturing how attractor architectures evolve with experience

- Clinical translation developing attractor-based biomarkers for neurological and psychiatric conditions

- Algorithm hybridization combining attractor trending with complementary optimization principles

The convergence of neuroscience and optimization theory through attractor dynamics represents a fertile interdisciplinary frontier with potential to transform how we approach complex decision-making across scientific and clinical domains.

The attractor trending strategy embodies a fundamental principle of brain function with far-reaching implications for optimization theory and practice. By formalizing how neural systems converge toward optimal states through landscape dynamics on high-dimensional energy surfaces, this approach provides both explanatory power for cognitive processes and practical algorithms for complex problem-solving. The strong neuroscientific evidence for attractor dynamics across scales—from cultured neural networks to human neuroimaging—lends biological validity to these computational frameworks.

For pharmaceutical research and development, where decision complexity continues to escalate amid growing cost pressures and regulatory requirements, attractor-based optimization offers a mathematically rigorous approach to portfolio strategy, clinical trial design, and resource allocation. As the industry increasingly embraces AI-driven methodologies, principles derived from brain neuroscience provide biologically inspired pathways to enhanced decision-making efficiency and therapeutic innovation.

In brain neuroscience-inspired metaheuristic algorithms, balancing exploration and exploitation is a fundamental computational dilemma. Exploration involves searching new regions of a solution space, while exploitation refines known good solutions. The human brain excels at resolving this conflict through sophisticated neural dynamics, providing a rich source of inspiration for optimization algorithms. Recent research has begun formalizing these biological principles into computational strategies, particularly through mechanisms termed coupling disturbance and information projection [10].

This technical guide examines the theoretical foundations, experimental validations, and practical implementations of these brain-inspired mechanisms. We focus specifically on their role in managing exploration-exploitation tradeoffs in optimization problems relevant to scientific domains including drug development. The Neural Population Dynamics Optimization Algorithm (NPDOA) serves as our primary case study, representing a novel framework that explicitly implements coupling disturbance and information projection as core computational strategies [10].

Theoretical Foundations

Neural Basis of Exploration-Exploitation

The explore-exploit dilemma manifests throughout neural systems, from foraging behaviors to cognitive search. Neurobiological research reveals that organisms employ two primary strategies: directed exploration (explicit information-seeking) and random exploration (behavioral variability) [17]. These strategies appear to have distinct neural implementations, with directed exploration associated with prefrontal structures and mesocorticolimbic pathways, while random exploration correlates with increased neural variability in decision-making circuits [17].

From a computational perspective, the explore-exploit balance represents one of the most challenging problems in optimization. The No Free Lunch theorem formally establishes that no single algorithm can optimally solve all problem types, necessitating specialized approaches for different problem domains [18] [10]. This theoretical limitation has driven interest in brain-inspired algorithms that can dynamically adapt their search strategies based on problem context and solution progress.

Formalizing Brain-Inspired Mechanisms

The NPDOA framework conceptualizes potential solutions as neural populations, where each decision variable corresponds to a neuron's firing rate. The algorithm operates through three interconnected strategies:

- Attractor Trending: Drives neural populations toward optimal decisions, ensuring exploitation capability.

- Coupling Disturbance: Deviates neural populations from attractors through interaction with other neural populations, improving exploration ability.

- Information Projection: Controls communication between neural populations, enabling transition from exploration to exploitation [10].

In this framework, coupling disturbance specifically addresses the challenge of escaping local optima by introducing controlled disruptions to convergent patterns. These disturbances mimic the stochastic interactions observed between neural assemblies in biological brains during decision-making under uncertainty. Meanwhile, information projection regulates how solution states communicate and influence one another, creating a dynamic balance between divergent and convergent search processes [10].

The NPDOA Framework: Implementation Details

Algorithmic Formulation

The Neural Population Dynamics Optimization Algorithm implements brain-inspired principles through specific mathematical formalisms. Each neural population is represented by a candidate solution ( x = (x_1, x_2, \ldots, x_D) ), a D-dimensional vector describing its neural state, with each component corresponding to a neuronal firing rate.

The attractor trending strategy follows the update rule: [ x_{i}^{t+1} = x_{i}^{t} + \alpha \cdot (A_{k} - x_{i}^{t}) ] where ( A_{k} ) represents an attractor point toward which the solution converges, and ( \alpha ) controls the convergence rate. This mechanism facilitates local exploitation by driving solutions toward regions of known high fitness [10].
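To see why ( \alpha ) acts as a convergence rate, the rule can be unrolled. The derivation below assumes the attractor ( A_{k} ) is held fixed across iterations, which is a simplification; in practice the attractor would typically move as better solutions are found.

```latex
x_i^{t+1} - A_k = (1-\alpha)\,\bigl(x_i^{t} - A_k\bigr)
\quad\Longrightarrow\quad
x_i^{t} - A_k = (1-\alpha)^{t}\,\bigl(x_i^{0} - A_k\bigr)
```

Under that assumption, the distance to the attractor shrinks geometrically by a factor of ( |1-\alpha| ) per step whenever ( 0 < \alpha < 2 ), and ( \alpha = 1 ) moves the solution onto the attractor in a single step.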

The coupling disturbance strategy implements: [ x_{i}^{t+1} = x_{i}^{t} + \beta \cdot (x_{j}^{t} - x_{i}^{t}) + \gamma \cdot \epsilon ] where ( x_{j}^{t} ) represents a different neural population, ( \beta ) controls coupling strength, ( \gamma ) determines disturbance magnitude, and ( \epsilon ) represents random noise. This formulation creates controlled disruptions that promote global exploration [10].

The information projection strategy governs the transition between exploration and exploitation phases through a dynamic parameter ( \lambda ): [ \lambda(t) = \lambda_{max} - (\lambda_{max} - \lambda_{min}) \cdot \frac{t}{T} ] where ( T ) represents the maximum number of iterations, and ( \lambda ) decreases linearly from ( \lambda_{max} ) to ( \lambda_{min} ), gradually shifting emphasis from exploration to exploitation [10].
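The three rules can be combined into a minimal loop. The sketch below is one possible reading and not the published NPDOA: it assumes that ( \lambda(t) ) is used as the probability of applying coupling disturbance instead of attractor trending, that the attractor is the best solution found so far, and that ( \epsilon ) is standard Gaussian noise. The function names, parameter defaults, and the sphere objective are hypothetical.

```python
import random


def sphere(x):
    """Toy objective to minimize; optimum 0 at the origin."""
    return sum(v * v for v in x)


def lam(t, T, lam_max=0.9, lam_min=0.1):
    """Linear schedule from lam_max (t = 0) down to lam_min (t = T)."""
    return lam_max - (lam_max - lam_min) * t / T


def npdoa_like(f, dim=5, pop_size=20, T=200, alpha=0.3, beta=0.5, gamma=0.1, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(-5, 5) for _ in range(dim)] for _ in range(pop_size)]
    best = min(pop, key=f)[:]
    history = [f(best)]
    for t in range(T):
        new_pop = []
        for i, x in enumerate(pop):
            if rng.random() < lam(t, T):
                # Coupling disturbance: couple with another population, plus noise.
                j = rng.choice([k for k in range(pop_size) if k != i])
                cand = [xi + beta * (xj - xi) + gamma * rng.gauss(0, 1)
                        for xi, xj in zip(x, pop[j])]
            else:
                # Attractor trending: move toward the attractor (assumed: best so far).
                cand = [xi + alpha * (a - xi) for xi, a in zip(x, best)]
            new_pop.append(cand)
        pop = new_pop
        gen_best = min(pop, key=f)
        if f(gen_best) < f(best):
            best = gen_best[:]
        history.append(f(best))  # best-so-far value, never increases
    return best, history


best, history = npdoa_like(sphere)
```

Early iterations are dominated by coupling disturbance (exploration) and later ones by attractor trending (exploitation), which is the qualitative behavior the linear schedule is meant to produce.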

Computational Workflow

The following diagram illustrates the integrated workflow of these three strategies within the NPDOA framework:

Figure 1: NPDOA Computational Workflow Integrating Three Core Strategies

Experimental Validation & Performance Analysis

Benchmark Testing Protocols

To validate the efficacy of the coupling disturbance and information projection framework, the NPDOA was evaluated against established metaheuristics using the CEC 2017 and CEC 2022 benchmark suites. The experimental protocol followed rigorous standards:

- Population Size: 30-100 individuals, scaled with problem dimensionality

- Termination Condition: Maximum of 10,000 iterations or convergence threshold of ( 1 \times 10^{-8} )

- Statistical Validation: 30 independent runs per algorithm to support statistical comparison of results

- Performance Metrics: Solution accuracy, convergence speed, and algorithm stability [10]

Comparative analysis included nine state-of-the-art metaheuristics: Particle Swarm Optimization (PSO), Genetic Algorithm (GA), Differential Evolution (DE), Grey Wolf Optimizer (GWO), Whale Optimization Algorithm (WOA), and others representing different inspiration categories [10].

Quantitative Performance Results

Table 1: Comparative Performance Analysis on CEC 2017 Benchmark Functions

| Algorithm | 30-Dimensional | 50-Dimensional | 100-Dimensional | Stability Index |

|---|---|---|---|---|

| NPDOA | 3.00 | 2.71 | 2.69 | 0.89 |

| WOA | 4.85 | 5.12 | 5.24 | 0.76 |

| GWO | 4.21 | 4.56 | 4.78 | 0.81 |

| PSO | 5.34 | 5.45 | 5.62 | 0.72 |

| GA | 6.12 | 6.08 | 6.15 | 0.68 |

Note: Performance measured by Friedman ranking (lower values indicate better performance). Stability index calculated as normalized inverse standard deviation across 30 runs [10].

The NPDOA achieved the best Friedman ranking at all three dimensionalities, with a particularly strong showing in higher-dimensional problems. The algorithm's stability index of 0.89 indicates more consistent performance across independent runs compared to alternative approaches. This stability stems from the balanced integration of coupling disturbance and information projection mechanisms, which prevent premature convergence while maintaining systematic progress toward optimal regions [10].

Table 2: Engineering Design Problem Performance Comparison

| Application Domain | NPDOA | Best Competitor | Performance Improvement |

|---|---|---|---|

| Compression Spring Design | 0.0126 | 0.0129 | 2.3% |

| Pressure Vessel Design | 5850.38 | 6050.12 | 3.3% |

| Welded Beam Design | 1.6702 | 1.7250 | 3.2% |

| Cantilever Beam Design | 1.3395 | 1.3400 | 0.04% |

Note: Results represent best objective function values obtained. Lower values indicate better performance for these minimization problems [10].
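The improvement column can be checked with a short calculation. The table does not state the formula, so this sketch assumes the improvement is measured relative to the best competitor's objective value; that assumption reproduces the reported percentages.

```python
# (NPDOA value, best competitor value) copied from Table 2.
results = {
    "Compression Spring Design": (0.0126, 0.0129),
    "Pressure Vessel Design": (5850.38, 6050.12),
    "Welded Beam Design": (1.6702, 1.7250),
    "Cantilever Beam Design": (1.3395, 1.3400),
}


def improvement_pct(npdoa, competitor):
    """Relative reduction of the objective versus the competitor, in percent."""
    return 100.0 * (competitor - npdoa) / competitor


improvements = {name: improvement_pct(*vals) for name, vals in results.items()}
for name, pct in improvements.items():
    print(f"{name}: {pct:.2f}%")
```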

Domain Applications & Implementation Guidelines

Pharmaceutical and Biomedical Applications

Brain-inspired metaheuristics with coupled disturbance mechanisms show particular promise in biomedical domains:

Drug Discovery: Optimization of molecular structures for enhanced binding affinity and reduced toxicity represents a complex, high-dimensional search problem. The coupling disturbance mechanism facilitates exploration of novel chemical spaces, while information projection maintains focus on pharmacologically promising regions [19].

Medical Image Analysis: Bio-inspired metaheuristics have demonstrated significant utility in optimizing deep learning architectures for brain tumor segmentation in MRI data. Algorithms like GWO have successfully optimized convolutional neural network parameters, achieving segmentation accuracy up to 97.10% on benchmark datasets [20].

Biological Network Modeling: Optimization of parameters in complex biological systems (e.g., gene regulatory networks, metabolic pathways) benefits from the balanced search capabilities of brain-inspired algorithms. The NPDOA framework shows particular promise in reconstructing neural connectivity from diffusion MRI tractography data [20].

Implementation Toolkit

Table 3: Research Reagent Solutions for Experimental Implementation

| Research Tool | Function | Implementation Example |

|---|---|---|

| PlatEMO v4.1 | Experimental platform for metaheuristic optimization | Provides standardized benchmarking environment for algorithm comparison [10] |

| CEC Benchmark Suites | Standardized test functions | Performance validation on 2017 and 2022 test suites with 30-100 dimensions [10] |

| Grey Wolf Optimizer | Bio-inspired optimization reference | Benchmark comparison algorithm representing swarm intelligence approaches [20] |

| Repast Simphony 2.10.0 | Multi-agent modeling platform | Simulation of coupled exploration dynamics in multi-team environments [21] |

The integration of coupling disturbance and information projection mechanisms represents a significant advancement in brain-inspired metaheuristic algorithms. By formally implementing neural population dynamics observed in biological systems, the NPDOA framework achieves a balance between exploration and exploitation that outperformed the compared approaches on the reported benchmark and engineering problems.

The experimental results demonstrate consistent performance advantages, particularly for high-dimensional optimization problems relevant to drug development and biomedical research. The explicit modeling of neural interaction dynamics provides a biologically plausible foundation for adaptive optimization that responds to problem structure and solution progress.

Future research directions include extending these principles to multi-objective optimization problems, investigating dynamic parameter adaptation mechanisms, and exploring neuromorphic hardware implementations for enhanced computational efficiency. As metaheuristic algorithms continue to evolve, brain-inspired approaches offer promising pathways to more adaptive, efficient, and intelligent optimization strategies.

Comparative Analysis of Brain-Inspired vs. Nature-Inspired and Physics-Inspired Metaheuristics

Metaheuristic algorithms (MAs) are powerful, high-level methodologies designed to solve complex optimization problems that are intractable for traditional exact algorithms [22]. Their prominence has grown across diverse domains, including engineering, healthcare, and artificial intelligence, due to their flexibility, derivative-free operation, and ability to avoid local optima [22] [23]. The foundational No Free Lunch theorem establishes that no single algorithm can be universally superior for all optimization problems, which has fueled the continuous development and specialization of new metaheuristics [10] [24]. These algorithms can be broadly classified by their source of inspiration, leading to three major families: nature-inspired (including swarm intelligence and evolutionary algorithms), physics-inspired, and the emerging class of brain-inspired metaheuristics [22] [10] [25]. While nature-inspired and physics-inspired algorithms have been extensively studied and applied for decades, brain-inspired metaheuristics represent a novel frontier, drawing directly from computational models of neural processes in the brain [10] [26]. This in-depth technical guide provides a comparative analysis of these paradigms, focusing on their underlying principles, operational mechanisms, performance characteristics, and practical applications, particularly within the context of biomedical research and drug development.

Theoretical Foundations and Algorithmic Principles

Brain-Inspired Metaheuristics: Principles of Neural Computation

Brain-inspired metaheuristics are grounded in the computational principles of neuroscience, treating optimization as a process of collective neural decision-making. Unlike other paradigms that often rely on metaphorical analogies, these algorithms aim to directly embody the computational strategies of the brain.

The Neural Population Dynamics Optimization Algorithm (NPDOA) is a seminal example of this class. It models the brain's ability to process information and make optimal decisions by simulating the activities of interconnected neural populations [10]. Its search process is governed by three core strategies derived from theoretical neuroscience:

- Attractor Trending Strategy: This strategy drives neural populations (candidate solutions) towards stable neural states (attractors) associated with favorable decisions, thereby ensuring exploitation capability. It guides the search towards regions of high quality based on the current best-known solutions [10].

- Coupling Disturbance Strategy: This mechanism deviates neural populations from their current attractors by coupling them with other neural populations. This introduces constructive interference that improves exploration ability, helping the algorithm escape local optima [10].

- Information Projection Strategy: This strategy controls communication between neural populations, enabling a dynamic transition from exploration to exploitation. It regulates the impact of the other two strategies based on the search progression [10].

Another significant approach involves deploying coarse-grained macroscopic brain models on brain-inspired computing architectures (e.g., Tianjic, SpiNNaker) [26]. These models use mean-field approximations to describe the dynamics of entire brain regions rather than individual neurons, creating a powerful framework for optimization. The model inversion process—fitting these brain models to empirical data—is itself a complex optimization problem that, when accelerated on specialized neuromorphic hardware, can achieve speedups of 75–424 times compared to conventional CPUs [26].

Nature-Inspired Metaheuristics: Lessons from Biological Systems

Nature-inspired metaheuristics can be categorized into two main sub-types: evolutionary algorithms and swarm intelligence algorithms [22] [10].

Evolutionary Algorithms (EAs), such as Genetic Algorithms (GA) and Differential Evolution (DE), are inspired by biological evolution [10]. They operate on a population of candidate solutions, applying principles of selection, crossover, and mutation to evolve increasingly fit solutions over generations. While powerful, EAs can face challenges with problem representation (particularly with discrete chromosomes), premature convergence, and the need to set several parameters like crossover and mutation rates [10].

Swarm Intelligence Algorithms mimic the collective, decentralized behavior of social organisms. Key algorithms include:

- Particle Swarm Optimization (PSO): Inspired by bird flocking behavior, PSO updates particle positions based on individual and collective historical best positions [10] [27]. Its velocity update equation incorporates inertia (w), cognitive (c1), and social (c2) components [27]:

V(k) = w*V(k-1) + c1*R1⊗[L(k-1)-X(k-1)] + c2*R2⊗[G(k-1)-X(k-1)]

- Competitive Swarm Optimizer (CSO) and CSO with Mutated Agents (CSO-MA): These are advanced swarm-based algorithms where randomly paired particles compete, with losers learning from winners. CSO-MA adds a mutation operator to enhance diversity and prevent premature convergence [23].

- Other Notable Algorithms: This family also includes the Artificial Bee Colony (ABC), Whale Optimization Algorithm (WOA), and Salp Swarm Algorithm (SSA) [10].
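The PSO velocity update listed above can be written out for a single particle. The sketch assumes the usual reading of the symbols, which the text does not define: X is the particle position, V its velocity, L its personal best, G the swarm's best, and R1, R2 are fresh uniform random numbers in [0, 1] drawn per component. The position update X(k) = X(k-1) + V(k) and the default coefficients are standard choices added here as assumptions.

```python
import random


def pso_step(X, V, L, G, w=0.7, c1=1.5, c2=1.5, rng=random):
    """One velocity and position update for a single particle (lists of floats)."""
    new_V = []
    for x, v, l, g in zip(X, V, L, G):
        r1, r2 = rng.random(), rng.random()    # elementwise random factors R1, R2
        new_V.append(w * v                     # inertia
                     + c1 * r1 * (l - x)       # cognitive pull toward personal best
                     + c2 * r2 * (g - x))      # social pull toward global best
    new_X = [x + v for x, v in zip(X, new_V)]  # assumed standard position update
    return new_X, new_V


# When the particle already sits on both bests, only the inertia term remains.
X = [1.0, 2.0]
V = [0.5, -0.5]
new_X, new_V = pso_step(X, V, L=X, G=X, rng=random.Random(0))
```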

A common challenge for many swarm intelligence algorithms is balancing the trade-off between exploration and exploitation, with some being prone to premature convergence or high computational complexity in high-dimensional spaces [10].

Physics-Inspired Metaheuristics: Harnessing Natural Laws

Physics-inspired metaheuristics are modeled after physical phenomena and laws of nature. Unlike evolutionary and swarm algorithms, they typically do not involve crossover or competitive selection operations [10] [25]. Representative algorithms include:

- Simulated Annealing (SA): Inspired by the annealing process in metallurgy, SA uses a temperature parameter that gradually decreases to control the probability of accepting worse solutions, thereby transitioning from exploration to exploitation [10] [24].

- Gravitational Search Algorithm (GSA): Based on Newton's law of gravitation and motion, GSA treats solutions as objects with mass, with their movements governed by gravitational attraction [10] [24].

- Charged System Search (CSS): This algorithm simulates the behavior of charged particles, where their interactions are influenced by their fitness values and the distances between them [10].

While these algorithms provide versatile optimization tools, they can be prone to becoming trapped in local optima and often struggle with premature convergence [10].
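The temperature-controlled acceptance in Simulated Annealing is commonly implemented with the Metropolis criterion. The sketch below assumes that criterion for a minimization problem; the function names and the example temperatures are illustrative.

```python
import math
import random


def acceptance_probability(delta, temperature):
    """Probability of accepting a move that changes the objective by delta (minimization)."""
    if delta <= 0:
        return 1.0                       # improvements are always accepted
    return math.exp(-delta / temperature)


def accept(delta, temperature, rng=random):
    """Stochastic accept/reject decision for one candidate move."""
    return rng.random() < acceptance_probability(delta, temperature)


# The same worsening move (delta = 1) becomes less acceptable as the system cools.
probs = [acceptance_probability(1.0, T) for T in (10.0, 1.0, 0.1)]
```

At high temperature most worsening moves are accepted (exploration); as the temperature falls the rule approaches greedy descent (exploitation).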

Table 1: Fundamental Characteristics of Metaheuristic Paradigms

| Feature | Brain-Inspired (NPDOA) | Nature-Inspired (Swarm/EA) | Physics-Inspired |

|---|---|---|---|

| Core Inspiration | Neural population dynamics & decision-making in the brain [10] | Biological evolution & collective animal behavior [22] [10] | Physical laws & phenomena (e.g., gravity, thermodynamics) [10] [25] |

| Representative Algorithms | NPDOA, Macroscopic Brain Models on Neuromorphic Chips [10] [26] | PSO, GA, DE, CSO-MA, ABC, WOA [10] [23] | Simulated Annealing, Gravitational Search, Charged System Search [10] [24] |

| Key Search Operators | Attractor trending, coupling disturbance, information projection [10] | Selection, crossover, mutation (EA); Position/velocity update (Swarm) [10] [23] | Temperature-based acceptance (SA); Gravitational force (GSA) [24] |

| Primary Strengths | Balanced exploration-exploitation, high functional fidelity, potential for extreme hardware acceleration [10] [26] | Proven versatility, strong performance on many practical problems, extensive research base [22] [23] | Conceptual simplicity, effective for various continuous problems, no biological metaphors needed [10] [25] |

| Common Limitations | Conceptual complexity, emerging stage of development, hardware dependency for maximum speed [10] [26] | Premature convergence, parameter sensitivity, high computational cost for high dimensions [10] | Prone to local optima, less adaptive search dynamics, premature convergence [10] |

Comparative Performance Analysis

Algorithmic Performance and Behavioral Characteristics

A comprehensive evaluation of metaheuristics must consider multiple performance dimensions, including solution quality, convergence behavior, computational efficiency, and robustness. Bibliometric analysis reveals that human-inspired methods constitute the largest category of metaheuristics (45%), followed by evolution-inspired (33%), swarm-inspired (14%), and physics-based algorithms (4%) [22].

Recent large-scale benchmarking studies highlight critical performance differentiators. An exhaustive study evaluating over 500 nature-inspired algorithms found that many newly proposed metaheuristics claiming novelty based on metaphors do not necessarily outperform established ones [28]. The study revealed that only one of the eleven newly proposed high-citation algorithms performed similarly to state-of-the-art established algorithms, while the others were "less efficient and robust" [28]. This underscores the importance of rigorous validation beyond metaphorical framing.

The Competitive Swarm Optimizer with Mutated Agents (CSO-MA), a nature-inspired algorithm, has demonstrated superior performance relative to many competitors, including enhanced versions of PSO [23]. Its computational complexity is O(nD), where n is the swarm size and D is the problem dimension, and it has proven effective on benchmark functions with dimensions up to 5000 [23].

For physics-inspired algorithms, a comparative study focused on feature selection problems found the Equilibrium Optimizer (EO) delivered comparatively better accuracy and fitness than other physics-inspired algorithms like Simulated Annealing and Gravitational Search Algorithm [24].

The emerging brain-inspired NPDOA has been validated against nine other metaheuristics on benchmark and practical engineering problems, showing "distinct benefits" for addressing many single-objective optimization problems [10]. Its three-strategy architecture appears to provide a more natural and effective balance between exploration and exploitation compared to many nature- and physics-inspired alternatives.

Table 2: Quantitative Performance Comparison Across Application Domains

| Application Domain | Exemplary Algorithm(s) | Key Performance Metrics | Comparative Findings |

|---|---|---|---|

| General Benchmark Functions [23] [28] | CSO-MA (Nature) vs. PSO variants (Nature) vs. New Metaphor Algorithms | Solution quality, convergence speed, robustness on CEC2017 suite | CSO-MA frequently the fastest with best quality results; many new metaphor algorithms underperform established ones [23] [28] |

| Feature Selection in Machine Learning [24] | EO (Physics) vs. SA (Physics) vs. GSA (Physics) | Classification accuracy, number of features selected, fitness value | Equilibrium Optimizer outperformed other physics-inspired algorithms in accuracy and fitness [24] |

| Dose-Finding Clinical Trial Design [27] [29] | PSO (Nature) vs. DE (Nature) vs. Cocktail Algorithm (Deterministic) | Design efficiency, computational time, optimization reliability | PSO efficiently finds locally multiple-objective optimal designs and tends to outperform deterministic algorithms and DE [29] |

| Macroscopic Brain Model Inversion [26] | Brain Models on Neuromorphic Chips (Brain) vs. CPU implementation | Simulation speed, model fidelity, parameter estimation accuracy | Brain-inspired computing achieved 75-424× acceleration over CPUs while maintaining high functional fidelity [26] |

Search Behavior and Bias Analysis

The search behavior of metaheuristics is a crucial differentiator. A significant finding from comparative studies is that many algorithms exhibit a search bias toward the center of the search space (the origin) [28]. This bias can artificially enhance performance on benchmark functions where the optimum is centrally located but leads to performance deterioration when the optimum is shifted away from the center. Studies have shown that state-of-the-art metaheuristics are generally less affected by such transformations compared to newly proposed metaphor-based algorithms [28].
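A shifted benchmark makes this bias easy to expose. The sketch below uses a deliberately center-biased toy "optimizer" that ignores the objective and simply shrinks coordinates toward the origin; it is a hypothetical construction for illustration, not an algorithm from the cited studies.

```python
def sphere(x):
    """Sphere function with its optimum at the origin."""
    return sum(v * v for v in x)


def shifted(f, shift):
    """Return a copy of f whose optimum is moved from the origin to `shift`."""
    def g(x):
        return f([xi - si for xi, si in zip(x, shift)])
    return g


def shrink_toward_origin(f, x0, steps=50, factor=0.5):
    """Center-biased toy search: halves every coordinate each step, ignoring f."""
    x = list(x0)
    for _ in range(steps):
        x = [factor * xi for xi in x]
    return x, f(x)


x0 = [4.0, -4.0]
_, err_centered = shrink_toward_origin(sphere, x0)
_, err_shifted = shrink_toward_origin(shifted(sphere, [3.0, 3.0]), x0)
print(err_centered, err_shifted)
```

The toy search looks excellent on the centered function and fails once the optimum is moved, which is why evaluating on shifted (and rotated) functions is a useful sanity check.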

In terms of exploration-exploitation dynamics, analyses of convergence and diversity patterns reveal that newly proposed nature-inspired algorithms often show "rougher" behavior than established state-of-the-art algorithms, with high oscillations in both their exploration-exploitation trade-off and their population diversity [28].

Brain-inspired approaches like NPDOA address this fundamental challenge through their inherent biological fidelity. The attractor trending strategy facilitates focused exploitation, the coupling disturbance strategy promotes systematic exploration, and the information projection strategy enables dynamic balancing between these competing objectives [10]. This neurobiological grounding may provide a more principled approach to the exploration-exploitation dilemma compared to the more ad hoc balancing mechanisms found in many nature- and physics-inspired algorithms.

Experimental Protocols and Methodologies

Protocol 1: Benchmarking with CEC Test Suites

Objective: To quantitatively compare the performance of brain-inspired, nature-inspired, and physics-inspired metaheuristics on standardized benchmark functions [23] [28].

Methodology:

- Algorithm Selection: Include representative algorithms from each paradigm: NPDOA (Brain), CSO-MA and PSO (Nature), EO and SA (Physics).

- Test Environment: Use the CEC (Congress on Evolutionary Computation) benchmark suites (e.g., CEC2017). These suites contain functions with diverse properties like multimodality, separability, and complex landscapes [28].

- Parameter Configuration: Use automatic parameter tuning tools like irace to ensure fair comparisons by finding optimal parameter settings for each algorithm [28].

- Performance Metrics:

- Solution Accuracy: Error from known optimum.

- Convergence Speed: Number of function evaluations to reach a target accuracy.

- Robustness: Performance consistency across different function types.

- Statistical Analysis: Apply non-parametric statistical tests (Friedman test, Bayesian rank-sum test) to validate significance of results [28].
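The ranking step behind the Friedman test in this protocol can be sketched as follows. This is a minimal illustration, not the procedure used in [28]: the error table is made-up data, the function names are hypothetical, and the statistic is the basic form without a tie correction. In practice a statistics library (for example `scipy.stats.friedmanchisquare`) would also supply the p-value.

```python
def average_ranks(row):
    """Rank the values in one row (1 = lowest error); ties share the mean rank."""
    order = sorted(range(len(row)), key=lambda j: row[j])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def friedman_statistic(errors):
    """errors[i][j] is the error of algorithm j on benchmark function i.
    Returns (mean rank per algorithm, Friedman chi-square statistic)."""
    n, k = len(errors), len(errors[0])
    rank_rows = [average_ranks(row) for row in errors]
    mean_ranks = [sum(r[j] for r in rank_rows) / n for j in range(k)]
    chi2 = 12 * n / (k * (k + 1)) * sum((r - (k + 1) / 2) ** 2 for r in mean_ranks)
    return mean_ranks, chi2

# Made-up final errors: 4 benchmark functions (rows) x 3 algorithms (columns).
errors = [
    [0.1, 0.5, 0.9],
    [0.2, 0.4, 0.8],
    [0.3, 0.2, 0.7],
    [0.1, 0.6, 0.5],
]
mean_ranks, chi2 = friedman_statistic(errors)
print("mean ranks:", mean_ranks)
print("Friedman statistic:", chi2)
```

Ranking within each function before averaging is what makes the comparison non-parametric: an algorithm cannot dominate the result through one function with unusually large error values.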

Protocol 2: Clinical Trial Dose-Finding Optimization

Objective: To evaluate the efficacy of metaheuristics in finding optimal designs for continuation-ratio models in phase I/II dose-finding trials [27] [29].

Methodology:

- Problem Formulation: Define the continuation-ratio model for trinomial outcomes (no reaction, efficacy without toxicity, toxicity) [29]:

( \log\left(\frac{\pi_3(x)}{1-\pi_3(x)}\right) = a_1 + b_1 x )

( \log\left(\frac{\pi_2(x)}{\pi_1(x)}\right) = a_2 + b_2 x )

- Optimization Goal: Find dose levels and allocation ratios that maximize precision for estimating parameters or specific functions like the Maximum Tolerated Dose (MTD) or Most Effective Dose (MED) [29].

- Algorithm Implementation:

- Evaluation: Compare designs using statistical efficiency measures and computational time. Validate designs through simulation studies to estimate actual operating characteristics [29].
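The continuation-ratio model in this protocol can be turned into outcome probabilities by solving its two equations together with π₁ + π₂ + π₃ = 1. The sketch below assumes that π₁, π₂, and π₃ follow the order of the outcomes listed above (no reaction, efficacy without toxicity, toxicity); the parameter values are hypothetical and chosen only for illustration.

```python
import math

def continuation_ratio_probs(x, a1, b1, a2, b2):
    """Return (pi1, pi2, pi3) at dose x for the continuation-ratio model
        log(pi3 / (1 - pi3)) = a1 + b1 * x
        log(pi2 / pi1)       = a2 + b2 * x
    """
    pi3 = 1.0 / (1.0 + math.exp(-(a1 + b1 * x)))  # invert the first logit
    ratio = math.exp(a2 + b2 * x)                 # pi2 / pi1
    pi1 = (1.0 - pi3) / (1.0 + ratio)             # uses pi1 + pi2 = 1 - pi3
    pi2 = (1.0 - pi3) - pi1
    return pi1, pi2, pi3

# Hypothetical parameter values, not taken from any cited study.
params = dict(a1=-3.0, b1=0.5, a2=-1.0, b2=0.8)
for dose in (0.0, 2.0, 4.0, 6.0, 8.0):
    p1, p2, p3 = continuation_ratio_probs(dose, **params)
    print(f"dose={dose:.1f}  pi1={p1:.3f}  pi2={p2:.3f}  pi3={p3:.3f}")
```

A design criterion evaluated by a metaheuristic would call a function like this at every candidate dose level, so keeping it cheap and numerically simple matters.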

Protocol 3: Feature Selection for High-Dimensional Data

Objective: To assess the capability of different metaheuristics for selecting optimal feature subsets in classification problems [24].

Methodology:

- Wrapper Method Setup: Use K-Nearest Neighbor (K-NN) as the classifier within a wrapper method framework [24].

- Fitness Function: Minimize:

( \text{Fitness} = \lambda \, \gamma_S(D) + (1-\lambda) \frac{|S|}{|F|} )

where γ_S(D) is the classification error, |S| is the number of selected features, |F| is the total number of features, and λ balances the importance of the two terms [24].

- Datasets: Use diverse-natured classification datasets (e.g., from UCI repository) with varying sizes and feature dimensions [24].

- Comparison Metrics: Record average number of selected features, classification accuracy, fitness value, convergence behavior, and computational cost across multiple runs.
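The wrapper fitness in this protocol can be sketched with a tiny nearest-neighbour classifier. Everything below is illustrative: the dataset is a made-up toy, K is fixed at 1 with leave-one-out evaluation, and λ = 0.99 is an assumed weight rather than a value taken from [24].

```python
def knn1_loo_error(X, y, mask):
    """Leave-one-out error of a 1-nearest-neighbour classifier that only
    uses the features j where mask[j] == 1."""
    cols = [j for j, m in enumerate(mask) if m]
    if not cols:
        return 1.0  # no feature selected: treat as the worst case
    wrong = 0
    for i, xi in enumerate(X):
        best_d, best_label = None, None
        for k, xk in enumerate(X):
            if k == i:
                continue
            d = sum((xi[j] - xk[j]) ** 2 for j in cols)
            if best_d is None or d < best_d:
                best_d, best_label = d, y[k]
        wrong += best_label != y[i]
    return wrong / len(X)

def fitness(X, y, mask, lam=0.99):
    """lam * classification error + (1 - lam) * fraction of features kept."""
    gamma = knn1_loo_error(X, y, mask)
    return lam * gamma + (1 - lam) * sum(mask) / len(mask)

# Toy data: feature 0 separates the classes, feature 1 is noise.
X = [[0.0, 5.0], [0.1, -3.0], [0.2, 4.0], [1.0, -4.0], [1.1, 5.1], [0.9, -2.9]]
y = [0, 0, 0, 1, 1, 1]
for mask in ([1, 0], [0, 1], [1, 1]):
    print(mask, round(fitness(X, y, mask), 4))
```

A metaheuristic would search over the binary `mask` vector and minimize `fitness`; on this toy data the subset containing only the informative feature scores best.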

Visualization of Algorithmic Structures and Workflows

Neural Population Dynamics Optimization Algorithm (NPDOA) Workflow

Comparative Metaheuristic Paradigms Diagram

Table 3: Key Research Reagents and Computational Resources for Metaheuristics Research

| Tool/Resource | Type | Function/Purpose | Representative Examples/Platforms |
|---|---|---|---|
| Benchmark Suites | Software Library | Standardized test functions for rigorous algorithm comparison | CEC2017 Benchmark Suite [28] |
| Parameter Tuning Tools | Software Tool | Automated configuration of algorithm parameters for fair comparisons | irace (Iterated Racing) [28] |
| Neuromorphic Hardware | Computing Architecture | Specialized processors for accelerating brain-inspired algorithms | Tianjic, SpiNNaker, Loihi [26] |
| Metaheuristic Frameworks | Software Library | Pre-implemented algorithms for practical application and prototyping | PySwarms (PSO tools in Python) [23] |
| Clinical Trial Simulators | Specialized Software | Environment for testing dose-finding designs and optimization algorithms | Custom simulation platforms for continuation-ratio models [27] [29] |
| Statistical Analysis Packages | Software Library | Non-parametric statistical tests for result validation | Bayesian rank-sum test, Friedman test implementations [28] |

This comparative analysis reveals that brain-inspired metaheuristics represent a promising paradigm with distinct advantages in balancing exploration-exploitation dynamics and potential for extreme acceleration on specialized hardware. The NPDOA algorithm, with its three core strategies derived directly from neural population dynamics, offers a principled approach to optimization that differs fundamentally from the metaphorical inspiration of nature-inspired and physics-inspired algorithms [10] [26].

However, the performance landscape is nuanced. Established nature-inspired algorithms like CSO-MA and PSO continue to demonstrate robust performance across diverse applications, from clinical trial design to high-dimensional optimization [23] [29]. The physics-inspired Equilibrium Optimizer has shown competitive performance in specific domains like feature selection [24]. Critically, empirical evidence suggests that metaphorical novelty does not necessarily translate to algorithmic superiority, with many newly proposed algorithms underperforming established ones [28].

Future research should focus on several key directions: 1) Developing more rigorous benchmarking methodologies that test algorithms on problems with shifted optima to avoid center-bias advantages [28]; 2) Exploring hybrid approaches that combine the strengths of different paradigms, such as integrating brain-inspired dynamics with swarm intelligence frameworks; 3) Leveraging brain-inspired computing architectures more broadly for scientific computing beyond their original focus on AI tasks [26]; and 4) Establishing more standardized evaluation protocols and reporting standards to facilitate meaningful cross-paradigm comparisons.

For researchers and drug development professionals, the selection of an appropriate metaheuristic should be guided by problem-specific characteristics rather than metaphorical appeal. Brain-inspired approaches show particular promise for problems where the exploration-exploitation balance is critical and where neural dynamics provide a natural model for the optimization process, while established nature-inspired and physics-inspired algorithms remain powerful tools for a wide range of practical applications.

From Theory to Therapy: Applications in Drug Discovery and Medical Imaging

Few-Shot Meta-Learning for Accelerated CNS Drug Discovery and Repurposing

The development of therapeutics for Central Nervous System (CNS) disorders represents one of the most challenging areas in drug discovery, characterized by long timelines (averaging 15-19 years from discovery to approval) and high attrition rates [30]. These challenges stem from multiple factors, including the complex pathophysiology of neurological disorders, the protective blood-brain barrier (BBB) that limits compound delivery, and the scarcity of high-quality experimental data for many rare CNS conditions [30]. Traditional drug discovery approaches face particular difficulties in the CNS domain due to the limited availability of annotated biological and chemical data, creating an ideal application scenario for advanced artificial intelligence methodologies that can learn effectively from small datasets.

Few-shot meta-learning has emerged as a transformative approach that addresses these fundamental limitations by leveraging prior knowledge from related tasks to enable rapid learning with minimal new data [31] [32]. This section explores the integration of few-shot meta-learning within a broader framework of brain neuroscience metaheuristic algorithms, presenting a roadmap for accelerating CNS drug discovery and repurposing. By combining meta-learning's data efficiency with metaheuristic optimization's robust search capabilities, researchers can navigate the complex landscape of neuropharmacology more precisely and quickly, potentially reducing both the time and cost of bringing new CNS therapies to market.

Theoretical Foundations: Meta-Learning and Metaheuristics in Neuroscience

Few-Shot Meta-Learning Paradigms

Few-shot meta-learning, often characterized as "learning to learn," encompasses computational frameworks designed to rapidly adapt to new tasks with limited training examples. In the context of CNS drug discovery, this approach addresses a critical bottleneck: the scarcity of labeled data for many rare neurological disorders and experimental compounds [31]. The core mathematical formulation involves training a model on a distribution of tasks such that it can quickly adapt to new, unseen tasks with only a few examples.

The Model-Agnostic Meta-Learning (MAML) framework provides a foundational approach by learning parameter initializations that can be efficiently fine-tuned to new tasks with minimal data. For CNS applications, this translates to models that leverage knowledge from well-characterized neurological targets and pathways to make predictions for less-studied conditions [31]. Recent advances have specialized these frameworks for biomedical applications, incorporating graph-based meta-learning that operates on biological networks and metric-based approaches that learn embedding spaces where similar drug-disease pairs cluster together regardless of available training data [33].
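For reference, the standard MAML formulation can be written in two steps. The symbols are assumptions introduced here, not notation from the cited sources: θ denotes the shared initialization, α and β the inner and outer learning rates, and L_{T_i} the loss on task T_i drawn from a task distribution p(T). For each task, the initialization is adapted with one gradient step (more steps are possible):

( \theta_i' = \theta - \alpha \nabla_\theta \mathcal{L}_{T_i}(\theta) )

The initialization itself is then updated so that the adapted parameters perform well across tasks:

( \theta \leftarrow \theta - \beta \nabla_\theta \sum_{T_i \sim p(T)} \mathcal{L}_{T_i}(\theta_i') )

The outer gradient is taken with respect to θ through the inner update, which is what steers the initialization toward points from which a few gradient steps on a small dataset are sufficient.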

Integration with Metaheuristic Algorithms

Metaheuristic optimization algorithms provide powerful complementary approaches for navigating the complex, high-dimensional search spaces inherent to CNS drug discovery. These nature-inspired algorithms – including Genetic Algorithms (GA), Particle Swarm Optimization (PSO), and Grey Wolf Optimization (GWO) – excel at problems where traditional optimization methods struggle due to non-linearity, multimodality, and discontinuous parameter spaces [18] [19].

The integration of meta-learning with metaheuristics creates a synergistic framework where meta-learners rapidly identify promising regions of the chemical or biological space, while metaheuristics perform intensive local search and optimization within those regions. This hybrid approach is particularly valuable for optimizing multi-objective properties critical to CNS therapeutics, including BBB permeability, target affinity, and synthetic accessibility [30] [19]. For example, GWO has been successfully applied to optimize convolutional neural network architectures for medical image segmentation, achieving an accuracy of 97.10% on white matter tract segmentation in neuroimaging data [20].

Table 1: Metaheuristic Algorithms in Neuroscience Research

| Algorithm | Inspiration Source | CNS Application Examples |
|---|---|---|
| Genetic Algorithm (GA) | Biological evolution | Neural architecture search, feature selection for biomarker identification |
| Particle Swarm Optimization (PSO) | Social behavior of bird flocking | Parameter optimization in deep learning models for brain tumor segmentation |
| Grey Wolf Optimization (GWO) | Hierarchy and hunting behavior of grey wolves | White matter fiber tract segmentation [20] |
| Whale Optimization Algorithm (WOA) | Bubble-net hunting behavior of humpback whales | Optimization of hyperparameters in neuroimaging analysis |
| Power Method Algorithm (PMA) | Power iteration method for eigenvectors | Novel algorithm for complex optimization problems in engineering [18] |

Methodological Approaches: Frameworks and Architectures

Graph Neural Networks for Drug Repurposing

Graph Neural Networks (GNNs) have emerged as particularly powerful architectures for drug repurposing due to their ability to naturally model complex relational data inherent in biological systems. The fundamental operation of GNNs involves message passing between connected nodes, allowing the model to capture higher-order dependencies in heterogeneous biomedical knowledge graphs [33] [34].
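The message-passing idea can be sketched on a three-node toy graph. This is a simplified illustration under stated assumptions: it uses plain mean aggregation with a fixed 50/50 mix of self and neighbour information, whereas practical GNNs use learned weight matrices and nonlinearities; the node names and one-hot features are hypothetical.

```python
def message_passing_step(features, edges):
    """One round of mean-aggregation message passing.
    features: dict mapping node -> list of floats
    edges: list of undirected (u, v) pairs
    Each node's new vector is the average of its own vector and the
    mean of its neighbours' vectors."""
    neighbours = {node: [] for node in features}
    for u, v in edges:
        neighbours[u].append(v)
        neighbours[v].append(u)
    updated = {}
    for node, vec in features.items():
        nbrs = neighbours[node]
        if nbrs:
            agg = [sum(features[n][d] for n in nbrs) / len(nbrs)
                   for d in range(len(vec))]
        else:
            agg = [0.0] * len(vec)
        updated[node] = [0.5 * s + 0.5 * a for s, a in zip(vec, agg)]
    return updated

# Toy knowledge graph: drug -- gene -- disease, with one-hot node features.
h0 = {"drug": [1.0, 0.0, 0.0], "gene": [0.0, 1.0, 0.0], "disease": [0.0, 0.0, 1.0]}
edges = [("drug", "gene"), ("gene", "disease")]
h1 = message_passing_step(h0, edges)
h2 = message_passing_step(h1, edges)
print("drug after 1 step: ", h1["drug"])
print("drug after 2 steps:", h2["drug"])
```

After one step the drug node only reflects its direct neighbour (the gene); after two steps it also carries a signal from the disease node two hops away, which is the sense in which stacked message passing captures higher-order dependencies.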

The TxGNN framework exemplifies the state-of-the-art in this domain, implementing a zero-shot drug repurposing approach that can predict therapeutic candidates even for diseases with no existing treatments [33]. TxGNN operates on a comprehensive medical knowledge graph encompassing 17,080 diseases and 7,957 drug candidates, using a GNN optimized on relationships within this graph to produce meaningful representations for all concepts. A key innovation is its metric learning component that transfers knowledge from well-annotated diseases to those with limited treatment options by measuring disease similarity through normalized dot products of their signature vectors [33]. This approach demonstrated a 49.2% improvement in prediction accuracy for indications and 35.1% for contraindications compared to previous methods under stringent zero-shot evaluation.
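The similarity measure mentioned above, a normalized dot product between signature vectors, can be sketched in a few lines. This is not TxGNN's code: the function names, disease names, and vector values are hypothetical, and the real signature vectors are derived from the knowledge graph rather than typed in by hand.

```python
import math

def normalized_dot(u, v):
    """Dot product of u and v divided by the product of their norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention chosen here for all-zero vectors
    return dot / (norm_u * norm_v)

def rank_by_similarity(query, signatures):
    """Return (name, similarity) pairs sorted from most to least similar."""
    scored = [(name, normalized_dot(query, sig)) for name, sig in signatures.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Hypothetical signature vectors for well-annotated diseases.
signatures = {
    "disease_A": [1.0, 0.0, 1.0],
    "disease_B": [0.9, 0.1, 1.1],
    "disease_C": [0.0, 1.0, 0.0],
}
query = [1.0, 0.0, 0.9]  # hypothetical disease with few known treatments
ranked = rank_by_similarity(query, signatures)
for name, score in ranked:
    print(f"{name}: {score:.3f}")
```

Normalizing by the vector norms makes the score depend on the direction of the signature rather than its magnitude, so a sparsely annotated disease can still be matched to well-annotated ones with a similar profile.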

Meta-Learning Architectures for Neuropharmacology

Specialized meta-learning architectures have been developed to address the particular challenges of CNS drug discovery. The Meta-CNN model integrates few-shot meta-learning algorithms with whole brain activity mapping (BAMing) to enhance the discovery of CNS therapeutics [31] [32]. This approach utilizes patterns from previously validated CNS drugs to facilitate rapid identification and prediction of potential drug candidates from limited datasets.

The methodology involves pretraining on diverse brain activity signatures followed by rapid fine-tuning on specific neuropharmacological tasks with limited data. Experimental results demonstrate that Meta-CNN models exhibit enhanced stability and improved prediction accuracy over traditional machine learning methods when applied to CNS drug classification and repurposing tasks [31]. The integration with brain activity mapping provides a rich phenotypic signature that captures the systems-level effects of compounds, offering advantages over target-based approaches for complex CNS disorders where multi-target interventions are often required.