AI-Driven Automated Perfusion Analysis in Acute Stroke: Revolutionizing Diagnosis, Treatment, and Clinical Research


Abstract

This article provides a comprehensive analysis of the transformative role of Artificial Intelligence (AI) in automated perfusion analysis for acute ischemic stroke. Tailored for researchers, scientists, and drug development professionals, it explores the foundational principles of AI in stroke imaging, details the methodologies and clinical applications of leading software platforms, and addresses key challenges in optimization and integration. The scope extends to rigorous comparative validation of emerging AI tools against established standards, synthesizing evidence from recent multicenter studies and clinical trials. By examining the entire pipeline from image acquisition to outcome prediction, this review aims to inform the development of next-generation diagnostic tools and refine patient stratification for novel therapeutic interventions.

The Foundation of AI in Stroke Perfusion: Core Principles, Clinical Imperatives, and Workflow Integration

The management of acute ischemic stroke is governed by the principle that "time is brain," quantified by the estimate that 1.9 million neurons are lost each minute during untreated ischemia [1]. This paradigm underscores the irreversible nature of brain tissue damage as stroke progresses, which leaves a narrow therapeutic window for intervention. While this time-sensitive foundation has traditionally driven stroke systems of care, a significant evolution is underway: temporal urgency is now being augmented with advanced imaging precision [2].

The integration of artificial intelligence (AI) into acute stroke triage represents a transformative advancement, enabling both accelerated diagnostic pathways and sophisticated tissue viability assessment. AI-powered platforms now facilitate a paradigm that synergizes speed with imaging-based physiological evaluation, allowing for patient selection based on vascular and physiologic information rather than rigid time windows alone [2]. This application note details the protocols and analytical frameworks through which AI-driven automated perfusion analysis is reshaping acute stroke triage, providing researchers and drug development professionals with the methodological foundation for advancing this critical field.

Quantifying the Urgency: The Biological Imperative

The "Time is Brain" Equation

The original quantification of neural loss in acute ischemic stroke revealed the staggering pace of circuitry destruction, providing a biological rationale for emergent intervention. The foundational research calculated that during a typical large vessel supratentorial ischemic stroke:

Table 1: Quantified Neural Loss in Acute Ischemic Stroke [1]

| Neural Metric | Loss Per Hour | Loss Per Minute |
|---|---|---|
| Neurons | 120 million | 1.9 million |
| Synapses | 830 billion | 14 billion |
| Myelinated Fibers | 714 km (447 miles) | 12 km (7.5 miles) |

This neural loss occurs over an average stroke evolution duration of 10 hours, resulting in a typical final infarct volume of 54 mL [1]. When contextualized against normal brain aging, the ischemic brain ages 3.6 years each hour without treatment, emphasizing the profound impact of timely intervention [1].
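
The per-minute rates in Table 1 can be turned into a back-of-the-envelope estimate for a given treatment delay. The sketch below is illustrative only: the function name `estimated_loss` and the assumption of a constant (linear) loss rate are not from the source, which reports population averages rather than a patient-level model.

```python
# Average per-minute loss rates reported in Table 1 [1].
NEURONS_PER_MIN = 1.9e6
SYNAPSES_PER_MIN = 14e9
FIBER_KM_PER_MIN = 12.0


def estimated_loss(delay_minutes: float) -> dict:
    """Scale the average loss rates by a delay, assuming a constant rate."""
    if delay_minutes < 0:
        raise ValueError("delay_minutes must be non-negative")
    return {
        "neurons": NEURONS_PER_MIN * delay_minutes,
        "synapses": SYNAPSES_PER_MIN * delay_minutes,
        "myelinated_fiber_km": FIBER_KM_PER_MIN * delay_minutes,
    }


# Hypothetical example: a 30-minute delay before reperfusion.
loss = estimated_loss(30)
print(f"{loss['neurons']:.2e} neurons, {loss['myelinated_fiber_km']:.0f} km of fibers")
```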

Contemporary Stroke Burden and Access Disparities

The global burden of stroke continues to escalate, with recent epidemiological data revealing 11.9 million incident strokes annually worldwide [3]. This burden is disproportionately concentrated in low- and middle-income countries, which bear 87% of global stroke deaths and 89% of stroke-related disability-adjusted life years (DALYs) [3].

Geographic disparities in access to specialized stroke care present significant challenges to realizing the "time is brain" imperative. Recent spatial analyses demonstrate that approximately 20% of the U.S. adult population (49 million people) resides in census tracts beyond a 60-minute drive from advanced, endovascular-capable stroke care [4]. Critically, these underserved areas demonstrate significantly higher prevalence of stroke risk factors, including hypertension, diabetes, and coronary heart disease, creating a concerning mismatch between need and resource availability [4].

AI-Powered Perfusion Analysis: Technical Frameworks

Platform Architecture and Core Functions

AI-powered acute stroke triage platforms employ sophisticated computational architectures to automate the analysis of perfusion imaging. These systems function through multi-step analytical pipelines that transform raw imaging data into clinically actionable information.

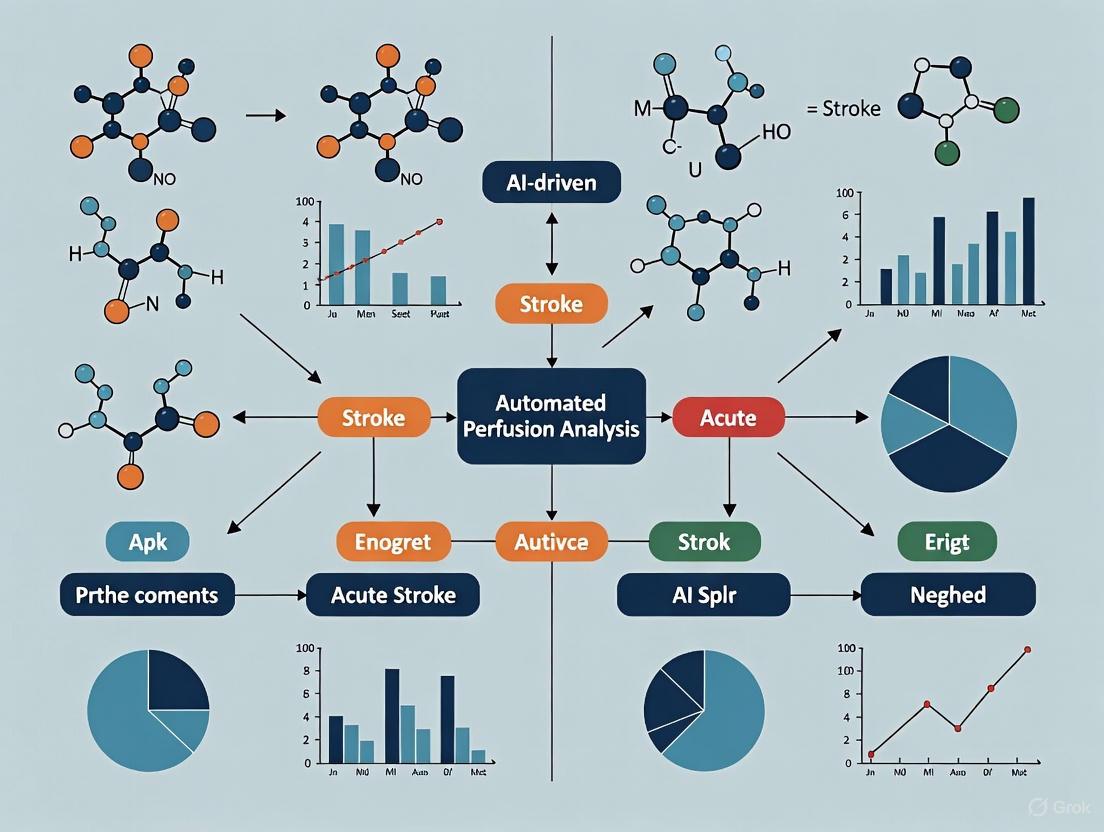

Figure 1: AI-Powered Perfusion Analysis Workflow

The workflow illustrates the transformation from raw imaging data to clinical decision support, emphasizing the automated processing steps that enable rapid triage. Platforms such as RAPID and JLK PWI implement variations of this pipeline, with specific methodological differences in algorithmic approaches to perfusion parameter calculation and threshold application [5].

Validation Framework for AI Perfusion Platforms

Rigorous validation of AI perfusion analysis tools requires standardized assessment protocols to establish diagnostic accuracy and clinical concordance. The following methodology, adapted from a recent multicenter comparative study, provides a template for platform evaluation:

Table 2: Core Validation Metrics for AI Perfusion Platforms [5]

| Validation Dimension | Quantitative Metrics | Statistical Methods | Acceptability Threshold |
|---|---|---|---|
| Volumetric Agreement | Ischemic core volume; Hypoperfused volume; Mismatch volume | Concordance correlation coefficient (CCC); Bland-Altman plots; Pearson correlation | Excellent agreement (CCC > 0.81) |
| Clinical Decision Concordance | EVT eligibility based on DAWN/DEFUSE-3 criteria | Cohen's kappa coefficient | Substantial agreement (κ = 0.61-0.80) |
| Technical Performance | Processing time; Success rate; Artifact resistance | Descriptive statistics; Failure rate analysis | >95% technical adequacy |

Experimental Protocol 1: Multicenter Platform Validation

Study Population: Recruit 250-300 patients with acute ischemic stroke who underwent perfusion imaging within 24 hours of symptom onset. Key inclusion criteria: clinical diagnosis of acute ischemic stroke, availability of quality perfusion imaging (PWI or CTP), and documented clinical outcomes.

Imaging Acquisition: Standardize imaging protocols across participating centers with documentation of scanner manufacturer, field strength, sequence parameters (TR/TE), and contrast administration protocols.

Parallel Analysis: Process all imaging studies through both reference (e.g., RAPID) and test (e.g., JLK PWI) platforms using standardized operating procedures.

Outcome Measures:

- Primary: Volumetric agreement for ischemic core and hypoperfused tissue

- Secondary: Concordance in endovascular therapy eligibility classification

- Exploratory: Correlation with 90-day functional outcomes (modified Rankin Scale)

Statistical Analysis Plan:

- Calculate concordance correlation coefficients (CCC) with 95% confidence intervals

- Generate Bland-Altman plots with limits of agreement

- Compute Cohen's kappa for categorical treatment decisions

- Perform subgroup analyses by stroke etiology, onset-to-imaging time, and imaging modality

This validation framework recently demonstrated excellent agreement between emerging and established platforms, with CCC values of 0.87 for ischemic core and 0.88 for hypoperfused volume, alongside substantial clinical decision concordance (κ = 0.76-0.90) [5].
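
The volumetric part of the statistical analysis plan can be sketched with the standard library alone. This is a minimal illustration, assuming Lin's concordance correlation coefficient computed with population (1/n) moments and Bland-Altman limits of agreement at ±1.96 standard deviations; the function names and the example volumes are hypothetical, and confidence intervals for the CCC are omitted.

```python
from statistics import fmean, stdev


def concordance_correlation(x, y):
    """Lin's concordance correlation coefficient (population moments)."""
    n = len(x)
    mean_x, mean_y = fmean(x), fmean(y)
    var_x = sum((a - mean_x) ** 2 for a in x) / n
    var_y = sum((b - mean_y) ** 2 for b in y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    return 2 * cov_xy / (var_x + var_y + (mean_x - mean_y) ** 2)


def bland_altman_limits(reference, test):
    """Return (bias, lower, upper) with 95% limits of agreement."""
    diffs = [t - r for r, t in zip(reference, test)]
    bias = fmean(diffs)
    spread = 1.96 * stdev(diffs)  # sample standard deviation of differences
    return bias, bias - spread, bias + spread


# Hypothetical core volumes (mL) from a reference and a test platform.
reference_ml = [10.0, 25.0, 40.0, 60.0, 90.0]
test_ml = [12.0, 24.0, 43.0, 58.0, 95.0]

ccc = concordance_correlation(reference_ml, test_ml)
bias, lower, upper = bland_altman_limits(reference_ml, test_ml)
print(f"CCC = {ccc:.3f}, bias = {bias:.1f} mL, LoA = [{lower:.1f}, {upper:.1f}] mL")
```

Unlike the Pearson coefficient, the CCC penalizes systematic offsets between platforms through the squared mean difference in the denominator, which is why both statistics are usually reported together.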

Research Reagent Solutions: Essential Materials for AI Stroke Research

Table 3: Research Reagent Solutions for AI-Powered Stroke Investigation

| Category | Specific Solution | Function | Example Platforms |
|---|---|---|---|
| Imaging Analysis Software | Automated PWI/CTP processing | Quantifies perfusion parameters; delineates core/penumbra | RAPID, JLK PWI, Viz.ai, Aidoc |
| Clinical Decision Support | AI-powered triage platforms | Automates large vessel occlusion detection; facilitates care coordination | Viz.ai, RapidAI |
| Data Integration Tools | Interoperability frameworks | Enables PACS integration; supports DICOM standardization | Custom middleware solutions |
| Validation Datasets | Curated imaging libraries with reference standards | Provides ground truth for algorithm training/validation | Multicenter retrospective cohorts |
| Computational Infrastructure | High-performance computing resources | Supports deep learning model training/inference | Cloud-based GPU clusters |

The AI-powered acute stroke triage market reflects significant investment in these solutions, projected to grow from $1.72 billion in 2025 to $3.83 billion by 2029 at a compound annual growth rate of 22.2% [6]. Leading commercial platforms have demonstrated real-world impact, with implementation associated with reduced door-to-groin-puncture times and improved coordination in hub-and-spoke networks [7].

Advanced Experimental Protocols

Protocol for Comparative Platform Assessment

Experimental Protocol 2: Methodological Framework for PWI Platform Comparison [5]

Image Preprocessing Standardization:

- Apply consistent motion correction algorithms to all dynamic susceptibility contrast-enhanced PWI data

- Implement automated brain extraction via skull stripping and vessel masking

- Normalize signal intensity curves across scanner platforms and magnetic field strengths

Perfusion Parameter Calculation:

- Employ automated arterial input function selection using standardized geometric criteria

- Apply block-circulant singular value decomposition for deconvolution operations

- Calculate quantitative maps for CBF, CBV, MTT, and Tmax using identical mathematical models across platforms
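
The deconvolution step above can be sketched for a single voxel as follows. This is a simplified illustration of block-circulant truncated-SVD deconvolution, not the implementation used by any platform named here: the function name, the fixed relative truncation threshold, and the synthetic curves are assumptions, and AIF selection, signal-to-concentration conversion, and tissue density or hematocrit scaling are left out.

```python
import numpy as np


def csvd_deconvolve(aif, tissue, dt, rel_threshold=0.1):
    """Block-circulant truncated-SVD deconvolution for a single voxel.

    aif, tissue: concentration-time curves sampled every dt seconds.
    rel_threshold: singular values below this fraction of the largest are
    discarded (illustrative default, not a value from the source).
    Returns (cbf, cbv, mtt, tmax) in relative units: cbf in 1/s, cbv as a
    fraction, mtt and tmax in seconds.
    """
    aif = np.asarray(aif, dtype=float)
    tissue = np.asarray(tissue, dtype=float)
    n = aif.size
    length = 2 * n  # zero-pad to twice the length to limit wrap-around

    aif_pad = np.concatenate([aif, np.zeros(n)])
    tissue_pad = np.concatenate([tissue, np.zeros(n)])

    # Circulant matrix: D[i, j] = dt * aif_pad[(i - j) mod length]
    shift = (np.arange(length)[:, None] - np.arange(length)[None, :]) % length
    D = dt * aif_pad[shift]

    # Truncated pseudo-inverse via SVD
    U, s, Vt = np.linalg.svd(D)
    keep = s > rel_threshold * s.max()
    inv_s = np.zeros_like(s)
    inv_s[keep] = 1.0 / s[keep]
    scaled_residue = Vt.T @ (inv_s * (U.T @ tissue_pad))  # CBF * R(t)

    cbf = scaled_residue.max()
    tmax = np.argmax(scaled_residue) * dt
    cbv = tissue.sum() / aif.sum()  # ratio of areas under the curves
    mtt = cbv / cbf  # central volume principle
    return cbf, cbv, mtt, tmax


# Noise-free synthetic voxel (all values hypothetical).
dt = 1.0
t = np.arange(60) * dt
aif = t**3 * np.exp(-t / 1.5)  # gamma-variate-like bolus
true_cbf, true_mtt, delay = 0.01, 4.0, 3.0
residue = np.where(t >= delay, np.exp(-(t - delay) / true_mtt), 0.0)
tissue = dt * np.convolve(aif, true_cbf * residue)[: t.size]

# A very small threshold is acceptable only because the data are noise-free.
cbf, cbv, mtt, tmax = csvd_deconvolve(aif, tissue, dt, rel_threshold=1e-4)
print(f"CBF={cbf:.4f} 1/s, MTT={mtt:.2f} s, Tmax={tmax:.1f} s")
```

The circulant construction is what makes the estimate insensitive to bolus arrival delay, since a delayed residue function simply shifts within the padded vector. On noisy clinical data a much stronger truncation (or another regularizer) is needed, which smooths the residue function and lowers the CBF estimate.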

Tissue Classification Implementation:

- Apply consistent threshold for hypoperfused tissue definition (Tmax > 6 seconds)

- Implement platform-specific infarct core segmentation (ADC thresholding vs. deep learning approaches)

- Calculate mismatch volume as Hypoperfused Volume - Ischemic Core Volume, and mismatch ratio as Hypoperfused Volume / Ischemic Core Volume (the ratio used in the DEFUSE-3 criteria)
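
Once a Tmax map and a core segmentation exist on the same grid, the volume calculation reduces to counting voxels. The following is a minimal sketch under that assumption; the function name, the brain mask argument, and the synthetic arrays are hypothetical, and co-registration between the core mask and the perfusion map is taken as already done.

```python
import numpy as np


def lesion_volumes_ml(tmax_s, core_mask, brain_mask, voxel_volume_mm3,
                      tmax_threshold_s=6.0):
    """Return hypoperfused, core, and mismatch volumes in millilitres."""
    hypo_mask = (tmax_s > tmax_threshold_s) & brain_mask
    core = core_mask & brain_mask
    ml_per_voxel = voxel_volume_mm3 / 1000.0  # 1 mL = 1000 mm^3
    hypo_ml = float(hypo_mask.sum() * ml_per_voxel)
    core_ml = float(core.sum() * ml_per_voxel)
    return {
        "hypoperfused_ml": hypo_ml,
        "core_ml": core_ml,
        "mismatch_ml": hypo_ml - core_ml,
    }


# Synthetic 10x10x10 volume with 2 x 2 x 5 mm voxels (hypothetical values).
tmax_map = np.zeros((10, 10, 10))
tmax_map[2:8, 2:8, 2:8] = 8.0            # 216 voxels with Tmax > 6 s
core = np.zeros((10, 10, 10), dtype=bool)
core[4:6, 4:6, 4:6] = True               # 8 core voxels
brain = np.ones((10, 10, 10), dtype=bool)

volumes = lesion_volumes_ml(tmax_map, core, brain, voxel_volume_mm3=20.0)
print(volumes)
```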

Validation Against Reference Standards:

- Compare volumetric outputs against manually segmented reference standards created by expert neuroradiologists

- Assess spatial overlap using Dice similarity coefficients in addition to volumetric correlations

- Evaluate clinical concordance using established trial criteria (DAWN, DEFUSE-3) as reference standard
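
Spatial overlap with a manual reference can be quantified with the Dice similarity coefficient, 2|A ∩ B| / (|A| + |B|). A minimal sketch follows; the function name and the toy masks are hypothetical, and returning 1.0 when both masks are empty is a convention chosen here for illustration.

```python
import numpy as np


def dice_coefficient(mask_a, mask_b):
    """Dice similarity coefficient between two binary masks."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement
    return float(2.0 * np.logical_and(a, b).sum() / total)


# Toy 2D example: two 4x4 squares offset by one pixel in each direction.
automated = np.zeros((8, 8), dtype=bool)
automated[2:6, 2:6] = True
manual = np.zeros((8, 8), dtype=bool)
manual[3:7, 3:7] = True

dice = dice_coefficient(automated, manual)
print(f"Dice = {dice:.4f}")
```

Two segmentations can have identical volumes and still overlap poorly, which is why Dice is reported alongside volumetric correlations rather than instead of them.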

This protocol recently demonstrated that emerging PWI analysis platforms can achieve excellent technical agreement (CCC = 0.87-0.88) and substantial clinical decision concordance (κ = 0.76-0.90) with established commercial systems [5].

Implementation Science Framework

Experimental Protocol 3: Health Systems Integration and Impact Assessment

Pre-Implementation Baseline Assessment:

- Establish baseline metrics for key stroke care time intervals (door-to-imaging, door-to-needle, door-to-puncture)

- Document existing workflow processes and identify potential bottlenecks

- Assess specialist availability and communication pathways between emergency departments, radiologists, and stroke neurologists

Staged Implementation Approach:

- Phase 1: Implement AI-powered automated detection with PACS integration

- Phase 2: Add automated notification systems to mobile devices and secure messaging platforms

- Phase 3: Integrate telemedicine capabilities for remote expert consultation

Outcome Measurement Framework:

- Primary effectiveness endpoints: time metrics for critical care pathway steps

- Secondary clinical endpoints: rates of intravenous thrombolysis and endovascular therapy, 90-day functional outcomes

- System efficiency endpoints: reduction in inter-facility transfer times, appropriate triage decisions

Economic Impact Assessment:

- Calculate operational costs associated with platform implementation and maintenance

- Measure resource utilization changes including length of stay and ICU days

- Assess cost-effectiveness through quality-adjusted life year (QALY) analysis

Real-world implementation of these systems has demonstrated meaningful improvements in workflow efficiency, with one study reporting significant reductions in inter-facility transfer times and hospital length of stay following AI coordination platform deployment [7].

The integration of AI-powered perfusion analysis into acute stroke triage represents a maturation of the "time is brain" paradigm, augmenting temporal urgency with precision imaging assessment. The experimental frameworks and validation methodologies detailed in these application notes provide researchers and drug development professionals with standardized approaches for advancing this rapidly evolving field. As the technology continues to develop, priorities include prospective multicenter validation, addressing algorithmic bias across diverse populations, and demonstrating cost-effectiveness across healthcare systems. Through rigorous implementation of these protocols, the stroke research community can further refine the synthesis of speed and precision that defines modern acute stroke care.

Perfusion is a fundamental biological function that refers to the delivery of oxygen and nutrients to tissue by means of blood flow at the capillary level [8]. Unlike bulk blood flow through major vessels, perfusion imaging captures hemodynamic processes at the microcirculatory level, providing critical insights into tissue viability and function [9]. In the context of acute ischemic stroke (AIS) and neuro-oncology, perfusion imaging has emerged as an indispensable tool for identifying salvageable brain tissue, guiding treatment decisions, and advancing therapeutic development.

The transition from raw imaging data to quantitative perfusion maps relies on sophisticated tracer kinetic models and computational algorithms. With the advent of artificial intelligence (AI), this process is being transformed through automated analysis, enhanced accuracy, and reduced processing times. This article explores the fundamental principles, protocols, and emerging AI applications that are shaping the future of perfusion imaging in clinical research and drug development.

Physical Principles and Technical Foundations

Core Hemodynamic Parameters

Perfusion imaging quantifies three primary hemodynamic parameters that characterize tissue vascularity and function, as defined in the table below.

Table 1: Key Perfusion Parameters and Their Significance

| Parameter | Abbreviation | Units | Physiological Significance |
|---|---|---|---|
| Cerebral Blood Flow | CBF | mL/100g/min | Rate of blood delivery to capillary beds [8] [9] |
| Cerebral Blood Volume | CBV | mL/100g | Volume of flowing blood in capillary network [10] [9] |
| Mean Transit Time | MTT | seconds | Average time for blood to pass through tissue vasculature [10] [8] |

These parameters are interrelated through the central volume principle: CBV = CBF × MTT [9]. This relationship forms the mathematical foundation for calculating perfusion maps from tracer kinetics data.
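
Because CBF is expressed per minute and MTT in seconds, applying the central volume principle requires a unit conversion. The short sketch below makes that explicit; the function name and the example values are illustrative assumptions, not measurements from the cited studies.

```python
def mtt_seconds(cbv_ml_per_100g: float, cbf_ml_per_100g_min: float) -> float:
    """MTT = CBV / CBF, converted from minutes to seconds."""
    if cbf_ml_per_100g_min <= 0:
        raise ValueError("CBF must be positive")
    return cbv_ml_per_100g / cbf_ml_per_100g_min * 60.0


# Illustrative values: CBV = 4 mL/100g, CBF = 50 mL/100g/min.
mtt = mtt_seconds(cbv_ml_per_100g=4.0, cbf_ml_per_100g_min=50.0)
print(f"MTT = {mtt:.1f} s")  # MTT = 4.8 s
```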

Tracer Kinetic Models

The conversion of pixel intensity changes to quantitative perfusion maps relies on tracer kinetic modeling. Two primary approaches dominate clinical practice:

- Compartmental Models: These models describe contrast agent distribution between intravascular and extravascular compartments. The Patlak model quantifies blood volume and capillary permeability, assuming unidirectional transfer from intravascular to extravascular space [11].

- Deconvolution Methods: These approaches use arterial and tissue time-concentration curves to calculate the impulse residue function, which characterizes the tissue response to an ideal arterial bolus [11]. Deconvolution is particularly valuable for calculating perfusion despite variable arterial input functions.

The mathematical foundation for these models is represented in the following workflow:

Modality-Specific Imaging Approaches

Computed Tomography Perfusion (CTP)

CT perfusion imaging follows the tracer kinetic model using iodinated contrast agents. During a CTP study, dynamic CT scanning captures the first pass of contrast through cerebral vasculature, generating time-attenuation curves for each voxel [9] [11]. The fundamental equation relating signal intensity to contrast concentration is:

ΔHU(t) ∝ C(t)

where ΔHU(t) is the change in Hounsfield Units over time and C(t) is the tissue concentration of contrast agent [11].

Table 2: Typical CTP Acquisition Protocol for Acute Stroke

| Parameter | Specification | Rationale |
|---|---|---|
| Scanner Type | Multidetector CT (≥16-slice) | Adequate temporal and spatial resolution [11] |
| Contrast Volume | 40-50 mL | Sufficient bolus for first-pass kinetics [9] |
| Injection Rate | 4-6 mL/sec | Compact bolus for accurate parameter estimation [9] [11] |
| Temporal Sampling | 1 image/second for 45-60 seconds | Capture complete first-pass kinetics [9] |
| Coverage | 80-160 mm (depending on detector array) | Include major vascular territories [9] |
| Tube Parameters | 80 kVp, 100-200 mAs | Balance radiation dose and image quality [9] |

Magnetic Resonance Perfusion Imaging

MR perfusion encompasses three distinct techniques with different contrast mechanisms and applications:

Dynamic Susceptibility Contrast (DSC) MRI: Based on T2* susceptibility effects during the first pass of gadolinium-based contrast agents. The signal intensity follows the relationship S(t) = S₀ · exp(-TE · ΔR2*(t)), where S₀ is the pre-contrast baseline signal, TE is the echo time, and ΔR2* is the change in transverse relaxation rate, which is approximately proportional to contrast concentration [10] [8]. DSC-MRI is particularly valuable for brain tumors and cerebrovascular diseases.

Dynamic Contrast-Enhanced (DCE) MRI: Utilizes T1-weighted sequences to track contrast extravasation into the extravascular extracellular space. The longitudinal relaxation rate increases linearly with contrast concentration: R1 = R10 + r1·C, where R1 is the longitudinal relaxation rate, R10 is the pre-contrast relaxation rate, r1 is the longitudinal relaxivity, and C is contrast concentration [8].

Arterial Spin Labeling (ASL): A completely non-invasive technique that uses magnetically labeled arterial blood water as an endogenous diffusible tracer [8]. ASL provides quantitative CBF measurements without exogenous contrast administration but has lower signal-to-noise ratio compared to contrast-based methods.
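
For DSC-MRI, the first processing step is converting the measured signal into a relaxation-rate change. A minimal sketch follows, assuming the standard echo-time scaling ΔR2*(t) = -ln(S(t)/S₀)/TE with S₀ taken as the mean of the pre-bolus samples; the function name, the number of baseline samples, and the toy signal are hypothetical.

```python
import math


def delta_r2star(signal, n_baseline, te_s):
    """Convert a DSC signal-time curve to delta-R2* (1/s).

    signal: positive signal intensities over time.
    n_baseline: number of pre-bolus samples averaged to estimate S0.
    te_s: echo time in seconds.
    """
    if n_baseline < 1 or te_s <= 0:
        raise ValueError("n_baseline must be >= 1 and te_s must be positive")
    if any(s <= 0 for s in signal):
        raise ValueError("signal values must be positive")
    s0 = sum(signal[:n_baseline]) / n_baseline
    return [-math.log(s / s0) / te_s for s in signal]


# Toy curve: flat baseline, signal drop during bolus passage, recovery.
signal = [100.0, 100.0, 100.0, 80.0, 60.0, 80.0, 100.0]
curve = delta_r2star(signal, n_baseline=3, te_s=0.045)  # TE = 45 ms
peak_index = max(range(len(curve)), key=curve.__getitem__)
print(f"peak delta-R2* = {curve[peak_index]:.2f} 1/s at sample {peak_index}")
```

The resulting curve is treated as proportional to contrast concentration and serves as the input to deconvolution; leakage correction, which matters in tumors with a disrupted blood-brain barrier, is not shown.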

Table 3: Comparison of MR Perfusion Techniques

| Feature | DSC-MRI | DCE-MRI | ASL |
|---|---|---|---|
| Contrast Mechanism | T2*/T2 weighting | T1 weighting | Endogenous blood labeling |
| Contrast Agent | Gadolinium-based | Gadolinium-based | None |
| Primary Parameters | rCBV, rCBF, MTT | Ktrans, ve, vp | CBF |
| Key Applications | Tumor grading, stroke | Oncology, permeability assessment | Pediatric imaging, longitudinal studies |
| Strengths | High sensitivity to microvasculature | Quantifies permeability | Non-invasive, absolute quantification |
| Limitations | Susceptibility to leakage effects | Complex modeling | Low signal-to-noise ratio |

Experimental Protocols and Methodologies

CT Perfusion Protocol for Acute Stroke

For acute ischemic stroke evaluation, CTP protocols prioritize rapid acquisition and processing to identify potentially salvageable tissue [9] [12]:

- Patient Preparation: Establish large-bore (18-gauge) intravenous access in antecubital vein.

- Baseline Imaging: Perform non-contrast head CT to exclude hemorrhage and establish anatomical reference.

- Scan Planning: Position CTP volume to include territories of anterior, middle, and posterior cerebral arteries.

- Contrast Administration: Inject 40 mL of non-ionic iodinated contrast (300-370 mgI/mL) at 4-5 mL/sec followed by saline flush.

- Image Acquisition: Initiate cine acquisition 5-7 seconds after injection start; acquire 4-8 slices every 1-2 seconds for 45-60 seconds.

- Post-Processing: Utilize deconvolution algorithms to generate CBF, CBV, and MTT maps with automated vessel identification.

MR Perfusion Protocol for Neuro-Oncology

Comprehensive brain tumor evaluation often combines DSC and DCE techniques to capture both vascularity and permeability characteristics [10] [13]:

- Patient Setup: Place 20-gauge or larger peripheral IV for power injector.

- Pre-Contrast Imaging: Acquire structural sequences including 3D T1-weighted GRE, T2-weighted, and FLAIR.

- DSC-MRI Acquisition:

  - Use T2* weighted EPI sequence

  - Inject 2/3 total contrast dose at 4-5 mL/sec 8-10 seconds after sequence start

  - Apply flip angle of 30-35° to minimize T1 leakage effects

  - Acquire 1.5-2 second temporal resolution for 60-90 seconds

- DCE-MRI Acquisition:

  - Use 3D T1-weighted sequence

  - Inject remaining 1/3 contrast dose at 2 mL/sec

  - Acquire pre-contrast baseline with variable flip angles for T1 mapping

  - Maintain 3-5 second temporal resolution during 5-10 minute acquisition

- Post-Contrast Imaging: Obtain high-resolution 3D T1-weighted images for anatomical reference.

The integration of these techniques is visualized in the following workflow:

AI-Driven Automation in Perfusion Analysis

Current AI Applications in Perfusion Imaging

Artificial intelligence is revolutionizing perfusion analysis through automated processing, enhanced accuracy, and novel approaches to data interpretation:

Fully Automated Processing: Commercial platforms like RAPID AI automatically coregister images, identify arterial input functions, generate parametric maps, and segment perfusion abnormalities based on validated thresholds (e.g., Tmax >6 seconds for critically hypoperfused tissue) [12]. These systems achieve sensitivity of 95.55% and specificity of 81.73% for detecting arterial occlusions in acute stroke [12].

Cross-Modality Prediction: Generative adversarial networks (GANs) can predict perfusion parameters directly from non-contrast CT images, potentially eliminating the need for dedicated perfusion studies. Recent studies demonstrate moderate performance (SSIM 0.79-0.83) in generating CBF and Tmax maps from NCHCT [14].

Workflow Integration: AI platforms like deepcOS integrate automated perfusion analysis directly into clinical workflows, providing processing in under three minutes with DEFUSE-3 criteria visualization for evidence-based treatment decisions [15].

Validation and Performance Metrics

AI algorithms for perfusion analysis require rigorous validation against ground truth measurements:

Table 4: Performance Metrics of AI-Based Perfusion Analysis Tools

| Metric | Current Performance | Validation Standard |
|---|---|---|
| Sensitivity for LVO Detection | 95.55% (CI: 93.50-97.10%) [12] | CTA-confirmed occlusion |
| Specificity for LVO Detection | 81.73% (CI: 75.61-86.86%) [12] | CTA-confirmed occlusion |
| Negative Predictive Value | 98.22% (CI: 97.39-98.79%) [12] | CTA as reference standard |
| SSIM for Synthetic CBF Maps | 0.79 (GAN-based prediction) [14] | Ground truth CTP |
| Processing Time | <3 minutes [15] | Manual processing (>10 minutes) |
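
The detection metrics in Table 4 all derive from a 2×2 confusion matrix against the reference standard. The sketch below shows the arithmetic; the function name is hypothetical and the counts are invented for illustration, so they do not reproduce the figures reported in [12].

```python
def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Sensitivity, specificity, PPV, and NPV from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),  # detected occlusions / all occlusions
        "specificity": tn / (tn + fp),  # correct negatives / all non-occlusions
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


# Hypothetical counts: 100 CTA-confirmed occlusions, 200 without occlusion.
metrics = diagnostic_metrics(tp=90, fp=40, tn=160, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

Sensitivity and specificity are properties of the test, whereas the predictive values also depend on how common occlusions are in the studied population, so an NPV from one cohort does not transfer directly to a setting with a different prevalence.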

The Scientist's Toolkit: Essential Research Reagents and Materials

Successful implementation of perfusion imaging protocols requires specific technical resources and analytical tools:

Table 5: Essential Research Resources for Perfusion Imaging Studies

| Resource | Specification | Research Application |
|---|---|---|
| CT Scanner | Multidetector (≥16 slices) with 0.5-1s rotation time | Adequate temporal resolution for tracer kinetics [11] |
| MRI System | 1.5T or 3T with high-performance gradients | DSC/DCE/ASL sequence implementation [10] [13] |
| Contrast Agent | Iodinated (370-400 mgI/mL) or Gadolinium-based | Optimal bolus characteristics for first-pass imaging [9] [11] |
| Power Injector | Dual-syringe with programmable rates | Precise bolus administration and saline flush [9] [13] |
| Post-Processing Software | RAPID, Olea, MITK, or custom algorithms | Parametric map generation and quantitative analysis [15] [12] |
| AI Platforms | deepcOS, mRay-VEOcore, custom neural networks | Automated analysis and cross-modality prediction [15] [14] |

Advanced Applications in Stroke Research and Drug Development

Perfusion imaging provides critical biomarkers for evaluating novel therapeutics in stroke and neuro-oncology:

Ischemic Penumbra Identification: CTP and MRP define the mismatch between critically hypoperfused tissue (Tmax >6 seconds) and the irreversibly injured core (CBF <30% normal or ADC reduction) [9] [12]. This mismatch identifies patients most likely to benefit from revascularization therapies beyond conventional time windows.

Treatment Response Assessment: In neuro-oncology, perfusion parameters (particularly rCBV) correlate with tumor grade, differentiate true progression from pseudo-progression, and provide early biomarkers of treatment efficacy [10] [8].

Clinical Trial Endpoints: Automated perfusion analysis enables standardized assessment of therapeutic efficacy across multiple centers, supporting drug development through quantitative imaging biomarkers [15] [12].

The integration of AI into the perfusion analysis pipeline creates new opportunities for research and drug development.

The management of acute ischemic stroke is a race against time, where every minute of delay results in the loss of nearly 1.9 million neurons [7]. Traditional neuroimaging modalities, while foundational to diagnosis, present significant limitations including processing delays, accessibility issues, and interpretive variability. The integration of Artificial Intelligence (AI), particularly deep learning, is fundamentally transforming this landscape by extracting nuanced, quantitative data from standard imaging studies that far surpasses human visual assessment capabilities [16].

This revolution is most evident in perfusion imaging analysis, where automated platforms now provide rapid, objective quantification of ischemic core, penumbra, and tissue-at-risk volumes. These AI-driven insights are crucial for extending treatment windows and personalizing therapeutic strategies, particularly for endovascular thrombectomy (EVT) [17]. This document provides detailed application notes and experimental protocols to guide researchers in implementing and validating these transformative technologies within acute stroke research programs.

Quantitative Performance Validation of Automated Perfusion Analysis Platforms

Table 1: Comparative Performance Metrics of RAPID and JLK PWI Platforms

| Performance Parameter | RAPID Platform | JLK PWI Platform | Validation Metrics |
|---|---|---|---|
| Ischemic Core Volume Agreement | Reference Standard | Excellent Agreement (CCC = 0.87) [17] | Concordance Correlation Coefficient (CCC), Pearson Correlation |
| Hypoperfused Volume Agreement | Reference Standard | Excellent Agreement (CCC = 0.88) [17] | Concordance Correlation Coefficient (CCC), Pearson Correlation |
| EVT Eligibility (DAWN Criteria) | Reference Classification | Very High Concordance (κ = 0.80 - 0.90) [17] | Cohen's Kappa |
| EVT Eligibility (DEFUSE-3 Criteria) | Reference Classification | Substantial Agreement (κ = 0.76) [17] | Cohen's Kappa |
| Primary Clinical Application | Triage for Thrombectomy | Reliable Alternative for MRI-based Perfusion [17] | Multicenter Retrospective Validation |

Key: CCC: Concordance Correlation Coefficient; EVT: Endovascular Therapy; κ: Kappa statistic for inter-rater reliability.

Experimental Protocol: Validation of an Automated PWI Analysis Pipeline

Study Population and Data Acquisition

- Patient Cohort: Recruit patients with acute ischemic stroke who underwent MR Perfusion-Weighted Imaging (PWI) within 24 hours of symptom onset. The reference validation cohort comprised 299 patients with a median NIHSS score of 11 [17].

- Inclusion Criteria: Confirmed acute ischemic stroke; availability of PWI and DWI sequences; known time of symptom onset.

- Exclusion Criteria: Significant motion artifacts; abnormal arterial input function; inadequate image quality [17].

- Imaging Parameters: Acquire Dynamic Susceptibility Contrast-enhanced PWI using a gradient-echo echo-planar imaging (GE-EPI) sequence. Standard parameters include [17]:

  - Repetition Time (TR): 1,500–2,000 ms

  - Echo Time (TE): 40–50 ms

  - Field of View (FOV): 230 × 230 mm²

  - Slice Thickness: 5 mm with no interslice gap

Automated Perfusion Processing Workflow

The following diagram illustrates the core computational pipeline for automated perfusion analysis.

Protocol Steps:

- Image Preprocessing: Export reconstructed images in DICOM format. Perform motion correction and apply brain extraction via skull stripping and vessel masking [17].

- Arterial Input Function (AIF) Selection: Automatically select the AIF and Venous Output Function (VOF), followed by conversion of the MR signal [17].

- Deconvolution and Map Calculation: Perform block-circulant singular value decomposition (SVD) to calculate quantitative perfusion maps, including [17]:

  - Cerebral Blood Flow (CBF)

  - Cerebral Blood Volume (CBV)

  - Mean Transit Time (MTT)

  - Time to maximum (Tmax)

- Ischemic Core and Mismatch Calculation:

  - For RAPID: Ischemic core is estimated using ADC < 620 × 10⁻⁶ mm²/s [17].

  - For JLK PWI: A deep learning-based algorithm segments the infarct core on b1000 DWI, which is then co-registered to the perfusion maps [17].

  - The hypoperfused region is delineated using a threshold of Tmax > 6 seconds. The mismatch volume is computed as the difference between the hypoperfused volume and the ischemic core volume [17].

- Visual Inspection: Technical adequacy of all segmentations and resulting images must be confirmed by visual inspection before inclusion in the final analysis [17].
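
Once core and hypoperfused volumes are available, the imaging part of an eligibility decision is a simple rule. The sketch below uses the published DEFUSE-3 imaging thresholds (core < 70 mL, mismatch ratio ≥ 1.8, mismatch volume ≥ 15 mL), which are not restated in this article; the ratio is taken as hypoperfused volume divided by core volume, and the function name is hypothetical. Clinical criteria such as time window, occlusion site, age, and NIHSS are deliberately left out.

```python
import math


def defuse3_imaging_eligible(core_ml: float, hypoperfused_ml: float) -> bool:
    """Check only the DEFUSE-3 imaging criteria (not the clinical ones)."""
    mismatch_ml = hypoperfused_ml - core_ml
    # With no measurable core the ratio is unbounded.
    ratio = hypoperfused_ml / core_ml if core_ml > 0 else math.inf
    return core_ml < 70.0 and ratio >= 1.8 and mismatch_ml >= 15.0


# Hypothetical (core, hypoperfused) volume pairs in mL.
cases = {
    "small core, large penumbra": (20.0, 80.0),
    "large core": (80.0, 200.0),
    "low ratio": (30.0, 40.0),
    "small mismatch volume": (5.0, 15.0),
}
for label, (core_ml, hypo_ml) in cases.items():
    print(f"{label}: {defuse3_imaging_eligible(core_ml, hypo_ml)}")
```

Running the same rule on the volumes produced by two platforms yields the paired categorical decisions on which Cohen's kappa is computed.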

Statistical Analysis for Platform Validation

- Volumetric Agreement: Assess agreement for ischemic core, hypoperfused volume, and mismatch volume using Concordance Correlation Coefficients (CCC), Pearson correlation coefficients, and Bland-Altman plots [17].

- Clinical Decision Concordance: Evaluate agreement in EVT eligibility based on DAWN and DEFUSE-3 trial criteria using Cohen’s kappa (κ) [17].

- Interpretation of Agreement Statistics:

  - CCC/Pearson: Values > 0.80 indicate excellent correlation.

  - Kappa: < 0.20 (Poor); 0.21-0.40 (Fair); 0.41-0.60 (Moderate); 0.61-0.80 (Substantial); > 0.80 (Almost Perfect) [17].
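
Cohen's kappa corrects the observed agreement between two platforms for the agreement expected by chance. A minimal sketch using the interpretation bands listed above follows; the function names and the paired eligibility decisions are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    labels = set(count_a) | set(count_b)
    expected = sum(count_a[k] * count_b[k] for k in labels) / n**2
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)


def interpret_kappa(kappa):
    """Map kappa to the agreement bands used in this protocol."""
    if kappa <= 0.20:
        return "Poor"
    if kappa <= 0.40:
        return "Fair"
    if kappa <= 0.60:
        return "Moderate"
    if kappa <= 0.80:
        return "Substantial"
    return "Almost Perfect"


# Hypothetical EVT eligibility decisions for ten patients.
reference = [True] * 6 + [False] * 4
candidate = [True] * 6 + [False, False, False, True]

kappa = cohens_kappa(reference, candidate)
print(f"kappa = {kappa:.3f} ({interpret_kappa(kappa)})")
```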

Advanced AI Applications: Overcoming Specific Imaging Limitations

Generative AI for Cross-Modality Perfusion Prediction

A novel application of generative AI uses a modified pix2pix-turbo Generative Adversarial Network (GAN) to predict perfusion parameters like relative CBF (rCBF) and Tmax directly from non-contrast head CT (NCHCT) images [14]. This approach addresses the limitations of CT perfusion, including additional radiation exposure and processing delays.

Experimental Workflow for Generative AI Perfusion Mapping:

Performance Metrics: In a pilot study, GAN-generated Tmax maps achieved a Structural Similarity Index Measure (SSIM) of 0.827 and Fréchet Inception Distance (FID) of 62.21, demonstrating the feasibility of capturing key hemodynamic features from NCHCT [14].
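
To make the SSIM figure concrete, the sketch below computes a simplified, single-window (global) version of the index from image means, variances, and covariance, using the conventional constants K1 = 0.01 and K2 = 0.03. This is an assumption-laden illustration: published SSIM values are normally averaged over local sliding windows, so this function will not reproduce the numbers reported in [14], and the function name and test images are hypothetical.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two images of identical shape."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (k1 * data_range) ** 2  # stabilizes the luminance term
    c2 = (k2 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x**2 + mu_y**2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


# Synthetic "ground truth" map and a degraded prediction (values in [0, 1]).
truth = np.linspace(0.0, 1.0, 64).reshape(8, 8)
prediction = 0.5 * truth + 0.1  # compressed contrast and shifted mean

print(f"SSIM(truth, truth)      = {global_ssim(truth, truth):.3f}")
print(f"SSIM(truth, prediction) = {global_ssim(truth, prediction):.3f}")
```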

Machine Learning for Stroke Sub-Type Identification in Resource-Limited Settings

In environments lacking advanced neuroimaging, ML frameworks can classify stroke type (ischemic vs. hemorrhagic) using clinical data alone.

Table 2: Performance of ML Framework for Stroke-Type Identification

| Metric | Performance | Context & Comparison |
|---|---|---|
| Weighted Accuracy | 82.42% | Trained on 2,190 patients with 79 clinical attributes [18] |
| Sensitivity | 82.19% | Ability to correctly identify true stroke types [18] |
| Specificity | 82.65% | Ability to correctly rule out non-existent stroke types [18] |
| F1-Score | 86.68% | Harmonic mean of precision and recall [18] |
| Prospective Validation | 16.42% improvement over Siriraj clinical score | Demonstrates real-world utility and generalizability [18] |

Key Methodology:

- Data Imputation: Employs Multiple Imputation by Chained Equations (MICE) to handle missing data robustly [18].

- Feature Reduction: Utilizes SHAP analysis to identify the 19 most significant clinical attributes, maintaining 82.20% accuracy with a reduced feature set [18].

- Bias Mitigation: Addresses class imbalance (70% Ischemic, 30% Hemorrhagic) by assigning equal weights to both classes during model training [18].
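The metrics in Table 2 and the class-weighting step can be illustrated with a short sketch. It assumes a binary confusion matrix and interprets "equal weights to both classes" as inverse-frequency weighting, which is one common reading rather than the confirmed method of the cited study; the function names and the example counts used to exercise them are hypothetical. Under equal class weighting, weighted accuracy reduces to the mean of sensitivity and specificity, which is consistent with the reported figures: (82.19% + 82.65%) / 2 = 82.42%.

```python
def binary_metrics(tp, fn, tn, fp):
    """Sensitivity, specificity, F1, and class-balanced accuracy from counts."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
        # Each class contributes equally, regardless of its prevalence.
        "balanced_accuracy": 0.5 * (sensitivity + specificity),
    }

def inverse_frequency_weights(class_counts):
    """Per-class weights chosen so every class has the same total weight."""
    total = sum(class_counts.values())
    n_classes = len(class_counts)
    return {name: total / (n_classes * count) for name, count in class_counts.items()}
```

With a 70%/30% split, the minority class receives the larger per-sample weight, so both classes carry the same total weight during training.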

The Scientist's Toolkit: Essential Research Reagents & Software

Table 3: Key Research Reagents and Software Platforms for AI Stroke Research

| Item Name | Type | Primary Function in Research | Example Use Case |
|---|---|---|---|
| RAPID | Commercial AI Software | Automated processing of CTP and PWI to quantify ischemic core and penumbra [19]. | Triage for thrombectomy; core lab adjudication in clinical trials [17] [19]. |
| JLK PWI | Research AI Software | Automated PWI analysis pipeline for ischemic core estimation and hypoperfusion volume calculation [17]. | Comparative validation studies; MRI-based perfusion analysis [17]. |
| GAN (pix2pix-turbo) | Deep Learning Model | Generative network for cross-modality translation of NCHCT to synthetic perfusion maps [14]. | Exploring perfusion imaging in resource-limited settings; reducing radiation exposure [14]. |
| MICE Imputer | Statistical Algorithm | Handles missing data in clinical datasets for robust model training [18]. | Preprocessing of real-world, incomplete clinical stroke registries [18]. |
| SHAP Analysis | Explainable AI Tool | Identifies the most important clinical/model features driving predictions [18]. | Interpreting "black box" models; feature reduction for efficiency [18]. |
| DSC-PWI Sequence | MRI Protocol | Dynamic Susceptibility Contrast perfusion imaging for acquiring hemodynamic data [17]. | Generating ground truth perfusion maps for model training and validation [17]. |

Critical Analysis and Future Directions

While AI demonstrates remarkable performance, researchers must account for critical pitfalls. Dataset bias from single-institution data, temporal drift in clinical practices, and domain shifts across scanner vendors can severely limit model generalizability [20]. Furthermore, the explainability gap—the inability of many complex models to provide a rationale for their outputs—remains a significant barrier to clinical trust and adoption, especially in high-stakes decisions like thrombolysis eligibility [20].

Future research must prioritize:

- Multicenter Prospective Validation: Moving beyond retrospective studies to demonstrate real-world efficacy [7] [20].

- Explainable AI (XAI): Integrating attention maps and saliency features to align model outputs with clinical reasoning and build trust [20].

- Cross-Modality Fusion: Developing transformer-based networks and other advanced architectures to effectively integrate multimodal data (e.g., CT, MRI, clinical notes) for a more holistic patient assessment [16].

The integration of AI into acute stroke imaging is not about replacing clinicians but about providing powerful, objective tools that augment clinical decision-making. By standardizing assessments, compressing time-sensitive workflows, and revealing otherwise imperceptible diagnostic patterns, AI is fundamentally overcoming the long-standing limitations of traditional imaging and paving the way for more precise and accessible stroke care [7] [20].

Perfusion imaging has revolutionized treatment decisions in acute ischemic stroke (AIS), transitioning patient selection from a purely time-based paradigm to a more sophisticated tissue-based model. By quantifying the ischemic core (irreversibly injured tissue) and the hypoperfused penumbra (salvageable tissue), perfusion analysis enables clinicians to identify patients most likely to benefit from reperfusion therapies while avoiding harm in those with minimal potential for recovery. The integration of automated, artificial intelligence (AI)-driven perfusion analysis platforms has further standardized this assessment, facilitating rapid and evidence-based treatment decisions in time-sensitive scenarios. This application note delineates how perfusion analysis informs eligibility for intravenous thrombolysis (IVT) and endovascular thrombectomy (EVT), core pillars of acute stroke reperfusion therapy.

Clinical Application: Perfusion Analysis for Thrombolysis Decisions

Extending the Therapeutic Time Window

For patients presenting within the standard 4.5-hour time window, current guidelines do not mandate perfusion imaging for IVT eligibility. A recent retrospective analysis found that IVT was equally safe and effective in AIS patients without perfusion deficits on CT perfusion (CTP) as in those with perfusion deficits, suggesting limited clinical utility for routine CTP in early presenters [21]. However, perfusion imaging is crucial for patients presenting in extended or unknown time windows.

A 2025 systematic review and meta-analysis demonstrated that IVT in selected patients beyond 4.5 hours from last known well significantly improved excellent functional outcome (modified Rankin Scale [mRS] 0-1) at 90 days (RR=1.24; 95% CI: 1.14–1.34) despite a higher rate of symptomatic intracerebral hemorrhage (sICH) (RR=2.75; 95% CI: 1.49–5.05) [22]. This evidence supports the use of perfusion imaging to identify patients with favorable perfusion patterns who may benefit from late-window thrombolysis.

Predicting Hemorrhagic Transformation Risk

Beyond identifying salvageable tissue, perfusion parameters can help stratify the risk of hemorrhagic transformation (HT), a serious complication of IVT. Research has identified that the permeability surface area product (PS), a parameter reflecting blood-brain barrier disruption, is significantly elevated in patients who develop HT post-IVT [23].

A dynamic nomogram model integrating the National Institutes of Health Stroke Scale (NIHSS) score before IVT, atrial fibrillation (AF), and relative PS (rPS) achieved an area under the curve (AUC) of 0.899 (95% CI: 0.814–0.984) for predicting HT risk, providing a valuable tool for personalized risk assessment [23]. This allows clinicians to weigh the benefits of reperfusion against the risks of hemorrhage more accurately.
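The AUC reported for the nomogram has a direct probabilistic reading: it is the chance that a randomly chosen patient who developed HT received a higher predicted risk than a randomly chosen patient who did not. The sketch below computes it from two lists of predicted risks using that pairwise definition; the function name `roc_auc` is hypothetical, and any scores passed to it would come from the user's own model rather than from the cited study.

```python
def roc_auc(scores_positive, scores_negative):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    if not scores_positive or not scores_negative:
        raise ValueError("both groups must contain at least one score")
    wins = 0.0
    for pos in scores_positive:
        for neg in scores_negative:
            if pos > neg:
                wins += 1.0
            elif pos == neg:
                wins += 0.5
    return wins / (len(scores_positive) * len(scores_negative))
```

The pairwise loop is quadratic in cohort size, which is acceptable for cohorts of a few hundred patients; rank-based implementations are preferable for larger datasets.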

Table 1: Key Perfusion Parameters for Thrombolysis Decisions

| Parameter | Clinical Role | Interpretation | Evidence Source |
|---|---|---|---|
| Perfusion Deficit Presence | Identifies salvageable tissue (penumbra) in extended windows | Patients with targetable penumbra may benefit from IVT beyond 4.5 hours | Systematic Review/Meta-analysis [22] |
| Permeability Surface (PS) | Predicts hemorrhagic transformation risk | Higher values indicate blood-brain barrier disruption; elevated risk of post-thrombolysis hemorrhage | Retrospective Cohort [23] |
| Ischemic Core Volume | Estimates extent of irreversible injury | Larger core volumes may be associated with poorer outcomes and higher complication risks | Retrospective Analysis [21] |

Clinical Application: Perfusion Analysis for Thrombectomy Decisions

Standardizing EVT Eligibility with Automated Platforms

Perfusion imaging plays a critical role in patient selection for EVT, particularly in extended time windows (>6 hours from last known well). Automated software platforms utilize validated criteria from landmark trials (DAWN, DEFUSE-3) to standardize EVT eligibility assessment [5] [17]. These platforms automatically calculate key volumetric parameters, including ischemic core volume, hypoperfused volume (Tmax >6s), and mismatch ratio (hypoperfused volume divided by core volume), applying trial-specific thresholds to determine treatment candidacy.

A 2025 multicenter comparative validation study demonstrated that a novel AI-based perfusion-weighted imaging (PWI) software (JLK PWI) showed excellent agreement with the established RAPID platform for ischemic core volume (concordance correlation coefficient [CCC]=0.87) and hypoperfused volume (CCC=0.88) [5] [17]. The platforms showed very high concordance in EVT eligibility classification using DAWN criteria (κ=0.80–0.90) and substantial agreement using DEFUSE-3 criteria (κ=0.76) [5], supporting the use of automated platforms for reliable and reproducible EVT triage.

Expanding EVT to Large Core Infarctions

Historically, patients with large ischemic cores were excluded from EVT due to concerns about limited benefit and increased procedural risks. Recent trials have fundamentally changed this paradigm, showing that thrombectomy consistently improved functional outcomes in large core patients compared to medical management alone [24]. Imaging-based selection, particularly the non-contrast CT Alberta Stroke Program Early CT Score (ASPECTS) and CTP core volume measurement, is essential for identifying appropriate candidates for large core thrombectomy.

While absolute functional independence rates (mRS 0-2) were lower than in trials enrolling patients with smaller cores, they still significantly favored thrombectomy, with no significant increase in rates of sICH [24]. This expansion of EVT eligibility underscores the critical role of precise perfusion imaging in identifying patients who may benefit from intervention despite extensive baseline infarction.

Table 2: Key Perfusion Parameters for Thrombectomy Decisions

| Parameter | Clinical Role | Interpretation | Evidence Source |
|---|---|---|---|
| Ischemic Core Volume | Quantifies irreversibly injured tissue | DEFUSE-3: <70 mL; DAWN: age/NIHSS-dependent thresholds | Comparative Validation [5] |
| Hypoperfused Volume (Tmax >6s) | Identifies total tissue at risk | Larger volumes indicate more extensive perfusion compromise | Comparative Validation [5] |
| Mismatch Ratio | Estimates penumbra relative to core | DEFUSE-3: Ratio ≥1.8; indicates sufficient salvageable tissue | Comparative Validation [5] |
| Mismatch Volume | Absolute volume of salvageable tissue | DEFUSE-3: Absolute penumbra volume ≥15 mL | Comparative Validation [5] |
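The DEFUSE-3 imaging thresholds in Table 2 translate into a simple rule check. The following is a minimal sketch covering the imaging criteria only (clinical criteria such as time window, age, and NIHSS are not modeled); the class and function names are hypothetical, and the sketch assumes the mismatch ratio is the hypoperfused (Tmax >6s) volume divided by the core volume, with mismatch volume as their difference.

```python
from dataclasses import dataclass

@dataclass
class PerfusionVolumes:
    core_ml: float          # ischemic core volume in mL
    hypoperfused_ml: float  # Tmax > 6 s volume in mL

def defuse3_imaging_eligible(v: PerfusionVolumes) -> bool:
    """Apply the three DEFUSE-3 imaging thresholds listed in the table."""
    mismatch_volume = v.hypoperfused_ml - v.core_ml
    # A zero core gives an unbounded ratio; treat it as satisfying the ratio test.
    mismatch_ratio = v.hypoperfused_ml / v.core_ml if v.core_ml > 0 else float("inf")
    return v.core_ml < 70 and mismatch_ratio >= 1.8 and mismatch_volume >= 15
```

For example, a 20 mL core with 100 mL of hypoperfused tissue passes all three checks, whereas an 80 mL core fails on core volume alone.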

Experimental Protocols for Perfusion Analysis

MRI Perfusion Analysis Protocol for EVT Triage

The following protocol is adapted from a 2025 multicenter validation study comparing perfusion software platforms [5] [17] [25].

Imaging Acquisition Parameters:

- Sequence: Dynamic susceptibility contrast-enhanced perfusion imaging using gradient-echo echo-planar imaging (GE-EPI)

- Scanner Field Strength: 3.0T (62.3%) or 1.5T (37.7%)

- Typical Parameters: Repetition time (TR)=1,500–2,000ms; Echo time (TE)=40–50ms; Field of view (FOV)=230×230mm²; Slice thickness=5mm with no interslice gap

- Coverage: Entire supratentorial brain with 17–25 slices

Image Processing and Analysis (JLK PWI Software):

- Preprocessing: Motion correction, brain extraction (skull stripping, vessel masking), MR signal conversion

- Perfusion Map Calculation: Automatic arterial input function (AIF) and venous output function selection, block-circulant singular value decomposition (SVD) deconvolution to generate quantitative maps (CBF, CBV, MTT, Tmax)

- Ischemic Core Delineation: Deep learning-based segmentation on b1000 DWI images (the RAPID reference platform instead applies an ADC < 620×10⁻⁶ mm²/s threshold)

- Hypoperfused Tissue Definition: Tmax >6 seconds threshold

- Mismatch Calculation: Automated co-registration of diffusion and perfusion lesions with volumetric quantification
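The thresholding and volumetric quantification steps above can be sketched with NumPy. This is an illustration under stated assumptions, not the JLK PWI implementation: it assumes a Tmax map in seconds, boolean core and brain masks already co-registered on the same voxel grid, and a known voxel size in millimeters. The function names are hypothetical.

```python
import numpy as np

def volume_ml(mask, voxel_size_mm):
    """Volume of a boolean mask in mL (1 mL = 1000 mm^3)."""
    voxel_mm3 = float(np.prod(voxel_size_mm))
    return float(np.count_nonzero(mask)) * voxel_mm3 / 1000.0

def mismatch_volumes(tmax_s, core_mask, brain_mask, voxel_size_mm, tmax_threshold_s=6.0):
    """Return (core_ml, hypoperfused_ml, mismatch_ml) from co-registered inputs."""
    brain = np.asarray(brain_mask, dtype=bool)
    core = np.asarray(core_mask, dtype=bool) & brain
    # Hypoperfused tissue: voxels inside the brain with Tmax above the threshold.
    hypo = (np.asarray(tmax_s, dtype=float) > tmax_threshold_s) & brain
    core_ml = volume_ml(core, voxel_size_mm)
    hypo_ml = volume_ml(hypo, voxel_size_mm)
    return core_ml, hypo_ml, hypo_ml - core_ml
```

Restricting both masks to the brain mask keeps skull and background voxels with spurious Tmax values out of the volume estimates.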

Validation Methods:

- Volumetric Agreement: Assessed using concordance correlation coefficients (CCC), Bland-Altman plots, and Pearson correlations

- Clinical Concordance: EVT eligibility classification based on DAWN and DEFUSE-3 criteria using Cohen's kappa

CTP Protocol for HT Risk Prediction Post-Thrombolysis

The following protocol is adapted from a 2025 study developing a nomogram for hemorrhagic transformation prediction [23].

CTP Acquisition Parameters:

- Scanner: 256-slice CT scanner

- Acquisition Parameters: 80kVp, 200mA, slice thickness=5mm, matrix=512×512, FOV=230mm×230mm

- Contrast Protocol: 40mL non-ionic contrast agent (Ioversol, 350mgI/mL) injected at 4mL/s followed by 30mL saline flush

- Scan Duration: 34 seconds with 20 cycles

Image Analysis Workflow:

- Processing: CTP images processed using CTP 4D software with anterior cerebral artery as input artery and superior sagittal sinus as outflow vein

- Parameter Calculation: Automated generation of CBV, CBF, MTT, TTP, Tmax, and PS maps

- ROI Placement: Regions of interest (10-15mm diameter) placed in ischemic lesion and mirrored to contralateral normal hemisphere

- Relative Parameter Calculation: rCBV, rCBF, rMTT, rTTP, rTmax, and rPS calculated as ratios of the lesion ROI value to the mirrored contralateral ROI value

- Model Development: Logistic regression analysis incorporating clinical (NIHSS, AF) and perfusion (rPS) parameters to create predictive nomogram
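The relative parameter step in this workflow is a ratio of ROI means. A minimal sketch, assuming each ROI is available as a list of voxel values for one parameter map (for example PS); the function name `relative_parameter` is hypothetical.

```python
from statistics import mean

def relative_parameter(lesion_roi_values, mirror_roi_values):
    """Ratio of the mean lesion ROI value to the mean contralateral ROI value."""
    mirror_mean = mean(mirror_roi_values)
    if mirror_mean == 0:
        raise ValueError("contralateral ROI mean is zero; ratio undefined")
    return mean(lesion_roi_values) / mirror_mean
```

Applied to a PS map this yields rPS, where values above 1 indicate higher permeability in the lesion than in the mirrored normal tissue.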

Visualization of Decision Pathways

The Scientist's Toolkit: Essential Research Reagents and Platforms

Table 3: Automated Perfusion Analysis Platforms for Stroke Research

| Platform/Software | Modality | Key Features | Research Applications |
|---|---|---|---|
| RAPID | CTP & PWI | FDA-cleared; DEFUSE-3/DAWN criteria automation; Ischemic core (ADC <620×10⁻⁶ mm²/s) & hypoperfusion (Tmax >6s) quantification | Reference standard for EVT trial eligibility assessment; Ischemic core volume estimation [5] [17] |
| JLK PWI | PWI | Deep learning-based infarct segmentation; Multi-step preprocessing pipeline; High concordance with RAPID (CCC=0.87-0.88) | Alternative for MRI-based perfusion analysis; EVT decision-making concordance studies [5] [17] [25] |
| mRay-VEOcore | CTP & PWI | Fully automated perfusion evaluation (<3 mins); DEFUSE-3 criteria visualization; Automated quality control | Rapid triage in extended time windows; CT/MRI perfusion studies with reduced radiation dose [15] |
| GE AW Workstation with CTP 4D | CTP | Vendor-specific processing; CBV, CBF, MTT, TTP, Tmax, PS mapping; ROI-based relative parameter calculation | Hemorrhagic transformation risk prediction; Permeability surface area product analysis [23] |

The integration of Artificial Intelligence (AI) into acute stroke care has created a transformative shift in diagnostic workflows, triage efficiency, and treatment decision-making. The U.S. Food and Drug Administration (FDA) has cleared a suite of AI-powered tools that automate the detection of intracranial hemorrhage (ICH), large vessel occlusion (LVO), and the quantification of early ischemic changes. These technologies, including platforms from industry leaders such as RapidAI, Brainomix, Aidoc, and Methinks AI, leverage non-contrast CT (NCCT), CT angiography (CTA), and perfusion imaging to provide real-time notifications to stroke teams. This application note details the regulatory-cleared landscape, providing researchers and drug development professionals with a structured overview of available tools, their validated performance metrics, and experimental protocols for their evaluation. This landscape is critical for framing research within the context of AI-driven automated perfusion analysis, ensuring that new methodologies are benchmarked against clinically adopted standards.

Acute ischemic stroke remains a leading cause of death and long-term disability worldwide, where rapid reperfusion is critical for salvaging brain tissue [14]. The advent of AI has addressed key bottlenecks in the stroke imaging workflow, which traditionally relied on expert human interpretation under significant time pressure. FDA-cleared AI tools now function as computer-aided triage (CADt) and notification systems, automatically analyzing images and prioritizing urgent cases, such as those with ICH or LVO, directly to clinicians' smartphones or worklists [26] [27].

The regulatory landscape for these tools has expanded rapidly. By mid-2025, the FDA had cleared approximately 873 AI algorithms for radiology, making medical imaging the single largest AI target among medical specialties [28]. These tools are predominantly based on convolutional neural networks (CNNs), which excel at pattern detection and classification tasks in medical images [28]. The clinical impact is measurable; for instance, the implementation of one AI platform (Viz.ai) has been associated with a 66-minute faster treatment time for stroke patients [28]. For research into AI-driven perfusion analysis, understanding this ecosystem of cleared devices is essential for contextualizing new developments against existing regulatory benchmarks and clinical practices.

FDA-Cleared AI Tools for Stroke: A Comparative Analysis

The following tables summarize key FDA-cleared AI tools for stroke triage and notification, their imaging modalities, primary functions, and reported performance metrics.

Table 1: AI Tools for Hemorrhage and Large Vessel Occlusion Detection

| AI Tool (Vendor) | FDA-Cleared Function | Imaging Modality | Key Performance Metrics |
|---|---|---|---|
| Brainomix 360 Triage ICH [26] | ICH Detection & Notification | Non-Contrast CT (NCCT) | Provides real-time alerts to clinician smartphones. |
| Methinks AI NCCT Stroke [29] | ICH & LVO Detection | Non-Contrast CT (NCCT) | Reduces false negatives by nearly 50% compared to existing NCCT triage tools; detects distal LVOs (e.g., MCA-M2). |
| Aidoc Stroke Package [27] | ICH & LVO Triage | NCCT and CTA | Reduces turnaround time for ICH by 36.6% (University of Rochester Medical Center study). |
| Rapid LVO [19] | LVO Detection | CT Angiography (CTA) | 97% Sensitivity, 96% Specificity for LVO detection. |
| Rapid NCCT Stroke [19] | Suspected LVO Detection | Non-Contrast CT (NCCT) | 55% increase in sensitivity for LVO; 18 minutes faster decision-making vs. no AI. |
| CINA-HEAD (Avicenna.AI) [30] | ICH Detection, LVO Identification, ASPECTS | NCCT and CTA | ICH Detection Accuracy: 94.6%; LVO Identification Accuracy: 86.4%. |

Table 2: AI Tools for Ischemic Core and Perfusion Analysis

| AI Tool (Vendor) | FDA-Cleared Function | Imaging Modality | Key Performance Metrics / Indications |
|---|---|---|---|
| Rapid Perfusion Imaging [19] | Ischemic Core & Penumbra Mismatch | CT Perfusion (CTP) | The only perfusion imaging solution cleared in the U.S. with a mechanical thrombectomy indication; used in pivotal trials (DAWN, DEFUSE 3). |
| Rapid ASPECTS [19] | Automated ASPECT Scoring | Non-Contrast CT (NCCT) | 10% improvement in reader accuracy; provides a standardized ASPECTS score in <2 minutes. |
| Rapid Hypodensity [19] | Quantification of Subacute Infarction | Non-Contrast CT (NCCT) | First and only solution to provide automated quantification of hypodense tissue. |
| mRay-VEOcore (mbits) [15] | Automated Perfusion Analysis (CE-Marked) | CT & MR Perfusion | Fully automated perfusion evaluation in under 3 minutes; visualizes DEFUSE-3 criteria. |

Detailed Experimental Protocols for AI Tool Validation

For research and development professionals, understanding the methodologies used to validate these AI tools is critical for designing comparative studies and evaluating new algorithms. The following protocols are synthesized from recent multicenter studies.

Protocol 1: Multi-step Stroke Imaging Performance Evaluation

This protocol is based on a multicenter diagnostic study evaluating an AI tool with multiple modules [30].

- 1. Study Design: Retrospective, multicenter cohort study.

- 2. Patient Population:

- Inclusion: Patients with NCCT and/or CTA scans acquired for suspicion of acute stroke.

- Exclusion: As defined by the study, typically including severe motion artifacts or inadequate image quality.

- Sample Size: 405 patients (373 NCCT, 331 CTA) from two university hospitals.

- 3. Ground Truth Definition:

- Expert evaluations from a panel of four neuroradiologists, who reviewed images independently or by consensus to establish the reference standard for ICH presence, LVO location, and ASPECTS scores.

- 4. AI Tool Analysis:

- The AI software (e.g., CINA-HEAD) processes the NCCT datasets to flag ICH and calculate ASPECTS.

- The AI processes the CTA datasets to identify LVO.

- 5. Outcome Measures & Statistical Analysis:

- Primary Outcomes: Diagnostic accuracy, sensitivity, specificity, with 95% confidence intervals for ICH detection and LVO identification.

- ASPECTS Analysis: Region-based accuracy and dichotomized ASPECTS classification (e.g., ≥6 vs. <6) accuracy.

- Statistical Tests: Calculation of intraclass correlation coefficients (ICC) for ASPECTS agreement.
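The primary outcomes in this protocol are proportions reported with 95% confidence intervals. The sketch below uses the Wilson score interval, which is an assumption made for illustration because the source does not state which interval method was applied; the function name is hypothetical.

```python
import math

def wilson_interval(successes, total, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives about 95% coverage)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    denom = 1.0 + z * z / total
    centre = (p + z * z / (2.0 * total)) / denom
    half_width = z * math.sqrt(p * (1.0 - p) / total + z * z / (4.0 * total * total)) / denom
    return centre - half_width, centre + half_width
```

For sensitivity, `successes` is the number of true positives and `total` the number of reference-standard positives; for specificity, the true negatives and reference-standard negatives. The Wilson interval behaves better than the simple normal approximation when the proportion is close to 0 or 1, which is common for high-performing detectors.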

Protocol 2: Comparative Validation of Automated Perfusion Analysis Platforms

This protocol is adapted from a study comparing a new perfusion software (JLK PWI) against the established RAPID platform [5]. It serves as a model for benchmarking new perfusion analysis tools.

- 1. Study Design: Retrospective, multicenter study.

- 2. Patient Population:

- Inclusion: Patients with acute ischemic stroke who underwent MR perfusion-weighted imaging (PWI) within 24 hours of symptom onset.

- Final Cohort: 299 patients after exclusions for abnormal arterial input function or severe motion artifacts.

- 3. Imaging and Analysis:

- Image Acquisition: PWI performed on 1.5T or 3.0T scanners from multiple vendors (GE, Philips, Siemens).

- Central Analysis: All datasets underwent standardized preprocessing and normalization in a central image laboratory to minimize inter-scanner variability.

- Software Comparison: Each patient's dataset was processed through two software platforms:

- Reference Platform: RAPID (using ADC < 620 × 10⁻⁶ mm²/s for infarct core).

- Test Platform: JLK PWI (using a deep learning-based algorithm on DWI for infarct core and Tmax >6s for hypoperfusion).

- 4. Outcome Measures:

- Volumetric Agreement: Concordance correlation coefficients (CCC) and Bland-Altman plots for ischemic core volume, hypoperfused volume, and mismatch volume.

- Clinical Decision Concordance: Cohen’s kappa (κ) coefficient to evaluate agreement in endovascular therapy (EVT) eligibility based on DAWN and DEFUSE-3 trial criteria.

- 5. Data Interpretation:

- Agreement magnitude classified as poor (0.0-0.2), fair (0.21-0.40), moderate (0.41-0.60), substantial (0.61-0.80), and excellent (0.81-1.0).
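The clinical decision concordance step can be sketched as follows: Cohen's kappa is computed from the two platforms' eligibility calls for the same patients, and the result is mapped to the agreement bands listed above. The function names are hypothetical, and the input lists stand for per-patient eligibility labels produced by the user's own pipeline.

```python
def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters labelling the same cases."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    labels = set(ratings_a) | set(ratings_b)
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance from each rater's marginal label frequencies.
    expected = sum((ratings_a.count(l) / n) * (ratings_b.count(l) / n) for l in labels)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)

def agreement_label(kappa):
    """Map kappa to the bands used in the protocol above."""
    if kappa <= 0.20:
        return "poor"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "excellent"
```

Kappa corrects raw percentage agreement for chance, which matters when most patients fall into one eligibility class.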

Workflow and Logical Diagrams

The following diagram illustrates the integrated workflow of AI tools in an acute stroke pathway, from image acquisition to treatment decision.

This workflow demonstrates how AI tools process different imaging modalities in parallel to provide a comprehensive set of inputs that inform the final treatment decision.

The Scientist's Toolkit: Research Reagent Solutions

For researchers designing experiments in the field of AI-driven stroke analysis, the following table outlines essential "research reagents" – the key software platforms and data components required for robust study design and validation.

Table 3: Essential Research Reagents for AI-Driven Stroke Perfusion Analysis

| Research Reagent | Function in Experimental Protocol | Example in Use |
|---|---|---|
| Reference Standard Software | Serves as the benchmark for comparing new AI algorithms. Provides validated volumetric and clinical decision outputs. | RAPID software, central to DAWN and DEFUSE-3 trials, is used as a reference in comparative studies [5] [19]. |
| Curated Multicenter Imaging Datasets | Provides a diverse, real-world dataset for training and validating AI models. Essential for assessing generalizability. | Retrospective collections of NCCT, CTA, and CTP from multiple hospitals and scanner vendors [5] [30]. |
| Expert-Adjudicated Ground Truth | Establishes the reference standard for performance evaluation, against which AI output is measured. | Consensus readings from panels of expert neuroradiologists for ICH, LVO, and infarct core [30]. |
| Clinical Trial Criteria Frameworks | Translates imaging outputs into clinically actionable eligibility criteria, enabling validation of clinical utility. | Automated application of DAWN and DEFUSE-3 criteria to software outputs to determine EVT eligibility [5]. |
| Automated Perfusion Mapping Algorithms | Generates quantitative maps (CBF, CBV, MTT, Tmax) from raw CTP or PWI data, forming the basis for tissue classification. | JLK PWI and RAPID perfusion pipelines that deconvolve time-concentration data to create maps [5]. |
| Image Preprocessing & Normalization Tools | Standardizes images from different scanners and protocols, reducing variability and improving AI reliability. | Tools for motion correction, brain extraction, and signal conversion used prior to perfusion analysis [5]. |

AI Perfusion Platforms in Action: Methodologies, Algorithms, and Clinical Workflow Applications

The integration of advanced computational techniques is revolutionizing acute ischemic stroke (AIS) research and clinical care. Automated perfusion analysis has become indispensable for extending the treatment window for endovascular therapy (EVT), with algorithms now capable of quantifying ischemic core and penumbra volumes to guide patient selection [31] [5]. This evolution encompasses three fundamental computational approaches: traditional deconvolution methods that form the mathematical foundation of perfusion parameter calculation, deep learning networks that enable rapid image analysis and feature detection, and emerging generative artificial intelligence that can synthesize functional information from structural scans. The synergy of these techniques is creating a new paradigm in stroke imaging, moving beyond simple automation to providing previously unattainable diagnostic insights.

Deconvolution techniques provide the mathematical backbone for calculating hemodynamic parameters from time-resolved perfusion studies. These algorithms reverse the blurring introduced by the vascular system's impulse response, essentially solving the inverse problem to determine the underlying tissue perfusion characteristics [31] [5]. Deep learning architectures, particularly convolutional neural networks (CNNs) and object detection models like YOLO (You Only Look Once), have demonstrated remarkable capabilities in automating the detection of pathological features such as large vessel occlusions (LVOs) and medium vessel occlusions (MeVOs) [32] [19]. Most recently, generative AI approaches have emerged that can predict perfusion maps directly from non-contrast images, potentially bypassing the need for specialized perfusion imaging altogether [33]. Together, these technologies are creating increasingly sophisticated tools for quantifying salvageable brain tissue and optimizing treatment decisions in time-critical scenarios.

Core Algorithmic Frameworks and Methodologies

Deconvolution Fundamentals and Implementation

Deconvolution techniques operate on the fundamental principle of recovering a system's impulse response from the observed data. In perfusion imaging, this impulse response is represented by the vascular transport function, which describes how a contrast bolus is modified as it passes through the cerebral vasculature. The mathematical foundation relies on modeling the observed tissue contrast concentration time curve, \( C_{\text{tissue}}(t) \), as the convolution of the arterial input function (AIF), \( C_{\text{arterial}}(t) \), with the tissue residue function, \( R(t) \), scaled by cerebral blood flow (CBF): \( C_{\text{tissue}}(t) = \text{CBF} \cdot C_{\text{arterial}}(t) \otimes R(t) \) [5]. Deconvolution solves for CBF and \( R(t) \) to derive critical perfusion parameters.

In clinical practice, several deconvolution algorithms are employed, each with distinct advantages and limitations. Block-circulant singular value decomposition (cSVD) is widely implemented in commercial software like RAPID and Viz CTP due to its robustness to delay and dispersion effects commonly encountered in pathology [5]. The cSVD approach achieves insensitivity to temporal delay by arranging the AIF in a block-circulant matrix, making it particularly suitable for acute stroke applications where arrival time delays are expected in ischemic territories. Osemplar algorithms offer an alternative approach based on model-free deconvolution, but may be more sensitive to noise in low-signal conditions. Advanced implementations now incorporate Bayesian methods and Tikhonov regularization to stabilize solutions in regions with severely reduced perfusion, though these approaches may increase computational complexity [5].
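The block-circulant approach can be illustrated with a small NumPy sketch. It is a simplified, single-voxel version written for clarity and is not the implementation used by any commercial platform: the function names, the relative truncation threshold of 0.1, and the synthetic gamma-variate AIF and exponential residue function are all assumptions made for the example.

```python
import numpy as np

def csvd_residue(aif, tissue, dt, rel_threshold=0.1):
    """Estimate the flow-scaled residue function CBF * R(t) by block-circulant SVD.

    aif and tissue are concentration-time curves sampled every dt seconds.
    Singular values below rel_threshold times the largest one are discarded
    to limit noise amplification (an assumed, tunable setting).
    """
    aif = np.asarray(aif, dtype=float)
    tissue = np.asarray(tissue, dtype=float)
    n = aif.size
    length = 2 * n  # zero-pad to twice the length to avoid wrap-around aliasing
    aif_pad = np.concatenate([aif, np.zeros(n)])
    tissue_pad = np.concatenate([tissue, np.zeros(n)])

    # Block-circulant matrix: entry (i, j) holds dt * AIF[(i - j) mod length].
    rows = np.arange(length)[:, None]
    cols = np.arange(length)[None, :]
    d = dt * aif_pad[(rows - cols) % length]

    u, s, vt = np.linalg.svd(d)
    keep = s > rel_threshold * s.max()
    s_inv = np.zeros_like(s)
    s_inv[keep] = 1.0 / s[keep]

    # Truncated pseudo-inverse applied to the padded tissue curve.
    return vt.T @ (s_inv * (u.T @ tissue_pad))

def cbf_and_tmax(residue, dt):
    """CBF is the peak of the flow-scaled residue; Tmax is the time of that peak."""
    peak = int(np.argmax(residue))
    return float(residue[peak]), peak * dt

# Synthetic check with made-up curves (not patient data), dt = 1 s.
t = np.arange(60.0)
aif = t**3 * np.exp(-t / 1.5)
aif /= aif.sum()
true_cbf = 0.5
tissue = true_cbf * np.convolve(aif, np.exp(-t / 4.0))[: t.size]
cbf_est, tmax_est = cbf_and_tmax(csvd_residue(aif, tissue, dt=1.0), dt=1.0)
```

Because truncating small singular values acts as a low-pass filter on the residue function, the recovered peak is a smoothed estimate and generally does not match the true CBF exactly; the choice of threshold trades noise suppression against this bias.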

Figure 1: Deconvolution Workflow in CT Perfusion Analysis

Deep Learning Architectures for Stroke Imaging

Deep learning approaches have dramatically expanded the capabilities of automated perfusion analysis, particularly through convolutional neural networks (CNNs) and specialized object detection architectures. The YOLO (You Only Look Once) family of models has demonstrated exceptional performance in real-time detection of acute ischemic stroke in magnetic resonance imaging [32]. A comparative evaluation of state-of-the-art versions found YOLOv11 achieved the highest mean average precision at IoU 0.5 (mAP@50) of 98.5%, with balanced precision (95.4%) and recall (96.6%) across multiple classes including Normal, PD-Patient, Acute Ischemic Stroke, and Control categories [32]. YOLOv12 performed comparably (mAP@50 98.3%) with slightly slower inference speeds, while YOLO-NAS offered the fastest processing (154 FPS) but lower precision (76.3%) [32].
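The mAP@50 figures quoted above depend on the intersection-over-union (IoU) between a predicted bounding box and a reference box: a detection counts as a true positive only when the IoU reaches 0.5. A minimal sketch of that matching criterion, assuming axis-aligned boxes given as (x_min, y_min, x_max, y_max); the function names are hypothetical and the full mAP computation (confidence ranking and precision-recall integration) is omitted.

```python
def box_iou(box_a, box_b):
    """Intersection-over-union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0

def is_true_positive(predicted_box, reference_box, iou_threshold=0.5):
    """A detection matches the reference lesion when IoU meets the threshold."""
    return box_iou(predicted_box, reference_box) >= iou_threshold
```

Two equal-sized boxes offset by half their width share only one third of their union, so they would not count as a match at the 0.5 threshold.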

These architectures employ sophisticated feature extraction mechanisms tailored to medical imaging challenges. YOLOv11 incorporates Cross Stage Partial Self-Attention (C2PSA) for enhanced feature propagation, allowing the model to maintain contextual relationships across image regions [32]. YOLOv12 integrates attention mechanisms such as Area Attention and Flash Attention to improve detection of subtle ischemic changes while maintaining near real-time inference speeds critical for emergency settings [32]. YOLO-NAS utilizes Neural Architecture Search (NAS) and quantization-aware modules to optimize the trade-off between detection performance and computational efficiency, making it particularly suitable for deployment on edge devices in resource-limited environments [32]. Specialized variants like TE-YOLOv5 have been developed specifically for stroke lesion detection in diffusion-weighted imaging (DWI), integrating Technical Aggregate Pool (AP) and Reverse Attention (RA) modules to boost performance in feature extraction and edge tracing for ill-defined lesion boundaries [32].

Generative AI for Cross-Modality Prediction

Generative artificial intelligence represents the cutting edge of perfusion analysis research, with demonstrated capabilities to synthesize functional information from structural scans. A groundbreaking approach utilizes a modified pix2pix-turbo generative adversarial network (GAN) to translate co-registered non-contrast head CT (NCHCT) images into corresponding perfusion maps for parameters including relative cerebral blood flow (rCBF) and time-to-maximum (Tmax) [33]. This cross-modality learning bypasses the need for actual CT perfusion imaging, potentially reducing radiation exposure, decreasing processing times, and expanding access to perfusion data in settings where dedicated CTP is unavailable.

In the pilot implementation, the GAN architecture was trained using paired NCHCT-CTP data with training, validation, and testing splits of 80%:10%:10% [33]. Quantitative performance assessment demonstrated that generated Tmax maps achieved a structural similarity index measure (SSIM) of 0.827, peak signal-to-noise ratio (PSNR) of 16.99, and Fréchet inception distance (FID) of 62.21, while rCBF maps showed comparable metrics (SSIM 0.79, PSNR 16.38, FID 59.58) [33]. These results indicate the model successfully captures key cerebral hemodynamic features from non-contrast images alone. The approach is particularly valuable for patients with contraindications to contrast administration or when traditional CTP provides limited diagnostic information due to technical factors or artifact.
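Two of the image-similarity metrics reported above can be sketched directly in NumPy. PSNR follows its standard definition; the SSIM shown here is a simplified single-window (global) variant, whereas the commonly reported SSIM averages the same expression over local windows, so values from this sketch are not directly comparable with the published figures. The function names are hypothetical, and the constants 0.01 and 0.03 are the customary SSIM stabilizers.

```python
import numpy as np

def psnr(reference, generated, data_range):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    reference = np.asarray(reference, dtype=float)
    generated = np.asarray(generated, dtype=float)
    mse = np.mean((reference - generated) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range**2 / mse))

def global_ssim(reference, generated, data_range):
    """Single-window SSIM computed over the whole image (a simplification)."""
    x = np.asarray(reference, dtype=float)
    y = np.asarray(generated, dtype=float)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x**2 + mu_y**2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)
```

Both metrics require the reference and generated maps to be on the same grid and intensity scale, with `data_range` set to the span of valid values for that parameter map.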

Experimental Protocols and Validation Frameworks

Protocol: Multi-Center Software Validation Study

Objective: To evaluate the performance and clinical concordance of automated perfusion analysis software against established reference standards in acute ischemic stroke.

Patient Population:

- Inclusion: Patients with acute ischemic stroke presenting within 24 hours of symptom onset, confirmed large vessel occlusion on CTA or MRA, complete perfusion imaging (CTP or PWI), and baseline clinical documentation [5].

- Exclusion: Non-diagnostic perfusion studies, significant motion artifacts, posterior circulation strokes, or incomplete clinical/imaging data [31].

Imaging Protocol:

- Acquisition: CT perfusion studies should cover the entire supratentorial brain with standardized parameters (80 kVp, 150-200 mAs, 5-mm slice thickness). For PWI, utilize dynamic susceptibility contrast-enhanced imaging with parameters: TR=1500-2000 ms, TE=40-50 ms, FOV=230×230 mm², 5-mm slice thickness with no gap [5].

- Contrast Administration: Iohexol (350 mgI/mL) or gadoterate meglumine (0.5 mmol/mL) at 5-6 mL/s followed by 40 mL saline flush using a power injector [5].

Software Analysis:

- Process all studies through both reference (e.g., RAPID) and test software (e.g., JLK PWI, Viz CTP) [31] [5].

- Ensure consistent pre-processing including motion correction, brain extraction, and arterial input function selection.

- Generate core perfusion parameter maps (CBF, CBV, MTT, Tmax) and apply standardized thresholds (relative CBF <30% for ischemic core, Tmax >6 s for hypoperfusion) [31].
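The threshold step above can be sketched in a few lines. This is a minimal illustration, not any vendor's algorithm: it assumes the maps have already been reduced to flat lists of brain-voxel values, that relative CBF is expressed as a fraction of normal tissue, and that the two thresholds are applied independently per voxel. The function name `core_and_hypoperfusion_ml` and its parameters are hypothetical.

```python
from typing import Sequence, Tuple

RCBF_CORE_THRESHOLD = 0.30   # relative CBF <30% marks ischemic core
TMAX_HYPO_THRESHOLD_S = 6.0  # Tmax >6 s marks hypoperfused tissue


def core_and_hypoperfusion_ml(
    rcbf_fraction: Sequence[float],
    tmax_seconds: Sequence[float],
    voxel_volume_mm3: float,
) -> Tuple[float, float]:
    """Return (core_ml, hypoperfused_ml) from per-voxel map values.

    Assumption: both sequences cover the same brain voxels in the same
    order, with rCBF given as a fraction (1.0 = 100% of normal).
    """
    if len(rcbf_fraction) != len(tmax_seconds):
        raise ValueError("maps must have the same number of voxels")

    core_voxels = sum(1 for v in rcbf_fraction if v < RCBF_CORE_THRESHOLD)
    hypo_voxels = sum(1 for t in tmax_seconds if t > TMAX_HYPO_THRESHOLD_S)

    # 1 mL = 1000 mm^3
    to_ml = voxel_volume_mm3 / 1000.0
    return core_voxels * to_ml, hypo_voxels * to_ml


if __name__ == "__main__":
    core, hypo = core_and_hypoperfusion_ml(
        [0.20, 0.25, 0.50, 0.90], [8.0, 7.0, 6.5, 2.0], voxel_volume_mm3=1000.0
    )
    print(f"core={core} mL, hypoperfused={hypo} mL")
```

Commercial platforms add steps this sketch omits, such as arterial input function selection, deconvolution, and artifact masking, so volumes from a simple threshold count should not be expected to match their output.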

Outcome Measures:

- Primary: Volumetric agreement for ischemic core and hypoperfused tissue using concordance correlation coefficients (CCC), Pearson correlation, and Bland-Altman analysis [5].

- Secondary: Treatment eligibility concordance based on DAWN/DEFUSE-3 criteria assessed via Cohen's kappa coefficient [31] [5].

- Statistical Analysis: Classify agreement as slight (0.00-0.20), fair (0.21-0.40), moderate (0.41-0.60), substantial (0.61-0.80), or almost perfect (0.81-1.00) per the Landis and Koch criteria [5].
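The agreement statistics named in these outcome measures have compact textbook definitions. The sketch below implements Lin's concordance correlation coefficient, Bland-Altman bias with 95% limits of agreement, and Cohen's kappa using only the Python standard library. It is illustrative rather than the analysis code of any cited study; the function names are hypothetical and confidence intervals are omitted.

```python
import math
from typing import Hashable, Sequence, Tuple


def lin_ccc(x: Sequence[float], y: Sequence[float]) -> float:
    """Lin's concordance correlation coefficient (population moments)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x) / n
    syy = sum((b - my) ** 2 for b in y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return 2 * sxy / (sxx + syy + (mx - my) ** 2)


def bland_altman(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Return (bias, lower limit, upper limit) for the differences x - y."""
    d = [a - b for a, b in zip(x, y)]
    bias = sum(d) / len(d)
    sd = math.sqrt(sum((v - bias) ** 2 for v in d) / (len(d) - 1))
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def cohen_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters' categorical decisions."""
    n = len(a)
    observed = sum(1 for u, v in zip(a, b) if u == v) / n
    labels = set(a) | set(b)
    expected = sum((list(a).count(l) / n) * (list(b).count(l) / n) for l in labels)
    if expected == 1.0:  # both raters always give the same single label
        return 1.0
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    reference_ml = [10.0, 20.0, 30.0]   # e.g. reference software core volumes
    test_ml = [12.0, 22.0, 32.0]        # e.g. test software core volumes
    print("CCC:", round(lin_ccc(reference_ml, test_ml), 4))
    print("Bland-Altman:", bland_altman(reference_ml, test_ml))
    print("kappa:", cohen_kappa([1, 1, 0, 0], [1, 0, 0, 0]))
```

Unlike Pearson correlation, the CCC penalizes systematic offsets between platforms: in the example the two volume series are perfectly correlated, yet the constant 2 mL difference pulls the CCC below 1.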

Protocol: Generative AI Model Development and Testing

Objective: To develop and validate a generative AI model for predicting perfusion parameters from non-contrast CT images.

Data Curation:

- Retrospectively identify patients with paired NCHCT, CTA, and CTP studies performed for acute stroke evaluation [33].

- Apply exclusion criteria: significant motion artifacts, prior large territory infarction, intracranial hemorrhage, or poor image quality.

- Allocate data into training (80%), validation (10%), and testing (10%) sets maintaining case distribution balance [33].
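A reproducible 80/10/10 allocation might look like the following sketch. Splitting at the patient level, so that slices from one patient never appear in more than one set, is an assumption added here to avoid leakage; the source does not describe how the split was performed. Stratification to preserve case distribution balance is not implemented, and `split_patients` is a hypothetical helper.

```python
import random
from typing import Dict, Iterable, List, Tuple


def split_patients(
    patient_ids: Iterable[str],
    seed: int = 0,
    fractions: Tuple[float, float, float] = (0.8, 0.1, 0.1),
) -> Dict[str, List[str]]:
    """Shuffle unique patient IDs and cut them into train/val/test lists."""
    ids = sorted(set(patient_ids))      # sort first so the seed fully determines the result
    random.Random(seed).shuffle(ids)

    n_train = round(fractions[0] * len(ids))
    n_val = round(fractions[1] * len(ids))
    return {
        "train": ids[:n_train],
        "val": ids[n_train:n_train + n_val],
        "test": ids[n_train + n_val:],  # remainder, absorbs any rounding
    }


if __name__ == "__main__":
    cohort = [f"P{i:03d}" for i in range(100)]
    splits = split_patients(cohort, seed=42)
    print({name: len(members) for name, members in splits.items()})
```

To maintain case balance, the same function could be applied separately within each stratum (for example, by occlusion site) and the resulting lists concatenated.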

Model Architecture:

- Implement a modified pix2pix-turbo generative adversarial network with U-Net generator and PatchGAN discriminator [33].

- Input: Co-registered NCHCT image slices with standard brain windowing (window width/level 80/40 HU).

- Output: Synthetic perfusion maps for rCBF and Tmax parameters.
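As an illustration of the input preparation, the sketch below maps Hounsfield units to a normalized [0, 1] intensity. It takes 80/40 HU to mean a window width of 80 HU centered on a level of 40 HU, which is the usual brain-window convention; the function name `apply_window` is hypothetical.

```python
def apply_window(hu: float, width: float = 80.0, level: float = 40.0) -> float:
    """Clip a Hounsfield value to the display window and rescale to [0, 1]."""
    low = level - width / 2.0    # 0 HU for an 80/40 window
    high = level + width / 2.0   # 80 HU for an 80/40 window
    clipped = min(max(hu, low), high)
    return (clipped - low) / (high - low)


if __name__ == "__main__":
    for value in (-1000.0, 0.0, 40.0, 80.0, 200.0):
        print(value, "->", apply_window(value))
```

Values outside the window, such as air and bone, saturate at 0 or 1, which concentrates the network's input range on the narrow band of attenuation values that separates gray matter, white matter, and early ischemic change.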

Training Protocol:

- Pre-processing: Normalize image intensities, resample to isotropic resolution (1 mm³ voxels), and augment data with random rotations (±5°), translations (±10 pixels), and intensity variations (±10%) [33].

- Loss Function: Combine adversarial loss, L1 distance, and perceptual loss with weighting factors 1:100:10 respectively.

- Optimization: Use Adam optimizer with initial learning rate 2×10⁻⁴, batch size 8, trained for 200 epochs with early stopping [33].
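The loss weighting and stopping rule in this training protocol can be expressed independently of any deep learning framework. The sketch below combines three already-computed scalar loss terms with the 1:100:10 weights and tracks validation loss for early stopping. The patience value and the `EarlyStopping` class are assumptions for illustration, since the protocol does not state a stopping criterion.

```python
from dataclasses import dataclass

# Weights for adversarial : L1 : perceptual terms, as listed in the protocol.
ADVERSARIAL_WEIGHT = 1.0
L1_WEIGHT = 100.0
PERCEPTUAL_WEIGHT = 10.0


def generator_loss(adversarial: float, l1: float, perceptual: float) -> float:
    """Weighted sum of the three generator loss terms."""
    return (ADVERSARIAL_WEIGHT * adversarial
            + L1_WEIGHT * l1
            + PERCEPTUAL_WEIGHT * perceptual)


@dataclass
class EarlyStopping:
    """Stop when validation loss has not improved for `patience` epochs."""
    patience: int = 10            # hypothetical value, not from the source
    min_delta: float = 0.0
    best: float = float("inf")
    bad_epochs: int = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


if __name__ == "__main__":
    print("total loss:", generator_loss(0.5, 0.02, 0.1))
    stopper = EarlyStopping(patience=2)
    for epoch, loss in enumerate([1.0, 0.9, 0.95, 0.96, 0.5]):
        if stopper.step(loss):
            print("stopping at epoch", epoch)
            break
```

The large L1 weight keeps the generator anchored to the ground-truth map, while the adversarial and perceptual terms mainly sharpen texture; in a real training loop the three inputs would be tensors produced by the discriminator, a pixel-wise comparison, and a feature extractor.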

Validation Framework:

- Quantitative Metrics: Calculate structural similarity index (SSIM), peak signal-to-noise ratio (PSNR), and Fréchet inception distance (FID) against ground truth CTP maps [33].

- Clinical Validation: Assess concordance in identifying tissue-at-risk (Tmax >6s) and ischemic core (rCBF <30%) with board-certified neuroradiologist reads as reference standard.

- Statistical Analysis: Perform receiver operating characteristic analysis for detection of clinically significant perfusion deficits.
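Two of the quantitative metrics above are simple enough to sketch directly. The code below computes PSNR and a single-window (global) form of SSIM over flattened pixel lists. This is a simplification: the SSIM values usually reported in the literature average the index over local sliding windows, and FID requires a pretrained Inception network, so neither is reproduced here. Function names and the `data_range` default are illustrative assumptions.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], generated: Sequence[float], data_range: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    mse = sum((a - b) ** 2 for a, b in zip(reference, generated)) / len(reference)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(data_range ** 2 / mse)


def global_ssim(reference: Sequence[float], generated: Sequence[float], data_range: float = 1.0) -> float:
    """SSIM computed once over the whole image (no sliding window)."""
    n = len(reference)
    mx = sum(reference) / n
    my = sum(generated) / n
    vx = sum((a - mx) ** 2 for a in reference) / n
    vy = sum((b - my) ** 2 for b in generated) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(reference, generated)) / n

    # Standard stabilizing constants from the SSIM definition.
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


if __name__ == "__main__":
    truth = [0.0, 0.5, 1.0, 0.5]
    synthetic = [0.1, 0.4, 0.9, 0.6]
    print("PSNR (dB):", psnr(truth, synthetic))
    print("global SSIM:", global_ssim(truth, synthetic))
```

Both functions assume the ground-truth and synthetic maps are co-registered and scaled to the same intensity range before comparison.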

Performance Comparison and Clinical Validation

Quantitative Software Performance Metrics

Table 1: Performance Comparison of Automated Perfusion Analysis Platforms

| Software Platform | Ischemic Core Volume Concordance (CCC) | Hypoperfused Volume Concordance (CCC) | EVT Eligibility Agreement (κ) | Processing Time |
|---|---|---|---|---|
| RAPID (Reference) | 0.87 vs. JLK PWI [5] | 0.88 vs. JLK PWI [5] | 0.96 vs. Viz CTP (DAWN) [31] | <5 minutes [19] |
| Viz CTP | 0.96 [31] | 0.93 [31] | 0.96 (DAWN) [31] | <5 minutes [31] |
| JLK PWI | 0.87 [5] | 0.88 [5] | 0.80-0.90 (DAWN) [5] | Not specified |
| Generative AI (NCHCT) | SSIM: 0.79-0.83 [33] | SSIM: 0.79-0.83 [33] | Under investigation | <2 minutes [33] |

Table 2: Deep Learning Model Performance for Acute Ischemic Stroke Detection

| Model Architecture | Precision (%) | Recall (%) | mAP@0.5 (%) | Inference Speed (FPS) |
|---|---|---|---|---|
| YOLOv11 | 95.4 [32] | 96.6 [32] | 98.5 [32] | 142 [32] |
| YOLOv12 | 95.2 [32] | 96.0 [32] | 98.3 [32] | 138 [32] |
| YOLO-NAS | 76.3 [32] | 87.5 [32] | 92.1 [32] | 154 [32] |
| TE-YOLOv5 | 81.5 [32] | 75.8 [32] | 80.7 [32] | Not specified |
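For readers less familiar with detection metrics, the sketch below shows how precision and recall at an intersection-over-union (IoU) threshold of 0.5 are typically derived for a single image. It uses greedy one-to-one matching in descending score order, which is a common convention but an assumption here, since the evaluation code behind the tabulated results is not described. Full mAP@0.5, which averages precision over the recall curve, is not computed.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(
    predictions: Sequence[Tuple[Box, float]],
    ground_truth: Sequence[Box],
    iou_threshold: float = 0.5,
) -> Tuple[float, float]:
    """Match each prediction to at most one lesion box; return (precision, recall)."""
    matched: set = set()
    true_positives = 0
    for box, _score in sorted(predictions, key=lambda p: p[1], reverse=True):
        best_index, best_iou = None, iou_threshold
        for index, truth in enumerate(ground_truth):
            if index in matched:
                continue
            overlap = iou(box, truth)
            if overlap >= best_iou:
                best_index, best_iou = index, overlap
        if best_index is not None:
            matched.add(best_index)
            true_positives += 1

    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


if __name__ == "__main__":
    lesions: List[Box] = [(0, 0, 10, 10), (20, 20, 30, 30)]
    detections = [((0, 0, 10, 10), 0.9), ((50, 50, 60, 60), 0.8)]
    print(precision_recall(detections, lesions))
```

In the example, one detection overlaps a lesion exactly and the other is a false alarm, while the second lesion is missed, so both precision and recall are 0.5.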

Clinical validation studies indicate that automated software platforms show high agreement in critical decision-making parameters. In a direct comparison of 46 patients, RAPID and Viz CTP showed almost perfect agreement for EVT eligibility by DAWN criteria (κ=0.96) with no significant difference in final treatment decisions [31]. Similarly, a multicenter study of 299 patients found that JLK PWI demonstrated excellent agreement with RAPID for both ischemic core (CCC=0.87) and hypoperfused volume (CCC=0.88) measurements [5]. These results suggest that well-validated automated platforms can be used interchangeably in clinical workflows without affecting treatment eligibility for the majority of patients, although the smaller comparison in particular warrants confirmation in larger cohorts.

The implementation of these AI-driven solutions has demonstrated tangible improvements in clinical workflows. Institutions utilizing the RAPID platform have reported an 18-minute faster time-to-decision, 51% increase in mechanical thrombectomy procedures post-implementation, and 35 minutes saved with direct-to-angio suite patient routing [19]. These workflow optimizations are clinically significant, as reduced time to treatment directly correlates with improved functional outcomes in acute ischemic stroke.

Integration Framework for Clinical Deployment

Figure 2: Integrated AI-Driven Stroke Imaging Workflow

Research Reagent Solutions and Computational Tools

Table 3: Essential Research Tools for Algorithm Development in Perfusion Analysis

| Tool Category | Specific Solutions | Primary Function | Application Context |
|---|---|---|---|
| Commercial Perfusion Software | RAPID (RAPID AI), Viz CTP (Viz.ai), JLK PWI (JLK Inc.) | Automated processing of CTP/PWI studies with core/penumbra quantification | Clinical trial patient selection, routine stroke care [31] [5] [19] |
| Deep Learning Frameworks | YOLO architectures (v11, v12, NAS), CNN models (ResNeSt, U-Net) | Real-time detection of ischemic changes, segmentation of pathology | Research prototyping, algorithm development [32] [33] |
| Generative AI Models | Modified pix2pix-turbo GAN, Diffusion Models | Cross-modality prediction of perfusion maps from non-contrast images | Resource-limited settings, contrast contraindication research [33] |
| Validation Datasets | REFINE SPECT Registry, Multi-center stroke imaging cohorts | Model training, benchmarking, and validation | Algorithm validation, performance comparison [5] [34] |

The deployment of these computational tools requires careful consideration of integration frameworks and validation protocols. Commercial platforms like RAPID and Viz CTP have established regulatory clearance and are integrated with hospital PACS, enabling seamless implementation into clinical workflows [19]. For research implementations, the use of standardized metrics (SSIM, PSNR, and FID for generative models; CCC and kappa for clinical concordance) makes results comparable across institutions [5] [33]. The emerging trend toward holistic AI analysis that incorporates extra-cerebral findings and clinical parameters reflects the evolution from purely imaging-based assessment to comprehensive patient evaluation [34].

Future developments in this field will likely focus on enhanced generalization across imaging protocols and scanners, refined detection of medium vessel occlusions, and more sophisticated integration of non-imaging clinical data. The demonstrated feasibility of generating perfusion information from non-contrast studies suggests a potential paradigm shift in stroke imaging workflows, particularly in resource-limited settings. As these algorithms continue to evolve, maintaining rigorous validation standards and clinical correlation will be essential to ensuring their safe and effective implementation in patient care.

The management of acute ischemic stroke has been revolutionized by the transition from a "time window" to a "tissue window" paradigm, guided by advanced neuroimaging. Artificial intelligence (AI)-driven automated perfusion analysis platforms are central to this shift, enabling rapid, standardized identification of salvageable brain tissue (penumbra) and irreversibly injured tissue (core infarct). These tools provide critical, quantitative data for patient selection in endovascular thrombectomy (EVT), particularly in extended time windows up to 24 hours after symptom onset [35] [36]. This application note provides a detailed technical comparison of four prominent AI perfusion platforms (RAPID, JLK PWI, UGuard, and mRay-VEOcore), framed within the context of their capabilities for supporting rigorous clinical research and drug development.

Platform Technical Specifications and Workflows

Core Technical Capabilities

The following table summarizes the key technical specifications, imaging modalities, and primary outputs of the four platforms, highlighting their roles in acute stroke assessment.

Table 1: Core Technical Capabilities of Automated Perfusion Analysis Platforms

| Platform | Primary Imaging Modality | Core Analysis Outputs | Key Technical Features | Research Context |
|---|---|---|---|---|
| RAPID | CTP, MR-PWI | Ischemic core volume (rCBF <30%), Penumbra volume (Tmax >6 s), Mismatch ratio [19] [36] | Delay-insensitive deconvolution algorithm; automated AIF/VOF selection; integrated scan quality controls [19] [36] | Gold standard in DAWN/DEFUSE-3 trials; FDA-cleared; used in >75% of US Comprehensive Stroke Centers [19] |
| JLK PWI | MR-PWI, DWI | Ischemic core (deep learning on DWI), Hypoperfused volume (Tmax >6 s), Mismatch volume [5] [37] | Deep learning-based infarct segmentation on b1000 DWI; automated pre-processing and perfusion parameter pipeline [5] | Demonstrates excellent concordance with RAPID for volumetric measures and EVT eligibility (κ=0.76-0.90) [5] [37] |
| UGuard | CTP | Ischemic core volume (rCBF <30%), Penumbra volume (Tmax >6 s) [38] | Machine learning algorithm; adaptive anisotropic filtering networks; deep convolutional model for artery/vein segmentation [38] | Strong agreement with RAPID (ICC: ICV 0.92, PV 0.80); comparable predictive value for clinical outcome [38] |
| mRay-VEOcore | CTP, MR-PWI | Infarct core volume, Penumbra volume, Mismatch ratio, e-ASPECTS [39] [15] | Fully automated perfusion evaluation; includes quality control for patient motion/contrast issues; supports DEFUSE-3 criteria [39] | Enables rapid, evidence-based triage; integrated for real-time clinical collaboration via deepcOS platform [15] |
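The mismatch outputs listed in the table follow directly from the core and hypoperfused volumes. The sketch below derives mismatch volume and ratio and applies the imaging thresholds of the published DEFUSE-3 trial (core <70 mL, mismatch ratio ≥1.8, mismatch volume ≥15 mL). Those thresholds come from the trial protocol rather than from this document, the trial's clinical criteria (such as age, NIHSS, and occlusion site) are deliberately omitted, and the function names are hypothetical.

```python
import math
from typing import Tuple


def mismatch(core_ml: float, hypoperfused_ml: float) -> Tuple[float, float]:
    """Return (mismatch volume in mL, mismatch ratio)."""
    volume = hypoperfused_ml - core_ml
    # A zero core makes the ratio undefined; treat it as unbounded.
    ratio = hypoperfused_ml / core_ml if core_ml > 0 else math.inf
    return volume, ratio


def meets_defuse3_imaging_criteria(core_ml: float, hypoperfused_ml: float) -> bool:
    """Check only the imaging part of DEFUSE-3 selection."""
    volume, ratio = mismatch(core_ml, hypoperfused_ml)
    return core_ml < 70.0 and ratio >= 1.8 and volume >= 15.0


if __name__ == "__main__":
    for core, hypo in [(20.0, 80.0), (80.0, 200.0), (10.0, 20.0)]:
        print(core, hypo, mismatch(core, hypo), meets_defuse3_imaging_criteria(core, hypo))
```

Because eligibility depends on hard thresholds, small volumetric differences between platforms can flip a decision near the cut-offs, which is why concordance studies report eligibility agreement separately from volume agreement.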

Platform Workflows

The following diagram illustrates the generalized operational workflow shared by automated perfusion analysis platforms, from image acquisition to treatment decision support.

Figure 1: Generalized AI Perfusion Analysis Workflow. This flowchart outlines the common steps from scan initiation to result dissemination, enabling rapid therapy decisions.

Experimental Validation and Performance Data

Comparative Validation Metrics

Validation against the reference standard, RAPID, is a common study design. The table below consolidates key quantitative performance metrics from recent comparative studies.

Table 2: Comparative Validation Metrics of Alternative Platforms vs. RAPID

| Performance Metric | JLK PWI (vs. RAPID) | UGuard (vs. RAPID) | mRay-VEOcore |
|---|---|---|---|
| Ischemic Core Agreement | CCC = 0.87 [5] [37] | ICC = 0.92 (95% CI: 0.89–0.94) [38] | Information Not Specified in Sources |
| Hypoperfused Volume Agreement | CCC = 0.88 [5] [37] | ICC = 0.80 (95% CI: 0.73–0.85) [38] | Information Not Specified in Sources |
| EVT Eligibility Concordance | DAWN criteria: κ=0.80-0.90; DEFUSE-3: κ=0.76 [5] [37] | Model with UGuard ICV/PV showed best predictive performance for favorable outcome [38] | Visualizes DEFUSE-3 inclusion criteria per ESO guidelines [39] |
| Sensitivity/Specificity | Information Not Specified in Sources | Specificity for outcome prediction higher than RAPID [38] | Fully automated evaluation in <3 minutes [39] |

Detailed Experimental Protocols

For researchers seeking to validate or utilize these platforms, understanding the underlying experimental methodology is crucial.