Adaptive Filtering Algorithms for EEG Artifact Removal: A Comprehensive Guide for Biomedical Research

Abstract

This article provides a comprehensive overview of adaptive filtering algorithms for electroencephalogram (EEG) artifact removal, tailored for researchers, scientists, and drug development professionals. It covers the foundational principles of EEG artifacts and adaptive filter theory, explores a range of methodological implementations from classic algorithms to modern deep learning hybrids, addresses critical troubleshooting and optimization challenges for real-world applications, and offers a comparative analysis of algorithm performance. The content synthesizes current literature to guide the selection, implementation, and validation of these techniques in both clinical and research settings, with a focus on improving data integrity for neurological and pharmacological studies.

Understanding EEG Artifacts and Adaptive Filtering Fundamentals

FAQ: A Researcher's Guide to EEG Artifacts

Q1: What is an EEG artifact and why is its removal critical for research? An EEG artifact is any recorded signal that does not originate from neural activity within the brain [1]. These unwanted signals contaminate the EEG recording, obscuring the underlying brain signals and reducing the signal-to-noise ratio (SNR) [1] [2]. Their presence can introduce uncontrolled variability into data, confounding experimental observations, reducing statistical power, and potentially leading to incorrect data interpretation or clinical misdiagnosis [1] [3]. Effective artifact removal is therefore a crucial preprocessing step for ensuring the validity of subsequent EEG analysis [4].

Q2: What are the most common physiological artifacts encountered in EEG experiments? The most frequent physiological artifacts arise from the participant's own body [5] [1]:

- Ocular Artifacts (EOG): Caused by eye blinks and movements. Blinks create high-amplitude, low-frequency deflections maximal in frontal electrodes (Fp1, Fp2), while lateral movements show opposing polarities in electrodes like F7 and F8 [5] [2].

- Muscle Artifacts (EMG): Generated by the contraction of head, jaw, or neck muscles (e.g., clenching, chewing, frowning). EMG appears as high-frequency, broadband "buzz" of fast activity that can overlap with and mask beta and gamma EEG rhythms [5] [1] [6].

- Cardiac Artifacts (ECG): Caused by the electrical activity of the heart. It appears as rhythmic waveforms time-locked to the heartbeat, often more prominent on the left side of the scalp, and can be mistaken for cerebral rhythms [5] [2].

- Pulse Artifacts: A mechanical artifact occurring when an electrode is placed over a pulsating blood vessel, causing a slow, rhythmic movement of the electrode [5] [2].

- Sweat Artifacts: Result from changes in skin conductivity due to perspiration, producing very slow, large-amplitude drifts in the signal, typically below 0.5 Hz [5] [1].

Q3: What technical or non-physiological artifacts can compromise EEG data quality? These artifacts originate from the equipment or environment [1] [2]:

- Power Line Interference: A persistent 50 Hz or 60 Hz oscillation (depending on the regional power grid) caused by electromagnetic interference from nearby electrical wiring and devices [1] [2].

- Electrode "Pop": A sudden, high-amplitude transient with a very steep upslope, caused by a temporary disruption in the electrode-skin contact, often due to a loose electrode or drying gel [5] [1].

- Cable Movement: Movement of electrode cables can cause electromagnetic interference and impedance changes, leading to transient signal alterations or drifts [1] [2].

- Loose Electrode Contact: Results in an unstable signal characterized by slow drifts and sudden shifts in the baseline [2].

Q4: How do artifacts impact the development of adaptive filtering algorithms? Artifacts present a significant challenge for adaptive filtering algorithms due to their heterogeneous and overlapping properties. Different artifacts have distinct temporal, spectral, and spatial characteristics [4]. For instance, ocular (EOG) artifacts are primarily concentrated in the low-frequency spectrum, while muscle (EMG) artifacts are broadly distributed across mid-to-high frequencies [4]. A major research focus is developing unified models that can effectively remove multiple types of interleaved artifacts without requiring prior knowledge of the specific artifact type contaminating the signal [7] [4]. Furthermore, the irregular and non-stationary nature of artifacts like EMG requires algorithms that are robust and can adapt in real-time [8].

Table 1: Physiological Artifacts and Their Impact on the EEG Signal

| Artifact Type | Origin | Time-Domain Signature | Frequency-Domain Signature | Spatial Distribution on Scalp |
|---|---|---|---|---|
| Ocular (EOG) | Eye blinks and movements [1] | High-amplitude, slow deflections [2] | Delta/Theta bands (0.5-8 Hz) [2] | Maximal at frontal sites (Fp1, Fp2) [5] |
| Muscle (EMG) | Head, face, neck muscle contraction [1] | High-frequency, low-amplitude "buzz" [6] | Broadband, dominant in Beta/Gamma (>20 Hz) [1] | Frontal (frowning) & Temporal (jaw) regions [5] |
| Cardiac (ECG) | Electrical activity of the heart [1] | Rhythmic, spike-like waveforms [2] | Overlaps multiple EEG bands [1] | Often left-sided or central [5] |
| Pulse | Arterial pulsation under electrode [5] | Slow, rhythmic waves [5] | Delta frequency range [5] | Focal, over a blood vessel [2] |
| Sweat | Changes in skin impedance [1] | Very slow, large-scale drifts [2] | Very low frequencies (<0.5 Hz) [5] | Often frontal, but can be widespread [6] |
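As a rough illustration of how the frequency signatures in Table 1 can be used for screening, the sketch below estimates what fraction of a channel's power falls in the delta/theta range typical of ocular artifacts and in the range above 20 Hz typical of muscle artifacts. It is a heuristic under stated assumptions, not a validated detector: the function name `band_power_fractions`, the Welch segment length and the exact band edges are illustrative choices.

```python
import numpy as np
from scipy.signal import welch

def band_power_fractions(x, fs):
    """Fraction of total Welch power in an EOG-like and an EMG-like band.

    Band edges follow Table 1 (0.5-8 Hz for ocular, >20 Hz for muscle);
    they are screening assumptions, not diagnostic thresholds.
    """
    # 2-second segments give roughly 0.5 Hz frequency resolution
    freqs, psd = welch(x, fs=fs, nperseg=min(len(x), int(2 * fs)))
    total = psd.sum()                      # uniform bins, so sums are proportional to power
    eog_band = (freqs >= 0.5) & (freqs <= 8.0)
    emg_band = freqs > 20.0
    return {
        "eog_like": psd[eog_band].sum() / total,
        "emg_like": psd[emg_band].sum() / total,
    }

if __name__ == "__main__":
    fs = 250.0
    t = np.arange(int(10 * fs)) / fs
    blink_like = 50 * np.sin(2 * np.pi * 1.0 * t)    # slow, high-amplitude wave
    muscle_like = 50 * np.sin(2 * np.pi * 40.0 * t)  # fast activity
    print(band_power_fractions(blink_like, fs))
    print(band_power_fractions(muscle_like, fs))
```

A high `eog_like` fraction on frontal channels, or a high `emg_like` fraction on temporal channels, would only suggest where to look; any decision threshold would need calibrating on your own recordings.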

Table 2: Non-Physiological (Technical) Artifacts

| Artifact Type | Origin | Time-Domain Signature | Frequency-Domain Signature | Common Causes |
|---|---|---|---|---|
| Power Line | AC power interference [1] | Persistent high-frequency oscillation [2] | Sharp peak at 50/60 Hz [1] | Unshielded cables, nearby electrical devices [1] |
| Electrode Pop | Sudden change in electrode-skin contact [1] | Sudden, steep upslope with no field [5] | Broadband, non-stationary [1] | Loose electrode, drying gel [5] |
| Cable Movement | Physical movement of electrode cables [1] | Sudden deflections or rhythmic drift [2] | Can introduce artificial spectral peaks [1] | Cable swinging, participant movement [2] |
| Loose Electrode | Poor or unstable electrode contact [2] | Slow baseline drifts and instability [2] | Increased power across all frequencies [1] | Loose-fitting cap, hair pushing electrode away [2] |

Experimental Protocol: Benchmarking a Novel Deep Learning Artifact Removal Model

Objective: To train and evaluate the performance of a novel deep learning model (e.g., CLEnet) in removing multiple types of artifacts from EEG signals, comparing its efficacy against established benchmark models [7].

Detailed Methodology:

Dataset Preparation:

- Utilize a benchmark dataset such as EEGdenoiseNet [7] [4] or a custom-collected multi-channel dataset containing both clean EEG and known artifacts [7].

- For a comprehensive evaluation, use at least three distinct datasets:

- Dataset I: Semi-synthetic data created by mixing clean single-channel EEG with recorded EMG and EOG artifacts [7].

- Dataset II: Semi-synthetic data created by mixing clean EEG with Electrocardiogram (ECG) artifacts [7].

- Dataset III: Real 32-channel EEG data collected from participants performing tasks that induce unknown or mixed artifacts (e.g., a 2-back task) [7].
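Semi-synthetic sets such as Datasets I and II are typically built by scaling a recorded artifact and adding it to clean EEG so that the mixture reaches a chosen SNR. The sketch below assumes the additive model \( y = x + \lambda n \) and defines SNR as 20·log10 of the RMS ratio between the clean EEG and the scaled artifact. SNR conventions differ between papers (some use 10·log10 of the RMS ratio), so match the definition used by the benchmark you compare against. The helper names are hypothetical.

```python
import numpy as np

def rms(v):
    """Root-mean-square amplitude."""
    return np.sqrt(np.mean(np.square(v)))

def mix_at_snr(clean, artifact, snr_db):
    """Return (clean + lam * artifact, lam) for a target SNR in dB.

    Assumed convention: snr_db = 20 * log10(rms(clean) / rms(lam * artifact)).
    """
    lam = rms(clean) / (rms(artifact) * 10 ** (snr_db / 20.0))
    return clean + lam * artifact, lam

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean_eeg = rng.standard_normal(512)        # placeholder for a clean EEG epoch
    eog = np.cumsum(rng.standard_normal(512))   # placeholder slow, drifting artifact
    for snr in (-5, 0, 5):
        noisy, lam = mix_at_snr(clean_eeg, eog, snr)
        print(snr, round(20 * np.log10(rms(clean_eeg) / rms(lam * eog)), 6))
```

Sweeping `snr_db` over a range of levels is a simple way to produce training pairs (noisy input, clean target) at several contamination strengths.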

Model Architecture - CLEnet:

- Stage 1: Morphological & Temporal Feature Enhancement: Use a dual-branch CNN with convolutional kernels of different scales to extract morphological features at different resolutions. Embed a 1D Efficient Multi-Scale Attention (EMA-1D) module to enhance temporal features during this stage [7].

- Stage 2: Temporal Feature Extraction: Pass the enhanced features through fully connected layers for dimensionality reduction, then into a Long Short-Term Memory (LSTM) network to capture and preserve the temporal dependencies of genuine EEG [7].

- Stage 3: EEG Reconstruction: Flatten the fused features and use fully connected layers to reconstruct the artifact-free EEG signal [7].

- Training: Train the model in a supervised manner using Mean Squared Error (MSE) between the model's output and the known clean EEG as the loss function [7].

Performance Metrics:

- Signal-to-Noise Ratio (SNR): Measures, in decibels, the ratio between the power of the true clean EEG and the power of the residual error (the cleaned output minus the clean EEG). Higher is better [7].

- Correlation Coefficient (CC): Measures the linear correlation between the cleaned signal and the true clean EEG. Closer to 1 is better [7].

- Relative Root Mean Square Error (RRMSE): Calculated in both the temporal (RRMSEt) and frequency (RRMSEf) domains. Lower values indicate better performance [7].
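The metrics above can be computed in a few lines of NumPy/SciPy. This sketch assumes common definitions: SNR as clean-EEG power over residual-error power in dB, CC as the Pearson correlation, RRMSEt as the RMS of the residual divided by the RMS of the clean EEG, and RRMSEf as the same ratio applied to Welch power spectral densities. The Welch settings (SciPy defaults) and the function names are assumptions; check them against the exact definitions in [7] before comparing numbers with published results.

```python
import numpy as np
from scipy.signal import welch

def rrmse(estimate, reference):
    """Relative RMS error: RMS(estimate - reference) / RMS(reference)."""
    return np.sqrt(np.mean((estimate - reference) ** 2)) / np.sqrt(np.mean(reference ** 2))

def denoising_metrics(cleaned, clean, fs):
    """SNR (dB), CC, RRMSE_t and RRMSE_f of a cleaned signal against the true clean EEG."""
    residual = cleaned - clean
    snr_db = 10 * np.log10(np.sum(clean ** 2) / np.sum(residual ** 2))
    cc = np.corrcoef(cleaned, clean)[0, 1]
    _, psd_cleaned = welch(cleaned, fs=fs)   # SciPy default Welch settings (assumption)
    _, psd_clean = welch(clean, fs=fs)
    return {
        "SNR_dB": snr_db,
        "CC": cc,
        "RRMSE_t": rrmse(cleaned, clean),
        "RRMSE_f": rrmse(psd_cleaned, psd_clean),
    }
```

Under these particular definitions, SNR and RRMSEt carry the same information (SNR = -20·log10 RRMSEt), which is worth keeping in mind when reporting both.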

Benchmarking and Ablation:

The Scientist's Toolkit: Key Reagents & Computational Solutions

Table 3: Essential Tools for EEG Artifact Removal Research

| Item / Solution Name | Type | Primary Function in Research |
|---|---|---|
| EEGdenoiseNet [7] [4] | Benchmark Dataset | Provides a semi-synthetic benchmark dataset with clean EEG and recorded artifacts (EMG, EOG) for standardized training and evaluation of artifact removal algorithms. |
| Custom Multi-channel Dataset [7] | Proprietary Data | Enables testing of algorithms on real, complex artifacts (including "unknown" types) in multi-channel scenarios, moving beyond semi-synthetic data. |
| Independent Component Analysis (ICA) [7] [3] | Classical Algorithm | A blind source separation technique used to decompose multi-channel EEG into independent components, allowing for manual or automated identification and removal of artifact-related components. |
| Artifact Subspace Reconstruction (ASR) [9] | Classical Algorithm | An automated method for removing large-amplitude, transient artifacts from multi-channel EEG data by reconstructing corrupted segments based on clean baseline data. |
| CNN-LSTM Hybrid Network [7] [3] | Deep Learning Architecture | Combines Convolutional Neural Networks (CNN) to extract spatial/morphological features and Long Short-Term Memory (LSTM) networks to model temporal dependencies, effective for end-to-end artifact removal. |
| NARX Network [8] | Neural Network Model | A nonlinear autoregressive network with exogenous inputs; suited to time-series prediction and modeling, and usable within an adaptive filter for artifact removal. |
| Adaptive Filter with FLM Optimization [8] | Optimization Algorithm | A hybrid Firefly + Levenberg-Marquardt algorithm used to find optimal weights for a neural network-based adaptive filter, enhancing its noise cancellation capabilities. |

Core Principles of Adaptive Filters

An adaptive filter is a digital system with a linear filter whose transfer function is controlled by variable parameters. These parameters are continuously adjusted by an optimization algorithm to minimize a cost function of an error signal, defined as the difference between a desired signal and the filter's output [10]. This self-adjusting capability is the core of its operation, allowing it to perform optimally even when the signal characteristics or noise properties are unknown or changing over time [11] [10].

The most common optimization algorithm used is the Least Mean Squares (LMS) algorithm, which aims to minimize the mean square of this error signal [10]. The filter coefficients are updated iteratively, with the magnitude and direction of the change being proportional to the error and the input signal [12].
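In update form, LMS computes the error \( e(n) = d(n) - \mathbf{w}^T(n)\,\mathbf{x}(n) \) and then sets \( \mathbf{w}(n+1) = \mathbf{w}(n) + \mu\, e(n)\, \mathbf{x}(n) \). The minimal NumPy sketch below applies this to adaptive noise cancellation: `primary` is the contaminated EEG, `reference` is an artifact reference such as an EOG channel, and the error signal is returned as the cleaned EEG. The function name, tap count and default step size are illustrative assumptions, not values from the cited work.

```python
import numpy as np

def lms_anc(primary, reference, n_taps=8, mu=0.01):
    """LMS adaptive noise canceller.

    primary   : contaminated EEG d(n) = s(n) + artifact(n)
    reference : artifact reference r(n), assumed correlated with the artifact
                but not with the neural signal s(n)
    Returns the error signal e(n) (the cleaned EEG estimate) and the final weights.
    """
    primary = np.asarray(primary, dtype=float)
    reference = np.asarray(reference, dtype=float)
    w = np.zeros(n_taps)
    x = np.zeros(n_taps)               # tap-delay line: x[0] = r(n), x[1] = r(n-1), ...
    e = np.empty_like(primary)
    for n in range(primary.size):
        x = np.roll(x, 1)
        x[0] = reference[n]
        y = w @ x                      # filter output: estimate of the artifact in d(n)
        e[n] = primary[n] - y          # error = cleaned EEG sample
        w = w + mu * e[n] * x          # LMS update, proportional to error and input
    return e, w
```

Because only the artifact is predictable from the reference, the weights converge toward the reference-to-artifact transfer function and the neural component remains in `e`.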

Troubleshooting Guides and FAQs

Frequently Asked Questions

- Q1: Why is my adaptive filter unstable or failing to converge?

- A: The most likely cause is an improper step size (μ). If μ is too large, the algorithm becomes unstable and cannot converge; if it is too small, convergence is extremely slow [11] [10]. Keep the step size within the stable range, which shrinks as the input signal power grows [10]. For an L-tap LMS filter, convergence in the mean requires 0 < μ < 2/λ_max, where λ_max is the largest eigenvalue of the input autocorrelation matrix; since λ_max ≤ Lσ², where σ² is the input signal power, 0 < μ < 2/(Lσ²) is a conservative bound that needs only σ².

- Q2: My filter converges, but the output signal is distorted. What is happening?

- A: Signal distortion often occurs if the filter is not properly tuned and removes parts of the desired signal along with the noise [11]. In EEG contexts, this can mean erasing neural information while removing artifacts. Verify that your reference signal is strongly correlated with the noise and weakly correlated with the signal of interest [10].

- Q3: Can I implement an LMS adaptive filter on any digital signal processor (DSP)?

- A: No. While powerful, adaptive filters are computationally complex. Some processors, like the ADAU1701, lack the power for adaptive FIR filters, whereas others like the ADAU1761, ADAU1452, or SHARC processors are better suited [13]. Always check your hardware's computational capabilities before implementation.
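To make the step-size guidance in Q1 concrete, the sketch below estimates two LMS bounds from a recorded reference channel: the eigenvalue bound \( 2/\lambda_{\max} \), where \( \lambda_{\max} \) is the largest eigenvalue of the \( L \times L \) input autocorrelation matrix, and the more conservative trace bound \( 2/(L\sigma^2) \), which follows from \( \lambda_{\max} \le L\sigma^2 \). It builds the matrix from biased autocorrelation estimates; the function name is hypothetical. Both values are upper limits for convergence in the mean, so a step size well below them (for example an order of magnitude) is a safer starting point.

```python
import numpy as np
from scipy.linalg import toeplitz

def lms_step_size_bounds(reference, n_taps):
    """Return (2 / lambda_max, 2 / (n_taps * sigma^2)) for an LMS filter on `reference`.

    lambda_max is the largest eigenvalue of the n_taps x n_taps input
    autocorrelation matrix R; since lambda_max <= trace(R) = n_taps * sigma^2,
    the second value is never larger than the first.
    """
    r = np.asarray(reference, dtype=float)
    r = r - r.mean()
    n = r.size
    acf = np.array([np.dot(r[: n - k], r[k:]) / n for k in range(n_taps)])  # biased estimates
    R = toeplitz(acf)
    lam_max = np.linalg.eigvalsh(R)[-1]        # eigvalsh returns ascending eigenvalues
    return 2.0 / lam_max, 2.0 / (n_taps * acf[0])

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    eog_reference = 2.0 * rng.standard_normal(10_000)   # placeholder reference channel
    print(lms_step_size_bounds(eog_reference, n_taps=8))
```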

Common Experimental Issues and Solutions

| Problem | Possible Cause | Suggested Solution |
|---|---|---|
| Slow Convergence | Step size (μ) too small; Non-stationary signal [11] [10] | Increase μ within stable limits; Use a more advanced algorithm like RLS [11]. |
| Algorithm Instability | Step size (μ) too large; High-power input signal [10] | Reduce the step size parameter; Normalize the input signal (use NLMS) [14]. |
| Poor Noise Removal | Reference signal contains desired signal components [10] | Improve the isolation of the reference noise source. |
| High Computational Load | Filter order too high; Complex algorithm [11] | Reduce the number of filter taps; Consider a simpler algorithm or more powerful hardware [13]. |
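The NLMS remedy in the table divides the step size by the energy of the current tap-input vector, so the effective adaptation rate no longer scales with the reference amplitude. The sketch below is a minimal NLMS noise canceller under assumed names and defaults (`mu` between 0 and 2, a small `eps` to avoid division by zero); it is illustrative rather than tuned for any particular dataset.

```python
import numpy as np

def nlms_anc(primary, reference, n_taps=8, mu=0.2, eps=1e-8):
    """Normalized LMS noise canceller; returns (cleaned EEG estimate, final weights)."""
    primary = np.asarray(primary, dtype=float)
    reference = np.asarray(reference, dtype=float)
    w = np.zeros(n_taps)
    x = np.zeros(n_taps)                  # tap-delay line, x[0] is the newest sample
    e = np.empty_like(primary)
    for n in range(primary.size):
        x = np.roll(x, 1)
        x[0] = reference[n]
        e[n] = primary[n] - w @ x         # error = cleaned EEG sample
        w += (mu / (eps + x @ x)) * e[n] * x   # step normalized by tap-input energy
    return e, w
```

With a reference in the range of hundreds of microvolts, a fixed LMS step of μ = 0.01 would be far outside the stable range, while the normalized update adapts without rescaling the data.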

Quantitative Data Comparison

The following table summarizes key performance metrics from recent research on adaptive filtering techniques used in EEG artifact removal.

Table 4: Performance Metrics of Adaptive Filtering Methods in EEG Research

| Method / Study | Key Performance Metrics | Application Context |
|---|---|---|
| FF-EWT + GMETV Filter [15] | Lower RRMSE, higher CC, improved SAR and MAE on real EEG. | Single-channel EOG (eyeblink) artifact removal. |
| FLM (Firefly + LM) Optimization [8] | Achieved high SNR of 42.042 dB. | Removal of various artifacts (EOG, EMG, ECG) from multi-channel EEG. |
| EWT + Adaptive Filtering [14] | Average SNR improvement of 9.21 dB, CC of 0.837. | Ocular artifact removal from EEG signals. |
| LMS Algorithm [10] | Convergence in the mean requires 0 < μ < 2/λ_max (conservatively 0 < μ < 2/(Lσ²) for an L-tap filter with input power σ²). | General-purpose adaptive noise cancellation. |

Experimental Protocols

1. Protocol for EEG Artifact Removal using Hybrid EWT and Adaptive Filtering

This methodology is based on a 2025 study that demonstrated high effectiveness in removing ocular artifacts [15] [14].

- Aim: To remove EOG (electrooculogram) artifacts from a single-channel EEG signal while preserving the underlying neural information.

- Procedure:

- Decomposition: Use Fixed Frequency Empirical Wavelet Transform (FF-EWT) to decompose the contaminated single-channel EEG signal into six Intrinsic Mode Functions (IMFs) or sub-band components [15].

- Identification: Identify the IMFs contaminated with EOG artifacts using statistical metrics like kurtosis (KS), dispersion entropy (DisEn), and power spectral density (PSD) [15].

- Filtering: Apply a finely tuned Generalized Moreau Envelope Total Variation (GMETV) filter or a Normalized LMS (NLMS) adaptive filter to the identified artifact components to suppress the EOG signal [15] [14].

- Reconstruction: Reconstruct the clean EEG signal from the processed IMFs and the remaining unaltered IMFs.

- Validation: Performance is validated on synthetic and real EEG datasets using metrics like Signal-to-Artifact Ratio (SAR), Correlation Coefficient (CC), and Mean Absolute Error (MAE) [15].
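The decompose, identify, filter and reconstruct steps can be sketched in a few lines. This is a deliberately simplified stand-in, not FF-EWT or GMETV: the decomposition uses ideal FFT band masks with fixed, hypothetical edges (six components that sum back to the input exactly), components are flagged by excess kurtosis only, and flagged components are clipped at a few robust standard deviations instead of being processed by a GMETV or NLMS filter. The thresholds and function names are assumptions for illustration.

```python
import numpy as np
from scipy.stats import kurtosis

def fixed_band_decompose(x, fs, edges_hz):
    """Split x into len(edges_hz) + 1 components with ideal FFT band masks.

    Hypothetical stand-in for FF-EWT: band edges are fixed in advance and the
    components sum back to x (up to floating-point error).
    """
    x = np.asarray(x, dtype=float)
    X = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    bounds = [0.0, *edges_hz, np.inf]
    comps = [np.fft.irfft(X * ((freqs >= lo) & (freqs < hi)), n=x.size)
             for lo, hi in zip(bounds[:-1], bounds[1:])]
    return np.array(comps)

def remove_eog_components(x, fs, edges_hz=(4, 8, 13, 30, 45),
                          kurt_thresh=5.0, clip_k=3.0):
    """Decompose, flag high-kurtosis components, suppress them, reconstruct.

    Flagged components are clipped at clip_k robust standard deviations around
    their median, a simple placeholder for the GMETV or NLMS filtering step.
    """
    comps = fixed_band_decompose(x, fs, edges_hz)
    flagged = []
    for i, c in enumerate(comps):
        if kurtosis(c) > kurt_thresh:           # excess kurtosis; Gaussian noise gives ~0
            flagged.append(i)
            med = np.median(c)
            sigma = 1.4826 * np.median(np.abs(c - med))   # MAD-based robust std
            comps[i] = np.clip(c, med - clip_k * sigma, med + clip_k * sigma)
    return comps.sum(axis=0), flagged           # reconstruction from all components
```

Blinks are sparse, high-amplitude events, so they raise the kurtosis of the low-frequency component far more than ongoing rhythms do, which is why kurtosis is a common first-pass indicator.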

2. Protocol for Artifact Removal using an FLM-Optimized Neural Network

This protocol uses a hybrid optimization approach to train a neural network for enhanced adaptive filtering [8].

- Aim: To remove multiple types of artifacts (EOG, EMG, ECG) from multi-channel EEG data using an optimized neural adaptive filter.

- Procedure:

- Network Selection: Employ a NARX (Nonlinear AutoRegressive with eXogenous inputs) neural network model, which is suitable for modeling nonlinear systems and time series analysis [8].

- Hybrid Optimization: Use a hybrid FLM (Firefly + Levenberg-Marquardt) algorithm to find the optimal weights for the neural network. This combination aims to overcome the individual shortcomings of each algorithm [8].

- Training and Filtering: The neural network, with its optimized weights, functions as an adaptive filter. The contaminated EEG signal is fed into this system, which is trained to output the clean EEG signal.

- Performance Analysis: Compare the results against traditional methods like ICA and wavelet-based techniques using Signal-to-Noise Ratio (SNR), Mean Square Error (MSE), and computation time [8].
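A NARX model predicts \( y(n) \) from its own past values and from current and past values of an exogenous input \( u(n) \). The sketch below only shows how that lagged regressor matrix can be assembled and fits the linear (ARX) special case by least squares; the nonlinear network and the Firefly + Levenberg-Marquardt weight search of [8] are not reproduced. The helper names, regressor layout and lag orders are hypothetical.

```python
import numpy as np

def narx_regressors(y, u, ny, nu):
    """Rows [y(n-1), ..., y(n-ny), u(n), ..., u(n-nu+1)] with targets y(n).

    ny >= 0 past outputs and nu >= 1 current/past exogenous inputs (assumed layout).
    """
    y = np.asarray(y, dtype=float)
    u = np.asarray(u, dtype=float)
    start = max(ny, nu - 1)                     # first n with a complete history
    rows = [np.concatenate([y[n - ny:n][::-1], u[n - nu + 1:n + 1][::-1]])
            for n in range(start, y.size)]
    return np.array(rows), y[start:]

def fit_linear_arx(y, u, ny, nu):
    """Least-squares fit of the linear special case; a NARX network would replace
    this linear map with a trained nonlinear one."""
    X, target = narx_regressors(y, u, ny, nu)
    theta, *_ = np.linalg.lstsq(X, target, rcond=None)
    return theta
```

The same regressor matrix can be fed to any nonlinear regressor; the choice of `ny` and `nu` sets how much signal history the model sees.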

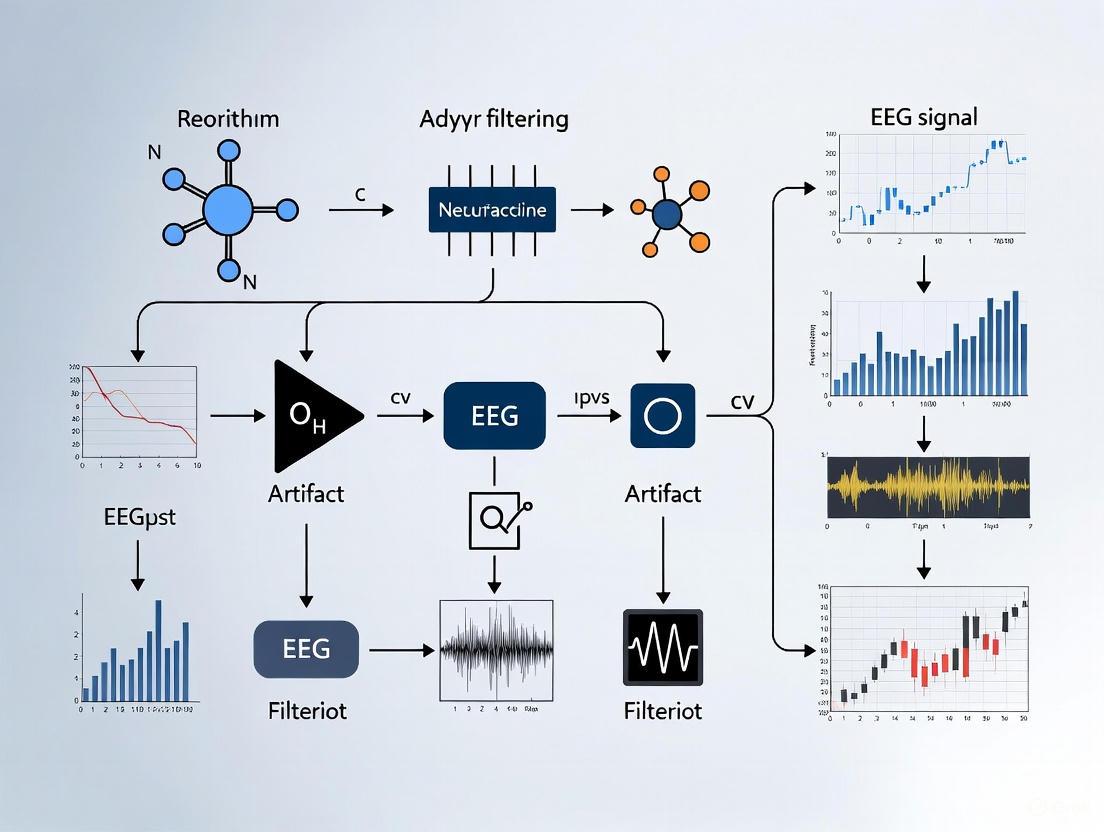

Workflow and System Diagrams

EEG Artifact Removal Workflow

Core Adaptive Filter Structure

The Scientist's Toolkit

Table 5: Essential Research Reagents and Materials

| Item | Function in Research |
|---|---|
| Single-Channel EEG Data | The primary contaminated signal serving as the input for single-channel artifact removal algorithms [15]. |
| Reference EOG/EMG/ECG Signal | A correlated noise reference signal crucial for adaptive noise cancellation setups [8] [10]. |
| Empirical Wavelet Transform (EWT) | A signal decomposition technique used to adaptively break down the EEG signal into components for artifact identification [15] [14]. |
| NARX Neural Network | A recurrent neural network structure used for modeling nonlinear systems and time-series prediction in advanced filtering [8]. |
| LMS/RLS Algorithm | Core optimization algorithms for updating filter coefficients; LMS is simple, RLS converges faster but is more complex [11]. |
| High-Performance DSP (e.g., SHARC) | Digital Signal Processor hardware with sufficient power to run complex adaptive filter algorithms in real-time [13]. |
| Validation Metrics (SNR, MSE, CC) | Quantitative measures (Signal-to-Noise Ratio, Mean Square Error, Correlation Coefficient) to objectively evaluate filter performance [15] [8]. |

In electroencephalogram (EEG) artifact removal research, selecting and implementing the appropriate adaptive filtering algorithm is fundamental to achieving a clean neural signal. These algorithms are crucial for isolating brain activity from contaminants such as ocular movements, muscle activity, and cardiac rhythms. This guide provides a structured comparison, detailed experimental protocols, and troubleshooting advice to help you navigate the challenges of implementing these algorithms effectively in your research.

Algorithm Comparison and Selection Guide

The choice of algorithm involves a direct trade-off between convergence speed, computational complexity, and stability. The following table summarizes the core characteristics of the primary algorithm families to guide your selection.

Table 6: Key Algorithm Families for Adaptive Filtering in EEG Research

| Algorithm Family | Key Principle | Typical Convergence Speed | Computational Complexity | Key Artifacts Addressed | Stability & Notes |
|---|---|---|---|---|---|
| LMS (Least-Mean-Squares) | Stochastic gradient descent; uses instantaneous error for step-wise updates [16] [17]. | Slow | Low (O(n)) | All types, but with less precision [16] [8]. | Robust and stable, but sensitive to step-size parameter [16]. |
| NLMS (Normalized LMS) | Normalizes the step-size based on input signal power for more stable updates. | Moderate | Low (O(n)) | All types, better performance than LMS. | Improved stability over LMS; less sensitive to input power [17]. |
| RLS (Recursive Least Squares) | Recursively minimizes a least-squares cost function, leveraging all past data [16] [18]. | Fast | High (O(n²)) | Effective for various physiological artifacts [16] [8]. | Fast convergence but can face instability with ill-conditioned data [16] [18]. |
| RLS with Rank-Two Updates (RLSR2) | Enhances RLS by simultaneously adding new data and removing old data within a moving window [18]. | Very Fast | High (O(n²)) | Suitable for non-stationary signals with rapid changes. | Improved performance in ill-conditioned scenarios; incorporates both exponential and instantaneous forgetting [18]. |
| Deep Learning (e.g., CLEnet) | Uses neural networks (CNN, LSTM) in an end-to-end model to learn and separate artifacts from clean EEG [7]. | (Requires training) | Very High (GPU recommended) | Multiple and unknown artifacts simultaneously [7]. | Requires large datasets for training; high performance on multi-channel data [7]. |

Experimental Protocols for EEG Artifact Removal

Protocol 1: Baseline Implementation of LMS and RLS

This protocol provides a foundational methodology for comparing classic adaptive filters, using an EOG artifact as a reference.

Objective: To remove ocular artifacts (EOG) from a contaminated EEG signal using a reference EOG channel and compare the performance of LMS and RLS algorithms.

Materials and Setup:

- EEG Data: A recorded single-channel or multi-channel EEG signal contaminated with EOG artifacts.

- Reference Signal: A concurrently recorded EOG channel capturing the blink activity.

- Software: Computational environment like MATLAB or Python with NumPy/SciPy.

Procedure:

- Data Preprocessing: Load the contaminated EEG signal (primary input) and the reference EOG signal. Apply a basic bandpass filter (e.g., 0.5-40 Hz) to both signals to remove slow drifts and high-frequency noise outside the band of interest.

- Algorithm Initialization:

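- LMS: Initialize the weight vector to zeros and set the step-size parameter (μ). A small μ (e.g., 0.01 when the reference is scaled to roughly unit variance) favors stability at the cost of slower convergence; the stable range shrinks as the reference signal power grows [16].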

- RLS: Initialize the weight vector and the inverse correlation matrix (P). Set the forgetting factor (λ), typically close to 1 (e.g., 0.99), to assign more weight to recent samples [16] [18].

- Iterative Filtering: For each time step k:

- Compute the filter output: \( y(k) = \mathbf{w}^T(k)\,\mathbf{x}(k) \), where \( \mathbf{x}(k) \) is the reference EOG input vector.

- Calculate the error signal: \( e(k) = d(k) - y(k) \), where \( d(k) \) is the contaminated EEG signal.

- Update the filter weights according to the LMS or RLS algorithm.

- Output: The error signal \( e(k) \) is the cleaned EEG output.

- Performance Evaluation: Calculate the Signal-to-Noise Ratio (SNR), Mean Square Error (MSE), and Correlation Coefficient (CC) between the cleaned signal and a ground-truth clean EEG, if available [16] [8].

Protocol 2: Advanced Deep Learning-Based Removal

For complex scenarios involving multiple or unknown artifacts, deep learning models offer a powerful, data-driven alternative.

Objective: To remove multiple, unknown artifacts from multi-channel EEG data using a hybrid deep learning model.

Materials and Setup:

- EEG Data: Multi-channel EEG data (e.g., from a 32-channel system) with unknown or mixed artifacts [7].

- Computing Environment: Python with deep learning frameworks like TensorFlow or PyTorch, and a GPU for accelerated training.

Procedure:

- Data Preparation: Use a benchmark dataset like EEGdenoiseNet [7] or a custom dataset of real EEG. Split the data into training, validation, and test sets.

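- Model Construction: Build a hybrid neural network, such as CLEnet, which integrates a dual-branch CNN with an embedded EMA-1D attention module for morphological and temporal feature enhancement, an LSTM for temporal feature extraction, and fully connected layers for EEG reconstruction [7].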

- Model Training: Train the model in a supervised manner. Use mean squared error (MSE) between the model's output and the clean target EEG as the loss function. Use an optimizer like Adam.

- Artifact Removal: Feed the artifact-contaminated EEG into the trained model. The model will output the cleaned EEG signal in an end-to-end fashion.

- Validation: Evaluate performance using RRMSE (Relative Root Mean Square Error) in both time and frequency domains (RRMSEt, RRMSEf), SNR, and CC on the held-out test set [7].

The following diagram illustrates the core workflow of a deep learning-based approach like CLEnet, which processes raw EEG to output a cleaned signal.

Deep Learning EEG Cleaning Workflow

The Scientist's Toolkit

Table 7: Essential Research Reagents and Computational Tools

| Tool / Resource | Function / Description | Application in Research |
|---|---|---|
| EEGdenoiseNet | A benchmark dataset containing semi-synthetic EEG signals contaminated with EMG and EOG artifacts [7]. | Provides standardized data for training and evaluating new artifact removal algorithms. |
| MNE-Python | An open-source Python package for exploring, visualizing, and analyzing human neurophysiological data [19]. | Used for data ingestion, preprocessing, filtering, and implementation of various artifact removal methods. |
| Independent Component Analysis (ICA) | A blind source separation technique that separates statistically independent components from multi-channel EEG [19] [1]. | Used to identify and remove components corresponding to ocular, muscular, and cardiac artifacts. |
| Hybrid FLM Optimization | A hybrid Firefly and Levenberg-Marquardt algorithm used to find optimal weights for a neural network-based adaptive filter [8]. | Applied to neural network models like NARX to enhance the filter's performance in artifact removal. |

Frequently Asked Questions (FAQs)

Q1: In theory, LMS and stochastic gradient descent seem identical. What is the practical distinction in adaptive filtering?

While both operate on the principle of gradient descent, the key difference lies in how the gradient is estimated. The gradient of the mean-square error is proportional to \( -E\{\mathbf{x}[n]\,e^{*}[n]\} \), and this expectation is typically unknown. The LMS algorithm replaces it with the instantaneous estimate \( \mathbf{x}[n]\,e^{*}[n] \) [17]. LMS is therefore a specific stochastic gradient descent method that uses this particular, computationally cheap approximation, which suits real-time filtering.
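For reference, the relationship can be written out as follows, using the mean-square-error cost and the complex-gradient convention in which constant factors are absorbed into μ. For real-valued EEG, \( e^{*}[n] = e[n] \) and \( \mathbf{w}^{H} = \mathbf{w}^{T} \), which recovers the real-valued LMS update.

\[
\begin{aligned}
e[n] &= d[n] - \mathbf{w}^{H}[n]\,\mathbf{x}[n], \qquad J(\mathbf{w}) = E\{|e[n]|^{2}\},\\
\nabla_{\mathbf{w}^{*}} J &= -E\{\mathbf{x}[n]\,e^{*}[n]\} \quad \text{(true gradient, requires the expectation)},\\
\hat{\nabla}_{\mathbf{w}^{*}} J &= -\mathbf{x}[n]\,e^{*}[n] \quad \text{(LMS instantaneous estimate)},\\
\mathbf{w}[n+1] &= \mathbf{w}[n] - \mu\,\hat{\nabla}_{\mathbf{w}^{*}} J = \mathbf{w}[n] + \mu\,\mathbf{x}[n]\,e^{*}[n].
\end{aligned}
\]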

Q2: My RLS algorithm is becoming unstable, especially with a short window size. What could be the cause and how can I mitigate it?

This is a classic problem associated with RLS. A short window size can lead to an ill-conditioned information matrix, making its inversion numerically unstable and causing error accumulation [18]. Mitigation strategies include:

- Initialization: Initialize the inverse of the information matrix to a positive definite matrix (e.g., \( P(0) = \delta I \), where \( \delta \) is a large positive number) [18].

- Advanced Algorithms: Consider using RLS with Rank-Two Updates (RLSR2), which is designed to handle the movement of data in and out of a window more robustly and has shown better convergence properties in ill-conditioned cases [18].

- Forgetting Factor Tuning: Carefully tune the forgetting factor to balance the influence of old and new data.
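The add-and-remove idea behind RLSR2 can be illustrated with a sliding-window least-squares filter that applies one rank-one update when a sample enters the window and one rank-one downdate when it leaves. The sketch below is that simplified scheme, not the RLSR2 algorithm of [18]: it uses no exponential forgetting, and the regularization term \( I/\delta \) comes from the initialization \( P(0) = \delta I \). The class name and defaults are hypothetical. The downdate divides by \( 1 - \mathbf{x}^T P \mathbf{x} \), which approaches zero when the window is short relative to the number of taps; that is the ill-conditioning discussed above, so keep \( \delta \) finite and the window comfortably longer than the filter.

```python
import numpy as np
from collections import deque

class SlidingWindowRLS:
    """Sliding-window least-squares filter via paired rank-one updates.

    Maintains w = (I/delta + sum_k x_k x_k^T)^(-1) (sum_k x_k d_k) over the most
    recent `window` samples: one Sherman-Morrison update when a sample enters,
    one downdate when it leaves (no exponential forgetting).
    """

    def __init__(self, n_taps, window, delta=100.0):
        self.w = np.zeros(n_taps)
        self.P = delta * np.eye(n_taps)        # P(0) = delta * I
        self.window = window
        self.buf = deque()

    def _add(self, x, d):
        Px = self.P @ x
        self.P -= np.outer(Px, Px) / (1.0 + x @ Px)
        self.w += (self.P @ x) * (d - x @ self.w)    # uses the old w on the right

    def _remove(self, x, d):
        Px = self.P @ x
        denom = 1.0 - x @ Px                   # tends to 0 when the window is ill-conditioned
        if denom <= 1e-12:
            raise FloatingPointError("downdate is numerically unstable; lengthen the window")
        self.P += np.outer(Px, Px) / denom
        self.w -= (self.P @ x) * (d - x @ self.w)

    def update(self, x, d):
        """Process one (tap vector, desired sample) pair; return the a posteriori error."""
        x = np.asarray(x, dtype=float)
        self._add(x, d)
        self.buf.append((x, d))
        if len(self.buf) > self.window:
            self._remove(*self.buf.popleft())
        return d - x @ self.w
```

Because the recursion is exact, its weights can be checked against a batch regularized least-squares solve over the same window, which is a useful sanity test before adding forgetting or other refinements.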

Q3: For removing unknown artifacts from multi-channel EEG data, are traditional algorithms like RLS still sufficient?

For complex, unknown artifacts in multi-channel data, traditional algorithms like RLS may struggle due to their linear assumptions and lack of context from other channels. Recent research indicates a shift towards deep learning models for such tasks. Architectures like CLEnet, which combine CNNs for spatial/morphological feature extraction and LSTMs for temporal modeling, are specifically designed to handle multi-channel inputs and can learn to separate a wider variety of artifacts, including unknown ones, directly from data [7]. These models have demonstrated superior performance in terms of SNR and correlation coefficient on tasks involving unknown artifacts [7].

Q4: What is the role of the "forgetting factor" in the RLS algorithm, and how should I choose its value?

The forgetting factor \( \lambda \), with \( 0 < \lambda \le 1 \), exponentially weights past data. A value of \( \lambda = 1 \) weights all past data equally, while a value less than 1 discounts older observations, allowing the filter to track changes in a non-stationary signal like EEG [18] [20]. The choice is a trade-off:

- \( \lambda \) close to 1: Provides accurate estimation in stable conditions but reacts slowly to changes.

- \( \lambda \) less than 1: Allows faster tracking of signal changes but can lead to noisier estimates.

For EEG, a value between 0.99 and 0.999 is often a good starting point. There are also advanced methods, like OFFRLS, that optimize the forgetting factor in real time using heuristic optimization to improve performance [20].
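A useful rule of thumb when choosing \( \lambda \) is that exponential weighting gives the filter an effective memory of about \( 1/(1-\lambda) \) samples. The short sketch below converts this into seconds; the 250 Hz sampling rate and the function name are assumed example values.

```python
def rls_effective_memory(lam, fs):
    """Approximate RLS memory for forgetting factor lam, in samples and seconds."""
    n_eff = 1.0 / (1.0 - lam)   # sum of the geometric weights 1 + lam + lam^2 + ...
    return n_eff, n_eff / fs

for lam in (0.99, 0.995, 0.999):
    n_eff, secs = rls_effective_memory(lam, fs=250.0)
    print(f"lambda={lam}: ~{n_eff:.0f} samples, ~{secs:.2f} s at 250 Hz")
```

At 250 Hz, the 0.99 to 0.999 range therefore corresponds to roughly 0.4 s to 4 s of memory, which can be compared with the expected duration of the artifacts being tracked.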

The Role of Reference Signals in Adaptive Noise Cancellation

Frequently Asked Questions

What is the fundamental principle behind adaptive noise cancellation? Adaptive noise cancellation is a signal processing technique that uses a primary input (containing the desired signal plus correlated noise) and a reference input (containing only noise correlated with the primary input's noise). An adaptive filter processes the reference signal to create an optimal estimate of the noise corrupting the primary signal, then subtracts this estimate to cancel the interference while preserving the desired signal [21] [22].

Why is a reference signal critical for this process? The reference signal provides a "clean" version of the interfering noise that is uncorrelated with the target signal but correlated with the noise in the primary input. This enables the adaptive filter to model the specific noise characteristics and generate an effective cancelling signal. Without a proper reference, the system cannot distinguish between desired signal and noise [21] [23].

What are the key requirements for an effective reference signal? The reference signal must meet two essential requirements: (1) It should be highly correlated with the noise corrupting the primary signal, and (2) It should be uncorrelated with the desired target signal. If these conditions aren't met, the noise cancellation will be ineffective and may even degrade the target signal [21].

What types of reference signals are used in EEG artifact removal? In EEG research, common reference signals include:

- Separate physiological recordings: EOG (electrooculogram) for eye movement artifacts, EMG (electromyogram) for muscle artifacts, or ECG (electrocardiogram) for heart-related interference [7].

- Accelerometer data: For motion artifacts during ambulatory EEG recordings [23].

- Synthetic or simulated signals: In semi-synthetic experimental setups where artifacts are artificially added to clean EEG data [7].

Why might my adaptive noise cancellation system be performing poorly? Common issues include:

- Insufficient correlation between the reference signal and the actual noise in the primary input

- Signal leakage where the desired signal contaminates the reference input

- Inappropriate filter length or step-size parameter selection

- Non-stationary noise characteristics that change faster than the adaptive algorithm can track [21] [22]

- Physical sensor issues such as blocked microphones or poor electrode contact in EEG systems [24]

Troubleshooting Guide

Problem: Inadequate Noise Reduction

Symptoms:

- Minimal reduction in target noise levels

- High residual error after convergence

- Poor signal-to-noise ratio improvement

Diagnostic Steps:

- Check reference signal quality: Verify the reference contains a correlated version of the target noise with minimal desired signal contamination [21].

- Measure correlation: Calculate the correlation coefficient between the primary and reference inputs - it should be significant for the noise components [21].

- Verify signal paths: Ensure the physical placement of reference sensors appropriately captures the interference source [23].
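One way to run the correlation check is sketched below in plain Python. It scans a range of lags because the artifact usually reaches the EEG electrode with some delay relative to the reference sensor. The lag range is an assumption, and the source gives no numeric threshold for "significant". The same function can be applied between the reference and a known-clean EEG segment to test for signal leakage, where the correlation should be close to zero.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0  # a constant signal carries no correlation information
    return cov / math.sqrt(va * vb)


def best_lagged_correlation(primary, reference, max_lag):
    """Return (lag, r) maximizing |corr(primary(n), reference(n - lag))|."""
    best_lag, best_r = 0, 0.0
    for lag in range(max_lag + 1):
        r = pearson(primary[lag:], reference[:len(reference) - lag])
        if abs(r) > abs(best_r):
            best_lag, best_r = lag, r
    return best_lag, best_r


# Example: the primary channel contains the reference delayed by 3 samples.
reference = [math.sin(0.3 * t) + 0.5 * math.sin(1.7 * t) for t in range(500)]
primary = [0.0] * 3 + reference[:-3]
lag, r = best_lagged_correlation(primary, reference, max_lag=10)
```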

Solutions:

- Reposition reference sensors closer to the noise source

- Implement pre-filtering to enhance noise components in the reference

- Consider multi-channel reference inputs for complex noise environments [22]

Problem: Target Signal Distortion

Symptoms:

- Desired signal components appear attenuated or modified

- Introduction of artifacts not present in the original signal

- Unnatural characteristics in the output signal

Diagnostic Steps:

- Test for signal leakage: Check if the desired signal appears in the reference input [21].

- Analyze cross-correlation: Measure correlation between the reference input and desired signal - it should be minimal [21].

- Verify signal decorrelation: Confirm the target signal and interference are statistically independent [21].

Solutions:

- Improve isolation between desired signal sources and reference sensors

- Add adaptive delays to compensate for propagation differences

- Implement coherence-based filtering to preserve desired signal components [22]

Problem: Slow Convergence or Instability

Symptoms:

- Extended time to reach effective cancellation

- Oscillatory behavior in filter coefficients

- System divergence or excessive output variation

Diagnostic Steps:

- Analyze step size parameters: Check if the adaptation rate is appropriate for the signal characteristics [22].

- Evaluate signal power variations: Assess if non-stationary signals require normalized algorithms [22].

- Check secondary path modeling (active noise control setups such as FxLMS only): Verify the accuracy of the estimated transfer function between actuator and error sensor [25].

Solutions:

- Switch from LMS to NLMS algorithm for non-stationary environments [22]

- Implement variable step-size techniques

- Improve secondary path modeling accuracy

- Consider RLS algorithm for faster convergence (if computational resources allow) [22]

Performance Comparison of Adaptive Filter Algorithms

Table 1: Algorithm selection guide for EEG artifact removal applications

| Algorithm | Computational Complexity | Convergence Speed | Stability | Best For EEG Applications |
|---|---|---|---|---|
| LMS | Low | Slow | Moderate | Stationary noise environments, computational resource constraints [22] |
| NLMS | Low to Moderate | Moderate | Good | Non-stationary artifacts, changing signal conditions [22] |
| RLS | High | Fast | Good | Rapidly changing artifacts, quality-critical applications [22] |
| FxLMS | Moderate | Slow to Moderate | Moderate | Systems with secondary path effects, active noise control [26] [25] |

Table 2: Typical performance metrics for EEG artifact removal

| Artifact Type | Best Method | Typical SNR Improvement | Correlation Coefficient | Key Challenges |
|---|---|---|---|---|
| Ocular (EOG) | RLS/Adaptive Filtering | 8-12 dB | 0.90-0.95 | Avoiding neural signal removal, especially frontal lobe activity [7] [27] |
| Muscle (EMG) | Hybrid Methods | 10-15 dB | 0.85-0.92 | Overlapping frequency spectra with neural signals [7] |
| Motion Artifacts | Accelerometer Reference | 5-10 dB | 0.80-0.90 | Complex transfer function between motion and electrical interference [23] |
| Cardiac (ECG) | Adaptive Cancellation | 12-18 dB | 0.92-0.98 | Periodic nature requires precise synchronization [21] [7] |

Experimental Protocols

Protocol 1: Motion Artifact Removal Using Accelerometer Reference

Objective: Remove motion artifacts from ambulatory EEG recordings using accelerometer data as a reference signal [23].

Materials Needed:

- Mobile EEG system with dry electrodes

- Tri-axial accelerometer

- Data synchronization unit

- Signal processing software (MATLAB, Python, or similar)

Methodology:

- Setup: Mount the accelerometer on the subject's torso or head. Place EEG electrodes according to the international 10-20 system [23].

- Calibration: Record a 2-minute baseline with no movement, followed by a 2-minute period with typical movements but no cognitive task [23].

- Data Collection: Simultaneously record EEG and accelerometer data during experimental tasks with varying movement levels [23].

- Synchronization: Precisely align EEG and accelerometer data streams using synchronization pulses.

- Implementation:

  - Use the accelerometer signal as the reference input to the adaptive filter

  - Apply the NLMS algorithm, whose normalized step size suits non-stationary characteristics

  - Set the filter length based on the delay between motion and artifact manifestation

  - Process each EEG channel separately with a shared accelerometer reference [23]

Validation:

- Compare power spectral density before and after processing

- Calculate correlation between residual signal and accelerometer reference (should approach zero)

- Verify preservation of known neural responses (e.g., event-related potentials)
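The filter-length step in the implementation list can be approached by estimating the delay between the accelerometer and the artifact it produces. The sketch below is one simple way to do this, assuming a single accelerometer axis and a cross-correlation peak search; the `margin` of extra taps is a hypothetical heuristic, not a value from [23], and the signals are simulated.

```python
import random


def estimate_lag(eeg, accel, max_lag):
    """Lag (samples) at which |cross-correlation| of eeg(n) and accel(n - lag) peaks."""
    n = len(accel)
    me, ma = sum(eeg) / n, sum(accel) / n
    e0 = [x - me for x in eeg]    # remove DC offsets first
    a0 = [x - ma for x in accel]

    def xcorr(lag):
        return sum(e0[t] * a0[t - lag] for t in range(lag, n))

    return max(range(max_lag + 1), key=lambda lag: abs(xcorr(lag)))


def filter_length_for_lag(lag, margin=4):
    """Hypothetical rule: enough taps to span the delay plus a few extra."""
    return lag + margin + 1


# Simulated example: the motion artifact appears 6 samples after the accelerometer.
random.seed(1)
accel = [random.gauss(0.0, 1.0) for _ in range(1000)]
eeg = [2.0 * (accel[t - 6] if t >= 6 else 0.0) + random.gauss(0.0, 0.5) for t in range(1000)]
lag = estimate_lag(eeg, accel, max_lag=20)
taps = filter_length_for_lag(lag)
```

With a tri-axial sensor, the same search can be run per axis and the largest lag used, so that one filter length covers all three reference inputs.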

Protocol 2: Hybrid Broadband/Narrowband ANC for Mixed Artifacts

Objective: Address EEG recordings contaminated by both broadband (EMG) and narrowband (line noise) artifacts using reference signal decomposition [26].

Materials Needed:

- Multi-channel EEG system

- Reference sensors for target artifacts

- Signal decomposition tools

Methodology:

- Reference Signal Acquisition: Obtain reference signals for both broadband and narrowband interference sources [26].

- Signal Decomposition: Separate the reference signal into broadband and narrowband components using linear prediction [26].

- Parallel Processing:

  - Process the broadband component with a standard adaptive filter

  - Process the narrowband component with a specialized harmonic canceller

  - Recombine the outputs for comprehensive artifact removal [26]

- Coefficient Weighting: Apply balancing method to manage convergence speed differences between subsystems [26].
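The exact predictor used in [26] is not described here, so the following is only one plausible reading of the decomposition step: an LMS-adapted linear predictor (the adaptive line enhancer idea) splits the reference into a predictable narrowband part (the prediction) and an unpredictable broadband part (the prediction error). The predictor order, decorrelation delay, step size, and simulated reference are assumptions.

```python
import math
import random


def split_reference(ref, order=16, delay=1, mu=0.005):
    """Split ref(n) into narrowband (predictable) and broadband (residual) parts.

    An LMS linear predictor estimates ref(n) from ref(n - delay), ...,
    ref(n - delay - order + 1). Periodic components (e.g., line noise) are
    predictable; broadband components (e.g., EMG-like noise) are not.
    """
    w = [0.0] * order
    narrow, broad = [], []
    for n in range(len(ref)):
        past = [ref[n - delay - k] if n - delay - k >= 0 else 0.0 for k in range(order)]
        pred = sum(wi * xi for wi, xi in zip(w, past))
        err = ref[n] - pred
        w = [wi + mu * err * xi for wi, xi in zip(w, past)]  # LMS predictor update
        narrow.append(pred)
        broad.append(err)
    return narrow, broad


# Simulated reference (assumed): 50 Hz interference at fs = 500 Hz plus white noise.
random.seed(2)
fs, n = 500, 5000
tone = [math.sin(2 * math.pi * 50 * t / fs) for t in range(n)]
ref = [s + random.uniform(-0.5, 0.5) for s in tone]
narrow, broad = split_reference(ref)

half = n // 2
err_raw = sum((r - s) ** 2 for r, s in zip(ref[half:], tone[half:])) / half
err_narrow = sum((p - s) ** 2 for p, s in zip(narrow[half:], tone[half:])) / half
```

The narrowband output would then feed the harmonic canceller and the broadband residual the standard adaptive filter.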

Validation Metrics:

- Calculate relative root mean square error in temporal (RRMSEt) and frequency (RRMSEf) domains [7]

- Measure signal-to-noise ratio improvement across frequency bands

- Verify absence of artifact residual in output signal
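The two RRMSE metrics are commonly defined (for example in EEGdenoiseNet-based studies) as RRMSEt = RMS(denoised - clean) / RMS(clean) and RRMSEf = RMS(PSD(denoised) - PSD(clean)) / RMS(PSD(clean)). The sketch below assumes a plain periodogram as the PSD estimate; published work may use Welch's method instead, which changes the numbers.

```python
import cmath
import math


def rms(v):
    return math.sqrt(sum(x * x for x in v) / len(v))


def periodogram(v):
    """One-sided periodogram via a direct DFT (O(N^2); fine for short epochs)."""
    n = len(v)
    return [
        abs(sum(v[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))) ** 2 / n
        for k in range(n // 2 + 1)
    ]


def rrmse_t(denoised, clean):
    """Temporal RRMSE: RMS(denoised - clean) / RMS(clean)."""
    return rms([d - c for d, c in zip(denoised, clean)]) / rms(clean)


def rrmse_f(denoised, clean):
    """Spectral RRMSE: RMS(PSD(denoised) - PSD(clean)) / RMS(PSD(clean))."""
    p_d, p_c = periodogram(denoised), periodogram(clean)
    return rms([a - b for a, b in zip(p_d, p_c)]) / rms(p_c)


clean = [math.sin(2 * math.pi * 3 * t / 64) + 0.3 * math.cos(2 * math.pi * 7 * t / 64)
         for t in range(64)]
scaled = [1.1 * x for x in clean]  # a denoised output that is 10% too large
```

A 10% amplitude error gives RRMSEt = 0.1 but RRMSEf = 0.21, because the PSD scales with the square of the amplitude.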

Reference Signal Selection Guide

Table 3: Reference signal options for common EEG artifacts

| Artifact Type | Optimal Reference Signal | Alternative Options | Implementation Considerations |
|---|---|---|---|
| Ocular Artifacts | EOG electrodes | Frontal EEG channels | Risk of capturing neural signals from frontal lobes [7] |
| Muscle Artifacts | EMG from jaw/neck muscles | Temporal EEG channels | Significant spectral overlap with neural gamma activity [7] |
| Motion Artifacts | Accelerometer data | Gyroscopic sensors | Complex, non-linear relationship to electrical artifacts [23] |
| Line Noise | Synthetic 50/60 Hz reference | Empty channel reference | Requires precise frequency tracking [28] |
| Cardiac Artifacts | ECG recording | Pulse oximeter | Periodic nature requires adaptive phase tracking [21] |
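For the line-noise row, a synthetic reference can be as simple as a sine and a cosine at the mains frequency, weighted by two LMS coefficients; Widrow's classic analysis of adaptive noise cancelling shows that this configuration behaves as an adaptive notch filter. A minimal sketch, assuming a known, fixed mains frequency, an illustrative step size, and simulated data:

```python
import math


def cancel_line_noise(eeg, fs, f_line=50.0, mu=0.02):
    """Two-weight LMS canceller with synthetic sin/cos references at f_line."""
    w_sin = w_cos = 0.0
    cleaned = []
    for n, s in enumerate(eeg):
        phase = 2 * math.pi * f_line * n / fs
        r_sin, r_cos = math.sin(phase), math.cos(phase)
        y = w_sin * r_sin + w_cos * r_cos  # estimate of the line-noise component
        e = s - y                           # cleaned sample
        w_sin += mu * e * r_sin             # LMS updates; the two weights absorb
        w_cos += mu * e * r_cos             # the unknown amplitude and phase
        cleaned.append(e)
    return cleaned


# Simulated example (assumed): 7 Hz activity plus 50 Hz interference, fs = 250 Hz.
fs, n = 250, 3000
neural = [math.sin(2 * math.pi * 7 * t / fs) for t in range(n)]
contaminated = [x + 0.8 * math.sin(2 * math.pi * 50 * t / fs + 0.6) for t, x in enumerate(neural)]
cleaned = cancel_line_noise(contaminated, fs)
half = n // 2
residual = sum((c - x) ** 2 for c, x in zip(cleaned[half:], neural[half:])) / half
```

Because the weights track only amplitude and phase, a mains frequency that drifts far from `f_line` is not fully cancelled, which is why the table lists precise frequency tracking as a consideration.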

The Scientist's Toolkit

Table 4: Essential research reagents and solutions for adaptive filtering experiments

| Item | Function | Example Implementation |
|---|---|---|
| Semi-Synthetic EEG Datasets | Algorithm validation with ground truth | EEGdenoiseNet: Provides clean EEG with controlled artifact addition [7] |
| Adaptive Filter Algorithms | Core processing engine | LMS, NLMS, RLS implementations for different artifact characteristics [22] |
| Reference Sensors | Capture noise sources | EOG/EMG electrodes, accelerometers, separate reference channels [23] |
| Performance Metrics | Algorithm evaluation | SNR, correlation coefficient, RRMSEt, RRMSEf calculations [7] |
| Hybrid Processing Frameworks | Complex artifact handling | Combined CNN-LSTM networks (e.g., CLEnet) for unknown artifacts [7] |

Diagram: Adaptive Noise Canceller Configuration

Diagram: Hybrid EEG Processing Workflow

Implementing Adaptive Filters: From Classic Algorithms to Advanced Architectures

Frequently Asked Questions (FAQs)

Q1: What are the key advantages of using adaptive filters like LMS, NLMS, and RLS for EEG artifact removal over other methods?

Adaptive filters are highly effective for EEG artifact removal because they can track and remove non-stationary noise, which is common in physiological signals, without requiring prior knowledge of the signal or noise statistics. Specifically, LMS (Least Mean Squares) is simple and computationally efficient, making it suitable for real-time applications. NLMS (Normalized LMS) improves upon LMS by normalizing the step size, leading to greater stability with varying signal power, which is ideal for handling amplitude variations in artifacts like eye blinks [29] [30]. RLS (Recursive Least Squares) offers faster convergence and better performance at the cost of increased computational complexity, making it suitable for applications where convergence speed is critical, though it is less common in resource-constrained real-time systems [30] [31].

Q2: When should I choose NLMS over standard LMS for ocular artifact removal?

You should choose NLMS when dealing with ocular artifacts (EOG) because these artifacts can have high and variable amplitude, causing instability in the standard LMS filter. NLMS uses a normalized step-size, which makes the filter more stable and provides consistent performance even when the input signal power changes significantly, such as during large eye blinks [29] [30]. Research has demonstrated that an NLMS-based adaptive filtering technique can achieve an average improvement in signal-to-noise ratio (SNR) of over 9 dB when removing ocular artifacts [29].

Q3: Can these classic algorithms handle multi-channel EEG data for artifact removal?

Classic adaptive filtering algorithms like LMS, NLMS, and RLS are primarily designed for single-channel applications where a separate reference noise signal (e.g., from an EOG or ECG channel) is available [30] [31]. For multi-channel data without explicit reference channels, other techniques like Independent Component Analysis (ICA) are typically employed to separate neural activity from artifacts [29] [32] [31]. However, adaptive filters can be part of a hybrid approach, used to further clean components identified by source separation methods [29].

Q4: What is a common implementation challenge with the RLS algorithm?

A primary challenge with the RLS algorithm is its high computational complexity compared to LMS and NLMS. At every sample, RLS updates an estimate of the inverse of the input correlation matrix (using the matrix inversion lemma rather than an explicit inversion), which costs on the order of L² operations for a filter of length L, versus on the order of L for LMS and NLMS. This can be a limiting factor for real-time applications on devices with limited processing power or battery life [30] [31].
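To show where the extra cost comes from, here is a minimal RLS noise-canceller sketch in plain Python. It uses the standard exponentially weighted RLS recursion, in which the inverse correlation matrix `P` is updated directly rather than inverted at each step. The forgetting factor `lam`, the initialization constant `delta`, and the simulated signals are illustrative assumptions; the nested loops over `P` make the O(L²) per-sample cost visible.

```python
import math
import random


def rls_anc(primary, reference, num_taps=4, lam=0.995, delta=100.0):
    """Exponentially weighted RLS adaptive noise canceller; returns e(n)."""
    L = num_taps
    w = [0.0] * L
    # P approximates the inverse of the reference autocorrelation matrix.
    P = [[delta if i == j else 0.0 for j in range(L)] for i in range(L)]
    buf = [0.0] * L
    cleaned = []
    for s_n, r_n in zip(primary, reference):
        buf = [r_n] + buf[:-1]
        Px = [sum(P[i][j] * buf[j] for j in range(L)) for i in range(L)]  # O(L^2)
        denom = lam + sum(buf[i] * Px[i] for i in range(L))
        k = [v / denom for v in Px]                                        # gain vector
        e_n = s_n - sum(w[i] * buf[i] for i in range(L))                   # a priori error
        w = [w[i] + k[i] * e_n for i in range(L)]
        # P <- (P - k x^T P) / lam, using x^T P = (P x)^T since P is symmetric (O(L^2))
        P = [[(P[i][j] - k[i] * Px[j]) / lam for j in range(L)] for i in range(L)]
        cleaned.append(e_n)
    return cleaned


random.seed(0)
n = 1000
eeg = [math.sin(2 * math.pi * 5 * t / 250) for t in range(n)]
noise = [random.uniform(-1.0, 1.0) for _ in range(n)]
primary = [x + 0.8 * noise[t] + 0.3 * (noise[t - 1] if t > 0 else 0.0) for t, x in enumerate(eeg)]
cleaned = rls_anc(primary, noise)
# Evaluate from sample 100 onward; RLS typically converges within a few multiples of L.
before = sum((p - x) ** 2 for p, x in zip(primary[100:], eeg[100:])) / (n - 100)
after = sum((c - x) ** 2 for c, x in zip(cleaned[100:], eeg[100:])) / (n - 100)
```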

Troubleshooting Guides

Problem 1: Poor Convergence or Slow Adaptation (LMS/NLMS)

- Symptoms: The artifact is not effectively removed; the error signal remains large.

- Potential Causes & Solutions:

- Cause: Step-size parameter (μ) is too small.

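- Solution: Gradually increase the step-size until performance improves, but keep it within the stability bound. For standard LMS with the update w(n+1) = w(n) + μ e(n) r(n), the bound depends on the reference power: convergence in the mean requires 0 < μ < 2/λ_max, where λ_max is the largest eigenvalue of the reference autocorrelation matrix, and 2/(L·P_r) (filter length L, mean reference power P_r) is a common conservative estimate. The familiar 0 < μ < 2 range applies to the normalized step size of NLMS.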

- Cause: The reference signal is poorly correlated with the actual artifact in the EEG.

- Solution: Verify the quality and placement of the reference electrode (e.g., for EOG, ensure VEOG and HEOG channels are properly recorded) [30].

- Cause: The filter length is too short to model the artifact's impact.

- Solution: Increase the filter order (number of taps) and re-evaluate performance.
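The conservative LMS bound from Problem 1 is easy to compute from a stretch of reference data. A minimal sketch for the update form w(n+1) = w(n) + μ e(n) r(n); the safety `fraction` used to pick a starting value is a hypothetical tuning choice, not a recommendation from [30].

```python
def lms_step_bound(reference, num_taps):
    """Conservative LMS bound 2 / (L * P_r) for the update w <- w + mu * e * r."""
    power = sum(x * x for x in reference) / len(reference)
    if power == 0:
        raise ValueError("reference has zero power")
    return 2.0 / (num_taps * power)


def suggest_step(reference, num_taps, fraction=0.1):
    """Hypothetical starting point: a small fraction of the bound, then tune upward."""
    return fraction * lms_step_bound(reference, num_taps)


reference = [1.0, -1.0] * 50   # unit-power reference
bound = lms_step_bound(reference, num_taps=4)
start = suggest_step(reference, num_taps=4)
```

Because the bound scales with 1/P_r, a reference whose amplitude jumps (for example during large blinks) can push a fixed μ past it, which is the motivation for switching to NLMS in Problem 2.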

Problem 2: Filter Instability (LMS/NLMS)

- Symptoms: The output signal diverges or shows large, unrealistic oscillations.

- Potential Causes & Solutions:

- Cause: Step-size parameter (μ) is too large.

- Solution: Reduce the step-size value. NLMS normalizes each update by the reference power, but its step size μ still needs care: it is stable for 0 < μ < 2, and values between 0 and 1 are typical [30].

- Cause: Abrupt changes in signal power or electrode impedance.

- Solution: Use NLMS instead of standard LMS, as it is inherently more robust to input power variations [30].

Problem 3: High Computational Load Leading to Real-Time Processing Delays

- Symptoms: The system cannot process data samples as fast as they are acquired.

- Potential Causes & Solutions:

- Cause: Using the RLS algorithm on a low-power processor.

- Solution: Switch to the simpler LMS or NLMS algorithm. If performance is insufficient, consider optimizing the RLS code or using a processor with higher computational power [30] [31].

- Cause: The filter order is set excessively high.

- Solution: Perform a design trade-off analysis to find the minimum filter order that provides acceptable artifact removal.

Performance Comparison Table

The following table summarizes the key characteristics and application scenarios for LMS, NLMS, and RLS algorithms in EEG artifact removal.

| Algorithm | Computational Complexity | Convergence Speed | Stability | Ideal Application Scenario in EEG |
|---|---|---|---|---|
| LMS | Low | Slow | Conditionally stable [30] | Real-time systems with limited processing power; well-defined, stationary noise. |
| NLMS | Low to Moderate | Moderate (faster than LMS) | More stable than LMS [29] [30] | Removing ocular artifacts (EOG) where signal amplitude varies; general-purpose artifact removal. |
| RLS | High | Fast | Stable [30] | Scenarios requiring rapid convergence where computational resources are not a primary constraint. |

Experimental Protocol: Ocular Artifact Removal using NLMS

This protocol outlines a standard methodology for removing ocular artifacts from a single EEG channel using the NLMS adaptive filter, based on established research practices [29] [30].

1. Objective

To remove ocular artifacts (eye blinks and movements) from a contaminated frontal EEG channel (e.g., Fp1) using a recorded EOG reference and the NLMS adaptive filtering technique.

2. Materials and Data Acquisition

- EEG System: A multi-channel EEG acquisition system (e.g., according to the 10-20 international standard).

- Reference Signals: Two additional electrodes to record the Vertical EOG (VEOG) and Horizontal EOG (HEOG).

- Data: The raw EEG signal $s(n)$ from the frontal channel is modeled as $s(n) = x(n) + d(n)$, where $x(n)$ is the true EEG and $d(n)$ is the ocular artifact. The recorded VEOG and HEOG signals form the reference input $r(n)$ for the adaptive filter [30].

3. Algorithm Setup and Workflow

The following diagram illustrates the NLMS adaptive noise cancellation setup.

- Step 1: Filter Initialization. Initialize the NLMS adaptive filter weights $w(n)$ to zero. Set the filter length ($L$) and the step-size parameter ($\mu$). A typical $\mu$ for NLMS is less than 1 [30].

- Step 2: Filtering and Error Calculation. For each new sample $n$:

  - The reference input vector $r(n) = [r_{\mathrm{veog}}(n),\ r_{\mathrm{heog}}(n)]^T$ is processed by the adaptive filter to produce an artifact estimate $y(n)$.

  - The error signal $e(n) = s(n) - y(n)$ is computed; it represents the clean EEG output.

- Step 3: Weight Update. The filter weights are updated using the NLMS rule:

  $$w(n+1) = w(n) + \frac{\mu}{\|r(n)\|^2 + \psi}\, e(n)\, r(n)$$

  where $\psi$ is a small constant to prevent division by zero [30].

- Step 4: Iteration. Repeat Steps 2 and 3 for the entire duration of the signal.
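The four steps can be sketched in plain Python as follows. This sketch assumes each EOG channel feeds its own short tapped delay line (`taps_per_ref` samples), so the stacked reference vector r(n) has 2·`taps_per_ref` entries; with `taps_per_ref=1` it reduces to the two-element vector of Step 2. Parameter values and the simulated signals are illustrative only, and real EOG is far more low-frequency than the white-noise stand-ins used here.

```python
import math
import random


def nlms_eog(eeg, veog, heog, taps_per_ref=3, mu=0.2, psi=1e-6):
    """NLMS ocular-artifact canceller with VEOG and HEOG references; returns e(n)."""
    L = taps_per_ref
    w = [0.0] * (2 * L)                  # Step 1: weights start at zero
    buf_v, buf_h = [0.0] * L, [0.0] * L
    cleaned = []
    for s_n, rv, rh in zip(eeg, veog, heog):
        buf_v = [rv] + buf_v[:-1]
        buf_h = [rh] + buf_h[:-1]
        r = buf_v + buf_h                # stacked reference vector r(n)
        y_n = sum(wi * ri for wi, ri in zip(w, r))    # Step 2: artifact estimate
        e_n = s_n - y_n                                # cleaned EEG sample
        step = mu / (sum(ri * ri for ri in r) + psi)   # Step 3: normalized step size
        w = [wi + step * e_n * ri for wi, ri in zip(w, r)]
        cleaned.append(e_n)
    return cleaned                       # Step 4: the loop covers the whole recording


random.seed(3)
n = 3000
true_eeg = [0.5 * math.sin(2 * math.pi * 10 * t / 250) for t in range(n)]
veog = [random.uniform(-1.0, 1.0) for _ in range(n)]
heog = [random.uniform(-1.0, 1.0) for _ in range(n)]
ocular = [0.9 * veog[t] + 0.2 * (veog[t - 1] if t > 0 else 0.0) + 0.4 * heog[t] for t in range(n)]
fp1 = [x + d for x, d in zip(true_eeg, ocular)]   # s(n) = x(n) + d(n)
cleaned = nlms_eog(fp1, veog, heog)
half = n // 2
artifact_power = sum(d * d for d in ocular[half:]) / half
residual = sum((c - x) ** 2 for c, x in zip(cleaned[half:], true_eeg[half:])) / half
```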

4. Performance Validation

- Quantitative Metrics: Calculate performance metrics to assess the quality of the cleaned signal [29] [33].

- Signal-to-Noise Ratio (SNR): Measures the power ratio between the signal and residual artifact. An increase in SNR indicates better performance.

- Correlation Coefficient (CC): Measures the linear similarity between the cleaned signal and a ground-truth clean EEG (if available). A value closer to 1 is better.

- Root Mean Square Error (RMSE): Measures the difference between the cleaned signal and the ground truth. A lower value is better.
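The three metrics can be computed as in the following sketch. It assumes a ground-truth clean signal (available only in semi-synthetic data) and defines SNR as clean-signal power over residual power in dB; exact definitions vary between papers, so match the one used by any study you compare against.

```python
import math


def snr_db(clean, estimate):
    """10*log10(clean power / residual power) against a ground-truth clean signal."""
    residual = sum((c - e) ** 2 for c, e in zip(clean, estimate))
    if residual == 0:
        return float("inf")
    return 10 * math.log10(sum(c * c for c in clean) / residual)


def corr_coef(a, b):
    """Pearson correlation coefficient."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))


def rmse(clean, estimate):
    return math.sqrt(sum((c - e) ** 2 for c, e in zip(clean, estimate)) / len(clean))


clean = [1.0, -1.0, 1.0, -1.0]
estimate = [1.1, -0.9, 1.1, -0.9]   # clean signal plus a constant 0.1 offset
```

In this example the CC is exactly 1 even though the estimate is wrong by a constant offset: CC ignores offsets and scaling, which is why it is usually reported alongside RMSE or SNR.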

Research Reagent Solutions

The table below lists key computational tools and data resources essential for experimenting with adaptive filtering in EEG research.

| Resource | Type | Function in Research |
|---|---|---|
| EEGdenoiseNet [29] [34] | Benchmark Dataset | Provides clean EEG and artifact signals (EOG, EMG) to create semi-synthetic data for standardized algorithm testing and validation. |
| MATLAB with DSP System Toolbox | Software Environment | Offers built-in objects for implementing and simulating LMS, NLMS, and RLS algorithms, along with visualization tools for analyzing results. |
| BCI Competition IV Dataset 2b [33] | Real-world EEG Data | Supplies real EEG data contaminated with artifacts, allowing researchers to test algorithm performance under realistic conditions. |
| Python (SciPy, NumPy, MNE) | Software Environment | Provides open-source libraries for numerical computation, signal processing, and EEG-specific analysis, enabling flexible implementation of adaptive filters. |

Accelerometer Troubleshooting and FAQs

FAQ: Why is my accelerometer reading +1g at rest instead of 0g?

Accelerometers measure proper acceleration, which is the acceleration the sensor experiences relative to free fall. When at rest on the Earth's surface, the device is accelerating upward relative to a local inertial frame (the frame of a freely falling object): the supporting surface pushes it upward to counteract gravity and keep it stationary, so it registers an upward acceleration of approximately +1g [35].
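When an accelerometer serves as a motion-artifact reference, this static +1g component carries no motion information for the adaptive filter. A minimal sketch, assuming a first-order low-pass (exponential moving average) gravity estimate with an illustrative smoothing factor `alpha`, separates it from the dynamic acceleration:

```python
import math


def split_gravity(samples, alpha=0.02):
    """samples: list of (ax, ay, az) in g. Returns (gravity, dynamic) lists."""
    g = list(samples[0])                  # start the estimate from the first reading
    gravity, dynamic = [], []
    for s in samples:
        g = [gi + alpha * (si - gi) for gi, si in zip(g, s)]    # low-pass estimate
        gravity.append(tuple(g))
        dynamic.append(tuple(si - gi for si, gi in zip(s, g)))  # motion component
    return gravity, dynamic


at_rest = [(0.0, 0.0, 1.0)] * 200         # sensor lying flat and stationary
gravity, dynamic = split_gravity(at_rest)
magnitude = math.sqrt(sum(c * c for c in at_rest[0]))
```

At rest the magnitude is 1g and the dynamic component is zero; during movement the dynamic part is what would be passed to the adaptive filter.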

FAQ: What does it mean if the accelerometer's bias voltage is 0 VDC?

A measured Bias Output Voltage (BOV) of 0 volts typically indicates a system short or a power failure [36].

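- Primary Checks: Confirm that power is on and connected, then inspect junction-box terminations and cable shields for shorts (see the BOV troubleshooting table below) [36].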

FAQ: What does an erratic or shifting bias voltage indicate?

An unstable bias voltage suggests a very low-frequency signal is being interpreted as a change in the DC level. This is often visible in the time waveform as erratic jumping or spiking [36].

- Common Causes & Solutions:

- Poor Connections: Check for corroded, dirty, or loose connections. Repair or replace as necessary, and apply non-conducting silicone grease to reduce future contamination [36].

- Ground Loops: These occur when the cable shield is grounded at two points with differing electrical potential. Solution: Ensure the cable shield is grounded at one end only [36].

- Thermal Transients: Uneven thermal expansion of the sensor housing can be sensed as a low-frequency signal [36].

- Signal Overload: High-amplitude vibration can overload the sensor [36].

Troubleshooting Guide: Analyzing Bias Output Voltage (BOV)

The BOV is a key diagnostic tool for most accelerometer systems. The table below summarizes common issues and their resolutions [36].

| BOV Measurement | Indicated Problem | Recommended Troubleshooting Actions |
|---|---|---|
| Equals supply voltage (e.g., 18-30 VDC) | Open circuit (sensor disconnected or reverse powered) [36] | 1. Check cable connections at junction boxes and the sensor itself [36]. 2. Inspect the entire cable length for damage [36]. 3. Test cable continuity [36]. |
| 0 VDC | System short or power failure [36] | 1. Confirm power is on and connected [36]. 2. Check for shorts in junction box terminations and cable shields [36]. 3. Test for infinite resistance (>50 MΩ) between signal leads and shield [36]. |
| Low or High (out of specification) | Damaged sensor [36] | Common damage sources include excessive temperature, shock impacts, incorrect power, or electrostatic discharge. Sensor failure from long-term temperature exposure is common [36]. |

EOG Artifact Removal Troubleshooting and FAQs

FAQ: Why is my EOG signal contaminated with high-frequency noise?

High-frequency noise in EOG signals is often environmental interference.

- Electrode Placement: Ensure you are using a true bipolar configuration for each channel (differential measurement between the red and green wires on the same channel) rather than a common reference. Place the electrodes as far apart as the measurement allows to increase signal amplitude [37].

- Grounding (BIAS): Always use the BIAS (ground) electrode, typically placed on the center of the forehead or earlobe. This centers the differential amplifiers and can help cancel mains noise [37].

- Filtering: Apply a bandpass filter (e.g., 0.1 Hz to 30 Hz) and enable a notch filter at your local mains frequency (50/60 Hz) to remove line interference [37].

- Cabling & Enclosure: Cables bundled in a sleeve, or housed in a non-grounded enclosure, can act as an antenna. Try wrapping cables in a conductive material before sheathing, and ensure the enclosure is properly grounded [37].
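The notch-filter step can be sketched as a single second-order IIR section. The coefficients below follow the widely used "Audio EQ Cookbook" (RBJ) notch design; the quality factor `q` and the test signals are assumed values. In practice, SciPy's `scipy.signal.iirnotch` (notch) and `scipy.signal.butter` (band-pass) cover the same ground.

```python
import math


def notch_coefficients(f0, fs, q=30.0):
    """Biquad notch at f0 Hz (RBJ cookbook form), normalized so a[0] == 1."""
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    a0 = 1 + alpha
    b = [1 / a0, -2 * cos_w0 / a0, 1 / a0]
    a = [1.0, -2 * cos_w0 / a0, (1 - alpha) / a0]
    return b, a


def biquad(x, b, a):
    """Direct-form I second-order IIR filter."""
    x1 = x2 = y1 = y2 = 0.0
    y = []
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y


fs = 250
b, a = notch_coefficients(50.0, fs)
mains = [math.sin(2 * math.pi * 50 * t / fs) for t in range(2500)]
alpha_wave = [math.sin(2 * math.pi * 10 * t / fs) for t in range(2500)]
mains_out = biquad(mains, b, a)       # 50 Hz is removed once the transient decays
alpha_out = biquad(alpha_wave, b, a)  # 10 Hz passes essentially unchanged
```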

FAQ: My EOG signal is weak. How can I improve the signal-to-noise ratio?

Weak signals are a common challenge, especially with non-standard electrode placements or dry electrodes.

- Electrode Type: For the best signal quality, use pre-gelled adhesive Ag/AgCl electrodes. Dry electrodes have much higher skin impedance, which couples more environmental noise and results in weaker signals [37].

- Placement: Follow standard EOG placements for the strongest signal. For horizontal EOG, place electrodes to the left of the left eye and to the right of the right eye. For vertical EOG, place electrodes above and below one eye [37].

- Skin Preparation: Reduce skin impedance by cleaning the skin area with alcohol before applying electrodes [37].

Advanced EOG Artifact Removal Protocol

For researchers integrating EOG reference signals into EEG artifact removal algorithms, the following methodology based on Fixed Frequency Empirical Wavelet Transform (FF-EWT) and a Generalized Moreau Envelope Total Variation (GMETV) filter provides a robust framework [15].

Aim: To automatically remove EOG artifacts from single-channel EEG signals.

Procedure:

- Decomposition: Use FF-EWT to decompose the artifact-contaminated EEG signal into six Intrinsic Mode Functions (IMFs) with compact frequency support [15].

- Identification: Identify EOG-related IMFs from the set of decomposed IMFs. This is done automatically using feature thresholding based on kurtosis (KS), dispersion entropy (DisEn), and power spectral density (PSD) metrics [15].

- Filtering: Apply a finely-tuned GMETV filter to the identified artifact components to suppress the EOG content [15].

- Reconstruction: Reconstruct the clean EEG signal from the processed IMFs [15].

Performance Metrics: This method can be validated using Relative Root Mean Square Error (RRMSE), Correlation Coefficient (CC), Signal-to-Artifact Ratio (SAR), and Mean Absolute Error (MAE) on both synthetic and real EEG datasets [15].

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function / Specification | Application Note |
|---|---|---|
| Piezoelectric Accelerometer | Range: ±245 m/s² (±25g); Frequency Response: 0-100 Hz [38]. | Inherent high-pass filter provides AC response; ideal for vibration. Can saturate from high-frequency resonances or shocks [35]. |
| Piezoresistive Accelerometer | DC-coupled accelerometer [35]. | Best for shock testing; contains internal gas damping to prevent resonance issues and does not experience saturation decay like piezoelectric sensors [35]. |
| Ag/AgCl Electrodes (Wet) | Pre-gelled adhesive electrodes [37]. | Gold standard for EOG/EEG; provide low skin impedance and high signal quality, minimizing environmental noise [37]. |
| Dry Electrodes | Electrodes used without gel [37]. | User-friendly but yield higher skin impedance and much weaker signals, making them more susceptible to noise [37]. |
| Twisted Pair Shielded Cable | Cable with internal twisting and external shield [36]. | Minimizes magnetically coupled noise. Shield should be grounded at one end only to prevent ground loops [36]. |
| Notch Filter | Software or hardware filter [37]. | Critical for removing power line interference (50/60 Hz) from physiological signals like EOG and EEG [37]. |

Integrated Experimental Protocol: EOG Reference-Based EEG Cleaning

This protocol outlines a methodology for using an EOG reference channel to clean a contaminated EEG signal, suitable for validating adaptive filtering algorithms [34] [15].

Objective: To evaluate the performance of a deep learning model (CLEnet) in removing mixed EOG and EMG artifacts from multi-channel EEG data.

Setup:

- Data Acquisition: Use a 32-channel EEG system. For the EOG reference, place electrodes in a bipolar configuration at the outer canthi of both eyes (horizontal EOG) and above and below one eye (vertical EOG). Ensure the BIAS (ground) electrode is properly placed [37].

- Dataset Creation:

- Semi-synthetic Data: Artificially mix clean, artifact-free EEG segments with recorded EOG and EMG signals at known Signal-to-Noise Ratios (SNRs) to create a ground-truth dataset [34].

- Real Task Data: Collect EEG data from subjects performing a task known to induce artifacts, such as a 2-back working memory task, which can involve eye movements and muscle activity [34].
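For the semi-synthetic data, the artifact is usually scaled by a factor λ before it is added to clean EEG, so that the mixture reaches a chosen SNR. A minimal sketch, assuming SNR is defined as 20·log10 of the RMS ratio between clean EEG and scaled artifact (a power ratio in dB); some benchmarks use a different convention, so match the definition of the dataset you compare against. The signals here are simulated.

```python
import math
import random


def rms(v):
    return math.sqrt(sum(x * x for x in v) / len(v))


def mix_at_snr(clean, artifact, snr_db):
    """Return (clean + lam * artifact, lam) such that
    20*log10(RMS(clean) / RMS(lam * artifact)) == snr_db."""
    lam = rms(clean) / (rms(artifact) * 10 ** (snr_db / 20))
    return [c + lam * a for c, a in zip(clean, artifact)], lam


random.seed(4)
clean = [math.sin(2 * math.pi * 10 * t / 256) for t in range(512)]
artifact = [random.gauss(0.0, 3.0) for _ in range(512)]
contaminated, lam = mix_at_snr(clean, artifact, snr_db=-3.0)
achieved = 20 * math.log10(rms(clean) / rms([lam * a for a in artifact]))
```

Sweeping `snr_db` over a range produces a ground-truth dataset in which every contaminated epoch has a known clean counterpart.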

Procedure:

- Preprocessing: Apply a bandpass filter (e.g., 0.5 - 45 Hz) and a 50/60 Hz notch filter to all data (EEG and EOG channels) [37].

- Model Training: Train the CLEnet model on the semi-synthetic dataset in a supervised manner. The model uses a dual-branch architecture combining Convolutional Neural Networks (CNN) and Long Short-Term Memory (LSTM) networks to extract morphological and temporal features, respectively [34].

- Validation: Evaluate the model's performance on both the semi-synthetic dataset and the real 32-channel task data. For validation, use the clean EEG from the semi-synthetic data and, from the real data, segments manually identified as artifact-free [34].

Performance Evaluation: Calculate the following metrics by comparing the model's output to the ground-truth clean signal [34]:

- Signal-to-Noise Ratio (SNR) - Higher is better.

- Correlation Coefficient (CC) - Higher is better (closer to 1).

- Relative Root Mean Square Error (RRMSE) - Lower is better.

Troubleshooting Guides & FAQs

Frequently Asked Questions

Q1: My hybrid adaptive filter model converges slowly when removing EOG artifacts from single-channel EEG. What could be the issue? A: Slow convergence often stems from an improperly tuned step size parameter (μ) in the Least Mean Squares (LMS) component of your algorithm [11]. For EOG artifacts, which are low-frequency and high-amplitude, ensure your reference signal is well-correlated with the artifact. Consider integrating a Fixed Frequency Empirical Wavelet Transform (FF-EWT) for initial artifact isolation, which can provide a cleaner reference for the adaptive filter, thereby improving convergence speed [15].

Q2: After applying a WPTEMD hybrid approach, I notice residual high-frequency muscle artifacts in my pervasive EEG data. How can I address this? A: The Wavelet Packet Transform followed by Empirical Mode Decomposition (WPTEMD) is particularly effective for various motion artifacts, but may require parameter tuning for specific EMG noise [39]. You can:

- Reassess the thresholding criteria for the Wavelet Packet Transform to target higher-frequency components.

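- Incorporate a subsequent adaptive filtering stage, such as a Normalized LMS filter, which is more stable than standard LMS and can further reduce high-frequency residuals, provided its reference input is correlated with the residual muscle activity (e.g., an EMG channel) [40] [11].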

Q3: When using a hybrid deep learning model like CLEnet for multi-channel EEG, the model struggles with "unknown" artifacts not seen during training. What strategies can help? A: Generalizing to unseen artifacts is a known challenge. You can improve model robustness by:

- Data Augmentation: Artificially corrupt clean EEG segments with a wide variety of synthetic artifacts (e.g., EMG, EOG, motion) during training to expose the model to more noise types [7].

- Hybrid Preprocessing: Use a front-end adaptive filter or a blind source separation method like ICA to pre-clean the data, reducing the burden on the deep learning model to handle all artifact types from raw data [41] [39].

Q4: In a real-time BCI application, my adaptive filter causes distortion in the cleaned EEG signal. How can I minimize this? A: Signal distortion typically occurs if the adaptive filter is over-fitting or the step size is too large [11].

- Algorithm Choice: Switch from the simpler LMS algorithm to a Recursive Least Squares (RLS) algorithm, which provides faster convergence and better stability at a higher computational cost (on the order of L² rather than L operations per sample for a filter of length L) [40] [11].

- Parameter Tuning: Carefully reduce the step size (μ) and monitor the Mean Square Error (MSE). A smaller step size reduces misadjustment and distortion but may slow convergence, requiring a careful balance [11].

Troubleshooting Common Experimental Issues

Issue 1: Poor Performance of ICA in Low-Density, Pervasive EEG Systems

- Problem: Independent Component Analysis (ICA) performance degrades significantly when the number of EEG channels is low (e.g., < 20), as is common in wireless systems, because it lacks sufficient channels to separate sources effectively [39].

- Solution: Implement a hybrid approach where another technique performs initial artifact suppression.

- Protocol: First, apply a Wavelet Packet Transform (WPT) to decompose the signal from each channel. Identify and remove components correlating with known artifact signatures (e.g., high power in high-frequency bands for EMG). Reconstruct the signal and then apply ICA. This pre-processing provides a cleaner input for ICA, improving its ability to isolate remaining artifacts [39].

Issue 2: Ineffective Removal of Ocular Blink Artifacts with Fixed Filters

- Problem: Traditional band-pass filters fail to remove ocular blink artifacts effectively because the artifact's frequency band (3–15 Hz) overlaps with crucial neural signals in the theta and alpha bands [41].

- Solution: Use a hybrid regression-adaptive filtering method.

- Protocol:

- Reference Signal: Obtain an EOG channel or use a frontal EEG channel as a reference for the ocular blink artifact [41].

- Regression: Use a time-domain regression (e.g., the Gratton and Coles algorithm) to estimate the artifact's contribution to each EEG channel and subtract it [41].

- Adaptive Refinement: Apply an adaptive filter (e.g., LMS) with the same reference signal to further clean any residual, non-stationary artifact components that the linear regression missed [41].
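The regression step can be sketched as a single least-squares propagation coefficient per channel. This is a simplified version: the Gratton and Coles procedure as usually described also estimates separate coefficients for blinks and saccades and removes the event-related average before fitting, which this sketch omits. The residual returned here is what the adaptive-refinement stage would then receive; the example signals are constructed so the answer is known.

```python
def regress_out_eog(eeg, eog):
    """Remove b * eog from eeg, with b the least-squares propagation coefficient."""
    n = len(eeg)
    m_eeg, m_eog = sum(eeg) / n, sum(eog) / n
    cov = sum((x - m_eeg) * (y - m_eog) for x, y in zip(eeg, eog))
    var = sum((y - m_eog) ** 2 for y in eog)
    b = cov / var
    return [x - b * y for x, y in zip(eeg, eog)], b


eog = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
neural = [1.0, -1.0, -1.0, 1.0, 1.0, -1.0, -1.0, 1.0]   # uncorrelated with eog
eeg = [x + 0.7 * y for x, y in zip(neural, eog)]
cleaned, b = regress_out_eog(eeg, eog)
```

Because `neural` is exactly uncorrelated with `eog`, the fit recovers b = 0.7 and the residual equals the neural signal; with real data, any neural activity correlated with the EOG channel is removed as well, which is the known limitation of regression.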

Experimental Protocols & Performance Data

Detailed Methodology: The WPTEMD Hybrid Approach

This protocol is designed for removing motion artifacts from pervasive EEG data without prior knowledge of artifact characteristics [39].

- Signal Decomposition: Apply a Wavelet Packet Transform (WPT) to each EEG channel. This decomposes the signal into a complete tree of frequency sub-bands, providing a fine-grained time-frequency representation.

- Artifact Component Identification: For each node in the WPT tree, calculate a statistical metric (e.g., kurtosis or entropy). Artifact-contaminated components often exhibit statistically abnormal values. Set a threshold to automatically identify these components.

- Initial Reconstruction: Set the coefficients of the identified artifact components to zero, or attenuate them, and reconstruct a preliminary "cleaned" signal using the inverse WPT.

- Further Decomposition: Subject this preliminarily cleaned signal to Empirical Mode Decomposition (EMD). EMD adaptively decomposes the signal into Intrinsic Mode Functions (IMFs).

- Final Artifact Removal: Analyze the IMFs using similar statistical criteria as in Step 2. Identify and remove any residual artifact-dominant IMFs.

- Signal Reconstruction: Reconstruct the final artifact-free EEG signal from the remaining clean IMFs.
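The artifact-component identification step (and the analogous IMF screening) can be sketched with a kurtosis threshold. The WPT and EMD decompositions themselves are not shown (libraries such as PyWavelets provide wavelet packet transforms); the component lists and the threshold of 5.0 below are hypothetical placeholders, not values from [39].

```python
import math


def kurtosis(v):
    """Pearson (non-excess) kurtosis: about 3 for Gaussian data, 1.5 for a sinusoid."""
    n = len(v)
    m = sum(v) / n
    m2 = sum((x - m) ** 2 for x in v) / n
    m4 = sum((x - m) ** 4 for x in v) / n
    return m4 / (m2 * m2) if m2 > 0 else 0.0


def flag_artifact_components(components, threshold=5.0):
    """Indices of components whose kurtosis exceeds a (hypothetical) threshold."""
    return [i for i, c in enumerate(components) if kurtosis(c) > threshold]


rhythm = [math.sin(2 * math.pi * t / 20) for t in range(200)]   # 10 full cycles
spike = [0.0] * 199 + [10.0]                                      # isolated transient
flagged = flag_artifact_components([rhythm, spike])
```

Flagged components would then be zeroed or attenuated before the inverse transform, as in the initial-reconstruction step.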

Detailed Methodology: The FF-EWT with Adaptive Filtering

This protocol is optimized for automated removal of EOG artifacts from single-channel EEG [15].

- Fixed Frequency Decomposition: Use Fixed Frequency Empirical Wavelet Transform (FF-EWT) to decompose the single-channel EEG signal into six Intrinsic Mode Functions (IMFs). The FF-EWT adaptively creates wavelets based on the signal's Fourier spectrum, focusing on fixed frequency ranges associated with EOG artifacts.

- Automated Identification: Calculate features like kurtosis (KS), dispersion entropy (DisEn), and power spectral density (PSD) for each IMF. Use a pre-defined feature threshold to automatically identify the IMFs that are correlated with EOG artifacts.

- Filtering: Pass the identified EOG-related IMFs through a finely tuned Generalized Moreau Envelope Total Variation (GMETV) filter, which suppresses the artifact while preserving the sharp features of the underlying neural signal.

- Adaptive Enhancement (Extension): To handle non-stationary residuals, the cleaned IMFs can be further processed by an adaptive filter (e.g., a sign-error LMS filter) configured for noise cancellation. The reference input should be correlated with the artifact but not with the neural signal, such as the EOG-related IMFs identified earlier or a recorded EOG channel; using the raw contaminated signal as the reference would also cancel genuine EEG activity.

- Final Reconstruction: Reconstruct the artifact-free EEG signal from the processed IMFs and the untouched, clean IMFs.
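
A sign-error LMS filter replaces the error term in the LMS weight update with its sign, which lowers arithmetic cost and makes each update independent of the error magnitude (and therefore less sensitive to large error spikes). The sketch below assumes an artifact-only reference (for example, the EOG-related IMFs or a recorded EOG channel) and treats the reconstructed EEG as the primary input; the function name, tap count and step size are hypothetical.

```python
import numpy as np


def sign_error_lms(primary, reference, n_taps=4, mu=0.002):
    """Sign-error LMS adaptive noise canceller (illustrative sketch).

    primary   : signal to clean, e.g. reconstructed EEG with residual EOG
    reference : artifact-only reference (assumption), same length as primary
    Returns the error signal (the cleaned output) and the final weights.
    """
    primary = np.asarray(primary, dtype=float)
    reference = np.asarray(reference, dtype=float)
    w = np.zeros(n_taps)
    buf = np.zeros(n_taps)          # tap-delay line, newest sample first
    out = np.empty_like(primary)
    for k in range(len(primary)):
        buf = np.roll(buf, 1)
        buf[0] = reference[k]
        e = primary[k] - w @ buf    # primary minus the estimated artifact
        w += mu * np.sign(e) * buf  # only the sign of the error drives the update
        out[k] = e
    return out, w
```

Compared with standard LMS, convergence is slower for the same `mu`, which is the usual price for the simpler, outlier-robust update.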

Quantitative Performance Comparison of Hybrid Methods

Table 1: Performance of Hybrid Methods on Semi-Synthetic EEG Data

| Hybrid Method | Artifact Type | Key Performance Metrics | Reported Results |
|---|---|---|---|
| WPTEMD [39] | General Motion Artifacts | Root Mean Square Error (RMSE) | Outperformed other methods by 51.88% in signal recovery accuracy. |
| FF-EWT + GMETV [15] | EOG | Correlation Coefficient (CC), Relative RMSE (RRMSE) | Higher CC and lower RRMSE on synthetic data compared to other single-channel methods. |
| CLEnet (CNN + LSTM + EMA-1D) [7] | Mixed (EMG + EOG) | Signal-to-Noise Ratio (SNR), Correlation Coefficient (CC) | SNR: 11.498 dB; CC: 0.925 |

Table 2: Comparison of Underlying Adaptive Filter Algorithms

| Algorithm | Convergence Speed | Computational Complexity (M taps) | Stability | Best Use Case in Hybrid Models |
|---|---|---|---|---|
| LMS [40] [11] | Slow | Low, O(M) per sample | Sensitive to step size and input statistics | Pre-processing stage where low complexity is critical. |
| Normalized LMS [40] | Faster than LMS | Low, O(M) per sample plus an input-power normalization | More stable than LMS; the step size is normalized by the input power | Real-time systems with varying input signal power. |
| RLS [40] [11] | Fast | High, O(M²) per sample | Converges reliably, but the conventional form can become numerically unstable in finite-precision arithmetic | Final denoising stages where convergence speed and accuracy are paramount. |
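
The trade-offs in Table 2 follow directly from the update rules. The sketch below implements textbook NLMS and exponentially weighted RLS in NumPy for a generic setting with desired signal `d` and input `x`; the function names and parameter values (`mu`, `lam`, `delta`) are illustrative assumptions. Note the O(M) vector operations in NLMS versus the O(M²) update of the matrix `P` in RLS.

```python
import numpy as np


def _tap_vector(x, k, m):
    """[x[k], x[k-1], ..., x[k-m+1]] with zeros before the start of x."""
    seg = x[max(0, k - m + 1):k + 1][::-1]
    return np.pad(seg, (0, m - len(seg)))


def nlms(d, x, m=4, mu=0.5, eps=1e-6):
    """Normalized LMS: the step is divided by the instantaneous input power."""
    d, x = np.asarray(d, dtype=float), np.asarray(x, dtype=float)
    w, e = np.zeros(m), np.zeros(len(d))
    for k in range(len(d)):
        u = _tap_vector(x, k, m)
        e[k] = d[k] - w @ u
        w += mu * e[k] * u / (eps + u @ u)   # O(m) per sample
    return e, w


def rls(d, x, m=4, lam=0.99, delta=100.0):
    """Exponentially weighted RLS with forgetting factor lam.

    P approximates the inverse input correlation matrix; it is initialised
    to delta * I with a large delta (a common, assumed choice).
    """
    d, x = np.asarray(d, dtype=float), np.asarray(x, dtype=float)
    w, e = np.zeros(m), np.zeros(len(d))
    P = delta * np.eye(m)
    for k in range(len(d)):
        u = _tap_vector(x, k, m)
        pi = P @ u
        g = pi / (lam + u @ pi)              # gain vector
        e[k] = d[k] - w @ u                  # a priori error
        w += g * e[k]
        P = (P - np.outer(g, pi)) / lam      # O(m^2) per sample
    return e, w
```

RLS generally converges in fewer samples than NLMS, at the cost of the m-by-m update of `P` on every sample; that update is also where finite-precision problems tend to appear in long recordings.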

The Scientist's Toolkit

Table 3: Essential Research Reagents & Computational Tools

| Item Name | Function / Explanation | Example Application / Note |
|---|---|---|
| EEGdenoiseNet [7] | A benchmark dataset containing semi-synthetic EEG signals contaminated with EMG and EOG artifacts. | Used for training and fair comparison of artifact removal algorithms [7]. |
| Fixed Frequency EWT (FF-EWT) [15] | A signal decomposition technique that creates adaptive wavelets to isolate components in fixed frequency bands. | Highly effective for initial separation of EOG artifacts in single-channel EEG [15]. |
| Generalized Moreau Envelope TV (GMETV) Filter [15] | A filtering technique optimized to suppress artifacts while preserving signal sharpness and edges. | Used post-decomposition to clean artifact-laden components without distorting neural data [15]. |
| Wavelet Packet Transform (WPT) [39] | A generalization of DWT that decomposes both the approximation and detail coefficients, providing a rich time-frequency dictionary. | Serves as the first stage in hybrid models to identify artifact components across a full range of frequencies [39]. |
| Efficient Multi-Scale Attention (EMA-1D) [7] | A 1D attention mechanism that captures cross-dimensional interactions and multi-scale features. | Integrated into deep learning models (e.g., CLEnet) to enhance feature extraction without disrupting temporal information [7]. |

Workflow Visualization

[Figure: Hybrid Artifact Removal Workflow]

[Figure: Adaptive Filter Core Algorithm]

Frequently Asked Questions (FAQs)

FAQ 1: What are the main advantages of using a hybrid CNN-LSTM model over traditional methods for EEG artifact removal?

Hybrid CNN-LSTM models address several limitations of traditional methods such as Independent Component Analysis (ICA) and regression. Those methods often require manual intervention or reference channels, and they can struggle with artifact types that were not anticipated; deep learning approaches instead offer automated, end-to-end artifact removal. The CNN layers extract spatial and morphological features from the EEG signal, while the LSTM layers capture long-term temporal dependencies, which are crucial for reconstructing clean brain activity patterns [7] [3]. This combination allows the model to learn to remove various artifacts without necessarily requiring pre-defined reference signals, although some variants still use an auxiliary reference, such as a simultaneously recorded EMG signal [3].

FAQ 2: Can a single CNN-LSTM model effectively remove different types of artifacts, such as EMG and EOG?

Yes, this is a key area of advancement. Earlier deep learning models were often tailored to a specific artifact type, but newer architectures are designed to handle multiple artifacts simultaneously. For instance, the CLEnet model has demonstrated effectiveness in removing mixed artifacts (EMG + EOG) from multi-channel EEG data. Furthermore, frameworks like A²DM incorporate an "artifact-aware module" that first identifies the type of artifact present and then applies a targeted denoising strategy, enabling a unified model to handle the heterogeneous distributions of different artifacts in the time-frequency domain [7] [4].

FAQ 3: How is the performance of a deep learning-based artifact removal model quantitatively evaluated?

Performance is typically measured using a suite of metrics that compare the denoised signal to a ground-truth, clean EEG signal. Common metrics include:

- SNR (Signal-to-Noise Ratio) and CC (Correlation Coefficient): Higher values indicate better performance [7] [33].

- RRMSE (Relative Root Mean Square Error): Measured in both temporal (RRMSEt) and frequency (RRMSEf) domains, with lower values being desirable [7].

These metrics assess the model's success in removing noise while preserving the underlying neural information.
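
The metrics above can be computed as shown below. Definitions vary between papers, so treat these as one common convention rather than the exact formulas used in [7]: SNR as clean-signal power over residual-error power in dB, CC as the Pearson correlation, RRMSEt as the RMS error relative to the RMS of the clean signal, and RRMSEf as the same ratio computed on power spectra. The plain FFT periodogram used for the spectra is an assumption; Welch estimates are also common.

```python
import numpy as np


def rms(v):
    return np.sqrt(np.mean(np.square(v)))


def snr_db(clean, denoised):
    """Clean-signal power over residual-error power, in dB."""
    clean, denoised = np.asarray(clean, dtype=float), np.asarray(denoised, dtype=float)
    return 10 * np.log10(np.sum(clean ** 2) / np.sum((clean - denoised) ** 2))


def cc(clean, denoised):
    """Pearson correlation coefficient between clean and denoised signals."""
    return np.corrcoef(clean, denoised)[0, 1]


def rrmse_t(clean, denoised):
    """Temporal relative RMSE."""
    return rms(np.asarray(denoised, dtype=float) - np.asarray(clean, dtype=float)) / rms(clean)


def psd(v):
    """Plain periodogram via the real FFT (assumed spectral estimator)."""
    v = np.asarray(v, dtype=float)
    return np.abs(np.fft.rfft(v)) ** 2 / len(v)


def rrmse_f(clean, denoised):
    """Spectral relative RMSE: the same ratio, computed on power spectra."""
    return rms(psd(denoised) - psd(clean)) / rms(psd(clean))
```

For example, a denoised output that matches the clean signal except for a constant offset of 0.1 on a unit-amplitude square wave gives SNR = 20 dB, CC = 1 and RRMSEt = 0.1, which shows why CC alone cannot detect amplitude or offset errors.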

Troubleshooting Guide

Problem 1: Model performance is poor on real-world, multi-channel EEG data with unknown artifacts.

- Potential Cause: The model was trained only on semi-synthetic datasets containing specific, known artifacts (like pure EMG or EOG) and has not learned the features of other contaminations or the inter-channel correlations present in multi-channel data.

- Solution:

  - Utilize or create a multi-channel dataset with real artifacts for training and validation, similar to the 32-channel dataset collected for CLEnet [7].

  - Incorporate an attention mechanism, such as the improved EMA-1D used in CLEnet, which helps the model focus on relevant features across different scales and channels, enhancing its ability to handle complex, real-world data [7].

  - Consider data augmentation strategies for EEG and artifact signals to expand the diversity of your training data, making the model more robust [3].
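
One simple augmentation strategy, assumed here for illustration rather than taken from [3], is to re-mix clean EEG segments with randomly chosen, randomly time-shifted artifact segments at random SNRs. The scale factor λ is chosen so that the clean-to-artifact power ratio equals the target SNR. The function names and the default SNR range are hypothetical.

```python
import numpy as np


def mix_at_snr(clean, artifact, snr_db):
    """Return clean + lam * artifact, with lam set so the power ratio equals snr_db."""
    clean = np.asarray(clean, dtype=float)
    artifact = np.asarray(artifact, dtype=float)
    p_clean = np.mean(clean ** 2)
    p_art = np.mean(artifact ** 2)
    # SNR_dB = 10*log10(p_clean / (lam**2 * p_art))  =>  solve for lam
    lam = np.sqrt(p_clean / (p_art * 10 ** (snr_db / 10)))
    return clean + lam * artifact, lam


def augment(clean_segments, artifact_segments, n_out, snr_range=(-7.0, 2.0), seed=0):
    """Create n_out (noisy, clean) training pairs by random re-mixing (hypothetical scheme)."""
    rng = np.random.default_rng(seed)
    noisy, targets = [], []
    for _ in range(n_out):
        x = clean_segments[rng.integers(len(clean_segments))]
        a = artifact_segments[rng.integers(len(artifact_segments))]
        a = np.roll(a, rng.integers(len(a)))          # random circular time shift
        y, _ = mix_at_snr(x, a, rng.uniform(*snr_range))
        noisy.append(y)
        targets.append(np.asarray(x, dtype=float))
    return np.stack(noisy), np.stack(targets)
```

Each call with a different seed yields new pairings, shifts and SNRs from the same pool of recordings; a circular shift is the simplest choice but can introduce a discontinuity at the wrap point.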

Problem 2: The denoising process inadvertently removes or damages genuine neural signals.

- Potential Cause: Overly aggressive filtering or a model architecture that is not sufficiently tuned to preserve the temporal dynamics of EEG signals.

- Solution:

  - Implement a time-domain compensation module (TCM), as seen in the A²DM framework, to recover potential losses of global neural information after frequency-domain filtering [4].

  - Adopt a targeted cleaning approach. Instead of subtracting entire components, focus removal on the specific time periods or frequency bands where the artifact is dominant. This method has been shown to reduce artificial inflation of effect sizes and minimize biases in source localization [27].

  - Use a loss function during training that specifically penalizes distortion of the clean EEG components, encouraging the denoising (generator) network to preserve neural information [33].
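
A loss that penalizes spectral distortion in addition to time-domain error is one way to discourage a model from removing genuine neural rhythms. The NumPy function below is a hypothetical example of such a composite loss (time-domain MSE plus MSE between log-magnitude spectra, weighted by an assumed parameter `alpha`); it is not the loss used in [33]. In practice it would be written in the training framework so that gradients can flow through it.

```python
import numpy as np


def temporal_spectral_loss(clean, denoised, alpha=0.5):
    """Hypothetical composite loss: time-domain MSE + alpha * log-spectral MSE."""
    clean = np.asarray(clean, dtype=float)
    denoised = np.asarray(denoised, dtype=float)
    # Time-domain fidelity term.
    time_term = np.mean((denoised - clean) ** 2)
    # Spectral term: log1p compresses large low-frequency peaks so that weaker
    # rhythms (e.g. beta activity) still contribute to the penalty.
    spec_c = np.log1p(np.abs(np.fft.rfft(clean, axis=-1)))
    spec_d = np.log1p(np.abs(np.fft.rfft(denoised, axis=-1)))
    freq_term = np.mean((spec_d - spec_c) ** 2)
    return time_term + alpha * freq_term
```

An over-smoothed output that drops a higher-frequency rhythm is penalized by both terms, whereas a pure MSE loss would weight that loss only by its small share of total signal power.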

Problem 3: Training is unstable, or the model fails to converge when using a GAN-based framework.

- Potential Cause: GANs are known for training instability due to the adversarial competition between the generator and discriminator networks.

- Solution:

  - Use advanced loss functions. Incorporate temporal-spatial-frequency constraints or Wasserstein distance to stabilize training and improve reconstruction quality [33].

  - Design a robust discriminator. A common approach is to use a 1D convolutional neural network (CNN) as the discriminator to effectively judge the quality of the generated (denoised) EEG signal [33].

Experimental Protocols & Quantitative Performance

The following table summarizes the architectures and key quantitative results reported in recent studies of deep learning-based EEG artifact removal.

Table 1: Performance Comparison of Deep Learning Models for EEG Artifact Removal

| Model Name | Architecture | Key Innovation | Artifact Types | Reported Performance (Best) |
|---|---|---|---|---|
| CLEnet [7] | Dual-scale CNN + LSTM + improved EMA-1D attention | Fuses morphological and temporal feature extraction; handles multi-channel EEG. | EMG, EOG, Mixed (EMG+EOG), ECG | Mixed Artifact Removal: SNR: 11.498 dB, CC: 0.925, RRMSEt: 0.300 [7] |
| A²DM [4] | CNN-based with Artifact-Aware Module (AAM) & Frequency Enhancement Module (FEM) | Uses artifact representation as prior knowledge for targeted, type-specific removal. | EOG, EMG | Unified Denoising: 12% improvement in CC over a benchmark NovelCNN model [4] |
| Hybrid CNN-LSTM with EMG [3] | CNN-LSTM using additional EMG reference signals | Leverages simultaneous EMG recording as a precise noise reference for cleaning SSVEP-based EEG. | Muscle Artifacts (from jaw clenching) | Effective removal while preserving SSVEP responses; quality assessed via SNR increase [3] |
| ART [42] | Transformer | Captures transient, millisecond-scale dynamics of EEG; end-to-end multichannel denoising. | Multiple artifacts | Outperformed other deep learning models in restoring multichannel EEG and improving BCI performance [42] |
| AnEEG [33] | LSTM-based GAN | Adversarial training to generate artifact-free EEG signals. | Various artifacts | Achieved lower NMSE/RMSE and higher CC, SNR, and SAR compared to wavelet-based techniques [33] |

Detailed Experimental Protocol: CLEnet

A typical state-of-the-art protocol, as used by CLEnet, involves a three-stage, supervised learning process [7]:

- Morphological Feature Extraction & Temporal Feature Enhancement: The contaminated EEG input is processed through two convolutional kernels of different scales to extract features at different resolutions. An improved EMA-1D (One-Dimensional Efficient Multi-Scale Attention) mechanism is embedded within the CNN to enhance the extraction of genuine EEG morphological features while preserving temporal information.

- Temporal Feature Extraction: The features from the first stage are passed through fully connected layers for dimensionality reduction. The refined features are then fed into an LSTM network to model the long-term temporal dependencies of the brain's electrical activity.

- EEG Reconstruction: The enhanced features from the LSTM are flattened and processed by fully connected layers to reconstruct the final, artifact-free EEG signal. The model is trained in an end-to-end manner using Mean Squared Error (MSE) as the loss function.

Model Architecture

The following diagram illustrates the core dataflow and logical structure of a hybrid CNN-LSTM model for EEG artifact removal, synthesizing elements from the cited architectures.

[Figure: CNN-LSTM EEG Denoising]

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials and Computational Tools for EEG Artifact Removal Research

| Item / Resource | Function / Description | Example from Literature |
|---|---|---|
| EEGdenoiseNet Dataset [7] | A semi-synthetic benchmark dataset containing clean EEG segments and recorded EOG/EMG artifacts, allowing for controlled model training and evaluation. | Used for training and benchmarking models like CLEnet and A²DM [7] [4]. |
| Custom Multi-channel EEG Dataset | A dataset of real EEG recordings with genuine artifacts, essential for validating model performance on complex, real-world data beyond semi-synthetic benchmarks. | CLEnet researchers collected a 32-channel EEG dataset from subjects performing a 2-back task for this purpose [7]. |
| Independent Component Analysis (ICA) | A blind source separation method used not for direct cleaning, but for generating pseudo clean-noisy data pairs to facilitate supervised training of deep learning models. | Used in the training pipeline for the ART (Artifact Removal Transformer) model [42]. |
| Improved EMA-1D Module | A one-dimensional efficient multi-scale attention mechanism that helps the model focus on relevant features across different scales, improving feature extraction without disrupting temporal information. | A core component integrated into the CNN branch of the CLEnet architecture [7]. |