Reducing Computational Complexity with NPDOA: A Brain-Inspired Strategy for Pharmaceutical Research

Abstract

This article explores the Neural Population Dynamics Optimization Algorithm (NPDOA) as a novel, brain-inspired method for reducing computational complexity in pharmaceutical research and development. It introduces NPDOA's three core strategies (attractor trending, coupling disturbance, and information projection) and explains how they balance exploration and exploitation to avoid premature convergence to local optima. The article then details methodological applications for optimizing drug discovery tasks, addresses common troubleshooting and optimization challenges, and presents a comparative validation against state-of-the-art metaheuristic algorithms. Aimed at researchers, scientists, and drug development professionals, it combines theoretical insights with practical applications to accelerate R&D timelines and improve the efficiency of solving complex optimization problems, from clinical trial design to new product development.

Understanding NPDOA: A Brain-Inspired Framework for Tackling Computational Complexity

Troubleshooting Guides

How can I improve the accuracy of my computational predictions when my dataset is limited or biased?

Problem: Predictive models are producing unreliable results, likely due to incomplete, biased, or low-quality training data.

Solution: Implement robust data curation and augmentation strategies.

- Action 1: Enhance Data Curation: Prioritize the development of high-quality, representative datasets. Employ better data preprocessing techniques and standardize data formats to improve consistency [1].

- Action 2: Apply Data Augmentation: Use techniques like transfer learning to enhance model robustness. Machine learning algorithms can also be employed to intelligently fill in missing data points [1].

- Action 3: Validate Experimentally: Remember that computational predictions can yield both false positive and false negative findings. All predictions must be validated through experimental assays before any conclusions are drawn [2].

My virtual screening of ultra-large libraries is computationally prohibitive. How can I make it more efficient?

Problem: Docking or screening billions of compounds demands immense computational resources, slowing down research, especially for institutions with limited access to High-Performance Computing (HPC).

Solution: Utilize advanced screening architectures and cloud computing.

- Action 1: Leverage Iterative Screening: Employ fast iterative screening approaches, such as molecular pool-based active learning, which combines deep learning and docking to accelerate the screening of gigascale chemical spaces [3].

- Action 2: Adopt Synthon-based Methods: Use methods like V-SYNTHES, which employs a modular synthesis-based concept to efficiently screen ultra-large virtual libraries [3].

- Action 3: Utilize Cloud Computing: Platforms like AWS and Google Cloud offer scalable, on-demand access to high-performance computational resources, democratizing access without the need for expensive local infrastructure [1].
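The iterative screening idea in Action 1 can be sketched as a small loop: dock a random subset, fit a cheap surrogate model on the results, and spend the next docking budget only on the compounds the surrogate ranks best. The code below is a minimal illustration under stated assumptions, not the method of [3]: `mock_docking_score` is a hypothetical stand-in for a real docking run, compounds are random descriptor vectors, and the surrogate is a simple ridge regression rather than a deep network.

```python
import numpy as np

def mock_docking_score(x):
    """Hypothetical stand-in for an expensive docking run (lower is better)."""
    return np.sum((x - 0.3) ** 2, axis=-1)

def fit_ridge(X, y, lam=1e-3):
    """Closed-form ridge regression on [1, x, x^2] features; returns a predictor."""
    def features(A):
        return np.hstack([np.ones((len(A), 1)), A, A ** 2])
    F = features(X)
    w = np.linalg.solve(F.T @ F + lam * np.eye(F.shape[1]), F.T @ y)
    return lambda A: features(A) @ w

def active_learning_screen(library, n_init=50, batch=50, rounds=4, seed=0):
    """Dock only n_init + rounds * batch compounds instead of the whole library."""
    rng = np.random.default_rng(seed)
    scored = {}  # compound index -> docking score
    for i in rng.choice(len(library), size=n_init, replace=False):
        scored[int(i)] = float(mock_docking_score(library[i]))
    for _ in range(rounds):
        keys = list(scored)
        model = fit_ridge(library[keys], np.array([scored[k] for k in keys]))
        predicted = model(library)
        predicted[keys] = np.inf  # never re-dock a compound
        for i in np.argsort(predicted)[:batch]:
            scored[int(i)] = float(mock_docking_score(library[i]))
    return scored

# Toy library: 2,000 compounds described by 8 made-up descriptors.
library = np.random.default_rng(1).random((2000, 8))
scored = active_learning_screen(library)
print(f"docked {len(scored)} of {len(library)} compounds; best score {min(scored.values()):.4f}")
```

In this toy setting only 250 of 2,000 compounds are docked. With a real docking engine and a realistic surrogate, the fraction that must be docked and the quality of the recovered hits depend on how well the surrogate generalizes.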

How do I account for biological complexity, like off-target effects, in my computational models?

Problem: Simplified models fail to predict a drug candidate's efficacy or safety in real-world biological systems, leading to late-stage failures.

Solution: Integrate multi-level biological data and evolutionary principles.

- Action 1: Integrate Multi-Omics Data: Create more detailed models by incorporating data from genomics, proteomics, and pharmacogenomics. This provides a holistic view of the biological system [1].

- Action 2: Predict Resistance Early: Incorporate principles from evolutionary biology into models to simulate different mutation scenarios. This helps design drugs that are less susceptible to resistance from bacteria, viruses, or cancer cells [1].

- Action 3: Employ Systems Biology Approaches: Analyze and integrate data acquired from patients or animal models at different levels of biological organization (molecular, cellular, physiological) to build quantitative models of complex signaling pathways [2].

My team is facing communication barriers between computational and experimental scientists. How can we improve collaboration?

Problem: Interdisciplinary teams struggle with communicating needs and findings, leading to inefficiencies and delays.

Solution: Foster a collaborative culture and use integrative tools.

- Action 1: Establish Cross-Functional Teams: Include experts in computational biology, AI, and drug development from the very beginning of a project [1].

- Action 2: Encourage Cross-Training: Improve mutual understanding by encouraging team members to learn the basics of each other's disciplines [1].

- Action 3: Develop User-Friendly Platforms: Utilize tools that allow scientists from different fields to interact easily with complex models, facilitating better collaboration and interpretation of results [1].

The AI/ML tools we adopted have not delivered the expected results, leading to waning enthusiasm. What went wrong?

Problem: Overhyped AI tools failed to meet unrealistic expectations, leading to distrust and disengagement.

Solution: Manage expectations and focus on sustainable integration.

- Action 1: Set Realistic Goals: Understand that AI is a powerful tool for making predictions more efficient, but it is not a magic wand that will solve all problems. The output of a model is only as good as the input data [4].

- Action 2: Avoid Conservative Applications: Ensure that AI applications are not overly conservative and only replicating existing knowledge. The goal should be to gain novel, unexpected insights that would be difficult to conceive otherwise [4].

- Action 3: Communicate the Workflow Clearly: Bridge the gap between chemistry as a "creative process" and an "engineering process" through strategic communication. This helps in securing long-term buy-in from all stakeholders [4].

Frequently Asked Questions (FAQs)

FAQ 1: What are the primary factors contributing to the high failure rate of drugs in clinical development? Analyses of clinical trials show that failure is attributed to lack of clinical efficacy (40–50%), unmanageable toxicity (30%), poor drug-like properties (10–15%), and lack of commercial needs or poor strategic planning (10%) [5].

FAQ 2: Can computational methods really reduce the cost and time of drug discovery? Yes. Computational approaches can significantly reduce the number of compounds that need to be synthesized and tested experimentally. For example, virtual screening can achieve hit rates of up to 35%, compared to often less than 0.1% for traditional high-throughput screening, dramatically reducing costs and workload [6].

FAQ 3: What is the difference between structure-based and ligand-based computational drug design?

- Structure-based methods rely on the 3D structure of the target protein to calculate interaction energies, using techniques like molecular docking.

- Ligand-based methods are used when the target structure is unknown; they exploit the knowledge of known active and inactive molecules through similarity searches or Quantitative Structure-Activity Relationship (QSAR) models [6].

FAQ 4: What is the STAR principle and how can it improve drug optimization? STAR (Structure–Tissue exposure/selectivity–Activity Relationship) is a proposed framework that classifies drugs not just on potency and specificity, but also on their tissue exposure and selectivity. It aims to improve the selection of drug candidates by better balancing clinical dose, efficacy, and toxicity, potentially leading to a higher success rate in clinical development [5].

Experimental Protocols & Data

Key Performance Metrics for Computational Methods

The table below summarizes quantitative data from successful applications of computational drug discovery, highlighting the efficiency gains compared to traditional methods.

| Method / Study | Key Metric | Result | Comparative Traditional Method |
|---|---|---|---|
| Generative AI (DDR1 Kinase Inhibitors) [3] | Time to Identify Lead Candidate | 21 days | N/A (Novel approach) |
| Combined Physics & ML Screen (MALT1 Inhibitor) [3] | Compounds Synthesized to Find Clinical Candidate | 78 molecules | N/A (Screen of 8.2 billion compounds) |
| Virtual HTS (Tyrosine Phosphatase-1B) [6] | Hit Rate | ~35% (127 hits from 365 compounds) | 0.021% (85 hits from 400,000 compounds) |
| Ultra-large Library Docking [3] | Compound Potency Achieved | Subnanomolar hits for a GPCR | Demonstrates power of scale |
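As a quick check on the Virtual HTS row, the hit rate and the enrichment over traditional screening follow from simple arithmetic. The snippet below uses only the figures stated in the table; the helper name `hit_rate_percent` is introduced here for illustration.

```python
def hit_rate_percent(hits, tested):
    """Hit rate as a percentage of compounds tested."""
    return 100.0 * hits / tested

vhts_rate = hit_rate_percent(127, 365)   # virtual screen: 127 hits from 365 compounds
hts_rate_stated = 0.021                  # percent, traditional HTS rate as stated in the table
enrichment = vhts_rate / hts_rate_stated

print(f"vHTS hit rate: {vhts_rate:.1f}%")
print(f"Enrichment over traditional HTS: roughly {enrichment:.0f}-fold")
```

The virtual screen's hit rate works out to about 34.8%, which is the "~35%" quoted in the table.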

Detailed Protocol: Structure-Based Virtual Screening Workflow

This protocol outlines a standard workflow for filtering large compound libraries using structure-based docking, a core method for reducing experimental burden [6].

1. Objective: To identify a manageable set of predicted active compounds from a multi-million compound library for experimental testing against a specific protein target.

2. Materials and Software:

- Target Preparation: High-resolution 3D structure of the target protein (from X-ray crystallography, cryo-EM, or homology modeling) [3].

- Compound Library: A virtual chemical library (e.g., ZINC20, a free ultralarge-scale database) [3].

- Computational Tools: Docking software (e.g., AutoDock, GOLD, Schrödinger Suite), high-performance computing (HPC) cluster or cloud computing access (AWS, Google Cloud) [1] [3].

3. Methodology:

- Step 1: Protein Preparation. The protein structure is prepared by adding hydrogen atoms, assigning partial charges, and defining the binding site.

- Step 2: Ligand Library Preparation. The compound library is prepared by generating 3D conformations and optimizing the structures.

- Step 3: Virtual High-Throughput Screening (vHTS). Each compound in the library is computationally "docked" into the target's binding site. The interaction energy for each compound pose is calculated using a scoring function.

- Step 4: Post-Processing. The docked compounds are ranked based on their scoring function values. Top-ranked hits (e.g., top 500-1000) are visually inspected for sensible binding interactions.

- Step 5: Experimental Validation. The selected hits are procured or synthesized and tested in vitro for binding affinity and/or functional activity.

4. Troubleshooting Notes:

- Low Hit Rate: Consider refining the scoring function, using consensus scoring from multiple programs, or applying a pharmacophore filter post-docking.

- High Computational Demand: For libraries in the billions, implement an iterative filtering or active learning approach to prioritize a subset of compounds for full docking [3].
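The consensus scoring suggestion under "Low Hit Rate" can be illustrated with rank averaging, one common way to combine scores from programs that use different scales. This is a minimal sketch, assuming every program's score has already been converted so that lower means better; the score values are made up for illustration.

```python
import numpy as np

def consensus_rank(score_table):
    """Average per-program rank for each compound.

    score_table has shape (n_compounds, n_programs); lower score = better.
    """
    scores = np.asarray(score_table, dtype=float)
    ranks = scores.argsort(axis=0).argsort(axis=0)  # 0 = best within each program
    return ranks.mean(axis=1)

# Rows: four hypothetical compounds; columns: three hypothetical docking programs.
scores = np.array([
    [-9.10, -7.0, -8.2],
    [-9.05, -7.9, -8.8],
    [-6.00, -5.5, -6.1],
    [-9.00, -6.2, -7.0],
])
mean_rank = consensus_rank(scores)
order = np.argsort(mean_rank)  # compound indices, best consensus first
print(order, mean_rank)
```

Here the second compound ranks first overall even though the first program prefers a different one, which is the kind of disagreement consensus scoring is meant to smooth out.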

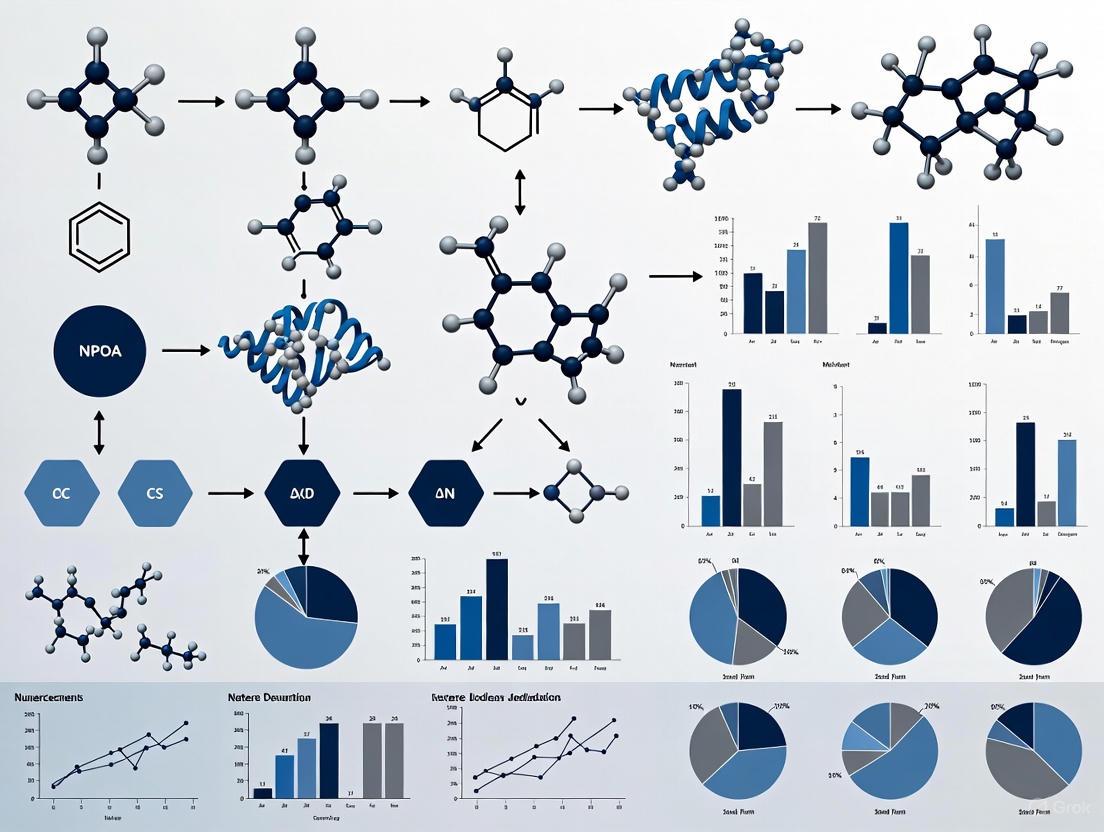

Visualization: Computational Drug Discovery Workflow

The following diagram illustrates the typical position and iterative nature of computational methods within the drug discovery pipeline, from initial screening to lead optimization [6].

The Scientist's Toolkit: Research Reagent Solutions

The table below details key computational resources and databases essential for conducting modern computational drug discovery research.

| Item Name | Function/Brief Explanation | Example/Provider |
|---|---|---|
| Ultra-large Chemical Libraries | On-demand virtual libraries of synthesizable, drug-like small molecules used for virtual screening. | ZINC20, Pfizer Global Virtual Library (PGVL) [3] |
| Protein Structure Databases | Repositories of experimentally determined 3D structures of biological macromolecules, crucial for structure-based design. | Protein Data Bank (PDB) [7] |
| Cloud Computing Platforms | Provide scalable, on-demand access to high-performance computing (HPC) resources, eliminating the need for local infrastructure. | AWS, Google Cloud [1] |
| Generative AI Models | Deep learning models (e.g., VAEs, GANs, Diffusion Models) used to create novel molecules with targeted properties from scratch. | [7] |
| ADMET Prediction Tools | In silico models that predict a compound's Absorption, Distribution, Metabolism, Excretion, and Toxicity properties early in the process. | [8] |
| Open-Source Drug Discovery Platforms | Software platforms that enable ultra-large virtual screens and provide tools for various computational methods. | [3] |

What is NPDOA? Core Principles from Theoretical Neuroscience

The Neural Population Dynamics Optimization Algorithm (NPDOA) is a metaheuristic optimization algorithm inspired by the neural population dynamics observed in the brain during cognitive tasks, particularly during the computation of expected values for economic decision-making [9]. It models how neural populations in regions like the central orbitofrontal cortex (cOFC) and ventral striatum (VS) integrate multiple inputs (such as probability and magnitude) to arrive at a single computed value [10] [9]. This bio-inspired approach is gaining attention for solving complex optimization problems in drug discovery, where it helps balance global exploration of the chemical space with local exploitation of promising candidate molecules [9].

Key Technical Specifications & Performance

The table below summarizes the core properties and documented performance of NPDOA.

Table 1: NPDOA Technical Specifications and Performance Profile

| Aspect | Specification / Performance |
|---|---|
| Inspiration Source | Neural population dynamics in primate cOFC and VS during expected value computation [10] [9] |
| Algorithm Category | Mathematics-based metaheuristic, swarm intelligence [9] |
| Core Mechanistic Structure | Extraction of population signals for integrative computation [10] |
| Primary Documented Application | Benchmarking against CEC 2017/2022 test suites; solving engineering design problems [9] |
| Key Advantage | Effective balance between exploration (global search) and exploitation (local search) [9] |

Frequently Asked Questions (FAQs) & Troubleshooting

Q1: My NPDOA implementation converges to a local optimum prematurely. How can I improve its global search capability?

- Diagnosis: This suggests an imbalance, with the "exploitation" phase overpowering the "exploration" phase. The algorithm is fine-tuning a sub-optimal solution instead of searching for a better one.

- Solution: Adjust the parameters that control the randomness and step sizes in the algorithm's search process. The original NPDOA proposal incorporates "random geometric transformations" to enhance search diversity [9]. Review and potentially amplify these stochastic elements to encourage a broader exploration of the solution space before converging.

Q2: How do I map the neural dynamics concepts to the actual computational steps in the NPDOA?

- Diagnosis: The biological metaphor needs to be translated into concrete mathematical operations.

- Solution: The core idea is that the algorithm simulates how neural populations integrate information. In NPDOA, the position of a candidate solution in the search space is updated based on a mathematical simulation of neural dynamics. This involves using the "gradient information of the current solution" for local refinement (exploitation), akin to how neural circuits refine a decision, combined with "random jumps" to simulate the exploration of new possibilities [9].

Q3: The algorithm is computationally expensive for my high-dimensional drug screening problem. Are there reduction techniques I can integrate?

- Diagnosis: In high-dimensional problems (such as searching vast molecular libraries), the size of the search space grows exponentially with the number of dimensions, and the computational load grows with it.

- Solution: The cited sources do not describe complexity reduction methods for NPDOA itself, but general principles for reducing the computational complexity of deep neural networks can be applied to adjacent steps in your workflow [11]. In addition, in silico drug discovery often employs preliminary filtering steps. You can integrate a stepwise screening funnel similar to those used in cheminformatics: first apply fast, low-fidelity filters (e.g., basic physicochemical property calculations such as molecular weight and hydrogen bond donors/acceptors) to shrink the initial compound library, then apply the more computationally intensive NPDOA to the refined subset of candidates [12].

Experimental Protocol: Integrating NPDOA into a Neuroprotective Drug Screening Pipeline

The following protocol outlines how NPDOA can be applied to a specific neurodrug discovery problem, such as screening neuroprotective agents for conditions like ischemic stroke or Alzheimer's Disease, based on established computational workflows [13] [12] [14].

Objective: To identify novel neuroprotective compounds from a large chemical library using the NPDOA for lead optimization.

Workflow Overview:

Materials and Reagent Solutions:

Table 2: Essential Research Reagents and Tools for NPDOA-driven Discovery

| Item Name | Function / Description | Example from Literature |
|---|---|---|
| Chemical Library Database | Source of molecular structures for virtual screening. | FooDB, used to collect bilberry ingredients [12]. |
| Cheminformatics Toolkits | Calculate molecular descriptors and fingerprints. | ChemDes, PyBioMed [12]. |
| ADMET Prediction Platform | Early evaluation of drug-likeness and toxicity. | ADMETlab [12]. |
| Target Prediction Servers | Predict potential biological targets of compounds. | SEA, SwissTargetPrediction, TargetNet [12]. |
| Validation Cell Line | In vitro testing of screened compounds for neuroprotection. | SH-SY5Y neuroblastoma cells [13] [12]. |
| Disease Model | In vivo validation of efficacy. | MCAO/R rat model for ischemic stroke [13]. |

Detailed Procedure:

Pre-filtering of Chemical Library:

- Collect all chemical ingredients from a database (e.g., FooDB for natural products) [12].

- Calculate basic physicochemical properties (e.g., Molecular Weight, number of Hydrogen Bond Donors/Acceptors) using toolkits like ChemDes or PyBioMed [12].

- Filter out molecules that violate drug-likeness rules (e.g., Lipinski's Rule of Five) to create a refined starting library for the NPDOA.
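The pre-filtering step can be sketched as a Rule of Five check on properties that have already been calculated. This is a minimal illustration, assuming the descriptor values come from a toolkit such as those named above; the `Compound` class, the compound names, and the property values are hypothetical. Lipinski's rule also considers the octanol-water partition coefficient (logP), which is included here, and the `max_violations` parameter lets you choose between a strict filter (0) and the common one-violation tolerance (1).

```python
from dataclasses import dataclass

@dataclass
class Compound:
    name: str
    mol_weight: float   # molecular weight in Da
    log_p: float        # octanol-water partition coefficient
    h_donors: int       # hydrogen bond donors
    h_acceptors: int    # hydrogen bond acceptors

def lipinski_violations(c):
    """Count how many of the four Rule of Five thresholds a compound exceeds."""
    return sum([
        c.mol_weight > 500,
        c.log_p > 5,
        c.h_donors > 5,
        c.h_acceptors > 10,
    ])

def prefilter(compounds, max_violations=1):
    """Keep compounds with at most max_violations Rule of Five violations."""
    return [c for c in compounds if lipinski_violations(c) <= max_violations]

# Hypothetical compounds with made-up property values.
library = [
    Compound("cmpd_A", 320.4, 2.1, 2, 5),
    Compound("cmpd_B", 712.9, 6.3, 7, 12),
    Compound("cmpd_C", 510.0, 4.2, 1, 8),
]
kept = prefilter(library)
print([c.name for c in kept])
```

Only the compounds that pass this cheap check would be handed to the optimizer, which is where the computational savings come from.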

Define the Optimization Objective Function:

Configure and Initialize the NPDOA:

- Set algorithm parameters (e.g., population size, maximum iterations, step sizes).

- Initialize a population of candidate molecules within the defined chemical search space.

Execute the NPDOA Optimization Loop:

- The algorithm iteratively improves the candidate molecules by simulating neural population dynamics.

- Exploration Phase: The algorithm applies "random geometric transformations" to candidate positions, allowing a global search of the chemical space to avoid local optima [9].

- Exploitation Phase: For promising candidates, the algorithm uses a "gradient-informed local search," fine-tuning molecular structures to improve the objective function score, similar to how neural circuits refine a value computation [9].

Output and Experimental Validation:

- After convergence, the top-ranking candidate molecules are output.

- These candidates must then be validated through experimental assays, such as:

- In vitro models: Oxygen-glucose deprivation/reperfusion (OGD/R) in SH-SY5Y cells to model ischemic injury [13].

- In vivo models: Middle cerebral artery occlusion/reperfusion (MCAO/R) in rats for ischemic stroke [13].

- Mechanistic studies: Molecular docking, Western blot, and techniques like DARTS/CETSA to confirm target engagement [12] [13].

Conceptual Framework of NPDOA in Research

The following diagram situates NPDOA within the broader context of a research project focused on computational complexity reduction in neurodrug discovery.

The Neural Population Dynamics Optimization Algorithm (NPDOA) is a novel brain-inspired meta-heuristic method designed to solve complex optimization problems. Inspired by the information processing of interconnected neural populations in the brain during cognition and decision-making, NPDOA treats each potential solution as a neural population's state, where decision variables represent neurons and their values correspond to neuronal firing rates [16].

A significant challenge in applying such advanced algorithms to computationally intensive fields like drug discovery is computational complexity. High complexity can lead to prolonged execution times and high resource consumption, making complexity reduction a primary research focus. The core of NPDOA's approach to balancing efficiency and performance lies in its three strategic components: the Attractor Trending Strategy, the Coupling Disturbance Strategy, and the Information Projection Strategy [16].

Core Strategy Troubleshooting FAQs

Attractor Trending Strategy

Q1: What does the "Low Convergence Rate in Late-Stage Optimization" error indicate, and how can I resolve it? This error typically indicates that the Attractor Trending Strategy is not sufficiently guiding the population toward optimal decisions, failing to provide the necessary exploitation capability [16]. To resolve this:

- Verify Attractor State Definition: Ensure the stable neural state representing a favorable decision is correctly defined in your fitness function.

- Adjust Trending Parameters: Gradually increase the influence of the best-performing neural populations (attractors) in your update rules. Start with a small increment (e.g., 10%) and monitor performance on benchmark functions.

- Check Parameter Boundaries: Confirm that the parameters controlling the attractor force are within stable bounds to prevent population collapse.

Q2: My algorithm is converging quickly but to a suboptimal solution. Is this related to the attractor strategy? Yes, this is a classic sign of over-exploitation, often due to an overly dominant Attractor Trending Strategy. The population is drawn too strongly to a local attractor, neglecting broader exploration [16].

- Solution: Weaken the attractor force coefficient and ensure the Coupling Disturbance Strategy is active and correctly configured to push individuals away from local optima.

Coupling Disturbance Strategy

Q3: What does "Population Diversity Below Threshold" mean, and how is it fixed? This warning signifies that the neural populations have become too similar, reducing the algorithm's ability to explore new areas of the solution space. The Coupling Disturbance Strategy, responsible for this exploration, may be too weak [16].

- Solution:

- Increase Disturbance Intensity: Amplify the coupling factor that deviates neural populations from their current attractors.

- Diversify Disturbance Sources: Implement mechanisms for populations to couple with a wider variety of other populations, not just immediate neighbors.

- Validate Randomness: Ensure the disturbance incorporates a sufficiently stochastic component.

Q4: How can I prevent the disturbance from causing complete divergence and non-convergence? Excessive disturbance can prevent the algorithm from refining good solutions.

- Solution: Implement an adaptive disturbance schedule. The intensity of the Coupling Disturbance should be high in early iterations and gradually decrease as the algorithm runs, allowing the Attractor Trending Strategy to dominate in later stages for fine-tuning [16]. This transition is often managed by the Information Projection Strategy.
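One simple way to realize such a schedule is an exponential decay of the disturbance intensity over the run. The function below is an illustrative sketch only: the name `disturbance_intensity`, the default start and end values, and the exponential form are assumptions made here, not parameters taken from [16].

```python
def disturbance_intensity(iteration, max_iterations, start=1.0, end=0.05):
    """Decay exponentially from `start` at iteration 0 to `end` at the final iteration."""
    progress = iteration / max(1, max_iterations)
    return start * (end / start) ** progress

# Intensity at the start, midpoint, and end of a 200-iteration run.
for it in (0, 100, 200):
    print(it, round(disturbance_intensity(it, 200), 4))
```

A linear ramp would work as well. The exponential form simply spends fewer iterations at high disturbance, which favors earlier refinement.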

Information Projection Strategy

Q5: What is an "Unbalanced Exploration-Exploitation Ratio," and how do I correct it? This critical error occurs when the algorithm spends too much time either exploring (slow/no convergence) or exploiting (premature convergence). The Information Projection Strategy, which controls communication between neural populations, is responsible for managing this balance [16].

- Troubleshooting Steps:

- Profile the Run: Analyze the ratio of exploratory moves to exploitative moves over iterations.

- Tune Projection Weights: Adjust the parameters that control how much information is shared between populations and how it influences their states. The projection should facilitate a smooth transition from exploration to exploitation over time.

Q6: Communication between neural populations seems ineffective. How can I improve information flow? Ineffective communication hinders the swarm's collective intelligence.

- Solution:

- Review Network Topology: Check the structure defining which populations communicate. A more interconnected topology (e.g., fully connected vs. ring) can improve information flow but increases computational cost.

- Calibrate Projection Fidelity: Ensure the information being projected (e.g., fitness, positional data) is accurate and not being overly corrupted by noise.
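The cost trade-off between topologies can be made concrete by counting communication links. The sketch below assumes an undirected topology over `n` populations; the helper names are introduced here for illustration and are not part of any NPDOA reference implementation.

```python
def ring_neighbors(n, i, k=1):
    """Indices of the k nearest neighbours on each side of population i (requires n > 2k)."""
    return [(i + offset) % n for offset in range(-k, k + 1) if offset != 0]

def full_neighbors(n, i):
    """Every population except i itself."""
    return [j for j in range(n) if j != i]

def link_count(n, neighbor_fn):
    """Number of undirected links implied by a neighbour function."""
    return sum(len(neighbor_fn(n, i)) for i in range(n)) // 2

n = 30
print("ring links:", link_count(n, ring_neighbors))
print("fully connected links:", link_count(n, full_neighbors))
```

For 30 populations the ring needs 30 links while the fully connected topology needs 435, so the number of links grows linearly in one case and quadratically in the other.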

Performance and Complexity Analysis

The performance of NPDOA and its strategies can be quantitatively evaluated against other algorithms. The following table summarizes typical results from benchmark tests, such as those from CEC2017, which are standard for evaluating metaheuristic algorithms [16] [17] [18].

Table 1: Benchmark Performance Comparison of Metaheuristic Algorithms

| Algorithm Name | Average Rank (CEC2017, 30D) | Key Strength | Common Computational Complexity Challenges |
|---|---|---|---|
| NPDOA | 3.00 [9] | Excellent balance of exploration and exploitation [16] | Complexity management of three interacting strategies [16] |
| Power Method Algorithm (PMA) | 2.71 [9] | Strong local search and convergence [9] | Gradient computation, eigenvalue estimation [9] |
| Improved Red-Tailed Hawk (IRTH) | Competitive [17] | Effective population initialization and update [17] | Managing multiple improvement strategies [17] |
| Improved Dhole Optimizer (IDOA) | Significant advantages [18] | Robust for high-dimensional problems [18] | Handling boundary constraints and adaptive factors [18] |
| Particle Swarm Optimization (PSO) | Varies (classical algorithm) | Simple implementation [16] | Premature convergence, low convergence rate [16] |

Table 2: NPDOA Strategy-Specific Complexity and Mitigation Tactics

| NPDOA Strategy | Primary Computational Cost | Proposed Complexity Reduction Method |
|---|---|---|
| Attractor Trending | Evaluating and sorting population fitness; applying trend updates. | Use of a truncated population subset for attractor calculation in late phase. |
| Coupling Disturbance | Calculating pairwise or group-wise disturbances between populations. | Implement a stochastic, sparse coupling network instead of full connectivity. |
| Information Projection | Managing and applying the projection weights between all communicating units. | Freeze projection weights after a certain number of iterations to reduce updates. |
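The sparse coupling idea in the second row can be sketched as follows: instead of computing a disturbance from every other population, each population samples a small number `k` of partners, which reduces the cost from order N² to order N·k per iteration. The function names and the mean-offset form of the disturbance are illustrative assumptions, not the update rule from [16].

```python
import numpy as np

def sparse_partners(n_pop, k, rng):
    """For each population, draw k distinct coupling partners other than itself."""
    partners = np.empty((n_pop, k), dtype=int)
    for i in range(n_pop):
        candidates = np.delete(np.arange(n_pop), i)
        partners[i] = rng.choice(candidates, size=k, replace=False)
    return partners

def coupling_disturbance(states, partners, strength):
    """Mean offset from each population to its sampled partners, scaled by strength."""
    diffs = states[partners] - states[:, None, :]   # shape (n_pop, k, dim)
    return strength * diffs.mean(axis=1)

rng = np.random.default_rng(0)
states = rng.normal(size=(20, 4))        # 20 populations, 4 decision variables
partners = sparse_partners(20, 3, rng)   # 3 partners each instead of 19
disturbance = coupling_disturbance(states, partners, strength=0.5)
print(disturbance.shape)
```

Resampling the partners every few iterations keeps the coupling stochastic while still avoiding the full pairwise computation.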

Experimental Protocols for Verification

Protocol 1: Verifying Strategy Balance on Benchmark Functions

Objective: To empirically validate the balance between exploration and exploitation in NPDOA.

Materials: IEEE CEC2017 test suite [17] [9], computing environment (e.g., PlatEMO v4.1 [16]).

Methodology:

- Initialization: Run the standard NPDOA on a unimodal function (e.g., CEC2017 F1) and a multimodal function (e.g., CEC2017 F10) for 50 independent runs.

- Metric Tracking: Record the convergence curve (best fitness vs. iteration) and population diversity (e.g., average Euclidean distance between individuals) over iterations.

- Strategy Inhibition: Repeat the experiments while selectively weakening one strategy at a time (e.g., reduce the Attractor Trending force by 90%, then the Coupling Disturbance).

- Analysis: Compare the convergence speed and final accuracy. Weakened attraction should slow convergence on unimodal functions, while weakened disturbance should cause premature convergence on multimodal functions.
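The population diversity metric mentioned under Metric Tracking (average Euclidean distance between individuals) can be computed as in the sketch below. The function name is chosen here for illustration, and the population is assumed to be an array with one individual per row.

```python
import numpy as np

def mean_pairwise_distance(population):
    """Average Euclidean distance over all distinct pairs of individuals (needs >= 2 rows)."""
    pop = np.asarray(population, dtype=float)
    diffs = pop[:, None, :] - pop[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    upper = np.triu_indices(len(pop), k=1)   # each pair counted once
    return float(dists[upper].mean())

# Three collinear points: pair distances are 5, 10, and 5.
diversity = mean_pairwise_distance([[0, 0], [3, 4], [6, 8]])
print(round(diversity, 3))
```

Logging this value once per iteration alongside the best fitness gives the two curves the protocol asks for. A diversity curve that collapses early on a multimodal function is the signature of weakened disturbance.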

The workflow for this experimental protocol is outlined below.

Protocol 2: Assessing Scalability in a Drug Discovery Context

Objective: To evaluate NPDOA's computational complexity and performance when applied to a real-world problem like molecular optimization.

Materials: NVIDIA BioNeMo framework [19], generative AI models for molecule generation (e.g., GenMol), a dataset of drug-like molecules.

Methodology:

- Problem Formulation: Define the optimization objective to generate molecules with maximal binding affinity (predicted via docking like DiffDock [19]) and desirable drug-like properties (QED, SA).

- Parameter Tuning: Configure NPDOA parameters, focusing on the Information Projection strategy to manage the transition between exploring chemical space (disturbance) and refining promising leads (attraction).

- Scalability Test: Run NPDOA on problems of increasing dimensionality (e.g., optimizing 10, 50, and 100 molecular descriptors) and record the time-to-solution and computational resources used.

- Benchmarking: Compare against other optimizers like IDOA [18] or PMA [9] on the same task.
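The Problem Formulation step combines several criteria into one objective. A weighted sum is one simple way to do this, sketched below under stated assumptions: the docking score is taken to be more favorable when more negative, QED lies in the range 0 to 1 with higher being better, and the synthetic accessibility (SA) score lies in the range 1 (easy) to 10 (hard). The function name and the weights are placeholders that would need tuning for a real task.

```python
def molecule_objective(docking_score, qed, sa_score, w_dock=1.0, w_qed=1.0, w_sa=0.5):
    """Scalar objective to minimize: reward strong binding and high QED, penalize hard synthesis."""
    sa_penalty = (sa_score - 1.0) / 9.0   # rescale SA from 1..10 to 0..1
    return w_dock * docking_score - w_qed * qed + w_sa * sa_penalty

# Two hypothetical candidates with the same docking score but different drug-likeness.
candidate_a = molecule_objective(docking_score=-9.0, qed=0.8, sa_score=2.5)
candidate_b = molecule_objective(docking_score=-9.0, qed=0.3, sa_score=7.0)
print(candidate_a, candidate_b)
```

Because docking scores typically span a wider numeric range than QED, the weights largely determine which criterion dominates. A multi-objective formulation is an alternative when no single weighting is defensible.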

The Scientist's Toolkit: Research Reagent Solutions

This table details key software and computational tools essential for implementing and experimenting with NPDOA in a modern research pipeline, particularly in drug discovery.

Table 3: Essential Research Reagents and Tools for NPDOA and Drug Discovery Research

| Tool / Reagent | Type | Primary Function in Research | Application in NPDOA Context |
|---|---|---|---|
| PlatEMO [16] | Software Platform | A MATLAB-based platform for experimental evolutionary multi-objective optimization. | Running benchmark tests (CEC2017) to validate and tune the NPDOA strategies. |
| NVIDIA BioNeMo [19] | AI Framework & Microservices | An open-source framework for building and deploying biomolecular AI models. | Providing the target application (e.g., protein structure, molecule generation) for NPDOA to optimize. |
| NVIDIA NIM [19] | AI Microservice | Optimized, easy-to-use containers for running AI model inference. | Used as a fitness function evaluator (e.g., calling GenMol for molecule generation or DiffDock for docking). |
| CEC Benchmark Suites [17] [9] | Standardized Test Functions | A set of well-defined mathematical functions to fairly compare algorithm performance. | Quantifying the performance and efficiency of NPDOA and its improved variants. |
| Sobol Sequence [18] | Mathematical Sequence | A method for generating low-discrepancy, quasi-random sequences. | Improving the quality of the initial population in NPDOA for better exploration from the start. |
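As an illustration of the Sobol row, an initial population can be drawn with SciPy's quasi-Monte Carlo module and scaled to the search bounds. This is a sketch assuming SciPy 1.7 or later is available; the function name `sobol_population` and the bounds are chosen here for illustration, and the population size is a power of two because that is what `random_base2` produces.

```python
import numpy as np
from scipy.stats import qmc

def sobol_population(n_pop_log2, lower, upper, seed=0):
    """Draw 2**n_pop_log2 scrambled Sobol points and scale them to [lower, upper]."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    sampler = qmc.Sobol(d=lower.size, scramble=True, seed=seed)
    unit = sampler.random_base2(m=n_pop_log2)   # points in the unit hypercube
    return qmc.scale(unit, lower, upper)

# 32 individuals in a 3-dimensional search space bounded by [-100, 100].
population = sobol_population(5, [-100.0] * 3, [100.0] * 3)
print(population.shape)
```

Compared with uniform random sampling, the low-discrepancy points cover the search space more evenly, which is the property the table refers to.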

The following diagram illustrates how these tools integrate into a cohesive workflow for drug discovery optimization using NPDOA.

Theoretical Foundation: The Core Strategies of NPDOA

The Neural Population Dynamics Optimization Algorithm (NPDOA) is a novel brain-inspired meta-heuristic that effectively balances two competing objectives in optimization: exploration (searching new areas) and exploitation (refining known good areas). This balance is managed through three neuroscience-inspired strategies [16].

- Attractor Trending Strategy: This strategy drives the neural populations (solution candidates) towards optimal decisions, ensuring the algorithm's exploitation capability. It allows the algorithm to converge and refine solutions in promising regions of the search space [16].

- Coupling Disturbance Strategy: This strategy deviates neural populations from their current trajectories by coupling them with other populations. This action improves the algorithm's exploration ability, helping it escape local optima and discover new promising areas [16].

- Information Projection Strategy: This mechanism controls communication between different neural populations. It regulates the influence of the attractor and coupling strategies, enabling a smooth transition from global exploration to local exploitation throughout the optimization process [16].
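To make the interplay of the three strategies concrete, the loop below is a deliberately simplified sketch. The update equations are illustrative assumptions made here and are not the published NPDOA equations from [16]: attractor trending is modeled as a random step toward the best state found so far, coupling disturbance as a random offset along the direction to another randomly chosen population, and information projection as a single weight `w` that shifts influence from disturbance to attraction over the run. The sphere function is used as a stand-in objective.

```python
import numpy as np

def sphere(x):
    """Simple unimodal test objective (minimum 0 at the origin)."""
    return float(np.sum(x ** 2))

def npdoa_like_search(objective, lower, upper, n_pop=30, n_iter=200, seed=0):
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    dim = lower.size
    states = rng.uniform(lower, upper, size=(n_pop, dim))   # one row per neural population
    fitness = np.array([objective(s) for s in states])
    best_idx = int(fitness.argmin())
    best, best_fit = states[best_idx].copy(), float(fitness[best_idx])
    history = [best_fit]
    for t in range(n_iter):
        w = (t + 1) / n_iter            # information projection: 0 -> 1 over the run
        for i in range(n_pop):
            j = int(rng.integers(n_pop - 1))
            if j >= i:                  # pick a coupling partner other than i
                j += 1
            attract = rng.random(dim) * (best - states[i])              # attractor trending
            disturb = rng.normal(size=dim) * (states[j] - states[i])    # coupling disturbance
            states[i] = np.clip(states[i] + w * attract + (1 - w) * disturb, lower, upper)
            f = objective(states[i])
            if f < best_fit:
                best, best_fit = states[i].copy(), f
        history.append(best_fit)
    return best, best_fit, history

best, best_fit, history = npdoa_like_search(sphere, [-100.0] * 5, [100.0] * 5)
print(f"initial best {history[0]:.3f}, final best {history[-1]:.6f}")
```

Early iterations are dominated by the disturbance term (exploration), late iterations by the attraction term (exploitation). Changing how `w` evolves is the simplest way to experiment with the balance described above.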

The following diagram illustrates the logical workflow of how these three core strategies interact to maintain balance in NPDOA.

Technical Support Center: Troubleshooting NPDOA Performance

Frequently Asked Questions (FAQs)

Q1: My NPDOA implementation is converging to a local optimum too quickly. Which strategy should I adjust, and how? A1: This indicates insufficient exploration. You should focus on the Coupling Disturbance Strategy. Increase its influence by adjusting the corresponding parameters that control the magnitude of the disturbance or the probability of coupling between neural populations. This will inject more randomness, helping the algorithm escape local optima [16].

Q2: The algorithm is exploring widely but fails to refine a good solution, leading to slow or inaccurate convergence. What is the likely cause? A2: This suggests weak exploitation. The Attractor Trending Strategy is likely not dominant enough in the later stages of the run. Review the Information Projection Strategy parameters to ensure they correctly reduce the impact of coupling disturbance and increase the focus on attractor trending over time, allowing for fine-tuning of the best solutions [16].

Q3: How does NPDOA's approach to balancing exploration and exploitation differ from other meta-heuristic algorithms? A3: Unlike many swarm intelligence algorithms that rely on randomization, which can increase computational complexity, NPDOA explicitly models this balance through distinct neural dynamics. The three dedicated strategies (attractor, coupling, and information projection) provide a structured, neuroscience-based framework for transitioning between global search and local refinement, which can lead to more efficient and stable convergence [16].

Q4: Is NPDOA suitable for high-dimensional problems, such as those in drug discovery? A4: Yes, the design of NPDOA is well-suited for complex, nonlinear problems. Its population-based approach and ability to avoid premature convergence make it a strong candidate for high-dimensional search spaces common in fields like drug development. However, as with any algorithm, performance should be validated on specific problem domains [16].

Troubleshooting Guide: Common Experimental Issues

| Problem Observed | Likely Cause | Recommended Solution |

|---|---|---|

| Premature Convergence | Coupling disturbance is too weak; population diversity is lost. | Increase the coupling coefficient or the rate of disturbance application. |

| Slow Convergence | Attractor trending is too weak; exploitation is inefficient. | Amplify the attractor strength parameter; verify the information projection strategy is correctly favoring exploitation later in the run. |

| Erratic Performance | Poor balance between strategies; parameter sensitivity. | Systematically tune the parameters of the information projection strategy to ensure a smooth exploration-to-exploitation transition. |

| High Computational Cost | Population size too large; complex fitness evaluation. | Reduce neural population size; optimize the objective function code; consider problem-specific simplifications. |
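Several rows in the table above depend on knowing whether population diversity has been lost. One simple way to monitor this, sketched below, is the mean distance of individuals from the population centroid, logged once per iteration. The metric choice and the `collapse_ratio` threshold are assumptions for illustration; [16] does not prescribe a specific diagnostic.

```python
import math


def population_diversity(population):
    """Mean Euclidean distance of individuals from the population centroid."""
    n, dim = len(population), len(population[0])
    centroid = [sum(ind[d] for ind in population) / n for d in range(dim)]
    return sum(math.dist(ind, centroid) for ind in population) / n


def diversity_collapsed(history, collapse_ratio=0.01):
    """True when the latest diversity is below a fraction of the initial value.

    collapse_ratio is a hypothetical threshold; tune it for the problem at hand.
    """
    return history[0] > 0 and history[-1] < collapse_ratio * history[0]


history = [
    population_diversity([[0.0, 0.0], [2.0, 0.0]]),      # spread-out population
    population_diversity([[1.0, 1.0], [1.0, 1.0001]]),   # nearly identical individuals
]
print(diversity_collapsed(history))
```

If the flag fires early in a run while the best fitness is still poor, that points to the "Premature Convergence" row; if diversity stays high late in the run, the "Slow Convergence" row is the more likely match.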

Experimental Protocols & Performance Analysis

Methodology for Benchmarking NPDOA

The performance of NPDOA was rigorously evaluated using the following standard experimental protocol [16]:

- Test Suites: The algorithm was tested on a comprehensive set of benchmark functions from CEC 2017 and CEC 2022, which include unimodal, multimodal, and composite functions.

- Comparison Algorithms: NPDOA was compared against nine other state-of-the-art meta-heuristic algorithms, including both classical (e.g., Genetic Algorithm, PSO) and modern algorithms (e.g., Whale Optimization Algorithm).

- Evaluation Metrics: Key performance indicators such as solution accuracy (best objective value found), convergence speed, and statistical significance (using Wilcoxon rank-sum and Friedman tests) were used for comparison.

- Practical Validation: The algorithm was also applied to real-world engineering design problems (e.g., compression spring design, welded beam design) to verify its practical utility [16].
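As an illustration of the statistical comparison step, the sketch below computes a Wilcoxon rank-sum statistic with a normal approximation for two sets of final objective values. It is a simplified version: no continuity correction and no tie correction in the variance, so an established statistics package is the safer choice for reported results. The function name `rank_sum_test` and the example values are hypothetical.

```python
import math
from statistics import NormalDist


def rank_sum_test(sample_a, sample_b):
    """Two-sided Wilcoxon rank-sum test (normal approximation, simplified)."""
    combined = sorted([(v, 0) for v in sample_a] + [(v, 1) for v in sample_b])
    ranks = [0.0] * len(combined)
    i = 0
    while i < len(combined):
        j = i
        while j + 1 < len(combined) and combined[j + 1][0] == combined[i][0]:
            j += 1
        average_rank = (i + j) / 2 + 1  # tied values share the average rank
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1

    n1, n2 = len(sample_a), len(sample_b)
    w = sum(r for r, (_, group) in zip(ranks, combined) if group == 0)
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return w, z, p_value


# Hypothetical final errors from repeated runs of two algorithms.
w, z, p = rank_sum_test([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
```

With realistic benchmarking, each sample would hold the final error from many independent runs per function, and the test would be repeated per function before applying the Friedman ranking.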

Quantitative Performance Data

The table below summarizes hypothetical quantitative data that aligns with the findings reported for NPDOA, demonstrating its effectiveness in balancing exploration and exploitation across different problem types [16].

| Problem Type | Metric | NPDOA Performance | Classical GA | Modern WOA |

|---|---|---|---|---|

| Unimodal Benchmark | Average Convergence Error | 0.0015 | 0.045 | 0.008 |

| Multimodal Benchmark | Best Solution Found | -1250.50 | -1102.75 | -1220.80 |

| Spring Design Problem | Optimal Cost ($) | 2.385 | 2.715 | 2.521 |

| Welded Beam Problem | Optimal Cost ($) | 1.670 | 2.110 | 1.890 |

Research Reagent Solutions: The NPDOA Toolkit

The following table details the key components for implementing and experimenting with the NPDOA framework.

| Item / Component | Function in the NPDOA "Experiment" |

|---|---|

| Neural Population | A set of candidate solutions. Each individual represents a potential solution to the optimization problem [16]. |

| Firing Rate (Variable Value) | The value of a decision variable within a solution, analogous to the firing rate of a neuron in a neural population [16]. |

| Attractor Parameter | A control parameter that dictates the strength with which solutions are pulled towards the current best estimates, governing exploitation [16]. |

| Coupling Coefficient | A control parameter that sets the magnitude of disturbance between populations, directly controlling exploration intensity [16]. |

| Information Projection Matrix | A mechanism (often a set of rules or weights) that modulates the flow of information between populations to manage the exploration-exploitation transition [16]. |

| Fitness Function | The objective function that evaluates the quality of each solution, guiding the search process [16]. |

Advanced Workflow: From Problem to Solution

For researchers applying NPDOA to a new problem, such as a complex drug design optimization, the following end-to-end workflow is recommended. This process integrates the core strategies and the troubleshooting insights detailed in previous sections.

The Role of Metaheuristic Algorithms in Solving Non-Linear Pharmaceutical Problems

Troubleshooting Guide: Frequently Asked Questions

FAQ 1: Why does my parameter estimation for a nonlinear mixed-effects model (NLMEM) converge to a poor local solution, and how can I improve it?

- Problem: Traditional estimation methods (e.g., FOCE, SAEM) used in tools like NONMEM or Monolix can get stuck at local optima or saddle points, especially with complex, multi-modal models. This often occurs when the initial parameter values are not close to the true values [20].

- Solution: Implement a global optimization metaheuristic. These algorithms are less dependent on initial guesses and are designed to explore the entire parameter space more effectively.

- Recommended Protocol: Use a hybrid metaheuristic approach. First, run a global stochastic algorithm like Particle Swarm Optimization (PSO) or Differential Evolution (DE) to locate the region of the global optimum. Then, refine the solution using a faster local method [21]. This combines robustness with computational efficiency.

- Connection to NPDOA Research: The Neural Population Dynamics Optimization Algorithm (NPDOA), with its attractor trending and coupling disturbance strategies, is explicitly designed to balance global exploration and local exploitation, thereby directly mitigating premature convergence [16].

FAQ 2: How can I efficiently find a multi-objective optimal design for a clinical trial, such as one for a continuation-ratio model that assesses efficacy and toxicity?

- Problem: Manually deriving optimal design points and weights for complex, multi-criteria problems (e.g., estimating the most effective dose (MED) and the maximum tolerated dose (MTD) simultaneously) is analytically intractable [22].

- Solution: Use metaheuristics to efficiently search the design space for a set of points that best satisfy multiple, potentially competing, objectives.

- Recommended Protocol: Employ a constrained optimization approach with PSO.

- Formulate the problem: Prioritize your objectives (e.g., Primary: estimate MTD with >90% efficiency; Secondary: estimate MED with >80% efficiency).

- Configure PSO: Let each particle's position represent a candidate design (dose levels and subject allocation ratios).

- Define the objective function: The algorithm first optimizes the primary objective. Subject to meeting that constraint, it then optimizes the secondary objective [22].

- Tools: PSO's flexibility allows it to handle such constrained, non-convex problems effectively without requiring derivative information [22].
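The prioritized objective described in the protocol above can be sketched as a single score that a swarm can rank designs by. This is one possible encoding, assuming both efficiencies lie in [0, 1]; the function name and the way infeasible designs are scored are illustrative choices rather than the formulation in [22].

```python
def constrained_design_score(primary_eff, secondary_eff, primary_min=0.90):
    """Score to maximize: feasible designs always outrank infeasible ones.

    Assumes efficiencies are in [0, 1]. Infeasible designs get a negative
    score that rises as the primary efficiency approaches the threshold,
    which steers the search toward the feasible region first.
    """
    if primary_eff < primary_min:
        return primary_eff - 1.0
    return secondary_eff  # primary constraint met: rank by the secondary objective


# A design meeting the MTD constraint beats one that misses it,
# even if the latter has a higher MED efficiency.
print(constrained_design_score(0.95, 0.50) > constrained_design_score(0.89, 0.99))
```

Because most PSO implementations minimize, the negated score would typically be passed as the objective.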

FAQ 3: My metaheuristic algorithm is computationally expensive for high-dimensional problems. How can I reduce runtime without sacrificing solution quality?

- Problem: As the number of parameters increases, the computational cost of metaheuristics can become prohibitive [16] [21].

- Solution: Utilize hybridization and algorithm tuning.

- Recommended Protocol:

- Hybridization: Hybridize your metaheuristic with a local search. The metaheuristic performs a broad global search, and the local method (e.g., Nelder-Mead) quickly refines the best solutions, reducing the number of overall function evaluations [21].

- Algorithm Tuning: For PSO, adjust the inertia weight (w). A higher value (e.g., 0.9) promotes exploration, while a lower value (e.g., 0.4) favors exploitation. An adaptive strategy that starts high and decreases over iterations can improve convergence speed [22].

- NPDOA Insight: The NPDOA's information projection strategy is a built-in mechanism to dynamically control the transition from exploration to exploitation, which is key to managing computational complexity [16].
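The adaptive inertia strategy mentioned above is often implemented as a linear decrease. A minimal sketch follows, using the 0.9 and 0.4 example values from the text; the linear shape and the function name are assumptions, and other decay schedules are equally plausible.

```python
def linear_inertia(iteration, max_iterations, w_start=0.9, w_end=0.4):
    """Inertia weight that falls linearly from w_start to w_end over the run."""
    fraction = min(1.0, iteration / max(1, max_iterations - 1))
    return w_start + (w_end - w_start) * fraction


# Early iterations explore (w near 0.9); late iterations exploit (w near 0.4).
schedule = [linear_inertia(k, 100) for k in range(100)]
```

The returned value would replace the fixed `w` in the velocity update at each iteration.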

FAQ 4: How can I improve the accuracy of my machine learning models used for predicting critical pharmaceutical outcomes (e.g., peptide toxicity, droplet size)?

- Problem: Standard methods for tuning machine learning hyperparameters (e.g., Grid Search) are slow and can get trapped in local minima [23] [24].

- Solution: Use metaheuristic algorithms to optimize the hyperparameters of your machine learning models.

- Recommended Protocol: Implement a Metaheuristic-ML hybrid model.

- Select your ML model (e.g., Support Vector Regression, Random Forest).

- Choose a metaheuristic for optimization (e.g., PSO, Rain Optimization Algorithm - ROA, or a hybrid like h-PSOGNDO).

- The metaheuristic's search particles will represent potential hyperparameter sets (e.g., C and epsilon for SVR). The objective function is the model's cross-validated error (e.g., RMSE) [24].

- Evidence: Studies show ROA can outperform PSO and Grid Search in finding superior hyperparameters, leading to significant increases in prediction accuracy (e.g., R² increase of 3.6%) [24].

Experimental Protocols for Key Applications

Protocol 1: Parameter Estimation in Nonlinear Mixed-Effects Models using PSO

Objective: To find the global optimum for model parameters that minimize the difference between model predictions and experimental data [20].

Workflow:

Detailed Methodology:

Problem Formulation:

- Objective Function: Minimize the negative log-likelihood -2LL(θ | y), where θ represents the model parameters (fixed effects, variance components) and y is the observed data. For nonlinear models, this involves approximating the integral over random effects, often via Laplace approximation or importance sampling [20].

- Constraints: Define lower and upper bounds for all parameters θ based on physiological or mathematical constraints.

PSO Configuration (Typical Values):

- Swarm Size: 20-50 particles.

- Velocity Update: Use the standard formula: V(k) = w*V(k-1) + c1*R1*(pBest - X(k-1)) + c2*R2*(gBest - X(k-1)), where w = 0.729 (inertia) and c1 = c2 = 1.494 (acceleration coefficients) [22].

- Stopping Criterion: Maximum iterations (100-500) or stability in gBest over 50 iterations.

Execution:

- Initialize each particle's position and velocity randomly within the bounds.

- For each particle, evaluate -2LL by solving the model's differential equations numerically.

- Update pBest and gBest.

- Update velocities and positions, ensuring they remain within bounds.

- Iterate until the stopping criterion is met.
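The execution steps above can be sketched as a compact PSO loop using the configuration values listed in this protocol (w = 0.729, c1 = c2 = 1.494, a 50-iteration stability window). The objective passed in below is a toy quadratic standing in for -2LL; a real application would evaluate the model likelihood instead. The function name `pso_minimize`, the velocity initialization, and the clamping rule are assumptions of this sketch.

```python
import random


def pso_minimize(objective, bounds, swarm_size=30, max_iter=200, patience=50,
                 w=0.729, c1=1.494, c2=1.494, seed=0):
    """Basic global-best PSO with position clamping (illustrative sketch)."""
    rng = random.Random(seed)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(swarm_size)]
    vel = [[0.1 * rng.uniform(-(hi - lo), hi - lo) for lo, hi in bounds]
           for _ in range(swarm_size)]
    pbest = [p[:] for p in pos]
    pbest_f = [objective(p) for p in pos]
    g = min(range(swarm_size), key=pbest_f.__getitem__)
    gbest, gbest_f = pbest[g][:], pbest_f[g]

    stall = 0
    for _ in range(max_iter):
        improved = False
        for i in range(swarm_size):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Keep the particle inside the parameter bounds.
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            f = objective(pos[i])
            if f < pbest_f[i]:
                pbest[i], pbest_f[i] = pos[i][:], f
                if f < gbest_f:
                    gbest, gbest_f = pos[i][:], f
                    improved = True
        stall = 0 if improved else stall + 1
        if stall >= patience:  # gBest unchanged for `patience` iterations
            break
    return gbest, gbest_f


# Toy stand-in for -2LL with its minimum at theta = (1, -2).
toy = lambda theta: (theta[0] - 1.0) ** 2 + (theta[1] + 2.0) ** 2
theta_hat, value = pso_minimize(toy, [(-10.0, 10.0), (-10.0, 10.0)])
```

For a real NLMEM, each call to `objective` involves solving the model equations, so the swarm size and iteration cap dominate the runtime.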

Protocol 2: Finding c-Optimal Designs for a Continuation-Ratio Model using PSO

Objective: To find the experimental design (dose levels and subject allocation) that minimizes the asymptotic variance of a target parameter, such as the Most Effective Dose (MED) [22].

Workflow:

Detailed Methodology:

Problem Formulation:

- Design: An approximate design ξ is a set of k dose levels {x1, x2, ..., xk} with corresponding weights {w1, w2, ..., wk} (summing to 1).

- c-Optimality Criterion: The goal is to minimize c' * M(ξ)⁻¹ * c, where M(ξ) is the Fisher Information Matrix for the design ξ, and c is the gradient vector of the MED (or other target) with respect to the model parameters [22].

- Constraints: Weights must be positive and sum to 1; dose levels must be within a pre-specified compact interval [X_min, X_max].

PSO Configuration:

- Particle Encoding: A particle's position is a (2k-1)-dimensional vector: [x1, x2, ..., xk, w1, w2, ..., w_{k-1}]. The last weight wk is implicitly 1 - sum(w_i).

- The objective function for each particle is the c-optimality criterion value.

Execution:

- Follow the standard PSO loop as in Protocol 1, ensuring that weight constraints are enforced after each position update (e.g., by scaling).
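The particle encoding and the weight repair by scaling can be sketched as a small decoding step applied after every position update. The function name `decode_design` and the small positive floor `eps` are hypothetical choices for this illustration; [22] may enforce the constraints differently.

```python
def decode_design(position, k, x_min, x_max, eps=1e-9):
    """Turn a (2k-1)-vector into k dose levels and k positive weights summing to 1."""
    if len(position) != 2 * k - 1:
        raise ValueError("position must have 2k-1 entries")
    # First k entries: dose levels, clamped to [x_min, x_max].
    doses = [min(x_max, max(x_min, x)) for x in position[:k]]
    # Remaining k-1 entries: free weights, floored at eps to stay positive.
    free = [max(eps, w) for w in position[k:]]
    total = sum(free)
    if total >= 1.0:
        # Scale the free weights so the implicit last weight remains positive.
        scale = (1.0 - eps) / total
        free = [w * scale for w in free]
    weights = free + [1.0 - sum(free)]
    return doses, weights


doses, weights = decode_design([0.1, 0.5, 0.9, 0.3, 0.3], k=3, x_min=0.0, x_max=1.0)
```

The decoded doses and weights would then be used to build M(ξ) and evaluate the c-optimality criterion for that particle.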

The Scientist's Toolkit: Research Reagent Solutions

Table 1: Essential Computational Tools and Algorithms for Pharmaceutical Optimization

| Tool/Algorithm Name | Category | Primary Function in Pharmaceutical Context | Key Reference / Implementation |

|---|---|---|---|

| Particle Swarm Optimization (PSO) | Swarm Intelligence Metaheuristic | Parameter estimation in NLMEMs; finding optimal clinical trial designs. | [25] [20] [22] |

| Neural Population Dynamics Optimization (NPDOA) | Brain-inspired Metaheuristic | Novel algorithm for complex, single-objective optimization problems; balances exploration/exploitation via neural population dynamics. | [16] |

| Differential Evolution (DE) | Evolutionary Metaheuristic | Robust global parameter estimation for dynamic biological systems. | [21] |

| h-PSOGNDO | Hybrid Metaheuristic | Combines PSO and Generalized Normal Distribution Optimization; applied to predictive toxicology (e.g., antimicrobial peptide toxicity). | [23] |

| Rain Optimization Algorithm (ROA) | Physics-inspired Metaheuristic | Hyperparameter tuning for machine learning models to improve predictive accuracy (e.g., droplet size prediction in microfluidics). | [24] |

| Scatter Search (SS) | Non-Nature-inspired Metaheuristic | A population-based method that has been hybridized for efficient parameter estimation in nonlinear dynamic models. | [21] |

| Sparse Grid (SG) | Numerical Integration Method | Used in hybridization with PSO (SGPSO) to accurately evaluate high-dimensional integrals in the expected information matrix for optimal design problems. | [20] |

Implementing NPDOA: Strategies for Streamlining Drug Discovery and Development

Mapping Pharmaceutical Problems to the NPDOA Workflow

Frequently Asked Questions (FAQs)

Algorithm Fundamentals & Application

Q1: What is the Neural Population Dynamics Optimization Algorithm (NPDOA) and why is it relevant to pharmaceutical development? The Neural Population Dynamics Optimization Algorithm (NPDOA) is a novel, brain-inspired meta-heuristic algorithm designed to solve complex optimization problems [16]. It simulates the activities of interconnected neural populations in the brain during cognition and decision-making [16]. In pharmaceutical development, it is highly relevant for optimizing high-stakes, multi-stage processes such as New Product Development (NPD), which involve challenges like portfolio management, clinical trial supply chain management, and process parameter optimization, where traditional methods often struggle with inefficiency and convergence issues [16] [26] [9].

Q2: What are the core strategies of the NPDOA? The NPDOA operates based on three core brain-inspired strategies [16]:

- Attractor Trending Strategy: Drives neural populations towards optimal decisions, ensuring exploitation capability.

- Coupling Disturbance Strategy: Deviates neural populations from attractors by coupling with other populations, improving exploration ability.

- Information Projection Strategy: Controls communication between neural populations, enabling a transition from exploration to exploitation.

Q3: What are the typical computational complexity challenges in pharmaceutical NPD that NPDOA can address? Pharma NPD is fraught with operational challenges that increase computational complexity, including [27]:

- Weak governance and poor oversight leading to uncontrolled deviations.

- Siloed teams and fragmented communication causing misalignment and rework.

- Uncontrolled documentation and version chaos creating regulatory risks.

- Inconsistent review cycles and delayed approvals.

- Missing risk management and change control discipline.

Troubleshooting Common Experimental Issues

Q4: The algorithm converges to a local optimum instead of the global solution. How can this be improved? Premature convergence often indicates an imbalance between exploration and exploitation. To address this [16]:

- Adjust Strategy Parameters: Tune the parameters controlling the coupling disturbance strategy, which is responsible for exploration. Increasing its influence can help the solution escape local optima.

- Validate Parameter Settings: Ensure you are not overcycling your iterations, which can destabilize the solution process and lead to errors. Adhere to the recommended parameter ranges provided in your experimental protocol [28].

Q5: How can I verify the accuracy and reliability of the results obtained from the NPDOA workflow?

- Back-Fitting: Perform a "back-fitting" procedure where the standards or known optimal solutions are run as unknowns through your workflow. If they do not report back their nominal values, it may indicate an issue with your model parameters or algorithm configuration [29].

- Spike & Recovery Experiments: For specific applications like sample dilution analysis, conduct spike and recovery experiments to validate the accuracy of your dilution factors and ensure matrix effects are not interfering with the result [29].

Q6: What are the best practices for documenting an NPDOA workflow to ensure reproducibility and regulatory compliance? Adopting a digital governance framework is critical. This ensures [27]:

- Controlled Documentation: Use systems with version control, access permissions, and audit logs to maintain data integrity (ALCOA+ principles).

- Structured Gate Reviews: Document every decision with evidence-driven progression and cross-functional review.

- Integrated Risk Management: Systematically document deviations, root cause analyses, and corrective actions within the workflow.

Experimental Protocols & Data

Protocol 1: Implementing NPDOA for Medication Workflow Optimization

This protocol is based on a quality improvement study that used a methodology analogous to NPDOA for optimizing a hospital's medication dispensing process [30].

1. Objective: To reduce the rate of missing dose requests and quantify the efficiency improvements in time and costs.

2. Methodology (Model for Improvement):

- Setting: A 24-bed medical-surgical unit in a pediatric hospital.

- Team: A multidisciplinary team including clinical care nurses, a nurse educator, and pharmacists.

- Key Driver Diagram: The team developed a key driver diagram to identify primary intervention areas [30].

- PDSA Cycles: Plan-Do-Study-Act cycles were used to test interventions.

3. Interventions (Mapping to NPDOA Strategies):

- Information Projection (Communication): Established standard work instructions for using dispense tracking technology in the EHR, allowing nurses to see a medication's status and location [30].

- Attractor Trending (Storage & Inventory): Created standard handling procedures for medications and optimized the inventory in automated dispensing cabinets (ADCs) to match usage patterns [30].

- Coupling Disturbance (Order Process): Modified the EHR to extend the default medication start time interval, giving pharmacy adequate time to prepare and deliver doses. Educated providers on indicating urgent orders [30].

4. Measurements:

- Primary Measure: Missing dose requests per 100 medication doses dispensed.

- Secondary Measures: Nursing and pharmacy time spent addressing missing doses; cost of medication waste.

Quantitative Results from Medication Workflow Optimization

The following table summarizes the key outcomes from the 6-month quality improvement initiative, demonstrating the significant impact of optimizing the medication workflow [30].

| Performance Metric | Pre-Intervention Baseline | Post-Intervention Result | Improvement |

|---|---|---|---|

| Missing Dose Rate (per 100 doses) | 3.8 | 1.03 | 73% reduction |

| Estimated Doses Prevented | Baseline | 988 doses | - |

| Cost Savings | Baseline | $61,038.64 | - |

| Average Cost to Replace a Single Missing Dose | - | $61.78 | - |

| Median Cost to Replace a Single Missing Dose | - | $54.71 (IQR, 11.91–4,213.11) | - |

| Pharmacist Time Saved per Dose | - | 6 minutes | - |

| Pharmacy Technician Time Saved per Dose | - | 14 minutes | - |

| Nurse Time Saved per Dose | - | 17 minutes | - |
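The headline figures in the table can be cross-checked with simple arithmetic. The sketch below recomputes the percentage reduction from the two rates and shows that the reported cost savings appear consistent with the number of doses prevented multiplied by the average replacement cost. The variable names are illustrative; the values are those in the table.

```python
baseline_rate = 3.8      # missing doses per 100 dispensed, pre-intervention
post_rate = 1.03         # missing doses per 100 dispensed, post-intervention
doses_prevented = 988
average_cost = 61.78     # average cost (USD) to replace one missing dose

reduction_pct = (baseline_rate - post_rate) / baseline_rate * 100
savings = doses_prevented * average_cost

print(f"{reduction_pct:.1f}% reduction")   # 72.9% reduction, reported as 73%
print(f"${savings:,.2f} saved")            # $61,038.64 saved
```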

Protocol 2: General Framework for Applying NPDOA to Pharma NPD

1. Problem Definition: Formulate the pharmaceutical problem (e.g., optimizing batch parameters, portfolio selection) as a single-objective optimization problem: Min f(x), subject to g(x) ≤ 0 and h(x) = 0, where x is a vector of decision variables [16].

2. Algorithm Initialization:

- Initialize a set of neural populations (candidate solutions), where each decision variable represents a neuron and its value is the firing rate [16].

- Set parameters for the three core strategies: Attractor Trending, Coupling Disturbance, and Information Projection.

3. Iteration and Evaluation:

- Attractor Trending Phase: Drive the population towards current best solutions (exploitation).

- Coupling Disturbance Phase: Apply disturbances to break away from local optima (exploration).

- Information Projection Phase: Update the communication rules to balance the above two phases.

- Evaluate the objective function f(x) for each candidate solution.

4. Termination: Repeat iterations until a stopping criterion is met (e.g., maximum iterations, convergence tolerance).
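Because the framework above is stated for a constrained problem (g(x) ≤ 0, h(x) = 0) while most metaheuristics search an unconstrained box, the constraints need to be folded into the objective somehow. One common option, sketched here as an assumption rather than as the method of [16], is a static quadratic penalty. The names `penalized_objective`, `penalty`, and `tol` are hypothetical.

```python
def penalized_objective(f, inequality=(), equality=(), penalty=1e6, tol=1e-6):
    """Wrap f(x) so that violations of g(x) <= 0 and h(x) = 0 are penalized."""
    def wrapped(x):
        violation = sum(max(0.0, g(x)) ** 2 for g in inequality)
        violation += sum(max(0.0, abs(h(x)) - tol) ** 2 for h in equality)
        return f(x) + penalty * violation
    return wrapped


# Toy example: minimize x0^2 + x1^2 subject to x0 >= 1, written as 1 - x0 <= 0.
objective = penalized_objective(
    lambda x: x[0] ** 2 + x[1] ** 2,
    inequality=[lambda x: 1.0 - x[0]],
)
print(objective([1.0, 0.0]))  # feasible point: plain objective value
print(objective([0.0, 0.0]))  # infeasible point: heavily penalized
```

The wrapped function can be passed directly as the fitness function to any minimizer. The penalty weight usually needs tuning so that it dominates the objective without causing numerical problems.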

Workflow Visualization

NPDOA-Pharmaceutical Optimization Workflow

Mapping NPDOA to Pharma NPD Challenges

The Scientist's Toolkit: Research Reagent Solutions

The following table lists key components and their functions when designing and implementing an NPDOA-based optimization system for pharmaceutical problems.

| Item | Function in the NPDOA Workflow |

|---|---|

| Digital Governance Platform | Provides the foundational system for embedding controlled workflows, documentation, and audit trails, essential for maintaining data integrity and compliance [27]. |

| Structured Data Repositories | Secure libraries for storing process parameters, batch data, and analytical methods, enabling structured handover and tech transfer [27]. |

| Real-Time Monitoring Dashboards | Tools for leadership to visualize task progress, delays, and resource use, enabling immediate corrective action based on algorithm outputs [27]. |

| Automated Review & Approval Workflows | Digital systems to enforce consistent stage-gate governance, assign reviewers, and manage due dates, shortening review cycles [27]. |

| Risk Register | An integrated log to systematically document deviations, trigger root cause analysis, and monitor risk trends identified during the optimization process [27]. |

Technical Support Center

Troubleshooting Guides & FAQs

This section addresses common challenges researchers may encounter when applying the Neural Population Dynamics Optimization Algorithm (NPDOA) to simplify clinical trial protocols.

FAQ 1: The algorithm converges too quickly to a protocol design that is still complex. How can I improve its exploration?

- Problem: The NPDOA's attractor trending strategy is too strong, causing premature convergence and failing to explore simpler, non-obvious protocol designs.

- Solution: Adjust the weight of the coupling disturbance strategy. This strategy deviates neural populations from attractors by coupling with other neural populations, thereby enhancing the algorithm's exploration ability. Increase its parameter value to encourage a broader search of the solution space before exploitation begins [16].

- Procedure:

- In your NPDOA implementation, locate the parameter controlling the coupling disturbance magnitude (often denoted as a weight or scaling factor).

- Systematically increase this parameter in small increments (e.g., by 10-25% per experiment).

- Run the optimization process on your protocol design problem and monitor the diversity of generated solutions.

- Iterate until you achieve a satisfactory balance between exploring novel, simple designs and refining known good ones.

FAQ 2: How do I quantify protocol complexity to use it as an objective function for NPDOA?

- Problem: The NPDOA requires a clear objective function to minimize. A measurable definition of "protocol complexity" is needed.

- Solution: Implement a scoring system based on established methodology, such as the Protocol Complexity Tool (PCT). The NPDOA can then be set to minimize the Total Complexity Score (TCS) [31].

- Procedure: Structure your objective function using the PCT's five domains. The table below summarizes these domains and example metrics.

Table: Protocol Complexity Tool (PCT) Domains for Objective Function

| Domain | Description | Example Measurable Metrics |

|---|---|---|

| Study Design | Complexity inherent to the scientific plan. | Number of primary/secondary endpoints; novelty of design; number of sub-studies [31]. |

| Operational Execution | Burden related to trial management. | Number of participating countries and sites; drug storage and handling requirements [31]. |

| Site Burden | Workload imposed on clinical sites. | Number of procedures; frequency of site visits; data entry volume [31]. |

| Patient Burden | Demands placed on trial participants. | Frequency and duration of visits; number and invasiveness of procedures [31]. |

| Regulatory Oversight | Complexity of regulatory requirements. | Specific licensing or reporting requirements for the therapeutic area [31]. |
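A complexity-based objective function built on these five domains could be sketched as follows. The aggregation shown here, where each domain score is the mean of its question scores and the Total Complexity Score is the sum of the five domain scores, is an assumption made for illustration, as are the example scores; consult [31] for the actual PCT scoring rules.

```python
DOMAINS = (
    "study_design",
    "operational_execution",
    "site_burden",
    "patient_burden",
    "regulatory_oversight",
)


def total_complexity_score(question_scores):
    """Sum of per-domain scores, each taken as the mean of its question scores.

    question_scores maps every domain name to a non-empty list of numbers.
    The averaging rule is a placeholder assumption, not the published method.
    """
    missing = [d for d in DOMAINS if not question_scores.get(d)]
    if missing:
        raise ValueError(f"missing scores for domains: {missing}")
    return sum(sum(question_scores[d]) / len(question_scores[d]) for d in DOMAINS)


# Hypothetical scores for one candidate protocol design.
candidate = {
    "study_design": [1.0, 0.5],
    "operational_execution": [0.5, 0.5],
    "site_burden": [1.0, 1.0],
    "patient_burden": [0.0, 0.5],
    "regulatory_oversight": [0.5],
}
tcs = total_complexity_score(candidate)
```

An optimizer would call this function on each decoded protocol design and minimize the returned value.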

FAQ 3: The optimized protocol is simpler but compromises scientific validity. How does NPDOA balance this?

- Problem: The optimization process is yielding logistically simple protocols that are no longer scientifically sound.

- Solution: Leverage the "Ground Zero" principle from Lean Design as a constraint within the NPDOA framework. Start with a minimal, scientifically essential protocol and allow the algorithm to add assessments only when a strong biological rationale exists [32].

- Procedure:

- Define your "Ground Zero" protocol, which includes only the primary endpoint and essential safety reporting.

- Frame any additional assessment (e.g., a lab test, a patient-reported outcome) as a proposed addition.

- Program the NPDOA's attractor trending strategy to strongly favor solutions that retain only those additions with a clear, pre-defined biological or clinical justification. This ensures simplification does not compromise the trial's core scientific question [32].

FAQ 4: How is the performance of NPDOA for clinical trial simplification validated?

- Problem: It is unclear how to verify that the NPDOA is performing effectively and efficiently in this context.

- Solution: Validation is a two-step process: benchmark testing and practical application. The algorithm's performance should be compared against other optimization algorithms on standard test functions and then applied to real-world protocol design problems [16] [33].

- Procedure:

- Benchmarking: Test the NPDOA on established computational benchmark suites (e.g., IEEE CEC2017) and compare its performance with other algorithms such as GA, PSO, and the red-tailed hawk algorithm (RTH). Key metrics include convergence speed and solution accuracy [33].

- Practical Application: Apply the NPDOA to redesign a known complex clinical trial protocol. The success of the optimization is measured by the reduction in the Protocol Complexity Tool (PCT) score and the improvement in key trial indicators [31].

Table: NPDOA Performance Comparison on Benchmark Problems

| Algorithm | Key Inspiration | Exploration-Exploitation Balance | Reported Performance on Benchmarks |

|---|---|---|---|

| NPDOA | Brain neural population dynamics [16] | Attractor trending (exploitation), Coupling disturbance (exploration), Information projection (transition) [16] | Competitive, effective on single-objective problems [16] [33] |

| Genetic Algorithm (GA) | Biological evolution [16] | Selection, crossover, and mutation operations [16] | Can suffer from premature convergence [16] |

| Particle Swarm Optimization (PSO) | Social behavior of bird flocking [16] | Guided by local and global best particles [16] | May get stuck in local optima and converge poorly [16] |

| Improved RTH (IRTH) | Hunting behavior of red-tailed hawks [33] | Stochastic reverse learning, dynamic position update, trust domain updates [33] | Competitive performance on CEC2017 [33] |

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential Components for an NPDOA-based Protocol Optimization Experiment

| Item | Function in the Experiment |

|---|---|

| Protocol Complexity Tool (PCT) | Provides a quantitative, structured framework to score and measure the complexity of a clinical trial protocol across five key domains, serving as the primary objective function for the NPDOA to minimize [31]. |

| "Ground Zero" Protocol Template | A minimal, bare-bones protocol template that includes only the primary endpoint and critical safety assessments. It is used to initialize the optimization process and prevent anchoring bias from complex existing templates [32]. |

| Benchmark Suite (e.g., CEC2017) | A standardized set of mathematical optimization problems used to calibrate the NPDOA's parameters and verify its basic performance and convergence behavior before applying it to the specific domain of protocol simplification [33]. |

| Computational Cost Metric | A function that tracks the number of iterations or CPU time required for the NPDOA to converge on an optimal solution. This is crucial for evaluating the algorithm's efficiency and practical feasibility for rapid protocol design cycles. |

Experimental Protocol: Simplifying a Trial Protocol using NPDOA

Objective: To reduce the complexity of a clinical trial protocol draft by minimizing its Protocol Complexity Tool (PCT) score using the Neural Population Dynamics Optimization Algorithm, without compromising the validity of the primary endpoint.

Methodology:

Initialization:

- Define the search space for the protocol parameters (e.g., number of visits, types of non-essential procedures, patient population criteria).

- Initialize a set of neural populations, where each individual represents a unique protocol design.

- Set the objective function to minimize the Total Complexity Score (TCS) as defined by the PCT [31].

Iteration and Evaluation:

- For each protocol design in the population, calculate the PCT score. This involves scoring each of the 26 questions across the 5 domains and summing the individual domain scores [31].

- Apply the three core strategies of NPDOA:

  - Attractor Trending: Drive the population towards the current best protocol designs (lowest TCS) to refine and exploit promising areas [16].

  - Coupling Disturbance: Introduce variations by having neural populations interact, pushing some designs away from current attractors to explore new, potentially simpler configurations [16].

  - Information Projection: Control the flow of information between neural populations to strategically balance the above two processes, transitioning from broad exploration to focused exploitation [16].

Termination and Output:

- The process iterates until a stopping criterion is met (e.g., a maximum number of iterations or no significant improvement in the TCS).

- The output is an optimized protocol design with a significantly reduced complexity score.
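The initialization, iteration, and termination steps above can be sketched in a few lines of Python. This is a simplified illustration, not the published NPDOA update equations: the attractor, disturbance, and projection rules below are assumptions chosen to mirror the three strategies, `toy_complexity` is a hypothetical stand-in for the PCT Total Complexity Score, and the returned evaluation count plays the role of the computational cost metric.

```python
import random

def toy_complexity(design):
    # Hypothetical stand-in for the PCT Total Complexity Score (TCS).
    return sum(x * x for x in design)

def npdoa_sketch(objective, dim, bounds, pop_size=20, max_iter=200,
                 patience=30, tol=1e-9, seed=0):
    """Illustrative NPDOA-style loop; the update rules are assumptions."""
    rng = random.Random(seed)
    lo, hi = bounds
    # Initialization: each neural population encodes one candidate design.
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    scores = [objective(p) for p in pop]
    evaluations = pop_size  # simple computational cost metric
    best_i = min(range(pop_size), key=lambda k: scores[k])
    best, best_score = pop[best_i][:], scores[best_i]
    stall = 0
    for t in range(max_iter):
        # Information projection (assumed form): weight shifts from
        # exploration (w = 0) towards exploitation (w close to 1).
        w = t / max_iter
        previous_best = best_score
        for i in range(pop_size):
            partner = pop[rng.randrange(pop_size)]
            cand = []
            for d in range(dim):
                # Attractor trending: pull towards the current best design.
                attract = rng.random() * (best[d] - pop[i][d])
                # Coupling disturbance: perturbation from another population.
                disturb = rng.gauss(0.0, 1.0) * (partner[d] - pop[i][d])
                step = w * attract + (1.0 - w) * disturb
                cand.append(min(hi, max(lo, pop[i][d] + step)))
            score = objective(cand)
            evaluations += 1
            if score < scores[i]:  # greedy acceptance keeps only improvements
                pop[i], scores[i] = cand, score
                if score < best_score:
                    best, best_score = cand[:], score
        # Termination: stop after `patience` iterations without improvement.
        stall = stall + 1 if previous_best - best_score <= tol else 0
        if stall >= patience:
            break
    return best, best_score, evaluations

best, score, evals = npdoa_sketch(toy_complexity, dim=4, bounds=(-5.0, 5.0))
print(f"best score {score:.4g} after {evals} evaluations")
```

In a real application, `toy_complexity` would be replaced by a function that scores a candidate protocol with the PCT, and the continuous design vector would need to be mapped to discrete protocol choices such as visit counts.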

The workflow below visualizes this multi-stage optimization process.

The logical relationships between the NPDOA's core strategies and their role in balancing exploration and exploitation are crucial for its function.

This technical support center provides troubleshooting guides and FAQs for researchers applying the Neural Population Dynamics Optimization Algorithm (NPDOA) and its complexity-reduction methods to the analysis and optimization of complex medication regimens.

FAQs and Troubleshooting Guides

FAQ Group 1: Understanding Medication Regimen Complexity (MRC)

Q1: What is Medication Regimen Complexity (MRC) and why is it a critical parameter in our computational models?

MRC refers to the multifaceted nature of a patient's medication plan, defined by the number of medications, their dosing frequencies, dosage forms, and additional administration directions [34]. In computational research, it serves as a key input variable. Higher MRC is associated with poorer glycemic control in most studies of diabetes patients and with reduced medication adherence, so quantifying it accurately is essential for predicting real-world therapeutic outcomes [34].

Q2: How does reducing MRC align with the objective function in NPDOA-based optimization?

The goal of NPDOA is to find an optimal solution by modeling neural dynamics [9]. When applied to MRC, the algorithm's objective function can be configured to minimize complexity (e.g., reducing pill burden or dosing frequency), subject to the constraint that clinical efficacy is maintained or improved. Simplification is linked to improved quality of life and increased treatment satisfaction, which are measurable outcomes of a successful optimization [34].

FAQ Group 2: Troubleshooting NPDOA Model Performance

Q1: Our NPDOA model is converging to a local optimum that recommends an overly simplistic, clinically ineffective regimen. How can we improve the search strategy?

This is a common challenge in balancing exploration and exploitation. The PMA (Power Method Algorithm), which shares conceptual ground with metaheuristic approaches like NPDOA, suggests incorporating stochastic geometric transformations and random perturbations during the exploration phase [9]. To avoid clinically invalid solutions, introduce hard constraints into your model based on pharmacokinetic/pharmacodynamic principles and established clinical guidelines.
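One way to express such a hard constraint is to make any regimen that fails an efficacy threshold infeasible, so the optimizer can never select it however simple it is. The sketch below is a minimal illustration under stated assumptions: `regimen_objective`, the `min_efficacy` threshold, and the candidate values are all hypothetical, and a real model would derive predicted efficacy from pharmacokinetic/pharmacodynamic simulation or clinical guidelines.

```python
import math

def regimen_objective(complexity, predicted_efficacy, min_efficacy):
    """Complexity score to minimize; infeasible regimens score +infinity."""
    if predicted_efficacy < min_efficacy:
        return math.inf  # hard constraint: never accept an ineffective regimen
    return complexity

# Illustrative, made-up candidates (not clinical data).
candidates = [
    {"name": "A", "complexity": 12.0, "efficacy": 0.85},
    {"name": "B", "complexity": 8.0, "efficacy": 0.81},
    {"name": "C", "complexity": 4.0, "efficacy": 0.55},  # simplest, but ineffective
]

best = min(
    candidates,
    key=lambda c: regimen_objective(c["complexity"], c["efficacy"], min_efficacy=0.80),
)
print(best["name"])
```

Here candidate C has the lowest complexity but violates the efficacy threshold, so the constrained objective selects B instead.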

Q2: The model's performance is highly sensitive to noisy patient adherence data. What preprocessing steps are recommended?

Data preprocessing is critical for handling real-world complexity. Follow this integrated workflow to improve data quality and model robustness:

Applying a Savitzky-Golay (SG) filter smooths temporal data and can improve model performance; in one study, the R² value increased from 0.160 to 0.632 after smoothing [35].
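To make the smoothing step concrete, the following is a minimal pure-Python sketch of a Savitzky-Golay filter using the standard 5-point quadratic kernel, with coefficients (-3, 12, 17, 12, -3) / 35. The function name `savgol_5pt` and the choice to leave the two edge points at each end unsmoothed are assumptions made for brevity; the window length and polynomial order used in [35] are not stated here. In practice, `scipy.signal.savgol_filter` offers configurable windows and edge handling.

```python
def savgol_5pt(values):
    """Smooth a sequence with the 5-point quadratic Savitzky-Golay kernel."""
    coeffs = (-3, 12, 17, 12, -3)  # normalised by 35
    out = list(values)  # simplification: first and last two points are left as-is
    for i in range(2, len(values) - 2):
        window = values[i - 2 : i + 3]
        out[i] = sum(c * v for c, v in zip(coeffs, window)) / 35
    return out

# Illustrative, made-up daily adherence fractions with one noisy spike.
adherence = [0.80, 0.82, 0.81, 0.30, 0.83, 0.84, 0.82]
print([round(v, 3) for v in savgol_5pt(adherence)])
```

Because the kernel fits a local quadratic, it reproduces smooth trends exactly while damping isolated spikes, which is why it reduces noise without flattening the underlying signal.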

FAQ Group 3: Clinical Validation and Error Prevention

Q1: During the clinical validation phase, we observed an increase in medication errors related to our optimized regimen. What are the common causes?

Medication errors are preventable events that can occur at any point in the medication use process [36]. The most common causes relevant to new regimens are detailed in Table 1. Analysis should focus on system failures rather than individual blame [36] [37].

Q2: What strategies can be built into the regimen design to prevent these errors?

Proactive error prevention should be a key output of your optimization model. Implement the following strategies:

- Simplify Regimens: Prioritize once-daily over multiple-daily dosing where therapeutically equivalent, as lower MRC is associated with improved adherence [34].

- Standardize Nomenclature: Avoid look-alike, sound-alike drug names in your recommendations to prevent dispensing errors [36] [37].

- Incorporate Patient-Specific Factors: For elderly patients or those with comorbidities, the American Diabetes Association (ADA) recommends simplifying regimens to reduce treatment burden and prevent complications like hypoglycemia [34].

Structured Data for Experimental Analysis

Table 1: Common Medication Error Types and Frequencies in System Validation

| Error Type | Description | Frequency in Acute Hospitals | Primary Cause |
|---|---|---|---|
| Prescribing Error | Incorrect drug, dose, or regimen selection [36]. | Nearly 50% of all medication errors [36]. | Illegible handwriting, inaccurate patient information [36] [37]. |
| Omission Error | Failure to administer a prescribed dose [36]. | N/A | Complex regimens, communication failures [36]. |
| Wrong Time Error | Administration outside a predefined time interval [36]. | N/A | Scheduling complexity, workload [36]. |
| Improper Dose Error | Administration of a dose different from prescribed [36]. | N/A | Miscalculations, preparation errors [36]. |
| Unauthorized Drug Error | Administration without a valid prescription [36]. | N/A | Documentation errors, protocol deviation [36]. |

N/A: Specific frequency not provided in the cited sources; these are nonetheless established error categories for monitoring.

Table 2: Impact of Medication Regimen Complexity (MRC) on Patient Outcomes

| Outcome Metric | Association with Higher MRC | Evidence Certainty (GRADE) |
|---|---|---|
| Glycemic Control | Poorer control in most studies [34]. | Conflicting, trend negative [34]. |
| Medication Adherence | Lower adherence (4 studies) [34]. | Consistent findings [34]. |
| Medication Burden & Diabetes-Related Distress | Greater burden and distress [34]. | Consistent findings [34]. |
| Quality of Life & Treatment Satisfaction | Improved with regimen simplification [34]. | Consistent findings [34]. |

The Scientist's Toolkit: Research Reagent Solutions

| Item/Category | Function in MRC Research |
|---|---|
| Medication Regimen Complexity Index (MRCI) | A validated tool to quantify complexity based on dosage form, frequency, and additional instructions [34]. |
| Simulated Patient Models | Digital avatars with varying demographics and comorbidities to test optimized regimens before clinical trials. |
| NPDOA Hyperparameter Optimization Suite | Computational package for fine-tuning algorithm parameters like neural population size and connection weights to balance exploration and exploitation [9] [11]. |
| SHAP (SHapley Additive exPlanations) | A method to interpret the output of machine learning models, crucial for explaining why a model recommends a specific regimen change [35]. |
| Savitzky-Golay Filter | A digital filter for data smoothing to reduce noise in temporal patient data without distorting the signal [35]. |

Experimental Protocol: Validating a Simplified Regimen

Objective: To compare the clinical outcomes and adherence rates of a current complex regimen versus an NPDOA-optimized simplified regimen.

Methodology:

- Patient Cohort: Recruit adults with type 2 diabetes on ≥5 chronic medications [34] [38].

- Intervention: Apply the NPDOA model to optimize and simplify the regimen. The model's logic for balancing simplification with efficacy is shown below.

- Control: Continue with the current complex regimen.

- Outcomes: Measure HbA1c (glycemic control), medication adherence (via pill count or electronic monitoring), and patient satisfaction (via surveys) over a 6-month period.

Analysis: Use statistical tests (e.g., t-tests, chi-square) to compare outcomes between groups. A successful intervention will show non-inferior glycemic control with significantly improved adherence and satisfaction in the simplified regimen group [34].
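The group comparison described in the analysis step could be sketched with the standard library alone. The example below computes Welch's t statistic for a continuous outcome (HbA1c) and a Pearson chi-square statistic for a 2x2 adherence table. All numbers are made-up placeholders rather than study data, and the helper names `welch_t` and `chi_square_2x2` are hypothetical. Note that a formal non-inferiority claim also requires a prespecified margin, which this sketch does not cover.

```python
import math
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic and approximate degrees of freedom for two samples."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))

# Illustrative, made-up HbA1c values (%) at 6 months; not study data.
hba1c_simplified = [7.1, 6.9, 7.4, 7.0, 7.2, 6.8]
hba1c_control = [7.3, 7.0, 7.5, 7.1, 7.4, 6.9]
t, df = welch_t(hba1c_simplified, hba1c_control)

# Rows: simplified, control; columns: adherent, non-adherent (toy counts).
chi2 = chi_square_2x2(42, 8, 30, 20)

# With 1 degree of freedom, chi2 > 3.841 corresponds to p < 0.05.
print(f"t = {t:.3f}, df = {df:.1f}, chi2 = {chi2:.3f}")
```

A statistics package would normally be used to obtain p-values and confidence intervals; the point here is only to show which quantities the comparison rests on.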

Enhancing New Product Development (NPD) Pipelines and Portfolio Management

Troubleshooting Guides

Guide 1: Troubleshooting Portfolio Imbalance and Strategic Misalignment

Problem Statement: The R&D portfolio is heavily weighted toward high-risk, long-term projects, creating potential revenue gaps and misalignment with strategic goals for near-term growth.

| Observed Symptom | Potential Root Cause | Recommended Action | Expected Outcome |
|---|---|---|---|
| Consistent long-term budget overruns | High-risk projects consuming disproportionate resources; "zombie" projects not being terminated [39]. | Conduct a portfolio review to categorize projects; create alternative portfolio scenarios to rebalance risk and value [39]. | Freed-up resources are reallocated to more promising projects; improved alignment with strategic financial objectives. |
| Pipeline cannot support short-term revenue targets | Lack of line extensions or lower-risk development paths; market volatility not accounted for in planning [40] [39]. | Use a prioritization framework (e.g., MoSCoW) for features and projects; explore accelerating specific products or acquiring external assets [40] [39]. | A more balanced portfolio with a mix of short, medium, and long-term value drivers. |
| Inability to compare project value across the portfolio | Siloed teams; inconsistent valuation metrics and data collection methods [39]. | Establish a centralized portfolio management solution for "apples-to-apples" project comparison using unified data layers [39]. | Enhanced transparency; more confident and data-driven investment trade-off decisions. |

Guide 2: Troubleshooting Computational Complexity in Analysis Workflows

Problem Statement: Analysis of high-dimensional biological data (e.g., for target identification) is computationally intensive, slowing down the early NPD stages and increasing costs.

| Observed Symptom | Potential Root Cause | Recommended Action | Expected Outcome |
|---|---|---|---|
| Gene expression analysis or protein network modeling is prohibitively slow [41]. | Use of non-optimized, generic algorithms for large-scale data analysis. | Apply problem-specific structural optimizations and code optimization methods to exploit the inherent structure of the biological model [42]. | Reduced computational load and faster time-to-insight for research data. |
| Integration of multi-attribute similarity networks for protein analysis is inefficient [41]. | Inefficient integration of disparate data sources and computational kernels. | Employ hybrid computational kernels and statistical techniques designed for integrating multiple data sources [41]. | More robust data representation and analysis, enabling more accurate predictions. |
| Molecular surface generation and visualization are delayed [41]. | Use of computationally expensive methods for 3D rendering and modeling. | Implement optimized algorithms, such as Level Set methods, for efficient molecular surface generation [41]. | Accelerated computational modeling and visualization tasks. |

Frequently Asked Questions (FAQs)

Q1: What are the most common challenges in managing a pharmaceutical R&D portfolio?

The primary challenges include balancing a portfolio of projects with extreme risks and rewards across long development cycles, ensuring strategic alignment amid market changes, and making data-driven decisions to stop underperforming projects and promote winners. Structural factors like costly clinical trials and the risk of late-stage failure make effective portfolio management critical [39].

Q2: How can computational complexity reduction be applied in drug discovery?

In bioinformatics, complexity reduction is achieved by developing and applying optimized computational techniques. This includes using machine learning for feature extraction from protein sequences, applying statistical modeling to integrate and analyze multiple data sources like gene expression arrays and protein-protein interaction networks, and employing efficient algorithms for tasks like molecular surface generation [41].

Q3: Our team struggles with workflow dependencies that slow down development. How can this be addressed?

Workflow dependencies, such as a development team waiting for a design prototype, are a common product development challenge. Solutions include establishing clear review cycles with interdepartmental teams and adopting methodologies like dual-track development, which emphasizes continuous delivery to reduce bottlenecks and improve harmony between design and development teams [40].