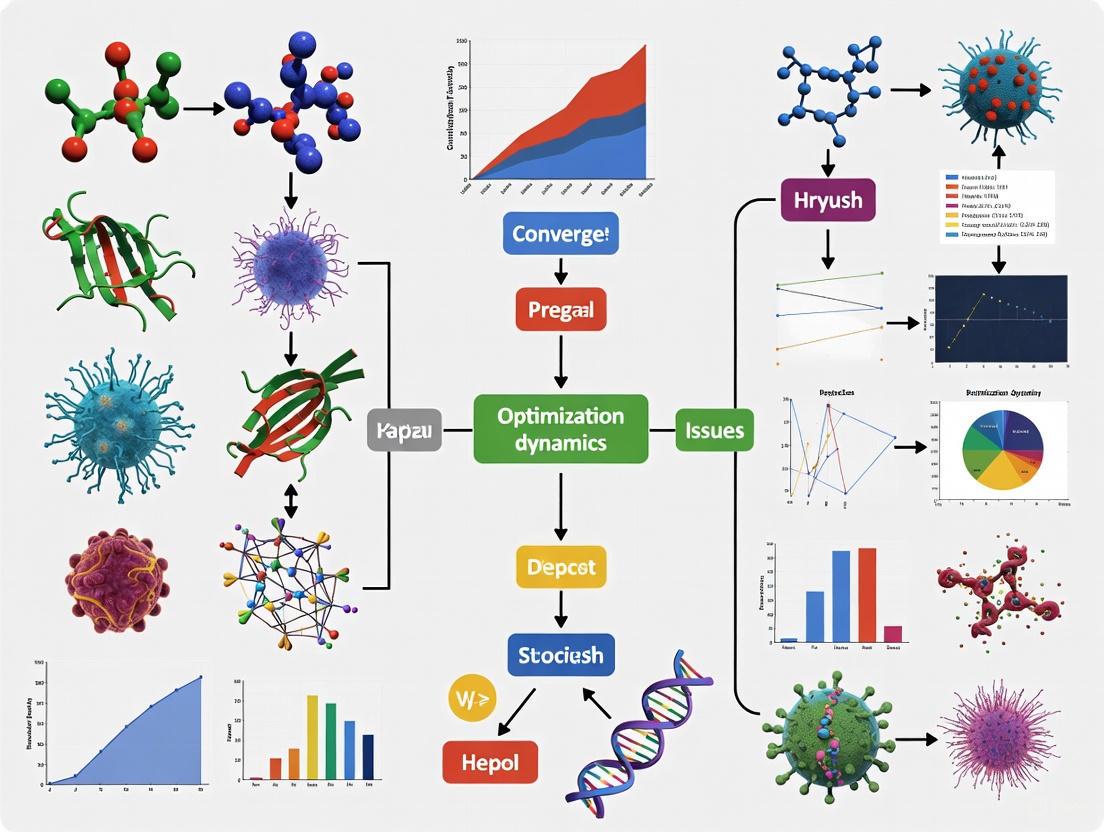

Overcoming Convergence Issues in Neural Population Dynamics: From Biological Constraints to Optimization Algorithms

Abstract

This article explores the critical challenge of convergence issues in neural population dynamics optimization, a key area intersecting computational neuroscience and machine learning. We first establish the foundational principles of neural population dynamics and their inherent constraints, as revealed by experimental neuroscience. The discussion then progresses to novel methodological frameworks, including brain-inspired meta-heuristic algorithms and geometric deep learning approaches, designed to improve convergence. A dedicated troubleshooting section analyzes common pitfalls like local optima entrapment and premature convergence, offering practical optimization strategies. Finally, we present rigorous validation paradigms and comparative analyses of state-of-the-art methods, providing researchers and drug development professionals with a comprehensive resource for addressing convergence challenges in both biological network modeling and AI-driven drug discovery applications.

The Fundamental Challenge: Why Neural Population Dynamics Resist Arbitrary Optimization

Neural population dynamics is a computational framework for understanding how interconnected networks of neurons collectively process information to drive perception, cognition, and behavior. This approach examines how the coordinated activity of neural populations evolves over time, forming trajectories in a high-dimensional state space that implement specific computations through their temporal structure [1] [2].

The core mathematical formulation represents neural population dynamics as a dynamical system where the neural population state vector x(t), representing the firing rates of N neurons at time t, evolves according to the equation: dx/dt = f(x(t), u(t)). Here, f is a function capturing the intrinsic circuit dynamics shaped by network connectivity, and u(t) represents external inputs to the circuit [1]. This framework has been successfully applied to understand diverse neural functions including motor control [2], decision-making [2], timing [2], and working memory [2].
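As a minimal sketch of this formulation, the state equation dx/dt = f(x(t), u(t)) can be integrated numerically with a forward-Euler step. The function name `simulate`, the leaky linear form chosen for f, and all parameter values below are illustrative assumptions, not part of the cited work.

```python
import numpy as np

def simulate(f, x0, inputs, dt=0.01):
    """Forward-Euler integration of dx/dt = f(x, u), one step per input sample."""
    x = np.asarray(x0, dtype=float)
    trajectory = [x.copy()]
    for u in inputs:
        x = x + dt * f(x, u)
        trajectory.append(x.copy())
    return np.stack(trajectory)  # shape: (len(inputs) + 1, N)

# Hypothetical circuit: leaky linear rate network, f(x, u) = -x + W x + u
rng = np.random.default_rng(0)
N = 5
W = 0.1 * rng.standard_normal((N, N))  # weak random connectivity (assumed)
f = lambda x, u: -x + W @ x + u

inputs = np.zeros((1000, N))           # no external drive in this example
traj = simulate(f, rng.standard_normal(N), inputs)
```

Each row of `traj` is the population state at one time step, so the array as a whole is a neural trajectory in the N-dimensional state space. With weak connectivity and no input, the activity relaxes toward the fixed point at the origin.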

Frequently Asked Questions (FAQs)

Q1: What are the most common convergence issues in neural population dynamics optimization?

The most frequent convergence issues in neural dynamics optimization stem from improper balancing between exploration and exploitation phases, premature convergence to local optima, and inadequate parameter settings that fail to capture the underlying biological constraints.

Table: Common Convergence Issues and Their Manifestations

| Issue Type | Typical Symptoms | Common In Algorithms |
|---|---|---|
| Premature Convergence | Rapid performance plateau, limited solution diversity | PSO, GA, Physics-inspired algorithms |
| Exploration-Exploitation Imbalance | Inability to escape local optima or refine promising solutions | Classical SI algorithms, Mathematics-inspired algorithms |
| Parameter Sensitivity | Widely varying performance across problems | Algorithms requiring extensive hyperparameter tuning |
| Computational Complexity | Prohibitive runtime for high-dimensional problems | WOA, SSA, WHO with extensive randomization |

Q2: How can I validate that my optimization algorithm accurately captures biological neural dynamics?

Biological validation requires both computational benchmarks and empirical consistency checks. For motor cortex dynamics, experimental studies show that natural neural trajectories are remarkably constrained and difficult to violate, even when animals are directly challenged to do so through brain-computer interfaces [2]. Your optimized dynamics should demonstrate similar robustness. Additionally, leverage interpretability tools like MARBLE (MAnifold Representation Basis LEarning) to compare your algorithm's latent representations with experimental neural recordings across conditions and animals [3].

Q3: What metrics should I use to evaluate the performance of neural population dynamics optimization?

A multi-faceted evaluation approach is essential, combining optimization performance metrics with dynamical systems analysis:

Table: Key Evaluation Metrics for Neural Dynamics Optimization

| Metric Category | Specific Metrics | Interpretation Guidance |
|---|---|---|
| Optimization Performance | Convergence rate, Solution quality (fitness), Population diversity | Compare against benchmark problems with known optima |
| Biological Plausibility | Trajectory smoothness, Fixed point structure, Dynamical richness | Validate against experimental neural recordings |
| Computational Efficiency | Wall-clock time, Memory usage, Scaling with dimensionality | Critical for large-scale problems |
| Generalization | Performance across diverse problems, Sensitivity to parameters | Tests robustness beyond training settings |

Troubleshooting Guides

Problem 1: Premature Convergence in Neural Dynamics Optimization

Symptoms: The algorithm rapidly stagnates on suboptimal solutions without improving despite continued iterations. Population diversity decreases too quickly.

Diagnosis:

- Monitor population diversity metrics throughout optimization

- Check if coupling disturbance mechanisms are effectively maintaining exploration [4]

- Verify that information projection strategy properly regulates exploration-exploitation transitions [4]

Solutions:

- Implement or strengthen coupling disturbance strategies to deviate neural populations from attractors [4]

- Increase population size and implement diversity maintenance mechanisms

- Adaptively adjust parameters to balance global and local search

- For JKO-based methods, ensure proper time discretization and energy functional formulation [5]
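The diversity monitoring recommended in the diagnosis can be sketched as follows. The metric (mean distance to the population centroid), the function names, and the stagnation thresholds are assumptions chosen for illustration; other diversity measures may suit a given algorithm better.

```python
import numpy as np

def population_diversity(population):
    """Mean Euclidean distance of candidate solutions from the population centroid."""
    population = np.asarray(population, dtype=float)
    centroid = population.mean(axis=0)
    return float(np.linalg.norm(population - centroid, axis=1).mean())

def is_stagnating(diversity_history, window=20, threshold=1e-3):
    """Flag stagnation when diversity stayed below `threshold` for the last `window` iterations."""
    recent = diversity_history[-window:]
    return len(recent) == window and max(recent) < threshold

# Usage: record diversity once per iteration and check it periodically
history = [population_diversity([[0.0, 0.0], [2.0, 0.0]])]
```

If `is_stagnating` fires while the best fitness is still far from the target, that pattern is consistent with premature convergence and suggests strengthening the exploration mechanisms listed above.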

Problem 2: Inability to Capture Biological Realism in Optimized Dynamics

Symptoms: Optimized dynamics lack the temporal structure, constraints, or computational properties observed in experimental neural recordings.

Diagnosis:

- Compare fixed point structure with experimental observations

- Check if neural trajectories can be volitionally altered (biological dynamics should be constrained) [2]

- Verify consistency across different projections of the neural state space [2]

Solutions:

- Incorporate dynamical constraints observed in experimental studies: neural activity time courses are difficult to violate volitionally [2]

- Implement manifold learning to ensure dynamics evolve on biologically plausible low-dimensional subspaces [3]

- Use geometric deep learning to map local flow fields into interpretable latent representations [3]

- Validate against motor cortex experiments showing direction-dependent neural trajectories in high-dimensional space [2]

Problem 3: High Computational Complexity in Population Dynamics Simulation

Symptoms: Simulation time becomes prohibitive for large populations or high-dimensional problems, limiting practical application.

Diagnosis:

- Profile code to identify computational bottlenecks

- Check if dimensionality reduction opportunities are being utilized

- Evaluate whether approximation methods could maintain accuracy while reducing cost

Solutions:

- Implement exact mean-field reductions for spiking neuron networks when possible [6]

- Use the JKO scheme for efficient time discretization of Wasserstein gradient flows [5]

- Leverage MARBLE's approach for representing dynamics through local flow fields rather than full state evolution [3]

- Apply dimensionality reduction techniques (PCA, GPFA) to work with latent representations [2]

Experimental Protocols & Methodologies

Protocol 1: Testing Flexibility of Neural Trajectories Using BCI

Purpose: To determine whether neural activity time courses reflect flexible cognitive strategies or constrained network dynamics [2].

Materials:

- Multi-electrode array implanted in motor cortex (e.g., ~90 neural units in rhesus monkeys)

- Real-time neural signal processing system (e.g., causal Gaussian Process Factor Analysis for 10D latent state extraction)

- Brain-computer interface with customizable mapping from neural states to cursor position

- Visual display for target presentation and cursor feedback

Procedure:

- Record baseline neural activity during a two-target BCI task with intuitive "movement-intention" mapping

- Identify natural neural trajectories between targets in the high-dimensional state space

- Compute a "separation-maximizing" projection that reveals direction-dependent neural paths

- Provide visual feedback in this new projection and assess whether neural trajectories persist

- Directly challenge animal to produce time-reversed neural trajectories

- Quantify adherence to natural dynamics versus ability to generate altered trajectories

Interpretation: If neural trajectories are constrained by underlying network mechanisms, animals will be unable to volitionally violate natural time courses despite strong incentives [2].

Figure: Neural Trajectory Flexibility Testing Protocol

Protocol 2: MARBLE Framework for Interpretable Neural Dynamics Representation

Purpose: To learn interpretable latent representations of neural population dynamics that enable comparison across conditions, sessions, and animals [3].

Materials:

- Neural firing rate data (multiple trials across conditions)

- Computational implementation of MARBLE framework

- User-defined labels of experimental conditions for local feature extraction

Procedure:

- Input neural firing rates {x(t; c)} for trials under each condition c

- Represent local dynamical flow fields over the underlying neural manifolds

- Approximate unknown manifold using proximity graph construction

- Decompose dynamics into local flow fields (LFFs) around each neural state

- Map LFFs to latent vectors using unsupervised geometric deep learning

- Compute optimal transport distances between latent representations of different conditions

- Identify consistent latent representations across networks and animals

Key Steps:

- Gradient filter layers for p-th order approximation of LFFs

- Inner product features for embedding invariance

- Multilayer perceptron for latent vector generation

- Unsupervised training using manifold continuity as contrastive objective

Figure: MARBLE Representation Learning Pipeline
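The first steps of the procedure (proximity graph construction and extraction of local flow fields) can be illustrated with plain NumPy. This is not the MARBLE implementation or its API: the function names, the k-nearest-neighbour graph, and the finite-difference velocities are assumptions, and the gradient filter layers and contrastive training are omitted entirely.

```python
import numpy as np

def knn_graph(states, k=5):
    """Indices of the k nearest neighbours of each state (excluding the state itself)."""
    dists = np.linalg.norm(states[:, None, :] - states[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)
    return np.argsort(dists, axis=1)[:, :k]

def local_flow_fields(trajectory, k=5):
    """Return states, their finite-difference velocities, and each state's neighbour velocities."""
    states = trajectory[:-1]
    velocities = np.diff(trajectory, axis=0)   # approximate flow vector at each state
    neighbours = knn_graph(states, k)
    return states, velocities, velocities[neighbours]  # last array: (T - 1, k, D)

# Hypothetical 2D trajectory: one turn around a circle
t = np.linspace(0.0, 2.0 * np.pi, 101)
trajectory = np.column_stack([np.cos(t), np.sin(t)])
states, velocities, lffs = local_flow_fields(trajectory, k=5)
```

Each slice `lffs[i]` collects the flow vectors in the graph neighbourhood of state `i`, which is the kind of local sample a learned model would then map to a latent vector.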

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential Computational Tools for Neural Population Dynamics Research

| Tool/Resource | Type | Primary Function | Application Context |
|---|---|---|---|
| NPDOA Framework [4] | Optimization Algorithm | Brain-inspired metaheuristic with attractor trending, coupling disturbance, and information projection strategies | Solving complex optimization problems with balanced exploration-exploitation |
| MARBLE [3] | Representation Learning Method | Geometric deep learning for interpretable latent representations of neural dynamics | Comparing dynamics across conditions, sessions, and animals |
| JKO Scheme [5] | Optimization Framework | Time discretization of Wasserstein gradient flows for population dynamics | Learning energy functionals from population-level data without trajectory information |
| Exact Mean-Field Models [6] | Analytical Tool | Deriving population-level equations from spiking neuron networks | Studying synchronization phenomena and large-scale brain rhythms |
| Gaussian Process Factor Analysis (GPFA) [2] | Dimensionality Reduction | Extracting low-dimensional latent states from high-dimensional neural recordings | Brain-computer interface applications and neural trajectory visualization |
| θ-Neuron/QIF Models [6] | Spiking Neuron Model | Biophysically plausible neuron modeling with exact mean-field reductions | Network studies of synchronization and population dynamics |

Advanced Optimization Frameworks

Neural Population Dynamics Optimization Algorithm (NPDOA)

The NPDOA is a novel brain-inspired metaheuristic that directly translates principles of neural computation to optimization frameworks [4]. It implements three core strategies:

Attractor Trending Strategy: Drives neural populations toward optimal decisions, ensuring exploitation capability by converging toward stable neural states associated with favorable decisions [4]

Coupling Disturbance Strategy: Deviates neural populations from attractors through coupling with other neural populations, improving exploration ability and preventing premature convergence [4]

Information Projection Strategy: Controls communication between neural populations, enabling smooth transition from exploration to exploitation phases throughout the optimization process [4]

This approach addresses fundamental limitations of existing metaheuristic categories: premature convergence in evolutionary algorithms, entrapment in local optima in swarm intelligence algorithms, and exploration-exploitation imbalance in physics-inspired algorithms [4].
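To make the interplay of the three strategies concrete, here is a toy search loop in their spirit. It does not reproduce the update equations published for NPDOA [4]: the function name `npdoa_like_search`, the linear schedule for the projection weight, the random pairing of populations, and the greedy acceptance rule are all simplifying assumptions.

```python
import numpy as np

def npdoa_like_search(objective, dim, n_pop=30, n_iter=200, bounds=(-5.0, 5.0), seed=0):
    """Toy minimizer loosely inspired by the three NPDOA strategies (not the published updates)."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    pop = rng.uniform(lo, hi, size=(n_pop, dim))
    fitness = np.apply_along_axis(objective, 1, pop)
    for t in range(n_iter):
        best = pop[np.argmin(fitness)].copy()
        # Information projection (assumed linear schedule): 0 = explore, 1 = exploit
        w = t / max(n_iter - 1, 1)
        # Attractor trending: pull each population toward the current best state
        attract = rng.random((n_pop, 1)) * (best - pop)
        # Coupling disturbance: perturb each population via a randomly paired partner
        partners = pop[rng.permutation(n_pop)]
        disturb = rng.standard_normal((n_pop, dim)) * (partners - pop)
        candidates = np.clip(pop + w * attract + (1.0 - w) * disturb, lo, hi)
        cand_fit = np.apply_along_axis(objective, 1, candidates)
        improved = cand_fit < fitness          # greedy acceptance (assumption)
        pop[improved] = candidates[improved]
        fitness[improved] = cand_fit[improved]
    i = int(np.argmin(fitness))
    return pop[i], float(fitness[i])

# Usage on a simple benchmark (sphere function, optimum at the origin)
best_x, best_f = npdoa_like_search(lambda x: float(np.sum(x**2)), dim=3)
```

Early iterations are dominated by the disturbance term, which keeps the populations spread out; later iterations are dominated by the attractor term, which refines the best solution found so far.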

JKO Scheme for Population Dynamics Learning

The Jordan-Kinderlehrer-Otto (JKO) scheme provides a variational approach to modeling population dynamics through Wasserstein gradient flows [5]. The recent iJKOnet framework combines JKO with inverse optimization to learn population dynamics from observed marginal distributions at discrete time points, which is particularly valuable when individual trajectory data is unavailable [5].
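For reference, a single JKO step in its standard form can be written as below. The symbols are notation introduced here rather than taken from the source: ρ_k is the population distribution at step k, τ is the time step, E is the energy functional, and W_2 is the 2-Wasserstein distance.

```latex
\rho_{k+1} \;=\; \operatorname*{arg\,min}_{\rho}\;
  \mathcal{E}(\rho) \;+\; \frac{1}{2\tau}\, W_2^2(\rho, \rho_k)
```

Each step trades off lowering the energy against staying close, in the Wasserstein sense, to the previous distribution. As τ → 0 the sequence of minimizers approximates the Wasserstein gradient flow of E.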

Key Advantages:

- Handles population-level data without requiring individual trajectory information

- Models dynamics as a sequence of distributions minimizing total energy functional

- Suitable for applications with destructive sampling (e.g., single-cell genomics) or aggregate observations (e.g., financial markets, crowd dynamics) [5]

Figure: JKO-Based Dynamics Learning Framework

Troubleshooting Guide: Frequently Asked Questions

FAQ 1: My experimental manipulation failed to alter the neural trajectory as predicted. What could be the cause?

This is a common challenge when investigating dynamical constraints. The underlying neural network connectivity may be imposing a fundamental limitation on the possible sequences of neural population activity. In a key experiment, researchers used a brain-computer interface (BCI) to challenge subjects to volitionally alter or even time-reverse their natural neural trajectories. Despite strong incentives and visual feedback, subjects were unable to violate these natural activity time courses. This indicates that the failure to alter a trajectory may not be a technical flaw, but rather evidence of a successful experimental probe, revealing that the observed neural dynamics are robust and constrained by the network itself [2] [7]. To troubleshoot, verify that your manipulation is not being "absorbed" by the network's inherent flow field by testing its effect on multiple, distinct neural trajectories.

FAQ 2: How can I determine if a neural population exhibits low-dimensional dynamics?

A primary method is to perform dimensionality reduction (e.g., using Gaussian process factor analysis) on your recorded population activity and examine the variance explained by the top latent dimensions. If a small number of dimensions capture most of the variance, this suggests low-dimensional dynamics. Furthermore, you can fit a low-rank autoregressive model to the neural data; if a model with a rank significantly lower than the total number of neurons accurately predicts future neural states, this is strong evidence for low-dimensional structure [8]. The singular value spectrum of the neural population activity matrix can also be a useful visual guide, often showing a few dominant dimensions [8].
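The variance-explained check can be sketched with a singular value decomposition, which is equivalent to PCA on mean-centered data. PCA is used here as a simple stand-in for GPFA, and the function name, the 90% threshold, and the synthetic data are illustrative assumptions.

```python
import numpy as np

def dims_for_variance(activity, fraction=0.9):
    """Number of principal dimensions needed to explain `fraction` of the variance.

    `activity` has shape (time_bins, neurons).
    """
    centered = activity - activity.mean(axis=0)
    s = np.linalg.svd(centered, compute_uv=False)     # singular value spectrum
    explained = np.cumsum(s**2) / np.sum(s**2)        # cumulative variance explained
    n_dims = int(np.searchsorted(explained, fraction) + 1)
    return n_dims, explained

# Synthetic example: 50 "neurons" driven by 3 latent signals plus weak noise
rng = np.random.default_rng(0)
latents = rng.standard_normal((500, 3))
mixing = rng.standard_normal((3, 50))
activity = latents @ mixing + 0.01 * rng.standard_normal((500, 50))
n_dims, explained = dims_for_variance(activity)
```

Plotting `explained` against the dimension index gives the visual guide mentioned above: a sharp elbow after a few dimensions points to low-dimensional structure.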

FAQ 3: What controls are critical for interpreting neural circuit manipulation experiments?

It is essential to include controls that rule out indirect effects of your manipulation. A key pitfall is attributing a change in a specific behavior directly to the manipulated circuit, when the manipulation may have induced a more general state change (e.g., a seizure, altered arousal, or motor artifact). Always:

- Monitor for Off-Target Effects: Use immediate early gene expression (e.g., c-Fos) or other functional markers to check for unexpected, widespread activation outside your target population [9].

- Assess Behavioral Specificity: Employ a battery of behavioral tasks to determine if the manipulation's effect is specific to one behavior or impacts multiple latent variables (e.g., motivation, sensation, motor output) [9].

- Validate Causal Links: Ensure that the timing of the neural manipulation aligns with the proposed computational role of the circuit during the behavior.

Summarized Experimental Data

Table 1: Key Quantitative Findings from Empirical Studies of Neural Dynamical Constraints

| Experimental Paradigm | Key Quantitative Result | Implication for Dynamical Constraints |
|---|---|---|
| BCI Challenge to Reverse Neural Trajectories [2] [7] | Subjects were unable to volitionally traverse natural neural activity time courses in a time-reversed manner, despite ~100 trial attempts. | Neural population dynamics are obligatory on short timescales, strongly constrained by the underlying network. |
| Low-Rank Dynamical Model Fit [8] | A low-rank autoregressive model accurately predicted neural population responses to photostimulation, with dynamics residing in a subspace of lower dimension than the total recorded neurons. | Neural population dynamics are intrinsically low-dimensional, simplifying their identification and modeling. |
| Parameter Tuning in Dynamical Models [10] | In a Zeroing Neural Network (ZNN), increasing the fixed parameter γ from 1 to 1000 reduced convergence time from 0.15 s to 0.15×10⁻⁵ s for a specific task. | The convergence speed of engineered neural dynamics can be directly and predictably controlled by model parameters. |

Table 2: Research Reagent Solutions for Probing Neural Dynamics

| Reagent / Tool | Primary Function | Key Consideration |
|---|---|---|
| Two-Photon Holographic Optogenetics [8] | Precise photostimulation of experimenter-specified groups of individual neurons to causally probe network dynamics. | Enables active learning of informative stimulation patterns for efficient model identification. |
| Brain-Computer Interface (BCI) [2] | Provides real-time visual feedback of neural population activity, allowing experimenters to challenge subjects to alter their own neural dynamics. | A causal tool for testing the flexibility and constraints of neural trajectories in a closed loop. |
| Soft Electrode Systems [11] | Neural stimulation and recording with materials that conform to and mimic neural tissue for improved biointegration and long-term functionality. | Reduces tissue damage and inflammatory responses, leading to more stable and reliable chronic recordings. |
| Viral Tracers (Anterograde/Retrograde) [12] | Identification of efferent and afferent connectomes of selected subpopulations of neurons to map circuit-level architecture. | Critical for correlating observed dynamics with the underlying anatomical connectivity that may give rise to them. |

Detailed Experimental Protocols

Protocol 1: Testing the Robustness of Neural Trajectories Using a BCI

This protocol is designed to empirically test whether observed neural trajectories are flexible or constrained by the underlying network [2].

- Neural Recording & Decoding: Implant a multi-electrode array in the target brain region (e.g., primary motor cortex). Record from a large population of neural units (~90). Use a causal dimensionality reduction technique (e.g., Gaussian process factor analysis) to project the high-dimensional neural activity into a lower-dimensional latent state (e.g., 10D).

- Establish a Baseline Mapping: Create an intuitive BCI mapping (e.g., a "movement-intention" mapping) that projects the latent neural state to the 2D position of a computer cursor. Have the subject perform a simple task, such as moving the cursor between two targets.

- Identify Natural Neural Trajectories: Analyze the neural latent states during the baseline task to identify the characteristic, time-ordered sequence of population activity patterns (the neural trajectory) for each movement direction.

- Identify an Informative Projection: Find a 2D projection of the latent state space (e.g., a "separation-maximizing" projection) where the neural trajectories for different directions (e.g., A→B vs. B→A) are distinct and exhibit clear directional curvature.

- Challenge the Trajectory: Change the BCI mapping to provide visual feedback in the informative projection. Then, challenge the subject to perform the same two-target task but now also try to "straighten" the curved trajectory or even traverse it in a time-reversed order. Offer strong incentives (e.g., fluid reward) for success.

- Analysis: Compare the challenged neural trajectories to the baseline trajectories. A failure to alter the trajectory, despite incentive and feedback, is evidence for a dynamical constraint.

Figure: Experimental Workflow for BCI Trajectory Challenge
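One way to quantify the final analysis step is to ask whether a challenged trajectory lies closer to the baseline trajectory or to its time-reversed copy. The `reversal_index` below is a hypothetical metric introduced purely for illustration, not the statistic used in the cited study, and it assumes both trajectories have been resampled to the same number of time points.

```python
import numpy as np

def reversal_index(challenge, baseline):
    """Hypothetical index in [-1, 1]: -1 = matches baseline, +1 = matches time-reversed baseline.

    Both arrays have shape (time_points, latent_dims) with identical time_points.
    """
    d_forward = np.linalg.norm(challenge - baseline)
    d_reversed = np.linalg.norm(challenge - baseline[::-1])
    return float((d_forward - d_reversed) / (d_forward + d_reversed))

# Synthetic curved baseline in a 2D projection (values are made up)
t = np.linspace(0.0, 1.0, 50)
baseline = np.column_stack([t, t * (1.0 - t) ** 2])

unchanged = reversal_index(baseline, baseline)        # subject reproduces natural path
reversed_ = reversal_index(baseline[::-1], baseline)  # subject fully reverses the path
```

Under a dynamical constraint, challenged trials would be expected to stay near the negative end of this index despite incentives to reverse.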

Protocol 2: Active Learning of Neural Population Dynamics via Photostimulation

This protocol uses targeted perturbations to efficiently identify the dynamical system governing a neural population [8].

- Prepare Neural Population: Express calcium indicators (e.g., GCaMP) and optogenetic actuators (e.g., Channelrhodopsin) in a population of neurons in a target region (e.g., mouse motor cortex).

- Acquire Baseline Data: Use two-photon calcium imaging to record activity from 500-700 neurons at a high frame rate (e.g., 20 Hz). Simultaneously, deliver photostimulation pulses to randomly selected groups of 10-20 neurons across many trials (~2000 trials).

- Fit a Preliminary Model: Fit a low-rank linear dynamical systems model to the initial dataset of neural responses to photostimulation.

- Active Stimulation Design: Use an active learning algorithm to select the next photostimulation pattern. The algorithm chooses patterns expected to be most informative for reducing uncertainty in the dynamical model parameters, rather than selecting patterns randomly.

- Iterate and Update: Deliver the chosen photostimulation, record the neural response, and update the dynamical model. Repeat the stimulation-design and update steps iteratively.

- Validation: Compare the predictive power of the model learned through active stimulation to a model learned from a passively collected dataset of equivalent size.

Figure: Active Learning Loop for System Identification
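The model-fitting step can be illustrated with a reduced-rank least-squares fit of an autoregressive model x[t+1] ≈ x[t] A. This sketch makes several simplifying assumptions: it omits the photostimulation input term and the active-learning selection rule, it uses reduced-rank regression as one possible estimator, and the function name and synthetic data are hypothetical.

```python
import numpy as np

def fit_low_rank_ar(X, rank):
    """Reduced-rank least-squares fit of x[t+1] ≈ x[t] @ A with rank(A) <= rank.

    `X` has shape (time_steps, neurons).
    """
    past, future = X[:-1], X[1:]
    A_full, *_ = np.linalg.lstsq(past, future, rcond=None)   # unconstrained fit
    # Project onto the top `rank` right singular vectors of the fitted values
    _, _, Vt = np.linalg.svd(past @ A_full, full_matrices=False)
    projector = Vt[:rank].T @ Vt[:rank]
    return A_full @ projector

# Synthetic data: 20 "neurons" whose dynamics matrix has rank 3
rng = np.random.default_rng(0)
n, r, T = 20, 3, 2000
A_true = 0.5 * rng.standard_normal((n, r)) @ rng.standard_normal((r, n)) / np.sqrt(n)
X = np.zeros((T, n))
for step in range(T - 1):
    X[step + 1] = X[step] @ A_true + rng.standard_normal(n)

A_hat = fit_low_rank_ar(X, rank=r)
```

Comparing held-out prediction error across candidate ranks is one way to judge how many dimensions the dynamics actually occupy.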

Conceptual Frameworks

Dynamical Systems in Neural Circuits

Neural circuits are nonlinear dynamical systems that can be described by coupled differential equations. The following diagram illustrates several fundamental dynamical paradigms that can arise from different network configurations, even with just a few neurons [13].

Figure: Classes of Neural Dynamical Systems

Frequently Asked Questions (FAQs)

1. What does an "attractor landscape" refer to in neural population dynamics?

The attractor landscape is a conceptual framework for understanding how neural population activity evolves over time. Imagine a ball rolling on a hilly surface: the valleys (attractor basins) represent stable brain states, such as a specific decision or memory, while the hills represent energy barriers that make transitioning between states difficult. The landscape's topography defines the system's dynamics, determining how easily the brain can switch between different activity patterns [14].

2. Why is my neural network model getting "stuck" and failing to switch cognitive states?

This is a classic sign of overly deep attractor basins. Causal evidence shows that neuromodulatory systems, like the cholinergic input from the nucleus basalis of Meynert (nbM), are critical for stabilizing these landscapes. Inhibiting the nbM leads to a flattening of the landscape and a decrease in the energy barriers for state transitions. If your model is too stable, it may lack the biological mechanisms that appropriately modulate the depth and stability of attractor basins [14]. Furthermore, research indicates that neural trajectories are intrinsically constrained by the underlying network connectivity, making it difficult to force the system into non-native activity sequences [2].

3. My model lacks behavioral flexibility and is overly stable. How can I model a more "nimble" brain?

A nimble brain requires a specific basin structure. Studies of chimera states (mixed synchronous and asynchronous activity) suggest that fractal or "riddled" basin boundaries are a key mechanism. In this configuration, the boundaries between different attractors are highly intermingled. This means that even a small perturbation, such as a minor sensory input, can be enough to push the system from one stable state to another, enabling rapid switching [15].

4. I've observed hysteresis (asymmetry) in state transitions during my experiments. Is this expected?

Yes, asymmetric neural dynamics during state transitions are a documented phenomenon. For instance, induction of and emergence from unconsciousness under anesthesia follow different neural paths. This "neural inertia" shows that the brain resists returning to a conscious state, meaning the energy landscape is not symmetric for forward and reverse transitions. This asymmetry cannot be explained by pharmacokinetics alone and is likely an intrinsic property of the neural dynamics [16].

5. How do "higher-order interactions" (non-pairwise couplings) affect the attractor landscape?

Higher-order interactions can significantly remodel the global landscape. They typically lead to the formation of new, deeper attractor basins. While this increases the linear stability of the system within a basin, it also makes the basins narrower. The overall effect is that fewer initial conditions will lead to a particular attractor, and the system may become more likely to jump between states [17].

Troubleshooting Guides

Problem 1: Failure to Converge to Stable Attractor States

Symptoms: Neural population activity does not settle into a persistent, stable pattern. Activity is overly noisy and fails to represent a decision or memory.

| Potential Cause | Diagnostic Steps | Solution & Experimental Protocol |
|---|---|---|
| Insufficient recurrent excitation within selective neural populations [18]. | Analyze the connectivity matrix. Measure the strength of NMDA receptor-mediated self-excitation in model populations. | Systematically increase the recurrent connectivity weight (w+) in your model. For a spiking network model, a value of ~1.61 (dimensionless) can support bistable decision states [18]. |
| Low global inhibition leading to uncontrolled, network-wide activity [18]. | Measure the firing rate of inhibitory interneuron populations. If it is low during decision epochs, inhibition may be insufficient. | Calibrate the strength of GABAergic inhibition. Ensure reciprocal inhibition between competing selective populations is strong enough to implement a winner-take-all mechanism. |
| Excessive noise overwhelming the signal. | Calculate the signal-to-noise ratio of your inputs or intrinsic neural noise. | Adjust the background input rates to a level that allows for spontaneous firing but does not disrupt attractor states. For a decision-making task, use Poisson-derived inputs with a defined motion coherence (c) and strength (μ) [18]. |

Problem 2: Inability to Transition Between States When Required

Symptoms: The model successfully reaches a stable state but cannot exit it to switch to an alternative state, even when a stimulus or task demands it.

| Potential Cause | Diagnostic Steps | Solution & Experimental Protocol |
|---|---|---|
| Excessively deep energy wells due to over-stabilization [14]. | Quantify the energy barrier as the negative log probability of state transitions, i.e., ln(1 / Probability). Compare to control conditions [14]. | Modulate the landscape via simulated neuromodulatory intervention. In a macaque model, local inactivation of the nucleus basalis of Meynert (nbM) with the GABAA agonist muscimol was shown to flatten the landscape and reduce energy barriers [14]. |
| Lack of intermediate "double-up" states that facilitate transitions [18]. | Search for periods of simultaneous, elevated activity in both competing selective populations during transition attempts. | Model parameters can be tuned to allow for tristable landscapes that include a brief "double-up" state. This state acts as a transition hub, increasing the probability of switching between the two primary decision attractors [18]. |
| Non-fractal, simple basin boundaries that require large perturbations to cross [15]. | Map the basin of attraction for different states. Simple, smooth boundaries indicate a lack of intermingling. | Introduce network structures or coupling that promote chimera states (patchy synchrony). This can create fractal basin boundaries, where small perturbations can lead to a switch, mimicking the nimbleness of a biological brain [15]. |
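The effect of well depth on switching can be illustrated with a one-dimensional toy model: a noisy particle in a double-well potential V(x) = barrier · (x² − 1)². This is a generic textbook model rather than any of the cited network models, and the function name, noise level, and switching thresholds are assumptions chosen for illustration.

```python
import numpy as np

def count_transitions(barrier, noise=0.5, t_max=200.0, dt=0.01, seed=0):
    """Euler-Maruyama simulation of dx = -V'(x) dt + sqrt(2 * noise * dt) * xi,
    with V(x) = barrier * (x**2 - 1)**2; returns the number of switches between wells."""
    rng = np.random.default_rng(seed)
    n_steps = int(t_max / dt)
    kicks = np.sqrt(2.0 * noise * dt) * rng.standard_normal(n_steps)
    x, well, transitions = -1.0, -1, 0
    for kick in kicks:
        x += -4.0 * barrier * x * (x**2 - 1.0) * dt + kick   # V'(x) = 4 * barrier * x * (x^2 - 1)
        # Hysteresis thresholds avoid counting jitter around x = 0 as a switch
        if well == -1 and x > 0.5:
            well, transitions = 1, transitions + 1
        elif well == 1 and x < -0.5:
            well, transitions = -1, transitions + 1
    return transitions

shallow = count_transitions(barrier=0.25)  # flattened landscape
deep = count_transitions(barrier=2.0)      # over-stabilized landscape
```

With the same noise, the deeper wells yield far fewer switches, mirroring a model that gets stuck in one state. Lowering `barrier` plays the role of a landscape-flattening intervention.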

Problem 3: Asymmetric Transition Dynamics (Hysteresis)

Symptoms: The path and energy required to transition from State A to State B are different from the path and energy required to transition back from State B to State A.

| Potential Cause | Diagnostic Steps | Solution & Experimental Protocol |
|---|---|---|
| Intrinsic neural inertia, a property of biological networks that resists a return to a previous state [16]. | In an anesthetic experiment, compare neural dynamics (e.g., temporal autocorrelation) during induction and emergence. | This may not be a problem to "fix" but a feature to model. To study it, design experiments with bidirectional state transitions (e.g., loss and recovery of consciousness). Analyze functional connectivity and temporal autocorrelation separately for each direction of the transition [16]. |
| Non-equilibrium dynamics characterized by probabilistic curl flux [18]. | Calculate the net probability flux between states. A non-zero flux indicates a breakdown of detailed balance. | This asymmetry is a fundamental feature of non-equilibrium biological systems. Quantify the flux to understand its magnitude. The irreversibility of state switches is a signature of the underlying non-equilibrium dynamics and does not necessarily require correction [18]. |
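The detailed-balance check in the second row can be sketched for a discrete-state description. The code assumes the dynamics have already been summarized as a row-stochastic transition matrix P between a few coarse states; this is a simplification of the continuous landscape-flux analysis in the cited work, and the matrices below are made-up examples.

```python
import numpy as np

def stationary_distribution(P):
    """Stationary distribution pi of a row-stochastic transition matrix P (pi @ P = pi)."""
    vals, vecs = np.linalg.eig(P.T)
    pi = np.real(vecs[:, np.argmin(np.abs(vals - 1.0))])
    return pi / pi.sum()

def net_flux(P):
    """Net steady-state probability flux J[i, j] = pi[i] * P[i, j] - pi[j] * P[j, i]."""
    pi = stationary_distribution(P)
    forward = pi[:, None] * P
    return forward - forward.T

# Reversible example: symmetric transitions, detailed balance holds
P_reversible = np.array([[0.8, 0.1, 0.1],
                         [0.1, 0.8, 0.1],
                         [0.1, 0.1, 0.8]])
# Irreversible example: states are preferentially visited in the order 0 -> 1 -> 2 -> 0
P_cyclic = np.array([[0.1, 0.8, 0.1],
                     [0.1, 0.1, 0.8],
                     [0.8, 0.1, 0.1]])

J_reversible = net_flux(P_reversible)
J_cyclic = net_flux(P_cyclic)
```

A flux matrix that is zero everywhere indicates detailed balance, while non-zero entries such as those in `J_cyclic` reveal a circulating probability current, the discrete analogue of curl flux.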

Table 1: Key Parameters from Attractor Landscape Experiments

| Experimental Context | Key Parameter Manipulated | Quantitative Effect on Landscape | Citation |
|---|---|---|---|
| nbM Inactivation (Macaque fMRI) | Focal muscimol injection in nbM (Ch4AM or Ch4AL sub-regions). | Decreased energy barriers for state transitions; maximal slope reduction at MSD=6, TR=8s. | [14] |
| Decision Making (Spiking Network Model) | Recurrent excitation weight (w+); Stimulus strength (μ). | w+ = 1.61, μ = 58 Hz produced a bistable attractor landscape for binary decision. | [18] |
| Higher-Order Interactions (Theoretical) | Inclusion of 3+ node interactions in network models. | Basins become "deeper but smaller," increasing stability but reducing the number of paths to attractor. | [17] |
| Anesthetic Hysteresis (Human fMRI) | Propofol concentration (incremental induction vs. emergence). | Asymmetric neural dynamics: gradual loss vs. abrupt recovery of cortical temporal autocorrelation. | [16] |

Table 2: Research Reagent Solutions for Key Experiments

| Reagent / Resource | Function in Experiment | Example Application Context |

|---|---|---|

| Muscimol | GABAA receptor agonist. Used for reversible, focal inactivation of specific brain nuclei. | Causal testing of nbM's role in stabilizing cortical attractor landscapes in macaques [14]. |

| Propofol | GABAergic anesthetic agent. Titrated to manipulate global brain state. | Studying asymmetric neural dynamics (induction vs. emergence) of unconsciousness in humans [16]. |

| Hindmarsh-Rose Neuron Model | A model of spiking neuron dynamics that can exhibit chaotic/periodic bursting. | Simulating chimera states and fractal basin boundaries on a structural brain network [15]. |

| Brian2 Simulator | An open-source simulator for spiking neural networks. | Implementing biophysically realistic cortical circuit models for decision making [18]. |

| Transfer Entropy (TE) | An information-theoretic measure for detecting directed, time-delayed information flow. | Quantifying how cholinergic inhibition interrupts information transfer between cortical regions [14]. |

Experimental Protocol: Causal Manipulation of Attractor Landscape via nbM Inactivation

Objective: To quantify the causal role of long-range cholinergic input in stabilizing brain state dynamics by locally inactivating the nucleus basalis of Meynert (nbM).

- Animal Model & Preparation: Use non-human primates (e.g., macaques) implanted with a chamber allowing access to the nbM.

- Inactivation:

- Agent: Muscimol, a GABA-A receptor agonist.

- Procedure: Perform a unilateral microinjection of muscimol into specific nbM sub-regions (e.g., Ch4AM or Ch4AL). Use sham trials (no injection) as a control.

- Functional Imaging:

- Method: Simultaneously acquire whole-brain resting-state fMRI (cerebral blood volume) data post-inactivation.

- Analysis: Focus on 266 cortical regions of interest (ROIs).

- Landscape Quantification:

- Calculate the Mean-Squared Displacement (MSD) of fMRI signals across the 266 ROIs for a series of temporal delays (TR).

- Estimate the probability of each brain state occurring at a specific displacement and delay.

- Compute the energy barrier as the natural logarithm of the inverse probability: Energy = ln(1 / Probability).

- Expected Outcome: Successful nbM inactivation will result in a "flattening" of the attractor landscape, demonstrated by a statistically significant decrease in the energy barriers required for state transitions compared to the control condition [14].
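The landscape quantification steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the pipeline used in [14]: the function names `msd_series` and `energy_landscape`, the histogram binning of MSD values, the bin count, and the synthetic Gaussian signals are all hypothetical choices made for the example.

```python
import math
import random
from collections import Counter

def msd_series(signals, lag):
    """Mean-squared displacement across ROIs for each time point at a given lag.

    signals: list of time points, each a list of ROI values.
    """
    out = []
    for t in range(len(signals) - lag):
        now, later = signals[t], signals[t + lag]
        out.append(sum((b - a) ** 2 for a, b in zip(now, later)) / len(now))
    return out

def energy_landscape(msd_values, n_bins=10):
    """Histogram MSD values and convert bin probabilities to energies, E = ln(1 / P)."""
    lo, hi = min(msd_values), max(msd_values)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 if all values are equal
    counts = Counter(min(int((v - lo) / width), n_bins - 1) for v in msd_values)
    total = len(msd_values)
    # Empty bins have P = 0 (infinite energy) and are simply omitted.
    return {b: math.log(total / c) for b, c in sorted(counts.items())}

# Synthetic stand-in for fMRI data: 200 time points x 5 ROIs (assumption, not real data).
rng = random.Random(0)
signals = [[rng.gauss(0.0, 1.0) for _ in range(5)] for _ in range(200)]
energies = {lag: energy_landscape(msd_series(signals, lag)) for lag in (1, 2, 4)}
```

Comparing `energies` between an inactivation session and a sham session, bin by bin and lag by lag, would be one way to look for the expected flattening.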

Core Concepts Visualization

[Diagram: The Native Dynamics Framework]

[Diagram: Experimental Causality Test]

Frequently Asked Questions (FAQs) on Manifold Analysis

FAQ 1: Our neural population data appears high-dimensional. Why should we assume a low-dimensional manifold structure exists? It is a common misconception that neural manifolds must be "low dimensional" in an absolute sense. The key is the distinction between embedding dimensionality (the full neural population) and intrinsic dimensionality (the underlying degrees of freedom). Even if data occupies a high-dimensional embedding space, the intrinsic dimensionality governing its dynamics is often much smaller due to network recurrence, redundancy, and the constrained nature of behavior [19]. Your analysis should focus on identifying this intrinsic structure.

FAQ 2: We applied PCA but are unsure if the results truly capture the neural manifold. What are the limitations? Using Principal Component Analysis (PCA) presents a classic pitfall. PCA is a linear dimensionality reduction technique and will only identify hyperplanes. Given the high recurrence and nonlinearities in neural circuits, the true neural manifold is likely nonlinear [19]. A linear method like PCA can distort the true structure of the data, giving an incomplete description. For a more accurate manifold estimation, consider employing non-linear embedding techniques such as Laplacian Eigenmaps (LEM), Uniform Manifold Approximation and Projection (UMAP), or t-distributed Stochastic Neighbor Embedding (t-SNE) [20].

FAQ 3: Can we interpret the activity of single neurons within the manifold framework, or is it purely a population-level concept? The manifold framework does not dismiss the importance of single neurons. It provides a population-level structure to contextualize their activity. The activity of any given neuron is best understood in relation to the other neurons that provide its inputs [19]. The manifold view and the single-neuron view are complementary, not a false dichotomy. The manifold offers a level of analysis that bridges granular single-neuron activity to macroscopic processes underlying behavior.

FAQ 4: Our model's dynamics fail to converge to a stable attractor. Could manifold constraints be the cause? Failed convergence can indeed stem from manifold-related constraints. A key mechanism for the emergence of stable, low-dimensional dynamics is time-scale separation, where fast oscillatory dynamics average out over time, allowing slower, task-related processes to dominate [20]. If your system lacks this separation or if the connectivity structure (symmetry) does not support the formation of a stable invariant manifold, dynamics may fail to collapse onto a reliable attractor. Furthermore, in a learning context, neural activity can be constrained to an "intuitive manifold," making it difficult to generate patterns outside of this subspace, even when required for a new task [21].

FAQ 5: How can we experimentally determine if a low-dimensional pattern is due to functional demands or underlying neural constraints? Disambiguating function from constraint requires causal experiments. A seminal approach involves using a brain-computer interface (BCI). First, identify an "intuitive manifold" from baseline neural activity. Then, perturb the BCI mapping in two ways: one that requires new activity patterns within the intuitive manifold, and another that requires patterns outside of it. If subjects can adapt to the inside-manifold perturbation but not the outside-manifold one, it provides causal evidence that the low-dimensionality is a constraint, not just a functional reflection of the task [21].

Quantitative Data on Dimensionality Reduction Techniques

Table 1: Comparison of Manifold Learning and Dimensionality Reduction Techniques

| Technique | Type | Key Strengths | Key Limitations | Example Application in Neuroscience |

|---|---|---|---|---|

| Principal Component Analysis (PCA) | Linear | Computationally efficient; provides global data structure [20]. | Can distort nonlinear manifolds; limited to linear subspaces [19]. | Initial exploration of neural state space; identifying dominant activity patterns [20]. |

| t-SNE | Nonlinear | Excellent at revealing local structure and clusters in high-D data [20]. | Preserves local over global structure; computational cost for large datasets. | Visualizing clustering of neural population activity by stimulus or behavior [20]. |

| Laplacian Eigenmaps (LEM) | Nonlinear | Captures global flow of dynamics; smooths local density variations [20]. | Sensitive to neighborhood size parameter. | Revealing the global organization of transitions between attractor states on a manifold [20]. |

| UMAP | Nonlinear | Balances local and global structure; often faster than t-SNE [20]. | Similar to t-SNE, parameter selection can influence results. | A modern alternative to t-SNE for visualizing neural population dynamics [20]. |

| PHATE | Nonlinear | Designed specifically for visualizing temporal dynamics and trajectories. | May be less effective for non-temporal data. | Analyzing developmental trajectories from neural population data. |

Table 2: Parameter Tuning for Convergence in Neural Dynamics Models (ZNN Examples)

| Model | Key Parameters | Effect of Parameter Tuning | Convergence Outcome |

|---|---|---|---|

| Traditional ZNN | Fixed gain (γ) | Increasing γ from 10 to 1000 proportionally reduces convergence time [10]. | Global asymptotic convergence; precision better than 3e-5 m achieved [10]. |

| Finite-Time ZNN (FTZNN) | γ, κ₁, κ₂ | Enables finite-time convergence; parameters allow control of convergence speed [10]. | Superior convergence speed for real-time tasks compared to traditional ZNN [10]. |

| Segmented Variable-Parameter ZNN | μ₁(t), μ₂(t) | Parameters change in segments (e.g., before/after δ₀), enhancing adaptability [10]. | Improved immunity to external disturbances and maintained stability [10]. |
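The effect of the fixed gain γ in a traditional ZNN can be illustrated on a scalar toy problem. The sketch below is an assumption-laden example rather than the setup of [10]: it solves a(t)·x(t) = b(t) with the hypothetical choices a(t) = 2 + sin t and b(t) = cos t, applies the standard ZNN design formula de/dt = -γe to the error e = a·x - b, and integrates with forward Euler. The function name `znn_convergence_time` and the tolerance are illustrative.

```python
import math

def znn_convergence_time(gamma, dt=1e-4, t_end=2.0, tol=1e-3):
    """Euler-integrate a scalar ZNN for a(t) x(t) = b(t).

    Returns the first time at which |e| = |a x - b| drops below tol,
    or None if that does not happen before t_end.
    """
    x, t = 0.0, 0.0
    while t < t_end:
        a, b = 2.0 + math.sin(t), math.cos(t)
        da, db = math.cos(t), -math.sin(t)  # time derivatives of a and b
        e = a * x - b
        if abs(e) < tol:
            return t
        # ZNN design formula de/dt = -gamma * e, solved for dx/dt.
        dx = (db - da * x - gamma * e) / a
        x += dt * dx
        t += dt
    return None

times = {gamma: znn_convergence_time(gamma) for gamma in (10.0, 100.0)}
```

Because the error decays roughly as exp(-γt), a tenfold increase in γ should shorten the convergence time by about a factor of ten here. Forward Euler needs γ·dt well below 1, so very large gains call for a smaller step.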

Experimental Protocols for Manifold Probing

Protocol: Testing Manifold Emergence via Time-Scale Separation

Objective: To validate the hypothesis that low-dimensional manifolds emerge in neural dynamics through the averaging of fast oscillatory activity [20].

Methodology:

- Network Simulation: Construct a network of N nodes (e.g., N=10). A small subset of M nodes (e.g., M=2) is configured to have bistable dynamics (e.g., up-state and down-state). The remaining N-M nodes exhibit monostable dynamics, oscillating around their up-state driven by Gaussian noise [20].

- Dynamics Integration: Numerically integrate the system of differential equations governing node activity. A representative node equation is:

$$\dot{x}_i = (1 - x_i^2)\,x_i - G \sum_{j \neq i} c_{ij}\, x_j^2\, x_i + \eta_i$$

where $G$ is the coupling strength, $c_{ij}$ is the connectivity matrix, and $\eta_i$ is a noise term [20].

- Dimensionality Reduction: Apply a non-linear dimensionality reduction technique (e.g., Laplacian Eigenmaps - LEM) to the high-dimensional time-series data of all N nodes [20].

- Analysis: Observe the projected state vectors. Successful emergence of a low-dimensional manifold is indicated by the collapse of the trajectory onto a structured, lower-dimensional space that reflects the dynamics of the bistable nodes, with the fast oscillations of the monostable nodes averaged out.
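The simulation and integration steps of this protocol can be sketched with an Euler-Maruyama scheme applied to the representative node equation. This is a simplified illustration, not the model of [20]: it assumes all-to-all connectivity with unit weights, uses the same equation for every node (so it does not reproduce the bistable/monostable split), and the parameter values and the name `simulate_network` are hypothetical. The dimensionality reduction step is left out.

```python
import math
import random

def simulate_network(n_nodes=10, coupling=0.1, noise_sd=0.05,
                     dt=0.01, n_steps=2000, seed=0):
    """Euler-Maruyama integration of
    dx_i/dt = (1 - x_i^2) x_i - G * sum_{j != i} c_ij x_j^2 x_i + eta_i."""
    rng = random.Random(seed)
    # Assumed connectivity: c_ij = 1 for i != j, 0 on the diagonal.
    c = [[0.0 if i == j else 1.0 for j in range(n_nodes)] for i in range(n_nodes)]
    x = [rng.uniform(-1.0, 1.0) for _ in range(n_nodes)]
    trajectory = [x[:]]
    for _ in range(n_steps):
        new_x = []
        for i in range(n_nodes):
            coupled = coupling * sum(c[i][j] * x[j] ** 2
                                     for j in range(n_nodes) if j != i)
            drift = (1.0 - x[i] ** 2) * x[i] - coupled * x[i]
            # Gaussian noise scaled by sqrt(dt), as in Euler-Maruyama.
            noise = noise_sd * math.sqrt(dt) * rng.gauss(0.0, 1.0)
            new_x.append(x[i] + dt * drift + noise)
        x = new_x
        trajectory.append(x[:])
    return trajectory

trajectory = simulate_network()
```

The resulting `trajectory` (a list of N-dimensional state vectors) is the kind of time series that would then be passed to a non-linear embedding such as LEM.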

Figure 1: Workflow for testing manifold emergence via time-scale separation.

Protocol: Disambiguating Function vs. Constraint with BCI

Objective: To causally determine whether a low-dimensional neural manifold results from optimal task performance (function) or an inherent limitation in the neural circuit (constraint) [21].

Methodology:

- Baseline Mapping: Identify the "intuitive manifold" by recording neural population activity while a subject passively observes or performs a task. Use PCA to define the principal subspace of this activity [21].

- Initial Learning: Subjects learn to control a BCI cursor using a mapping that projects their neural activity onto this intuitive manifold.

- Perturbation: Introduce two types of perturbed BCI mappings:

- Inside-Manifold Perturbation: The mapping projects neural activity onto the intuitive manifold but then permutes the manifold's dimensions before moving the cursor. Generating desired cursor movements requires new activity patterns, but these patterns still lie within the intuitive manifold.

- Outside-Manifold Perturbation: The mapping projects neural activity onto a subspace orthogonal to the intuitive manifold. Controlling the cursor now requires generating activity patterns outside of the subject's baseline repertoire [21].

- Assessment: Compare the subject's learning speed and ability to achieve proficient cursor control under the two perturbation conditions.

- Interpretation: Rapid adaptation to the inside-manifold perturbation but failure to adapt to the outside-manifold perturbation provides strong evidence that the low-dimensionality is a neural constraint. Successful adaptation to both would suggest the manifold was primarily functional [21].

Figure 2: BCI experimental logic for distinguishing function from constraint.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Tools for Neural Manifold Research

| Tool / "Reagent" | Function / Purpose | Key Consideration |

|---|---|---|

| Nonlinear Dimensionality Reduction (UMAP, t-SNE, LEM, PHATE) | Projects high-dimensional neural data into a lower-dimensional space for visualization and analysis of manifold structure [20]. | No single technique is universally "best"; choice depends on data and goal (e.g., local vs. global structure preservation) [20] [19]. |

| Dynamical Systems Models (e.g., bistable/monostable node networks) | Provides a theoretical and simulation framework to test hypotheses about the mechanisms of manifold emergence, such as time-scale separation [20]. | Models should incorporate realistic features like noise and specific connectivity patterns to bridge theory and experimental data [20]. |

| Brain-Computer Interface (BCI) | A causal tool for probing the constraints and plasticity of neural manifolds by altering the relationship between neural activity and output [21]. | Critical for disambiguating whether observed low-dimensionality is a functional requirement or a hard constraint [21]. |

| Manifold Capacity Theory | A mathematical framework to quantify the number of object manifolds that can be linearly separated by a perceptron, linking geometry to function [22]. | Provides geometric measures like "anchor radius" (RM) and "anchor dimension" (DM) to predict classification performance [22]. |

| Zeroing Neural Networks (ZNNs) | An ODE-based neural dynamics framework designed for finite-time convergence and robustness in solving time-varying problems, useful for dynamic system control [10]. | Parameters like gain (γ) can be tuned as fixed or dynamic variables to optimize convergence speed and anti-noise performance [10]. |

Troubleshooting Guide: Common Convergence Issues

This guide addresses frequent convergence problems encountered when applying neural population dynamics to optimization algorithms.

Problem 1: Premature Convergence or Trapping in Local Optima

- Description: The algorithm converges quickly to a solution that is not the global optimum.

- Possible Causes & Solutions:

- Cause: Over-reliance on exploitation (attractor trending) without sufficient exploration [4].

- Solution: Increase the strength of the coupling disturbance strategy. This disrupts the trend towards attractors, pushing neural populations to explore new areas of the solution space [4].

- Cause: Poor balance between exploration and exploitation phases [4].

- Solution: Adjust the information projection strategy parameters to better control the transition and communication between neural populations, ensuring a smoother shift from exploration to exploitation [4].

Problem 2: Slow or Failed Convergence

- Description: The optimization process takes an excessively long time to find a good solution or fails to converge.

- Possible Causes & Solutions:

- Cause: Inadequate initial parameters or search region bounds that do not contain the optimal solution [23].

- Solution: Re-specify appropriate maximum and minimum values for all tuned parameters. Run a search-based method first to get closer to an acceptable solution area [23].

- Cause: The optimization problem may have complex, non-linear constraints that are difficult to satisfy [23].

- Solution: Relax the constraints or design requirements that are violated the most. Once a solution is found for the relaxed problem, you can gradually tighten the constraints again [23].

Problem 3: Unstable or Erratic Optimization Behavior

- Description: The algorithm's performance becomes unstable, with wild fluctuations in the solution quality or failure to recover from unstable regions.

- Possible Causes & Solutions:

- Cause: Parameter values becoming too large, leading to instability [23].

- Solution: Add or tighten the lower and upper bounds on parameter values [23].

- Cause: In neural network training, this can manifest as `NaN` values due to vanishing or exploding gradients [24].

- Solution: Retune the network. Use gradient normalization to avoid gradients that are too large or too small. Ensure proper weight initialization (e.g., Xavier or He initialization) and consider using different activation functions (e.g., ReLU/Leaky ReLU instead of sigmoid) to mitigate vanishing gradient problems [24].
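One common form of gradient normalization is clipping by global L2 norm. The sketch below shows the idea on a flat list of gradient values; the function name `clip_by_global_norm` and the choice to raise an error on non-finite gradients are assumptions made for illustration, and deep learning frameworks provide their own equivalents.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale a flat list of gradient values so its L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the original norm.
    """
    norm = math.sqrt(sum(g * g for g in grads))
    if not math.isfinite(norm):
        # NaN or inf already present: clipping cannot repair this step.
        raise ValueError("gradient contains NaN or inf")
    if norm <= max_norm:
        return list(grads), norm
    scale = max_norm / norm
    return [g * scale for g in grads], norm

clipped, original_norm = clip_by_global_norm([300.0, -400.0], max_norm=5.0)
```

Logging `original_norm` at every step is a cheap way to see whether gradients are exploding (norm grows without bound) or vanishing (norm collapses toward zero) before `NaN` values appear.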

Frequently Asked Questions (FAQs)

Q1: What does "convergence" mean in the context of neural population dynamics optimization? A: In this context, convergence refers to the algorithm's ability to drive the state of neural populations towards a stable and optimal decision. This is biomimetically inspired by the brain's efficiency in processing information and making optimal decisions. The algorithm is considered converged when the neural populations' states stabilize near an attractor representing a high-quality solution [4] [25].

Q2: How can I test if my algorithm is stable, and why is it important? A: Algorithmic stability measures how sensitive an algorithm is to small changes in its training data. A stable algorithm will produce similar results even if the input data is slightly perturbed [26]. Stability is crucial because it is directly connected to an algorithm's ability to generalize—that is, to perform accurately on new, unseen data [26] [27]. However, under computational constraints, testing the stability of a black-box algorithm with limited data is fundamentally challenging, and exhaustive search is often the only universally valid method for certification [27].

Q3: My optimization consistently violates a specific design requirement. What should I do? A: It might be impossible to achieve all your initial specifications simultaneously. First, try relaxing the constraints that are violated the most. Find an acceptable solution to this relaxed problem, then gradually tighten the constraints again in a subsequent optimization run [23]. Alternatively, the optimization may have converged to a local minimum; try restarting the optimization from a different initial guess [23].

Q4: In neural network training, what are the key hyperparameters to adjust for better convergence? A: The following table summarizes the most critical hyperparameters:

| Hyperparameter | Typical Role in Convergence | Tuning Advice |

|---|---|---|

| Learning Rate [24] | Controls the step size during optimization; too high causes divergence, too low causes slow convergence. | Start with values like 1e-1, 1e-3, 1e-6 to gauge the right order of magnitude. Visualize the loss to adjust. |

| Minibatch Size [24] | Balances noise in gradient estimates and computational efficiency. | Common values are 16-128. Too small (e.g., 1) loses parallelism benefits; too large can be slow. |

| Regularization (L1/L2) [24] | Prevents overfitting by penalizing large weights, which can aid generalization. | Common L2 values are 1e-3 to 1e-6. If loss increases too much after adding regularization, the strength is likely too high. |

| Dropout [24] | Prevents overfitting by randomly ignoring neurons during training. | A dropout rate of 0.5 (50% retention) is a common starting point. |
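The learning-rate advice in the table can be seen on a one-dimensional toy problem. This sketch is only an illustration under assumptions: it minimises f(x) = x² with plain gradient descent, and the step count and the extra rate of 1.5 (chosen to force divergence) are hypothetical.

```python
def gradient_descent(lr, steps=50, x0=1.0):
    """Minimise f(x) = x^2 (gradient 2x) and return the final loss."""
    x = x0
    for _ in range(steps):
        x -= lr * 2.0 * x
    return x * x

# Sweep across orders of magnitude, plus one deliberately excessive rate.
final_loss = {lr: gradient_descent(lr) for lr in (1e-6, 1e-3, 1e-1, 1.5)}
```

In this toy setting the smallest rates barely move the loss, 1e-1 converges quickly, and 1.5 diverges, which mirrors the "too low is slow, too high diverges" pattern described above.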

Experimental Protocols for Key Methods

Protocol 1: Benchmarking Neural Population Dynamics Optimization Algorithm (NPDOA)

- Objective: To verify the effectiveness and balanced performance of the NPDOA on standard benchmark problems [4].

- Materials: Standard benchmark suites (e.g., CEC, BBOB), computational environment (e.g., PlatEMO v4.1), hardware (computer with CPU like Intel Core i7 and sufficient RAM) [4].

- Procedure:

- Implement the NPDOA with its three core strategies: attractor trending, coupling disturbance, and information projection [4].

- Select a set of state-of-the-art meta-heuristic algorithms for comparison (e.g., PSO, DE, WOA) [4].

- Run all algorithms on the selected benchmark problems, repeating each over multiple independent trials so that the results can be compared statistically.

- Record key performance metrics: final solution accuracy, convergence speed, and success rate.

- Analysis: Compare the mean and standard deviation of the results. Use statistical tests (e.g., Wilcoxon signed-rank test) to determine if performance differences are significant. The NPDOA should demonstrate a robust balance between exploration and exploitation [4].

Protocol 2: Evaluating Generalization via Algorithmic Stability

- Objective: To assess the generalization capability of a learning algorithm by measuring its stability [26].

- Materials: A dataset, the learning algorithm to be evaluated.

- Procedure:

- Train the algorithm on the full training set `S` to obtain model `f_S` [26].

- For `i = 1` to `m` (the size of the training set), create a modified training set `S^{|i}` by removing the `i`-th example [26].

- Train the algorithm on each `S^{|i}` to obtain models `f_{S^{|i}}`.

- For a new test point `z`, calculate the absolute difference in loss `|V(f_S, z) - V(f_{S^{|i}}, z)|` for each `i` [26].

- Analysis: Compute the average of this difference over all `i` and random draws of `S` and `z`. A small average difference (e.g., on the order of `O(1/m)`) indicates good hypothesis stability, which implies better generalization [26].
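The leave-one-out procedure above can be sketched on a deliberately simple learner. Everything specific here is an assumption for illustration: the learner is a one-dimensional ridge regression without intercept, the loss V is squared error, the data are synthetic, and the estimate uses a single draw of S and z rather than an average over many draws.

```python
import random

def fit_ridge(data, lam=1.0):
    """1-D ridge regression without intercept: w = sum(x*y) / (sum(x^2) + lam)."""
    sxy = sum(x * y for x, y in data)
    sxx = sum(x * x for x, _ in data)
    return sxy / (sxx + lam)

def loss(w, z):
    """Squared-error loss V(f, z) of the model y = w * x at the point z = (x, y)."""
    x, y = z
    return (w * x - y) ** 2

def loo_stability(data, z, lam=1.0):
    """Average |V(f_S, z) - V(f_{S^{|i}}, z)| over all leave-one-out training sets."""
    full_loss = loss(fit_ridge(data, lam), z)
    diffs = []
    for i in range(len(data)):
        reduced = data[:i] + data[i + 1:]  # S with the i-th example removed
        diffs.append(abs(full_loss - loss(fit_ridge(reduced, lam), z)))
    return sum(diffs) / len(diffs)

def sample(rng, m):
    """Draw m points from y = 2x + noise (synthetic data, for illustration only)."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(m)]
    return [(x, 2.0 * x + rng.gauss(0.0, 0.1)) for x in xs]

rng = random.Random(0)
z = (0.5, 1.0)
stability = {m: loo_stability(sample(rng, m), z) for m in (20, 200)}
```

For a stable learner the value in `stability` should shrink as `m` grows. Repeating the computation over many random draws of `S` and `z` would bring it closer to the averaged quantity described in the analysis step.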

Diagram: From Neural Dynamics to Algorithmic Stability

The following diagram illustrates the logical pathway for translating principles from biological neural populations into a stable computational optimization algorithm.

The Scientist's Toolkit: Research Reagent Solutions

The following table details key computational "reagents" and their functions in the study of neural population dynamics and algorithmic convergence.

| Research Reagent / Solution | Function in Experimentation |

|---|---|

| Neural Population Dynamics Optimization Algorithm (NPDOA) | A novel brain-inspired meta-heuristic algorithm used as the primary engine for solving complex optimization problems, balancing exploration and exploitation through its three core strategies [4]. |

| Benchmark Problems (Theoretically) | Standardized optimization problems (e.g., cantilever beam design, pressure vessel design) used to quantitatively evaluate and compare the performance of different algorithms in a controlled manner [4]. |

| PlatEMO v4.1 | A MATLAB-based software platform used as the experimental environment for running optimization algorithms, conducting comparative experiments, and collecting performance data [4]. |

| Stability Metrics (e.g., Uniform Stability) | Quantitative measures used to assess the sensitivity of a learning algorithm to perturbations in its input data, providing a theoretical link to generalization performance [26]. |

| JKO Scheme | A variational framework for modeling the evolution of particle systems as a sequence of distributions, used for learning population dynamics from observational data [5]. |

Advanced Algorithms and Frameworks for Stable Dynamics Optimization

The Neural Population Dynamics Optimization Algorithm (NPDOA) is a novel brain-inspired meta-heuristic that simulates the activities of interconnected neural populations during cognition and decision-making [28]. Within this framework, each solution is treated as a neural population state, with decision variables representing neuronal firing rates [28]. Despite its demonstrated efficiency on benchmark and practical problems, researchers may encounter specific convergence issues during implementation and experimentation [28] [29]. This technical support center provides targeted troubleshooting guides and FAQs to address these challenges, framed within ongoing thesis research on NPDOA convergence properties.

Troubleshooting Guides: Identifying and Resolving Convergence Issues

Premature Convergence to Local Optima

Problem Description: The algorithm converges too quickly to suboptimal solutions, failing to explore the search space adequately. This manifests as population diversity dropping rapidly within the first few generations.

| Observed Symptom | Potential Root Cause | Recommended Solution | Expected Outcome After Intervention |

|---|---|---|---|

| Rapid loss of population diversity [29]. | Overly dominant attractor trending strategy; weak coupling disturbance [28]. | Increase the coupling coefficient to enhance exploration [28]. | Better exploration of search space, delayed convergence. |

| Consistent convergence to a known local optimum. | Insufficient initial population diversity or small population size. | Implement opposition-based learning during initialization [29]. | Wider initial spread of solutions in the search space. |

| Stagnation in mid-optimization phases. | Imbalance between exploitation and exploration parameters. | Introduce an adaptive parameter that changes with evolution [29]. | Dynamic balance, preventing early stagnation. |

Experimental Protocol for Verification: To confirm premature convergence is due to parameter imbalance, run a controlled experiment on a benchmark function like CEC 2017's F1 (Shifted and Rotated Bent Cigar Function). Use a small population size (e.g., 30) and standard parameters. Monitor the percentage of individuals trapped in a single basin of attraction over 50 iterations. Re-run with the recommended solutions and compare the diversity metrics.

Slow Convergence Speed and Low Accuracy

Problem Description: The algorithm takes excessively long to converge or fails to reach a satisfactory solution precision within a practical number of iterations.

| Observed Symptom | Potential Root Cause | Recommended Solution | Expected Outcome After Intervention |

|---|---|---|---|

| Slow progress toward known optimum. | Inefficient attractor trending; poor information projection [28]. | Incorporate a simplex method strategy into the update formulas [29]. | Faster convergence speed and improved accuracy. |

| High computational cost per iteration. | High-dimensional problems with complex fitness evaluations. | Utilize a dimensionality reduction technique on the neural state [1]. | Reduced computation time per iteration. |

| Ineffective local search. | Weak gradient information usage. | Integrate a local search strategy inspired by the power method [30]. | Finer precision in the exploitation phase. |

Experimental Protocol for Verification: Test convergence speed on CEC 2017's F10 (Shifted and Rotated Schwefel's Function). Track the best fitness value over 1000 iterations. Compare the number of function evaluations required to reach a specific error threshold (e.g., 1e-6) before and after applying the simplex method or local search enhancement.

Population Stagnation and Diversity Collapse

Problem Description: The evolutionary process halts, with the population failing to produce improved offspring for many consecutive generations, often due to a lack of diversity.

| Observed Symptom | Potential Root Cause | Recommended Solution | Expected Outcome After Intervention |

|---|---|---|---|

| No fitness improvement over >N gens. | Information projection strategy overly suppresses exploration [28]. | Introduce an external archive with a diversity supplementation mechanism [29]. | Renewed search impetus and escape from local optima. |

| Identical or near-identical individuals. | Coupling disturbance strategy fails to create sufficient deviation [28]. | Use a learning strategy combined with opposition-based learning [29]. | Increased population variance and new search directions. |

Experimental Protocol for Verification: On a multi-modal test function like CEC 2017's F15 (a hybrid function), monitor the average Hamming distance (for binary encodings) or Euclidean distance (for continuous encodings) between individuals in the population. When diversity drops below a set threshold, trigger the external archive mechanism and observe the recovery of population variance and fitness improvement.
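The diversity monitoring and archive trigger described in this protocol might look like the following for continuous encodings. This is a sketch under assumptions: the function names are hypothetical, diversity is taken as the mean pairwise Euclidean distance, and replacing randomly chosen individuals with archive entries is one simple supplementation rule, not necessarily the mechanism of [29].

```python
import math
import random
from itertools import combinations

def mean_pairwise_distance(population):
    """Average Euclidean distance over all pairs of individuals."""
    pairs = list(combinations(population, 2))
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)

def maybe_inject_from_archive(population, archive, threshold, n_replace, rng):
    """If diversity is below threshold, overwrite n_replace random members
    with copies of randomly chosen archive entries."""
    if mean_pairwise_distance(population) >= threshold:
        return population, False
    refreshed = [ind[:] for ind in population]
    for idx in rng.sample(range(len(refreshed)), n_replace):
        refreshed[idx] = rng.choice(archive)[:]
    return refreshed, True

# Illustrative collapsed population and archive (made-up values).
rng = random.Random(1)
collapsed = [[1e-3 * rng.random(), 0.0] for _ in range(10)]
archive = [[5.0, 5.0], [-5.0, 5.0], [5.0, -5.0]]
refreshed, triggered = maybe_inject_from_archive(
    collapsed, archive, threshold=0.1, n_replace=3, rng=rng)
```

Logging `mean_pairwise_distance` every iteration, before and after the trigger fires, gives the recovery curve the protocol asks for.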

Frequently Asked Questions (FAQs)

Q1: What are the core dynamical strategies in NPDOA, and how do they relate to convergence?

NPDOA operates via three core strategies inspired by neural population dynamics [28]:

- Attractor Trending Strategy: Drives neural populations towards optimal decisions, ensuring exploitation capability. Over-reliance can cause premature convergence.

- Coupling Disturbance Strategy: Deviates neural populations from attractors via coupling, improving exploration ability. Its strength is crucial for avoiding local optima.

- Information Projection Strategy: Controls communication between neural populations, enabling a transition from exploration to exploitation. Improper tuning can disrupt the balance, leading to either stagnation or failure to converge.

Q2: How can I validate that my NPDOA implementation is correct before running complex experiments?

It is recommended to follow a standardized validation protocol:

- Benchmark Testing: Use standard benchmark suites like CEC 2017 or CEC 2022 [30] [29]. Start with lower-dimensional functions (e.g., 30 dimensions) to verify basic functionality.

- Comparison with Baselines: Compare your results against published performance of standard NPDOA and other algorithms like PSO, DE, or WHO on the same benchmarks [28] [29].

- Parameter Sensitivity Analysis: Systematically vary key parameters (e.g., coupling coefficient, learning rates) to observe expected performance trends [28].

Q3: My algorithm is not converging on a specific real-world engineering problem, despite working on benchmarks. What should I do?

This is a common issue addressed in thesis research. Consider the following:

- Problem Analysis: Real-world problems are often non-linear, non-convex, and noisy. Re-examine the problem formulation, constraints, and objective function.

- Constraint Handling: Ensure constraint handling techniques (e.g., penalty functions, feasibility rules) are appropriately integrated with NPDOA's dynamics.
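As one example of constraint handling, a static quadratic penalty can be wrapped around the objective before it is handed to the optimizer. The helper name `penalized`, the penalty weight, and the toy problem below are assumptions for illustration; feasibility rules or adaptive penalties are alternatives.

```python
def penalized(objective, constraints, penalty=1e3):
    """Wrap an objective so that each violated constraint g(x) <= 0
    adds penalty * max(0, g(x))^2 to the fitness."""
    def wrapped(x):
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return objective(x) + penalty * violation
    return wrapped

# Toy problem (illustrative): minimise x0^2 + x1^2 subject to x0 + x1 >= 1.
fitness = penalized(
    objective=lambda x: x[0] ** 2 + x[1] ** 2,
    constraints=[lambda x: 1.0 - (x[0] + x[1])],  # g(x) <= 0 means feasible
)
```

Here `fitness([0.5, 0.5])` returns the plain objective because the point is feasible, while `fitness([0.0, 0.0])` is dominated by the penalty term. If the penalty weight is too small the search may settle on infeasible points, and if it is too large the landscape near the constraint boundary becomes very steep.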

- Hybridization: As demonstrated in improved algorithms like ICSBO and INPDOA, hybridizing NPDOA with other methods (e.g., simplex method, opposition-based learning) can significantly enhance robustness for complex problems [29] [31].

Q4: Are there known modifications to NPDOA that improve its convergence properties?

Yes, recent research has proposed several improved variants:

- ICSBO: Though inspired by a different system, it incorporates adaptive parameters, simplex method, and external archives, which are general strategies applicable to improving NPDOA's balance and diversity [29].

- INPDOA: An improved version used in an AutoML framework for medical prognosis, demonstrating enhanced optimization performance for feature selection and hyperparameter tuning [31].

- Integration of Mathematical Strategies: Incorporating strategies from other mathematics-based algorithms, such as stochastic angles and adjustment factors from the Power Method Algorithm (PMA), can improve local search and balance [30].

The Scientist's Toolkit: Essential Research Reagents and Materials

| Item Name | Function / Role in Experimentation | Application Context in NPDOA Research |

|---|---|---|

| CEC Benchmark Suites (e.g., CEC 2017, CEC 2022) | Standardized set of test functions for rigorous, comparable performance evaluation [30] [29]. | Quantifying convergence speed, accuracy, and robustness of NPDOA against other algorithms. |

| PlatEMO Platform (v4.1 or higher) | A MATLAB-based open-source platform for evolutionary multi-objective optimization [28]. | Provides a framework for implementing, testing, and comparing NPDOA with a wide array of existing algorithms. |

| External Archive Module | Stores historically well-performing individuals to preserve genetic diversity [29]. | Used to supplement population diversity when stagnation is detected, helping to escape local optima. |

| Simplex Method Subroutine | A deterministic local search method for fast convergence in local regions [29]. | Integrated into update formulas (e.g., in systemic circulation) to refine solutions and improve convergence accuracy. |

| Opposition-Based Learning (OBL) | A strategy to generate opposing solutions to improve initial population quality or jump out of local optima [29]. | Applied during population initialization or when regeneration is needed to enhance exploration. |
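Opposition-based initialization, as listed in the table, is commonly implemented by pairing each random point x with its opposite lb + ub - x and keeping the fittest half. The sketch below follows that common form; the function name, the sphere test function, and the bounds are illustrative assumptions, not taken from [29].

```python
import random

def opposition_based_init(fitness, bounds, pop_size, rng):
    """Sample pop_size random points, add their opposites lb + ub - x,
    and keep the pop_size individuals with the lowest fitness."""
    population = [[rng.uniform(lb, ub) for lb, ub in bounds]
                  for _ in range(pop_size)]
    opposites = [[lb + ub - xi for xi, (lb, ub) in zip(x, bounds)]
                 for x in population]
    return sorted(population + opposites, key=fitness)[:pop_size]

def sphere(x):
    """Simple test function with its minimum at the origin."""
    return sum(v * v for v in x)

bounds = [(-10.0, 30.0)] * 3
population = opposition_based_init(sphere, bounds, pop_size=20,
                                   rng=random.Random(0))
```

This costs twice as many fitness evaluations at initialization. Keeping only the fittest half also reduces the initial spread somewhat, so it is worth checking a diversity metric after initialization.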

Frequently Asked Questions (FAQs) and Troubleshooting Guides

Convergence Issues

Q: Why does my optimization converge to local optima instead of the global optimum?

A: Premature convergence is often caused by an imbalance between exploration and exploitation. The coupling disturbance strategy is designed to prevent this by deviating neural populations from their current attractors, thus exploring new areas of the solution space [4]. If this strategy's parameters are set too low, the algorithm lacks sufficient exploration. To resolve this:

- Increase the coupling strength parameter to enhance exploration.

- Verify that your information projection strategy is correctly regulating the transition from exploration to exploitation over iterations [4].

- Ensure the population size is adequate for the problem's dimensionality; a small population can lead to premature convergence.

Q: The algorithm's performance is highly variable across different runs on the same problem. What could be the cause?

A: High variance between runs can stem from the stochastic nature of the coupling disturbance. To improve consistency:

- Check the initialization of neural population states. Ensure they are randomly distributed across the search space to avoid initial bias.

- The attractor trending strategy relies on moving towards optimal decisions; if the initial populations are clustered in a poor region, convergence can be inconsistent [4].

- Consider implementing a random seed tracking system for your experiments to better diagnose the root cause of variability.
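One possible shape for the seed tracking suggested above is sketched here. The optimizer is replaced by a hypothetical stand-in (`run_once`, a seeded random search on a sphere function); in practice you would call your own NPDOA run there. The record layout and summary fields are assumptions chosen for illustration.

```python
import random
import statistics


def run_once(seed, dim=5, iterations=200):
    """Hypothetical stand-in for one optimizer run: seeded random search."""
    rng = random.Random(seed)  # private generator, so runs never share state
    best = float("inf")
    for _ in range(iterations):
        x = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
        best = min(best, sum(v * v for v in x))
    return best


def run_experiment(seeds):
    """Run once per seed and keep a per-seed record for later diagnosis."""
    return [{"seed": s, "best_fitness": run_once(s)} for s in seeds]


records = run_experiment(seeds=range(10))
values = [r["best_fitness"] for r in records]
summary = {
    "mean": statistics.mean(values),
    "stdev": statistics.stdev(values),
    # The seed of the worst run can be replayed exactly to inspect what went wrong.
    "worst_seed": max(records, key=lambda r: r["best_fitness"])["seed"],
}
```

Because each run owns its generator, replaying `run_once(summary["worst_seed"])` reproduces the outlier run exactly.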

Parameter Configuration

Q: How should I set the parameters for the three core strategies to achieve balance?

A: Parameter tuning is critical for the Neural Population Dynamics Optimization Algorithm (NPDOA). The table below summarizes the key parameters and their roles [4]:

| Strategy | Key Parameter | Function | Tuning Guidance |
|---|---|---|---|
| Attractor Trending | Attractor Strength | Drives populations towards optimal decisions, ensuring exploitation [4]. | Increase for faster convergence on simple problems; decrease for complex, multi-modal problems to avoid local optima. |
| Coupling Disturbance | Coupling Strength | Deviates populations from attractors, improving exploration [4]. | Increase to escape local optima; decrease if the algorithm is not converging stably. |
| Information Projection | Projection Rate | Controls communication between populations, regulating the exploration-exploitation transition [4]. | Start with a higher rate to favor exploration early on, and implement a schedule for it to decrease over iterations, shifting focus to exploitation. |
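The decreasing schedule recommended for the projection rate can be as simple as a linear decay. The sketch below is one such schedule; the start and end values (0.9 and 0.1) and the function name are illustrative assumptions, not values taken from [4].

```python
def projection_rate(iteration, max_iterations, start=0.9, end=0.1):
    """Linearly decay the rate from start (first iteration) to end (last)."""
    if max_iterations <= 1:
        return end
    # Clamp progress to [0, 1] so out-of-range iterations stay bounded.
    progress = min(max(iteration / (max_iterations - 1), 0.0), 1.0)
    return start + (end - start) * progress


schedule = [projection_rate(i, 100) for i in range(100)]
```

An exponential or cosine decay would follow the same pattern; the linear form is only the easiest one to reason about when tuning.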

Q: My model fails to learn any meaningful pattern, and the loss does not decrease. How can I troubleshoot this?

A: This can occur due to gradient imbalance or issues with the system's stiffness, particularly in complex dynamical systems [32] [33]. Recommended steps include:

- Normalize your data and ODEs: Implement a comprehensive normalization procedure for all inputs, outputs, and the governing differential equations to ensure stable training [33].

- Balance the loss functions: Use adaptive re-weighting to individually adjust the loss components for data fidelity and physical constraints (physics-informed approaches) [33].

- Respect temporal causality: Employ a sequential learning approach where training starts on a small time sub-domain and gradually expands to cover the entire domain [32].
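Two of the steps above lend themselves to a short sketch: growing the training time domain in stages, and re-weighting loss terms so that none dominates. The weighting rule used here (inverse of the current loss magnitude) is a simple heuristic chosen for illustration, not the adaptive scheme of [33]; all names are hypothetical.

```python
def time_windows(t_start, t_end, n_stages):
    """Return growing training sub-domains that end at the full domain."""
    span = t_end - t_start
    return [(t_start, t_start + span * k / n_stages) for k in range(1, n_stages + 1)]


def rebalance_weights(losses, eps=1e-12):
    """Weight each loss term inversely to its magnitude; weights sum to the term count."""
    inverse = {name: 1.0 / (value + eps) for name, value in losses.items()}
    scale = len(losses) / sum(inverse.values())
    return {name: w * scale for name, w in inverse.items()}


# Train on [0, 2.5] first, then [0, 5.0], and so on up to the full domain.
stages = time_windows(0.0, 10.0, n_stages=4)

# A physics residual 100x larger than the data loss gets a 100x smaller weight.
weights = rebalance_weights({"data": 1.0, "physics": 100.0})
```

In a training loop, the weights would typically be recomputed every few hundred steps from detached loss values rather than on every step.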

Experimental Protocols and Implementation

Q: Can you provide a standard workflow for implementing and testing the NPDOA on a new problem?

A: The following protocol outlines a standard methodology for applying NPDOA:

Protocol 1: Standard Implementation and Validation of NPDOA

1. Problem Formulation: Define the objective function, decision variables, and constraints for your single-objective optimization problem [4].

2. Algorithm Initialization:

   - Set the neural population size (N).

   - Initialize the neural state (firing rate) for each population randomly within the feasible domain.

   - Initialize parameters for the three strategies (see parameter table above).

3. Iterative Optimization:

   - Attractor Trending: For each population, calculate the movement towards the current best solution (attractor) to update its state [4].

   - Coupling Disturbance: Select other populations and compute the coupling effect to perturb the current state, encouraging exploration [4].

   - Information Projection: Update the overall state of each population by combining the attractor and coupling effects, weighted by the information projection rate [4].

   - Evaluate the fitness of the new population states.

   - Update the global best solution if improved solutions are found.

4. Termination & Validation: Repeat Step 3 until a termination criterion is met (e.g., max iterations, convergence threshold). Validate results against known benchmarks or alternative algorithms [4].
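The control flow of Protocol 1 can be sketched as below. This is a simplified illustration of the loop structure only: the update formulas are placeholders chosen for readability and are not the published NPDOA equations from [4]. The function name, parameter names, default values, and the sphere objective are all assumptions.

```python
import random


def npdoa_sketch(objective, lower, upper, pop_size=30, max_iter=200,
                 attractor_strength=0.5, coupling_strength=0.3, seed=0):
    """Simplified loop: trend to the best state, perturb via a peer, blend by a rate."""
    rng = random.Random(seed)
    dim = len(lower)
    # Initialization: random states inside the feasible box.
    states = [[rng.uniform(lower[d], upper[d]) for d in range(dim)]
              for _ in range(pop_size)]
    fitness = [objective(s) for s in states]
    best_idx = min(range(pop_size), key=fitness.__getitem__)
    best_state, best_fit = states[best_idx][:], fitness[best_idx]
    history = [best_fit]

    for it in range(max_iter):
        # Placeholder projection rate: decays from 1 to 0, shifting weight
        # from the disturbance term to the attractor term.
        rate = 1.0 - it / max(max_iter - 1, 1)
        for i in range(pop_size):
            j = rng.choice([k for k in range(pop_size) if k != i])
            new_state = []
            for d in range(dim):
                # Attractor trending: move toward the current best state.
                trend = attractor_strength * rng.random() * (best_state[d] - states[i][d])
                # Coupling disturbance: perturb using another population.
                disturb = coupling_strength * rng.uniform(-1.0, 1.0) * (states[j][d] - states[i][d])
                value = states[i][d] + (1.0 - rate) * trend + rate * disturb
                new_state.append(min(max(value, lower[d]), upper[d]))  # keep feasible
            states[i], fitness[i] = new_state, objective(new_state)
            if fitness[i] < best_fit:
                best_state, best_fit = new_state[:], fitness[i]
        history.append(best_fit)
    return best_state, best_fit, history


def sphere(x):
    """Illustrative benchmark objective with its minimum at the origin."""
    return sum(v * v for v in x)


best_state, best_fit, history = npdoa_sketch(sphere, [-5.0] * 3, [5.0] * 3, max_iter=50)
```

The returned `history` gives a convergence curve that can be compared across parameter settings or against other algorithms on the same benchmark.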

Q: How can I visualize the concept of cross-attractor dynamics in my research?

A: The dynamics of neural populations can be conceptualized as moving through a landscape of attractors. The following diagram illustrates this theoretical framework, which is key to understanding the NPDOA's inspiration [34].

The Scientist's Toolkit: Research Reagent Solutions

Essential computational tools and models used in the field of neural population dynamics and bio-inspired optimization.

| Item | Function in Research |
|---|---|
| Wilson-Cowan Type Model | A biophysical network model used to simulate the mean-field activity of excitatory and inhibitory neuronal populations, forming the basis for analyzing multistable dynamics [34]. |
| Physics-Informed Neural Networks (PINNs) | A deep learning framework that incorporates physical laws (e.g., ODEs) as loss functions, used for solving forward and inverse problems in dynamical systems with limited data [32] [33]. |
| NEURON Simulator | A widely used simulation environment for building and testing computational models of neurons and networks of neurons [35]. |
| Cross-Attractor Coordination Analysis | A methodological framework to examine how regional brain states are correlated across all attractors, providing a better prediction of functional connectivity than single-attractor models [34]. |
| PlatEMO | A MATLAB-based platform for experimental evaluation of multi-objective optimization algorithms, used in NPDOA validation [4]. |

Troubleshooting Guide: Common MARBLE Experimentation Issues

Q1: My MARBLE model fails to learn consistent latent representations across different animals or sessions. What could be wrong?

A: This inconsistency often stems from the model's inability to find meaningful dynamical overlap. To resolve this:

- Verify Local Flow Field (LFF) Calculation: Ensure the proximity graph (often a k-nearest neighbor graph) is correctly constructed from your neural state point cloud `X_c`. An incorrect graph will lead to erroneous LFFs [3] [36].

- Check Hyperparameters: The order `p` of the local approximation is critical. A value that is too low may miss important dynamical context, while one that is too high can overfit to noise. Tune `p` and other hyperparameters like learning rate as detailed in the method's supplementary tables [3].

- Confirm Dynamical Consistency: MARBLE assumes trials under the same user-defined condition `c` are dynamically consistent. Review your condition labels to ensure trials within a condition are governed by the same underlying process [3].
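A quick way to check the proximity graph before trusting any downstream flow fields is to build a k-nearest neighbor graph yourself and inspect its connectivity. The sketch below is independent of the MARBLE package and does not reproduce its graph construction; the function names and toy point clouds are illustrative assumptions.

```python
import math


def knn_graph(points, k):
    """Map each point index to the indices of its k nearest neighbors (Euclidean)."""
    graph = {}
    for i, p in enumerate(points):
        others = (j for j in range(len(points)) if j != i)
        graph[i] = sorted(others, key=lambda j: math.dist(p, points[j]))[:k]
    return graph


def graph_sanity_report(graph):
    """Count connected components and the minimum degree of the undirected graph."""
    undirected = {i: set(nbrs) for i, nbrs in graph.items()}
    for i, nbrs in graph.items():
        for j in nbrs:
            undirected[j].add(i)
    seen, components = set(), 0
    for start in undirected:
        if start in seen:
            continue
        components += 1
        stack = [start]
        while stack:  # depth-first traversal of one component
            node = stack.pop()
            if node not in seen:
                seen.add(node)
                stack.extend(undirected[node] - seen)
    return {"n_components": components,
            "min_degree": min(len(n) for n in undirected.values())}


# A closed trajectory sampled at 12 states should form one connected graph.
ring = [(math.cos(2 * math.pi * i / 12), math.sin(2 * math.pi * i / 12)) for i in range(12)]
ring_report = graph_sanity_report(knn_graph(ring, k=2))

# Two well-separated clusters split into two components: a warning sign.
clusters = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
cluster_report = graph_sanity_report(knn_graph(clusters, k=2))
```

More than one component within a single condition suggests that `k` is too small or that the states do not lie on one connected manifold, either of which would distort the local flow fields.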

Q2: The decoded behavior from the latent space is inaccurate. How can I improve decoding performance?

A: Within- and across-animal decoding accuracy is a key strength of MARBLE. If performance is poor:

- Inspect Input Data Quality: MARBLE requires neural firing rates. Confirm that your preprocessing pipeline correctly extracts these rates from raw recordings [36].

- Benchmark Against Baselines: Compare your decoding results against current methods like CEBRA or LFADS using the same dataset. Unsupervised MARBLE has been shown to achieve state-of-the-art or significantly better accuracy, so a large discrepancy may indicate an implementation error [3] [36].

- Leverage the Manifold Structure: The method relies on the low-dimensional manifold hypothesis. Use techniques like PCA as an initial check to verify that your neural population data exhibits this structure [36].
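The PCA check mentioned in the last point can be done with a few lines of NumPy. The sketch below counts how many principal components are needed to reach a variance threshold; the 90% threshold, the function names, and the synthetic data (three latent signals mixed into 50 neurons) are assumptions for illustration.

```python
import numpy as np


def explained_variance_ratio(rates):
    """rates: (n_samples, n_neurons). Fraction of variance per principal component."""
    centered = rates - rates.mean(axis=0, keepdims=True)
    singular_values = np.linalg.svd(centered, compute_uv=False)
    variances = singular_values ** 2
    return variances / variances.sum()


def components_for_variance(rates, threshold=0.9):
    """Smallest number of components whose cumulative variance reaches threshold."""
    cumulative = np.cumsum(explained_variance_ratio(rates))
    count = int(np.searchsorted(cumulative, threshold)) + 1
    return min(count, cumulative.size)  # guard against rounding at threshold = 1.0


# Synthetic firing rates: 3 latent signals mixed into 50 neurons plus small noise.
rng = np.random.default_rng(0)
latents = rng.standard_normal((500, 3))
mixing = rng.standard_normal((3, 50))
rates = latents @ mixing + 0.05 * rng.standard_normal((500, 50))

n_components = components_for_variance(rates, threshold=0.9)
```

If the count is close to the number of recorded neurons, the data show little low-dimensional structure under a linear view, and it is worth revisiting preprocessing before tuning the model.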

Q3: I am getting poor alignment of dynamical flows from different recording sessions.

A: This issue relates to the core metric of dynamical similarity.

- Review the Similarity Metric: MARBLE uses the Optimal Transport distance between the latent distributions `P_c` and `P_c'` to quantify dynamical overlap. Verify your implementation of this distance metric, as it generally outperforms entropic measures like KL-divergence for this purpose [3] [36].

- Operate in the Correct Mode: MARBLE can run in an "embedding-agnostic" mode, which uses inner product features to achieve invariance to local rotations of the LFFs. This is essential for comparing across sessions or animals where the neural embedding may differ. Ensure you are using this mode for cross-session alignment [3].
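When verifying a distance implementation, it helps to have a case with a known answer. The sketch below computes the 1-Wasserstein (optimal transport) distance between two equal-size one-dimensional samples, for which the optimal coupling simply matches sorted values. It is a reference check on a simplified setting, not MARBLE's computation on multi-dimensional latent distributions; the function name is hypothetical.

```python
def wasserstein_1d(a, b):
    """W1 distance between two equal-size 1-D empirical samples."""
    if len(a) != len(b):
        raise ValueError("this reference check assumes equal sample sizes")
    # In one dimension the optimal transport plan pairs the sorted samples.
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


sample = [0.0, 1.0, 2.0]
# Shifting every point by a constant moves the distribution by exactly that amount.
shifted_by_3 = wasserstein_1d(sample, [v + 3.0 for v in sample])
shifted_by_30 = wasserstein_1d(sample, [v + 30.0 for v in sample])
identical = wasserstein_1d(sample, sample)
```

The two shifted cases have disjoint supports, yet the distance still grows with the size of the shift. A histogram-based KL-divergence would be infinite in both cases, which is one reason transport distances give a more graded comparison.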

Frequently Asked Questions (FAQs)

Q1: What is the primary innovation of the MARBLE framework compared to methods like PCA or LFADS?

A: MARBLE's key innovation is its focus on learning from local dynamical flow fields (LFFs) over the neural manifold, rather than from static neural states or global trajectories. While PCA and UMAP treat neural activity as a static point cloud, and LFADS models the temporal evolution of single trajectories, MARBLE uses geometric deep learning to create a distributional representation of the underlying dynamics. This allows it to compare computations in a way that is invariant to the specific neural embedding, leading to highly interpretable latent spaces and robust across-animal decoding without requiring behavioral labels [3] [36].

Q2: Can MARBLE be used in a fully unsupervised manner, and if so, how does it learn without labels?

A: Yes, MARBLE is designed as a fully unsupervised framework. It uses a contrastive learning objective that leverages the natural continuity of the manifold. The core idea is that LFFs from adjacent points on the manifold should be more similar to each other than to LFFs from distant, unrelated points. This self-supervision signal allows the model to learn a meaningful organization of the latent space without any external labels like behavior or stimulus, which is crucial for the unbiased discovery of neural computational structure [3].

Q3: What types of neural computations and behavioral variables has MARBLE been shown to capture?

A: In benchmarks on both simulated and experimental data, MARBLE has been shown to infer latent representations that parametrize high-dimensional dynamics related to several key cognitive computations. These include gain modulation, decision-making, and changes in internal state (e.g., during a reaching task in primates and spatial navigation in rodents). The representations are consistent enough to train universal decoders and compare computations across different individuals [3] [36].

Q4: How does MARBLE handle the "neural embedding problem," where different neurons are recorded across sessions?

A: MARBLE addresses this through its local viewpoint and architectural design. By decomposing dynamics into local flow fields and then mapping them to a shared latent space, it focuses on the intrinsic dynamical process rather than the specific set of recorded neurons. Furthermore, the network's "inner product features" make the latent vectors invariant to local rotations of the LFFs, which correspond to different embeddings of the neural states in the measured population activity [3].

Experimental Protocols & Methodologies

Protocol 1: Inferring Latent Representations from Primate Premotor Cortex Data

Objective: To obtain a decodable and interpretable latent representation of neural population dynamics during a reaching task.

Materials:

- Neural Data: Single-neuron population recordings from the premotor cortex of a macaque [3] [36].

- Software: MARBLE package (available on GitHub [37]).

Methodology:

- Data Preprocessing: Extract trial-aligned neural firing rates `{x(t; c)}` for each condition `c` (e.g., different reach targets).

- Input Configuration: Provide the ensemble of trials `{x(t; c)}` and the user-defined condition labels `c` as input to MARBLE. The labels are not class assignments but indicate which trials are expected to be dynamically consistent.

- Model Execution:

  - The algorithm constructs a proximity graph from the point cloud `X_c` of all neural states [3] [36].

  - It approximates the vector field `F_c` and decomposes it into Local Flow Fields (LFFs) for each neural state.

  - The geometric deep learning network maps each LFF to a latent vector `z_i`, forming the distributional representation `P_c` [3].

- Output Analysis: The latent vectors `Z_c` can be visualized and used to decode kinematic variables, demonstrating the interpretability of the representation.

Protocol 2: Comparing Cognitive Computations Across RNNs

Objective: To use MARBLE's similarity metric to detect subtle changes in high-dimensional dynamical flows of RNNs trained on cognitive tasks.

Materials:

- In Silico Data: Trained Recurrent Neural Networks (RNNs), such as those performing decision-making tasks with varying gain or decision thresholds [3] [36].

Methodology:

- Data Generation: Simulate or extract the internal activations (hidden states) of the RNNs under different task conditions or parameter settings.

- MARBLE Application: Run MARBLE on the neural states (RNN activations) from each system or condition to be compared.

- Distance Calculation: After training, compute the Optimal Transport distance