Optimizing EEG Artifact Removal: From Foundational Principles to Advanced AI-Driven Techniques

Abstract

This article provides a comprehensive analysis of state-of-the-art optimization techniques for electroencephalogram (EEG) artifact removal, tailored for researchers and drug development professionals. It explores the fundamental challenge of distinguishing neural signals from physiological and technical artifacts, reviews a spectrum of methods from traditional blind source separation to cutting-edge deep learning models like autoencoder-targeted adversarial transformers, and delivers a rigorous comparative framework for performance validation. The content further addresses critical troubleshooting and optimization strategies for real-world clinical and research applications, culminating in a discussion on future directions and implications for enhancing data integrity in neuroscience research and clinical trials.

Understanding EEG Artifacts: A Foundational Guide to Sources, Impacts, and Detection Challenges

Electroencephalography (EEG) is designed to record cerebral activity, but it also captures electrical activities arising from sites other than the brain. These unwanted signals, known as artifacts, can obscure crucial neural signals and compromise data quality, making their identification and removal essential for accurate analysis [1] [2]. Artifacts are classified into two main categories: physiological artifacts, which originate from the patient's body, and non-physiological artifacts, which arise from external sources such as equipment or the environment [1] [3]. Because EEG signals are typically in the microvolt range, they are highly susceptible to contamination from these sources, which can have amplitudes much larger than the neural signals of interest [4] [2]. Effectively managing these artifacts is a critical step in EEG preprocessing, particularly in research contexts aimed at optimizing removal techniques.

Types of EEG Artifacts and Their Signatures

Understanding the origin and characteristics of different artifacts is the first step in troubleshooting contamination in EEG recordings. The following tables summarize the key features of common physiological and non-physiological artifacts.

Table 1: Physiological EEG Artifacts

| Artifact Type | Origin | Time-Domain Signature | Frequency-Domain Signature | Topographic Distribution |
|---|---|---|---|---|
| Ocular (Blink) | Corneo-retinal dipole; eyelid movement [5] [6] | High-amplitude, smooth deflections [5] | Delta/Theta bands (0.5–4 Hz / 4–8 Hz) [2] | Bifrontal (Fp1, Fp2); symmetric [5] |
| Ocular (Lateral Movement) | Corneo-retinal dipole during saccades [3] | Box-shaped deflections with opposite polarity [3] | Delta/Theta bands, effects up to 20 Hz [3] | Frontotemporal (F7, F8); asymmetric [1] |
| Muscle (EMG) | Muscle contractions (e.g., jaw, forehead, neck) [1] [2] | High-frequency, irregular, low-amplitude "spiky" activity [5] [2] | Broadband, dominates Beta/Gamma (>13 Hz) [2] | Frontal, Temporal regions; can be focal or diffuse [1] [3] |
| Cardiac (ECG/Pulse) | Electrical activity of the heart; arterial pulsation [1] [2] | Rhythmic, sharp waveforms time-locked to QRS complex [5] [7] | Overlaps multiple EEG bands [2] | Diffuse, but often more prominent on the left side [5] |
| Glossokinetic | Tongue movement (dipole: tip negative, base positive) [1] | Slow, delta-frequency waves [1] [5] | Delta band [5] | Broad field, maximal inferiorly [1] |
| Sweat | Changes in skin impedance from sweat glands [2] [3] | Very slow baseline drifts (<0.5 Hz) [5] [3] | Very low frequencies (<1 Hz) [2] [3] | Often bilateral, but can be unilateral or focal [5] |
| Respiration | Chest/head movement altering electrode contact [1] [2] | Slow, rhythmic waveforms synchronized with breathing [2] | Delta/Theta bands [2] | Often posterior if patient is supine [1] |

Table 2: Non-Physiological (Technical) EEG Artifacts

| Artifact Type | Origin | Time-Domain Signature | Frequency-Domain Signature | Topographic Distribution |
|---|---|---|---|---|
| Electrode Pop | Sudden change in electrode-skin impedance [5] [2] | Abrupt, high-amplitude transient with steep upslope [5] | Broadband, non-stationary [2] | Limited to a single electrode [1] [5] |
| Cable Movement | Cable movement causing electromagnetic interference [1] [2] | Chaotic, high-amplitude deflections; can be rhythmic [2] | Can introduce peaks at low or mid frequencies [2] | Often affects multiple channels [3] |
| Line Noise | Electromagnetic interference from AC power [1] [4] | Persistent, high-frequency oscillation [3] | Sharp peak at 50 Hz or 60 Hz [5] [2] | All channels, but severity can vary [3] |
| Loose Electrode | Disrupted contact between electrode and scalp [3] | Slow drifts and/or sudden "pops" [3] | Slow drifts affect very low frequencies [3] | Typically a single electrode, but reference affects all [2] |

Experimental Protocols for Artifact Identification and Removal

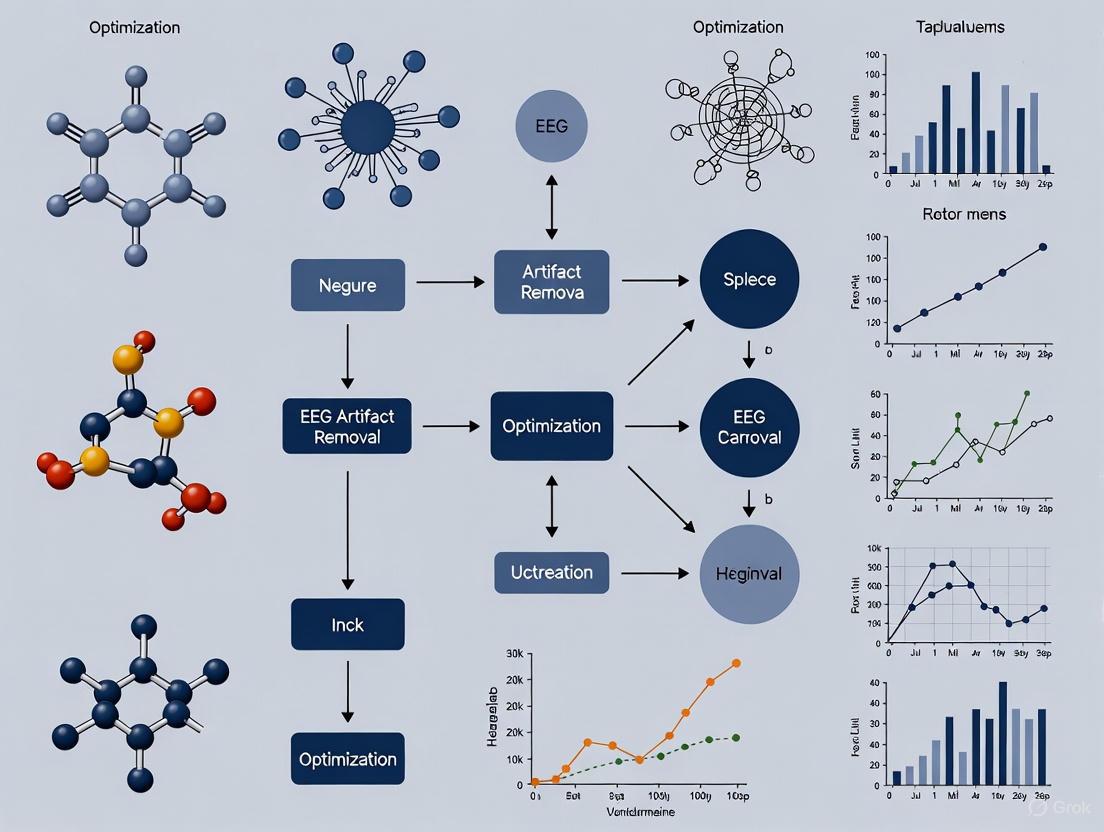

Workflow for Systematic EEG Artifact Handling

A standardized workflow is crucial for ensuring consistent and effective artifact management in EEG research. The following diagram outlines a recommended protocol from recording to clean data.

EEG Artifact Handling Workflow

Protocol for Independent Component Analysis (ICA)

ICA is a widely used blind source separation method for isolating and removing artifacts, particularly effective for ocular and muscular contamination [6] [2].

Principle: ICA decomposes multi-channel EEG data into statistically independent components (ICs), each with a fixed scalp topography and time course [6]. The underlying assumption is that artifacts and neural signals originate from statistically independent sources.

Step-by-Step Methodology:

- Preprocessing: Band-pass filter the data (e.g., 1-40 Hz) to remove slow drifts and high-frequency noise that can interfere with ICA decomposition [6].

- Data Decomposition: Apply an ICA algorithm (e.g., Infomax, FastICA) to the preprocessed data. This results in:

  - A mixing matrix, which defines how the components project to the scalp sensors.

  - The independent components (ICs) themselves, which are the time courses of the sources.

- Component Classification: Identify artifact-laden components. This can be done:

  - Manually: Inspecting component topography (e.g., frontal projection for blink artifacts) and time course (e.g., high-frequency bursts for EMG) [2].

  - Automatically: Using trained classifiers (e.g., ICLabel, MARA) that label components based on spatial, spectral, and temporal features.

- Component Removal: Subtract the identified artifact components from the data by projecting only the "brain" components back to the sensor space.

- Validation: Visually inspect the cleaned data to ensure the artifact is removed and cerebral activity is preserved.

Considerations: ICA requires a high number of EEG channels (typically >40 is ideal) and stationarity of the signal [6]. It is computationally intensive and may require manual intervention for component selection.
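The ICA steps above can be sketched end to end on synthetic data. This is a minimal NumPy implementation of symmetric FastICA with a tanh contrast. It is not Infomax, and it is not the EEGLAB or MNE implementation. The three-channel mixture, the blink shape, and the rule "highest-kurtosis component = blink" are illustrative assumptions. That rule is only a crude stand-in for manual inspection or a classifier such as ICLabel.

```python
import numpy as np


def sym_decorrelate(w):
    """Return (W W^T)^(-1/2) W so that the rows of W stay orthonormal."""
    s, u = np.linalg.eigh(w @ w.T)
    return u @ np.diag(1.0 / np.sqrt(s)) @ u.T @ w


def fastica(x, max_iter=500, tol=1e-8, seed=0):
    """Symmetric FastICA with a tanh contrast; x has shape (n_channels, n_samples)."""
    mean = x.mean(axis=1, keepdims=True)
    xc = x - mean
    d, e = np.linalg.eigh(np.cov(xc))                  # whiten with the channel covariance
    whitener = e @ np.diag(1.0 / np.sqrt(d)) @ e.T
    z = whitener @ xc
    n = z.shape[0]
    w = sym_decorrelate(np.random.default_rng(seed).standard_normal((n, n)))
    for _ in range(max_iter):
        g = np.tanh(w @ z)
        w_new = g @ z.T / z.shape[1] - (1.0 - g ** 2).mean(axis=1)[:, None] * w
        w_new = sym_decorrelate(w_new)
        # converged when each new row is (up to sign) parallel to the old one
        done = np.max(np.abs(np.abs(np.sum(w_new * w, axis=1)) - 1.0)) < tol
        w = w_new
        if done:
            break
    unmixing = w @ whitener
    sources = unmixing @ xc                            # IC time courses
    mixing = np.linalg.inv(unmixing)                   # column k = scalp projection of IC k
    return sources, mixing, mean


def excess_kurtosis(s):
    c = s - s.mean(axis=1, keepdims=True)
    return (c ** 4).mean(axis=1) / (c ** 2).mean(axis=1) ** 2 - 3.0


# Hypothetical 3-channel recording: two rhythmic "neural" sources plus sparse blinks.
fs = 250
t = np.arange(0, 10, 1 / fs)
alpha = np.sin(2 * np.pi * 10 * t)
saw = (6 * t) % 1 - 0.5
blink = sum(np.exp(-((t - c) / 0.05) ** 2) for c in (1, 3, 5, 7, 9))
true_sources = np.vstack([alpha, saw, blink])
mixing_true = np.array([[1.0, 0.5, 2.0],    # frontal-like channel: strongest blink weight
                        [0.8, 1.0, 0.5],
                        [0.3, 1.0, 0.1]])
rng = np.random.default_rng(1)
eeg = mixing_true @ true_sources + 0.01 * rng.standard_normal((3, t.size))

sources, mixing, mean = fastica(eeg)
blink_ic = int(np.argmax(excess_kurtosis(sources)))   # stand-in for manual/ICLabel labelling
kept = sources.copy()
kept[blink_ic] = 0.0                                   # remove the artifact component
cleaned = mixing @ kept + mean                         # back-project the remaining ICs

neural_ch0 = mixing_true[0, :2] @ true_sources[:2]     # channel 0 as it would be without blinks
print(round(float(np.corrcoef(cleaned[0], neural_ch0)[0, 1]), 3))
```

Zeroing one row of the source matrix and multiplying by the mixing matrix is the back-projection described in the Component Removal step. With real recordings, the kurtosis heuristic would be replaced by inspecting topography and time course, or by a trained classifier.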

Protocol for Regression-Based Methods

Regression is a traditional approach, particularly effective for correcting ocular artifacts when a reference electrooculogram (EOG) channel is available [6].

Principle: The method assumes a linear and time-invariant relationship between the EOG reference and the artifact present in the EEG channels. It models and subtracts this contribution [6].

Step-by-Step Methodology (Gratton & Coles Algorithm):

- Calibration Phase: Record a segment of data where the participant produces spontaneous blinks and eye movements. This is used to estimate the propagation factor (weight, β) of the ocular artifact from the EOG channel to each EEG channel [6].

- Filtering:

  - Filter the raw EEG signal to the frequency band of interest.

  - Low-pass filter the EOG signal (cut-off ~15 Hz) to eliminate high-frequency disturbances [6].

- Regression Coefficient Estimation: For each EEG channel, calculate the weight β_ei that best predicts the artifact in the EEG based on the EOG signal.

- Artifact Subtraction: For the main experimental data, subtract the EOG signal scaled by the calculated β_ei from each corresponding EEG channel [6]:

  $$\text{EEG}_{\text{clean}} = \text{EEG}_{\text{raw}} - \beta_{ei} \cdot \text{EOG}$$

Considerations: This method requires a separate EOG recording. A limitation is the risk of over-correction, where genuine brain signals correlated with the EOG are also subtracted [6].
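Below is a minimal NumPy sketch of the calibrate-then-subtract idea. In a calibration block, β is estimated as the ordinary least-squares slope of each EEG channel on the EOG. That β is then reused on task data. The simulated signals and the `beta_true` values are hypothetical, and the ~15 Hz low-pass of the EOG is omitted for brevity. The sketch is not a full reimplementation of the published algorithm.

```python
import numpy as np


def estimate_beta(eeg_cal, eog_cal):
    """Least-squares propagation factor of the EOG into each EEG channel.

    eeg_cal: (n_channels, n_samples) calibration EEG; eog_cal: (n_samples,) EOG.
    """
    eog_c = eog_cal - eog_cal.mean()
    eeg_c = eeg_cal - eeg_cal.mean(axis=1, keepdims=True)
    return (eeg_c @ eog_c) / (eog_c @ eog_c)


def subtract_eog(eeg, eog, beta):
    """Clean_EEG = Raw_EEG - beta * EOG, applied to every channel at once."""
    return eeg - np.outer(beta, eog)


rng = np.random.default_rng(0)
beta_true = np.array([0.40, 0.15, 0.05])   # hypothetical: frontal > central > posterior


def simulate(n_samples):
    eog = np.cumsum(rng.standard_normal(n_samples))   # slow, large random-walk "EOG"
    neural = rng.standard_normal((beta_true.size, n_samples))
    return neural + np.outer(beta_true, eog), eog, neural


eeg_cal, eog_cal, _ = simulate(5000)                 # calibration block
beta = estimate_beta(eeg_cal, eog_cal)
eeg_task, eog_task, neural_task = simulate(5000)     # experimental block
cleaned = subtract_eog(eeg_task, eog_task, beta)
print(np.round(beta, 2))
```

The simulated EOG here contains no brain activity, so the subtraction is clean. In real recordings, frontal EEG that reaches the EOG electrodes is subtracted as well, which is the over-correction risk noted above.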

Protocol for Deep Learning-Based Removal

Deep learning represents a modern, data-driven approach to artifact removal, capable of learning complex features directly from contaminated EEG [8].

Principle: Neural networks, such as Convolutional Neural Networks (CNNs) and Long Short-Term Memory (LSTM) networks, are trained to map contaminated EEG signals to their clean counterparts [8].

Step-by-Step Methodology (e.g., CLEnet Model):

- Dataset Preparation: A large dataset of paired contaminated and clean EEG is required for supervised training. This is often created semi-synthetically by adding recorded artifacts (e.g., EMG, EOG) to clean EEG templates [8].

- Network Architecture:

  - Dual-scale CNN: Extracts morphological features from the EEG signal at different temporal scales.

  - LSTM: Captures the temporal dependencies and sequential nature of the EEG.

  - Attention Mechanism (e.g., EMA-1D): Helps the network focus on the most relevant features for separating artifact from brain signal [8].

- Training: The network is trained end-to-end using a loss function like Mean Squared Error (MSE) to minimize the difference between the model's output and the clean ground-truth EEG.

- Inference: The trained model takes new, contaminated EEG data as input and directly outputs the cleaned signal.

Considerations: This method requires extensive computational resources and large, well-annotated datasets for training. A key advantage is its potential to remove multiple types of artifacts without needing manual intervention or reference channels [8].
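The semi-synthetic Dataset Preparation step can be sketched as follows. For each pair, the scaling factor λ is solved so that the mixture hits a target SNR. SNR is assumed here to be 20·log10 of the RMS ratio between the clean EEG and the scaled artifact. Benchmarks differ in how they define SNR, so check the definition used by the dataset you follow.

The random signals, the 2-s segment length, the SNR range, and the per-segment scaling are hypothetical placeholders. They stand in for real clean-EEG and artifact recordings.

```python
import numpy as np


def rms(x):
    return np.sqrt(np.mean(x ** 2))


def mix_at_snr(clean, artifact, snr_db):
    """Return (clean + lam * artifact, lam) with lam chosen to hit snr_db.

    Assumed SNR definition: 20 * log10(RMS(clean) / RMS(lam * artifact)).
    """
    lam = rms(clean) / (rms(artifact) * 10 ** (snr_db / 20))
    return clean + lam * artifact, lam


rng = np.random.default_rng(0)
n_pairs, n_samples = 100, 2 * 256                       # hypothetical: 2-s segments at 256 Hz
clean_eeg = rng.standard_normal((n_pairs, n_samples))   # placeholder for clean EEG segments
artifacts = np.cumsum(rng.standard_normal((n_pairs, n_samples)), axis=1)  # placeholder EOG-like drifts
snr_targets = rng.uniform(-7, 2, size=n_pairs)          # hypothetical SNR range in dB

inputs, targets = [], []
for x, a, snr in zip(clean_eeg, artifacts, snr_targets):
    y, _ = mix_at_snr(x, a, snr)
    sd = y.std()                                        # assumed: scale input and target by the input's std
    inputs.append(y / sd)
    targets.append(x / sd)
inputs, targets = np.stack(inputs), np.stack(targets)

# MSE of the identity mapping: a trained denoiser should beat this on held-out pairs.
baseline_mse = np.mean((inputs - targets) ** 2)
```

The last line gives a useful reference point for the MSE training loss described above. A model is only helpful if its held-out MSE falls below that of simply returning the contaminated input.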

The Scientist's Toolkit: Key Reagents & Computational Solutions

Table 3: Essential Tools for EEG Artifact Research

| Tool / Solution | Category | Primary Function | Application in Research |
|---|---|---|---|
| ICA (e.g., Infomax) | Algorithm | Blind source separation | Isolate and remove ocular, muscular, and cardiac components from high-density EEG [6] [2] |
| Artifact Subspace Reconstruction (ASR) | Algorithm | Statistical detection & reconstruction | Clean continuous EEG in real-time or offline; effective for large-amplitude, non-stationary artifacts [6] |
| Deep Learning Models (e.g., CNN-LSTM) | Algorithm | End-to-end signal mapping | Remove multiple artifact types simultaneously without reference channels; adaptable to multi-channel data [2] [8] |
| Regression-Based Methods | Algorithm | Linear artifact subtraction | Remove ocular artifacts using EOG reference channels; useful for calibration-based studies [6] |
| EOG/ECG Reference Electrodes | Hardware | Record artifact sources | Provide reference signals for regression-based methods and validation of artifact removal [6] |
| High-Density EEG Systems (64+ channels) | Hardware | Data acquisition | Improve spatial resolution and the performance of source separation methods like ICA [6] |
| Notch / Band-Pass Filters | Signal Processing | Frequency-based filtering | Remove line noise (50/60 Hz) and limit signal bandwidth to biologically plausible ranges [5] [3] |

Troubleshooting Guide & FAQs

FAQ 1: How can I distinguish a frontal epileptic spike from an eye blink artifact?

Answer: Key distinguishing features are topography and waveform morphology. Eye blinks appear as high-amplitude, symmetric, smooth deflections maximal at Fp1 and Fp2, with no electrical field spreading to the occipital region. In contrast, frontal spikes are typically sharper, may have a more localized field, and are often followed by a slow wave. Critically, genuine spikes will have a visible electrical field that propagates to adjacent electrodes, which blinks lack [5].

FAQ 2: My EEG data shows rhythmic, high-frequency "buzz" in the temporal channels. What is this, and how can I remove it?

Answer: This is most likely electromyogenic (EMG) artifact from the temporalis muscles, often caused by jaw clenching or tension. It is characterized by high-frequency, low-amplitude "spiky" activity [5]. Removal can be challenging due to its broadband nature. Recommended removal techniques include:

- ICA: Effective if the muscle activity is transient. Components representing EMG often have a circumscribed topography and a high-frequency, random time course [2].

- Advanced Filtering: Time-frequency based techniques (e.g., wavelet denoising) can target specific contaminated segments.

- Deep Learning: Models like CLEnet have shown efficacy in removing EMG artifacts from multi-channel data [8].

FAQ 3: I see a regular, sharp waveform in my EEG that coincides with the heartbeat. Is this a cerebral signal?

Answer: This is almost certainly a cardiac artifact. It can manifest as:

- ECG artifact: Direct pick-up of the heart's electrical signal, appearing as a rhythmic sharp wave time-locked to the QRS complex [5].

- Pulse artifact: Mechanical pulsation of a scalp artery under an electrode, causing a slow wave that follows the QRS complex by 200-300 ms [1].

To confirm, check synchronization with a dedicated ECG channel. For removal, ICA is often effective. If an ECG channel is available, regression-based methods or more sophisticated approaches like ASR can be used [3].

FAQ 4: What is the best single method for removing all types of artifacts?

Answer: There is no universal "best" method; the optimal choice depends on the artifact type, EEG setup, and research goal. The table below provides a guide:

Table 4: Artifact Removal Method Selection Guide

| Artifact Type | Recommended Methods | Notes & Considerations |
|---|---|---|
| Ocular (Blinks, Saccades) | ICA, Regression-based [6] [3] | Regression requires EOG channel; ICA is more common for high-density data. |
| Muscle (EMG) | ICA, Deep Learning [2] [8] | ICA works best for transient bursts; Deep Learning shows promise for persistent EMG. |
| Cardiac (ECG/Pulse) | ICA [3] | Very effective when the artifact is consistent. |
| Line Noise (50/60 Hz) | Notch Filter [5] [4] | Use a narrow bandwidth to minimize signal loss. |
| Electrode Pop | Artifact Rejection, Interpolation [3] | Remove the affected epoch or interpolate the bad channel. |
| Sweat/Slow Drift | High-Pass Filtering (e.g., 0.5 Hz cut-off) [3] | Removes slow baseline wander; an online (hardware) high-pass during acquisition also helps prevent amplifier saturation. |
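For the line-noise row, a minimal SciPy notch-filter sketch is shown below. It assumes a 500 Hz sampling rate and 50 Hz mains; use 60 Hz where applicable. The quality factor Q sets the notch width (bandwidth ≈ f0/Q), so a larger Q gives the narrow notch recommended above. `filtfilt` runs the filter forward and backward to avoid phase distortion. The signal amplitudes are illustrative.

```python
import numpy as np
from scipy.signal import filtfilt, iirnotch

fs = 500.0                                       # assumed sampling rate (Hz)
t = np.arange(int(20 * fs)) / fs                 # 20 s of data
eeg = np.sin(2 * np.pi * 10 * t)                 # 10 Hz activity to preserve
noisy = eeg + 0.5 * np.sin(2 * np.pi * 50 * t)   # 50 Hz mains pickup

# iirnotch uses Q = f0 / bandwidth, so Q = 30 gives a notch about 1.7 Hz wide at 50 Hz.
b, a = iirnotch(w0=50.0, Q=30.0, fs=fs)
cleaned = filtfilt(b, a, noisy)                  # forward-backward pass: zero phase shift


def amplitude_at(x, freq, fs):
    """Amplitude of the FFT bin closest to freq (exact for on-bin sinusoids)."""
    freqs = np.fft.rfftfreq(x.size, 1 / fs)
    spectrum = 2 * np.abs(np.fft.rfft(x)) / x.size
    return spectrum[np.argmin(np.abs(freqs - freq))]


print(amplitude_at(noisy, 50, fs), amplitude_at(cleaned, 50, fs))
```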

FAQ 5: When should I reject data segments versus using a correction algorithm?

Answer: As a general rule, reject data segments if the artifact is:

- Extreme: Saturation of amplifiers or massive movement artifacts that obliterate the EEG signal [3].

- Infrequent: Occurring in only a small percentage of trials, making rejection a more straightforward and conservative approach.

Use correction algorithms (e.g., ICA, ASR, Deep Learning) when:

- The artifact is persistent or frequent, and rejecting contaminated segments would lead to an unacceptable loss of data.

- The artifact overlaps in time with the event-related potential (ERP) or neural oscillation of interest.

- The artifact can be reliably isolated from the neural signal by the chosen algorithm [6] [8].
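The "extreme and infrequent" criterion can be checked with a simple peak-to-peak threshold. This NumPy sketch assumes epoched data in microvolts and an illustrative 150 µV threshold. Appropriate thresholds depend on the montage, reference, and population. The printed rejection rate shows whether rejection is affordable, or whether a correction algorithm would be the better choice.

```python
import numpy as np


def reject_epochs(epochs, ptp_threshold_uv=150.0):
    """Drop epochs whose peak-to-peak amplitude exceeds the threshold on any channel.

    epochs: (n_epochs, n_channels, n_samples) in microvolts.
    Returns (kept_epochs, rejected_mask).
    """
    ptp = epochs.max(axis=2) - epochs.min(axis=2)   # (n_epochs, n_channels)
    rejected = (ptp > ptp_threshold_uv).any(axis=1)
    return epochs[~rejected], rejected


rng = np.random.default_rng(0)
epochs = 10.0 * rng.standard_normal((40, 32, 500))   # ~10 uV background activity (simulated)
epochs[[3, 17], 0, 200:260] += 400.0                   # two epochs with a large, saturation-like event
kept, rejected = reject_epochs(epochs)
print(f"rejected {rejected.sum()} of {len(epochs)} epochs ({100 * rejected.mean():.1f}%)")
```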

In electroencephalography (EEG) research, an "artifact" is defined as any recorded electrical activity that does not originate from the brain [5]. Effective artifact management is crucial for data integrity, particularly in drug development and clinical research where accurate neural signal interpretation is paramount. Artifacts are broadly categorized into physiological (originating from the patient's body) and non-physiological (originating from external sources) [1]. Misinterpretation of artifacts can lead to false positives in event-related potential studies, inflated effect sizes, and biased source localization estimates, ultimately compromising research validity [9]. This guide provides a structured framework for researchers to identify, classify, and troubleshoot common EEG artifacts.

Artifact Classification Tables

Physiological Artifacts

Physiological artifacts arise from various bodily sources other than cerebral activity. The table below summarizes their characteristics and resolution strategies.

| Artifact Type | Typical Waveform/Morphology | Primary Scalp Location | Frequency Characteristics | Identification & Troubleshooting Tips |
|---|---|---|---|---|
| Eye Blinks [5] [1] | High-amplitude, positive deflection | Bifrontal (Fp1, Fp2) | Very slow (delta) | • Check video for correlation with blinks. • Should have no posterior field. • A key component of normal awake EEG. |
| Lateral Eye Movements [5] [1] | Phase reversals at F7/F8; opposing polarities | Frontotemporal | Very slow (delta) | • "Look to the positive side" on bipolar montage. • Right look: positivity at F8, negativity at F7. |
| Muscle (EMG) [5] [1] | Very fast, spiky, low-voltage activity | Frontal, Temporal (Frontalis, Temporalis) | High frequency (beta/gamma, often >30 Hz) | • Often from jaw clenching, talking. • Can be rhythmic in movement disorders. • Minimal over vertex. |
| Glossokinetic (Tongue) [5] [1] | Slow, broad potential field | Diffuse, maximal inferiorly | Delta | • Ask patient to say "la la la" to reproduce. • Often seen with chewing artifact. |
| Cardiac (ECG) [5] [1] | Sharp, rhythmic waveform time-locked to QRS | Left hemisphere, generalized | Corresponds to heart rate | • Correlate with simultaneous ECG channel. • More common in individuals with short, wide necks. |
| Pulse [1] | Slow, rhythmic waves | Over a pulsating artery | Very slow (delta) | • Time-locked to ECG but with a 200-300 ms delay. • Repositioning the affected electrode should resolve it. |
| Sweat [5] [1] | Very slow, drifting baseline | Generalized, can be focal | Very slow (<0.5 Hz) | • Caused by sodium chloride in sweat. • Adjust room temperature if possible. |

Non-Physiological Artifacts

Non-physiological artifacts originate from the environment, equipment, or improper setup. The table below outlines their common causes and solutions.

| Artifact Type | Typical Waveform/Morphology | Affected Electrodes/Channels | Frequency Characteristics | Identification & Troubleshooting Tips |
|---|---|---|---|---|
| Mains Interference [10] [1] | Monotonous, high-frequency waves | All channels, but worse in high-impedance electrodes | 50 Hz or 60 Hz | • Check and lower all electrode impedances. • Use a notch filter in post-processing. • Ensure proper grounding and distance from power sources. |
| Electrode Pop [5] [10] | Sudden, very steep upslope with slower decay | A single electrode | DC shift | • Caused by a loose or faulty electrode. • Check impedance and reapply the offending electrode. |
| Cable Movement [10] | Sudden, high-amplitude shifts | Multiple channels connected to the moved cable | Low to very high | • Use lightweight, actively shielded cables. • Secure cables to prevent sway. |
| Bad Channel/High Impedance [10] | Unbiological, noisy signal; often includes 50/60 Hz | One or a few specific channels | Variable | • Check impedances before recording. • Re-prep and reapply the problematic electrodes. |
| Headbox/Amplifier Issue [11] | Strange signal across all channels; oversaturation (grayed out) | All channels, particularly reference/ground | Variable | • Systematic troubleshooting required. • Swap headbox or amplifier to isolate the issue. |
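Bad or high-impedance channels can also be screened offline. A simple heuristic is to compare each channel's amplitude with the rest of the montage. The NumPy sketch below computes a robust z-score of each channel's standard deviation, using the median and MAD. The 5.0 threshold and the simulated faults are assumptions for illustration only. Flagged channels would then be re-prepped or interpolated, as described above.

```python
import numpy as np


def flag_bad_channels(data, z_threshold=5.0):
    """Indices of channels whose standard deviation is a robust outlier.

    data: (n_channels, n_samples). The median and MAD of the per-channel standard
    deviations are used so the bad channels themselves do not inflate the spread.
    Both unusually noisy and unusually flat channels are flagged.
    """
    sd = data.std(axis=1)
    med = np.median(sd)
    mad = 1.4826 * np.median(np.abs(sd - med))   # MAD scaled to match a Gaussian SD
    robust_z = (sd - med) / mad
    return np.flatnonzero(np.abs(robust_z) > z_threshold)


fs = 500
rng = np.random.default_rng(0)
data = 10.0 * rng.standard_normal((32, 10 * fs))
t = np.arange(10 * fs) / fs
data[5] += 40.0 * np.sin(2 * np.pi * 50 * t)    # high-impedance channel picking up mains
data[12] = 0.0                                  # flat channel (e.g., disconnected electrode)
bad = flag_bad_channels(data)
print(bad)
```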

Frequently Asked Questions (FAQs)

Q1: Why is it critical to accurately classify artifacts before removal in research?

A: Accurate classification is the first step toward targeted cleaning. Indiscriminate removal techniques, such as subtracting entire Independent Component Analysis (ICA) components, can remove neural signals along with artifacts. This not only results in data loss but can also artificially inflate effect sizes in event-related potential analyses and bias source localization [9]. Using strategies targeted to specific artifact types (e.g., removing only the artifact-dominated periods of a component) preserves neural data integrity.

Q2: A specific electrode shows sudden, large spikes with no field. What is the most likely cause?

A: This is the classic description of an electrode pop artifact [5] [10]. It is caused by a sudden change in impedance, typically due to a loose electrode. The solution is to check the impedance of the specific electrode and reapply it to ensure a stable connection to the scalp.

Q3: My EEG shows rhythmic, high-frequency "buzz" across all channels. What should I check first?

A: This points to mains interference (50/60 Hz) [10] [1]. Your first step should be to check the impedance of all electrodes, particularly the ground and reference. High impedance at any electrode, especially the ground, can make the entire system susceptible to this interference. Ensure all electrodes have a stable, low-impedance connection.
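Mains interference is worse at high-impedance electrodes. Ranking channels by how much of their power sits near the mains frequency can therefore suggest which electrode to check first. Below is a minimal NumPy sketch, assuming 50 Hz mains, a ±1 Hz window, and simulated data in which channel 2 stands in for a poorly connected electrode. If all channels show similar fractions, the ground or reference is the more likely culprit.

```python
import numpy as np


def line_noise_fraction(data, fs, line_freq=50.0, half_width=1.0):
    """Fraction of each channel's power within +/- half_width Hz of the mains frequency."""
    x = data - data.mean(axis=1, keepdims=True)
    freqs = np.fft.rfftfreq(x.shape[1], 1 / fs)
    power = np.abs(np.fft.rfft(x, axis=1)) ** 2
    near_line = np.abs(freqs - line_freq) <= half_width
    return power[:, near_line].sum(axis=1) / power.sum(axis=1)


fs = 500.0
t = np.arange(int(10 * fs)) / fs
rng = np.random.default_rng(0)
data = rng.standard_normal((4, t.size))
pickup = np.array([[0.2], [0.2], [2.0], [0.2]])      # channel 2: hypothetical poor contact
data += pickup * np.sin(2 * np.pi * 50 * t)
fractions = line_noise_fraction(data, fs)
print(np.round(fractions, 3), "-> check channel", int(np.argmax(fractions)), "first")
```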

Q4: I see rhythmic, slow-wave activity that doesn't look cerebral. How can I determine if it's a glossokinetic artifact?

A: You can perform a simple provocation test. Ask the participant to say "la la la" or another lingual phoneme. If the same slow-wave pattern appears on the EEG, it confirms the artifact is from tongue movement [5]. This artifact is often seen in conjunction with the muscle artifact from chewing.

The Scientist's Toolkit: Essential Reagents & Materials

The following table details key solutions and materials essential for effective EEG artifact management in a research context.

| Tool/Reagent | Primary Function | Application Notes |
|---|---|---|
| Abrasive Skin Prep Gel | Reduces scalp impedance by removing dead skin cells and oils. | Critical for achieving stable electrode connections. Reduces non-physiological artifacts like mains interference and electrode pop. |
| Conductive Electrode Paste/Gel | Provides a stable, low-impedance electrical pathway between scalp and electrode. | The quality and application directly impact signal stability and baseline noise. |
| Notch Filter | Digitally removes power line frequency (50/60 Hz) from the signal. | A post-processing tool for mitigating mains interference [10]. Use cautiously as it can slightly distort the signal. |
| Independent Component Analysis (ICA) | A blind source separation algorithm that statistically isolates neural and artifactual sources. | The cornerstone of modern artifact removal pipelines [9]. Allows for selective rejection of artifact components. |
| RELAX Pipeline | A standardized, automated EEG pre-processing pipeline. | Implements targeted artifact reduction methods to minimize effect size inflation and source localization bias [9]. |
| Wavelet Transform Toolboxes | Allow for time-frequency analysis and filtering of non-stationary signals. | Useful for detecting and removing transient artifacts like muscle twitches or electrode pops. |

Experimental Workflow for Systematic Artifact Identification and Troubleshooting

The diagram below outlines a systematic protocol for identifying and resolving EEG artifacts during data acquisition, a critical skill for every researcher.

Figure 1: A systematic troubleshooting workflow for common EEG artifacts, based on a hierarchical approach to problem-solving [11].

Troubleshooting Guides

Ocular Artifact (EOG) Troubleshooting Guide

Q: My EEG data shows frequent, high-amplitude slow waves in frontal channels, particularly during subject responses. What is this and how can I resolve it?

A: This is a classic signature of ocular artifact, caused by eye blinks or movements. The eyeball acts as an electric dipole (positive cornea, negative retina), and its movement generates a large electrical field that dominates frontal electrodes [5] [1].

- Step 1: Confirmation. Verify the artifact by checking its distribution. A true ocular artifact should be most prominent in the frontal and frontopolar electrodes (e.g., Fp1, Fp2, F7, F8) and show a rapid, high-amplitude waveform without a posterior field [5].

- Step 2: Experimental Mitigation.

  - Instruct the subject to minimize eye blinks during critical task periods and to fixate on a point when possible.

  - Use a structured rest period between trials to allow for natural blinking.

  - If available, record a dedicated EOG reference channel using electrodes placed above and below the eye (vertical EOG) and at the outer canthi (horizontal EOG) [12] [13].

- Step 3: Post-Processing Removal.

  - Traditional Method: Apply Independent Component Analysis (ICA) to decompose the signal and manually remove components that topographically and temporally match the EOG pattern [12] [1].

  - Advanced Deep Learning Method: For an automated, high-fidelity approach, use a model like CLEnet, which integrates CNNs and LSTM networks with an attention mechanism to separate EOG artifacts from neural signals without manual intervention [8].

Muscle Artifact (EMG) Troubleshooting Guide

Q: I am observing high-frequency, chaotic noise across several channels, especially in temporal regions, which obscures my beta and gamma band analysis. How can I clean this signal?

A: You are likely dealing with electromyogenic (EMG) artifact from jaw, face, neck, or scalp muscle contractions. EMG produces high-frequency, broadband noise that significantly overlaps with and can mask cognitively relevant beta (13-30 Hz) and gamma (>30 Hz) rhythms [12] [2].

- Step 1: Confirmation. Identify the artifact by its characteristic "spiky," non-rhythmic appearance in the time domain and its broad spectral power in high frequencies. It is often worsened by subject anxiety, task difficulty, or poor head/neck support [2] [1].

- Step 2: Experimental Mitigation.

  - Ensure the subject is comfortable and relaxed. Use a neck support if the subject is seated.

  - Instruct the subject to relax their jaw, keep their mouth slightly open, and avoid clenching, swallowing, or talking during recordings.

  - Check that the EEG cap is not overly tight, causing discomfort and tension.

- Step 3: Post-Processing Removal.

  - Traditional Method: A band-pass filter (e.g., 1-50 Hz) can partially remove the highest-frequency EMG components, but at the cost of losing genuine neural gamma activity. ICA can also be used to remove muscular components [12].

  - Advanced Deep Learning Method: For superior results, employ a model specifically designed for EMG removal, such as NovelCNN [8] or the LSTEEG autoencoder, which captures non-linear, temporal dependencies in the data to isolate and remove muscle noise [14].
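Before choosing between filtering, ICA, or a deep model, it can help to confirm and localize EMG. One way is to measure what share of each epoch's power falls in a high-frequency band. This NumPy sketch assumes a 30 to 100 Hz band and an arbitrary 0.3 cut-off; neither is a validated criterion. The epochs are simulated, with a broadband noise burst standing in for muscle activity.

```python
import numpy as np


def high_freq_power_ratio(epochs, fs, band=(30.0, 100.0)):
    """Share of (non-DC) power inside `band` for every epoch and channel.

    epochs: (n_epochs, n_channels, n_samples). High values point to EMG-dominated data.
    """
    x = epochs - epochs.mean(axis=-1, keepdims=True)
    freqs = np.fft.rfftfreq(x.shape[-1], 1 / fs)
    power = np.abs(np.fft.rfft(x, axis=-1)) ** 2
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return power[..., in_band].sum(axis=-1) / power[..., 1:].sum(axis=-1)


fs = 250.0
t = np.arange(int(2 * fs)) / fs                      # 2-s epochs
rng = np.random.default_rng(0)
epochs = np.tile(np.sin(2 * np.pi * 10 * t), (20, 2, 1)) + 0.1 * rng.standard_normal((20, 2, t.size))
epochs[4, 1] += 2.0 * rng.standard_normal(t.size)   # broadband "EMG" burst: epoch 4, channel 1
ratio = high_freq_power_ratio(epochs, fs)
print(np.argwhere(ratio > 0.3))                      # 0.3 is an arbitrary illustrative cut-off
```

Flagged epoch/channel pairs show where EMG dominates. This can help decide between rejecting short bursts and applying a correction method to persistent contamination.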

Cardiac Artifact (ECG/BCG) Troubleshooting Guide

Q: I see a rhythmic, sharp waveform that appears synchronously across my EEG channels, particularly on the left side. What is the cause and how do I remove it?

A: This describes a cardiac artifact. It can manifest as two types:

- ECG Artifact: The electrical signal from the heart muscle is directly picked up by the EEG electrodes [1].

- BCG Artifact: In simultaneous EEG-fMRI recordings, the ballistocardiogram (BCG) artifact is caused by scalp electrode movement due to pulsatile blood flow in the magnetic field [15].

- Step 1: Confirmation. Correlate the sharp transients in the EEG with a simultaneously recorded ECG channel. The artifact will be time-locked to the QRS complex of the heartbeat [5] [1]. BCG artifact is also pulse-synchronous but may have a more complex topography.

- Step 2: Experimental Mitigation.

  - For ECG, ensure the subject is not leaning their head to the left side, which brings electrodes closer to the heart.

  - For BCG in EEG-fMRI, use specialized, low-motion electrode setups and carbon-fiber wires to minimize the artifact at the source [15].

- Step 3: Post-Processing Removal.

  - For ECG Artifact: Template-based subtraction methods (like AAS) or ICA are effective. The artifact's regularity makes it a good candidate for these approaches [1].

  - For BCG Artifact: Several dedicated methods exist.

    - Average Artifact Subtraction (AAS): Creates an average artifact template from pulse-synchronous epochs and subtracts it. Offers high signal fidelity [15].

    - Optimal Basis Set (OBS): Uses PCA to adaptively model and remove the complex BCG artifact shape, preserving signal structure well [15].

    - Independent Component Analysis (ICA): Decomposes the signal and allows for the removal of BCG-related components [15].

    - Hybrid Methods (e.g., OBS + ICA): Combine the strengths of different methods, often yielding the best results for connectivity analysis [15].
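The template-subtraction idea behind AAS can be sketched in a few lines of NumPy. The sketch assumes R-peak indices have already been detected from the ECG channel. It uses a single global average over all beats, whereas practical implementations often use a sliding average of neighboring beats to track changes over time. The window must be shorter than the R-R interval. The heartbeat rate and artifact shape are hypothetical.

```python
import numpy as np


def average_artifact_subtraction(eeg, r_peaks, pre, post):
    """Subtract one pulse-locked average template from every channel.

    eeg: (n_channels, n_samples); r_peaks: R-peak sample indices from the ECG channel.
    pre/post: samples kept before/after each peak; pre + post should be shorter
    than the shortest R-R interval so that windows do not overlap.
    """
    peaks = [p for p in r_peaks if p - pre >= 0 and p + post <= eeg.shape[1]]
    segments = np.stack([eeg[:, p - pre:p + post] for p in peaks])   # (beats, channels, window)
    template = segments.mean(axis=0)     # brain activity averages out, the artifact does not
    cleaned = eeg.copy()
    for p in peaks:
        cleaned[:, p - pre:p + post] -= template
    return cleaned, template


fs = 250
n = 60 * fs
rng = np.random.default_rng(0)
neural = rng.standard_normal((2, n))
r_peaks = np.arange(100, n - 200, fs)                 # hypothetical steady 60 bpm heartbeat
lag = np.arange(-50, 150)
shape = 5 * np.exp(-(lag / 5) ** 2) - 2 * np.exp(-((lag - 60) / 20) ** 2)   # stylized artifact
artifact = np.zeros(n)
for p in r_peaks:
    artifact[p - 50:p + 150] += shape
eeg = neural + np.array([[1.0], [0.5]]) * artifact    # channel-specific artifact amplitude
cleaned, template = average_artifact_subtraction(eeg, r_peaks, pre=50, post=150)
```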

Perspiration Artifact Troubleshooting Guide

Q: My EEG baseline is exhibiting very slow, drifting waveforms that make it difficult to analyze low-frequency components. What is the source of this drift?

A: This is typically caused by perspiration (sweat) artifact. The sodium chloride in sweat alters the electrode-skin impedance, creating slow, fluctuating electrical potentials that manifest as baseline drift, often affecting multiple channels [2] [1].

- Step 1: Confirmation. The artifact appears as very low-frequency activity (typically <0.5 Hz) and can be bilateral or generalized. It is often associated with a warm environment, physical exertion, or subject stress [2].

- Step 2: Experimental Mitigation.

  - Control the laboratory environment to maintain a cool, comfortable temperature with stable humidity.

  - Allow the subject to acclimate to the environment before starting the recording.

  - Ensure the subject is psychologically relaxed to minimize stress-induced sweating.

- Step 3: Post-Processing Removal.

  - Traditional Method: Apply a high-pass filter with a very low cutoff frequency (e.g., 0.5 Hz or 1 Hz). This is often the most straightforward and effective solution [2].

  - Alternative Method: Regression-based approaches or ICA can also be used to identify and remove the slow, persistent components associated with sweat [13].
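A minimal SciPy sketch of the high-pass option follows. It assumes a 250 Hz sampling rate and a 4th-order Butterworth filter at 0.5 Hz, applied zero-phase with `sosfiltfilt`. The drift is a synthetic stand-in for sweat artifact. A higher cutoff removes more drift but also more genuine low-frequency (delta) activity.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

fs = 250.0
t = np.arange(int(60 * fs)) / fs
eeg = np.sin(2 * np.pi * 10 * t)                         # activity of interest
drift = 3.0 * np.sin(2 * np.pi * 0.05 * t) + 0.02 * t    # slow sweat-like baseline wander
raw = eeg + drift

# 4th-order Butterworth high-pass at 0.5 Hz, in second-order sections for numerical robustness.
sos = butter(4, 0.5, btype="highpass", fs=fs, output="sos")
cleaned = sosfiltfilt(sos, raw)                           # zero-phase forward-backward filtering

core = slice(int(5 * fs), -int(5 * fs))                   # ignore filter edge effects
print(np.max(np.abs(cleaned[core] - eeg[core])))
```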

The following tables summarize the performance of various artifact removal techniques as reported in recent literature, providing a basis for selecting an appropriate method.

Table 1: Performance Comparison of Deep Learning Models for Artifact Removal

Data sourced from recent studies on semi-synthetic and real EEG datasets. SNR: Signal-to-Noise Ratio (higher is better); CC: Correlation Coefficient (higher is better); RRMSEt: Relative Root Mean Square Error in the temporal domain (lower is better). [8]

| Model / Architecture | Target Artifact | Key Metric Results | Advantages & Applicability |
|---|---|---|---|
| CLEnet (CNN + LSTM + EMA-1D) | Mixed (EOG+EMG) | SNR: 11.50 dB, CC: 0.925, RRMSEt: 0.300 [8] | Superior for multi-artifact and multi-channel removal; integrates temporal and morphological features. |
| AnEEG (LSTM-based GAN) | General Artifacts | Improved NMSE, RMSE, CC, SNR, and SAR vs. wavelet methods [16] | Adversarial training effective for generating clean EEG from contaminated signals. |
| LSTEEG (LSTM Autoencoder) | General Artifacts | Superior artifact detection and correction vs. convolutional autoencoders [14] | Captures long-range, non-linear dependencies in sequential EEG data. |
| NovelCNN (CNN) | EMG | Specialized for EMG artifact removal [8] | Network structure tailored for specific EMG patterns. |
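The three metrics in this table can be computed as shown below, using commonly used definitions. RRMSEt is the RMS error over the RMS of the clean signal in time, CC is the Pearson correlation, and SNR is the ratio of clean-signal power to residual-error power in dB. The cited studies may differ in details; some also report a spectral RRMSE. Values are only comparable across papers when the definitions match.

```python
import numpy as np


def rrmse_t(denoised, clean):
    """Temporal relative RMSE: RMS(denoised - clean) / RMS(clean). Lower is better."""
    return np.sqrt(np.mean((denoised - clean) ** 2)) / np.sqrt(np.mean(clean ** 2))


def cc(denoised, clean):
    """Pearson correlation coefficient between output and ground truth. Higher is better."""
    return np.corrcoef(denoised, clean)[0, 1]


def snr_db(denoised, clean):
    """Output SNR in dB: clean-signal power over residual-error power. Higher is better."""
    return 10 * np.log10(np.sum(clean ** 2) / np.sum((denoised - clean) ** 2))


# Worked check: an output equal to the clean signal plus a 10% copy of itself
clean = np.array([1.0, -1.0, 1.0, -1.0])
denoised = 1.1 * clean
print(rrmse_t(denoised, clean), cc(denoised, clean), snr_db(denoised, clean))
```

In the worked check, the error is 10% of the signal. RRMSEt is therefore 0.1 and the SNR is 10·log10(1/0.01) = 20 dB. CC stays at 1 because a pure rescaling does not change the correlation, which is one reason studies report several metrics together.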

Table 2: Performance of BCG Artifact Removal Methods in EEG-fMRI

Evaluation of methods on simultaneous EEG-fMRI data, measuring signal fidelity and structural similarity. MSE: Mean Squared Error (lower is better); PSNR: Peak Signal-to-Noise Ratio (higher is better); SSIM: Structural Similarity Index (higher is better). [15]

| Removal Method | Key Metric Results | Strengths and Topological Impact |
|---|---|---|
| AAS (Average Artifact Subtraction) | MSE: 0.0038, PSNR: 26.34 dB [15] | Best raw signal fidelity; simple template-based approach. |
| OBS (Optimal Basis Set) | SSIM: 0.72 [15] | Best preserves structural similarity of the original signal. |
| ICA (Independent Component Analysis) | Shows sensitivity in dynamic graph metrics [15] | Effective for identifying complex artifact components; requires expertise for component selection. |
| OBS + ICA (Hybrid) | Produced lowest p-values in frequency band pair connectivity [15] | Combined approach beneficial for functional connectivity and network analysis. |
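To illustrate the template idea behind AAS, the sketch below averages R-peak-locked epochs into one template per channel and subtracts it at every beat. It is a simplified sketch under stated assumptions: practical AAS implementations often use a moving average over neighbouring beats rather than a single global template, the window lengths and synthetic data are hypothetical, and windows are assumed not to overlap (pre + post shorter than the shortest R-R interval).

```python
import numpy as np


def average_artifact_subtraction(eeg, r_peaks, pre, post):
    """Subtract an R-peak-locked average BCG template from every channel.

    eeg     : array (n_channels, n_samples)
    r_peaks : sample indices of ECG R-peaks
    pre/post: samples kept before/after each R-peak in the template window
    Assumes windows do not overlap (pre + post < shortest R-R interval).
    """
    cleaned = np.asarray(eeg, dtype=float).copy()
    n_samples = cleaned.shape[1]
    # Keep only beats whose full window lies inside the recording.
    peaks = [p for p in r_peaks if p - pre >= 0 and p + post <= n_samples]
    if not peaks:
        return cleaned
    # Epochs: (n_beats, n_channels, pre + post); template: (n_channels, pre + post)
    epochs = np.stack([cleaned[:, p - pre:p + post] for p in peaks])
    template = epochs.mean(axis=0)
    for p in peaks:
        cleaned[:, p - pre:p + post] -= template
    return cleaned


# Usage on synthetic data: random "neural" noise plus an identical pulse every 250 samples.
rng = np.random.default_rng(0)
neural = rng.normal(0.0, 1.0, size=(2, 5000))
peaks = np.arange(100, 4800, 250)
pulse = 20.0 * np.hanning(200)
eeg = neural.copy()
for p in peaks:
    eeg[:, p - 50:p + 150] += np.outer([1.0, 0.5], pulse)
cleaned = average_artifact_subtraction(eeg, peaks, pre=50, post=150)
```

Because the neural signal is not time-locked to the heartbeat, it largely averages out of the template, while the repeating BCG shape is captured and removed.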

Detailed Experimental Protocols

Protocol: Benchmarking Deep Learning Models for Ocular and Muscle Artifact Removal

This protocol outlines the procedure for training and evaluating deep learning models, such as CLEnet, on a semi-synthetic dataset, as described in recent literature [8].

- Dataset Preparation:

- Source: Use a benchmark dataset like EEGdenoiseNet [8], which provides clean EEG segments and clean EOG and EMG artifact segments.

- Synthetic Contamination: Artificially contaminate the clean EEG signals by linearly mixing them with the clean artifact signals at varying signal-to-noise ratios (SNRs) to generate paired data (clean EEG + contaminated EEG). A typical mixture model is \( \text{EEG}_{\text{contaminated}} = \text{EEG}_{\text{clean}} + \lambda \cdot \text{Artifact} \), where \( \lambda \) is a scaling factor that controls the contamination level [8].

- Data Preprocessing:

- Band-pass filter all data (e.g., 0.5-50 Hz) to remove drift and high-frequency noise.

- Segment the continuous data into epochs of fixed length (e.g., 2-second segments).

- Normalize the amplitude of each channel or epoch to a standard range (e.g., z-score normalization).

- Model Training:

- Architecture: Implement the CLEnet model, which uses a dual-branch network with dual-scale CNNs for morphological feature extraction and LSTM networks for temporal feature extraction, integrated with an improved EMA-1D attention mechanism [8].

- Training Regime: Split the data into training, validation, and test sets (e.g., 70%/15%/15%). Train the model in a supervised manner using the contaminated EEG as input and the clean EEG as the target output.

- Loss Function: Use Mean Squared Error (MSE) as the loss function to minimize the difference between the model's output and the ground-truth clean EEG [8].

- Model Evaluation:

- Quantitative Metrics: Calculate the following metrics on the held-out test set by comparing the model's output to the ground-truth clean EEG:

- Signal-to-Noise Ratio (SNR) in dB

- Correlation Coefficient (CC)

- Relative Root Mean Square Error in temporal (RRMSEt) and frequency (RRMSEf) domains [8].

- Comparison: Benchmark the performance against other models like 1D-ResCNN, NovelCNN, and DuoCL [8].

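The evaluation metrics named above can be computed along these lines for one channel of ground-truth and denoised EEG. The SNR convention (clean-signal power over residual power, in dB) and the use of a plain periodogram instead of a Welch PSD for RRMSEf are assumptions; published benchmarks may define these slightly differently, so check the definitions in [8] before comparing numbers across studies.

```python
import numpy as np


def rms(x):
    return np.sqrt(np.mean(np.square(x)))


def snr_db(clean, denoised):
    """Output SNR in dB: clean-signal power over residual-error power (assumed convention)."""
    return 10.0 * np.log10(np.sum(np.square(clean)) / np.sum(np.square(clean - denoised)))


def correlation_coefficient(clean, denoised):
    """Pearson correlation between ground truth and model output."""
    return float(np.corrcoef(clean, denoised)[0, 1])


def rrmse_temporal(clean, denoised):
    """RMS of the time-domain error relative to the RMS of the clean signal."""
    return rms(denoised - clean) / rms(clean)


def power_spectrum(x):
    """Plain periodogram (stand-in for the Welch PSD used in many benchmarks)."""
    return np.abs(np.fft.rfft(x)) ** 2 / len(x)


def rrmse_spectral(clean, denoised):
    """RMS of the power-spectrum error relative to the RMS of the clean spectrum."""
    p_clean, p_out = power_spectrum(clean), power_spectrum(denoised)
    return rms(p_out - p_clean) / rms(p_clean)


# Usage: a "denoiser" that halves the amplitude of a 10 Hz test signal.
t = np.arange(500) / 250.0
clean = np.sin(2 * np.pi * 10 * t)
half = 0.5 * clean
print(snr_db(clean, half), correlation_coefficient(clean, half), rrmse_temporal(clean, half))
# roughly 6.02, 1.0, 0.5
```

The example shows why several metrics are reported together: a pure amplitude error leaves CC at 1.0 while SNR and RRMSE still penalize it.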

Protocol: Comparative Evaluation of BCG Removal Methods for EEG-fMRI Connectivity Analysis

This protocol describes a holistic framework for evaluating how different BCG removal methods affect subsequent EEG-based functional connectivity measures [15].

- Data Acquisition:

- Acquire simultaneous EEG-fMRI data from participants using an MRI-compatible EEG system and a standard fMRI sequence.

- Record a synchronised ECG channel to identify the cardiac R-peaks for BCG artifact correction.

- BCG Artifact Removal:

- Apply multiple removal methods to the same raw EEG data for comparison. Key methods include:

- AAS: Average artifact subtraction using the ECG R-peak as a trigger [15].

- OBS: Optimal Basis Set method, which uses PCA on the artifact template to create adaptive basis functions for subtraction [15].

- ICA: Independent Component Analysis (e.g., using FastICA or Infomax algorithms) with manual or automated identification of BCG-related components [15].

- Hybrid Methods: Apply methods sequentially, such as OBS followed by ICA (OBS+ICA) [15].


- Signal Quality Assessment:

- Calculate signal-level metrics on the cleaned data, such as Mean Squared Error (MSE), Peak Signal-to-Noise Ratio (PSNR), and Structural Similarity Index (SSIM), to evaluate the fidelity of the cleaning process [15].

- Functional Connectivity and Graph Analysis:

- Network Construction: For each frequency band (delta, theta, alpha, beta, gamma), calculate the functional connectivity between all EEG channel pairs (nodes) using a metric like the weighted Phase Lag Index (wPLI). Threshold the connectivity matrix to create an adjacency matrix for a graph.

- Graph Theory Metrics: Compute key graph metrics for each network, such as:

- Connection Strength (CS): The sum of all connection weights in the network.

- Clustering Coefficient (CC): The degree to which nodes tend to cluster together.

- Global Efficiency (GE): The average of the inverse shortest path lengths between all pairs of nodes, indicating integration efficiency [15].

- Analysis: Statistically compare these graph metrics across the different BCG removal methods to determine how the preprocessing choice influences the interpreted network topology.
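A compact sketch of the network-construction and graph-metric steps follows. It assumes the input has already been band-pass filtered into one frequency band, estimates wPLI across time samples of the analytic signal (wPLI is more commonly estimated from cross-spectra across trials or segments), binarizes the graph at a hypothetical fixed threshold, and computes the binary clustering coefficient and global efficiency; connection strength is the sum of the surviving weights. Function names and the threshold are illustrative, not taken from [15].

```python
import numpy as np


def analytic_signal(x):
    """FFT-based analytic signal along the last axis (same construction as a Hilbert transform)."""
    n = x.shape[-1]
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x, axis=-1) * h, axis=-1)


def wpli_matrix(band_data):
    """Weighted Phase Lag Index between all channel pairs of band-limited data (n_channels, n_samples)."""
    z = analytic_signal(band_data)
    n_ch = z.shape[0]
    w = np.zeros((n_ch, n_ch))
    for i in range(n_ch):
        for j in range(i + 1, n_ch):
            im = np.imag(z[i] * np.conj(z[j]))  # imaginary part of the cross-spectrum
            denom = np.mean(np.abs(im))
            w[i, j] = w[j, i] = abs(np.mean(im)) / denom if denom > 0 else 0.0
    return w


def graph_metrics(w, threshold):
    """Connection strength, mean binary clustering coefficient, and global efficiency."""
    n = w.shape[0]
    weights = np.where(w >= threshold, w, 0.0)
    np.fill_diagonal(weights, 0.0)
    adj = (weights > 0).astype(float)

    strength = weights[np.triu_indices(n, k=1)].sum()  # each undirected edge counted once

    deg = adj.sum(axis=1)
    closed_walks = np.diag(adj @ adj @ adj)  # equals 2 x triangles through each node
    with np.errstate(divide="ignore", invalid="ignore"):
        local_cc = np.where(deg > 1, closed_walks / (deg * (deg - 1)), 0.0)

    # All-pairs shortest path lengths (in edges) via Floyd-Warshall.
    dist = np.where(adj > 0, 1.0, np.inf)
    np.fill_diagonal(dist, 0.0)
    for k in range(n):
        dist = np.minimum(dist, dist[:, [k]] + dist[[k], :])
    reachable = ~np.eye(n, dtype=bool) & np.isfinite(dist)
    inv = np.zeros_like(dist)
    inv[reachable] = 1.0 / dist[reachable]  # unreachable pairs contribute 0
    efficiency = inv.sum() / (n * (n - 1))

    return {"strength": float(strength), "clustering": float(local_cc.mean()),
            "global_efficiency": float(efficiency)}


# Usage: three 10 Hz channels with consistent, non-zero phase lags.
fs = 250
t = np.arange(1000) / fs
x = np.vstack([np.sin(2 * np.pi * 10 * t),
               np.sin(2 * np.pi * 10 * t - np.pi / 4),
               np.sin(2 * np.pi * 10 * t - np.pi / 2)])
w = wpli_matrix(x)
print(graph_metrics(w, threshold=0.5))
```

Running the same functions on data cleaned by each BCG removal method gives one set of metrics per method and band, which is the input to the statistical comparison in the Analysis step.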

Experimental Workflow and Signaling Pathways

Deep Learning-Based Artifact Removal Workflow

The following diagram illustrates the standard workflow for implementing a deep learning model like CLEnet for EEG artifact removal.

BCG Artifact Removal & Network Analysis Pathway

This diagram outlines the logical pathway for evaluating BCG artifact removal methods and their impact on functional network integrity.

The Scientist's Toolkit: Key Research Reagents & Materials

A curated list of key datasets, algorithms, and evaluation metrics essential for conducting research in EEG artifact removal optimization.

| Category | Item / Resource | Description & Function in Research |
|---|---|---|
| Benchmark Datasets | EEGdenoiseNet [8] | A semi-synthetic dataset providing clean EEG, EOG, and EMG segments. Enables controlled, reproducible training and benchmarking of artifact removal algorithms. |
| | BCI Competition IV 2b [16] | A real-world EEG dataset containing ocular artifacts, useful for validating algorithm performance under realistic conditions. |
| Key Algorithms & Models | CLEnet [8] | An advanced deep learning model combining CNN and LSTM with an attention mechanism. Used as a state-of-the-art solution for removing multiple artifact types from multi-channel EEG. |
| | AAS, OBS, ICA [15] | Foundational algorithms for BCG artifact removal in EEG-fMRI studies. Serve as benchmarks and components in hybrid preprocessing pipelines. |
| Software & Platforms | EEG-LLAMAS [15] | An open-source, low-latency software platform for real-time BCG artifact removal, crucial for closed-loop EEG-fMRI experiments. |
| Evaluation Metrics | SNR, CC, (RR)MSE [8] | Core quantitative metrics for assessing the signal-level fidelity of an artifact removal method by comparing its output to a ground-truth clean signal. |
| | Graph Theory Metrics (CS, CC, GE) [15] | Metrics to evaluate the topological impact of artifact removal on functional brain networks, ensuring network integrity is preserved. |

Troubleshooting Guides

Electrode Pop Artifacts

Q: What is an electrode pop artifact and how can I identify it in my EEG recording?

An electrode pop artifact appears as a sudden, sharp, high-amplitude deflection in one or more EEG channels. Unlike physiological signals, it does not conform to the typical morphology of cerebral activity like vertex waves or K-complexes [17]. This artifact is often confined to channels linked to a single reference electrode [17].

Primary Causes and Corrective Actions:

- Cause: Poor Electrode Contact or Drying. Inadequate application of the electrode or drying of the electrolyte gel can cause intermittent changes in impedance [17] [10].

- Solution: Re-apply the problematic electrode. Ensure good skin preparation and use an adequate amount of conductive paste to maintain stable contact [17].

- Cause: Pressure or Pull on the Electrode. Physical stress on the electrode cable can displace it [17].

- Solution: Secure all cables properly to a headband or cap to relieve strain on the individual electrodes.

- Cause: Dirty or Corroded Electrodes. Contaminants on the electrode surface disrupt the stable electrical interface [17].

- Solution: Clean and re-prepare electrodes according to the manufacturer's guidelines before use.

Experimental Protocol for Identification and Resolution:

- Identify: Visually inspect the EEG recording for abrupt, very sharp transients that are localized to a specific channel or set of channels sharing a common reference [17].

- Confirm Source: Re-reference the affected channels to an alternative reference (e.g., from M1 to M2). If the artifact disappears, the original reference electrode is the source [17].

- Check Impedance: Measure the electrode impedance. A high or fluctuating value confirms a poor connection [10].

- Intervene: Re-prepare the skin and re-apply the identified electrode. Re-check the impedance to ensure it is within an acceptable range (typically below 10 kΩ for many modern systems) [10].
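The visual identification step can be complemented by a simple automated screen. The sketch below flags samples whose sample-to-sample slope is an outlier relative to each channel's robust (median-absolute-deviation) slope distribution, matching the "very steep upslope" signature of a pop. The threshold `k` and the function name are hypothetical and would need tuning against visually confirmed pops; a flag is only a candidate, and confirmation still relies on the re-referencing and impedance checks above.

```python
import numpy as np


def flag_steep_transients(eeg, fs, k=8.0):
    """Flag candidate electrode pops as samples with outlying slopes.

    eeg: (n_channels, n_samples) in microvolts; fs: sampling rate in Hz.
    k: hypothetical threshold in robust standard deviations of the slope.
    Returns {channel_index: sample indices where a steep step lands}.
    """
    slope = np.diff(eeg, axis=1) * fs  # microvolts per second
    med = np.median(slope, axis=1, keepdims=True)
    robust_sd = 1.4826 * np.median(np.abs(slope - med), axis=1, keepdims=True) + 1e-12
    z = np.abs(slope - med) / robust_sd
    flags = {}
    for ch in range(eeg.shape[0]):
        hits = np.flatnonzero(z[ch] > k) + 1  # diff index i is the step into sample i + 1
        if hits.size:
            flags[ch] = hits
    return flags


# Usage: three noisy channels; channel 1 gets a pop-like jump with a slow decay.
rng = np.random.default_rng(0)
fs = 250
eeg = rng.normal(0.0, 5.0, size=(3, 2500))
eeg[1, 1000:] += 200.0 * np.exp(-np.arange(1500) / 50.0)
print(flag_steep_transients(eeg, fs))
```

A flag that appears on a single channel, with no corresponding deflection on its neighbours, is consistent with an electrode origin rather than a cerebral field.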

Cable Movement Artifacts

Q: Why does my EEG signal become noisy when the participant moves, and how can I reduce this?

Cable movement artifacts are caused by two main phenomena: the motion of the cable conductor within the Earth's magnetic field, and triboelectric noise generated by friction between the cable's internal components [10] [18]. These present as large, slow drifts or sudden, high-amplitude spikes in the signal that are temporally correlated with movement [10].

Primary Causes and Corrective Actions:

- Cause: Cable Sway in Non-Wireless Systems. This is a major contributor to motion artifacts [18].

- Solution: Use lightweight, low-noise cables designed for bio-potential measurements. Secure the main cable bundle to the participant's clothing to minimize swing and movement [10] [18].

- Cause: Triboelectric Effect. Friction within the cable generates electrical noise [18].

- Solution: Employ cables with special low-noise components that reduce internal friction [10].

- Cause: Use of Passive Electrodes. The weak EEG signal is amplified only after it has traveled through the cable, where it is exposed to noise [18].

- Solution: Use active electrode systems where the signal is pre-amplified at the electrode site on the head, making it more resilient to cable-induced noise [18].

Experimental Protocol for Mitigation:

- Equipment Selection: Opt for a wireless EEG system or one with active electrodes and low-noise, actively shielded cables [10] [18].

- Participant Preparation: Properly dress the cables by gathering and securing them to the participant's back or shirt to restrict large-scale movements.

- Validation: In a pilot test, have the participant perform the intended movements (e.g., walking, tapping fingers) while monitoring the signal. This helps identify the optimal cable management strategy before formal data collection.

Power Line Interference (PLI)

Q: Despite shielding, a persistent 50/60 Hz noise remains in my data. What are the advanced methods to remove it?

Power line interference (50 or 60 Hz) is a pervasive problem, especially in unshielded environments [19]. While notch filters are commonly used, they can introduce severe signal distortions, such as ringing artifacts due to the Gibbs effect, and are generally discouraged in ERP research [19] [20]. Several advanced techniques offer better alternatives.

Comparison of Advanced PLI Removal Techniques:

| Method | Principle | Advantages | Limitations |
|---|---|---|---|
| Spectrum Interpolation [19] | Removes the line noise component in the frequency domain via DFT, interpolates the gap, and transforms back via inverse DFT. | Introduces less time-domain distortion than a notch filter; performs well with non-stationary noise. | Requires careful implementation to avoid artifacts at the segment edges. |
| Discrete Fourier Transform (DFT) Filter [19] | Fits and subtracts a sine/cosine wave at the interference frequency from the data. | Avoids corrupting frequencies away from the power line frequency. | Assumes constant noise amplitude; fails with highly fluctuating noise. |
| CleanLine [19] | Uses a regression model with Slepian multitapers to estimate and remove the line noise component. | Removes only deterministic line components, preserving background spectral energy. | May fail with large, non-stationary spectral artifacts. |
| Sparse Representation (SR) [20] | Uses an over-complete dictionary of harmonic atoms to sparsely represent and subtract the PLI. | Reduces distortion caused by spectral overlap between PLI and EEG signals. | Requires pre-checking of harmonic sparsity; can be computationally complex. |
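The DFT-filter principle in the table (fit and subtract a sinusoid at the interference frequency) can be sketched as an ordinary least-squares fit. This is an illustrative implementation, not the code of any particular toolbox; it assumes the line-noise amplitude and phase are constant over the segment, which is exactly the limitation listed above, and it fits a constant term alongside the sinusoids so that a DC offset is not absorbed into them.

```python
import numpy as np


def dft_filter(x, fs, line_freq=50.0, n_harmonics=1):
    """Remove line noise from a 1-D segment by least-squares sinusoid fitting.

    Fits a constant plus sine/cosine pairs at line_freq and its harmonics, then
    subtracts only the sinusoidal part. Assumes constant noise amplitude and
    phase over the segment.
    """
    t = np.arange(len(x)) / fs
    columns = [np.ones_like(t)]
    for h in range(1, n_harmonics + 1):
        omega_t = 2.0 * np.pi * h * line_freq * t
        columns += [np.sin(omega_t), np.cos(omega_t)]
    design = np.column_stack(columns)
    coef, *_ = np.linalg.lstsq(design, x, rcond=None)
    # Subtract the fitted sinusoids but keep the constant (DC) term in the data.
    return x - design[:, 1:] @ coef[1:]


# Usage: a 10 Hz "brain" signal with 50 Hz interference and a DC offset.
fs = 1000
t = np.arange(2 * fs) / fs
brain = np.sin(2 * np.pi * 10 * t)
noisy = brain + 3.0 * np.sin(2 * np.pi * 50 * t + 0.4) + 2.0
cleaned = dft_filter(noisy, fs, line_freq=50.0)
```

Segments that span a whole number of line-noise cycles make the fitted sinusoids orthogonal to other integer-cycle components, which is why the 10 Hz signal passes through untouched here.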

Experimental Protocol for Applying Spectrum Interpolation:

- Segment Data: Divide the continuous EEG data into manageable epochs.

- Transform to Frequency Domain: Apply the Discrete Fourier Transform (DFT) to each epoch.

- Remove Line Noise: In the amplitude spectrum, identify and remove the component at 50/60 Hz (and harmonics if necessary) by interpolating its value from the neighboring frequencies.

- Transform Back: Apply the inverse DFT to reconstruct the time-domain signal without the line noise component [19].
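The four steps above can be sketched for a single epoch as follows. Following the general idea of spectrum interpolation, only the amplitude spectrum is interpolated while the original phase is kept. Replacing the band with the mean amplitude of the adjacent bands, and the ±1 Hz widths, are assumptions chosen for illustration; harmonics can be handled by calling the function again with `line_freq` set to each harmonic.

```python
import numpy as np


def spectrum_interpolation(x, fs, line_freq=50.0, half_width=1.0, neighbor_width=1.0):
    """Suppress line noise in one epoch by interpolating its amplitude spectrum.

    Bins within +/- half_width Hz of line_freq receive the mean amplitude of the
    neighbouring bins (the next neighbor_width Hz on each side); phases are kept.
    """
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    amplitude, phase = np.abs(spectrum), np.angle(spectrum)
    distance = np.abs(freqs - line_freq)
    target = distance <= half_width
    neighbors = (distance > half_width) & (distance <= half_width + neighbor_width)
    if target.any() and neighbors.any():
        amplitude[target] = amplitude[neighbors].mean()
    return np.fft.irfft(amplitude * np.exp(1j * phase), n=len(x))


# Usage: 4 s epoch with a 10 Hz rhythm, background noise, and strong 50 Hz interference.
fs = 500
t = np.arange(4 * fs) / fs
rng = np.random.default_rng(1)
brain = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.normal(size=t.size)
noisy = brain + 5.0 * np.sin(2 * np.pi * 50 * t)
cleaned = spectrum_interpolation(noisy, fs)
```

Only the bins near 50 Hz are modified, so the rest of the spectrum, including the 10 Hz peak, is reconstructed unchanged.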

Frequently Asked Questions (FAQs)

Q: What is the simplest first step to troubleshoot various EEG artifacts? A: Check and optimize electrode impedances. High or unstable impedance is a common source for multiple artifacts, including electrode pop and increased susceptibility to power line noise [10] [21]. Ensuring all electrodes have a stable, low-impedance connection is the most fundamental and effective first step in quality control.

Q: My research involves mobile EEG. Are there specific hardware considerations for minimizing artifacts? A: Yes. For mobile EEG, the choice of hardware is critical. Key recommendations include:

- Use Dry or Semi-Dry Electrodes: These enable rapid setup and improve comfort but may require systems designed to handle their typically higher impedance [22].

- Prefer Wireless Systems: They eliminate cable sway, a major source of motion artifacts [18].

- Select Active Electrodes: Signal pre-amplification at the source makes the data more robust against environmental noise picked up along the signal path [18].

- Consider Electrode Surface Area: A larger electrode surface area can improve signal quality during motion by reducing impedance [18].

Q: When should I use a notch filter versus a more advanced method for power line noise? A: A notch filter should be a last resort, particularly for event-related potential (ERP) research, due to the risk of signal distortion and ringing artifacts that can create artificial components in your data [19]. Advanced methods like spectrum interpolation or CleanLine are preferred as they are designed to remove the interference with minimal impact on the underlying neural signal [19].

Q: Are there emerging solutions that address multiple artifacts automatically? A: Yes, deep learning (DL) approaches are showing significant promise. For instance, networks like CLEnet, which integrate convolutional neural networks (CNN) and Long Short-Term Memory (LSTM) units, can be trained to remove various types of artifacts (like EMG, EOG, and unknown noise) directly from multi-channel EEG data in an end-to-end manner [8]. These methods can adapt to different artifact types without requiring manual component rejection [8] [22].

The Scientist's Toolkit

Table: Essential Materials and Reagents for EEG Artifact Management

| Item | Function in Research |
|---|---|
| Ag/AgCl Electrodes | The standard for wet EEG systems. Non-polarizable surface allows recording of a wide range of frequencies and offers lower motion artifact susceptibility compared to other metals [18]. |
| Conductive Electrolyte Gel/Paste | Creates an ionic connection between the electrode and the skin. Reduces impedance and stabilizes the electrical interface, crucial for preventing electrode pop [18]. |
| Abrasive Skin Prep Gel | Used to gently abrade the skin surface before electrode application. Significantly lowers skin impedance, improving signal quality and stability [21]. |
| Low-Noise, Shielded Cables | Cables with active shielding and low-noise components minimize triboelectric noise and mains interference, directly reducing cable movement and 50/60 Hz artifacts [10] [18]. |
| Electrode Cap or Headset | Provides stable mechanical mounting for electrodes, ensuring consistent placement and reducing movement-induced artifacts. Modern wearable systems integrate these with dry electrodes [23] [22]. |
| Reference Electrodes | Critical for re-referencing strategies to isolate and confirm the source of an artifact, such as an electrode pop from a specific mastoid reference [17]. |

Experimental Workflow and Signaling Pathways

The following diagram illustrates a systematic workflow for identifying and resolving the technical artifacts discussed in this guide.

EEG Artifact Troubleshooting Workflow

The Critical Impact of Artifacts on EEG Analysis and Interpretation in Research and Clinical Settings

Electroencephalography (EEG) is a crucial tool in neuroscience and clinical diagnostics, but its recordings are highly susceptible to artifacts—signals that do not originate from the brain. These artifacts present a significant challenge because EEG signals are measured in microvolts and are easily contaminated by both physiological processes and external interference [2]. The presence of artifacts can obscure genuine neural activity, mimic pathological patterns, and ultimately lead to misinterpretation of data, adversely impacting both research conclusions and patient care [24] [2]. Recognizing and mitigating these artifacts is therefore a foundational step in ensuring the validity of EEG analysis.

Troubleshooting Guide: Identifying and Resolving Common EEG Artifacts

This guide provides a systematic approach to the most common EEG artifacts, helping you identify their source and implement effective solutions.

Table 1: Physiological Artifacts Troubleshooting Guide

| Artifact Type | Key Identifying Features | Impact on Analysis | Recommended Solutions |
|---|---|---|---|
| Ocular (EOG) | Very high amplitude, slow waves in bifrontal leads (Fp1, Fp2); opposite polarities at F7/F8 for lateral movements [5]. | Obscures frontal slow-wave activity; can mimic cerebral signals [5] [25]. | Use ICA with EOG reference; regression-based methods; instruct subjects to minimize eye movements [25] [26]. |
| Muscle (EMG) | High-frequency, low-amplitude "broadband noise" over frontal/temporal regions [5] [2]. | Masks beta/gamma rhythms; can be mistaken for seizure activity [5] [25]. | ICA is often effective due to statistical independence from EEG [25]; ensure subject relaxation. |
| Cardiac (ECG) | Rhythmic waveform time-locked to the QRS complex, often more prominent on the left side [5]. | Can be misinterpreted as cerebral discharges; pulse artifact may mimic slow activity [5] [25]. | Use ECG reference channel for subtraction; algorithmic removal of time-locked events [25]. |
| Sweat | Very slow drifts (<0.5 Hz), low-amplitude activity [5]. | Contaminates delta/theta bands, critical for sleep analysis [2]. | Control room temperature; use high-pass filtering; ensure stable electrode impedance [5]. |

Table 2: Technical and Environmental Artifacts Troubleshooting Guide

| Artifact Type | Key Identifying Features | Impact on Analysis | Recommended Solutions |
|---|---|---|---|
| Electrode Pop | Sudden, very steep upslope with slower downslope, localized to a single electrode with no field [5]. | Can be mistaken for epileptiform spikes [5]. | Check and repair electrode-skin contact; reapply conductive gel [5] [2]. |
| Power Line (60/50 Hz) | Monotonous, very fast activity at 60 Hz (USA) or 50 Hz (Europe) [5] [2]. | Obscures all underlying neural activity. | Use a notch filter; ensure proper grounding/shielding; move away from electrical devices [5] [25]. |
| Cable Movement | Chaotic, high-amplitude deflections; can be rhythmic if movement is repetitive [2]. | Creates non-physiological, disorganized signals that disrupt the entire recording. | Secure all cables; instruct the subject to remain still; use robust connector systems [2]. |

Figure 1: A general workflow for troubleshooting and resolving EEG artifacts, outlining the primary paths for dealing with physiological and technical contaminants.

Comparative Analysis of EEG Artifact Removal Techniques

Selecting the optimal artifact removal strategy is a critical step in the EEG preprocessing pipeline. The table below summarizes the primary methodologies, their principles, advantages, and limitations.

Table 3: Comparison of Major EEG Artifact Removal Techniques

| Method | Underlying Principle | Key Advantages | Key Limitations |
|---|---|---|---|
| Regression | Estimates and subtracts artifact contribution using reference channels (EOG, ECG) [25]. | Simple, intuitive model; well-established [25]. | Requires reference channels; risks over-correction and removal of neural signals [25]. |
| Blind Source Separation (BSS) | Decomposes multi-channel EEG into statistically independent source components, some of which capture artifacts that can then be removed [25]. | No reference channels needed; effective for ocular and muscle artifacts [25] [8]. | Often requires manual component inspection; performance depends on channel count [25] [8]. |
| Wavelet Transform | Uses multi-resolution analysis to separate signal and artifact in the time-frequency domain [25]. | Good for non-stationary signals and transient artifacts [25]. | Choosing the correct wavelet basis and thresholds can be complex [25]. |
| Deep Learning (DL) | Neural networks learn to map contaminated EEG to clean EEG in an end-to-end manner [8] [16]. | Fully automated; can handle unknown artifacts; adapts to multi-channel data [8]. | Requires large datasets for training; "black box" nature; computational intensity [8] [16]. |

Recent advances have shifted toward hybrid and deep learning models. For instance, CLEnet, which integrates CNNs and LSTMs with an attention mechanism, has shown proficiency in removing mixed and unknown artifacts from multi-channel data [8]. Similarly, AnEEG, an LSTM-based Generative Adversarial Network (GAN), demonstrates the potential of adversarial training for generating high-quality, artifact-free signals [16].

Figure 2: A taxonomy of common EEG artifact removal techniques, categorized into traditional algorithms and modern deep learning-based approaches.

Experimental Protocols for Artifact Removal

Protocol: Independent Component Analysis (ICA) for Ocular and Muscle Artifacts

ICA is a widely used BSS technique to isolate and remove artifact components [25].

- Data Preparation: Format the continuous or epoched EEG data into a matrix (channels × time points).

- Preprocessing: Apply a high-pass filter (e.g., 1 Hz cutoff) to remove slow drifts that can impede ICA performance.

- ICA Decomposition: Use an ICA algorithm (e.g., Infomax or FastICA) to decompose the data into independent components (ICs). Each IC has a fixed spatial topography and a time-varying activation.

- Component Classification: Inspect ICs for artifacts. Ocular ICs typically show high activity in frontal electrodes and time-locking to blinks. Muscle ICs have a broadband frequency profile with a topographical focus over temporalis muscles. This can be done manually or with automated classifiers like ICLabel.

- Artifact Removal: Subtract the artifactual components from the data by projecting the remaining components back to the sensor space.

- Validation: Visually inspect the cleaned data to confirm artifact removal and ensure genuine brain activity is preserved.
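To make the decomposition, classification, and back-projection steps concrete, the sketch below implements a minimal symmetric FastICA in NumPy and removes the component most correlated with a known blink time course. It is a teaching sketch under stated assumptions: production work would use an established implementation (for example in MNE-Python or EEGLAB), the high-pass step is omitted because the synthetic data contain no drift, and selecting components by correlation with a blink reference stands in for manual inspection or ICLabel.

```python
import numpy as np


def _sym_decorrelate(w):
    """Symmetric decorrelation: W <- (W W^T)^(-1/2) W."""
    s, u = np.linalg.eigh(w @ w.T)
    return u @ np.diag(1.0 / np.sqrt(s)) @ u.T @ w


def fastica(x, n_iter=500, tol=1e-6, seed=0):
    """Minimal symmetric FastICA (tanh nonlinearity).

    x: (n_channels, n_samples). Returns (sources, mixing, mean) with
    x == mixing @ sources + mean (up to floating-point error).
    """
    mean = x.mean(axis=1, keepdims=True)
    xc = x - mean
    # Whiten: rotate and scale so the channel covariance becomes the identity.
    d, e = np.linalg.eigh(np.cov(xc))
    whitening = e @ np.diag(1.0 / np.sqrt(d)) @ e.T
    z = whitening @ xc
    w = _sym_decorrelate(np.random.default_rng(seed).normal(size=(z.shape[0],) * 2))
    for _ in range(n_iter):
        g = np.tanh(w @ z)
        # Fixed-point update: E[g(Wz) z^T] - diag(E[g'(Wz)]) W, then re-orthogonalize.
        w_new = _sym_decorrelate((g @ z.T) / z.shape[1] - np.diag((1.0 - g ** 2).mean(axis=1)) @ w)
        converged = np.max(np.abs(np.abs(np.diag(w_new @ w.T)) - 1.0)) < tol
        w = w_new
        if converged:
            break
    unmixing = w @ whitening
    return unmixing @ xc, np.linalg.inv(unmixing), mean


def remove_components(sources, mixing, mean, bad):
    """Zero the artifactual components and back-project the rest to sensor space."""
    kept = sources.copy()
    kept[bad, :] = 0.0
    return mixing @ kept + mean


# Synthetic demo: two "neural" sources plus a blink train, mixed into three channels.
fs = 250
t = np.arange(5000) / fs
blink = np.exp(-((t % 2.0) - 1.0) ** 2 / (2 * 0.05 ** 2))  # one blink every 2 s
true_sources = np.vstack([np.sin(2 * np.pi * 10 * t),
                          np.sign(np.sin(2 * np.pi * 3.7 * t)),
                          blink])
mix = np.array([[1.0, 0.2, 3.0], [0.3, 1.0, 1.5], [0.6, 0.4, 0.5]])
eeg = mix @ true_sources
sources, mixing, mean = fastica(eeg)
bad = [int(np.argmax([abs(np.corrcoef(s, blink)[0, 1]) for s in sources]))]
cleaned = remove_components(sources, mixing, mean, bad)
```

Note that back-projection adds the original channel means back, so any DC contribution of the removed component remains; with high-pass filtered data this offset is negligible.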

Protocol: Deep Learning-Based Removal with CLEnet

CLEnet represents a modern, automated pipeline for removing various artifacts, including unknown types [8].

- Data Preparation: Segment multi-channel EEG data into epochs. For supervised training, create semi-synthetic data by adding recorded artifacts (EMG, EOG) to clean EEG segments [8].

- Model Architecture:

- Morphological Feature Extraction: Pass the input through a dual-branch CNN with different kernel sizes to extract features at multiple scales.

- Temporal Feature Enhancement: Integrate the improved EMA-1D (Efficient Multi-scale Attention) module within the CNN to enhance relevant temporal features.

- Temporal Modeling: Reduce feature dimensions with fully connected layers, then process with LSTM to capture long-range temporal dependencies.

- EEG Reconstruction: Flatten the output and use fully connected layers to reconstruct the artifact-free EEG signal.

- Training: Train the model in a supervised manner using Mean Squared Error (MSE) as the loss function to minimize the difference between the output and the clean ground-truth EEG.

- Application: Use the trained model in an end-to-end manner to process new, contaminated EEG data and output the cleaned signal.
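The data-preparation and training-objective steps of this protocol can be sketched without committing to a deep learning framework. The snippet below scales an artifact to reach a target SNR, builds contaminated/clean pairs, normalizes each pair by the standard deviation of the contaminated segment, and defines the MSE loss a network would minimize. The SNR convention (20·log10 of the RMS ratio), the default SNR range, the segment length, and the normalization choice are assumptions for illustration; benchmark datasets such as EEGdenoiseNet document their own conventions, and the CLEnet network itself is not reproduced here.

```python
import numpy as np


def rms(x):
    return np.sqrt(np.mean(np.square(x)))


def scale_for_snr(clean, artifact, snr_db):
    """Lambda such that 20*log10(RMS(clean) / RMS(lambda * artifact)) == snr_db."""
    return rms(clean) / (rms(artifact) * 10.0 ** (snr_db / 20.0))


def make_pairs(clean_segments, artifact_segments, snr_range=(-7.0, 2.0), seed=0):
    """Return (contaminated, clean) arrays of shape (n_segments, n_samples), both normalized."""
    rng = np.random.default_rng(seed)
    noisy, targets = [], []
    for x, a in zip(clean_segments, artifact_segments):
        lam = scale_for_snr(x, a, rng.uniform(*snr_range))  # illustrative SNR range
        y = x + lam * a  # contaminated = clean + lambda * artifact
        scale = np.std(y)  # normalize input and target by the same factor
        noisy.append(y / scale)
        targets.append(x / scale)
    return np.array(noisy), np.array(targets)


def mse_loss(output, target):
    """Mean squared error between network output and ground-truth clean EEG."""
    return float(np.mean((np.asarray(output) - np.asarray(target)) ** 2))


# Usage: three 2-s "clean" segments at a hypothetical 256 Hz with random artifacts.
rng = np.random.default_rng(0)
t = np.arange(512) / 256.0
clean = np.array([np.sin(2 * np.pi * f * t) for f in (8.0, 10.0, 12.0)])
artifacts = rng.normal(size=clean.shape)
x_in, y_true = make_pairs(clean, artifacts)
print(x_in.shape, mse_loss(x_in, y_true))
```

Normalizing input and target by the same factor keeps the mixing relationship intact, so the loss still measures how well the artifact was removed rather than a change of scale.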

Table 4: Key Research Reagents and Computational Tools for EEG Artifact Removal

| Item / Resource | Type | Function / Application |
|---|---|---|
| EEGdenoiseNet | Benchmark Dataset | A semi-synthetic dataset combining clean EEG with recorded EOG and EMG artifacts, enabling standardized training and evaluation of denoising algorithms [8]. |
| Independent Component Analysis (ICA) | Computational Algorithm | A blind source separation workhorse for isolating and removing ocular, muscle, and cardiac artifacts from multi-channel EEG data [25] [2]. |
| CLEnet Model | Deep Learning Architecture | An end-to-end network (CNN + LSTM + EMA-1D) designed for removing multiple artifact types from multi-channel EEG data [8]. |
| Generative Adversarial Network (GAN) | Deep Learning Framework | A network structure (e.g., used in AnEEG) where a generator creates clean EEG from noisy input, and a discriminator critiques the quality, driving iterative improvement [16]. |
| Semi-Synthetic Data Generation | Experimental Method | A protocol for creating ground-truth data by adding real artifacts to clean EEG, which is essential for supervised training of deep learning models [8] [16]. |

Frequently Asked Questions (FAQs)

Q1: Why can't I just use a simple filter to remove all artifacts? Many artifacts, particularly physiological ones like muscle (EMG) and ocular (EOG) activity, have frequency spectra that overlap with genuine brain signals. A simple filter would remove valuable neural data along with the artifact. For example, a low-pass filter set to remove EMG would also erase high-frequency gamma oscillations related to cognitive processing [25] [2]. Advanced techniques like ICA or deep learning are needed to separate these overlapping signals based on other properties like spatial distribution or statistical independence.

Q2: Does artifact rejection (removing bad trials) or artifact correction (cleaning bad trials) lead to better decoding performance in analyses like MVPA? A 2025 study investigating this for SVM- and LDA-based decoding found that the combination of artifact correction (e.g., using ICA for ocular artifacts) and rejection (for large muscle or movement artifacts) did not consistently improve decoding performance across several common ERP paradigms [26]. This is likely because rejection reduces the number of trials available for training the decoder. However, the study notes that artifact correction remains critical to prevent artifact-related confounds from artificially inflating decoding accuracy, which could lead to incorrect conclusions [26].

Q3: What is the most future-proof method for EEG artifact removal? While traditional methods like ICA are well-established, current research is strongly focused on deep learning (DL) models. DL approaches, such as CLEnet and AnEEG, offer key advantages: they are fully automated, can be adapted to remove a wide range of known and unknown artifacts, and perform effectively on multi-channel data without requiring manual intervention [8] [16]. As these models become more robust and are trained on larger, diverse datasets, they are poised to become the standard for artifact removal in both research and clinical applications.

Inherent Challenges in Artifact Detection and the Need for Robust Removal Pipelines

This technical support center provides troubleshooting guides and FAQs for researchers and scientists developing robust pipelines for EEG artifact removal. The content is framed within a broader thesis on optimizing these techniques for applications in clinical diagnostics and drug development.

Frequently Asked Questions (FAQs)

Q1: Why do traditional artifact removal methods like ICA sometimes distort genuine neural signals, and how can this be mitigated? Traditional ICA decomposes data into components, subtracts those identified as artifactual, and reconstructs the data. Due to imperfect separation, this process can inadvertently remove neural signals alongside artifacts. This not only results in a loss of biological information but can also artificially inflate effect sizes in event-related potentials and bias source localization estimates [9]. Mitigation involves moving away from blanket component rejection towards targeted cleaning, where artifact removal is applied only to the specific time periods or frequency bands contaminated by the artifact [9].
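A minimal sketch of the time-targeted idea: the artifact component's contribution is subtracted only within windows where that component is active, and the data are left untouched elsewhere. It assumes an ICA decomposition is already available (x ≈ mixing @ sources + mean), uses a hypothetical robust amplitude threshold and padding to define the windows, and covers only time-targeted cleaning; it illustrates the principle rather than the specific pipeline described in [9].

```python
import numpy as np


def artifact_mask(component, k=3.0, pad=25):
    """Boolean mask of samples where a component deviates strongly from its median.

    k (robust SDs) and pad (samples added on each side) are hypothetical settings.
    """
    dev = np.abs(component - np.median(component))
    robust_sd = 1.4826 * np.median(dev) + 1e-12
    hits = dev > k * robust_sd
    mask = hits.copy()
    for shift in range(1, pad + 1):  # widen each detection by pad samples
        mask[shift:] |= hits[:-shift]
        mask[:-shift] |= hits[shift:]
    return mask


def targeted_component_removal(x, sources, mixing, bad, mask):
    """Subtract the back-projected artifact components only where mask is True."""
    artifact = mixing[:, bad] @ sources[bad, :]
    cleaned = x.copy()
    cleaned[:, mask] -= artifact[:, mask]
    return cleaned


# Usage: a known neural source plus a single blink-like burst on a second component.
t = np.arange(1000) / 250.0
neural = np.sin(2 * np.pi * 10 * t)
blink_ic = np.zeros_like(t)
blink_ic[100:150] = 50.0 * np.hanning(50)
sources = np.vstack([neural, blink_ic])
mixing = np.array([[1.0, 0.8], [0.5, 0.2]])
x = mixing @ sources
mask = artifact_mask(blink_ic)
cleaned = targeted_component_removal(x, sources, mixing, bad=[1], mask=mask)
```

Outside the masked window the data are bit-for-bit unchanged, so any neural activity that leaked into the artifact component is only affected where the artifact was actually present.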

Q2: What are the main limitations of deep learning models for EEG artifact removal, particularly for real-world applications? While deep learning has transformed EEG artifact removal, many existing models have critical limitations [8]:

- Limited Generalizability: Models are often tailored to remove specific, known artifacts (e.g., only EMG or only EOG) and exhibit significant performance drops when encountering unknown or mixed artifact types [8].

- Single-Channel Focus: Many networks are designed for single-channel EEG inputs, overlooking the rich inter-channel correlations present in multi-channel EEG data. This leads to poor performance in practical scenarios involving full-head recordings [8].

- Computational Cost: Complex deep learning models can require extensive hyperparameter tuning and processing time, making them unsuitable for applications with real-time constraints, such as human-robot interaction or neurofeedback [27].

Q3: How can I effectively handle ocular artifacts in a dataset with a low number of EEG channels? For high-density EEG systems (e.g., >40 channels), ICA is often the preferred method for ocular artifact removal [6]. However, with a low number of channels, regression-based techniques are a suitable alternative. These methods use a calibration run to estimate the influence of the ocular artifact (from a dedicated EOG channel or frontal EEG electrodes) on each EEG channel. This estimated influence is then subtracted from the continuous data [6]. It is crucial to use a calibration signal acquired during the same session as the EEG recording, as spontaneous and voluntary eye movements differ [6].
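The calibration-then-subtraction logic can be sketched with ordinary least squares. The sketch assumes EEG and EOG arrays of shape (channels, samples) from the same session, estimates one propagation coefficient per EEG/EOG channel pair from the calibration run, and subtracts the scaled EOG from subsequent data. Function names are hypothetical, and real pipelines usually high-pass filter first so that channel means are close to zero.

```python
import numpy as np


def fit_eog_regression(eeg_cal, eog_cal):
    """Least-squares propagation coefficients (n_eeg, n_eog) from calibration data."""
    eeg_c = eeg_cal - eeg_cal.mean(axis=1, keepdims=True)
    eog_c = eog_cal - eog_cal.mean(axis=1, keepdims=True)
    # Solve eog_c.T @ coef.T ~= eeg_c.T for coef.T (one column per EEG channel).
    coef_t, *_ = np.linalg.lstsq(eog_c.T, eeg_c.T, rcond=None)
    return coef_t.T


def remove_eog(eeg, eog, coef):
    """Subtract the estimated EOG contribution from each EEG channel."""
    eog_c = eog - eog.mean(axis=1, keepdims=True)
    return eeg - coef @ eog_c


# Usage: 3 EEG channels, 2 EOG channels, synthetic propagation coefficients.
rng = np.random.default_rng(0)
true_coef = np.array([[0.5, 0.1], [0.2, 0.05], [0.05, 0.0]])
eog_cal = 50.0 * rng.normal(size=(2, 5000))
eeg_cal = rng.normal(size=(3, 5000)) + true_coef @ eog_cal
coef = fit_eog_regression(eeg_cal, eog_cal)  # calibration run

eog = 50.0 * rng.normal(size=(2, 5000))  # later data from the same session
neural = rng.normal(size=(3, 5000))
cleaned = remove_eog(neural + true_coef @ eog, eog, coef)
```

Because the coefficients are fixed after calibration, any neural activity present in the EOG channels is subtracted as well, which is the over-correction risk noted for regression methods.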

Q4: What is the practical impact of even small artifacts on experimental results? Artifacts add uncontrolled variability to data, which confounds experimental observations. Even small, frequent artifacts can reduce the statistical power of a study. They can obscure genuine neural signals, alter the amplitude and timing of event-related potentials (ERPs), and in clinical settings, potentially lead to misdiagnosis by mimicking epileptiform activity or other pathological patterns [2] [3].

Troubleshooting Guides

Issue 1: Persistent High-Frequency Noise After Standard Filtering

Problem: Muscle (EMG) artifacts persist in the beta (13-30 Hz) and gamma (>30 Hz) frequency bands after applying a standard band-pass filter (e.g., 1-40 Hz). These artifacts are broadband and can significantly overlap with neural oscillations of interest [2] [27].

Solution:

- Confirm the Artifact: Plot the power spectral density of your data. Muscle artifacts will appear as a broadband increase in power from around 20 Hz up to 300 Hz [2] [28].

- Apply Advanced Techniques:

- Consider lowering the low-pass cutoff (e.g., to 30 Hz) if high-frequency neural activity is not the focus of your study, but be aware that this will also remove genuine gamma-band neural signals.

- Use a deep learning approach like CLEnet, which is designed to separate morphological features of artifacts from genuine EEG, even in the presence of mixed or unknown artifacts [8].

- For offline analysis, explore algorithms that combine ICA with wavelet denoising to target and remove high-frequency, non-stationary muscle components [29].
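The spectral check in "Confirm the Artifact" can be scripted before choosing a technique. This sketch uses SciPy's Welch estimator. `emg_power_ratio` is a hypothetical helper, and the 20 Hz split point, the 1 Hz lower bound, and the 100 Hz default upper bound are illustrative assumptions rather than fixed standards.

```python
import numpy as np
from scipy.signal import welch


def emg_power_ratio(x, fs, split_hz=20.0, upper_hz=100.0):
    """Ratio of broadband high-frequency power to lower-frequency power.

    x: 1-D signal or (n_channels, n_samples) array.
    A ratio that rises across channels or epochs suggests broadband muscle
    contamination; what counts as "too high" must be set per dataset.
    """
    freqs, psd = welch(x, fs=fs, nperseg=int(2 * fs), axis=-1)
    upper = min(upper_hz, fs / 2)  # never integrate past Nyquist
    high = (freqs >= split_hz) & (freqs < upper)
    low = (freqs >= 1.0) & (freqs < split_hz)
    # The frequency bin width is identical for both bands, so it cancels
    return psd[..., high].sum(axis=-1) / psd[..., low].sum(axis=-1)
```

Comparing this ratio before and after a cleaning step gives a quick, if coarse, indication of whether high-frequency contamination was reduced.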

Issue 2: Introducing Bias During Re-referencing

Problem: Re-referencing to mastoid channels (TP9, TP10) unexpectedly introduces a rhythmic artifact or persistent noise across all EEG channels.

Solution: This is a common issue if the mastoid electrodes themselves are contaminated.

- Primary Cause: The mastoid region is susceptible to biological artifacts, including neck muscle tension (EMG) and pulse (ECG) artifacts from the nearby carotid artery [2] [3]. If these contaminated channels are used as a reference, the artifact is propagated to all other channels.

- Corrective Actions:

- Inspect Original Channels: Before re-referencing, always visually inspect the mastoid channels for signs of rhythmic pulse spikes or persistent high-frequency EMG noise [3].

- Use Alternative Reference: If the mastoids are noisy, consider using a different referencing scheme, such as the average reference [3].

- Clean Before Re-referencing: Apply artifact removal techniques (e.g., ICA, targeted cleaning) to the mastoid channels before using them for re-referencing.
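The propagation mechanism is easy to demonstrate. This sketch uses NumPy with data shaped channels × samples, and the helper names are hypothetical. A pulse-like artifact is placed only on TP9. After linked-mastoid re-referencing it leaks into every other channel at roughly half its amplitude. After average re-referencing with N channels it leaks at roughly 1/N of its amplitude.

```python
import numpy as np


def rereference_mastoids(data, ch_names, refs=("TP9", "TP10")):
    """Linked-mastoid reference: subtract the mean of the mastoid channels."""
    idx = [ch_names.index(name) for name in refs]
    return data - data[idx].mean(axis=0)


def rereference_average(data):
    """Average reference: subtract the mean across all channels at each sample."""
    return data - data.mean(axis=0)


# Demonstration: an artifact on TP9 only
rng = np.random.default_rng(1)
names = ["Fz", "Cz", "Pz", "Oz", "TP9", "TP10"]
n = 1000
data = 0.1 * rng.standard_normal((len(names), n))
pulse = np.zeros(n)
pulse[::100] = 5.0  # crude stand-in for rhythmic pulse spikes
data[names.index("TP9")] += pulse

for label, ref in [("mastoid", rereference_mastoids(data, names)),
                   ("average", rereference_average(data))]:
    # Regression of Fz on the pulse waveform: how much artifact leaked into Fz
    leak = ref[0] @ pulse / (pulse @ pulse)
    print(label, round(leak, 2))
```

The leak coefficient comes out near -0.5 for the mastoid reference and near -1/6 for the six-channel average reference. The average reference therefore dilutes a single bad channel rather than eliminating it. This is one reason inspecting or cleaning the reference channels first remains worthwhile.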

Issue 3: Differentiating Slow Cortical Activity from Artifactual Drift

Problem: It is difficult to distinguish genuine slow cortical potentials (e.g., in the delta band) from slow drifts caused by sweat or body movement [2] [3].

Solution:

- Identify the Source:

- Sweat Artifacts: Appear as very slow, large deflections across multiple channels, often associated with warm environments or physical activity. The spectral power is concentrated below 1 Hz [3].

- Body Sway/Movement: Can cause slow, synchronous drifts across all channels due to changes in electrode impedance from a loose-fitting cap [3].

- Apply Corrective Processing:

- A high-pass filter with a conservative cutoff (e.g., 0.5 Hz or 1 Hz) can effectively attenuate these slow drifts without severely impacting most EEG rhythms of interest [28] [3].

- Caution: If your research specifically investigates slow cortical potentials, filtering is not advisable. Instead, focus on artifact prevention during recording (e.g., ensuring a cool, dry lab environment and a snug electrode cap) and consider using artifact rejection for severely contaminated epochs [3].
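For the filtering step, a zero-phase Butterworth high-pass avoids shifting the latency of slower components. This SciPy sketch assumes data shaped (channels, samples) or a 1-D trace. The 4th-order design and the `highpass` name are illustrative choices, not a prescribed standard.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def highpass(data, fs, cutoff=0.5, order=4):
    """Zero-phase Butterworth high-pass along the last (time) axis.

    Second-order sections are numerically safer than (b, a) coefficients
    when the cutoff is small relative to fs. Filtering forward and backward
    cancels the phase shift and doubles the attenuation in dB.
    """
    sos = butter(order, cutoff, btype="highpass", fs=fs, output="sos")
    return sosfiltfilt(sos, data, axis=-1)


# Example: 10 Hz activity riding on a 0.05 Hz sweat-like drift
fs = 250
t = np.arange(60 * fs) / fs
alpha = np.sin(2 * np.pi * 10 * t)
drift = 5 * np.sin(2 * np.pi * 0.05 * t)
filtered = highpass(alpha + drift, fs, cutoff=0.5)
```

Filtering the continuous recording before epoching keeps the filter's edge transients away from the analysis windows. Per the caution above, this step should be skipped when slow cortical potentials are the target.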

Experimental Protocols & Performance Data

Protocol: Benchmarking a New Artifact Removal Algorithm

This protocol outlines a standard methodology for validating a novel artifact removal technique against established benchmarks.

1. Dataset Preparation:

- Semi-Synthetic Datasets: Artificially contaminate clean EEG recordings with known artifacts (EOG, EMG, ECG) at varying signal-to-noise ratios. This provides a ground truth for quantitative evaluation. The EEGdenoiseNet dataset is a commonly used benchmark [8].

- Real-World Datasets: Use a dataset with real, complex artifacts, such as a 32-channel recording from participants performing a cognitive task (e.g., n-back task), which naturally induces artifacts like neck tension and eye movements [8].

2. Performance Metrics: Compare the cleaned EEG output against the ground truth clean EEG using the following metrics [8]:

- Signal-to-Noise Ratio (SNR): Measures the overall noise reduction. Higher is better.

- Average Correlation Coefficient (CC): Measures the preservation of the original signal's morphology. Closer to 1 is better.

- Relative Root Mean Square Error (RRMSE): Calculated in both temporal (RRMSEt) and frequency (RRMSEf) domains. Lower is better.

3. Benchmarking: Compare the new algorithm's performance against established models, such as:

- 1D-ResCNN: A one-dimensional residual convolutional neural network.

- NovelCNN: A CNN designed for EMG artifact removal.

- DuoCL: A model based on CNN and LSTM for temporal feature extraction [8].
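The semi-synthetic step in Dataset Preparation can be sketched as mixing a clean segment with a scaled artifact, y = x + λ·n, where λ is chosen to reach a target SNR. This sketch assumes the definition SNR = 20·log10(RMS(x) / RMS(λ·n)). Benchmarks may use other conventions, so check the benchmark's own definition before comparing numbers. `contaminate` is a hypothetical helper, and the random arrays only stand in for real EEG and artifact segments.

```python
import numpy as np


def rms(x):
    return np.sqrt(np.mean(np.square(x)))


def contaminate(clean, artifact, snr_db):
    """Return (contaminated, lam) with 20*log10(rms(clean) / rms(lam*artifact)) == snr_db."""
    lam = rms(clean) / (rms(artifact) * 10 ** (snr_db / 20))
    return clean + lam * artifact, lam


rng = np.random.default_rng(0)
clean = rng.standard_normal(512)                # stand-in for a clean EEG segment
artifact = np.cumsum(rng.standard_normal(512))  # stand-in for a slow ocular-like artifact
pairs = []
for snr in range(-5, 6):                        # illustrative SNR range in dB
    noisy, lam = contaminate(clean, artifact, snr)
    pairs.append((noisy, clean))                # (model input, ground-truth target)
```

Because the clean segment is known exactly, every contaminated input has a ground-truth target. That is what makes the quantitative metrics in the next step possible.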

Table 1: Example Performance Comparison on Mixed (EMG+EOG) Artifact Removal

| Algorithm | SNR (dB) | CC | RRMSEt | RRMSEf |
|---|---|---|---|---|
| CLEnet (Proposed) | 11.498 | 0.925 | 0.300 | 0.319 |
| DuoCL | 10.981 | 0.901 | 0.322 | 0.330 |
| NovelCNN | 9.856 | 0.873 | 0.355 | 0.351 |
| 1D-ResCNN | 9.421 | 0.862 | 0.368 | 0.360 |

Source: Adapted from [8]
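The four metrics in Table 1 can be computed per segment as below. This sketch uses common definitions:

- SNR is the energy of the clean signal over the energy of the residual error, in dB.
- CC is the Pearson correlation.
- RRMSE is the RMS error normalized by the RMS of the clean signal; the frequency-domain variant is computed on Welch power spectra.

Individual papers may differ in details such as PSD settings or how values are averaged across segments.

```python
import numpy as np
from scipy.signal import welch


def rms(x):
    return np.sqrt(np.mean(np.square(x)))


def snr_db(clean, denoised):
    """Energy of the clean signal relative to the residual error, in dB."""
    return 10 * np.log10(np.sum(clean ** 2) / np.sum((clean - denoised) ** 2))


def cc(clean, denoised):
    """Pearson correlation between clean and denoised segments."""
    return np.corrcoef(clean, denoised)[0, 1]


def rrmse_t(clean, denoised):
    """Relative RMS error in the time domain."""
    return rms(denoised - clean) / rms(clean)


def rrmse_f(clean, denoised, fs):
    """Relative RMS error between Welch power spectra."""
    nperseg = min(256, clean.size)
    _, p_clean = welch(clean, fs=fs, nperseg=nperseg)
    _, p_den = welch(denoised, fs=fs, nperseg=nperseg)
    return rms(p_den - p_clean) / rms(p_clean)
```

In a benchmark, each metric would be computed for every test segment and the results averaged to give one summary value per algorithm and artifact type.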

Protocol: Implementing Targeted Artifact Reduction with ICA

This protocol refines the standard ICA workflow to minimize neural signal loss, based on the RELAX method [9].

1. Standard ICA Processing: Run ICA on the high-pass filtered (e.g., 1 Hz cutoff) continuous data to decompose it into independent components.

2. Targeted Component Classification: Identify components corresponding to eye movements and muscle activity.

3. Targeted Cleaning:

- For Eye Components: Instead of subtracting the entire component, use its time-course to identify only the periods containing high-amplitude blinks or saccades. Subtract the component's activity only during these periods.

- For Muscle Components: Instead of subtracting the entire component, apply a frequency-domain approach. Remove from the component only the frequency bands (e.g., beta and gamma) that are disproportionately contaminated by muscle activity, and leave the rest of its spectrum intact.

4. Signal Reconstruction: Reconstruct the data from the modified components.
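The targeted steps can be sketched on ICA outputs. This illustrates the idea only and is not the RELAX implementation. It assumes an ICA has already produced a mixing matrix (channels × components) and component time courses (components × samples). The helper names, the robust z-score rule for flagging eye periods, and the 15 Hz low-pass used to strip beta/gamma from a muscle component are all hypothetical choices.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def targeted_eye_clean(source, k=5.0, pad=25):
    """Zero an eye component only where it shows large blinks or saccades.

    Samples deviating from the median by more than k robust SDs are flagged,
    and each flagged period is widened by `pad` samples on both sides.
    """
    med = np.median(source)
    robust_sd = 1.4826 * np.median(np.abs(source - med))
    hits = np.abs(source - med) > k * robust_sd
    mask = np.convolve(hits.astype(float), np.ones(2 * pad + 1), mode="same") > 0
    cleaned = source.copy()
    cleaned[mask] = 0.0  # the component contributes nothing inside artifact periods
    return cleaned, mask


def targeted_muscle_clean(source, fs, cutoff=15.0, order=4):
    """Keep a muscle component's content below `cutoff`; drop beta/gamma."""
    sos = butter(order, cutoff, btype="lowpass", fs=fs, output="sos")
    return sosfiltfilt(sos, source)


def reconstruct(mixing, sources):
    """Back-project the (modified) components to channel space."""
    return mixing @ sources
```

The unmodified parts of each component are still projected back. Neural activity that the decomposition mixed into an eye or muscle component is therefore retained outside the flagged times or frequencies, which is the motivation for the targeted approach.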

Table 2: Comparison of Standard vs. Targeted ICA Cleaning

| Aspect | Standard ICA Subtraction | Targeted ICA Cleaning |
|---|---|---|
| Core Action | Removes entire artifactual components | Removes artifact from specific times (eye) or frequencies (muscle) |
| Neural Signal Loss | Higher | Reduced |
| Effect Size Inflation | Can cause artificial inflation | Mitigated |
| Source Localization Bias | Can introduce bias | Minimized |
| Recommended Use | When the artifact is isolated in a component and has little overlap with neural signals | For eye and muscle artifacts, which often overlap temporally or spatially with neural data |

Source: Adapted from [9]

The Scientist's Toolkit

Table 3: Essential Software and Algorithmic Tools for Artifact Removal Research

| Tool/Algorithm | Type | Primary Function | Key Reference |
|---|---|---|---|
| CLEnet | Deep Learning Model | Removes mixed/unknown artifacts from multi-channel EEG using dual-scale CNN, LSTM, and an attention mechanism. | [8] |
| RELAX (EEGLAB Plugin) | Software Pipeline | Implements targeted ICA reduction to clean artifacts while preserving neural signals and reducing effect size inflation. | [9] |
| Independent Component Analysis (ICA) | Blind Source Separation | Decomposes multi-channel EEG into statistically independent components for manual or automated artifact rejection. | [6] [28] |
| Artifact Subspace Reconstruction (ASR) | Statistical Algorithm | Detects and reconstructs portions of data contaminated by large-amplitude artifacts in multi-channel EEG. | [6] |
| Regression-Based Methods | Linear Model | Estimates and subtracts ocular artifact influence from EEG channels using an EOG or frontal channel as a template. | [6] |
| NARX Neural Network with FLM | Adaptive Filter | Hybrid firefly and Levenberg-Marquardt algorithm optimizes a neural network for adaptive noise cancellation of artifacts. | [29] |
| MNE-Python | Software Library | A comprehensive open-source Python package for exploring, visualizing, and analyzing human neurophysiological data, including artifact detection and removal tools. | [28] |

Workflow Visualization

[Figure: EEG Artifact Removal Research Pipeline]

[Figure: Targeted vs. Standard ICA Cleaning]

EEG Artifact Removal Methodologies: From Traditional Algorithms to Modern AI Solutions

Electroencephalography (EEG) is a non-invasive tool for studying brain activity, used in clinical diagnosis, research, and Brain-Computer Interface (BCI) systems. However, the recorded signals are often contaminated by artifacts. These are unwanted electrical activities from physiological sources (such as eye movements and muscle activity) and from environmental noise. Artifacts can obscure genuine neural signals, making their removal a critical preprocessing step. Traditional techniques such as regression, blind source separation (BSS), and wavelet transforms form the cornerstone of EEG artifact cleaning, each with distinct strengths and optimal application scenarios [30] [31].

This guide provides troubleshooting advice and detailed protocols to help researchers effectively implement these traditional methods within their EEG artifact removal pipelines.

Frequently Asked Questions (FAQs)

Q1: How do I choose between a single-channel and a multi-channel artifact removal method? The choice depends on your EEG system's setup and the number of available channels.

- Use Single-Channel Methods (e.g., Wavelet Transform, Empirical Mode Decomposition [EMD]) when working with a limited number of electrodes, which is common in wearable EEG systems or specific BCI applications. These methods process each channel individually [30] [32].

- Use Multi-Channel Methods (e.g., BSS techniques such as ICA or SOBI) when you have access to multiple EEG channels (typically 16 or more), as they leverage spatial information across channels to separate sources. These methods are standard for data recorded with the international 10-20 or 10-10 systems [30] [33].

Q2: My BSS components are mixed with both neural and artifact signals. How can I clean them without losing brain signals? Manually identifying and rejecting artifact components can lead to the loss of underlying brain signal. A highly effective troubleshooting strategy is to combine BSS with a Single Channel Decomposition (SCD) method.

- Recommended Solution: Apply a Wavelet Transform to the artifact-contaminated independent components. This allows you to identify and remove only the wavelet coefficients that represent the artifact, while preserving others that contain neural information. The component is then reconstructed from the cleaned coefficients before projecting back to the sensor space. This hybrid approach (e.g., SOBI-SWT or CCA-SWT) better preserves the non-contaminated EEG signal [30].
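Here is a dependency-free sketch of wavelet cleaning for a single contaminated component. To stay short, it swaps the SWT named above for a plain decimated Haar DWT. It also uses a wICA-style rule, assumed here: coefficients larger than a universal threshold are attributed to the high-amplitude artifact and zeroed, while smaller coefficients, assumed to carry neural activity, are kept. The component length must be divisible by 2**levels.

```python
import numpy as np


def haar_dwt(x, levels):
    """Orthonormal multi-level Haar DWT: returns (approximation, [d1, ..., dL])."""
    if x.size % (2 ** levels):
        raise ValueError("signal length must be divisible by 2**levels")
    approx, details = np.asarray(x, dtype=float), []
    for _ in range(levels):
        even, odd = approx[0::2], approx[1::2]
        details.append((even - odd) / np.sqrt(2))
        approx = (even + odd) / np.sqrt(2)
    return approx, details


def haar_idwt(approx, details):
    """Exact inverse of haar_dwt."""
    for d in reversed(details):
        out = np.empty(2 * approx.size)
        out[0::2] = (approx + d) / np.sqrt(2)
        out[1::2] = (approx - d) / np.sqrt(2)
        approx = out
    return approx


def zero_large_coeffs(c):
    """Zero coefficients above the universal threshold sigma * sqrt(2 ln N)."""
    sigma = np.median(np.abs(c)) / 0.6745
    thr = sigma * np.sqrt(2 * np.log(c.size))
    return np.where(np.abs(c) > thr, 0.0, c)


def wavelet_clean_component(component, levels=4):
    """Remove large (artifact-attributed) coefficients at every level, then invert."""
    approx, details = haar_dwt(component, levels)
    approx = zero_large_coeffs(approx)
    details = [zero_large_coeffs(d) for d in details]
    return haar_idwt(approx, details)
```

In a hybrid pipeline, this function would be applied only to the components flagged as artifactual. The cleaned components would then be back-projected to sensor space with the mixing matrix.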

Q3: Why does my artifact-corrected EEG lead to worse decoding performance in my BCI model? A recent multiverse analysis found that artifact correction steps, including ICA, can sometimes reduce decoding performance [34].

- Root Cause: The classifier might be learning to exploit the structured noise from the artifacts, which are systematically associated with the task or condition (e.g., eye movements in a visual task, muscle activity related to a motor response). Removing these artifacts removes the features the model learned on [34].

- Troubleshooting: Removing artifacts can lower performance metrics, but it improves the model's validity and interpretability by helping ensure that it decodes neural signals rather than artifacts. This is crucial for clinically and scientifically meaningful results [34].

Q4: Which traditional method is most effective for strong EMG artifacts from muscle activity? EMG artifacts are particularly challenging due to their broad frequency spectrum and anatomical distribution [31]. Research indicates that hybrid methods are most effective.

- Optimal Technique: A combination of Canonical Correlation Analysis (CCA) and Stationary Wavelet Transform (SWT), known as CCA-SWT, has been shown to be superior for removing EMG artifacts while preserving brain signals [30].
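The CCA half of CCA-SWT can be sketched as BSS-CCA. The method finds components of x(t) that are maximally correlated with the delayed signal x(t-1), so sources are ordered by lag-1 autocorrelation. The least autocorrelated sources are treated as EMG-like, because muscle activity is broadband and close to white. This sketch uses NumPy/SciPy with data shaped channels × samples, and the helper names are hypothetical. It simply zeroes those components, whereas in CCA-SWT they would be passed to a wavelet step instead. How many components to remove is left as an assumption.

```python
import numpy as np
from scipy.linalg import eigh


def bss_cca(X):
    """BSS-CCA with a one-sample delay.

    Returns (sources, W, rho) with sources = W.T @ Xc, sorted by descending
    canonical (lag-1 auto-) correlation rho.
    """
    Xc = X - X.mean(axis=1, keepdims=True)
    Y, Z = Xc[:, 1:], Xc[:, :-1]  # x(t) and x(t-1)
    n = Y.shape[1]
    Cyy, Czz, Cyz = Y @ Y.T / n, Z @ Z.T / n, Y @ Z.T / n
    # Generalized symmetric eigenproblem: Cyz Czz^-1 Czy w = rho^2 Cyy w
    lhs = Cyz @ np.linalg.solve(Czz, Cyz.T)
    rho2, W = eigh((lhs + lhs.T) / 2, Cyy)  # symmetrize against round-off
    order = np.argsort(rho2)[::-1]
    W = W[:, order]
    rho = np.sqrt(np.clip(rho2[order], 0.0, 1.0))
    return W.T @ Xc, W, rho


def remove_low_autocorr(X, n_remove):
    """Zero the n_remove least autocorrelated sources and back-project.

    The returned data are mean-removed per channel.
    """
    S, W, _ = bss_cca(X)
    S[S.shape[0] - n_remove:] = 0.0
    return np.linalg.solve(W.T, S)  # Xc = (W.T)^-1 @ S
```

Ordering by autocorrelation gives an automatic, reproducible ranking of components. A threshold on `rho` (or a fixed `n_remove`) still has to be chosen and validated for each dataset.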

Troubleshooting Guides & Experimental Protocols

Regression-Based Artifact Removal

Principle: This method uses a reference signal (e.g., from EOG electrodes) to estimate the artifact's contribution to the EEG channels and subtracts it [30] [31].

Common Issue: Absence of a dedicated reference channel. Many experimental setups, especially with developmental populations or wearable systems, do not include separate EOG electrodes [32] [35].

Solutions:

- Alternative Reference: Use EEG channels located near the eyes (such as Fp1) as a proxy reference for ocular artifacts [30].

- Protocol: Automated Ocular Artifact Removal with EEG Reference

- Identify Reference Channels: Select one or two frontal EEG channels that show the highest amplitude deflection during eye blinks.

- Calculate Transfer Function: For each EEG channel, compute the regression coefficient (weight) between the EEG channel and the reference channel during segments dominated by blinks.

- Artifact Subtraction: For the entire recording, subtract the scaled version of the reference signal from each EEG channel using the calculated weights.
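The three protocol steps map onto a short script. This sketch assumes a single frontal reference channel (for example Fp1), data shaped channels × samples, and a hypothetical robust-threshold rule for picking blink-dominated segments. `blink_mask` and `regress_out_reference` are illustrative names, not functions from a specific toolbox.

```python
import numpy as np


def blink_mask(ref, k=5.0, pad=50):
    """Flag blink-dominated samples: |ref - median| > k robust SDs, widened by pad."""
    med = np.median(ref)
    robust_sd = 1.4826 * np.median(np.abs(ref - med))
    hits = np.abs(ref - med) > k * robust_sd
    return np.convolve(hits.astype(float), np.ones(2 * pad + 1), mode="same") > 0


def regress_out_reference(eeg, ref, mask):
    """Fit one weight per channel on blink segments, then subtract everywhere."""
    r = ref[mask] - ref[mask].mean()
    weights = np.array([(ch[mask] - ch[mask].mean()) @ r / (r @ r) for ch in eeg])
    cleaned = eeg - np.outer(weights, ref - ref.mean())
    return cleaned, weights
```

Fitting only on blink segments keeps the weights from being dominated by ongoing brain activity. However, the reference channel's own neural signal is still subtracted in proportion to each weight. If the reference channel is itself included in `eeg`, its weight is 1 and its cleaned trace reduces to a constant offset.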

Blind Source Separation (BSS) Techniques

Principle: BSS algorithms like Independent Component Analysis (ICA) and Second-Order Blind Identification (SOBI) separate multi-channel EEG data into statistically independent or temporally uncorrelated components. Artifactual components are then identified and removed before signal reconstruction [30] [31].