NPDOA vs. Particle Swarm Optimization: A Benchmark Comparison for Complex Biomedical Problems


Abstract

This article provides a comprehensive benchmark comparison between the novel Neural Population Dynamics Optimization Algorithm (NPDOA) and established Particle Swarm Optimization (PSO) variants, specifically contextualized for researchers and professionals in drug development and biomedical research. We explore the foundational principles of both brain-inspired and swarm intelligence algorithms, detail their methodological applications in solving complex biological optimization problems, analyze their respective challenges and optimization strategies, and present a rigorous validation framework using standard benchmarks and real-world case studies. The analysis synthesizes performance metrics, convergence behavior, and practical implementation insights to guide algorithm selection for high-dimensional, nonlinear problems common in pharmaceutical research.

Brain vs. Swarm: Foundational Principles of NPDOA and Particle Swarm Optimization

Metaheuristic algorithms are optimization techniques designed to find good or near-optimal solutions to complex problems where traditional deterministic methods fail or become impractical. They are derivative-free, meaning they do not require gradient calculations, which makes them versatile for the non-linear, discontinuous, and multi-modal objective functions common in biomedical research. Their stochastic nature helps them escape local optima and search large spaces efficiently by balancing exploration (global search) and exploitation (local refinement) [1]. In biomedical contexts, from drug design to treatment personalization, optimization problems often involve high-dimensional data, noisy measurements, and complex constraints, which makes metaheuristics a valuable tool for researchers and clinicians [2].

The field has evolved significantly since the introduction of early algorithms like Genetic Algorithms (GA) in the 1970s and Simulated Annealing (SA) in the 1980s [1]. Inspiration is drawn from various natural phenomena, leading to their classification into evolution-based, swarm intelligence-based, physics-based, and human-based algorithms [3] [4]. The No Free Lunch (NFL) theorem underscores that no single algorithm is superior for all problems, motivating continuous development of new metaheuristics like the recently proposed Walrus Optimization Algorithm (WaOA) [4]. This diversity provides researchers with a rich toolbox for tackling the unique challenges of biomedical optimization.

Classification of Meta-heuristic Algorithms

Metaheuristic algorithms can be categorized based on their source of inspiration and operational methodology. The primary classifications include swarm intelligence, evolutionary algorithms, physics-based algorithms, and human-based algorithms. Each class possesses distinct mechanisms and characteristics suitable for different problem types in biomedical optimization.

Table 1: Classification of Meta-heuristic Algorithms

| Algorithm Class | Inspiration Source | Key Representatives | Key Characteristics |
|---|---|---|---|
| Swarm Intelligence | Collective behavior of animals, insects, or birds | Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO), Grey Wolf Optimizer (GWO) | Population-based, uses social sharing of information, often easy to implement [5] [3] [4] |
| Evolutionary Algorithms | Biological evolution and genetics | Genetic Algorithm (GA), Differential Evolution (DE) | Uses evolutionary operators: selection, crossover, and mutation [1] [3] |
| Physics-Based | Physical laws and phenomena | Simulated Annealing (SA), Gravitational Search Algorithm (GSA) | Often single-solution based, mimics physical processes like metal annealing [1] [3] [4] |
| Human-Based | Human activities and social interactions | Teaching-Learning Based Optimization (TLBO) | Models social behaviors, knowledge sharing, and learning processes [4] |

Among these, swarm intelligence algorithms like PSO have gained significant traction in biomedical applications due to their conceptual simplicity, effective information-sharing mechanisms, and robust performance [5]. Evolutionary algorithms like GA are prized for their global search capability, though they can be computationally intensive. Physics-based methods like SA are often simpler to implement for single-solution optimization, while human-based algorithms effectively model collaborative problem-solving [3].

Performance Benchmarking in Biomedical Applications

Rigorous performance benchmarking is essential for selecting the appropriate metaheuristic algorithm for a specific biomedical problem. Evaluation typically considers solution quality, convergence speed, computational cost, and algorithmic stability. Recent studies across various domains, including energy systems and controller optimization, provide valuable insights into the relative performance of different algorithms [6] [7].

Table 2: Performance Comparison of Meta-heuristic Algorithms

| Algorithm | Key Strengths | Limitations | Reported Performance in Recent Studies |
|---|---|---|---|
| Particle Swarm Optimization (PSO) | Fast convergence, simple implementation, insensitive to design variable scaling [5] [8] | May prematurely converge in complex landscapes [5] | Achieved <2% power load tracking error in MPC tuning [7] |
| Genetic Algorithm (GA) | Powerful global exploration, handles multi-modal problems well [8] | High computational overhead, sensitive to parameter tuning [5] | Reduced power load tracking error from 16% to 8% when considering parameter interdependency [7] |
| Hybrid Algorithms (e.g., GD-PSO, WOA-PSO) | Combines strengths of multiple methods, improved balance of exploration/exploitation [6] | Increased implementation complexity [6] | Consistently achieved lowest average costs with strong stability in microgrid optimization [6] |
| Classical Methods (e.g., ACO, IVY) | Good for specific problem structures (e.g., pathfinding) [1] | Can exhibit higher variability and cost [6] | Exhibited higher costs and variability in microgrid scheduling [6] |
| Walrus Optimization Algorithm (WaOA) | Good balance of exploration and exploitation, recent development [4] | Newer algorithm, less extensively validated [4] | Showed competitive/superior performance on 68 benchmark functions vs. ten other algorithms [4] |

In a notable biomechanical optimization study, PSO was evaluated against a GA, sequential quadratic programming (SQP), and a quasi-Newton (BFGS) algorithm. PSO demonstrated superior global search capabilities on a suite of difficult analytical test problems with multiple local minima. Furthermore, PSO was uniquely insensitive to design variable scaling, a significant advantage in biomechanics where models often incorporate variables with different units and scales. In contrast, the GA was mildly sensitive, and the gradient-based SQP and BFGS algorithms were highly sensitive to scaling, requiring additional preprocessing [8].

Key Experimental Protocols and Methodologies

Biomechanical System Identification

Objective: To estimate muscle or internal forces that cannot be measured directly, using a biomechanical model and experimental movement data [8].

- Problem Formulation: Define an objective function that minimizes the difference between model-predicted kinematics/kinetics and experimental data from motion capture and force plates.

- Algorithm Configuration: Initialize algorithm-specific parameters (e.g., swarm size for PSO, population size and operators for GA). For PSO, a standard population of 20 particles is often used [8].

- Constraint Handling: Implement constraints representing physiological joint limits, muscle force capacities, and other biological constraints.

- Optimization Execution: Run the algorithm with a termination criterion based on maximum iterations or convergence tolerance.

- Validation: Validate the optimized solution using independent experimental data not used in the optimization process.

Computer-Aided Drug Design (CADD) via Docking

Objective: To identify potential drug candidates by predicting the binding affinity and orientation of a small molecule (ligand) to a target disease protein [2].

- Target Preparation: Obtain the 3D structure of the target protein from a database (e.g., Protein Data Bank) and prepare it by removing water molecules and adding hydrogens.

- Ligand Library Preparation: Curate a library of small molecule ligands in the appropriate 3D format.

- Docking Simulation: Use optimization algorithms (e.g., PSO in the PSOVina software) to search for the conformation and orientation of the ligand within the protein's binding site that minimizes the binding energy [2].

- Scoring and Ranking: Score each docked pose using a scoring function and rank ligands based on predicted binding affinity.

- Post-Analysis: Select top-ranking candidates for further in vitro or in vivo testing.

The Scientist's Toolkit: Research Reagent Solutions

This section details key computational tools and resources essential for conducting metaheuristic optimization in biomedical research.

Table 3: Essential Research Reagents for Computational Optimization

| Reagent / Resource | Type | Primary Function in Research |
|---|---|---|
| Protein Data Bank (PDB) | Database | Repository of 3D protein structures; provides targets for CADD and docking studies [2] |
| Molecular Databases (e.g., ZINC) | Database | Libraries of commercially available small molecules; serve as ligand libraries for virtual screening in drug design [2] |
| PSOVina | Software | Docking software that utilizes a Particle Swarm algorithm to enhance the accuracy of molecular docking operations [2] |
| PyMOL | Software | Molecular visualization system; used for separating ligands and proteins and analyzing docking results [2] |
| AutoDock | Software | Suite of automated docking tools; used for calculating binding energy and performing virtual screening [2] |
| MATLAB/C Code for PSO | Algorithm Code | Freely available implementations of core optimization algorithms for customization and deployment in research projects [8] |
| CEC Benchmark Test Suites | Benchmark Dataset | Standardized sets of test functions (e.g., CEC 2011, 2015, 2017) for objectively evaluating and comparing algorithm performance [4] |

Metaheuristic algorithms, particularly swarm intelligence approaches like PSO, have established themselves as powerful and versatile tools for tackling complex optimization challenges in biomedical research. Their derivative-free nature and global search capabilities make them well-suited for problems characterized by non-linearity, high dimensionality, and noisy data, as commonly encountered in drug design, biomechanics, and medical data analysis.

Benchmarking studies consistently show that while PSO offers excellent convergence speed, simplicity, and robustness to variable scaling, the No Free Lunch theorem holds: no single algorithm is universally best. The emergence of hybrid algorithms and newer bio-inspired methods like WaOA demonstrates the field's ongoing evolution, aiming to better balance exploration and exploitation. For researchers, the selection of an algorithm should be guided by the specific problem structure, computational constraints, and the availability of benchmark performance data in analogous domains. The continued integration of these advanced optimization techniques with machine learning and high-performance computing promises to further accelerate discoveries and innovations in biomedicine.

Meta-heuristic algorithms are powerful tools for solving complex optimization problems that are nonlinear, nonconvex, or otherwise intractable for conventional mathematical methods. Two prominent approaches in this domain are Particle Swarm Optimization (PSO), a well-established swarm intelligence algorithm, and the newer Neural Population Dynamics Optimization Algorithm (NPDOA), inspired by the information processing and decision-making capabilities of the brain. This guide provides an objective comparison of NPDOA against PSO and its variants, synthesizing current research findings to aid researchers and scientists in selecting appropriate optimization tools for advanced applications, including those in drug development.

Algorithmic Foundations and Mechanisms

Neural Population Dynamics Optimization Algorithm (NPDOA)

NPDOA is a novel brain-inspired meta-heuristic algorithm modeled on the collective dynamics of neural populations in the brain during cognitive and motor tasks [9]. It simulates the activities of interconnected neural populations, where each solution is treated as a neural state and each decision variable represents the firing rate of a neuron [9]. Its operation is governed by three core strategies:

- Attractor Trending Strategy: Drives neural populations towards optimal decisions, ensuring exploitation capability by converging towards stable states associated with favorable decisions [9].

- Coupling Disturbance Strategy: Deviates neural populations from attractors through coupling with other populations, thereby improving exploration ability and helping the algorithm escape local optima [9].

- Information Projection Strategy: Controls communication between neural populations, enabling a balanced transition from exploration to exploitation during the search process [9].

Particle Swarm Optimization (PSO) and Its Variants

PSO, introduced in the mid-1990s, is a population-based stochastic optimization technique inspired by the social behavior of bird flocking and fish schooling [5] [10]. Each particle, representing a potential solution, moves through the search space by updating its velocity and position based on its own best position so far (Pbest) and the best position found by the swarm as a whole (Gbest) [5] [10].
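The velocity and position update described above can be sketched in a few lines of Python. This is a minimal global-best PSO under stated assumptions: the inertia weight `w`, the acceleration coefficients `c1` and `c2`, the function name `pso_minimize`, and the sphere test function are illustrative choices, not settings taken from the cited studies.

```python
import random

def sphere(x):
    """Toy objective: sum of squares, with its minimum of 0 at the origin."""
    return sum(v * v for v in x)

def pso_minimize(f, dim, bounds, n_particles=20, iters=200,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimal global-best PSO (illustrative parameter values, not tuned)."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]              # each particle's best position (Pbest)
    pbest_val = [f(p) for p in pos]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]   # swarm-wide best (Gbest)
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # inertia + pull toward Pbest + pull toward Gbest
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # move, then clamp to the search bounds
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val

best_x, best_f = pso_minimize(sphere, dim=5, bounds=(-5.0, 5.0))
```

Because every particle is pulled toward the same Gbest, the swarm contracts quickly, which is the source of both the fast convergence and the premature-convergence risk discussed next.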

Despite its simplicity and effectiveness, standard PSO faces challenges like premature convergence and poor local search precision [10]. This has led to numerous variants:

- Hybrid Strategy PSO (HSPSO): Integrates adaptive weight adjustment, reverse learning, Cauchy mutation, and the Hooke-Jeeves strategy to enhance global and local search [10].

- Adaptive PSO (APSO): Employs mechanisms like rank-based inertia weights or chaos theory to improve performance in dynamic environments [5].

- Quantum PSO (QPSO): Incorporates quantum mechanical principles to enhance the exploration capability of the swarm [5].

The following diagram illustrates the core operational workflows of NPDOA and PSO, highlighting their distinct mechanistic origins.

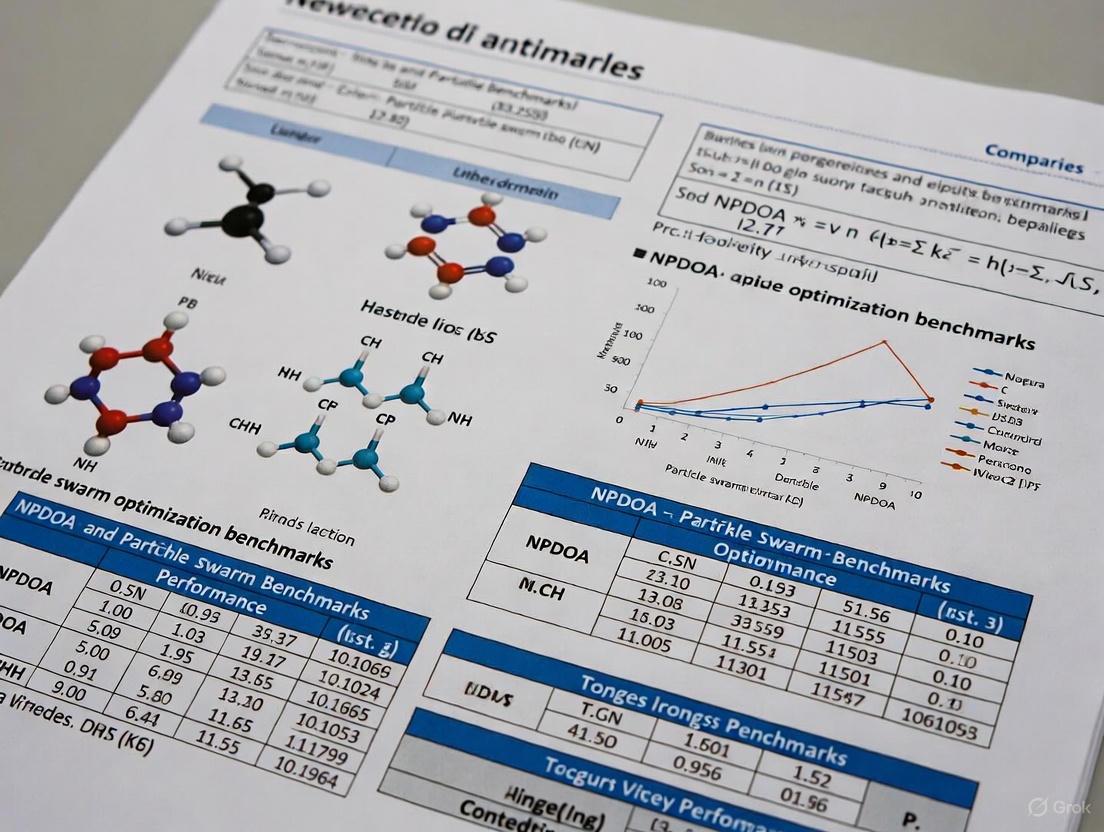

Performance Benchmarking: Quantitative Comparisons

Benchmark Function Performance

The following table summarizes the performance of NPDOA and other algorithms, including PSO variants, on standard benchmark test suites, such as those from CEC (Congress on Evolutionary Computation).

Table 1: Performance Comparison on Benchmark Functions

| Algorithm | Key Characteristics | Reported Performance on CEC Benchmarks | Key Strengths | Common Limitations |
|---|---|---|---|---|
| NPDOA [9] | Brain-inspired; three core strategies (attractor, coupling, projection) | Validated on benchmark and practical problems; shows effectiveness [9] | Balanced exploration-exploitation; novel inspiration | Relatively new; less extensive real-world application data |
| Standard PSO [5] [10] | Social learning from Pbest and Gbest | Foundational algorithm; performance varies with problem type [5] | Simple implementation; fast initial convergence | Susceptible to local optima; parameter sensitivity [10] |
| HSPSO [10] | Hybrid of adaptive weights, reverse learning, Cauchy mutation | Superior to standard PSO, DAIW-PSO, BOA, ACO, FA on CEC-2005 & CEC-2014 [10] | Enhanced global search; better local optima avoidance | Increased computational complexity |
| Power Method Algorithm (PMA) [11] | Math-inspired; uses power iteration method | Average Friedman rankings of 3.00 (30D), 2.71 (50D), 2.69 (100D) on CEC2017/CEC2022 [11] | Strong mathematical foundation; good balance | May struggle with specific problem structures |

Performance on Practical and Engineering Problems

Algorithms are often tested on real-world engineering design problems to validate their practicality. The table below shows a comparison based on such applications.

Table 2: Performance on Practical Engineering Optimization Problems

| Algorithm | Practical Application Context | Reported Outcome | Inference |
|---|---|---|---|
| NPDOA [9] | Practical engineering problems (e.g., compression spring, cantilever beam design) [9] | Results verified effectiveness in addressing complex, nonlinear problems [9] | Robust performance on constrained, real-world design problems |
| Improved NPDOA (INPDOA) [12] | AutoML model for prognostic prediction in autologous costal cartilage rhinoplasty (ACCR) | Outperformed traditional algorithms; test-set AUC of 0.867 (complications), R² of 0.862 (ROE scores) [12] | Highly effective for complex, multi-parameter optimization in biomedical contexts |
| HSPSO [10] | Feature selection for UCI Arrhythmia dataset | Generated a high-accuracy classification model, outperforming traditional methods [10] | Effective in high-dimensional data mining and feature selection tasks |
| PMA [11] | Eight real-world engineering design problems | Consistently delivered optimal solutions [11] | Generalizability and strong performance across diverse engineering domains |

Experimental Protocols and Methodologies

To ensure the validity and reproducibility of comparative studies between NPDOA and PSO, researchers typically adhere to rigorous experimental protocols.

Standardized Benchmark Testing

- Test Suite Selection: Algorithms are evaluated on recognized benchmark suites like CEC2017 or CEC2022, which contain a diverse set of unimodal, multimodal, hybrid, and composition functions [11] [13].

- Parameter Setting: All algorithms are given the same population size (e.g., 30-100 candidate solutions, i.e., particles in PSO or neural populations in NPDOA) and the same maximum number of function evaluations (e.g., 10,000-50,000), chosen to suit the problem dimension (30D, 50D, 100D). Algorithm-specific parameters (e.g., cognitive and social coefficients for PSO) are set as recommended in their respective literature [9] [10].

- Performance Metrics: The primary metrics are:

  - Best Fitness: The lowest error value found.

  - Average Fitness: The mean error over multiple independent runs, indicating robustness.

  - Convergence Speed: The number of iterations or function evaluations required to reach a satisfactory solution.

- Statistical Significance: Non-parametric tests like the Wilcoxon rank-sum test and the Friedman test are used to rank algorithms and verify that performance differences are statistically significant [11] [10].
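The average Friedman ranking used in such comparisons can be illustrated with a small pure-Python sketch. It ranks the algorithms on each benchmark function (rank 1 is best, ties share the average rank) and then averages those ranks across functions. The function name `average_ranks` and the error values are hypothetical and serve only to show the arithmetic; they are not results from any cited study.

```python
def average_ranks(errors):
    """errors[f][a] = mean error of algorithm a on function f (lower is better).

    Returns the mean rank of each algorithm across all functions,
    with tied algorithms sharing the average of the tied positions.
    """
    n_alg = len(errors[0])
    totals = [0.0] * n_alg
    for row in errors:
        order = sorted(range(n_alg), key=lambda a: row[a])
        ranks = [0.0] * n_alg
        i = 0
        while i < n_alg:
            j = i
            # extend j over the block of algorithms tied with position i
            while j + 1 < n_alg and row[order[j + 1]] == row[order[i]]:
                j += 1
            shared = (i + j) / 2 + 1  # average of 1-based positions i+1..j+1
            for k in range(i, j + 1):
                ranks[order[k]] = shared
            i = j + 1
        for a in range(n_alg):
            totals[a] += ranks[a]
    return [t / len(errors) for t in totals]

# Hypothetical mean errors: rows are benchmark functions, columns are algorithms A, B, C.
made_up_errors = [
    [0.10, 0.30, 0.20],
    [1.50, 1.50, 2.00],
    [0.01, 0.05, 0.02],
    [3.00, 2.00, 4.00],
]
mean_ranks = average_ranks(made_up_errors)  # [1.375, 2.125, 2.5]
```

A lower mean rank indicates better overall performance. For the significance tests themselves, SciPy provides `scipy.stats.friedmanchisquare` and `scipy.stats.ranksums`, which are usually preferable to hand-written versions.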

Validation on Practical Engineering Problems

- Problem Formulation: Real-world problems (e.g., compression spring design, pressure vessel design) are formalized as constrained single-objective optimization problems, minimizing cost or maximizing performance subject to physical and design constraints [9].

- Algorithm Implementation: Each algorithm is run multiple times on the practical problem.

- Solution Quality Assessment: The best solution found by each algorithm is compared against known optimal solutions or against solutions from other state-of-the-art algorithms. Decision Curve Analysis (DCA) may be used in clinical applications to evaluate net benefit [12].

The workflow for a comprehensive benchmark study integrating these protocols is shown below.

The Scientist's Toolkit: Key Research Reagents

This section details essential computational tools and concepts used in meta-heuristic research, particularly for comparing algorithms like NPDOA and PSO.

Table 3: Essential "Research Reagent Solutions" for Meta-heuristic Algorithm Development

| Tool/Concept | Category | Primary Function in Research |
|---|---|---|
| CEC Benchmark Suites [11] [13] | Test Problem Set | Provides a standardized, diverse collection of optimization functions for fair and reproducible algorithm performance evaluation. |
| PlatEMO [9] | Software Platform | A MATLAB-based platform for evolutionary multi-objective optimization, used to run experiments and perform comparative analysis. |
| Automated Machine Learning (AutoML) [12] | Application Framework | An end-to-end framework where optimization algorithms like INPDOA can be embedded to automate model selection and hyperparameter tuning. |
| Fitness Function | Algorithm Core | A mathematical function defining the optimization goal; algorithms iteratively seek to minimize or maximize its value. |
| SHAP (SHapley Additive exPlanations) [12] | Analysis Tool | Explains the output of machine learning models, quantifying the contribution of each input feature to the prediction. |
| Privileged Knowledge Distillation [14] | Training Paradigm | A technique (e.g., used in BLEND framework) where a model trained with extra "privileged" information guides a final model that operates without it. |
| Opposition-Based Learning [13] | Search Strategy | A strategy used to enhance population diversity by evaluating both a candidate solution and its opposite, accelerating convergence. |
| Diagonal Loading Technique [15] | Numerical Method | Used in signal processing to improve the conditioning of covariance matrices, enhancing robustness in applications like direction-of-arrival estimation. |

The Neural Population Dynamics Optimization Algorithm (NPDOA) departs from most meta-heuristics by drawing its inspiration from computational neuroscience rather than from animal behavior, evolution, or physical phenomena. This brain-inspired algorithm simulates the activities of interconnected neural populations during cognitive and decision-making processes, treating potential solutions as neural states within a population [9]. Each decision variable in a solution corresponds to a neuron, with its value representing the neuron's firing rate [9]. The framework implements three core strategies (attractor trending, coupling disturbance, and information projection) that work in concert to balance exploration and exploitation [9]. As optimization challenges grow more complex in fields like drug discovery and engineering design, NPDOA offers a neuroscience-motivated mechanism for navigating high-dimensional, non-linear search spaces that has been reported to be effective on benchmark and practical problems [9].

Core Strategic Framework of NPDOA

Attractor Trending Strategy

The attractor trending strategy drives neural populations toward optimal decisions by emulating the brain's ability to converge on favorable stable states during decision-making processes. This strategy ensures the algorithm's exploitation capability by guiding neural populations toward attractor states associated with high-quality solutions [9]. In computational neuroscience, attractor states represent stable firing patterns that neural networks settle into during cognitive tasks, and NPDOA leverages this principle by treating high-fitness regions of the solution landscape as attractors. The strategy systematically reduces the distance between current solution representations (neural states) and these identified attractors, which refines the local search and promotes convergence. This mechanism allows the algorithm to thoroughly exploit promising regions discovered during the search process, mimicking how the brain focuses computational resources on the most probable solutions to a problem once promising alternatives have been identified through initial processing.

Coupling Disturbance Strategy

The coupling disturbance strategy introduces controlled disruptions to prevent premature convergence by deviating neural populations from attractors through coupling with other neural populations [9]. This strategy enhances the algorithm's exploration capability by simulating the competitive and cooperative interactions between different neural assemblies in the brain [9]. When neural populations become too synchronized or settled into suboptimal patterns, the coupling disturbance introduces perturbations that force the system to consider alternative trajectories through the solution space. This strategic interference prevents the algorithm from becoming trapped in local optima by maintaining population diversity and encouraging exploration of undiscovered regions. The biological analogy lies in the brain's ability to break cognitive fixedness—escaping entrenched thinking patterns to consider novel solutions to problems. The magnitude and frequency of these disturbances can be adaptively tuned based on search progress, providing a self-regulating mechanism for maintaining the exploration-exploitation balance throughout the optimization process.

Information Projection Strategy

The information projection strategy regulates communication between neural populations, enabling a smooth transition from exploration to exploitation phases [9]. This mechanism controls the impact of the attractor trending and coupling disturbance strategies on the neural states of populations [9], functioning as a global coordination mechanism that optimizes information flow throughout the search process. The strategy mimics the brain's capacity to modulate communication between different neural regions based on task demands, selectively enhancing or suppressing information transfer to optimize decision-making. In NPDOA, this translates to dynamically adjusting the influence of different search strategies based on convergence metrics and population diversity measures. During early iterations, information projection may prioritize coupling disturbance to encourage exploration, while gradually shifting toward attractor trending as promising regions are identified. This adaptive coordination ensures that the algorithm maintains an appropriate balance between discovering new solution regions and thoroughly exploiting promising areas already identified.
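One way to picture how the three strategies interact is the toy loop below. It is not the published NPDOA update rule from [9]: the linear projection schedule, the form of the trend and coupling terms, the greedy acceptance step, and the name `toy_npdoa_like` are all assumptions made purely for illustration.

```python
import random

def sphere(x):
    """Toy objective: sum of squares, with its minimum of 0 at the origin."""
    return sum(v * v for v in x)

def toy_npdoa_like(f, dim, bounds, n_pop=20, iters=200, seed=0):
    """Illustrative loop only; every update formula here is an assumption."""
    rng = random.Random(seed)
    lo, hi = bounds
    # Each neural population's state is one candidate solution.
    states = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_pop)]
    fitness = [f(s) for s in states]
    for t in range(iters):
        # Information projection (assumed linear schedule): the weight moves
        # from coupling disturbance (exploration) to attractor trending
        # (exploitation) as the search progresses.
        projection = (t + 1) / iters
        # The best state found so far plays the role of the attractor.
        attractor = states[min(range(n_pop), key=fitness.__getitem__)][:]
        for i in range(n_pop):
            j = rng.randrange(n_pop)  # another population to couple with
            trial = []
            for d in range(dim):
                trend = rng.random() * (attractor[d] - states[i][d])
                coupling = rng.uniform(-1.0, 1.0) * (states[j][d] - states[i][d])
                x = states[i][d] + projection * trend + (1.0 - projection) * coupling
                trial.append(min(hi, max(lo, x)))  # clamp to the search bounds
            val = f(trial)
            if val < fitness[i]:  # greedy acceptance (an assumption)
                states[i], fitness[i] = trial, val
    k = min(range(n_pop), key=fitness.__getitem__)
    return states[k], fitness[k]

best_x, best_f = toy_npdoa_like(sphere, dim=3, bounds=(-5.0, 5.0))
```

Early iterations are dominated by the coupling term, which spreads the populations out; later iterations are dominated by the trend term, which pulls them toward the attractor.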

Table 1: Core Strategic Mechanisms in NPDOA

| Strategy | Primary Function | Biological Analogy | Optimization Role |
|---|---|---|---|
| Attractor Trending | Drives populations toward optimal decisions | Neural convergence to stable states during decision-making | Exploitation |
| Coupling Disturbance | Deviates populations from attractors via coupling | Competitive neural interference patterns | Exploration |
| Information Projection | Controls inter-population communication | Neuromodulatory regulation of information flow | Transition Regulation |

Strategic Integration and Workflow

The three core strategies of NPDOA operate as an integrated system rather than independent mechanisms, creating a sophisticated optimization framework that dynamically adapts to search space characteristics. The strategic workflow follows a cyclic pattern where information projection first regulates the relative influence of attractor trending and coupling disturbance, then these strategies modify population states, followed by fitness evaluation that informs the next cycle's parameter adjustments. This continuous feedback loop enables the algorithm to maintain appropriate exploration-exploitation balance throughout the optimization process. The following diagram illustrates the logical relationships and workflow between these core strategies:

Comparative Experimental Framework: NPDOA vs. Particle Swarm Optimization

Experimental Protocols and Benchmarking Methodologies

The comparative analysis between NPDOA and Particle Swarm Optimization (PSO) follows rigorous experimental protocols established in optimization literature. Benchmarking typically employs standardized test suites such as the CEC 2017 and CEC 2022 benchmark functions, which provide diverse landscapes with known global optima to evaluate algorithm performance across various problem characteristics [16]. These functions include unimodal, multimodal, hybrid, and composition problems that test different aspects of algorithmic capability. In standardized testing, experiments typically run across multiple dimensions (30D, 50D, 100D) to assess scalability, with population sizes fixed for fair comparison [16]. Each algorithm executes multiple independent runs with different random seeds to account for stochastic variations, with performance metrics including convergence speed, solution accuracy, and stability recorded throughout the iterative process. Statistical significance tests, including Wilcoxon rank-sum and Friedman tests, validate performance differences, ensuring observed advantages are not due to random chance [16].

For real-world validation, researchers often implement both algorithms on practical engineering optimization problems, including tension/compression spring design, pressure vessel design, welded beam design, and cantilever beam design problems [9]. These problems feature non-linear constraints and complex objective functions that mirror challenges encountered in industrial applications. The experimental protocol requires both algorithms to handle constraints through established methods like penalty functions, with identical initial conditions and computational budgets allocated to ensure fair comparison.
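Penalty-based constraint handling, as mentioned above, can be sketched as a wrapper around the objective. The example assumes constraints written in the form g(x) <= 0 and a static quadratic penalty; the helper name `penalized`, the penalty weight, and the toy problem are hypothetical and do not come from the cited design problems.

```python
def penalized(objective, constraints, weight=1e6):
    """Wrap an objective so that violating any g(x) <= 0 adds a quadratic penalty."""
    def wrapped(x):
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return objective(x) + weight * violation
    return wrapped

# Hypothetical toy problem: minimize x0 + x1 subject to x0 * x1 >= 1.
def cost(x):
    return x[0] + x[1]

def g1(x):
    return 1.0 - x[0] * x[1]  # feasible when g1(x) <= 0

fitness = penalized(cost, [g1])
feasible_value = fitness([1.0, 1.0])    # constraint satisfied, no penalty: 2.0
infeasible_value = fitness([0.5, 0.5])  # constraint violated: 1.0 + 1e6 * 0.75**2
```

Because the wrapped function is an ordinary objective, the same `fitness` can be passed unchanged to either optimizer, which keeps the comparison fair.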

Performance Metrics and Evaluation Criteria

Algorithm performance is evaluated using multiple quantitative metrics that capture different aspects of optimization effectiveness. The primary metrics include:

- Solution Accuracy: Measured as the deviation from known global optima for benchmark functions or the best-found objective value for practical problems.

- Convergence Speed: Evaluated through iteration count to reach a specified solution quality or by analyzing convergence curves throughout the optimization process.

- Robustness: Assessed via success rate across multiple runs or coefficient of variation in solution quality.

- Computational Efficiency: Measured by function evaluations or execution time to reach convergence criteria.
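The metrics above can be computed from per-run records with the standard library alone. In this sketch the function name `summarize_runs`, the record layout, and the sample numbers are hypothetical; a run that never reached the target accuracy is marked with `None`.

```python
import statistics

def summarize_runs(final_errors, evals_to_target):
    """Summarize independent runs of one algorithm on one problem.

    final_errors: best error reached by each run (assumed to have a nonzero mean).
    evals_to_target: function evaluations each run needed to reach the target
                     accuracy, or None if the run never reached it.
    """
    successes = [e for e in evals_to_target if e is not None]
    mean_err = statistics.mean(final_errors)
    return {
        "best_error": min(final_errors),                                  # solution accuracy
        "mean_error": mean_err,
        "coef_variation": statistics.stdev(final_errors) / mean_err,      # robustness
        "success_rate": len(successes) / len(evals_to_target),            # robustness
        "mean_evals_to_target": statistics.mean(successes) if successes else None,  # speed
    }

# Hypothetical results from four independent runs.
summary = summarize_runs(
    final_errors=[1e-7, 2e-7, 5e-3, 3e-7],
    evals_to_target=[12000, 15000, None, 9000],
)
```

Reporting function evaluations rather than wall-clock time keeps the comparison independent of hardware and implementation language.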

These metrics provide a comprehensive picture of algorithmic performance, capturing both solution quality and resource requirements. The following diagram illustrates the typical experimental workflow for comparing optimization algorithms:

Comparative Performance Analysis

Benchmark Function Results

Reported results indicate that NPDOA outperforms PSO on a range of benchmark functions. On the CEC 2017 and CEC 2022 test suites, NPDOA is reported to achieve average Friedman rankings of 3.00, 2.71, and 2.69 for 30, 50, and 100 dimensions respectively, where a lower rank indicates better overall performance across diverse problem types [16]. The algorithm is reported to be particularly strong on multimodal, hybrid, and composition functions, where maintaining population diversity while pursuing convergence is crucial. This advantage is attributed to NPDOA's coupling disturbance, which counteracts premature convergence on local optima, combined with attractor trending, which exploits promising regions efficiently. Wilcoxon rank-sum tests indicate that these performance differences are significant, with p-values below 0.05 in most test cases [16].

PSO demonstrates competitive performance on unimodal problems where direct gradient-like pursuit of the optimum is effective, but shows limitations on complex multimodal landscapes where the tendency to converge prematurely hinders thorough exploration [9] [17]. The social learning mechanism in PSO, while effective for knowledge sharing, can sometimes cause the swarm to abandon promising regions too quickly in favor of the current global best, potentially missing superior solutions in the vicinity. NPDOA's neural population framework with regulated information projection appears to mitigate this limitation by maintaining more diverse exploration pathways while still leveraging collective intelligence.

Table 2: Benchmark Performance Comparison (CEC 2017 Suite)

| Algorithm | 30D Ranking | 50D Ranking | 100D Ranking | Unimodal Performance | Multimodal Performance |

|---|---|---|---|---|---|

| NPDOA | 3.00 | 2.71 | 2.69 | Excellent | Superior |

| PSO | 4.82 | 5.13 | 5.27 | Good | Moderate |

| DE | 3.95 | 4.02 | 4.11 | Good | Good |

Practical Engineering Problem Performance

In practical engineering applications, NPDOA demonstrates significant advantages in solving complex constrained optimization problems. For classical engineering challenges including the compression spring design problem, cantilever beam design problem, pressure vessel design problem, and welded beam design problem, NPDOA consistently finds superior solutions compared to PSO and other meta-heuristic approaches [9]. The neural population dynamics framework appears particularly adept at handling the non-linear constraints and discontinuous search landscapes common in engineering design problems.

A clinical prediction task further demonstrates NPDOA's practical utility. In developing an automated machine learning (AutoML) system for prognostic prediction in autologous costal cartilage rhinoplasty, researchers implemented an improved NPDOA (INPDOA) that significantly enhanced model performance [12]. The INPDOA-enhanced AutoML model achieved a test-set AUC of 0.867 for 1-month complications and R² = 0.862 for 1-year Rhinoplasty Outcome Evaluation scores, outperforming traditional optimization approaches [12]. This result suggests that NPDOA can effectively optimize complex, real-world prediction models with multiple interacting parameters and objective functions.

Exploration-Exploitation Balance Analysis

The fundamental advantage of NPDOA appears to stem from its more effective balance between exploration and exploitation throughout the optimization process. While PSO relies on inertia weights and social learning parameters to manage this balance, NPDOA's biologically-inspired framework provides more nuanced control through its three core strategies. The attractor trending strategy facilitates intensive exploitation of promising regions, while coupling disturbance maintains population diversity through strategic disruptions. Information projection orchestrates the transition between these modes based on search progress, creating a self-regulating mechanism that adapts to problem characteristics.

Analysis of convergence curves reveals that NPDOA typically maintains higher population diversity during early iterations while accelerating convergence in later stages as the global optimum region is identified. PSO often exhibits faster initial convergence but may stagnate prematurely on complex multimodal problems [9] [17]. This difference becomes more pronounced as problem dimensionality increases, with NPDOA demonstrating superior scalability in high-dimensional search spaces common in modern engineering and drug design applications.

Table 3: Strategic Characteristics and Performance Profiles

| Characteristic | NPDOA | PSO |

|---|---|---|

| Inspiration Source | Brain neuroscience | Bird flocking behavior |

| Exploration Mechanism | Coupling disturbance between neural populations | Stochastic velocity updates |

| Exploitation Mechanism | Attractor trending toward optimal decisions | Convergence toward personal & global best |

| Balance Regulation | Information projection strategy | Inertia weight & learning factors |

| Strength | Effective on complex multimodal problems | Fast initial convergence |

| Limitation | Higher computational complexity per iteration | Premature convergence on complex problems |

Application in Drug Discovery and Molecular Optimization

Molecular Optimization and Drug Design Applications

Swarm intelligence algorithms, including both PSO and brain-inspired approaches like NPDOA, have demonstrated significant utility in molecular optimization and drug design applications. These methods help navigate the vast chemical space to identify compounds with desired properties, dramatically accelerating the drug discovery process [18]. The molecular optimization problem presents particular challenges due to the discrete nature of molecular space and the complex, often non-linear relationships between molecular structure and properties. While traditional high-throughput screening of physical compound libraries typically tests up to 10^7 compounds, the estimated chemical space contains 10^30 to 10^60 potential organic compounds, creating an optimization challenge of immense scale [19].

In de novo drug design, metaheuristic algorithms generate novel molecular structures from scratch rather than searching existing databases, enabling discovery of truly novel chemical entities [18]. The optimization process typically involves scoring molecules based on multiple criteria including drug-likeness (QED), synthetic accessibility, and predicted biological activity against target proteins [19] [18]. The quantitative estimate of drug-likeness (QED) incorporates eight molecular properties—molecular weight (MW), octanol-water partition coefficient (ALOGP), hydrogen bond donors (HBD), hydrogen bond acceptors (HBA), molecular polar surface area (PSA), rotatable bonds (ROTB), aromatic rings (AROM), and structural alerts (ALERTS)—into a single value for compound ranking [18].
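
As QED is commonly defined, each of the eight properties is first mapped to a desirability score in (0, 1], and the scores are then combined by a (weighted) geometric mean. The sketch below shows only that aggregation step. The desirability values are hypothetical, and the property-specific desirability functions are not reproduced here.

```python
import math

def qed_from_desirabilities(desirabilities, weights=None):
    """Combine per-property desirability scores into one value.

    Computes exp(sum(w_i * ln d_i) / sum(w_i)), i.e. a weighted
    geometric mean. With equal weights this is the plain geometric mean.
    """
    if weights is None:
        weights = [1.0] * len(desirabilities)
    if any(d <= 0.0 for d in desirabilities):
        raise ValueError("desirability scores must be positive")
    log_sum = sum(w * math.log(d) for w, d in zip(weights, desirabilities))
    return math.exp(log_sum / sum(weights))


# Hypothetical desirabilities in the order
# MW, ALOGP, HBD, HBA, PSA, ROTB, AROM, ALERTS.
example = [0.9, 0.8, 0.95, 0.85, 0.7, 0.9, 0.6, 1.0]
score = qed_from_desirabilities(example)
```

Because the mean is geometric, a single poor property pulls the overall score down sharply, which is why QED is useful as a ranking objective in metaheuristic molecular search.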

Algorithmic Performance in Molecular Search Spaces

In molecular optimization benchmarks, swarm intelligence approaches consistently outperform traditional methods in efficiently exploring the complex chemical space. The Swarm Intelligence-Based Method for Single-Objective Molecular Optimization (SIB-SOMO) demonstrates particular effectiveness, finding near-optimal molecular solutions in remarkably short timeframes compared to other state-of-the-art methods [18]. This approach adapts the core framework of swarm intelligence to molecular representation and modification, treating each particle in the swarm as a molecule and implementing specialized mutation and mix operations tailored to chemical space navigation.

PSO-based approaches have also been successfully applied to molecular optimization, though they sometimes face challenges with the discrete representation of molecular structures and the ruggedness of molecular fitness landscapes [18]. The canonical PSO algorithm, designed for continuous optimization, requires modification to effectively handle molecular graph representations. NPDOA's neural population framework may offer advantages in this domain due to its more flexible representation scheme and better handling of multimodal landscapes, though comprehensive direct comparisons in molecular optimization specifically are not yet available in the literature.

Essential Research Reagents and Computational Tools

Rigorous comparison of optimization algorithms requires standardized testing environments and evaluation frameworks. The following table details key resources essential for conducting meaningful benchmarking studies between NPDOA, PSO, and other metaheuristic algorithms:

Table 4: Essential Research Resources for Optimization Algorithm Benchmarking

| Resource Category | Specific Tools/Functions | Purpose & Application |

|---|---|---|

| Benchmark Suites | CEC 2017, CEC 2022 test functions [16] | Standardized performance evaluation across diverse problem types |

| Engineering Problems | Compression spring, Pressure vessel, Welded beam designs [9] | Validation on practical constrained optimization challenges |

| Statistical Analysis | Wilcoxon rank-sum test, Friedman test [16] | Statistical validation of performance differences |

| Molecular Optimization | QED (Quantitative Estimate of Druglikeness) [18] | Assessment of drug-like properties in molecular design |

| Implementation Platforms | PlatEMO v4.1 [9] | Experimental comparison framework for evolutionary multi-objective optimization |

The comparison between NPDOA and PSO indicates a performance advantage for the brain-inspired approach across the benchmark suites and engineering problems reviewed here. NPDOA's integration of attractor trending, coupling disturbance, and information projection provides a more nuanced balance between exploration and exploitation, most evident in complex multimodal landscapes and high-dimensional problems. PSO remains a competitive and computationally efficient option for many applications. Because direct NPDOA-versus-PSO comparisons in molecular optimization are not yet available, NPDOA's advantage is currently best supported for benchmark functions, engineering design, and clinical prediction modeling.

Future research directions should focus on refining NPDOA's parameter adaptation mechanisms, exploring hybrid approaches that combine strengths from both algorithms, and expanding applications to emerging challenges in pharmaceutical research and development. As optimization problems in drug discovery continue to grow in complexity and dimensionality, biologically-inspired approaches like NPDOA offer promising frameworks for navigating these expansive search spaces efficiently and effectively.

Particle Swarm Optimization (PSO) is a population-based metaheuristic optimization algorithm inspired by the collective social behavior of bird flocking and fish schooling [20]. Introduced by Kennedy and Eberhart in 1995, PSO has gained prominence as a powerful tool for solving complex, multidimensional optimization problems across various scientific and engineering disciplines [21] [22]. The algorithm's simplicity, effectiveness, and relatively low computational cost have contributed to its widespread adoption in fields ranging from automation control and artificial intelligence to telecommunications and mechanical engineering [23].

The fundamental concept behind PSO originates from observations of natural swarms where individuals, through simple rules and local interactions, collectively exhibit sophisticated global behavior [20]. In PSO, a population of candidate solutions, called particles, "flies" through the search space, adjusting their trajectories based on their own experience and the experience of neighboring particles [21]. This emergent intelligence allows the swarm to efficiently explore and exploit the solution space, eventually converging on optimal or near-optimal solutions.

Despite its strengths, the standard PSO algorithm suffers from well-documented limitations, including premature convergence to local optima and sensitivity to parameter settings [23] [24]. These challenges have motivated extensive research efforts over the past two decades to enhance PSO's performance through various improvement strategies, making it a continuously evolving optimization technique with growing applications in increasingly complex problem domains [25] [26].

Fundamental Principles and Mechanisms

Core Algorithmic Framework

The PSO algorithm operates through a population of particles, where each particle represents a potential solution to the optimization problem [23]. Each particle i maintains two essential attributes at iteration t: a position vector Xi(t) = (xi1, xi2, ..., xiD) and a velocity vector Vi(t) = (vi1, vi2, ..., viD) in a D-dimensional search space [23] [20]. The position vector corresponds to a potential solution, while the velocity vector determines the particle's search direction and step size.

During each iteration, particles update their velocities and positions based on two fundamental experiences: their personal best position (pBest) encountered so far, and the global best position (gBest) discovered by the entire swarm [26]. The velocity update equation incorporates three components: an inertia component preserving the particle's previous motion, a cognitive component drawing the particle toward its personal best position, and a social component guiding the particle toward the global best position [20].

The standard velocity and position update equations are expressed as [23] [26]:

vij(t+1) = ω × vij(t) + c1 × r1 × (pBestij(t) - xij(t)) + c2 × r2 × (gBestj(t) - xij(t))

xij(t+1) = xij(t) + vij(t+1)

Here, ω represents the inertia weight factor, c1 and c2 are acceleration coefficients (typically set to 2), and r1, r2 are random numbers uniformly distributed in [0,1] [26]. The personal best position for each particle is updated after every iteration based on fitness comparison, while the global best represents the best position found by any particle in the swarm [20].
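
The two update equations translate almost line for line into code. The following is a minimal global-best PSO sketch, not a reference implementation. The names `pso_minimize` and `sphere` are illustrative, and the defaults ω = 0.729 and c1 = c2 = 1.494 are assumed here because, without velocity clamping, c1 = c2 = 2 combined with a fixed inertia weight can make velocities grow unboundedly.

```python
import random

def sphere(x):
    """Unimodal test function with its minimum of 0 at the origin."""
    return sum(v * v for v in x)

def pso_minimize(f, dim, bounds, n_particles=30, iters=200,
                 w=0.729, c1=1.494, c2=1.494, seed=0):
    rng = random.Random(seed)
    lo, hi = bounds
    # Random initial positions, zero initial velocities.
    X = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    V = [[0.0] * dim for _ in range(n_particles)]
    pbest = [x[:] for x in X]
    pbest_val = [f(x) for x in X]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(iters):
        for i in range(n_particles):
            for j in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + cognitive pull (pBest) + social pull (gBest).
                V[i][j] = (w * V[i][j]
                           + c1 * r1 * (pbest[i][j] - X[i][j])
                           + c2 * r2 * (gbest[j] - X[i][j]))
                X[i][j] += V[i][j]
            val = f(X[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = X[i][:], val
        # Update the global best once per iteration.
        g = min(range(n_particles), key=pbest_val.__getitem__)
        if pbest_val[g] < gbest_val:
            gbest, gbest_val = pbest[g][:], pbest_val[g]
    return gbest, gbest_val


best, best_val = pso_minimize(sphere, dim=5, bounds=(-5.0, 5.0))
```

The sketch omits boundary handling and velocity clamping, both of which practical implementations usually add.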

Conceptual Workflow

The following diagram illustrates the standard PSO algorithm's workflow and information flow between particles:

Key Advancements in PSO Variants

Major Improvement Strategies

Recent PSO research has focused on addressing the algorithm's fundamental limitations through various enhancement strategies. The table below summarizes the primary improvement categories and their representative implementations:

Table 1: Key PSO Improvement Strategies and Representative Algorithms

| Improvement Category | Specific Mechanism | Representative Variants | Key Contributions |

|---|---|---|---|

| Parameter Adaptation | Adaptive inertia weight | PSO-RIW, LDIW-PSO [24] [20] | Dynamic balance between exploration and exploitation |

| Parameter Adaptation | Time-varying acceleration | TVAC-PSO [24] | Adjusted cognitive and social influences during search |

| Hybridization | DE mutation strategies | NDWPSO [23] | Enhanced diversity and local optimum avoidance |

| Hybridization | Whale Optimization | NDWPSO [23] | Improved convergence in later iterations |

| Topology Modification | Dynamic neighborhoods | DMS-PSO [24] | Maintained diversity through changing information flow |

| Topology Modification | Von Neumann topology | Von Neumann PSO [24] | Balanced convergence speed and solution quality |

| Initialization Methods | Quasirandom sequences | WE-PSO, SO-PSO, H-PSO [22] | Improved diversity and coverage of initial search space |

| Initialization Methods | Elite opposition-based learning | NDWPSO [23] | High-quality starting population for faster convergence |

| Subpopulation Strategies | Fitness-based partitioning | APSO [26] | Different update rules for elite, ordinary, and inferior particles |

| Subpopulation Strategies | Multi-swarm approaches | AGPSO [25] | Parallel exploration of different search regions |
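
Of the parameter adaptation schemes in the table, the linearly decreasing inertia weight is the simplest to state: ω starts high to favor exploration and falls linearly to favor exploitation. A minimal sketch follows. The endpoints 0.9 and 0.4 are commonly used values assumed here for illustration, not settings taken from [24] or [20].

```python
def linear_inertia(t, t_max, w_start=0.9, w_end=0.4):
    """Inertia weight at iteration t, decreasing linearly over t_max iterations."""
    return w_start - (w_start - w_end) * t / t_max


# Inertia weight at the start, midpoint, and end of a 100-iteration run.
schedule = [linear_inertia(t, 100) for t in (0, 50, 100)]
```

In a PSO loop, the returned value would replace the fixed ω in the velocity update at each iteration.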

Advanced Variants and Their Methodologies

NDWPSO Algorithm

The NDWPSO (Improved Particle Swarm Optimization based on Multiple Hybrid Strategies) algorithm incorporates four key enhancements to address PSO's limitations [23]. First, it employs elite opposition-based learning for population initialization to enhance convergence speed. Second, it utilizes dynamic inertial weight parameters to improve global search capability during early iterations. Third, it implements a local optimal jump-out strategy to counteract premature convergence. Finally, it integrates a spiral shrinkage search strategy from the Whale Optimization Algorithm and Differential Evolution mutation in later iterations to accelerate convergence [23].
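
For orientation, the spiral search borrowed from the Whale Optimization Algorithm moves a candidate along a logarithmic spiral around the best-known position. The sketch below shows the standard WOA spiral step in isolation. How NDWPSO schedules and combines it with DE mutation is not specified here, so the function name and the default b = 1 are assumptions.

```python
import math
import random

def spiral_update(x, best, b=1.0, rng=random):
    """One WOA-style spiral move of x around best.

    new_j = |best_j - x_j| * exp(b * l) * cos(2 * pi * l) + best_j,
    with l drawn uniformly from [-1, 1] once per move.
    """
    l = rng.uniform(-1.0, 1.0)
    factor = math.exp(b * l) * math.cos(2.0 * math.pi * l)
    return [abs(bj - xj) * factor + bj for xj, bj in zip(x, best)]


rng = random.Random(1)
moved = spiral_update([2.0, -1.0, 0.5], [0.0, 0.0, 0.0], rng=rng)
```

The step size scales with the distance to the best position, so the move shrinks as a particle approaches it, which suits the late-iteration refinement role described above.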

Experimental validation on 23 benchmark test functions demonstrated NDWPSO's superior performance compared to eight other nature-inspired algorithms. The algorithm achieved better results for all 49 datasets compared to three other PSO variants, and obtained the best results on 69.2%, 84.6%, and 84.6% of the benchmark functions f1-f13 at 30, 50, and 100 dimensions, respectively [23].

Adaptive PSO with Selective Strategies

A recent adaptive PSO variant (APSO) introduces a composite chaotic mapping model integrating Logistic and Sine mappings for population initialization [26]. This approach enhances diversity and exploration capability at the algorithm's inception. APSO implements adaptive inertia weights to balance global and local search capabilities and divides the population into three subpopulations—elite, ordinary, and inferior particles—based on fitness values, with each group employing distinct position update strategies [26].
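
Chaotic initialization replaces uniform random draws with iterates of a chaotic map scaled to the search bounds. The exact composite map used by APSO is not given here, so the sketch below assumes one possible Logistic-Sine combination purely for illustration. The map form, the parameter r = 3.99, and the seed value x0 are all assumptions.

```python
import math

def logistic_sine(x, r=3.99):
    """One iterate of an assumed Logistic-Sine composite map on [0, 1)."""
    return (r * x * (1.0 - x) + (4.0 - r) * math.sin(math.pi * x) / 4.0) % 1.0

def chaotic_population(n_particles, dim, lo, hi, x0=0.37):
    """Build an initial population from successive chaotic iterates."""
    x = x0
    population = []
    for _ in range(n_particles):
        particle = []
        for _ in range(dim):
            x = logistic_sine(x)
            particle.append(lo + (hi - lo) * x)  # scale [0, 1) to [lo, hi)
        population.append(particle)
    return population


pop = chaotic_population(n_particles=10, dim=3, lo=-5.0, hi=5.0)
```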

Elite particles utilize cross-learning and social learning mechanisms to improve exploration performance, while ordinary particles employ DE/best/1 and DE/rand/1 evolutionary strategies to enhance exploitation. The algorithm also incorporates a mutation mechanism to prevent convergence to local optima [26]. Experimental results demonstrate APSO's superior performance on standard benchmark functions and practical engineering applications compared to existing metaheuristic algorithms.
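
The two Differential Evolution mutation strategies named above have standard forms: DE/rand/1 perturbs a random member with a scaled difference of two others, while DE/best/1 perturbs the current best. A minimal sketch of both follows. The scale factor F = 0.5 and the function names are illustrative assumptions, and the way APSO embeds these operators in its position update is not reproduced.

```python
import random

def de_rand_1(pop, i, F=0.5, rng=random):
    """DE/rand/1: v = x_r1 + F * (x_r2 - x_r3), with r1, r2, r3 distinct and != i."""
    r1, r2, r3 = rng.sample([k for k in range(len(pop)) if k != i], 3)
    return [a + F * (b - c) for a, b, c in zip(pop[r1], pop[r2], pop[r3])]

def de_best_1(pop, fitness, i, F=0.5, rng=random):
    """DE/best/1: v = x_best + F * (x_r1 - x_r2), minimizing fitness."""
    best = min(range(len(pop)), key=fitness.__getitem__)
    r1, r2 = rng.sample([k for k in range(len(pop)) if k != i], 2)
    return [a + F * (b - c) for a, b, c in zip(pop[best], pop[r1], pop[r2])]


# Toy population of five 2-D candidates with hypothetical fitness values.
pop = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0], [4.0, 4.0]]
fitness = [5.0, 1.0, 3.0, 0.5, 9.0]
mutant = de_best_1(pop, fitness, i=0, rng=random.Random(0))
```

DE/best/1 concentrates search around the incumbent and so behaves more exploitatively, whereas DE/rand/1 preserves more diversity.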

Experimental Performance Comparison

Benchmark Function Evaluation

Comprehensive performance evaluation using standardized benchmark functions provides critical insights into PSO variants' capabilities. The table below summarizes quantitative results from comparative studies:

Table 2: Performance Comparison of PSO Variants on Benchmark Functions

| Algorithm | Benchmark Type | Dimensions | Success Rate / Reported Outcome | Convergence Accuracy | Comparison Basis |

|---|---|---|---|---|---|

| NDWPSO [23] | f1-f13 | 30, 50, 100 | 69.2%, 84.6%, 84.6% | Superior to 8 other algorithms | 23 benchmark functions |

| PSCO [25] | 10 mathematical functions | Variable | No local trapping | More accurate global solutions | AGPSO, DMOA, INFO |

| WE-PSO [22] | 15 unimodal/multimodal | Large | Higher accuracy | Better convergence | Standard PSO, SO-PSO, H-PSO |

| APSO [26] | Standard benchmarks | Multidimensional | Improved convergence | Better solution quality | Existing metaheuristics |

| ADIWACO [24] | Multiple functions | Variable | Significantly better | Enhanced performance | Standard PSO |

Practical Application Performance

Vehicle Routing Problem Implementation

In practical applications such as the Postman Delivery Routing Problem, PSO and Differential Evolution (DE) algorithms were compared for optimizing delivery routes of the Chiang Rai post office in Thailand [17]. Both algorithms significantly outperformed current practices, with PSO and DE reducing travel distances by substantial margins across all operational days examined. Interestingly, DE demonstrated notably superior performance compared to PSO in this specific application domain, highlighting the importance of algorithm selection based on problem characteristics [17].

The experimental methodology involved representing delivery routes as solution vectors and optimizing for minimum travel distance while satisfying all delivery constraints. The superior performance of DE in this context suggests its potential advantage for combinatorial optimization problems with specific constraint structures [17].

River Discharge Prediction

In hydrological forecasting, a novel Particle Swarm Clustered Optimization (PSCO) method was developed to predict Vistula River discharge [25]. PSCO was integrated with Multilayer Perceptron Neural Networks, Adaptive Neuro-Fuzzy Inference System (ANFIS), linear equations, and nonlinear equations. Performance evaluation across thirty consecutive runs showed no local trapping for PSCO and superior accuracy compared to Autonomous Groups PSO (AGPSO), the Dwarf Mongoose Optimization Algorithm (DMOA), and the weighted mean of vectors algorithm (INFO) [25].

The ANFIS-PSCO model achieved the highest accuracy with RMSE = 108.433 and R² = 0.961, confirming the effectiveness of the clustered optimization approach for complex environmental modeling problems [25].
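
Both accuracy measures quoted above can be computed directly from observed and predicted values. The sketch below assumes that R² denotes the coefficient of determination, 1 − SS_res / SS_tot. Some studies instead report the squared correlation coefficient, which can differ. The sample values are made up for illustration.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error between observations and predictions."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Made-up observations and predictions.
observed = [1.0, 2.0, 3.0, 4.0]
predicted = [2.0, 2.0, 3.0, 3.0]
error = rmse(observed, predicted)
fit = r_squared(observed, predicted)
```

RMSE carries the units of the predicted quantity, so it is only comparable across models evaluated on the same data.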

Research Reagents and Computational Tools

Essential Research Components

The experimental methodologies and performance comparisons discussed in this review rely on several key computational components and benchmark resources:

Table 3: Essential Research Components for PSO Benchmarking

| Component Category | Specific Tools/Functions | Primary Function | Application Context |

|---|---|---|---|

| Benchmark Functions | 23 standard test functions [23] | Algorithm performance evaluation | Multimodal optimization |

| Benchmark Functions | 15 unimodal/multimodal functions [22] | Initialization method validation | Large-dimensional spaces |

| Benchmark Functions | 10 mathematical benchmark functions [25] | Local trapping analysis | Applied science problems |

| Implementation Frameworks | PRISMA Statement [21] | Systematic review methodology | Research synthesis |

| Implementation Frameworks | Low-discrepancy sequences [22] | Population initialization | Diversity enhancement |

| Performance Metrics | Success rate statistics [23] | Comparative algorithm assessment | Benchmark studies |

| Performance Metrics | RMSE and R² values [25] | Prediction accuracy quantification | Practical applications |

| Hybridization Techniques | DE mutation strategies [23] | Diversity preservation | Local optimum avoidance |

| Hybridization Techniques | WOA spiral search [23] | Convergence acceleration | Later iteration phases |

Particle Swarm Optimization continues to evolve as a powerful optimization technique with demonstrated effectiveness across diverse application domains. The advancement from standard PSO to sophisticated variants incorporating adaptive parameter control, hybrid strategies, and specialized initialization methods has substantially addressed early limitations related to premature convergence and solution quality.

Performance comparisons on standardized benchmark functions show that contemporary PSO variants, particularly those incorporating multiple enhancement strategies, often outperform earlier implementations and many competing algorithms. Results remain problem-dependent, however: in the delivery routing study, DE outperformed PSO [17]. The empirical evidence from practical applications in vehicle routing, hydrological forecasting, and engineering design supports the operational value of these improvements in real-world scenarios.

Future research directions likely include further refinement of adaptive parameter control mechanisms, development of problem-specific hybridization strategies, and enhanced theoretical understanding of convergence properties. As optimization challenges grow in complexity and dimensionality, PSO variants will continue to provide valuable tools for researchers and practitioners across scientific and engineering disciplines.

The comparison between the Neural Population Doctrine (NPD) and Social Behavior Models (SBM) represents a critical frontier in computational neuroscience and bio-inspired optimization. The Neural Population Doctrine posits that complex information is processed and encoded through the coordinated activity of heterogeneous neural populations, where computational power emerges from collective interactions rather than individual units [27]. This framework is characterized by its focus on population coding, efficient information representation, and the geometric organization of neural activity in state space [28]. In contrast, Social Behavior Models derive from observations of collective intelligence in animal societies, such as flocking birds, schooling fish, and social insects. These models emphasize decentralized control, self-organization, and simple local rules that generate complex global behaviors through particle-like interactions. While historically distinct, these frameworks converge on principles of distributed computation, emergence, and adaptive optimization, making them valuable for different classes of problems in drug development and computational biology.

The fundamental distinction lies in their information processing paradigms. Neural population coding relies on heterogeneous tuning curves, mixed selectivity, and correlation structures that together enable high-dimensional representation of task-relevant variables [27]. Social behavior models typically employ homogeneous agents following identical update rules, where diversity emerges from positional rather than functional differences. This comparison guide examines their theoretical foundations, performance characteristics, and applicability to optimization challenges in pharmaceutical research, providing experimental data and methodologies for informed model selection.

Theoretical Foundations and Mechanisms

Core Principles of Neural Population Coding

The Neural Population Doctrine is grounded in empirical observations from neurophysiological studies across multiple species and brain regions. Key experiments recording from hundreds of neurons simultaneously in posterior parietal cortex of mice during decision-making tasks reveal that neural populations implement a form of efficient coding that whitens correlated task variables, representing them with less-correlated population modes [28]. This population-level computation enables the brain to maintain multiple interrelated variables without interference, updating them coherently through time.

Information in neural populations is organized through several complementary mechanisms. First, heterogeneous tuning curves ensure that different neurons respond preferentially to different stimulus features or task variables, creating a diverse representational space [27]. Second, temporal patterning of activity carries information complementary to firing rates, with precisely timed spike patterns significantly enhancing population coding capacity [27]. Third, structured correlations between neurons can either enhance or limit information, with specialized network motifs optimizing signal transmission to downstream brain areas [29]. These correlations are not random noise but rather reflect functional organization principles, as demonstrated by findings that neurons projecting to the same brain area exhibit elevated pairwise correlations structured to enhance population-level information [29].

Table 1: Core Principles of Neural Population Coding

| Principle | Mechanism | Functional Benefit | Experimental Evidence |

|---|---|---|---|

| Heterogeneous Tuning | Diverse stimulus preferences across neurons | Increased dimensionality of representations | Two-photon calcium imaging in mouse posterior parietal cortex [27] |

| Mixed Selectivity | Nonlinear combinations of task variables | Enables linear decoding of complex features | Population recordings in association cortex [27] |

| Efficient Coding | Decorrelation of correlated variables | Minimizes redundancy in population code | Neural geometry analysis during decision-making [28] |

| Specialized Correlation Motifs | Information-enhancing pairwise structures | Boosts signal-to-noise for downstream targets | Retrograde labeling + calcium imaging in PPC [29] |

| Sequential Dynamics | Time-varying activation patterns | Enables representation of temporal sequences | Population activity tracking during trial tasks [28] |

Fundamentals of Social Behavior Models

Social Behavior Models draw inspiration from collective animal behaviors where complex group-level patterns emerge from simple individual rules. The theoretical foundation rests on principles of self-organization, stigmergy (indirect coordination through environmental modifications), and local information sharing. Unlike the Neural Population Doctrine, which is directly derived from biological measurements, Social Behavior Models are primarily conceptual frameworks implemented computationally after observing animal collective behaviors.

Particle Swarm Optimization (PSO), a prominent Social Behavior Model, operationalizes these principles through position and velocity update equations that balance individual experience with social learning. Each particle adjusts its trajectory based on its personal best position and the swarm's global best position, creating a form of social cooperation that efficiently explores high-dimensional spaces. This emergent optimization capability mirrors the collective decision-making observed in social animals, where groups achieve better solutions than individuals working alone.

Experimental Data and Performance Comparison

Neural Population Coding Performance Metrics

Quantitative studies of neural population codes reveal remarkable information encoding capabilities. Research examining posterior parietal cortex in mice during a virtual navigation decision task demonstrates that population codes reliably track multiple interrelated task variables with high precision [28]. The geometry of these population representations systematically changes throughout behavioral trials, maintaining discriminability between task variables even as their statistical relationships evolve.

Critical performance metrics include information scaling with population size, encoding dimensionality, and noise robustness. Experimental data shows that neural populations achieve efficient information scaling, where a small subset of highly informative neurons often carries the majority of sensory information [27]. This sparse coding strategy contrasts with the more uniform participation typical of social behavior models. Additionally, neural populations exhibit high-dimensional representations enabled by nonlinear mixed selectivity, where neurons respond to specific combinations of input features rather than single variables [27]. This mixed selectivity dramatically expands the coding capacity of neural populations compared to linearly separable representations.

Table 2: Performance Characteristics of Neural Population Codes

| Performance Metric | Experimental Measurement | Typical Range | Dependence Factors |

|---|---|---|---|

| Information Scaling | Mutual information between stimuli and population response | Sublinear scaling with population size [27] | Tuning heterogeneity, noise correlations |

| Encoding Dimensionality | Number of independent task variables represented | Increased by nonlinear mixed selectivity [27]; cannot exceed neuron count | Nonlinear mixing, population size |

| Noise Robustness | Discrimination accuracy with added noise | Maintained through correlation structures [29] | Correlation motifs, population size |

| Temporal Stability | Representation fidelity across trial time | Dynamic reconfiguration while maintaining accuracy [28] | Sequential dynamics, task demands |

| Decoding Efficiency | Linear separability of population patterns | High with nonlinear mixed selectivity [27] | Tuning diversity, representational geometry |

Comparative Performance in Optimization Tasks

When applied to benchmark optimization problems, Neural Population-inspired algorithms demonstrate distinct strengths compared to Social Behavior approaches like Particle Swarm Optimization. Neural population methods typically excel at problems requiring high-dimensional representation, hierarchical feature extraction, and robustness to correlated inputs. This advantage stems from their foundation in biological systems that have evolved to handle complex, noisy sensory data. The efficient coding principle observed in neural populations – where correlated variables are represented by less-correlated neural modes – provides particular advantage for problems with multicollinear features [28].

Social Behavior Models like PSO generally perform better on problems that require rapid exploration of large parameter spaces, involve dynamic environments, or have unknown global structure. The social information sharing in PSO helps the swarm navigate deceptive landscapes where local optima might trap individual searchers. However, neural population approaches typically achieve better sample efficiency once learning stabilizes: they extract more information from each evaluation because of their more sophisticated representation geometry.

Experimental Protocols and Methodologies

Protocol for Neural Population Code Analysis

The investigation of neural population coding principles requires specialized experimental setups and analytical methods. A representative protocol for quantifying population coding properties involves these key steps:

Neural Activity Recording: Simultaneously record from hundreds of neurons using two-photon calcium imaging or high-density electrophysiology in behaving animals. For projection-specific analysis, inject retrograde tracers conjugated to fluorescent dyes to identify neurons projecting to specific target areas [29].

Behavioral Task Design: Implement a decision-making task with multiple interrelated variables. For example, a delayed match-to-sample task in virtual reality where mice must combine a sample cue memory with test cue identity to select reward direction [29].

Multivariate Dependence Modeling: Apply nonparametric vine copula (NPvC) models to estimate mutual information between neural activity and task variables while controlling for movement and other confounding variables. This method expresses multivariate probability densities as products of copulas and marginal distributions, effectively capturing nonlinear dependencies [29].

Population Code Analysis: Quantify the geometry of neural population representations by analyzing how correlated task variables are represented by less-correlated neural population modes. Compute the scaling of information with population size and identify specialized correlation structures [28].

Information Decoding: Use linear classifiers to decode task variables from population activity patterns, evaluating how representation geometry affects decoding accuracy across different population subsets [27].

This protocol has been successfully implemented in studies of mouse posterior parietal cortex, revealing how neural populations maintain multiple task variables without interference through efficient coding principles [28].
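The information decoding step can be illustrated with a toy example. The sketch below is not the analysis pipeline of the cited studies: it simulates a synthetic population in which each neuron's mean response shifts with a binary task variable, fits a simple mean-difference linear readout, and compares held-out decoding accuracy for a small and a large population. All function names, the signal strength, and the trial counts are illustrative assumptions, and the noise is assumed independent across neurons, which real populations generally violate.

```python
import random

def simulate_trials(tuning, n_trials, rng, signal=0.3):
    """Synthetic trials: labels alternate 0/1; noise is independent unit Gaussian (assumption)."""
    trials, labels = [], []
    for t in range(n_trials):
        label = t % 2
        sign = 1.0 if label == 1 else -1.0
        trials.append([sign * signal * pref + rng.gauss(0.0, 1.0) for pref in tuning])
        labels.append(label)
    return trials, labels

def fit_linear_decoder(trials, labels):
    """Mean-difference linear readout: weights = mean(class 1) - mean(class 0)."""
    n_neurons = len(trials[0])
    sums = {0: [0.0] * n_neurons, 1: [0.0] * n_neurons}
    counts = {0: 0, 1: 0}
    for x, y in zip(trials, labels):
        counts[y] += 1
        for j, v in enumerate(x):
            sums[y][j] += v
    mean0 = [s / counts[0] for s in sums[0]]
    mean1 = [s / counts[1] for s in sums[1]]
    weights = [m1 - m0 for m0, m1 in zip(mean0, mean1)]
    # Place the decision boundary midway between the two class means.
    bias = -0.5 * sum(w * (m0 + m1) for w, m0, m1 in zip(weights, mean0, mean1))
    return weights, bias

def decoding_accuracy(weights, bias, trials, labels):
    """Fraction of trials whose label is recovered by the sign of the linear score."""
    correct = 0
    for x, y in zip(trials, labels):
        score = sum(w * v for w, v in zip(weights, x)) + bias
        correct += int((score > 0.0) == (y == 1))
    return correct / len(trials)

def accuracy_for_population(n_neurons, seed=0):
    """Train on 400 simulated trials and report accuracy on 400 held-out trials."""
    rng = random.Random(seed)
    tuning = [rng.choice((-1.0, 1.0)) for _ in range(n_neurons)]
    train = simulate_trials(tuning, 400, rng)
    test = simulate_trials(tuning, 400, rng)
    weights, bias = fit_linear_decoder(*train)
    return decoding_accuracy(weights, bias, *test)

if __name__ == "__main__":
    for size in (5, 50):
        print(size, "neurons -> held-out accuracy", accuracy_for_population(size))
```

Repeating the call over a range of population sizes gives a rough picture of how decodable information scales with population size under these simplified assumptions.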

Protocol for Social Behavior Algorithm Benchmarking

Standardized benchmarking of Social Behavior Models follows these established methodological steps:

Algorithm Implementation: Code the Social Behavior algorithm (e.g., Particle Swarm Optimization) with standardized parameter settings. Common configurations include swarm sizes of 20-50 particles, an inertia weight of 0.729, and cognitive and social acceleration coefficients of 1.494 each.

Test Problem Selection: Choose diverse benchmark functions covering different challenge types: unimodal (Sphere, Rosenbrock), multimodal (Rastrigin, Ackley), and hybrid composition functions.

Performance Metrics Measurement: For each benchmark, measure convergence speed (iterations to threshold), solution quality (error at termination), robustness (success rate across runs), and computational efficiency (function evaluations).

Statistical Comparison: Execute multiple independent runs (typically 30+) and perform statistical testing (e.g., Wilcoxon signed-rank tests) to determine significant performance differences.

Parameter Sensitivity Analysis: Systematically vary algorithm parameters to assess robustness to configuration choices and identify optimal settings for different problem classes.

This standardized methodology enables direct comparison between Social Behavior Models and Neural Population-inspired optimizers across diverse problem domains.
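The benchmark functions named in the test problem selection step have simple closed forms. The sketch below gives plain-Python versions of the four functions; the `BENCHMARKS` dictionary and the search ranges in it are conventional choices assumed here for illustration, not settings taken from the cited studies.

```python
import math

def sphere(x):
    """Unimodal bowl; global minimum 0 at the origin."""
    return sum(v * v for v in x)

def rosenbrock(x):
    """Narrow curved valley; global minimum 0 at (1, ..., 1)."""
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (1.0 - x[i]) ** 2
               for i in range(len(x) - 1))

def rastrigin(x):
    """Regular grid of local minima; global minimum 0 at the origin."""
    return 10.0 * len(x) + sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) for v in x)

def ackley(x):
    """Nearly flat outer region with a deep central funnel; global minimum 0 at the origin."""
    n = len(x)
    sum_sq = sum(v * v for v in x)
    sum_cos = sum(math.cos(2.0 * math.pi * v) for v in x)
    return (-20.0 * math.exp(-0.2 * math.sqrt(sum_sq / n))
            - math.exp(sum_cos / n) + 20.0 + math.e)

# Function plus a commonly used search range per dimension (assumed values).
BENCHMARKS = {
    "sphere": (sphere, (-100.0, 100.0)),
    "rosenbrock": (rosenbrock, (-30.0, 30.0)),
    "rastrigin": (rastrigin, (-5.12, 5.12)),
    "ackley": (ackley, (-32.768, 32.768)),
}

if __name__ == "__main__":
    point = [0.5, -0.5, 0.25]
    for name, (func, _bounds) in BENCHMARKS.items():
        print(name, func(point))
```

Because each function has a known global minimum of zero, the error at termination reported in the metrics step is simply the best objective value found.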

Signaling Pathways and Computational Workflows

Neural Population Coding Workflow

The following diagram illustrates the complete experimental and analytical workflow for investigating neural population codes, from neural recording to computational modeling:

Figure: Neural Population Coding Analysis Workflow

Social Behavior Algorithm Structure

The following diagram illustrates the core computational structure of Social Behavior Models like Particle Swarm Optimization, highlighting the information flow and decision points:

Figure: Social Behavior Algorithm Execution Flow

Research Reagent Solutions Toolkit

Table 3: Essential Research Reagents and Tools for Neural Population Studies

| Reagent/Tool | Function | Example Applications | Key Characteristics |
|---|---|---|---|
| Two-Photon Calcium Imaging | Neural activity recording in behaving animals | Population coding dynamics in cortex [29] | High spatial resolution, cellular precision |
| Genetically-Encoded Calcium Indicators (e.g., GCaMP) | Neural activity visualization | Real-time monitoring of population activity [29] | High signal-to-noise, genetic targeting |
| Retrograde Tracers (fluorescent conjugates) | Projection-specific neuron labeling | Identifying output pathways [29] | Pathway-specific, compatible with imaging |
| Neuropixels Probes | High-density electrophysiology | Large-scale population recording [27] | Hundreds of simultaneous neurons |
| Optogenetic Actuators (e.g., Channelrhodopsin) | Precise neural manipulation | Testing causal role of population patterns [30] | Millisecond precision, cell-type specific |
| Vine Copula Models (NPvC) | Multivariate dependency estimation | Quantifying neural information [29] | Nonlinear dependencies, robust estimation |
| Virtual Reality Systems | Controlled behavioral paradigms | Navigation-based decision tasks [29] | Precise stimulus control, natural behavior |

This research toolkit enables the comprehensive investigation of neural population coding principles from experimental measurement to computational analysis. The combination of advanced recording technologies, pathway-specific labeling, and sophisticated analytical methods provides the necessary infrastructure for extracting the computational principles that make neural population codes so efficient and robust.

Algorithmic Structures and Search Philosophies Compared

In the field of metaheuristic optimization, the continuous pursuit of more efficient and robust algorithms drives comparative research. This guide objectively analyzes the Neural Population Dynamics Optimization Algorithm (NPDOA), a novel brain-inspired method, against the well-established Particle Swarm Optimization (PSO) paradigm. Framed within broader benchmark comparison research, this examination details the fundamental structural philosophies, experimental performances, and practical applications of both algorithms, providing researchers and drug development professionals with actionable insights for algorithmic selection.

The no-free-lunch theorem establishes that no single algorithm excels universally across all problem domains [9]. This reality necessitates rigorous comparative analysis to match algorithmic strengths with specific problem characteristics. NPDOA emerges from computational neuroscience, simulating decision-making processes in neural populations [9], while PSO maintains its popularity as a versatile swarm intelligence technique inspired by collective social behavior [31]. This comparison leverages standardized benchmark results and practical engineering applications to delineate their respective performance boundaries and optimal use cases.

Algorithmic Architectures and Philosophical Foundations

Neural Population Dynamics Optimization Algorithm (NPDOA)

NPDOA represents a paradigm shift toward brain-inspired computation, modeling its search philosophy on interconnected neural populations during cognitive decision-making processes [9]. Unlike nature-metaphor algorithms, NPDOA grounds its mechanics in theoretical neuroscience, treating each solution as a neural state where decision variables correspond to neuronal firing rates [9].

The algorithm operates through three core strategies that govern its search behavior:

- Attractor Trending Strategy: Drives neural populations toward optimal decisions by converging neural states toward different attractors, corresponding to favorable decisions in the search space. This mechanism ensures the algorithm's exploitation capability [9].

- Coupling Disturbance Strategy: Introduces deliberate interference by coupling neural populations with others, deviating them from attractors to prevent premature convergence. This strategy explicitly enhances exploration ability [9].

- Information Projection Strategy: Controls communication between neural populations, regulating the influence of the aforementioned strategies and enabling a controlled transition from exploration to exploitation throughout the optimization process [9].

This architectural foundation allows NPDOA to simulate the human brain's remarkable efficiency in processing diverse information types and arriving at optimal decisions [9]. Each solution ("neural population") evolves through these dynamic interactions, creating a search process that mirrors cognitive decision-making pathways.

Particle Swarm Optimization (PSO)

PSO embodies a fundamentally different inspiration, modeling its search on the collective intelligence observed in bird flocking and fish schooling behaviors [24]. As a population-based stochastic optimizer, PSO maintains a swarm of particles that navigate the search space through simple positional and velocity update rules [26].

The algorithm's core mechanics have evolved since its inception in 1995, with the inertia-weight model representing the current standard formulation. Each particle's position update follows this fundamental equation:

xᵢⱼ(t+1) = xᵢⱼ(t) + vᵢⱼ(t+1)

Figure: PSO Algorithm Workflow

The velocity update equation reveals the algorithm's social dynamics:

vᵢⱼ(t+1) = ωvᵢⱼ(t) + c₁r₁(pBestᵢⱼ(t) - xᵢⱼ(t)) + c₂r₂(gBestⱼ(t) - xᵢⱼ(t)) [26]

Where:

- ω represents the inertia weight controlling momentum

- c₁, c₂ are acceleration coefficients for cognitive and social components

- r₁, r₂ are random numbers drawn uniformly from [0, 1], introducing stochasticity

- pBest tracks a particle's historical best position

- gBest represents the swarm's global best position

PSO's philosophical foundation rests on balancing the cognitive component (personal experience) with the social component (neighborhood influence) [24]. This social metaphor creates an efficient, though sometimes problematic, exploration-exploitation dynamic that has been refined through numerous variants.
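The inertia-weight update can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation: the function name `pso_minimize`, the zero initial velocities, the clamping of positions to the search bounds, and the immediate (asynchronous) refresh of the global best are assumptions made here, and the default parameter values are the commonly quoted 0.729 and 1.494.

```python
import random

def pso_minimize(objective, dim, bounds, swarm_size=30, iterations=200,
                 w=0.729, c1=1.494, c2=1.494, seed=0):
    """Minimize `objective` over a box using global-best, inertia-weight PSO."""
    rng = random.Random(seed)
    lo, hi = bounds
    # Random initial positions, zero initial velocities (assumption).
    x = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(swarm_size)]
    v = [[0.0] * dim for _ in range(swarm_size)]
    pbest = [p[:] for p in x]
    pbest_val = [objective(p) for p in x]
    g = min(range(swarm_size), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(iterations):
        for i in range(swarm_size):
            for j in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity: inertia + cognitive pull (pBest) + social pull (gBest).
                v[i][j] = (w * v[i][j]
                           + c1 * r1 * (pbest[i][j] - x[i][j])
                           + c2 * r2 * (gbest[j] - x[i][j]))
                # Position update, clamped to the search box (assumption).
                x[i][j] = min(hi, max(lo, x[i][j] + v[i][j]))
            val = objective(x[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = x[i][:], val
                # Global best is refreshed as soon as a better point appears.
                if val < gbest_val:
                    gbest, gbest_val = x[i][:], val
    return gbest, gbest_val

if __name__ == "__main__":
    best, best_val = pso_minimize(lambda p: sum(t * t for t in p), dim=5,
                                  bounds=(-10.0, 10.0))
    print(best_val)
```

Drawing fresh r₁ and r₂ for every dimension, as done here, is one common convention; some implementations draw a single pair per particle instead.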

Comparative Architectural Analysis

Table 1: Fundamental Architectural Differences

| Aspect | NPDOA | Standard PSO |
|---|---|---|
| Primary Inspiration | Brain neuroscience & neural population dynamics [9] | Social behavior of bird flocking/fish schooling [24] |
| Solution Representation | Neural state (firing rates) [9] | Particle position in search space [26] |
| Core Search Mechanism | Attractor dynamics with coupling disturbances [9] | Velocity-position updates with personal/global best guidance [26] |
| Exploration Control | Coupling disturbance strategy [9] | Inertia weight & social component [26] |
| Exploitation Control | Attractor trending strategy [9] | Cognitive component & personal best [26] |
| Transition Mechanism | Information projection strategy [9] | Time-decreasing inertia or adaptive parameters [24] |

Experimental Benchmarking and Performance Analysis

Methodology and Evaluation Framework

Benchmarking optimization algorithms requires standardized test suites with diverse problem characteristics. Research indicates that both NPDOA and PSO variants undergo rigorous evaluation using established computational benchmarks, particularly the CEC (Congress on Evolutionary Computation) test suites [32]. These frameworks provide controlled environments with known global optima, enabling objective performance comparisons across algorithms.

Experimental protocols typically involve multiple independent runs with randomized initializations to account for stochastic variations [9]. Performance metrics commonly include:

- Solution Accuracy: Measured as deviation from known global optimum

- Convergence Speed: Iterations or function evaluations required to reach target accuracy

- Success Rate: Percentage of runs successfully locating the global optimum within precision tolerance

- Statistical Significance: Analysis using Wilcoxon signed-rank tests or similar methods to validate performance differences [32]
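The metrics above can be computed directly from the final errors of repeated runs. The sketch below is a hedged illustration: `summarize_runs` and `wilcoxon_signed_rank` are hypothetical helper names, the success tolerance is an assumed value, and the test uses the large-sample normal approximation without tie or continuity corrections, so a statistics library should be preferred for reported results. The signed-rank test is a paired test, so the two error lists are assumed to be paired, for example by benchmark function or by shared random seed.

```python
import math
import statistics

def summarize_runs(final_errors, tolerance=1e-8):
    """Mean and spread of the final error, plus success rate at the given tolerance."""
    successes = sum(1 for e in final_errors if e <= tolerance)
    return {
        "mean_error": statistics.mean(final_errors),
        "std_error": statistics.stdev(final_errors),
        "success_rate": successes / len(final_errors),
    }

def wilcoxon_signed_rank(a, b):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Returns (W, p) with W = min(W+, W-). Zero differences are dropped and
    tied absolute differences receive average ranks.
    """
    diffs = [x - y for x, y in zip(a, b) if x != y]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over the block of tied absolute differences.
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean_w = n * (n + 1) / 4.0
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided tail probability
    return w, min(1.0, p)

if __name__ == "__main__":
    errors_a = [0.010 * k for k in range(1, 31)]   # made-up paired errors
    errors_b = [0.015 * k for k in range(1, 31)]
    print(summarize_runs(errors_a, tolerance=0.05))
    print(wilcoxon_signed_rank(errors_a, errors_b))
```

The error lists in the usage example are made-up placeholders, not results from either algorithm.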

For practical validation, both algorithms undergo testing on real-world engineering design problems, including compression spring design, cantilever beam design, pressure vessel design, and welded beam design [9]. These problems introduce realistic constraints and non-linearities absent from synthetic benchmarks.

Performance on Standardized Benchmarks

Table 2: Benchmark Performance Comparison

| Benchmark Category | NPDOA Performance | PSO Performance | Comparative Analysis |
|---|---|---|---|
| Unimodal Functions | Not explicitly reported | Fast convergence but premature convergence issues [26] | PSO shows faster initial convergence but may stagnate locally |
| Multimodal Functions | Effective exploration capabilities [9] | Improved with topological variations [24] | NPDOA's coupling disturbance enhances multimodal exploration |
| Composite Functions | Strong performance on non-linear, non-convex problems [9] | Adaptive PSO variants show competitiveness [26] | Both benefit from specialized mechanisms for complex landscapes |
| Constrained Problems | Handles constraints through penalty functions or specialized operators | Constraint-handling techniques well-developed [31] | PSO has more mature constraint-handling methodologies |
| Computational Complexity | O(N×D) per iteration similar to PSO [9] | O(N×D) per iteration [26] | Comparable per-iteration complexity |

Recent PSO enhancements demonstrate significant performance improvements on standard benchmarks. One hybrid adaptive PSO variant incorporating composite chaotic mapping, adaptive inertia weights, and subpopulation strategies demonstrated superior performance on standard benchmark functions compared to traditional PSO [26]. Similarly, NPDOA has shown "distinct benefits when addressing many single-objective optimization problems" according to its foundational research [9].

Convergence Characteristics Analysis

The convergence behavior of both algorithms reveals fundamental differences in their search philosophies. NPDOA maintains consistent exploration throughout the optimization process through its coupling disturbance strategy, preventing premature stagnation while systematically refining solutions via attractor trending [9].