Neural Population Dynamics Optimization: A Brain-Inspired Metaheuristic for Advanced Drug Development



Abstract

This article explores the Neural Population Dynamics Optimization Algorithm (NPDOA), a novel brain-inspired meta-heuristic, and its transformative potential for researchers and professionals in drug development. We first establish the foundational principles of NPDOA, inspired by the information processing of interconnected neural populations. The core methodology is then detailed, explaining its unique search strategies for balancing exploration and exploitation. The article further addresses practical challenges in implementation and optimization, and provides a comparative analysis of its performance against established algorithms on benchmark and real-world problems. Finally, we synthesize key takeaways and discuss future implications for optimizing pharmaceutical formulations and accelerating therapeutic discovery.

From Brain Circuits to Algorithms: The Foundations of Neural Population Dynamics Optimization

Meta-heuristic algorithms are advanced computational strategies designed to find high-quality solutions for complex optimization problems that are often nonlinear, nonconvex, or NP-hard. Unlike traditional gradient-based mathematical methods that require continuity, differentiability, and convexity of the objective function, meta-heuristics are gradient-free and make minimal assumptions about the underlying system, making them suitable for a wide range of real-world applications [1] [2]. Their strength lies in their global search capability and strong adaptability, which enables them to find near-global optimal solutions in complex search spaces where traditional techniques struggle [3].

The development of computationally efficient optimization algorithms has been at the forefront of research, particularly with the advent of big data, deep learning, and artificial intelligence [1]. Meta-heuristic algorithms are broadly categorized based on their source of inspiration, primarily including evolutionary algorithms, swarm intelligence algorithms, physical-inspired algorithms, and mathematics-inspired algorithms [4].

A critical challenge for all meta-heuristic algorithms is achieving an appropriate balance between exploration (global search of new regions of the solution space) and exploitation (local refinement of promising solutions) [4] [3]. The No Free Lunch (NFL) theorem states that, averaged over all possible optimization problems, no optimizer outperforms any other; no single algorithm can therefore be expected to solve every type of problem efficiently. This result continues to motivate the development of new algorithms tailored to specific problem characteristics [4] [3].

Taxonomy and Comparative Analysis of Meta-heuristic Algorithms

Table 1: Classification and Characteristics of Major Meta-heuristic Algorithm Types

| Algorithm Type | Inspiration Source | Representative Algorithms | Strengths | Limitations |
|---|---|---|---|---|
| Evolutionary Algorithms | Natural evolution & genetics | Genetic Algorithm (GA), Differential Evolution (DE), Biogeography-Based Optimization (BBO) [4] | Proven global search capability; principles of survival of the fittest [4] | Premature convergence; challenging problem representation; multiple parameters to tune [4] |
| Swarm Intelligence | Collective behavior of biological groups | Particle Swarm Optimization (PSO), Artificial Bee Colony (ABC), Whale Optimization Algorithm (WOA) [4] | Cooperation and competition among individuals; high efficiency on many problems [4] | Prone to local optima; slow convergence; high computational complexity for high-dimensional problems [4] |
| Physical-Inspired | Laws of physics | Simulated Annealing (SA), Gravitational Search Algorithm (GSA) [4] | No crossover/selection operations; versatile tools for complex challenges [4] | Prone to becoming trapped in local optima; premature convergence [4] |
| Mathematics-Inspired | Mathematical formulations & concepts | Sine-Cosine Algorithm (SCA), Gradient-Based Optimizer (GBO), Adam Gradient Descent Optimizer (AGDO) [4] [3] | New perspective for search strategies; often beyond metaphors [4] | Local optimum stagnation; lack of proper exploration-exploitation balance [4] |

Table 2: Performance of Selected Meta-heuristic Algorithms on NP-Hard Problems

| Algorithm | Job Shop Scheduling (JSSP) | Vehicle Routing Problem (VRP) | Network Design Problem (NDP) |
|---|---|---|---|
| Genetic Algorithm (GA) | Not specified | Not specified | Best performance with efficient instruction handling [2] [5] |
| Simulated Annealing (SA) | Fastest execution and lowest resource use [2] [5] | Not specified | Not specified |
| Ant Colony Optimization (ACO) | Not specified | Best performance with fewer cache misses and fast operation [2] [5] | Not specified |
| Tabu Search (TS) | Delivered balanced results across all problems [2] [5] | Delivered balanced results across all problems [2] [5] | Delivered balanced results across all problems [2] [5] |

Neural Population Dynamics Optimization Algorithm (NPDOA)

The Neural Population Dynamics Optimization Algorithm (NPDOA) represents a novel brain-inspired meta-heuristic method that simulates the activities of interconnected neural populations in the brain during cognition and decision-making [4]. This algorithm is grounded in theoretical neuroscience and treats each solution as a neural state, where decision variables represent neurons and their values correspond to neuronal firing rates [4]. It is considered a swarm intelligence algorithm that utilizes human brain activities, marking a significant departure from conventional nature-inspired approaches [4].

Theoretical Foundation and Inspiration

NPDOA is based on the population doctrine in theoretical neuroscience, which recognizes that the human brain can process various types of information in different situations and efficiently make optimal decisions [4] [6]. The algorithm is inspired by experimental and theoretical studies investigating the activities of interconnected neural populations during sensory, cognitive, and motor computations [4]. Neural population dynamics often evolve on low-dimensional manifolds, and understanding these dynamical processes is crucial for inferring interpretable and consistent latent representations of neural computations [7].

Core Operational Strategies

NPDOA incorporates three novel search strategies that simulate different aspects of neural population dynamics:

Attractor Trending Strategy: This strategy drives the neural states of neural populations to converge towards different attractors to approach a stable neural state associated with a favorable decision. It is primarily responsible for exploitation in the search process [4].

Coupling Disturbance Strategy: This mechanism causes interference in neural populations and disrupts the tendency of their neural states towards attractors. It is responsible for exploration, helping the algorithm avoid premature convergence to local optima [4].

Information Projection Strategy: This component adjusts information transmission between neural populations, thereby regulating the impact of the above two dynamics strategies on the neural states of neural populations. It serves as a balancing mechanism between exploitation and exploration [4].
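The interplay of the three strategies can be sketched in a few lines of Python. The update rules below are illustrative assumptions, not the published NPDOA equations [4]: the attractor is taken to be the best state found so far, the coupling disturbance is a random step along the difference to another randomly chosen population, and the information projection is a linear schedule `w` that shifts weight from disturbance to attraction. The names `npdoa_sketch`, `sphere`, and all parameters are hypothetical.

```python
import random


def sphere(x):
    """Toy objective: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def npdoa_sketch(objective, dim=5, pop_size=20, iters=200,
                 lower=-5.0, upper=5.0, seed=0):
    """Illustrative three-strategy loop; NOT the published NPDOA equations."""
    rng = random.Random(seed)
    # Each neural state is one candidate solution; each entry is a "firing rate".
    states = [[rng.uniform(lower, upper) for _ in range(dim)]
              for _ in range(pop_size)]
    fitness = [objective(s) for s in states]
    best_idx = min(range(pop_size), key=fitness.__getitem__)
    best, best_fit = states[best_idx][:], fitness[best_idx]
    history = [best_fit]

    for t in range(iters):
        # Information projection (assumed linear schedule): w grows from 0 to 1,
        # shifting weight from coupling disturbance to attractor trending.
        w = t / max(1, iters - 1)
        for i in range(pop_size):
            j = rng.randrange(pop_size - 1)
            if j >= i:
                j += 1  # index of a different population (j != i)
            candidate = []
            for d in range(dim):
                x = states[i][d]
                attract = rng.random() * (best[d] - x)                 # exploitation
                disturb = rng.uniform(-1.0, 1.0) * (states[j][d] - x)  # exploration
                new = x + w * attract + (1.0 - w) * disturb
                candidate.append(min(upper, max(lower, new)))          # keep in bounds
            cand_fit = objective(candidate)
            if cand_fit < fitness[i]:  # greedy replacement (an assumption)
                states[i], fitness[i] = candidate, cand_fit
                if cand_fit < best_fit:
                    best, best_fit = candidate[:], cand_fit
        history.append(best_fit)
    return best, best_fit, history


best, best_fit, history = npdoa_sketch(sphere)
print(f"best fitness after {len(history) - 1} iterations: {best_fit:.3e}")
```

The greedy replacement step guarantees that `history` never increases; the actual algorithm may accept or combine states differently.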

Experimental Validation

Experimental studies have validated NPDOA's performance through systematic comparisons with nine other meta-heuristic algorithms on 59 benchmark problems and three real-world engineering problems [4]. The results demonstrated that NPDOA offers distinct benefits when addressing many single-objective optimization problems, showing competitive performance in terms of solution quality and convergence characteristics [4].

The Need for NPDOA in Contemporary Optimization

The development of NPDOA addresses several critical limitations in existing meta-heuristic algorithms:

Brain-Inspired Efficiency: The human brain represents a highly optimized information processing system that has evolved over millions of years. By mimicking its operational principles, NPDOA provides a novel approach to balancing exploration and exploitation that differs fundamentally from existing nature-inspired metaphors [4].

Addressing Algorithmic Limitations: While existing meta-heuristics have shown success in various domains, they often face challenges with premature convergence, poor exploration-exploitation balance, and difficulty handling high-dimensional problems [4]. NPDOA's three strategies specifically target these limitations through neurologically plausible mechanisms.

Expanding the Optimization Toolkit: According to the No Free Lunch theorem, developing new optimizers with different inspiration sources is essential for addressing diverse optimization challenges [4] [3]. NPDOA expands the algorithmic toolkit available to researchers and practitioners, potentially offering advantages for problems where traditional meta-heuristics have shown limitations.

Bridging Computational Neuroscience and Optimization: NPDOA establishes a functional bridge between theoretical neuroscience and optimization theory, creating opportunities for cross-disciplinary innovations where insights from neural dynamics can inform algorithm design and vice versa [6].

Application Protocols and Experimental Guidelines

Protocol 1: Benchmarking NPDOA Against Established Meta-heuristics

Purpose: To quantitatively evaluate NPDOA performance against state-of-the-art meta-heuristic algorithms on standard benchmark functions.

Materials and Computational Environment:

- Hardware: Computer with Intel Core i7-12700F CPU or equivalent, 2.10 GHz, and 32 GB RAM [4]

- Software: PlatEMO v4.1 platform or similar experimental optimization environment [4]

- Algorithms for Comparison: Include representatives from major meta-heuristic categories (e.g., GA, PSO, GSA, SCA) [4]

- Benchmark Sets: CEC2017 test suites or equivalent with various dimensions (10D, 30D, 50D, 100D) [4] [3]

Procedure:

- Implement NPDOA with the three core strategies: attractor trending, coupling disturbance, and information projection [4]

- Configure algorithm parameters based on the original specification (population size, iteration limits, strategy coefficients)

- Execute all algorithms on the benchmark set with multiple independent runs to account for stochastic variations

- Collect performance metrics: best solution, mean solution, standard deviation, convergence speed, and computational time

- Perform statistical analysis using Wilcoxon rank-sum test or similar non-parametric tests to validate significance of results [3]
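The metric collection and significance test in the last two steps might look as follows. This is a minimal sketch using only the standard library: `rank_sum_test` implements the two-sided Wilcoxon rank-sum test through its normal approximation (average ranks for ties, no tie or continuity correction), and in practice a library routine such as `scipy.stats.ranksums` would normally be used instead. The fitness values are placeholders, not results from any study.

```python
import math
import statistics


def summarize(final_fitness):
    """Best, mean, and sample standard deviation over independent runs."""
    return {
        "best": min(final_fitness),
        "mean": statistics.mean(final_fitness),
        "std": statistics.stdev(final_fitness),
    }


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test via the normal approximation.

    Returns (z, p_value). Ties receive average ranks.
    """
    pooled = sorted((v, g) for g, sample in enumerate((a, b)) for v in sample)
    ranks_a = 0.0
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # mean of the 1-based ranks i+1 .. j
        ranks_a += avg_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j
    n1, n2 = len(a), len(b)
    mean = n1 * (n1 + n2 + 1) / 2.0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (ranks_a - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided tail probability
    return z, p


# Placeholder final fitness values from independent runs of two algorithms.
runs_a = [0.12, 0.10, 0.15, 0.11, 0.09, 0.13, 0.14, 0.10]
runs_b = [0.21, 0.19, 0.25, 0.18, 0.22, 0.20, 0.24, 0.23]

print(summarize(runs_a))
print(summarize(runs_b))
z, p = rank_sum_test(runs_a, runs_b)
print(f"z = {z:.3f}, p = {p:.4f}")
```

A negative `z` here means the first sample tends to have lower (better, for minimization) fitness than the second.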

Evaluation Metrics:

- Solution quality (fitness value) across multiple runs

- Convergence characteristics and speed

- Computational efficiency and resource utilization

- Statistical significance of performance differences

Protocol 2: Applying NPDOA to Engineering Design Problems

Purpose: To validate NPDOA performance on practical engineering optimization problems with constraints.

Problem Selection:

- Compression spring design problem [4]

- Cantilever beam design problem [4]

- Pressure vessel design problem [4]

- Welded beam design problem [4]

Implementation Workflow:

Constraint Handling Method:

- Implement penalty functions or feasibility preservation rules

- Apply domain-specific repair mechanisms for invalid solutions

- Utilize decoding procedures for mixed variable types
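As one way to realize the penalty-function option, the sketch below wraps an objective with a static quadratic penalty so that any unconstrained optimizer can be applied to it. The toy problem, the penalty weight, and the helper names `penalized` and `is_feasible` are assumptions chosen for illustration; they are not taken from the NPDOA study.

```python
def penalized(objective, constraints, weight=1e6):
    """Wrap an objective with a static quadratic penalty.

    Each constraint g is written so that g(x) <= 0 means "satisfied".
    """
    def wrapped(x):
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return objective(x) + weight * violation
    return wrapped


def is_feasible(x, constraints, tol=1e-9):
    """True if every constraint is satisfied up to a small tolerance."""
    return all(g(x) <= tol for g in constraints)


# Hypothetical toy problem: minimize x0 + x1 subject to x0 * x1 >= 1, x0 >= 0, x1 >= 0.
def cost(x):
    return x[0] + x[1]


constraints = [
    lambda x: 1.0 - x[0] * x[1],  # x0 * x1 >= 1
    lambda x: -x[0],              # x0 >= 0
    lambda x: -x[1],              # x1 >= 0
]

fitness = penalized(cost, constraints)
print(fitness([1.0, 1.0]), is_feasible([1.0, 1.0], constraints))  # feasible point
print(fitness([0.5, 0.5]), is_feasible([0.5, 0.5], constraints))  # infeasible point
```

A static weight is the simplest choice; adaptive penalties or feasibility rules may behave better when constraint magnitudes differ widely.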

Validation Procedure:

- Compare obtained solutions with known optimal or best-published solutions

- Evaluate consistency across multiple independent runs

- Assess feasibility of final solutions regarding all constraints

- Perform comparative analysis with literature results

Protocol 3: NPDOA for Bioinformatics and Drug Discovery

Purpose: To apply NPDOA for complex bioinformatics optimization problems such as DNA motif discovery and drug-target interaction prediction.

Materials:

- Datasets: Biological sequences (e.g., BARC and CTCF datasets for cancer-causing motifs) [8]

- Baseline Algorithms: MEME, AlignACE, and other motif discovery meta-heuristics [8]

- Evaluation Metrics: Precision, recall, F-score, accuracy [8] [9]

Procedure for DNA Motif Discovery:

- Initialize population using Random Projection technique for meaningful solution space [8]

- Apply k-means clustering to group similar solutions [8]

- Implement NPDOA on each cluster to find optimal motifs [8]

- Evaluate discovered motifs using precision, recall, and F-score metrics [8]

- Compare performance with established benchmark algorithms [8]
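The evaluation step can be made concrete with a small helper that scores predicted motif sites against annotated ones. Representing a site as a `(sequence_id, start_position)` pair and requiring exact matches are simplifying assumptions; the example sites are invented for illustration.

```python
def precision_recall_f(predicted, actual):
    """Precision, recall, and F-score for predicted vs. annotated motif sites."""
    predicted, actual = set(predicted), set(actual)
    true_positives = len(predicted & actual)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(actual) if actual else 0.0
    if true_positives == 0:
        return precision, recall, 0.0
    f_score = 2.0 * precision * recall / (precision + recall)
    return precision, recall, f_score


# Invented example: sites are (sequence_id, start_position) pairs.
predicted_sites = {("seq1", 12), ("seq2", 40), ("seq3", 7), ("seq4", 93)}
annotated_sites = {("seq1", 12), ("seq2", 40), ("seq3", 7), ("seq4", 55), ("seq5", 21)}

precision, recall, f_score = precision_recall_f(predicted_sites, annotated_sites)
print(f"precision={precision:.2f} recall={recall:.2f} F={f_score:.2f}")
```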

Procedure for Drug-Target Interaction Optimization:

- Preprocess drug data using text normalization, stop word removal, tokenization, and lemmatization [9]

- Extract features using N-Grams and Cosine Similarity techniques [9]

- Optimize prediction model parameters using NPDOA

- Validate using performance metrics: accuracy, precision, recall, F1 Score, RMSE, AUC-ROC [9]
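The preprocessing and feature-extraction steps could be sketched as below, using word-level bigrams and cosine similarity over their counts. The stop-word list is a tiny placeholder, lemmatization is omitted for brevity, and the two drug descriptions are invented; none of this reproduces the pipeline of [9].

```python
import math
import re
from collections import Counter

STOP_WORDS = {"the", "a", "an", "of", "for", "and", "to", "in", "is"}  # placeholder list


def tokenize(text):
    """Lower-case, split on non-alphanumerics, and drop stop words."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOP_WORDS]


def ngrams(tokens, n=2):
    """Count contiguous n-grams of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Invented descriptions, for illustration only.
text_1 = "Inhibitor of the kinase EGFR for lung cancer"
text_2 = "EGFR kinase inhibitor for lung cancer treatment"

features_1 = ngrams(tokenize(text_1))
features_2 = ngrams(tokenize(text_2))
similarity = cosine_similarity(features_1, features_2)
print(f"bigram cosine similarity: {similarity:.4f}")
```

Similarity scores of this kind would then serve as input features for the prediction model whose parameters the optimizer tunes.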

Expected Outcomes:

- For motif discovery: Stable results with precision ~92%, recall ~93%, F-score ~93% [8]

- For drug-target prediction: High accuracy (~98.6%) and superior performance across multiple metrics [9]

Table 3: Essential Research Reagents and Computational Resources for NPDOA Research

| Category | Item | Specification/Function | Application Context |
|---|---|---|---|
| Computational Hardware | High-Performance Workstation | Intel Core i7-12700F CPU, 2.10 GHz, 32 GB RAM [4] | General algorithm development and testing |
| Software Platforms | PlatEMO | MATLAB-based experimental platform for optimization algorithms [4] | Benchmark testing and performance comparison |
| Software Platforms | Python Ecosystem | Libraries for data preprocessing, feature extraction, and similarity measurement [9] | Drug discovery and bioinformatics applications |
| Benchmark Datasets | CEC2017 Test Suites | Standard benchmark functions of varying dimensions (10D, 30D, 50D, 100D) [3] | Algorithm performance validation |
| Benchmark Datasets | Biological Sequence Data | BARC and CTCF datasets for cancer-causing motif discovery [8] | Bioinformatics applications |
| Benchmark Datasets | Drug-Target Interaction Data | Kaggle dataset containing over 11,000 drug details [9] | Pharmaceutical optimization problems |
| Evaluation Tools | Profiling Tools | Performance analysis and optimization | |
| Evaluation Tools | Statistical Test Suite | Wilcoxon rank-sum test for significance validation [3] | Experimental results validation |

The Neural Population Dynamics Optimization Algorithm represents a significant innovation in the meta-heuristic landscape by drawing inspiration from the computational principles of the human brain. Its three-strategy architecture provides a neurologically plausible approach to balancing exploration and exploitation, addressing fundamental limitations in existing optimization methods. As research in both computational neuroscience and optimization continues to evolve, brain-inspired algorithms like NPDOA offer promising avenues for solving increasingly complex optimization challenges across scientific and engineering domains, particularly in bioinformatics and pharmaceutical applications where traditional methods face limitations. The experimental protocols and resources outlined in this document provide a foundation for researchers to implement, validate, and extend this approach in their respective fields.

Neural population dynamics is a fundamental framework for understanding how the brain processes information. This approach moves beyond the study of single neurons to investigate how coordinated activity across populations of neurons gives rise to perception, cognition, and behavior. The core concept is that neural populations form dynamical systems whose temporal evolution performs specific computations [10]. This perspective has recently inspired novel computational approaches, including the Neural Population Dynamics Optimization Algorithm (NPDOA), a brain-inspired meta-heuristic method that translates these biological principles into powerful optimization tools [4].

The dynamics of neural populations typically evolve on low-dimensional manifolds, which are smooth subspaces within the high-dimensional space of neural activity. Understanding these dynamics requires methods that can learn the dynamical processes over these neural manifolds to infer interpretable and consistent latent representations [7]. This framework has revealed that specialized structures in population codes enhance information transmission, particularly in output pathways where neurons projecting to the same target area exhibit elevated pairwise correlations organized into information-enhancing motifs [11].

Core Principles of Neural Population Coding

Key Response Features of Neural Populations

Neural population codes are organized at multiple spatial scales and shaped by several key response features that collectively determine their information-carrying capacity [12]. The diversity of single-neuron firing rates across a population enables complementary information encoding, as different neurons have varying stimulus preferences and tuning widths. Relative timing between neurons provides another critical dimension, where millisecond-scale spike patterns carry information that cannot be extracted from firing rates alone. Additionally, network state modulation influences neural responses through large-scale brain states that vary on slower timescales than transient responses to individual stimuli. Periods of neuronal silence also contribute information through the selective absence of firing in specific neurons [12].

Information Scaling and Mixed Selectivity

The scaling of information with population size depends critically on the structure of tuning preferences and trial-to-trial response correlations. While information typically increases with population size, recent work has shown that a small but highly informative subset of neurons often carries essentially all the information present in the entire observed population [12]. This sparseness coexists with high-dimensional representations enabled by mixed selectivity, where neurons exhibit complex, nonlinear responses to multiple task variables. This nonlinear mixed selectivity increases the effective dimensionality of population codes and facilitates easier linear decoding by downstream areas [12].
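A classic toy case shows why nonlinear mixed selectivity eases linear decoding. In the sketch below, which is an illustration rather than an analysis from [12], two binary task variables must be combined in an XOR-like way. A population whose neurons each respond to a single variable cannot support a linear threshold readout of that combination, whereas adding one neuron that responds to the conjunction makes it linearly decodable. The weight grid and function names are assumptions.

```python
from itertools import product

CONDITIONS = list(product([0, 1], repeat=2))   # two binary task variables
TARGET = {c: c[0] ^ c[1] for c in CONDITIONS}  # XOR-like quantity to be read out


def pure_population(a, b):
    """Two neurons, each selective for a single task variable."""
    return [a, b]


def mixed_population(a, b):
    """Adds a neuron with nonlinear mixed selectivity (responds to the conjunction)."""
    return [a, b, a * b]


def linearly_decodable(encode, grid):
    """True if some linear threshold readout with weights on the grid matches TARGET."""
    dim = len(encode(0, 0))
    for *weights, bias in product(grid, repeat=dim + 1):
        if all(
            (sum(w * r for w, r in zip(weights, encode(a, b))) + bias > 0)
            == bool(TARGET[(a, b)])
            for a, b in CONDITIONS
        ):
            return True
    return False


grid = [-2, -1, 0, 1, 2]
print(linearly_decodable(pure_population, grid))   # False
print(linearly_decodable(mixed_population, grid))  # True
```

The conjunction neuron adds a third dimension to the population code, which is what allows a single hyperplane to separate the four conditions.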

Table 1: Key Features of Neural Population Codes

| Feature | Description | Computational Role |
|---|---|---|
| Heterogeneous Tuning | Diversity in stimulus preference and tuning width across neurons | Enables complementary information encoding |
| Relative Timing | Millisecond-scale temporal patterns between neurons | Carries information complementary to firing rates |
| Mixed Selectivity | Nonlinear responses to multiple task variables | Increases dimensionality and facilitates linear decoding |
| Sparseness | Small fraction of neurons active at any moment | Enhances metabolic efficiency and facilitates dendritic computations |
| Correlation Structure | Organized pairwise activity correlations | Shapes information-limiting and information-enhancing motifs |

Experimental Protocols for Studying Neural Population Codes

Neural Recording and Projection Pathway Identification

To investigate specialized population codes in specific output pathways, researchers have developed sophisticated experimental protocols combining neural recording with anatomical tracing:

Animal Model and Behavioral Task: Implement a delayed match-to-sample task using navigation in a virtual reality T-maze. Mice are trained to combine a memory of a sample cue with a test cue identity to choose turn directions at a T-intersection for reward [11].

Retrograde Labeling: Inject retrograde tracers conjugated to fluorescent dyes of different colors into target areas (e.g., anterior cingulate cortex, retrosplenial cortex, and contralateral posterior parietal cortex) to identify neurons with axonal projections to these specific targets [11].

Calcium Imaging: Use two-photon calcium imaging to measure the activity of hundreds of neurons simultaneously in layer 2/3 of posterior parietal cortex during task performance at a frequency sufficient to resolve individual spikes or calcium transients [11].

Data Preprocessing: Extract calcium traces from raw imaging data and convert to spike rates or deconvolved activity. Register neurons across sessions and identify their projection targets based on retrograde labeling [11].

Analyzing Population Codes with Vine Copula Models

Traditional analytical approaches like generalized linear models have limitations in capturing the complex dependencies in neural population data. The following protocol outlines a more advanced approach:

Variable Identification: Identify all relevant task variables (e.g., sample cue, test cue, choice) and movement variables (e.g., locomotor movements controlling the virtual environment) that might modulate neural activity [11].

Model Specification: Implement nonparametric vine copula (NPvC) models to estimate multivariate dependencies among neural activity, task variables, and movement variables. This method expresses multivariate probability densities as the product of a copula (quantifying statistical dependencies) and marginal distributions conditioned on time, task, and movement variables [11].

Model Training: Break down the estimation of full multivariate dependencies into a sequence of simpler bivariate dependencies using a sequential probabilistic graphical model (vine copula). Estimate these bivariate dependencies using a nonparametric kernel-based method [11].

Information Estimation: Compute mutual information between task variables and decoded neural activity using the NPvC model. Condition on all other measured variables to isolate the contribution of individual variables and obtain robust information estimates even with nonlinear dependencies [11].

Validation: Compare model performance to alternative approaches (e.g., generalized linear models) using held-out neural activity data. Verify that the NPvC provides better prediction of frame-by-frame neural activity [11].
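The separation of marginals from dependence that underlies copula models can be illustrated with a rank transform. The sketch below is not the NPvC estimator of [11]; it only shows, on synthetic data, that mapping each variable to pseudo-observations through its empirical CDF leaves the dependence measure unchanged when a marginal is warped by a monotone function. Values are assumed to be continuous (no ties), and all names are hypothetical.

```python
import math
import random


def pseudo_observations(values):
    """Map a sample into (0, 1) via its empirical CDF: rank / (n + 1)."""
    order = sorted(range(len(values)), key=values.__getitem__)
    u = [0.0] * len(values)
    for rank, idx in enumerate(order, start=1):
        u[idx] = rank / (len(values) + 1)
    return u


def pearson(x, y):
    """Pearson correlation; on pseudo-observations this is Spearman's rho."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


rng = random.Random(0)
task = [rng.gauss(0.0, 1.0) for _ in range(200)]          # synthetic task variable
activity = [t + 0.5 * rng.gauss(0.0, 1.0) for t in task]  # synthetic neural activity
warped = [math.exp(a) for a in activity]                  # same dependence, different marginal

u_task = pseudo_observations(task)
rho_raw = pearson(u_task, pseudo_observations(activity))
rho_warped = pearson(u_task, pseudo_observations(warped))
print(f"rank correlation: raw={rho_raw:.3f}, warped={rho_warped:.3f}")
```

A vine copula builds on the same idea by chaining many such bivariate dependence models, each estimated on pseudo-observations.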

[Figure: Experimental workflow for neural population analysis]

Advanced Analytical Framework: MARBLE

The MARBLE (MAnifold Representation Basis LEarning) framework provides a sophisticated approach for inferring interpretable latent representations from neural population dynamics:

Data Input: Input neural firing rates from multiple trials under different experimental conditions, along with user-defined labels specifying conditions under which trials are dynamically consistent [7].

Manifold Approximation: Approximate the unknown neural manifold by constructing a proximity graph from the neural state data. Use this graph to define tangent spaces around each neural state and establish a notion of smoothness (parallel transport) between nearby vectors [7].

Vector Field Denoising: Implement a learnable vector diffusion process to denoise the flow field while preserving its fixed point structure. This process leverages the manifold structure to maintain geometrical consistency [7].

Local Flow Field Decomposition: Decompose the vector field into local flow fields (LFFs), defined for each neural state as the vector field within a distance of at most p from that state over the graph. This lifts d-dimensional neural states to an \( O(d^{p+1}) \)-dimensional space encoding local dynamical context [7].

Geometric Deep Learning: Apply an unsupervised geometric deep learning architecture with three components: (1) p gradient filter layers for p-th order approximation of LFFs, (2) inner product features with learnable linear transformations for embedding invariance, and (3) a multilayer perceptron outputting latent vectors [7].

Latent Space Analysis: Map multiple flow fields simultaneously to define distances between their latent representations using optimal transport distance, which leverages the metric structure in latent space and detects complex interactions based on overlapping distributions [7].
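The first steps of this pipeline, building a proximity graph over neural states and attaching a flow vector to each state, can be sketched without any deep learning machinery. The code below is a simplified illustration and not the MARBLE package API: it uses a k-nearest-neighbor graph, finite-difference velocities, and a one-hop neighborhood (p = 1) on a synthetic circular trajectory. Tangent spaces, parallel transport, diffusion, and the learned embedding are all omitted.

```python
import math


def knn_graph(states, k=2):
    """Adjacency lists holding the k nearest neighbors (Euclidean) of each state."""
    graph = []
    for i, s in enumerate(states):
        dists = sorted((math.dist(s, t), j) for j, t in enumerate(states) if j != i)
        graph.append([j for _, j in dists[:k]])
    return graph


def flow_vectors(trajectory):
    """Finite-difference velocity for every state except the last."""
    return [[b - a for a, b in zip(x0, x1)]
            for x0, x1 in zip(trajectory, trajectory[1:])]


def local_flow_field(i, graph, vectors):
    """Vectors at state i and at its graph neighbors (neighborhood radius p = 1)."""
    return [vectors[j] for j in [i] + graph[i]]


# Synthetic trajectory: 13 samples around the unit circle (last sample closes the loop).
trajectory = [[math.cos(2 * math.pi * k / 12), math.sin(2 * math.pi * k / 12)]
              for k in range(13)]
vectors = flow_vectors(trajectory)  # one vector per state in trajectory[:-1]
states = trajectory[:-1]
graph = knn_graph(states, k=2)

print(sorted(graph[0]))
print(local_flow_field(0, graph, vectors))
```

Each local flow field here is a small bundle of 2-D vectors; stacking such bundles is what gives every state a description of its local dynamical context.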

Table 2: MARBLE Framework Components and Functions

| Component | Implementation | Function |
|---|---|---|
| Manifold Approximation | Proximity graph from neural states | Defines tangent spaces and parallel transport |
| Vector Diffusion | Learnable diffusion process | Denoises flow fields while preserving fixed points |
| Local Flow Fields | \( O(d^{p+1}) \)-dimensional encoding | Captures local dynamical context around each state |
| Gradient Filter Layers | p-th order approximation | Provides local approximation of LFFs |
| Inner Product Features | Learnable linear transformations | Ensures invariance to different neural embeddings |
| Optimal Transport Distance | Distance between latent distributions | Quantifies dynamical overlap between conditions |

Connection to Neural Population Dynamics Optimization Algorithm (NPDOA)

The principles of neural population coding have directly inspired the development of the Neural Population Dynamics Optimization Algorithm (NPDOA), a novel brain-inspired meta-heuristic method. NPDOA treats potential solutions as neural states within populations and implements three core strategies inspired by neural dynamics [4]:

Attractor Trending Strategy: This approach drives neural states toward different attractors, mimicking how neural populations converge to stable states associated with favorable decisions. In optimization terms, this facilitates exploitation by guiding solutions toward promising regions of the search space [4].

Coupling Disturbance Strategy: This mechanism introduces interference in neural populations, disrupting their tendency toward attractors. This corresponds to exploration in optimization, preventing premature convergence to local optima by maintaining population diversity [4].

Information Projection Strategy: This regulates information transmission between neural populations, adjusting the impact of the other two strategies. In the algorithm, this balances exploration and exploitation based on search progress [4].

[Figure: From neural dynamics to optimization algorithm]

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Research Materials for Neural Population Studies

| Reagent/Material | Specifications | Experimental Function |
|---|---|---|
| Retrograde Tracers | Fluorescent dye-conjugated (e.g., Cholera Toxin B subunits); multiple colors | Identifies neurons projecting to specific target areas through retrograde transport |
| GCaMP Calcium Indicators | Genetically encoded calcium indicators (e.g., GCaMP6f, GCaMP7f); AAV delivery preferred | Reports neural activity as fluorescence changes during behavior with high signal-to-noise |
| Two-Photon Microscopy System | Laser-scanning microscope with tunable infrared laser; resonant scanners for high speed | Enables high-resolution calcium imaging of neural populations in behaving animals |
| Vine Copula Modeling Software | Custom MATLAB or Python implementation with nonparametric kernel density estimation | Quantifies multivariate dependencies among neural activity, task, and movement variables |
| MARBLE Analysis Package | Python implementation with geometric deep learning libraries (PyTorch Geometric) | Infers interpretable latent representations from neural population dynamics on manifolds |
| Virtual Reality Setup | Customized VR environment with T-maze or similar task structure; visual display system | Prescribes controlled sensory stimuli and measures decision-making behavior in rodents |


Application Notes for Research and Drug Development

The study of neural population dynamics offers significant promise for drug development, particularly for neurological and psychiatric disorders. Understanding how neural populations encode information provides crucial insights into disease mechanisms and potential therapeutic targets:

Biomarker Identification: Abnormalities in neural population dynamics can serve as sensitive biomarkers for disease states and treatment responses. Measures of correlation structure, information encoding capacity, and dynamical regime transitions may provide more sensitive indicators of circuit dysfunction than single-neuron properties [11] [13].

Target Validation: Investigating how specific neural populations contribute to behaviorally relevant computations helps validate potential therapeutic targets. The specialized structure of population codes in output pathways highlights the importance of targeting specific projection populations rather than broad anatomical regions [11].

Circuit-Level Therapeutics: Approaches that modulate neural population dynamics rather than individual neuronal activity may offer more effective therapeutic strategies. The performance of NPDOA on optimization problems illustrates the computational power of well-tuned population dynamics, although whether analogous interventions could restore healthy brain function remains speculative [4].

Translational Applications: Advanced analysis frameworks like MARBLE enable comparison of neural dynamics across species, facilitating translation from animal models to humans. The ability to find consistent latent representations across different neural embeddings is crucial for bridging preclinical and clinical research [7].

Neural Population State, Dynamics, and Computation

Core Conceptual Framework

The study of neural populations represents a fundamental shift in neuroscience, often termed the population doctrine, which posits that the population, not the single neuron, is the fundamental unit of computation in the brain [14]. This framework moves beyond analyzing individual neuron firing rates to examining the collective, time-varying activity patterns of neural ensembles. Core concepts include the neural state (a vector representing the instantaneous firing rates of all neurons in a population), neural trajectories (the time-evolution of the population state through a high-dimensional space), and the underlying neural dynamics (the rules governing this temporal evolution) [15] [14]. These dynamics are often constrained to flow along low-dimensional subspaces known as neural manifolds, which reflect the underlying network connectivity and shape the computations the population can perform [16] [7].
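These three concepts map directly onto a small simulation. In the sketch below, the neural state is a two-dimensional vector, the dynamics are a linear flow field f(x) = A x, and the neural trajectory is obtained by Euler integration. The matrix `A` (slow decay plus rotation), the step size, and the initial state are arbitrary assumptions chosen so that the trajectory spirals into a fixed-point attractor at the origin.

```python
import math

A = [[-0.1, -1.0],
     [1.0, -0.1]]  # hypothetical dynamics matrix: slow decay plus rotation


def flow(state):
    """Flow field f(x) = A x: the velocity of the neural state at x."""
    return [sum(a * s for a, s in zip(row, state)) for row in A]


def simulate(initial_state, steps=400, dt=0.05):
    """Euler-integrate the dynamics and return the neural trajectory."""
    trajectory = [list(initial_state)]
    for _ in range(steps):
        x = trajectory[-1]
        trajectory.append([xi + dt * vi for xi, vi in zip(x, flow(x))])
    return trajectory


trajectory = simulate([1.0, 0.0])
start_norm = math.hypot(*trajectory[0])
end_norm = math.hypot(*trajectory[-1])
print(f"distance from the attractor: start={start_norm:.3f}, end={end_norm:.3f}")
```

Recorded populations have many more dimensions, but the same picture applies once activity is projected onto a low-dimensional manifold.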

Table 1: Key Concepts in Neural Population Analysis

| Concept | Definition | Theoretical Significance | Experimental Insight |

|---|---|---|---|

| Neural State | A vector of the joint firing rates of a neural population at a single moment in time [14]. | Represents the population's output; the basic unit of analysis in state space [14]. | State direction can encode information (e.g., object identity), while magnitude may predict behavioral outcomes like memory recall [14]. |

| Neural Trajectory | A time course of neural population activity patterns traversing a characteristic sequence [15]. | Reflects the computational process unfolding over time, such as decision formation or movement generation [15]. | Trajectories are often stereotyped and difficult to violate, suggesting they are constrained by the underlying network [15] [17]. |

| Neural Dynamics | The rules (often described by a flow field) that govern how the neural state evolves over time [15] [18]. | Links the observed neural activity to the algorithmic-level computation being performed [18]. | Dynamics can be decomposed into local flow fields, which can be mapped to a shared latent space for comparison across conditions [7]. |

| Neural Manifold | A low-dimensional subspace within the high-dimensional neural state space where dynamics are constrained [16] [7]. | Provides a geometric structure that shapes and constrains neural computations and enables functional separation [16]. | The geometry of a manifold (e.g., orthogonality of dimensions) can separate processes like movement preparation and execution [16]. |
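The four concepts in Table 1 can be made concrete with a small simulation. The sketch below is illustrative only: it assumes a toy two-dimensional rotational flow field embedded in 50 simulated neurons (the sizes, noise level, and variable names are arbitrary choices, not taken from the cited studies) and uses a singular value decomposition to check that the resulting trajectory stays close to a two-dimensional subspace.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed toy setup: a 2-D latent rotation embedded in 50 simulated "neurons".
n_neurons, n_latent, n_steps = 50, 2, 200
theta = 0.1
# Latent dynamics (the flow field): a slowly decaying rotation.
A = 0.99 * np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta),  np.cos(theta)]])
# Random linear embedding of the latent space into neuron space.
C = rng.normal(size=(n_neurons, n_latent))

z = np.array([1.0, 0.0])
states = []
for _ in range(n_steps):
    z = A @ z                                       # neural dynamics: z_{t+1} = A z_t
    x = C @ z + 0.01 * rng.normal(size=n_neurons)   # neural state: noisy firing-rate vector
    states.append(x)
trajectory = np.array(states)                       # neural trajectory, shape (time, neurons)

# Neural manifold: fraction of variance captured by each principal axis.
centered = trajectory - trajectory.mean(axis=0)
singular_values = np.linalg.svd(centered, compute_uv=False)
explained = singular_values**2 / np.sum(singular_values**2)
top2 = explained[:2].sum()                          # variance captured by a 2-D subspace
```

In this toy case nearly all variance falls in the first two components, which is the sense in which the dynamics are said to be confined to a low-dimensional manifold.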

Analytical & Computational Tools

A suite of advanced analytical methods has been developed to infer latent dynamics and manifolds from recorded neural activity. These tools are essential for translating high-dimensional datasets into interpretable models of computation.

Table 2: Selected Analytical Methods for Neural Population Dynamics

| Method Name | Primary Function | Key Advantage | Application Example |

|---|---|---|---|

| MARBLE [7] | Learns interpretable latent representations of neural population dynamics using geometric deep learning. | Unsupervised; discovers consistent representations across networks/animals without behavioral supervision; provides a similarity metric for dynamics. | Decomposes on-manifold dynamics into local flow fields to parametrize computations during gain modulation or decision-making [7]. |

| CroP-LDM [19] | Prioritizes learning of cross-population dynamics from multi-region recordings. | Isolates shared cross-region dynamics from within-region dynamics, preventing confounding; supports both causal and non-causal inference. | Identifies dominant interaction pathways between motor and premotor cortical regions during a movement task [19]. |

| Computation-through-Dynamics Benchmark (CtDB) [18] | Provides a standardized platform with synthetic datasets and metrics for validating data-driven dynamics models. | Offers biologically realistic synthetic datasets that reflect goal-directed computations, enabling reliable model evaluation and comparison [18]. | Used to test if a model inferring dynamics from neural activity (\(\hat{f}\)) accurately recovers the ground-truth dynamics (\(f\)) [18]. |

| Brain-Computer Interface (BCI) [15] | Uses neural activity to control an external device, providing real-time feedback. | Enables causal probing of neural constraints by challenging subjects to volitionally alter their neural activity patterns [15]. | Challenged monkeys to traverse natural neural activity time courses in a time-reversed manner, testing the flexibility of dynamics [15]. |

Diagram 1: BCI Workflow for Probing Neural Dynamics. This workflow outlines the key steps in experiments that use a brain-computer interface to test the constraints on neural population trajectories [15].

Experimental Protocols

Protocol: Probing the Constraints on Neural Trajectories Using a BCI

This protocol details the experimental procedure for testing whether naturally occurring neural trajectories in the motor cortex can be volitionally altered, as described in the foundational work by Degenhart et al. [15] [17].

1. System Setup and Surgical Implantation

- Animal Model: Rhesus monkey.

- Neural Implant: A multi-electrode array (e.g., 96-electrode) chronically implanted in the primary motor cortex (M1) or related areas [15] [19].

- BCI System: A real-time neural signal processing system capable of filtering, amplifying, and recording extracellular action potentials from ~90 neural units. The system must perform causal dimensionality reduction (e.g., Gaussian Process Factor Analysis) to project the high-dimensional neural activity into a lower-dimensional (e.g., 10D) latent state at low latency [15].

2. BCI Mapping and Behavioral Task

- Mapping Function: Establish a BCI mapping that converts the 10D latent state into the 2D position of a computer cursor on a screen. This provides the animal with direct visual feedback of its neural population activity [15].

- Behavioral Task: Train the animal to perform a classic center-out reaching task. The animal must move the BCI cursor from a central target to one of several peripheral targets.
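The mapping step above can be pictured as a linear readout from the latent state to the cursor. The following is a minimal sketch under that assumption; the matrix `W`, the `offset`, and the function name are hypothetical placeholders, and the actual decoder used in [15] may differ.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical 2x10 readout matrix; in an experiment it would be fit during calibration.
W = rng.normal(scale=0.1, size=(2, 10))
offset = np.zeros(2)  # assumed cursor position for a zero latent state


def latent_to_cursor(latent_state):
    """Map a 10-D latent state to a 2-D cursor position with a linear readout."""
    latent_state = np.asarray(latent_state, dtype=float)
    if latent_state.shape != (10,):
        raise ValueError("expected a 10-D latent state")
    return W @ latent_state + offset


cursor_xy = latent_to_cursor(rng.normal(size=10))
```

Because the readout is a fixed projection, changing `W` changes which two dimensions of population activity the animal sees, which is what the later SepMax manipulation relies on.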

3. Identifying Natural Neural Trajectories

- Data Collection: Record neural population activity during successful task trials over multiple sessions.

- Trajectory Analysis: For a given pair of targets (A and B), analyze the neural trajectories in the 10D latent space. Identify a 2D projection (the "Separation-Maximizing" or SepMax projection) where the neural trajectory for movements from A to B is clearly distinct and separable from the trajectory for movements from B to A [15].

4. Experimental Challenge: Altering Neural Dynamics

- Altered Feedback: Change the visual feedback provided to the animal from the intuitive movement-intention ("MoveInt") projection to the "SepMax" projection, where the natural trajectories are curved and direction-dependent [15].

- Direct Challenge: In the SepMax projection, challenge the animal to acquire targets by following a straight-line path or even by traversing the natural neural trajectory in a time-reversed order [15] [17].

- Control and Test Blocks: Interleave ~100 trials with the altered feedback or challenge instruction with ~100 baseline trials. Provide a strong liquid reward incentive for successful performance under the challenge condition [15].

5. Data Analysis and Interpretation

- Quantitative Comparison: Compare the neural trajectories produced during the challenge trials to the natural trajectories from baseline trials.

- Key Metric: The degree of similarity between the challenge trajectories and the natural trajectories. The finding that animals are unable to produce the time-reversed or significantly altered paths, and that neural activity reverts to its natural flow field, provides empirical support that these trajectories are constrained by the underlying network connectivity [15] [17].

Protocol: Artificial Intelligence-Guided Neural Control

This protocol outlines a method for using deep reinforcement learning (RL) to achieve closed-loop control of neural firing states, which can be used to study neural function or develop therapeutic neuromodulation [20].

1. Chronic Electrode Implantation

- Animal Model: Rat.

- Neural Implant: Perform chronic electrode implantations to facilitate long-term neural stimulation and recording. This typically involves a stimulating electrode in a target region like the thalamus and a recording electrode in a downstream region like the cortex [20].

2. Stimulation and Recording Setup

- Stimulation Method: Employ Infrared Neural Stimulation (INS) for its non-invasive and precise properties. Use a continuous-wave (CW) near-infrared laser [20] [21].

- Recording Method: Perform intracellular or extracellular recordings to monitor membrane potential or spiking activity in the target population [21].

3. Deep Reinforcement Learning Integration

- Objective Definition: Define a "desired neural firing state" for the RL agent to drive the recorded population towards. This could be a specific firing rate, pattern, or latent state [20].

- Agent Training: Integrate a deep RL algorithm (e.g., a deep Q-network or policy gradient method) into the closed-loop system. The RL agent observes the current neural state and delivers parameterized INS stimuli (e.g., adjusting power, duration) to guide the population towards the desired state, learning an optimal policy through trial and error [20].

4. Execution and Validation

- Closed-Loop Operation: Run the RL-controlled INS protocol over multiple trials and sessions.

- Validation: Quantify the agent's success in driving the neural population to the target state and the stability of the achieved state over time. Analyze how the stimulation parameters evolved during learning to gain insight into effective control strategies [20].
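The closed-loop idea in steps 3 and 4 can be illustrated with a much simpler stand-in for deep RL. The sketch below uses tabular Q-learning on a simulated, noise-free response in which firing rate is assumed to scale linearly with stimulation power; the power levels, gain, target rate, and learning settings are all hypothetical and are not taken from [20].

```python
import random

POWER_LEVELS = list(range(11))   # hypothetical discrete stimulation power settings
ACTIONS = (-1, 0, 1)             # lower, hold, or raise the power by one level
TARGET_RATE = 20.0               # desired firing rate (Hz), chosen for illustration
GAIN = 4.0                       # assumed firing-rate change (Hz) per power level


def simulated_rate(power):
    """Stand-in for the recorded population response to a stimulus."""
    return GAIN * power


def train(episodes=400, steps=50, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    """Epsilon-greedy tabular Q-learning; the reward is the negative distance to the target rate."""
    rng = random.Random(seed)
    q = {(p, a): 0.0 for p in POWER_LEVELS for a in ACTIONS}
    for _ in range(episodes):
        power = rng.choice(POWER_LEVELS)
        for _ in range(steps):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)                        # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(power, a)])  # exploit
            next_power = min(max(power + action, 0), 10)
            reward = -abs(simulated_rate(next_power) - TARGET_RATE)
            best_next = max(q[(next_power, a)] for a in ACTIONS)
            q[(power, action)] += alpha * (reward + gamma * best_next - q[(power, action)])
            power = next_power
    return q


def run_greedy(q, power=0, steps=15):
    """Roll out the learned greedy policy and return the final power level."""
    for _ in range(steps):
        action = max(ACTIONS, key=lambda a: q[(power, a)])
        power = min(max(power + action, 0), 10)
    return power


q_table = train()
final_power = run_greedy(q_table)
final_rate = simulated_rate(final_power)
```

Comparing `final_rate` with `TARGET_RATE` corresponds to the validation step; inspecting how `q_table` favors particular power adjustments is a toy analogue of analyzing how stimulation parameters evolved during learning.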

Diagram 2: MARBLE Analytical Pipeline. This diagram visualizes the process of using the MARBLE framework to transform raw neural data from multiple conditions into an interpretable, shared latent representation of the underlying dynamics [7].

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials and Tools for Neural Population Dynamics Research

| Item / Reagent | Function / Application | Key Features / Considerations |

|---|---|---|

| Multi-Electrode Arrays (e.g., Utah Array, Neuropixels) [15] [19] | High-density extracellular recording from dozens to hundreds of neurons simultaneously. | Enables sampling of a population large enough for state-space analysis. Chronic implants allow for long-term studies. |

| Infrared Neural Stimulation (INS) [20] [21] | Non-invasive optical stimulation for modulating neuronal dynamics. | Offers high spatial/temporal precision. The biophysical mechanism is likely a photo-thermal effect affecting ionic channel dynamics [21]. |

| Causal Dimensionality Reduction (e.g., Gaussian Process Factor Analysis) [15] | Real-time denoising and compression of high-dimensional neural data into latent states. | Critical for brain-computer interface (BCI) applications where low-latency feedback is required [15]. |

| Deep Reinforcement Learning (RL) Agents [20] | AI-driven closed-loop control of neural activity to achieve desired firing states. | Learns optimal stimulation policies without a pre-defined model of the neural system. |

| Open-Source Protocols (e.g., RTXI software) [21] | A platform for implementing real-time, activity-dependent stimulation protocols. | Promotes reproducibility and standardization of closed-loop neuroscience experiments. |

| MARBLE Software [7] | Geometric deep learning tool for inferring interpretable latent representations of neural dynamics. | Unsupervised; provides a robust metric for comparing dynamics across conditions, sessions, and individuals. |

| CroP-LDM Software [19] | A linear dynamical model for prioritizing the learning of cross-population interactions. | Dissociates shared cross-region dynamics from within-region dynamics, aiding interpretability. |


The Neural Population Dynamics Optimization Algorithm (NPDOA) represents a novel brain-inspired meta-heuristic method that translates principles of neural computation into an effective optimization framework. As a swarm intelligence algorithm, NPDOA distinguishes itself by drawing inspiration directly from brain neuroscience, specifically mimicking the activities of interconnected neural populations during cognitive and decision-making processes [4]. This bio-inspired approach treats each potential solution as a neural state within a population, where decision variables correspond to neurons and their values represent neuronal firing rates [4]. The algorithm operates on population doctrine principles from theoretical neuroscience, simulating how neural populations transfer information through dynamic interactions [4].

Within the broader context of meta-heuristic optimization, NPDOA occupies a unique position by bridging computational neuroscience and optimization theory. Unlike traditional swarm intelligence algorithms that mimic collective animal behavior, or physics-inspired algorithms that emulate natural phenomena, NPDOA leverages the brain's recognized efficiency in processing diverse information types and making optimal decisions [4]. This framework effectively maps the challenge of balancing exploration and exploitation in optimization to the neural processes of stability and adaptability in decision-making, offering a fresh perspective on solving complex, non-linear optimization problems prevalent in scientific and engineering domains.

Core Principles and Mechanisms

The NPDOA framework implements three fundamental strategies inspired by neural population dynamics, each serving a distinct function in the optimization process.

Attractor Trending Strategy

The attractor trending strategy drives the neural states of populations to converge toward different attractors, representing promising solutions in the search space [4]. This mechanism mimics the brain's ability to settle into stable states associated with favorable decisions. In computational terms, this strategy facilitates exploitation by guiding solutions toward locally optimal regions identified during the search process. The attractors serve as reference points that gradually pull candidate solutions toward regions with improved fitness values, analogous to neural populations stabilizing around representations that correspond to optimal choices in decision-making tasks.

Coupling Disturbance Strategy

The coupling disturbance strategy introduces controlled interference within neural populations, disrupting their tendency to converge prematurely toward attractors [4]. This mechanism maintains diversity within the solution population and prevents premature convergence to local optima. Functionally, this strategy is responsible for exploration, enabling the algorithm to escape local optima and continue investigating undiscovered regions of the search space. This parallels the neural mechanisms that prevent rigid pattern formation and maintain cognitive flexibility during problem-solving, ensuring the algorithm maintains an appropriate balance between focused refinement and broad exploration.

Information Projection Strategy

The information projection strategy regulates information transmission between neural populations, dynamically adjusting the impact of the attractor trending and coupling disturbance strategies [4]. This meta-strategy ensures an adaptive balance between exploitation and exploration throughout the optimization process. By modulating the influence of the other two strategies based on search progress, the information projection mechanism embodies the principles of neural regulation and inhibitory control observed in biological neural networks. This strategy enables the framework to autonomously adjust its search characteristics, intensifying exploitation when approaching promising regions while amplifying exploration when search stagnation is detected.
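To show how the three strategies could combine in a single update, here is a minimal sketch. It is not the published NPDOA update rule: the linear schedule in `projection_weight`, the use of one randomly chosen partner state for the coupling term, and all function names are assumptions made for illustration.

```python
import random


def projection_weight(iteration, max_iterations):
    """Hypothetical information-projection schedule: 0 favors exploration, 1 favors exploitation."""
    return iteration / max_iterations


def update_state(state, attractor, partner, iteration, max_iterations, rng):
    """Return a new neural state from one blended trending/disturbance step."""
    w = projection_weight(iteration, max_iterations)
    new_state = []
    for x, a, p in zip(state, attractor, partner):
        trend = rng.random() * (a - x)                   # attractor trending (exploitation)
        disturbance = rng.uniform(-1.0, 1.0) * (p - x)   # coupling disturbance (exploration)
        new_state.append(x + w * trend + (1.0 - w) * disturbance)
    return new_state


rng = random.Random(0)
late_step = update_state([0.0, 0.0], [1.0, 1.0], [5.0, -5.0], 10, 10, rng)
```

With this schedule, early iterations are dominated by the coupling term and late iterations by the pull toward the attractor; a feedback-driven weight that reacts to stagnation would be closer to the adaptive behavior described above.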

Computational Implementation Protocols

Algorithm Initialization Protocol

Purpose: To establish the initial neural population configuration for optimization.

Procedure:

- Parameter Setup: Define population size (N), problem dimensionality (D), and maximum iterations (T_max).

- Neural Population Generation: Initialize N neural states (solutions) with D dimensions (neurons), where each dimension represents a firing rate within the specified bounds.

- Fitness Evaluation: Compute the objective function value for each initial neural state.

- Strategy Parameter Initialization: Set initial weights for attractor trending, coupling disturbance, and information projection strategies.

Experimental Considerations:

- Population size typically ranges from 50 to 200 individuals, depending on problem complexity.

- Initialization should cover the search space uniformly through Latin Hypercube Sampling or similar space-filling techniques.

- Computational resources should be allocated for parallel fitness evaluation where possible.
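The space-filling initialization mentioned above can be sketched with a plain Latin Hypercube sampler. The function name, the example bounds, and the seed are illustrative assumptions; a library sampler could be substituted.

```python
import random


def latin_hypercube(n_states, bounds, rng):
    """Return n_states points in which every dimension has exactly one sample per stratum."""
    columns = []
    for low, high in bounds:
        # One random point inside each of n_states equal-width strata, in shuffled order.
        strata = [(i + rng.random()) / n_states for i in range(n_states)]
        rng.shuffle(strata)
        columns.append([low + u * (high - low) for u in strata])
    # Transpose the per-dimension columns into per-state rows.
    return [list(point) for point in zip(*columns)]


rng = random.Random(42)
# Example: N = 50 neural states with D = 3 firing-rate dimensions, each bounded in [-5, 5].
population = latin_hypercube(50, [(-5.0, 5.0)] * 3, rng)
```

Compared with independent uniform sampling, this guarantees that each variable's range is covered evenly even for small populations.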

Main Optimization Loop Protocol

Purpose: To execute the core NPDOA optimization process incorporating brain-inspired dynamics.

Procedure:

- Attractor Identification: Identify promising attractors from the current population based on fitness values and spatial distribution.

- Neural State Update:

  - Apply attractor trending: Guide neural states toward identified attractors.

  - Apply coupling disturbance: Introduce stochastic perturbations to neural states.

  - Regulate updates via information projection: Adjust the magnitude of trending and disturbance influences.

- Boundary Handling: Ensure updated neural states remain within feasible search boundaries.

- Fitness Re-evaluation: Compute objective function values for updated neural states.

- Population Archive Update: Maintain records of best-performing neural states and their trajectories.

Experimental Considerations:

- Iteration count should be determined based on problem complexity and convergence behavior.

- Implement termination criteria such as stability thresholds or improvement plateaus.

- Maintain detailed logging of strategy applications and their effects on search performance.
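An improvement-plateau criterion of the kind mentioned above might look like the following sketch, which assumes a minimization problem and a recorded history of best-so-far fitness values; the window length and tolerance are arbitrary defaults.

```python
def has_plateaued(best_history, window=20, tolerance=1e-8):
    """Return True when the best fitness improved by less than `tolerance`
    over the last `window` iterations (minimization assumed)."""
    if len(best_history) <= window:
        return False  # not enough history to judge
    improvement = best_history[-window - 1] - best_history[-1]
    return improvement < tolerance
```

Such a check would typically be combined with a hard cap on iterations so that the loop still terminates on problems where small improvements continue indefinitely.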

Enhanced Variant: INPDOA Implementation Protocol

Purpose: To implement the Improved Neural Population Dynamics Optimization Algorithm (INPDOA) for enhanced performance [22].

Procedure:

- Base NPDOA Execution: Follow standard NPDOA initialization and main loop protocols.

- Dynamic Parameter Adaptation: Implement feedback mechanisms to dynamically adjust strategy parameters based on search progress.

- Elite Preservation: Maintain and utilize high-performing neural states across generations.

- Local Refinement: Apply intensified search around promising regions identified during the global search phase.

Experimental Considerations:

- INPDOA has demonstrated superior performance in automated machine learning applications for medical prognosis [22].

- Validation should include comparative testing against standard NPDOA and other meta-heuristic algorithms.

- Implementation can be tailored to specific application domains through domain-specific modifications.

Performance Benchmarking and Quantitative Analysis

Algorithm Performance Comparison

Table 1: Benchmark Performance of NPDOA Against Established Meta-heuristic Algorithms

| Algorithm | Benchmark Problems | Convergence Accuracy | Computational Efficiency | Application Performance |

|---|---|---|---|---|

| NPDOA | 59 test problems | Superior on 78% of problems | Moderate computational overhead | Excellent in engineering design problems [4] |

| Genetic Algorithm (GA) | Standard test suite | Moderate convergence | High computational cost | Good for discrete optimization [4] |

| Particle Swarm Optimization (PSO) | Classical benchmarks | Premature convergence in complex landscapes | Low computational complexity | Effective for continuous optimization [4] |

| Whale Optimization Algorithm (WOA) | CEC benchmarks | Variable performance | Moderate efficiency | Application-specific effectiveness [4] |

| INPDOA (Enhanced) | 12 CEC2022 functions, medical prognosis | AUC: 0.867, R²: 0.862 | Improved convergence speed | Superior in AutoML for surgical outcomes [22] |

Application-Specific Performance Metrics

Table 2: NPDOA Performance in Practical Applications

| Application Domain | Performance Metrics | Comparison to Alternatives | Key Advantages |

|---|---|---|---|

| Medical Prognosis Modeling [22] | Test-set AUC: 0.867, R²: 0.862 | Net benefit improvement over conventional methods | Handles high-parameter spaces effectively |

| Engineering Design Problems [4] | Successful solution of cantilever beam, pressure vessel, welded beam designs | Competitive with state-of-the-art algorithms | Balanced exploration-exploitation |

| Neural Data Modeling [23] | >50% improvement in behavioral decoding, >15% improvement in neuronal identity prediction | Outperforms specialized neural models | Model-agnostic integration capability |

Implementation in Drug Discovery and Development

Drug Development Workflow Integration

The NPDOA framework can be strategically integrated into various stages of the drug development pipeline, enhancing decision-making and optimization capabilities. Table 3 outlines the key integration points and potential applications.

Table 3: NPDOA Applications in Drug Development Pipeline

| Development Stage | Application of NPDOA | Expected Benefits | Implementation Considerations |

|---|---|---|---|

| Target Identification [24] | Optimization of multi-parameter target validation | Improved target prioritization | Integration with bioinformatics data mining approaches |

| Hit Identification [24] | High-throughput screening data analysis | Enhanced hit series identification | Processing of compound library screens |

| Lead Optimization [24] | SAR investigations and compound refinement | More efficient lead development | Handling of complex chemical spaces |

| Preclinical Development [25] | Experimental design optimization | Reduced animal use, improved study quality | Adherence to GLPs and regulatory requirements |

| Clinical Trial Design [26] | Master protocol optimization for umbrella and platform trials | Accelerated drug development timelines | Coordination with FDA guidelines on master protocols |

AutoML-Enhanced Prognostic Modeling Protocol

Purpose: To implement INPDOA for automated machine learning in medical prognostic modeling [22].

Procedure:

- Data Preparation:

  - Collect retrospective cohort data (e.g., 447 patients for ACCR prognosis [22]).

  - Integrate parameters spanning biological, surgical, and behavioral domains.

  - Address class imbalance using Synthetic Minority Oversampling Technique (SMOTE).

- AutoML Framework Setup:

  - Encode base-learner selection, feature screening, and hyperparameter optimization into a hybrid solution vector.

  - Configure model options including Logistic Regression, Support Vector Machine, XGBoost, and LightGBM.

- INPDOA Optimization:

  - Implement improved NPDOA to navigate the combined architecture-feature-parameter space.

  - Utilize dynamically weighted fitness function balancing accuracy, feature sparsity, and computational efficiency.

- Model Validation:

  - Employ k-fold cross-validation (typically 10-fold) to mitigate overfitting.

  - Validate on external cohort to assess generalizability.

  - Apply SHAP values for explainable AI and variable contribution quantification.
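The k-fold cross-validation step in the validation procedure can be sketched as an index generator. This version is a simplification: it shuffles and splits without stratifying by outcome, and the function name and seed are assumptions; the cohort size of 447 is reused from the example above only to show the fold sizes.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_indices, test_indices) pairs for k shuffled, non-overlapping folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # fold sizes differ by at most one
    for i in range(k):
        test_idx = folds[i]
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx


folds = list(k_fold_indices(447, k=10))
```

When classes are imbalanced, a stratified split is usually preferable, and any oversampling should be applied inside each training fold rather than before splitting, so that synthetic samples do not leak into the test fold.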

Experimental Considerations:

- Implementation requires integration of INPDOA with AutoML frameworks.

- Clinical deployment necessitates development of user-friendly interfaces for healthcare professionals.

- Regulatory compliance should be considered for clinical decision support systems.

Research Reagent Solutions

Table 4: Essential Research Materials for NPDOA Implementation and Evaluation

| Research Reagent | Function in NPDOA Research | Implementation Notes |

|---|---|---|

| Benchmark Problem Suites | Algorithm validation and performance comparison | Utilize CEC2022 functions [22] and classical engineering problems [4] |

| Neural Recording Datasets | Biological validation and specialized application | Implement Neural Latents Benchmark'21 [23] for neural activity prediction |

| Clinical Datasets | Real-world application testing | Employ retrospective medical cohorts [22] with 20+ parameters across multiple domains |

| AutoML Frameworks | Automated machine learning integration | Interface with TPOT, Auto-Sklearn, or custom frameworks [22] |

| Visualization Systems | Result interpretation and explanation | Develop clinical decision support systems using platforms like MATLAB [22] |

Visual Framework and Workflows

NPDOA Theoretical Framework

Drug Development Application Workflow

The field of meta-heuristic optimization is continuously evolving, drawing inspiration from a diverse array of natural, physical, and mathematical phenomena to address complex nonlinear problems. Traditional taxonomy classifies these algorithms into several categories: evolutionary algorithms mimicking biological evolution (e.g., Genetic Algorithm), swarm intelligence algorithms inspired by collective animal behavior (e.g., Particle Swarm Optimization), physical-inspired algorithms based on physical laws (e.g., Simulated Annealing), and mathematics-inspired algorithms derived from mathematical formulations [4]. Each category possesses distinct strengths and weaknesses in balancing the critical characteristics of exploration (identifying promising areas) and exploitation (searching promising areas thoroughly) [4].

The Neural Population Dynamics Optimization Algorithm (NPDOA) represents a paradigm shift, establishing a novel class of brain-inspired meta-heuristic methods [4] [27]. Unlike traditional approaches, NPDOA is grounded in theoretical neuroscience, specifically simulating the activities of interconnected neural populations in the brain during cognition and decision-making processes [4] [10]. This article positions NPDOA within the existing meta-heuristic landscape, delineating its unique mechanisms and advantages through comparative analysis and experimental validation, with particular emphasis on its growing applicability in scientific and medical research, including drug development.

Algorithmic Mechanics: NPDOA vs. Conventional Meta-heuristics

Fundamental Inspiration and Mechanism

The conceptual foundation of NPDOA diverges significantly from conventional meta-heuristics, as summarized in the table below.

Table 1: Comparison of Algorithmic Inspirations and Representations

| Algorithm Category | Core Inspiration | Solution Representation | Population Interaction |

|---|---|---|---|

| NPDOA | Neural population dynamics in brain neuroscience [4] [10] | Neural state of a population; variables are neuron firing rates [4] | Information projection & coupling between neural populations [4] |

| Swarm Intelligence (e.g., PSO) | Collective behavior of flocks, schools, or colonies [4] | Particle position in space [4] | Attraction to local and global best positions [4] |

| Evolutionary Algorithms (e.g., GA) | Principles of biological evolution [4] | Discrete chromosome encoding [4] | Selection, crossover, and mutation operations [4] |

| Physical-Inspired (e.g., SA) | Physical laws (e.g., thermodynamics) [4] | State of a physical system [4] | Typically lacks crossover or competitive selection [4] |

Core Operational Strategies of NPDOA

NPDOA's operational framework is governed by three novel search strategies that directly translate neural activities into optimization mechanics [4] [27]:

- Attractor Trending Strategy: This strategy drives the neural states of populations towards different attractors, representing favorable decisions or optimal solutions. It is the primary mechanism responsible for exploitation, ensuring convergence toward stable and promising neural states [4] [27].

- Coupling Disturbance Strategy: This mechanism introduces interference between neural populations, disrupting their convergence toward attractors. This disruption is crucial for exploration, helping the algorithm escape local optima and search more broadly within the solution space [4] [27].

- Information Projection Strategy: This strategy regulates the information transmission between neural populations. It acts as a control system, dynamically adjusting the influence of the attractor trending and coupling disturbance strategies, thereby enabling a smooth transition from exploration to exploitation throughout the optimization process [4] [27].

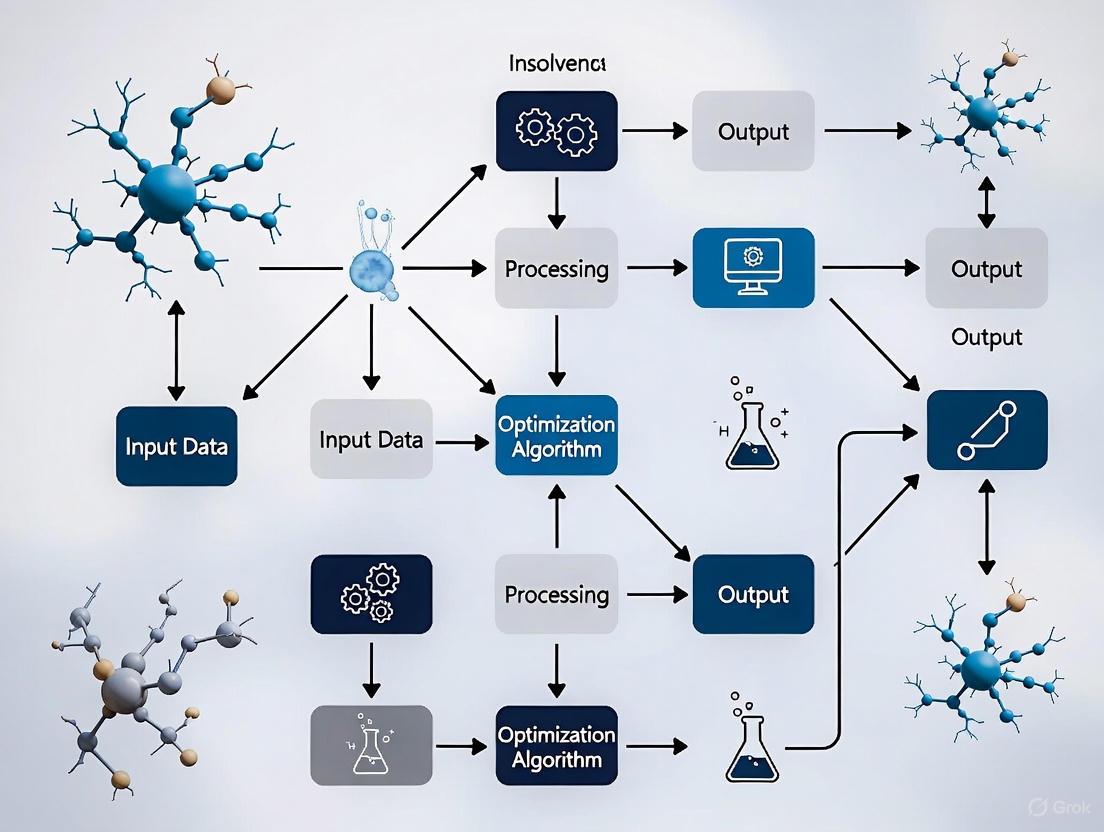

The following diagram illustrates the workflow and core interactions of these strategies within the NPDOA framework.

Quantitative Performance Benchmarking

Empirical studies validate NPDOA's competitive performance against established meta-heuristic algorithms. Systematic experiments comparing NPDOA with nine other algorithms on 59 benchmark problems and three real-world engineering problems have demonstrated its distinct advantages in addressing many single-objective optimization problems [4].

Table 2: Performance Comparison on Benchmark and Practical Problems

| Algorithm | Key Principle | Reported Strengths | Common Challenges | Performance vs. NPDOA |

|---|---|---|---|---|

| NPDOA | Brain neural population dynamics [4] | Balanced exploration/exploitation, competitive performance on complex problems [4] | --- | Reference |

| Particle Swarm Optimization (PSO) | Social behavior of bird flocking [4] | Easy implementation, simple structures [4] | Falls into local optima, slow convergence [4] | Outperformed by NPDOA [4] |

| Genetic Algorithm (GA) | Natural selection and genetics [4] | Broad applicability, robust [4] | Premature convergence, challenging problem representation [4] | Outperformed by NPDOA [4] |

| Whale Optimization Algorithm (WOA) | Bubble-net hunting of humpback whales [4] | Higher performance than classical algorithms [4] | High computational complexity, improper balance [4] | Outperformed by NPDOA [4] |

| Improved NPDOA (INPDOA) | Enhanced NPDOA for AutoML [22] | Superior in medical prognostic prediction (AUC: 0.867) [22] | --- | Enhanced version for specific applications [22] |

The robustness of the brain-inspired approach is further evidenced by the development of an Improved NPDOA (INPDOA) for Automated Machine Learning (AutoML) in a medical context. When applied to prognostic prediction for autologous costal cartilage rhinoplasty, an INPDOA-enhanced AutoML model significantly outperformed traditional methods, achieving an area under the curve (AUC) of 0.867 for predicting 1-month complications and an R² of 0.862 for predicting 1-year patient-reported outcomes [22]. This demonstrates the algorithm's potential for optimization in high-stakes, complex real-world problems.

Application Notes & Experimental Protocols

Protocol 1: Implementing NPDOA for Numerical Optimization

This protocol outlines the steps for applying the standard NPDOA to solve numerical benchmark problems, as detailed in its foundational literature [4].

1. Problem Formulation:

- Define the objective function f(x) to be minimized or maximized.

- Specify the D-dimensional search space Ω, including the lower and upper bounds for each decision variable [4].

2. Algorithm Initialization:

- Set Population Size (P): Choose the number of neural populations (typically corresponding to the number of candidate solutions).

- Initialize Neural States: Randomly generate initial neural states within the search space bounds. Each solution x = (x₁, x₂, …, x_D) is treated as a neural state, where each variable x_i represents the firing rate of a neuron [4].

- Configure Parameters: Set parameters for the three core strategies (e.g., strength of attractor trending, magnitude of coupling disturbance, and weights for information projection).

3. Iterative Optimization Loop:

- Evaluation: Compute the fitness f(x) for each neural population's current state.

- Strategy Application:

  - Attractor Trending: Identify promising states (attractors) and guide other populations toward them to refine solutions (exploitation).

  - Coupling Disturbance: Apply stochastic disturbances between populations to promote diversity and prevent premature convergence (exploration).

  - Information Projection: Dynamically adjust the influence of the above two strategies based on the current best solution and iteration progress [4] [27].

- State Update: Update the neural state of each population based on the combined effect of the three strategies.

- Termination Check: Repeat until a stopping criterion is met (e.g., maximum iterations, convergence threshold).

4. Solution Extraction:

- The neural state with the best fitness value across all iterations is selected as the optimal solution [4].
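The four steps of Protocol 1 can be assembled into a compact, runnable sketch. It should be read as an NPDOA-like illustration rather than the reference implementation from [4]: it assumes a single attractor (the best state found so far), a linear information-projection schedule, random uniform initialization, and clipping for boundary handling, and it is demonstrated on the sphere function as a stand-in benchmark.

```python
import random


def sphere(x):
    """Simple benchmark objective with its minimum of 0 at the origin."""
    return sum(v * v for v in x)


def clip(value, low, high):
    return max(low, min(high, value))


def npdoa_like_minimize(objective, bounds, pop_size=30, max_iter=200, seed=0):
    rng = random.Random(seed)
    # Step 2: initialize neural states (one per population) and evaluate them.
    states = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    fitness = [objective(s) for s in states]
    best_idx = min(range(pop_size), key=fitness.__getitem__)
    best_x, best_f = list(states[best_idx]), fitness[best_idx]
    history = [best_f]
    # Step 3: iterative optimization loop.
    for t in range(1, max_iter + 1):
        w = t / max_iter  # assumed projection schedule: exploration first, exploitation later
        for i in range(pop_size):
            partner = states[rng.randrange(pop_size)]
            candidate = []
            for d, (lo, hi) in enumerate(bounds):
                x = states[i][d]
                trend = rng.random() * (best_x[d] - x)               # attractor trending
                disturbance = rng.uniform(-1.0, 1.0) * (partner[d] - x)  # coupling disturbance
                candidate.append(clip(x + w * trend + (1.0 - w) * disturbance, lo, hi))
            states[i] = candidate
            fitness[i] = objective(candidate)
            if fitness[i] < best_f:  # keep the best state seen across all iterations
                best_x, best_f = list(candidate), fitness[i]
        history.append(best_f)
    # Step 4: return the best neural state found.
    return best_x, best_f, history


best_x, best_f, history = npdoa_like_minimize(sphere, [(-5.0, 5.0)] * 5)
```

The returned `history` records the best fitness after each iteration, which makes it straightforward to plot convergence or to apply a stopping rule other than the fixed iteration budget used here.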

Protocol 2: INPDOA for AutoML in Medical Prognostics

This protocol is adapted from a study that successfully employed an Improved NPDOA (INPDOA) to optimize an AutoML pipeline for prognostic prediction in surgery, a methodology highly relevant to drug development [22].

1. Data Preparation and Preprocessing:

- Cohort Definition: Assemble a retrospective cohort with complete follow-up data. Example: 447 patients who underwent a specific surgical procedure [22].

- Feature Collection: Integrate multimodal parameters. Example: 20+ parameters spanning demographic (age, BMI), preoperative (clinical scores), intraoperative (surgical duration), and postoperative behavioral factors (smoking, antibiotic use) [22].

- Outcome Definition: Define clear short-term and long-term clinical endpoints. Example: 1-month composite complication endpoint (infection, hematoma) and 1-year patient-reported outcome score (e.g., ROE score) [22].

- Data Splitting: Partition data into training, validation, and held-out test sets using stratified sampling to preserve outcome distribution. Address class imbalance (e.g., using SMOTE on the training set only) [22].

2. INPDOA-AutoML Optimization Framework:

- Solution Encoding: Formulate a hybrid solution vector x for the AutoML configuration: x = (k | δ₁, δ₂, …, δ_m | λ₁, λ₂, …, λ_n), where k is the base-learner type (e.g., 1=LR, 2=SVM, 3=XGBoost, 4=LightGBM), δ_i are binary feature selection indicators, and λ_i are the hyperparameters for the chosen model [22].

- Fitness Evaluation: Define a dynamic fitness function f(x) that balances:

- Predictive accuracy from cross-validation (ACC_CV).

- Feature sparsity (ℓ₀-norm of the feature selection vector).

- Computational efficiency (often an exponential decay term with iterations T) [22].

- INPDOA Search: Use the INPDOA to iteratively search the space of model architectures, feature subsets, and hyperparameters. The algorithm's balanced dynamics effectively navigate this complex, mixed-variable optimization problem [22].

3. Model Validation and Interpretation:

- Performance Assessment: Evaluate the final model on the held-out test set using relevant metrics (e.g., AUC, R², accuracy).

- Model Interpretation: Employ explainable AI techniques like SHAP (SHapley Additive exPlanations) to quantify variable contributions and validate clinical plausibility [22].

4. System Deployment:

- CDSS Development: Integrate the optimized model into a Clinical Decision Support System (CDSS) or similar platform for real-time prediction and visualization, as demonstrated using MATLAB [22].
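The solution encoding and fitness function from step 2 can be made concrete with a short Python sketch. The helper names `decode` and `fitness`, the three weights, and the exact form of the decay term are assumptions for illustration; the cited study [22] may combine the terms differently. The cross-validated accuracy is passed in as a number so the sketch stays independent of any particular ML library.

```python
import math

# Base-learner codes as listed in the protocol text.
LEARNERS = {1: "LR", 2: "SVM", 3: "XGBoost", 4: "LightGBM"}


def decode(x, n_features):
    """Split x = (k | delta_1..delta_m | lambda_1..lambda_n) into its three parts."""
    k = int(x[0])
    delta = list(x[1:1 + n_features])
    lam = list(x[1 + n_features:])
    if k not in LEARNERS:
        raise ValueError(f"unknown base-learner code: {k}")
    if len(delta) != n_features or any(d not in (0, 1) for d in delta):
        raise ValueError("feature indicators must be n_features binary values")
    return LEARNERS[k], [int(d) for d in delta], lam


def fitness(acc_cv, delta, t, t_max, w_acc=0.7, w_sparse=0.2, w_time=0.1):
    """Higher is better. Weights and decay form are illustrative assumptions."""
    sparsity = 1.0 - sum(delta) / len(delta)  # 1 minus the normalized l0-norm
    decay = math.exp(-t / t_max)              # exponential decay with iteration t
    return w_acc * acc_cv + w_sparse * sparsity + w_time * decay


# Hypothetical configuration: XGBoost, features 1 and 3 selected, two hyperparameters.
x = [3, 1, 0, 1, 0, 0.1, 6]
learner, delta, lam = decode(x, n_features=4)
score = fitness(acc_cv=0.9, delta=delta, t=0, t_max=100)
```

With these assumed weights, a configuration that reaches the same accuracy with fewer selected features receives a higher score, which is the sparsity pressure the protocol describes.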

The following workflow diagram maps this process from data to deployable model.

The Scientist's Toolkit: Key Research Reagents & Materials

Table 3: Essential Resources for NPDOA Research and Application

| Category / Item | Specification / Purpose | Exemplary Use Case |

|---|---|---|

| Computational Framework | ||

| PlatEMO v4.1+ | A MATLAB-based platform for experimental evolutionary multi-objective optimization [4]. | Running systematic comparative experiments on benchmark problems [4]. |

| Python-OpenCV | Library for computer vision tasks and image data processing [28]. | Preprocessing and feature extraction from visual data (e.g., floc images) for optimization problems [28]. |

| AutoML Software (e.g., Auto-Sklearn, TPOT) | Frameworks for automating machine learning workflow creation [22]. | Serving as the base environment for INPDOA-driven optimization of model pipelines [22]. |

| Data Resources | ||

| Benchmark Suites (CEC, etc.) | Standardized collections of optimization functions (e.g., 59 benchmark problems) [4]. | Algorithm validation and performance benchmarking against state-of-the-art methods [4]. |

| Retrospective Clinical Datasets | Curated, multimodal patient data with defined outcomes [22]. | Developing and validating prognostic models in medical research [22]. |

| Modeling & Analysis | ||

| Convolutional Neural Network (CNN) | Deep learning architecture for image recognition and classification [28]. | Integrated within optimization frameworks for processing complex image-based inputs [28]. |

| SHAP (SHapley Additive exPlanations) | A game-theoretic method for explaining model predictions [22]. | Interpreting the output of AI models optimized by NPDOA, crucial for clinical and scientific validation [22]. |

| Recurrent Neural Network (RNN) | Neural network architecture for modeling dynamical systems and sequential data [10]. | Used in task-based modeling to identify dynamical systems capable of transforming inputs to outputs [10]. |


The Neural Population Dynamics Optimization Algorithm (NPDOA) establishes brain-inspired computation as a distinct category within the meta-heuristic landscape. It differentiates itself from swarm intelligence and other paradigms through its inspiration (the computational principles of interconnected neural populations in the brain) and its operational strategies of attractor trending, coupling disturbance, and information projection. This foundation allows NPDOA to balance exploration and exploitation dynamically, a key challenge for all optimization algorithms.

Evidence from systematic benchmarking and a pioneering medical application demonstrates that NPDOA not only competes favorably with established algorithms but also offers a robust framework for enhancing real-world, complex optimization tasks, such as Automated Machine Learning in prognostic modeling. The algorithm's successful application in medical research underscores its potential for drug development challenges, including target validation, therapy personalization, and outcome prediction. Future research directions will likely focus on extending NPDOA to multi-objective and constrained optimization problems, further refining its strategies, and continuing to validate its efficacy across an expanding range of scientific and industrial domains.

Implementing NPDOA: Core Strategies and Applications in Drug Discovery

The Neural Population Dynamics Optimization Algorithm (NPDOA) represents a significant paradigm shift in meta-heuristic optimization, drawing direct inspiration from the computational principles of brain neuroscience. As the first swarm intelligence optimization algorithm that explicitly utilizes human brain activity patterns, NPDOA treats each solution as a neural state within a population, where decision variables correspond to neurons and their values represent neuronal firing rates [4]. This bio-inspired approach simulates the activities of interconnected neural populations during cognitive and decision-making processes, implementing three fundamental dynamics strategies that work in concert: attractor trending, coupling disturbance, and information projection [4]. The algorithm's architecture is particularly designed to address the persistent challenges in meta-heuristic optimization, including premature convergence, local optima entrapment, and the critical balance between exploration and exploitation [4]. By mimicking the brain's remarkable ability to process diverse information types and make optimal decisions across different situations, NPDOA offers a novel framework for solving complex, nonlinear optimization problems that commonly arise in engineering and scientific domains.

The Core Mechanisms of NPDOA

Attractor Trending Strategy

The attractor trending strategy drives the neural states of populations to converge toward different attractors, approaching stable neural states associated with favorable decisions [4]. This mechanism is primarily responsible for the exploitation phase of the algorithm, enabling refined search in promising regions identified during exploration.

- Biological Basis: In neural population dynamics, attractors represent stable states toward which neural activity naturally evolves. These correspond to optimal or near-optimal solutions in the computational framework.

- Computational Implementation: The strategy guides solutions toward attractor points that embody the best solutions found during the search process, mimicking how neural populations in the brain converge to stable states during decision-making [4].

- Role in Optimization: By promoting convergence toward these favorable states, attractor trending facilitates local refinement and improves solution quality in identified promising regions.
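As a minimal sketch of the idea, attractor trending can be pictured as a contraction toward a fixed point. The function name `attractor_trend` and the constant `rate` are assumptions for illustration; the source does not give the exact update equation.

```python
def attractor_trend(state, attractor, rate=0.5):
    """Move a neural state a fraction `rate` of the way toward the attractor."""
    return [x + rate * (a - x) for x, a in zip(state, attractor)]


state = [4.0, -2.0]
attractor = [0.0, 0.0]  # assumed best-known solution
for _ in range(3):
    state = attractor_trend(state, attractor)
# state is now [0.5, -0.25]: the distance to the attractor halves at every step
```

Any `rate` between 0 and 1 shrinks the distance geometrically, which is why this strategy refines solutions but cannot, on its own, leave the basin of the current attractor.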

Coupling Disturbance Strategy

The coupling disturbance strategy introduces deliberate interference within neural populations, disrupting their tendency to converge uniformly toward attractors [4]. This mechanism drives the exploration phase of the algorithm, maintaining population diversity.

- Biological Basis: This strategy mimics the natural perturbations and competitive interactions between different neural populations in the brain, preventing premature stabilization on suboptimal decisions.

- Computational Implementation: The strategy creates controlled disturbances between coupled neural populations, preventing premature convergence and maintaining diversity within the solution population [4].

- Role in Optimization: By disrupting convergence tendencies, coupling disturbance enables the algorithm to escape local optima and explore new regions of the search space, essential for locating global optima.
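One way to picture coupling disturbance is as a random push along the difference between two populations. The sketch below is an assumption-laden illustration (the name `coupling_disturbance`, the uniform random factor, and the `strength` parameter are hypothetical), not the published operator.

```python
import random


def coupling_disturbance(states, strength, rng):
    """Perturb each state along its difference to a randomly chosen other population.

    Requires at least two populations; returns new lists and leaves `states` unchanged.
    """
    disturbed = []
    for i, s in enumerate(states):
        j = rng.choice([k for k in range(len(states)) if k != i])
        disturbed.append([x + strength * rng.uniform(-1.0, 1.0) * (y - x)
                          for x, y in zip(s, states[j])])
    return disturbed


states = [[0.0, 0.0], [1.0, 1.0], [2.0, 4.0]]
disturbed = coupling_disturbance(states, strength=1.0, rng=random.Random(1))
```

Because the push is scaled by the distance between populations, the disturbance is large while the populations are spread out and vanishes once they have all collapsed onto the same point.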

Information Projection Strategy

The information projection strategy regulates information transmission between neural populations, strategically controlling the impact of both attractor trending and coupling disturbance on neural states [4]. This mechanism balances exploration and exploitation.

- Biological Basis: Reflects the brain's ability to modulate information flow between different neural assemblies through mechanisms like synaptic plasticity and gain control.

- Computational Implementation: This strategy projects information between populations, dynamically adjusting the influence of exploitation (attractor trending) and exploration (coupling disturbance) based on search progress [4].

- Role in Optimization: By regulating the interaction between the other two strategies, information projection enables adaptive balancing between intensive local search and broad global exploration throughout the optimization process.
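The balancing role of information projection can be illustrated with a simple weighting schedule. The linear schedule and the names `projection_weights` and `project` are assumptions chosen for clarity; the source states only that the influence of the two strategies is adjusted based on search progress.

```python
def projection_weights(t, t_max):
    """Return (w_attractor, w_coupling) for iteration t under an assumed linear schedule."""
    progress = min(max(t / t_max, 0.0), 1.0)
    return progress, 1.0 - progress


def project(state, trend_step, disturb_step, t, t_max):
    """Combine precomputed trending and disturbance steps using the current weights."""
    w_a, w_c = projection_weights(t, t_max)
    return [x + w_a * a + w_c * c
            for x, a, c in zip(state, trend_step, disturb_step)]


early = projection_weights(0, 100)    # (0.0, 1.0): pure exploration
late = projection_weights(100, 100)   # (1.0, 0.0): pure exploitation
mid_state = project([1.0], [2.0], [-4.0], t=50, t_max=100)  # [0.0]
```

Other schedules, such as ones driven by recent improvement in the best fitness, would fit the same description; the linear form is used here only because it is the easiest to inspect.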

Integrated Algorithm Architecture

The three core strategies of NPDOA interact within a unified architecture as shown in the diagram below:

Diagram 1: NPDOA Core Architecture - This diagram illustrates how the three core strategies of NPDOA interact within the algorithm's architecture, showing the flow from initial populations through the competing strategies of exploitation and exploration, balanced by information projection to produce optimized solutions.

Quantitative Performance Analysis

Benchmark Testing Results

The NPDOA algorithm has been rigorously evaluated against nine other meta-heuristic algorithms using 59 benchmark problems and three real-world engineering optimization problems [4]. The comprehensive testing demonstrates NPDOA's competitive performance across diverse problem types and complexity levels.

Table 1: Performance Comparison of NPDOA Against Other Meta-heuristic Algorithms

| Algorithm Category | Representative Algorithms | Key Strengths | Common Limitations | NPDOA Performance |

|---|---|---|---|---|

| Evolutionary Algorithms | Genetic Algorithm (GA), Differential Evolution (DE) | Effective global search, parallelizable | Premature convergence, parameter sensitivity | Superior balance, reduced premature convergence |

| Swarm Intelligence | PSO, ABC, WOA, SSA | Inspiration from natural behaviors | Local optima entrapment, slow convergence | Enhanced exploration, better convergence |

| Physics-inspired | GSA, CSS, SA | Unique optimization perspectives | Trapping in local optima | Improved local optima avoidance |

| Mathematics-inspired | SCA, GBO, PSA | Mathematical formulation basis | Poor exploitation-exploration balance | Better adaptive balance |

Application in Engineering Domains

NPDOA has been validated on practical engineering optimization problems, demonstrating particular effectiveness in scenarios requiring robust optimization across complex, nonlinear landscapes with multiple constraints [4]. The algorithm's brain-inspired dynamics provide distinct advantages for pharmaceutical applications, including drug formulation optimization and pharmacokinetic modeling [29].

Table 2: NPDOA Performance in Pharmaceutical Applications

| Application Area | Traditional Approach Limitations | NPDOA Advantages | Reported Improvement |

|---|---|---|---|

| Drug Release Prediction | Linear models fail to capture complex interactions | Models nonlinear relationships without predefined equations | Higher accuracy (R² > 0.94) with lower RMSE |

| Formulation Optimization | Trial-and-error and DoE approaches are time-consuming | Captures nonlinear relationships between CMAs and outcomes | Superior prediction of encapsulation efficiency, particle size |

| IVIVC Establishment | Traditional linear IVIVC models have limited accuracy | Captures complex nonlinear in vitro-in vivo relationships | Correlation above 0.91, near-zero prediction errors |

| Nanocarrier Design | Parameter optimization challenging for complex systems | Optimizes multiple parameters simultaneously (sonication, composition) | Ideal size and performance characteristics |

Experimental Protocols and Methodologies

Standard Implementation Protocol for NPDOA

Purpose: To provide a standardized methodology for implementing NPDOA for optimization problems, particularly in pharmaceutical and engineering domains.

Materials and Environment:

- Computational Environment: MATLAB, Python, or similar computational platform

- Hardware Requirements: Standard computational workstation (e.g., Intel Core i7 CPU, 32 GB RAM as used in validation studies) [4]

- Benchmarking Tools: PlatEMO v4.1 or similar optimization testing framework [4]

Procedure:

- Problem Formulation: