Neural Data Preprocessing Best Practices 2025: A Comprehensive Guide for Biomedical Researchers

Abstract

This article provides a complete framework for neural data preprocessing tailored to researchers and drug development professionals. Covering foundational concepts to advanced validation techniques, we explore critical methodologies for filtering, normalization, and feature extraction specific to neural signals. The guide addresses common troubleshooting scenarios and presents rigorous validation frameworks to ensure reproducible, high-quality data for machine learning applications in biomedical research. Practical code examples and real-world case studies illustrate how proper preprocessing significantly enhances model performance in clinical neuroscience applications.

Understanding Neural Data: Fundamentals for Effective Preprocessing

The Critical Role of Data Quality in Neural Network Performance

For researchers and scientists in drug development, the performance of a neural network is not merely a function of its architecture but is fundamentally constrained by the quality of the data it is trained on. The adage "garbage in, garbage out" is acutely relevant in scientific computing, where models trained on poor-quality data can produce unreliable predictions, jeopardizing experimental validity and downstream applications [1]. A data-centric approach, which prioritizes improving the quality and consistency of datasets over solely refining model algorithms, is increasingly recognized as a critical methodology for building robust and generalizable models [2]. This section outlines the specific data quality challenges, provides troubleshooting guides for common issues, and details experimental protocols to ensure your neural network projects are built on a reliable data foundation.

Understanding Data Quality Dimensions and Their Impact

Data quality can be decomposed into several key dimensions, each of which directly impacts model performance. The table below summarizes these dimensions, their descriptions, and the specific risks they pose to neural network training and evaluation.

Table: Key Data Quality Dimensions and Their Impact on Neural Networks

| Dimension | Description | Impact of Poor Quality on Neural Network Performance |
|---|---|---|
| Completeness | The degree to which data is present and non-missing [3]. | Incomplete data leads to biased parameter estimates and poor generalization, as the model cannot learn from absent information [4] [5]. |
| Accuracy | The degree to which data is correct, reliable, and free from errors [3]. | Inaccurate data causes the model to learn incorrect patterns, leading to fundamentally flawed predictions and unreliable insights [1] [5]. |
| Consistency | The degree to which data is uniform and non-contradictory across systems [3]. | Inconsistent data introduces noise and conflicting signals during training, preventing the model from converging to a stable solution [4]. |
| Validity | The degree to which data is relevant and fit for the specific purpose of the analysis [1]. | Invalid or irrelevant features can distort the analysis, causing the model to focus on spurious correlations [1]. |
| Timeliness | The degree to which data is current and up-to-date [3]. | Outdated data can render a model ineffective for predicting current or future states, a critical flaw in fast-moving research [3]. |

Troubleshooting Guide: FAQs on Data Quality Issues

FAQ 1: Why does my neural network perform well on training data but fail in production or on real-world test data?

Answer: This is a classic sign of overfitting or data drift, often rooted in data quality issues during the training phase.

- Potential Causes & Solutions:

- Non-Representative Training Data: Your training set may not capture the full variability and distribution of real-world data.

- Solution: Conduct extensive data exploration and employ stratified sampling during dataset splitting to ensure your training, validation, and test sets are representative. Continuously monitor for data drift in production [5].

- Inconsistent Preprocessing Between Training and Serving: The pipeline used to preprocess live data differs from the one used on training data.

- Data Leakage: Information from the test or validation set inadvertently influences the training process.

- Solution: Ensure that any preprocessing steps (like imputation or scaling) are fit only on the training data. Perform a thorough audit of your feature engineering to prevent the use of future information [5].
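
The leakage point above can be illustrated with a minimal sketch, assuming scikit-learn is available. The data is synthetic and the variable names are hypothetical; the only claim is that wrapping the imputer and scaler in a pipeline makes them learn their statistics from the training split alone.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic stand-in for a feature table: 200 samples, 5 features, binary label.
X = rng.normal(loc=50.0, scale=10.0, size=(200, 5))
y = (X[:, 0] + rng.normal(scale=5.0, size=200) > 50.0).astype(int)
X[rng.random(X.shape) < 0.05] = np.nan  # roughly 5% missing values

# Stratified split first, so the test set never influences preprocessing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# The pipeline fits the imputer and scaler on the training data only,
# then reuses those fitted statistics when transforming the test data.
model = make_pipeline(
    SimpleImputer(strategy="median"),
    StandardScaler(),
    LogisticRegression(),
)
model.fit(X_train, y_train)
test_accuracy = model.score(X_test, y_test)

# For comparison: per-feature medians computed from the training split alone.
train_medians = np.nanmedian(X_train, axis=0)
```

Serializing the fitted pipeline and reusing it at serving time is one way to keep training and production preprocessing identical.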

FAQ 2: How can I systematically detect and correct mislabeled instances in my dataset?

Answer: Noisy labels are a pervasive problem, particularly in large, manually annotated datasets common in biomedical research (e.g., medical image classification).

- Methodology: Confident Learning [2]:

- Cross-Validation Pruning: Train your model using cross-validation and examine the out-of-fold predictions for each sample.

- Identify Noisy Candidates: Flag instances where the model's predicted probability for the assigned label is consistently below a defined, optimized threshold across validation folds. These are potential labeling errors.

- Human-in-the-Loop Correction: The flagged instances are then sent for re-evaluation and correction by a domain expert (e.g., a research scientist). This creates a feedback loop for continuous data improvement.

- Tools: Python libraries like cleanlab implement confident learning techniques to facilitate this process.
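
The flagging steps above can be sketched with scikit-learn alone. This is a simplified illustration of the idea, not the cleanlab implementation: the data is synthetic, the 20 flipped labels are simulated, and the per-class threshold (mean predicted probability among samples carrying that label) is one common choice assumed here.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict

# Two well-separated synthetic classes (hypothetical stand-in for real data).
X, y_true = make_blobs(
    n_samples=400, centers=[[-5.0, -5.0], [5.0, 5.0]],
    cluster_std=1.0, random_state=0,
)

# Simulate annotation errors by flipping 20 labels.
rng = np.random.default_rng(0)
flipped_idx = rng.choice(len(y_true), size=20, replace=False)
y_noisy = y_true.copy()
y_noisy[flipped_idx] = 1 - y_noisy[flipped_idx]

# Out-of-fold predicted probabilities: each sample is scored by a model
# that never saw it during training.
pred_probs = cross_val_predict(
    LogisticRegression(), X, y_noisy, cv=5, method="predict_proba"
)

# Self-confidence: predicted probability of the label each sample was given.
self_confidence = pred_probs[np.arange(len(y_noisy)), y_noisy]

# Per-class threshold: mean self-confidence among samples carrying that label.
thresholds = np.array(
    [self_confidence[y_noisy == k].mean() for k in np.unique(y_noisy)]
)

# Flag samples whose given label scores below its class threshold and
# disagrees with the model's most likely class.
flagged_idx = np.flatnonzero(
    (self_confidence < thresholds[y_noisy])
    & (pred_probs.argmax(axis=1) != y_noisy)
)
```

The flagged indices are candidates for expert review rather than automatic relabeling, in line with the human-in-the-loop step.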

FAQ 3: What are the best practices for handling missing values in patient data without introducing bias?

Answer: Simply deleting rows with missing values can lead to significant data loss and biased models. The appropriate method depends on the nature of the missingness.

- Decision Workflow:

The diagram below outlines a systematic protocol for handling missing data in experimental datasets.

Figure: Missing Data Handling Protocol

FAQ 4: Our RAG (Retrieval-Augmented Generation) system for scientific literature is producing poor or hallucinated answers. What data quality issues should we investigate?

Answer: The performance of RAG systems is highly sensitive to the quality of the underlying data and embeddings.

- Primary Data Quality Culprits:

- Embedding Quality: If the vector embeddings fail to capture the semantic meaning of your source documents, the retriever will fetch irrelevant context [8]. Monitor for issues like empty vector arrays, wrong dimensionality, or corrupted values.

- Chunking Strategy: Inconsistent or poor document segmentation can break the logical flow of information, providing the language model with fragmented context [8].

- Outdated or Unclean Source Data: The system's knowledge corpus must be regularly updated and cleansed of irrelevant or duplicate documents to ensure the information is current and non-redundant [3].
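
The embedding checks mentioned above (empty vectors, wrong dimensionality, corrupted values) can be automated with a small audit function. The sketch below uses NumPy only; the function name `audit_embeddings` and the zero-norm check are illustrative assumptions, not part of any particular vector database API.

```python
import numpy as np

def audit_embeddings(embeddings, expected_dim):
    """Return indices of embeddings that fail basic integrity checks."""
    issues = {"empty": [], "wrong_dim": [], "non_finite": [], "zero_norm": []}
    for i, vec in enumerate(embeddings):
        arr = np.asarray(vec, dtype=float)
        if arr.size == 0:
            issues["empty"].append(i)          # nothing was stored
        elif arr.shape != (expected_dim,):
            issues["wrong_dim"].append(i)      # produced by a different model?
        elif not np.all(np.isfinite(arr)):
            issues["non_finite"].append(i)     # NaN or inf values
        elif np.linalg.norm(arr) == 0.0:
            issues["zero_norm"].append(i)      # all-zero vector (assumed suspicious)
    return issues

# Hypothetical batch of 4-dimensional embeddings with one defect of each kind.
sample = [
    [0.1, 0.2, 0.3, 0.4],
    [],
    [0.5, 0.1],
    [0.3, float("nan"), 0.2, 0.1],
    [0.0, 0.0, 0.0, 0.0],
]
report = audit_embeddings(sample, expected_dim=4)
```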

Experimental Protocols for Data Quality Assurance

Protocol: Data-Centric vs. Model-Centric Performance Comparison

This experiment, inspired by research published in Scientific Reports, demonstrates the impact of a data-centric approach [2].

- Objective: To compare the performance gain from improving data quality versus improving model architecture/hyperparameters.

- Materials:

- Dataset: CIFAR-10 or a similar benchmark dataset.

- Base Model: ResNet-18.

- Tools: Python, PyTorch/TensorFlow, scikit-learn, cleanlab for confident learning.

- Methodology:

- Model-Centric Approach: Perform extensive hyperparameter tuning (learning rate, optimizer, batch size) on the original, potentially noisy dataset.

- Data-Centric Approach:

- Deduplication: Use multi-stage hashing (Perceptual Hash for images, CityHash for speed) to identify and remove duplicate instances [2].

- Noisy Label Correction: Apply confident learning to detect and correct mislabeled images [2].

- Data Augmentation: Apply domain-specific augmentation techniques (e.g., rotation, scaling, color jitter for images).

- Train the same base ResNet-18 model on this cleaned and improved dataset.

- Evaluation: Compare the final accuracy of the two approaches on a held-out, high-quality test set. The study in Scientific Reports found the data-centric approach consistently outperformed the model-centric approach by a relative margin of at least 3% [2].
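
As a rough illustration of the deduplication step in this protocol, the sketch below removes byte-identical records by hashing. SHA-256 from the Python standard library is used here as a stand-in for the fast exact-hash stage; it is an assumption of this example, and the perceptual-hash stage for near-duplicate images is not shown.

```python
import hashlib

def deduplicate(records):
    """Keep the first occurrence of each byte-identical record."""
    seen = set()
    unique = []
    for record in records:
        digest = hashlib.sha256(record).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(record)
    return unique

# Hypothetical raw image payloads; the third duplicates the first.
images = [b"\x00\x01\x02", b"\x10\x11\x12", b"\x00\x01\x02"]
unique_images = deduplicate(images)
```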

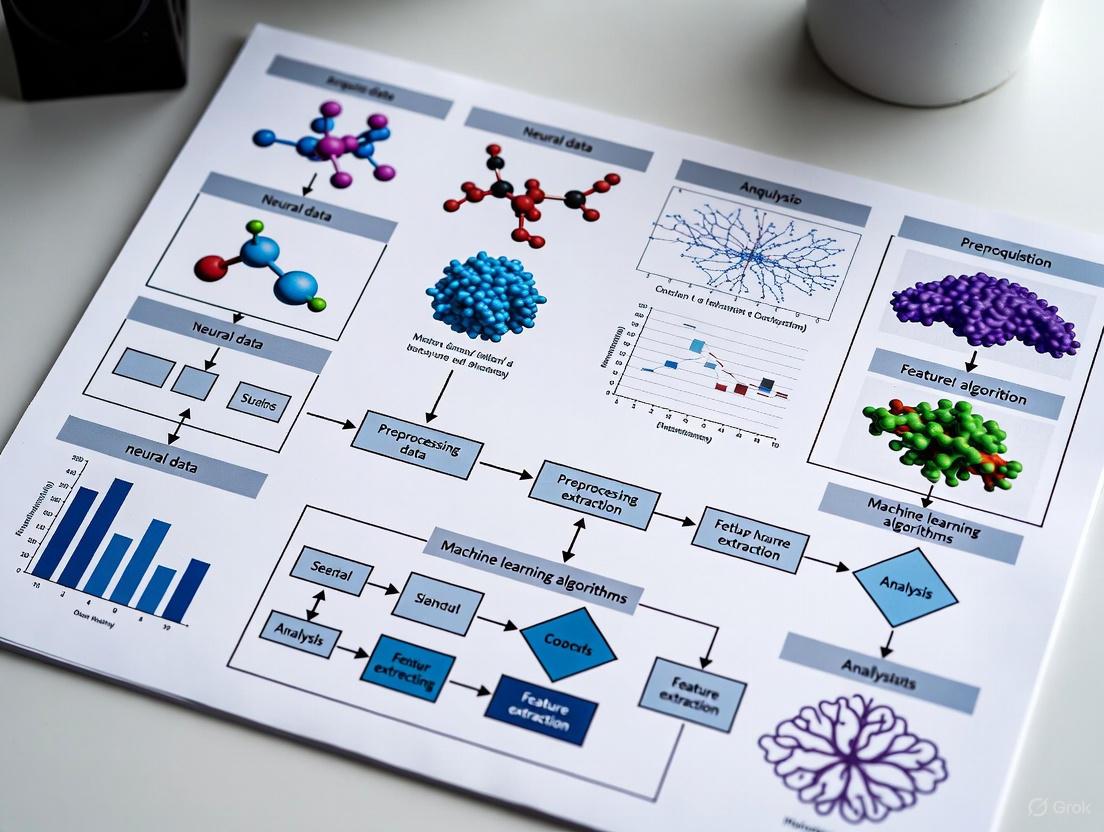

The following workflow visualizes the key stages of this experimental protocol:

Figure: Data-Centric vs. Model-Centric Experiment Workflow

Protocol: Implementing a Data Preprocessing Pipeline

A standardized preprocessing pipeline is essential for reproducibility.

- Objective: To create a robust, reproducible pipeline for preparing raw data for neural network ingestion.

- Steps:

- Data Assessment & Profiling: Generate summary statistics (mean, median, null counts) and visualize distributions to understand data structure and identify obvious issues [9] [7].

- Data Cleaning:

- Handle Missing Values: Based on the protocol in FAQ 3, choose an appropriate method (deletion, imputation) [7].

- Remove Duplicates: Use hashing or rule-based matching to identify and remove duplicate entries [3].

- Treat Outliers: Use statistical methods (IQR, Z-score) or visualization (box plots) to detect outliers. Decide whether to cap, remove, or keep them based on domain knowledge [7].

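- Data Transformation: Encode categorical variables and scale numeric features.

- Data Splitting: Split the data into training, validation, and test sets (e.g., 70/15/15) before fitting any data-dependent step such as imputation or scaling. Fit those steps on the training set only and apply the fitted transformations to the validation and test sets to avoid data leakage [6].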
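
The IQR rule from the outlier step above can be sketched in a few lines of NumPy. The amplitude values are made up for illustration, and the multiplier `k=1.5` is the conventional default rather than a recommendation from the cited sources.

```python
import numpy as np

def iqr_bounds(values, k=1.5):
    """Lower and upper fences: Q1 - k*IQR and Q3 + k*IQR."""
    q1, q3 = np.percentile(values, [25, 75])
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

# Hypothetical measurements with one extreme value.
amplitudes = np.array([10.0, 12.0, 11.0, 13.0, 12.0, 11.0, 95.0, 10.0])

low, high = iqr_bounds(amplitudes)
is_outlier = (amplitudes < low) | (amplitudes > high)

# Capping (winsorizing) is one option; removing or keeping the point
# are the others, and the choice depends on domain knowledge.
capped = np.clip(amplitudes, low, high)
```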

The Scientist's Toolkit: Essential Research Reagents & Solutions

This table details key tools and "reagents" for conducting data quality experiments and building robust preprocessing pipelines.

Table: Essential Tools for Neural Network Data Quality Management

| Tool / Category | Function / Purpose | Example Libraries/Platforms |
|---|---|---|
| Data Profiling & Validation | Automates initial data assessment to identify missing values, inconsistencies, and schema violations. | DQLabs [5], Great Expectations, Pandas Profiling |
| Data Cleaning & Imputation | Provides algorithms for handling missing data, outliers, and duplicates. | scikit-learn SimpleImputer, KNNImputer [7] |
| Noisy Label Detection | Implements confident learning and other algorithms to find and correct mislabeled data. | cleanlab [2] |
| Feature Scaling | Standardizes and normalizes features to ensure stable and efficient neural network training. | scikit-learn StandardScaler, MinMaxScaler, RobustScaler [6] [7] |
| Data Version Control | Tracks changes to datasets and models, ensuring full reproducibility of experiments. | DVC (Data Version Control), lakeFS [6] |
| Workflow Orchestration | Manages and automates complex data preprocessing and training pipelines. | Apache Airflow, Prefect |
| Vector Database Monitoring | Tracks the quality and performance of embeddings in RAG systems to prevent silent degradation. | Custom monitoring for dimensionality, consistency, completeness [8] |

Frequently Asked Questions (FAQs)

Q1: A significant portion (40-70%) of our simultaneous EEG-fMRI studies in focal epilepsy patients are inconclusive, primarily due to the absence of interictal epileptiform discharges during scanning or a lack of significant correlated haemodynamic changes. What advanced methods can we use to localize the epileptic focus in such cases?

A1: You can employ an epilepsy-specific EEG voltage map correlation technique. This method does not require visually identifiable spikes during the fMRI acquisition to reveal relevant BOLD changes [10].

- Methodology: Build patient-specific EEG voltage maps using averaged interictal epileptiform discharges recorded during long-term video-EEG monitoring outside the scanner. Then, compute the correlation of this map with the EEG recorded inside the scanner for each time frame. The resulting correlation coefficient time course is used as a regressor in the fMRI analysis to map haemodynamic changes related to these epilepsy-specific maps (topography-related BOLD changes) [10].

- Efficacy: This approach has been successfully applied in patients with previously inconclusive studies, showing haemodynamic correlates spatially concordant with intracranial EEG or the resection area, particularly in lateral temporal and extratemporal neocortical epilepsy [10].
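
The core computation of the voltage map method, correlating a template map with every EEG time frame, can be sketched as follows. This is an illustrative NumPy version under simplifying assumptions: the arrays are random placeholders, the signed Pearson correlation across channels is used, and later steps such as resampling to the fMRI repetition time are not shown.

```python
import numpy as np

def map_correlation_timecourse(eeg, template):
    """Pearson correlation across channels between a template and each frame.

    eeg: array of shape (n_channels, n_times)
    template: array of shape (n_channels,)
    """
    eeg_c = eeg - eeg.mean(axis=0, keepdims=True)  # center each frame across channels
    tmpl_c = template - template.mean()
    numerator = tmpl_c @ eeg_c
    denominator = np.linalg.norm(tmpl_c) * np.linalg.norm(eeg_c, axis=0)
    return numerator / denominator

rng = np.random.default_rng(0)
n_channels, n_times = 32, 500
template = rng.normal(size=n_channels)        # placeholder for the patient-specific map
eeg = rng.normal(size=(n_channels, n_times))  # placeholder for in-scanner EEG

# Plant the template at two frames, once with inverted polarity.
eeg[:, 100] = 3.0 * template
eeg[:, 300] = -2.0 * template

corr = map_correlation_timecourse(eeg, template)
```

Because the correlation is scale-invariant, the planted frames score close to +1 and -1 regardless of their amplitude.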

Q2: Our lab is new to combined EEG-fMRI. What are the primary sources of the ballistocardiogram (BCG) artifact and what are the established methods for correcting it?

A2: The BCG artifact is a major challenge in simultaneous EEG-fMRI, caused by the electromotive force generated on EEG electrodes due to small head movements inside the scanner's magnetic field [11]. The main sources are:

- Movement of electrodes and scalp due to cardiac pulsation.

- Fluctuation of the Hall voltage from blood flow in the magnetic field.

- Induction from movement of electrode leads in the static magnetic field [11].

- Correction Methods: Common approaches include average artifact subtraction (AAS) methods, where an average BCG artifact template is created and subtracted from the EEG signal. Other advanced methods involve using orthogonal derivatives of the EEG for template estimation or leveraging reference layers in specialized EEG caps to model and remove the artifact.
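
A minimal sketch of average artifact subtraction on a single channel is shown below. It is deliberately simplified: one global template is averaged over all heartbeats (practical implementations typically use a sliding window of neighboring beats), the beat times are assumed known, and the signal is synthetic.

```python
import numpy as np

def average_artifact_subtraction(signal, event_samples, pre, post):
    """Subtract the mean artifact epoch around each cardiac event."""
    valid = [s for s in event_samples if s - pre >= 0 and s + post <= len(signal)]
    epochs = np.stack([signal[s - pre:s + post] for s in valid])
    template = epochs.mean(axis=0)  # averaging cancels activity not locked to the beat
    cleaned = signal.astype(float).copy()
    for s in valid:
        cleaned[s - pre:s + post] -= template
    return cleaned, template

fs = 250                                    # sampling rate in Hz (assumed)
rng = np.random.default_rng(0)
n = 10 * fs
brain = 0.5 * rng.normal(size=n)            # stand-in for neural activity
beats = np.arange(fs, n - fs, fs)           # one beat per second (assumed)
artifact = 20.0 * np.hanning(100)           # stereotyped artifact, 100 samples long

contaminated = brain.copy()
for b in beats:
    contaminated[b:b + 100] += artifact

cleaned, template = average_artifact_subtraction(contaminated, beats, pre=0, post=100)
```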

Q3: We are encountering severe baseline drifts and environmental electromagnetic interference in our cabled EEG systems inside the MRI scanner, complicating EEG signal retrieval. Are there emerging technologies that address these hardware limitations?

A3: Yes, recent research demonstrates a wireless integrated sensing detector for simultaneous EEG and MRI (WISDEM) to overcome these exact issues [12].

- Technology: This device is a wirelessly powered oscillator that encodes fMRI and EEG signals on distinct sidebands of its oscillation wave, which is then detected by a standard MRI coil. This design eliminates the cable connections that are susceptible to environmental fluctuations and electromagnetic interference [12].

- Advantages: The system avoids the need for high-gain preamplifiers and high-speed analog-digital converters with large dynamic ranges, reducing system complexity and safety concerns related to heating. It also acquires signals continuously throughout the MR sequence without requiring synchronization hardware [12].

Troubleshooting Guides

Issue 1: Excessive Noise and Artifacts in Raw Neural Data

Problem: Acquired data (e.g., EEG, fMRI) is contaminated with noise, artifacts, and missing values, making it unsuitable for analysis.

Solution: Implement a robust data preprocessing pipeline.

Step 1: Handle Missing Values

- Remove samples/features: If the number of samples is large and the count of missing values in a row is high, consider removal.

- Impute values: Replace missing values using statistical measures (mean, median, mode) or more sophisticated model-based prediction [7] [6].

- Interpolate/Extrapolate: Generate values inside or beyond a known range based on existing data points [7].
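
For short gaps in a sampled signal, the interpolation option above can be as simple as the following NumPy sketch. The trace values are hypothetical, and linear interpolation is only one of several possible choices.

```python
import numpy as np

def interpolate_gaps(samples):
    """Linearly interpolate NaN gaps in a 1-D signal."""
    samples = np.asarray(samples, dtype=float)
    idx = np.arange(samples.size)
    known = ~np.isnan(samples)
    # np.interp holds the nearest known value at the edges (no extrapolation).
    return np.interp(idx, idx[known], samples[known])

trace = np.array([1.0, np.nan, 3.0, np.nan, np.nan, 6.0])
filled = interpolate_gaps(trace)
```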

Step 2: Treat Outliers

- Use box-plots to detect data points that fall outside the predominant pattern.

- Filter variables based on the observed maximum and minimum ranges to remove disruptive outliers [7].

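Step 3: Encode Categorical Data

- Convert categorical variables to numbers, using ordinal encoding for ordered categories and one-hot encoding for nominal ones [7].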

Step 4: Scale Features (Critical for distance-based models)

The table below compares common scaling techniques [7] [6]:

| Scaling Technique | Description | Best Use Case |
|---|---|---|
| Min-Max Scaler | Shrinks feature values to a specified range (e.g., 0 to 1). | When the data does not follow a Gaussian distribution. |
| Standard Scaler | Centers data around mean 0 with standard deviation 1. | When data is approximately normally distributed. |
| Robust Scaler | Scales based on interquartile range after removing the median. | When the dataset contains significant outliers. |
| Max-Abs Scaler | Scales each feature by its maximum absolute value. | When preserving data sparsity (zero entries) is important. |
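
To make the comparison concrete, the sketch below applies the four scikit-learn scalers to one small feature that contains a single extreme value. The numbers are made up for illustration.

```python
import numpy as np
from sklearn.preprocessing import (
    MaxAbsScaler,
    MinMaxScaler,
    RobustScaler,
    StandardScaler,
)

# One feature with a single extreme value (hypothetical amplitudes).
x = np.array([[1.0], [2.0], [3.0], [4.0], [100.0]])

scaled = {
    "minmax": MinMaxScaler().fit_transform(x).ravel(),
    "standard": StandardScaler().fit_transform(x).ravel(),
    "robust": RobustScaler().fit_transform(x).ravel(),
    "maxabs": MaxAbsScaler().fit_transform(x).ravel(),
}
```

With these inputs the robust scaler keeps the four typical values spread between -1 and 0.5, whereas min-max scaling squeezes them into roughly the bottom 3% of the range because the extreme value sets the maximum.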

Issue 2: Poor Spatial Localization from EEG Data

Problem: EEG has excellent temporal resolution but poor spatial resolution, hindering the identification of precise neural sources.

Solution: Integrate EEG data with high-resolution fMRI.

Step 1: Understand the Complementary Relationship

- fMRI: Provides highly localized measures of brain activation (good spatial resolution ~2-3 mm) but poor temporal resolution [11].

- EEG: Provides millisecond-scale temporal resolution but poor spatial resolution due to the inverse problem [11].

- The BOLD fMRI signal has been shown to correlate better with local field potentials (LFP) than with single-neuron activity, providing a physiological link for integration [11].

Step 2: Choose an Integration Method

- fMRI-informed Source Reconstruction: Use fMRI activation maps as spatial priors to constrain the source localization of EEG or ERP signals, improving the accuracy of the estimated neural generators [11].

- EEG-informed fMRI Analysis: Use specific EEG features (e.g., spike times, power in a frequency band, ERP components) as regressors in the fMRI analysis to identify brain regions whose BOLD signal fluctuations correlate with the electrophysiological events [10] [11].

Issue 3: Isolating Specific Cognitive or Epileptic Events

Problem: How to design an experiment to reliably capture and analyze transient neural events, such as epileptic spikes or cognitive ERP components.

Solution: Employ optimized task designs and analysis strategies.

Step 1: Select the Appropriate Task Design

- Blocked Design: Present alternating task conditions lasting 15-30 seconds each. Provides a better signal-to-noise ratio (SNR) for estimating generalized task-related responses [11].

- Event-Related Design: Present short, discrete trials in a randomized order. Optimal for parsing specific component processes and is compatible with ERP analysis [11].

Step 2: For Epilepsy Studies with Few Spikes

- As detailed in answer A1 of the FAQs above, move beyond spike-triggered analysis. Use patient-specific voltage maps derived from long-term monitoring to detect sub-threshold epileptic activity during fMRI sessions, even in the absence of visible spikes [10].

Experimental Protocols & Methodologies

Protocol 1: EEG-fMRI for Localizing Focal Epileptic Activity Using Voltage Maps

This protocol is adapted from studies involving patients with medically refractory focal epilepsy [10].

1. Patient Preparation & Data Acquisition:

- Long-term EEG: Prior to the fMRI scan, conduct long-term clinical video-EEG monitoring to detect and record interictal epileptiform discharges (spikes). From these, build a patient-specific, averaged EEG voltage map that is characteristic of the individual's epileptic activity [10].

- Simultaneous EEG-fMRI: Acquire EEG data continuously inside the MRI scanner while acquiring whole-brain BOLD fMRI volumes (e.g., using EPI sequences). Ensure MR-compatible EEG systems with artifact correction are used [10] [11].

2. Data Preprocessing:

- fMRI Preprocessing: Perform standard steps including slice-timing correction, realignment, co-registration to a structural image, normalization to standard stereotactic space, and spatial smoothing [10].

- EEG Preprocessing: Process the simultaneous EEG data to remove MRI-related artifacts (gradient switching and ballistocardiogram artifacts) [11].

3. Data Integration & Statistical Analysis:

- Time-Course Extraction: For each time frame of the artifact-corrected EEG recorded inside the scanner, compute the correlation coefficient between the instantaneous scalp voltage map and the patient-specific epileptic voltage map obtained from long-term monitoring [10].

- fMRI General Linear Model (GLM): Use the time course of the correlation coefficient from the previous step as a regressor of interest in the GLM. This identifies brain regions where the BOLD signal fluctuates in sync with the presence of the epilepsy-specific EEG topography [10].

- Statistical Thresholding: Results are typically assessed at a significance level (e.g., P < 0.05) corrected for multiple comparisons (e.g., Family-Wise Error) [10].

4. Validation:

- Compare the location of significant topography-related BOLD clusters with the results from subsequent intracranial EEG (icEEG) recordings and/or the surgical resection area in patients who become seizure-free post-surgery [10].

Diagram 1: Workflow for EEG-fMRI voltage map analysis.

The Scientist's Toolkit: Research Reagent Solutions

The following table lists key hardware, software, and methodological "reagents" essential for experiments involving the discussed neural data types [10] [11] [7].

| Item Name | Type | Function / Application |
|---|---|---|
| MR-Compatible EEG System | Hardware | Enables the safe and simultaneous acquisition of EEG data inside the high-field MRI environment, typically with specialized amplifiers and artifact-resistant electrodes/cables. |
| WISDEM | Hardware | A wireless integrated sensing detector that encodes EEG and fMRI signals on a single carrier wave, eliminating cable-related artifacts and simplifying setup [12]. |
| Voltage Map Correlation | Method | A software/methodological solution for localizing epileptic foci in EEG-fMRI when visible spikes are absent during scanning [10]. |
| fMRI-Informed Source Reconstruction | Software/Method | A computational technique that uses high-resolution fMRI activation maps as spatial constraints to improve the accuracy of source localization from EEG signals [11]. |
| Scikit-learn Preprocessing | Software Library | A Python library providing one-liner functions for essential data preprocessing steps like imputation (SimpleImputer) and scaling (StandardScaler, MinMaxScaler) [7]. |
| Ballistocardiogram (BCG) Correction Algorithm | Software/Algorithm | A critical software tool (e.g., AAS, ICA-based) for removing the cardiac-induced artifact from EEG data recorded inside the MRI scanner [11]. |

Frequently Asked Questions

1. What are the most common data quality issues in neural data, and why do they matter? The most prevalent issues are noise, artifacts, and missing values. In neural data like EEG, these problems can significantly alter research results, making their correction a critical preprocessing step [13]. High-quality training data is fundamental for reliable models, as incomplete or noisy data leads to unreliable models and poor decisions [4].

2. How can I identify and handle missing values in my dataset? You can first assess your data to understand the proportion and pattern of missingness. Common handling methods include [7] [14]:

- Removal: Discarding samples or entire variables with missing values. This is only suitable when the missing data is insignificant.

- Imputation: Replacing missing points with estimated values. Simple methods include using the mean, median, mode, or a moving average (for time-series data). More sophisticated multivariate methods use k-nearest neighbors (KNN) or regression models to infer missing values based on other features.
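
The difference between a univariate and a multivariate imputer can be seen on a toy table. The sketch below assumes scikit-learn and uses invented values; the second column is roughly ten times the first, with one large sample that skews the column mean.

```python
import numpy as np
from sklearn.impute import KNNImputer, SimpleImputer

# Toy data: row 2 is missing its second feature.
X = np.array([
    [1.0, 10.0],
    [2.0, 20.0],
    [3.0, np.nan],
    [4.0, 40.0],
    [50.0, 500.0],
])

# Univariate: fill with the column mean, ignoring the other feature.
mean_filled = SimpleImputer(strategy="mean").fit_transform(X)

# Multivariate: average the two rows most similar on the observed feature.
knn_filled = KNNImputer(n_neighbors=2).fit_transform(X)
```

Here the mean imputer fills the gap with 142.5, pulled up by the large sample, while the KNN imputer uses the two nearest rows (first-feature values 2 and 4) and fills in 30.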

3. What is the best way to remove large-amplitude artifacts from EEG data? A robust protocol involves a multi-step correction process [13]:

- Basic Filtering & Bad Channel Interpolation: Apply a bandpass filter and interpolate malfunctioning channels.

- Ocular Artifact Removal: Use Independent Component Analysis (ICA) to isolate and remove eye blinks and movements.

- Transient Artifact Removal: Apply Principal Component Analysis (PCA) to correct for large-amplitude, non-specific artifacts like muscle noise.

This semi-automatic protocol includes step-by-step quality checking to ensure major artifacts are effectively removed.

4. My dataset is imbalanced. Which preprocessing technique should I use? For imbalanced data, solutions can be implemented at the data level [15]:

- Oversampling: Increasing the number of instances in the minority class.

- Undersampling: Reducing the number of instances in the majority class.

- Hybrid Sampling: A combination of both oversampling and undersampling.

Studies indicate that oversampling and classical machine learning are common approaches, but solutions with neural networks and ensemble models often deliver the best performance [15].

5. Why is feature scaling necessary, and which method should I choose? Different features often exist on vastly different scales (e.g., salary vs. age), which can cause problems for distance-based machine learning algorithms. Scaling ensures all features contribute equally and helps optimization algorithms like gradient descent converge faster [6] [7]. The choice of scaler depends on your data:

| Scaling Method | Best Use Case | Key Characteristic |
|---|---|---|
| Standard Scaler | Data assumed to be normally distributed. | Centers data to mean=0 and scales to standard deviation=1 [6] [7]. |
| Min-Max Scaler | When data boundaries are known. | Shrinks data to a specific range (e.g., 0 to 1) [6] [7]. |
| Robust Scaler | Data with outliers. | Uses the interquartile range, making it robust to outliers [6] [7]. |
| Max-Abs Scaler | Scaling to maximum absolute value. | Scales each feature by its maximum absolute value [6]. |

6. How do I convert categorical data (like subject group) into numbers? This process is called feature encoding. The right technique depends on whether the categories have a natural order [7]:

- Label/Ordinal Encoding: Assigns an integer (1, 2, 3,...) to each category. Use only for ordinal data (e.g., "low," "medium," "high").

- One-Hot Encoding: Creates a new binary column for each category. Suitable for nominal data (e.g., "control," "test") but can make data bulky if there are many categories.

- Binary Encoding: Converts categories into binary code, which creates fewer columns than one-hot encoding and is more efficient [7].
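
The first two encodings above can be sketched with scikit-learn as follows. The column names and category values are hypothetical. Note that `OrdinalEncoder` numbers categories from 0 rather than 1.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder, OrdinalEncoder

# Hypothetical columns: an ordered dose level and an unordered subject group.
dose = np.array([["low"], ["high"], ["medium"], ["low"]])
group = np.array([["control"], ["test"], ["test"], ["control"]])

# Ordinal encoding with an explicit order: low < medium < high.
ordinal = OrdinalEncoder(categories=[["low", "medium", "high"]])
dose_encoded = ordinal.fit_transform(dose).ravel()

# One-hot encoding: one binary column per category, in sorted order.
one_hot = OneHotEncoder()
group_encoded = one_hot.fit_transform(group).toarray()
```

Passing the category order explicitly matters: left to its default, the encoder sorts labels alphabetically, which would place "high" before "low".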

The Scientist's Toolkit: Research Reagent Solutions

| Item Name | Type | Function |
|---|---|---|
| EEGLAB | Software Toolbox | A primary tool for processing EEG data, providing functions for filtering, ICA, and other preprocessing steps [13]. |
| Independent Component Analysis (ICA) | Algorithm | A blind source separation technique critical for isolating and removing ocular artifacts from EEG signals without EOG channels [13]. |
| Principal Component Analysis (PCA) | Algorithm | Used for dimensionality reduction and for removing large-amplitude, transient artifacts that lack consistent statistical properties [13]. |
| k-Nearest Neighbors (KNN) Imputation | Algorithm | A multivariate method for handling missing values by imputing them based on the average of the k most similar data samples [14]. |
| Scikit-learn | Software Library | A Python library offering a unified interface for various preprocessing tasks, including scaling, encoding, and imputation [7] [16]. |
| Synthetic Minority Oversampling Technique (SMOTE) | Algorithm | An advanced oversampling technique that generates synthetic samples for the minority class to address data imbalance [15]. |

Experimental Protocols & Methodologies

Protocol 1: Semi-Automatic EEG Preprocessing for Artifact Removal

This protocol is designed to remove large-amplitude artifacts while preserving neural signals, ensuring consistent results across users with varying experience levels [13].

Workflow Overview:

Detailed Steps:

- Bandpass Filtering and Bad Channel Interpolation

- Purpose: Prepare the data for optimal ICA decomposition and remove high-frequency noise and slow drifts.

- Method: Apply a bandpass filter (e.g., 1-40 Hz). A high-pass cutoff of 1-2 Hz is often critical for good ICA results. Identify and interpolate bad channels (e.g., those with unusually high or low amplitude) [13].

- ICA-based Ocular Artifact Removal

- Purpose: Isolate and remove artifacts from eye blinks and movements.

- Method:

- Ensure Stationarity: ICA requires stationary data. Select a segment of data that is stationary but contains ocular artifacts for decomposition.

- Run ICA: Perform ICA decomposition on this segment to obtain component weights.

- Identify and Remove Components: Visually inspect the components to identify those representing ocular artifacts. Remove these components from the full dataset [13].

- PCA-based Large-Amplitude Transient Artifact Correction

- Purpose: Remove large, non-specific transient artifacts (e.g., from muscle noise) that may not be fully captured by ICA.

- Method: Apply PCA to the data after ICA correction. Identify and remove principal components that represent large-amplitude, idiosyncratic artifacts. This step helps clean the data of major distortions that could impact final analysis [13].
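
The band-pass filtering step at the start of this protocol can be sketched with SciPy. The protocol itself is described in terms of EEGLAB; the version below is an illustrative Python equivalent with assumed settings (4th-order Butterworth, zero-phase, 250 Hz sampling) applied to a synthetic signal.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def bandpass(data, fs, low=1.0, high=40.0, order=4):
    """Zero-phase Butterworth band-pass applied along the last axis."""
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, data, axis=-1)

fs = 250.0                                   # sampling rate in Hz (assumed)
t = np.arange(0, 10, 1 / fs)
alpha = np.sin(2 * np.pi * 10 * t)           # 10 Hz activity to keep
drift = 5.0 * np.sin(2 * np.pi * 0.1 * t)    # slow drift to remove
line = 2.0 * np.sin(2 * np.pi * 60 * t)      # 60 Hz line noise to remove

filtered = bandpass(alpha + drift + line, fs)
```

Second-order sections and forward-backward filtering are used here for numerical stability and to avoid phase shifts; the ICA and PCA stages would operate on the filtered output.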

Protocol 2: Handling Missing Values in Building Operational Data (Analogous to Neural Time-Series)

This protocol from time-series analysis in a related field provides a structured approach to deciding how to handle missing data [14].

Decision Workflow:

Quantitative Comparison of Missing Value Imputation Methods [14]:

| Method Category | Specific Technique | Typical Application Range | Key Advantage | Key Limitation |
|---|---|---|---|---|
| Univariate | Mean/Median Imputation | Low missing ratio (1-5%) | Simple and fast | Does not consider correlations with other variables. |
| Univariate | Moving Average | Low missing ratio (1-5%) | Effective for capturing temporal fluctuations in time-series data. | Less effective for high missing data ratios. |
| Multivariate | k-Nearest Neighbors (KNN) | Medium missing ratio (5-15%) | Can achieve satisfactory performance by using similar samples. | Computational cost increases with data size. |
| Multivariate | Regression-Based (e.g., MLR, SVM, ANN) | Medium to High missing ratio | Can capture cross-sectional or temporal data dependencies for more accurate imputation. | Computationally intensive; requires sufficient data for model training. |

Protocol 3: Addressing Class Imbalance in Experimental Data

This methodology is based on a systematic review of preprocessing techniques for machine learning with imbalanced data [15].

Workflow Overview:

- Diagnose Imbalance: Calculate the Imbalance Ratio (IR), which is the ratio of the majority class to the minority class.

- Select Sampling Technique: Choose a data-level solution to rebalance the class distribution before training a model.

- Train Model: Use the resampled dataset to train a standard or ensemble machine learning model.
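
The first two steps of this workflow can be sketched in plain NumPy. Random oversampling is used as the simplest data-level technique; the function names are illustrative, and the tiny dataset is invented.

```python
import numpy as np

def imbalance_ratio(labels):
    """Ratio of the largest class count to the smallest class count."""
    counts = np.bincount(labels)
    counts = counts[counts > 0]
    return counts.max() / counts.min()

def random_oversample(X, y, seed=0):
    """Duplicate minority-class rows at random until classes are balanced."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    keep = [np.arange(len(y))]  # start from every original row
    for cls, count in zip(classes, counts):
        if count < target:
            members = np.flatnonzero(y == cls)
            keep.append(rng.choice(members, size=target - count, replace=True))
    idx = np.concatenate(keep)
    return X[idx], y[idx]

# Hypothetical training split: 8 majority samples, 2 minority samples.
X = np.arange(20, dtype=float).reshape(10, 2)
y = np.array([0] * 8 + [1] * 2)

ir_before = imbalance_ratio(y)
X_bal, y_bal = random_oversample(X, y)
ir_after = imbalance_ratio(y_bal)
```

Resampling should be applied to the training split only, so that validation and test sets keep the real class distribution.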

Quantitative Comparison of Sampling Techniques [15]:

| Sampling Technique | Description | Common ML Models Used With | Reported Performance |
|---|---|---|---|
| Oversampling | Increasing the number of instances in the minority class. | Classical ML Models | Most common preprocessing technique. |
| Undersampling | Reducing the number of instances in the majority class. | Classical ML Models | Common preprocessing technique. |
| Hybrid Sampling | Combining both oversampling and undersampling. | Ensemble ML Models, Neural Networks | Potentially better results; often used with high-performing models. |

The systematic review notes that while oversampling and classical ML are the most common approaches, solutions that use neural networks and ensemble ML models generally show the best performance [15].

Data Acquisition Considerations for Different Neural Modalities

Frequently Asked Questions (FAQs)

What is data acquisition in the context of neural data? Data acquisition is the process of measuring electrical signals from the nervous system that represent real-world physical conditions, converting these signals from analog to digital values using an analog-to-digital converter (ADC), and saving the digitized data to a computer or onboard memory for analysis [17]. In neuroscience, this involves capturing signals from various neural recording modalities.

What are the key components of a neural data acquisition system? A complete system typically includes:

- Sensors/Electrodes: To capture the neural signals (e.g., EEG electrodes, fMRI coils).

- Data Acquisition Device: Hardware to receive, condition, and record the sensor signals.

- Software: To configure the acquisition parameters, visualize the data in real-time, and save it for analysis [17].

Why is the ADC's measurement resolution critical? The ADC's bit resolution determines the smallest change in the input neural signal that the system can detect. A higher bit count allows the system to resolve finer details. For example, a 12-bit ADC with a ±10V range can detect voltage changes as small as about 4.9mV, which is essential for capturing the often subtle fluctuations in neural activity [17].
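
The resolution figure above follows from dividing the input range by the number of quantization levels. A short sketch of that arithmetic, assuming an ideal ADC (the function name is illustrative):

```python
def adc_lsb_volts(n_bits, v_min, v_max):
    """Smallest voltage step an ideal ADC can resolve over [v_min, v_max]."""
    return (v_max - v_min) / (2 ** n_bits)

lsb_12 = adc_lsb_volts(12, -10.0, 10.0)  # 20 V / 4096  = 0.0048828125 V (about 4.9 mV)
lsb_16 = adc_lsb_volts(16, -10.0, 10.0)  # 20 V / 65536 = 0.00030517578125 V (about 0.31 mV)
```

Each additional bit halves the step size, so moving from 12 to 16 bits over the same range makes the smallest detectable change 16 times finer.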

How do I choose the correct sample rate? The sample rate must be high enough to accurately capture the frequency content of the neural signal of interest. Sample rates can range from one sample per hour for very slow processes to 160,000 samples per second or more for high-frequency neural activity. Setting the correct rate is a complex but vital decision, as an insufficient rate will result in a loss of signal information [17].

What does it mean for a data acquisition system to be isolated, and do I need one? Isolated data acquisition systems are designed with protective circuitry to electrically separate measurement channels from each other and from the computer ground. This is crucial in neural experiments to:

- Eliminate dangerous ground loops.

- Protect research subjects and equipment from potential faults.

- Minimize noise in the recorded signals, ensuring data integrity [17].

Troubleshooting Common Data Acquisition Issues

Issue: Acquired neural data appears excessively noisy.

- Potential Cause & Solution: Check for electrical interference and ground loops. Use isolated data acquisition units to minimize noise and prevent signal interactions between channels [17].

- Methodology: Run a baseline test with the sensors connected but no active stimulus presented. Inspect the power spectrum of the recorded signal for peaks at 50/60 Hz (line frequency) or harmonics, which indicate electrical interference.
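
The baseline check described above can be automated roughly as follows. This is a sketch using SciPy's Welch estimator; the function name, the flank-band definition, and the synthetic test signal are assumptions for illustration, and any cutoff for what counts as "too much" line noise has to be chosen for your own setup.

```python
import numpy as np
from scipy.signal import welch

def line_noise_ratio(signal, fs, line_freq, half_width=1.0):
    """Mean PSD near line_freq divided by mean PSD in the neighbouring flank bands."""
    freqs, psd = welch(signal, fs=fs, nperseg=int(4 * fs))
    distance = np.abs(freqs - line_freq)
    in_band = distance <= half_width
    flank = (distance > half_width) & (distance <= 5 * half_width)
    return psd[in_band].mean() / psd[flank].mean()

# Synthetic baseline recording (hypothetical): white noise with and without 60 Hz pickup.
fs = 1000.0
t = np.arange(0, 20, 1 / fs)
rng = np.random.default_rng(0)
baseline = rng.normal(size=t.size)
contaminated = baseline + 2.0 * np.sin(2 * np.pi * 60.0 * t)

ratio_clean = line_noise_ratio(baseline, fs, 60.0)      # close to 1 for a flat spectrum
ratio_noisy = line_noise_ratio(contaminated, fs, 60.0)  # much larger than 1
```

Running the same function at 50 Hz, or at multiples of the line frequency, covers the other cases mentioned in the methodology.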

Issue: The recorded signal is distorted or does not match expectations.

- Potential Cause & Solution: Incorrect ADC resolution or sample rate. Ensure the ADC has sufficient resolution to capture the dynamic range of your neural signal and that the sample rate is more than twice the highest frequency component present in the signal (the Nyquist criterion) to avoid aliasing [17].

- Methodology: Record a known, calibrated test signal. Verify that the recorded waveform and its frequency components match the input. If not, adjust the acquisition settings accordingly.
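
To see what an insufficient sample rate does to a calibrated test tone, the apparent (aliased) frequency can be computed directly. A minimal sketch, assuming an ideal sampler with no anti-aliasing filter; the function name is illustrative:

```python
def aliased_frequency(f_signal_hz, fs_hz):
    """Apparent frequency of a sinusoid at f_signal_hz after sampling at fs_hz."""
    nearest_multiple = fs_hz * round(f_signal_hz / fs_hz)
    return abs(f_signal_hz - nearest_multiple)

ok = aliased_frequency(30.0, 100.0)       # 30.0: below the 50 Hz Nyquist limit, unchanged
aliased = aliased_frequency(60.0, 100.0)  # 40.0: a 60 Hz tone appears at 40 Hz
```

If the recorded test signal shows a spectral peak at the folded frequency rather than the input frequency, the sample rate is too low for that signal.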

Issue: Data file sizes are impractically large, hindering analysis.

- Potential Cause & Solution: Inefficient data storage format. Saving data in a human-readable text format can cause files to be at least ten times larger than storing in a binary format [17].

- Methodology: Utilize acquisition software that allows you to record data in a compact binary format. Convert only the segments of data you need for analysis into text, preserving storage space and improving read/write speeds.

Issue: Difficulty reproducing findings from a published study.

- Potential Cause & Solution: Poor dataset construction or unknown preprocessing steps. Issues can include an insufficient number of examples, noisy labels, imbalanced classes, or a mismatch between the training and test set distributions [18].

- Methodology: Meticulously document all data acquisition and preprocessing steps. Start by implementing a simple model and a known, standardized dataset to establish a performance baseline before moving to your custom data [18] [19].

Experimental Protocols & Workflows

Protocol: Multimodal Neural Data Acquisition and Fusion

Objective: To acquire neural data recorded in response to multimodal stimuli (e.g., visual, auditory, textual) and integrate it with stimulus features for predicting brain activity or decoding stimuli, as demonstrated in challenges like Algonauts 2025 [20].

Methodology:

- Stimulus Presentation: Present carefully curated, often naturalistic, multimodal stimuli (e.g., movies with audio) to participants while simultaneously recording brain activity via fMRI, EEG, or ECoG [20] [21].

- Feature Extraction: Use pre-trained foundation models (e.g., CNNs for video, audio models like Whisper, LLMs like Llama for text) to convert the stimuli into high-quality feature representations. Do not train new feature extractors from scratch [20].

- Temporal Alignment: Precisely align the extracted stimulus features with the recorded neural signals in the time domain, accounting for any inherent delays like the hemodynamic response in fMRI [22].

- Data Integration and Modeling: Feed the aligned, multimodal features into an encoding model. Top-performing approaches range from simple linear models and convolutions to transformers, which fuse the data to predict brain activity [20].

- Validation: Evaluate the model's performance on a held-out dataset using metrics appropriate to the task, such as the Pearson correlation coefficient between predicted and actual brain activity [20] [22].
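
The alignment, modeling, and validation steps above can be sketched with NumPy alone. This is an illustration under stated assumptions, not the pipeline used in [20]: the stimulus features are random stand-ins for pre-trained model outputs, the delay of 3 samples is a hypothetical fixed lag, and ordinary least squares stands in for the encoding model.

```python
import numpy as np

def delay_features(features, delay_samples):
    """Shift stimulus features later in time by delay_samples, zero-padding the start."""
    delayed = np.zeros_like(features)
    if delay_samples > 0:
        delayed[delay_samples:] = features[:-delay_samples]
    else:
        delayed[:] = features
    return delayed

def columnwise_pearson(pred, actual):
    """Pearson correlation between matching columns of two (time x voxel) arrays."""
    pred_c = pred - pred.mean(axis=0)
    act_c = actual - actual.mean(axis=0)
    num = (pred_c * act_c).sum(axis=0)
    den = np.sqrt((pred_c ** 2).sum(axis=0) * (act_c ** 2).sum(axis=0))
    return num / den

# Synthetic example (hypothetical sizes): 400 time points, 5 features, 3 voxels.
rng = np.random.default_rng(0)
n_time, n_feat, n_vox, delay = 400, 5, 3, 3
stim = rng.normal(size=(n_time, n_feat))
true_w = rng.normal(size=(n_feat, n_vox))
brain = delay_features(stim, delay) @ true_w + 0.5 * rng.normal(size=(n_time, n_vox))

# Align features to the delayed response, fit on the first 300 samples, test on the rest.
X = delay_features(stim, delay)
train, test = slice(0, 300), slice(300, 400)
weights, *_ = np.linalg.lstsq(X[train], brain[train], rcond=None)
scores = columnwise_pearson(X[test] @ weights, brain[test])  # one value per voxel
```

A single fixed lag is a simplification; for fMRI, the hemodynamic response is spread over several seconds, so real pipelines often use several delayed copies of the features or a convolution with a response function.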

Workflow Diagram: Multimodal Neural Data Pipeline

The following diagram illustrates the logical flow of data in a typical multimodal neural data processing experiment.

Technical Specifications for Data Comparison

Table: Key Data Acquisition Parameters for Common Neural Modalities

Table: This table summarizes critical technical parameters to consider when acquiring data from different neural recording modalities.

| Parameter | fMRI | EEG | MEG | ECoG |

|---|---|---|---|---|

| Spatial Resolution | High (mm) | Low (cm) | High (mm) | Very High (mm) |

| Temporal Resolution | Low (1-2s) | High (ms) | High (ms) | Very High (ms) |

| Invasiveness | Non-invasive | Non-invasive | Non-invasive | Invasive |

| Signal Origin | Blood oxygenation | Post-synaptic potentials | Post-synaptic potentials | Cortical surface potentials |

| Key Acq. Consideration | Hemodynamic response delay | Skull conductivity, noise | Magnetic shielding, noise | Surgical implantation |

| Typical Sample Rate | ~0.5 - 2 Hz (TR) | 250 - 2000 Hz | 500 - 5000 Hz | 1000 - 10000 Hz [22] |

Table: Quantitative Metrics for Evaluating Neural Decoding Performance

Table: Different evaluation metrics are used depending on the nature of the neural decoding task.

| Task Paradigm | Example Metric | What It Measures |

|---|---|---|

| Stimuli Recognition | Accuracy | Percentage of correctly identified stimuli from a candidate set [22]. |

| Brain Recording Translation | BLEU, ROUGE, BERTScore | Similarity between decoded text and reference text: n-gram overlap (BLEU, ROUGE) and embedding-based semantic similarity (BERTScore) [22]. |

| Speech Reconstruction | Pearson Correlation (PCC) | Linear relationship between reconstructed and original speech features [22]. |

| Inner Speech Recognition | Word Error Rate (WER) | Proportion of word-level errors (substitutions, deletions, insertions) in the decoded text relative to the reference; lower is better [22]. |

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential Computational Tools for Neural Data Preprocessing

Table: A non-exhaustive list of common tools and techniques used in the preprocessing of acquired neural data.

| Tool / Technique | Primary Function | Relevance to Neural Data |

|---|---|---|

| Pre-trained Feature Extractors (e.g., V-JEPA2, Whisper, Llama) | Convert raw stimuli (video, audio, text) into meaningful feature representations [20]. | Foundational for modern encoding models; eliminates need to train extractors from scratch. |

| Data Preprocessing Libraries (e.g., in Python: scikit-learn, pandas) | Automate data cleaning, normalization, encoding, and scaling [6]. | Crucial for handling missing values, normalizing signals, and preparing data for model ingestion. |

| Version Control for Data (e.g., lakeFS) | Isolate and version data preprocessing pipelines using Git-like branching [6]. | Ensures reproducibility of ML experiments by tracking the exact data snapshot used for training. |

| Oversampling Techniques (e.g., SMOTE) | Balance imbalanced datasets by generating synthetic samples for the minority class [15]. | Addresses class imbalance in tasks like stimulus classification, improving model reliability. |

| Workflow Management Tools (e.g., Apache Airflow) | Orchestrate and automate multi-step data preprocessing and model training pipelines [6]. | Manages complex, sequential workflows from data acquisition to final model evaluation. |

Ethical Considerations and Data Privacy in Clinical Neural Data

Frequently Asked Questions (FAQs)

Regulatory and Compliance FAQs

What makes neural data different from other health data? Neural data is fundamentally different from traditional health data because it can reveal your thoughts, emotions, intentions, and even forecast future behavior or health risks. Unlike medical records that describe your physical condition, neural data can reveal the essence of who you are - serving as a digital "source code" for your identity. This data can include subconscious and involuntary activity, exposing information you may not even consciously recognize yourself [23] [24].

What are the key regulations governing neural data privacy? The regulatory landscape is rapidly evolving across different regions:

Table: Global Neural Data Regulations

| Region | Key Regulations/Developments | Approach |

|---|---|---|

| United States | Colorado & California privacy laws | Explicitly classify neural data as "sensitive personal information" [23] |

| Chile | Constitutional amendment (2021) | First country to constitutionally protect "neurorights" [23] [24] |

| European Union | GDPR provisions | Neural data likely falls under "special categories of data" requiring heightened safeguards [23] |

| Global | UNESCO standards (planned 2025) | Developing global ethics standards for neurotechnology [23] |

How should I handle informed consent for neural data collection? Colorado's law sets a strong precedent requiring researchers to: obtain clear, specific, unambiguous consent; refrain from using "dark patterns" in consent processes; refresh consent every 24 months; and provide mechanisms for users to manage opt-out preferences at any time. Consent should explicitly cover how neural data will be collected, used, stored, and shared with third parties [23].

Technical Implementation FAQs

What technical safeguards should we implement for neural data? Implement multiple layers of protection including: data encryption both at rest and in transit; differential privacy techniques that add statistical noise to protect individual records; and federated learning approaches that allow model training without transferring raw neural data to central servers. These technical safeguards should complement your ethical and legal frameworks [25].
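
As one concrete example of adding statistical noise, the sketch below releases a group mean using the Laplace mechanism, a standard construction in differential privacy. The function name, the value bounds, the privacy budget epsilon, and the synthetic data are all assumptions for illustration; a production system would normally rely on a vetted privacy library rather than hand-written code.

```python
import numpy as np

def private_mean(values, lower, upper, epsilon, rng):
    """Release the mean of bounded values with epsilon-differential privacy."""
    clipped = np.clip(values, lower, upper)
    # Changing one record can move the mean by at most this much.
    sensitivity = (upper - lower) / len(clipped)
    noise = rng.laplace(loc=0.0, scale=sensitivity / epsilon)
    return clipped.mean() + noise

# Hypothetical per-participant summary values (e.g., a band-power measure).
rng = np.random.default_rng(0)
alpha_power = rng.uniform(2.0, 18.0, size=1000)
true_mean = alpha_power.mean()
released = private_mean(alpha_power, lower=0.0, upper=20.0, epsilon=1.0, rng=rng)
```

Smaller values of epsilon give stronger privacy but noisier results, and the noise shrinks as the number of participants grows.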

How can we ensure compliance when preprocessing neural data? Integrate privacy protections directly into your preprocessing pipelines. For neuroimaging data, tools like DeepPrep offer efficient processing while maintaining data integrity. Establish version-controlled preprocessing workflows that allow you to reproduce exact processing steps and maintain audit trails for compliance reporting [26].

What are the emerging technological threats to neural privacy? Major technology companies are converging multiple sensors that can infer mental states through non-neural data pathways. For example: EMG sensors can detect subtle movement intentions, eye-tracking reveals cognitive load and attention, and heart-rate variability indicates stress states. When combined with AI, these technologies create potential backdoors to mental state inference even without direct neural measurements [27].

Troubleshooting Guides

Compliance and Regulatory Challenges

Problem: Uncertainty about regulatory requirements across jurisdictions

Solution: Implement the highest common standard of protection across all your research activities. Since neural data protections are evolving rapidly, build your protocols to exceed current minimum requirements. Classify all brain-derived data as sensitive health data regardless of collection context, and apply medical-grade protections even to consumer-facing applications [24] [25].

Problem: Obtaining meaningful informed consent for complex neural data applications

Solution:

- Develop tiered consent processes that explain specific uses in clear, accessible language

- Provide ongoing mechanisms for participants to withdraw consent or modify permissions

- Avoid broad, blanket consent forms that don't specify exact usage scenarios

- Implement regular consent refresh cycles, with Colorado's 24-month requirement as a benchmark [23]

Technical and Data Management Challenges

Problem: Managing large-scale neural datasets while maintaining privacy

Solution: Adopt specialized preprocessing pipelines like DeepPrep that demonstrate tenfold acceleration over conventional methods while maintaining data integrity. For even greater scalability, leverage workflow managers like Nextflow that can efficiently distribute processing across local computers, high-performance computing clusters, and cloud environments [26].

Table: Neural Data Preprocessing Pipeline Comparison

| Feature | Traditional Pipelines | DeepPrep Approach |

|---|---|---|

| Processing Time (per participant) | 318.9 ± 43.2 minutes | 31.6 ± 2.4 minutes [26] |

| Scalability | Designed for small sample sizes | Processes 1,146 participants/week [26] |

| Clinical Robustness | 69.8% completion rate on distorted brains | 100% completion rate [26] |

| Computational Expense | 5.8-22.1 times higher | Significantly lower due to dynamic resource allocation [26] |

Problem: Protecting against unauthorized mental state inference

Solution: Adopt a technology-agnostic framework that focuses on protecting against harmful inferences regardless of data source. Rather than regulating specific technical categories, implement safeguards that trigger whenever mental or health state inference occurs, whether from neural data or other biometric signals [27].

Research Reagent Solutions

Table: Essential Tools for Neural Data Research

| Tool/Technology | Function | Application Context |

|---|---|---|

| Neuropixels Probes | High-density neural recording | Large-scale electrophysiology across multiple brain regions [28] |

| DeepPrep Pipeline | Accelerated neuroimaging preprocessing | Processing structural and functional MRI data with deep learning efficiency [26] |

| BIDS (Brain Imaging Data Structure) | Standardized data organization | Ensuring reproducible neuroimaging data management [26] |

| Federated Learning Frameworks | Distributed model training | Analyzing patterns across datasets without centralizing raw neural data [25] |

| Differential Privacy Tools | Statistical privacy protection | Adding mathematical privacy guarantees to shared neural data [25] |

Workflow Diagrams

Neural Data Preprocessing and Privacy Compliance Workflow

Neural Data Privacy Compliance Pathway

Practical Preprocessing Pipelines: From Raw Data to Analysis-Ready Signals

Troubleshooting Guides

Guide 1: Resolving Excessive Noise and Missing Alpha Peaks in Resting-State EEG

Problem: A resting-state EEG recording shows an extremely noisy signal and a lack of the expected alpha peak (8-13 Hz) in the power spectral density (PSD) plot, even after basic filtering.

Symptoms:

- A PSD plot dominated by a large, narrow peak at 60 Hz (or 50 Hz, depending on region) [29].

- A PSD that appears flat and does not show the typical 1/f-like structure of clean EEG after broad filtering [29].

- No distinct peak in the alpha frequency band during eyes-closed resting states [29].

Diagnosis and Solutions:

Step 1: Confirm the Noise Source. The sharp PSD peak at 60 Hz is a classic indicator of power line interference from alternating current (AC) in the environment [29] [30]. This interference can be exacerbated by unshielded cables, nearby electrical devices, or improper grounding [31].

Step 2: Apply a Low-Pass Filter. If your analysis does not require frequencies above 45 Hz, apply a low-pass filter with a cutoff at 40-45 Hz. This is often more effective than a narrow notch filter for removing the 60 Hz line noise and its influence, as it attenuates a wider band of high-frequency interference [29].

Step 3: Re-examine the PSD. Observe the PSD of the low-pass filtered data up to 45 Hz. If the signal remains flat and lacks an alpha peak, the data may have been contaminated by broadband noise across all frequencies, potentially from environmental electromagnetic interference (EMI) or hardware issues, which can obscure neural signals [29].

Step 4: Check Recording Conditions. Investigate the recording setup:

- Amplifier and Cables: Ensure the EEG amplifier is functioning correctly and that all cables are properly shielded and connected [31].

- Simultaneous Recordings: Be aware that recording multiple participants simultaneously on one amplifier or collecting other physiological data (like ECG) can sometimes introduce complex noise profiles [29].

- Environmental Check: Identify and remove potential noise sources from the recording environment, such as monitors, power adapters, or fluorescent lights [31].

Conclusion: If the time-series signal looks plausible after low-pass filtering, the data may still be usable for analyses like connectivity or power-based metrics. However, a complete lack of an alpha peak suggests significant signal quality issues [29].
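
Steps 2 and 3 of this guide can be sketched with SciPy as follows. The filter order, the 45 Hz cutoff, the comparison band for the alpha check, and the synthetic signal are assumptions chosen for the example, not values prescribed by [29].

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt, welch

def lowpass(signal, fs, cutoff_hz=45.0, order=4):
    """Zero-phase Butterworth low-pass filter."""
    sos = butter(order, cutoff_hz, btype="lowpass", fs=fs, output="sos")
    return sosfiltfilt(sos, signal)

def band_power(freqs, psd, lo, hi):
    """Mean PSD between lo (inclusive) and hi (exclusive) in Hz."""
    mask = (freqs >= lo) & (freqs < hi)
    return psd[mask].mean()

# Synthetic eyes-closed EEG (hypothetical): 10 Hz alpha, white noise, strong 60 Hz pickup.
fs = 250.0
t = np.arange(0, 60, 1 / fs)
rng = np.random.default_rng(0)
eeg = (10.0 * np.sin(2 * np.pi * 10.0 * t)
       + 5.0 * rng.normal(size=t.size)
       + 30.0 * np.sin(2 * np.pi * 60.0 * t))

filtered = lowpass(eeg, fs)
freqs, psd_raw = welch(eeg, fs=fs, nperseg=int(4 * fs))
_, psd_filt = welch(filtered, fs=fs, nperseg=int(4 * fs))

line_raw = band_power(freqs, psd_raw, 59.0, 61.0)
line_filt = band_power(freqs, psd_filt, 59.0, 61.0)   # strongly attenuated
# Alpha check: power in 8-13 Hz relative to the neighbouring 14-30 Hz band.
alpha_ratio = band_power(freqs, psd_filt, 8.0, 13.0) / band_power(freqs, psd_filt, 14.0, 30.0)
```

An alpha ratio near 1 on real eyes-closed data would correspond to the flat spectrum described in Step 3 and is a cue to inspect the recording conditions.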

Guide 2: Eliminating Muscle Artifacts for Clean Spike Sorting

Problem: Neural signals intended for spike sorting are contaminated with high-frequency noise from muscle activity (EMG), making it difficult to isolate the precise morphology of action potentials.

Symptoms:

- High-frequency, irregular noise superimposed on the neural signal [32] [31].

- Poor performance of spike sorting algorithms due to distorted waveform shapes.

Solution: Implement Wavelet Denoising. Wavelet-based methods are highly effective for in-band noise reduction while preserving the morphology of spikes, which is crucial for sorting [33]. The performance is strongly dependent on the choice of parameters.

Table 1: Optimal Wavelet Denoising Parameters for Neural Signal Processing

| Parameter | Recommended Choice | Rationale and Implementation Details |

|---|---|---|

| Mother Wavelet | Haar | This simple wavelet is well-suited for representing transient, spike-like signals [33]. |

| Decomposition Level | 5 levels | This level is appropriate for signals sampled at around 16 kHz and effectively separates signal from noise [33]. |

| Thresholding Method | Hard Thresholding | This method zeroes out coefficients below the threshold, better preserving the sharp features of neural spikes compared to soft thresholding [33]. |

| Threshold Estimation | Han et al. (2007) | This threshold definition has been shown to outperform other methods in preserving spike morphology while reducing noise [33]. |

| Performance | Outperforms 300-3000 Hz bandpass filter | This wavelet parametrization yields higher Pearson's correlation, lower root-mean-square error, and better signal-to-noise ratio compared to conventional filtering [33]. |
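
The Haar, 5-level, hard-thresholding configuration in the table can be sketched in plain NumPy. One substitution should be noted: the threshold below is the widely used universal threshold with a median-based noise estimate, standing in for the Han et al. (2007) definition, which is not reproduced here. Function names and the synthetic spike train are likewise illustrative assumptions.

```python
import numpy as np

def haar_dwt(signal, levels):
    """Multi-level orthonormal Haar transform; details are ordered finest to coarsest."""
    approx = np.asarray(signal, dtype=float)
    details = []
    for _ in range(levels):
        even, odd = approx[0::2], approx[1::2]
        details.append((even - odd) / np.sqrt(2.0))
        approx = (even + odd) / np.sqrt(2.0)
    return approx, details

def haar_idwt(approx, details):
    """Invert haar_dwt, starting from the coarsest detail level."""
    for detail in reversed(details):
        even = (approx + detail) / np.sqrt(2.0)
        odd = (approx - detail) / np.sqrt(2.0)
        approx = np.empty(even.size * 2)
        approx[0::2], approx[1::2] = even, odd
    return approx

def haar_denoise(signal, levels=5):
    """Hard-threshold Haar detail coefficients (universal threshold, an assumption)."""
    usable = len(signal) - len(signal) % (2 ** levels)  # trailing samples are dropped
    approx, details = haar_dwt(signal[:usable], levels)
    sigma = np.median(np.abs(details[0])) / 0.6745      # noise estimate from finest level
    threshold = sigma * np.sqrt(2.0 * np.log(usable))
    details = [np.where(np.abs(d) > threshold, d, 0.0) for d in details]
    return haar_idwt(approx, details)

# Synthetic recording (hypothetical): Gaussian-shaped spikes in unit-variance noise at 16 kHz.
fs = 16000
rng = np.random.default_rng(0)
clean = np.zeros(fs)
k = np.arange(24)
template = -10.0 * np.exp(-0.5 * ((k - 8) / 2.0) ** 2)
for onset in range(500, fs - 500, 800):
    clean[onset:onset + 24] += template
noisy = clean + rng.normal(size=fs)

denoised = haar_denoise(noisy, levels=5)
rmse_noisy = np.sqrt(np.mean((noisy - clean) ** 2))
rmse_denoised = np.sqrt(np.mean((denoised - clean) ** 2))
```

Comparing the two RMSE values against a known clean signal mirrors the kind of evaluation summarized in the Performance row, although the numbers from this toy example say nothing about real recordings.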

Guide 3: Adaptively Removing Electrical Interference in Electrophysiology

Problem: Extracellular electrophysiology recordings are contaminated with narrow-band electrical interference from various sources, which is difficult to remove with pre-set filters because the exact frequencies may be unknown or variable.

Symptoms:

- Distinct, narrow peaks in the frequency domain of the signal that are not of neural origin [30].

- A deteriorated signal-to-noise ratio (SNR) that hampers spike-sorting accuracy [30].

Solution: Apply Adaptive Frequency-Domain Filtering. This method automatically detects and removes narrow-band interference without requiring prior knowledge of the exact frequencies [30].

Experimental Protocol: Spectral Peak Detection and Removal (SPDR)

- Compute the Signal Spectrum: Calculate the power spectral density (PSD) of the recorded electrophysiology data.

- Detect Spectral Peaks: Scan the PSD to identify tall, narrowband peaks. The detection is based on a Spectral Peak Prominence (SPP) threshold [30].

- Apply Targeted Notch Filtering: For each peak identified by the algorithm, apply a narrow notch filter centered precisely on that frequency to remove the interference [30].

- Validate to Avoid Over-filtering: Proper selection of the SPP threshold is critical. If set too low, it may mistake neural signal features for noise, leading to distortion. The firing-rate activity in the filtered data should be consistent with a secondary cellular activity marker (e.g., fluorescence calcium imaging) to validate that neural information is preserved [30].
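
The first three protocol steps can be approximated with standard SciPy building blocks. This sketch is not the SPDR implementation from [30]: peak prominence in decibels from `scipy.signal.find_peaks` is used as a stand-in for the SPP criterion, and the 20 dB threshold, the 5 Hz notch bandwidth, and the synthetic recording are assumptions for illustration.

```python
import numpy as np
from scipy.signal import welch, find_peaks, iirnotch, filtfilt

def remove_spectral_peaks(signal, fs, prominence_db=20.0, bandwidth_hz=5.0):
    """Detect prominent narrow PSD peaks and notch each one out."""
    freqs, psd = welch(signal, fs=fs, nperseg=int(fs))   # about 1 Hz resolution
    psd_db = 10.0 * np.log10(np.maximum(psd, np.finfo(float).tiny))
    peak_idx, _ = find_peaks(psd_db, prominence=prominence_db)
    cleaned, removed = signal, []
    for idx in peak_idx:
        f0 = freqs[idx]
        if 0.0 < f0 < fs / 2:
            # Quality factor chosen so that every notch has the same width in Hz.
            b, a = iirnotch(f0, f0 / bandwidth_hz, fs=fs)
            cleaned = filtfilt(b, a, cleaned)
            removed.append(float(f0))
    return cleaned, removed

# Synthetic recording (hypothetical): white noise plus two interference tones.
fs = 20000.0
t = np.arange(int(5 * fs)) / fs
rng = np.random.default_rng(0)
recording = (rng.normal(size=t.size)
             + np.sin(2 * np.pi * 1370.0 * t)
             + np.sin(2 * np.pi * 4210.0 * t))

cleaned, removed = remove_spectral_peaks(recording, fs)  # removed lists the notched frequencies
```

As the validation step warns, lowering the prominence threshold makes the detector more aggressive, so the list of removed frequencies is worth inspecting before trusting the filtered data.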

Frequently Asked Questions (FAQs)

Q: What is the most common type of artifact in EEG, and how can I identify it? A: Ocular artifacts from eye blinks and movements are among the most common. In the time-domain, they appear as sharp, high-amplitude deflections over frontal electrodes (like Fp1 and Fp2). In the frequency-domain, their power is dominant in the low delta (0.5-4 Hz) and theta (4-8 Hz) bands [32] [31].

Q: My data has a huge 60 Hz peak. Should I use a notch filter or a low-pass filter? A: For most analyses focusing on frequencies below 45 Hz, a low-pass filter is preferable. Because its transition band can be made suitably wide, it attenuates line noise more strongly than a very narrow notch filter, and it also removes other high-frequency interference, not just the 60 Hz component [29].

Q: Why would I choose wavelet denoising over a conventional band-pass filter for neural signals? A: Conventional band-pass filters are effective for removing noise outside a specified range. However, wavelet denoising is superior at reducing in-band noise—noise within the same frequency band as your signal of interest (like spikes). This leads to better preservation of spike morphology, which is critical for the accuracy of downstream spike sorting [33].

Q: What is a simple first step to remove cardiac artifacts from my EEG data? A: A common approach is to use a reference channel. Since cardiac activity (ECG) can be measured with a characteristic regular pattern, you can record an ECG signal simultaneously. During processing, you can use this reference to estimate and subtract the cardiac artifact from the EEG channels using regression-based methods or blind source separation [32].
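
The regression-based variant of this approach can be sketched as a least-squares projection. The function name, the pulse-train stand-in for the ECG, and the channel gains are assumptions for illustration; blind source separation is not shown.

```python
import numpy as np

def regress_out_reference(eeg, reference):
    """Subtract the least-squares fit of a reference channel from each EEG channel.

    eeg has shape (n_channels, n_samples); reference has shape (n_samples,).
    """
    ref = reference - reference.mean()
    eeg_centered = eeg - eeg.mean(axis=1, keepdims=True)
    beta = eeg_centered @ ref / (ref @ ref)   # one regression weight per channel
    return eeg - np.outer(beta, ref), beta

# Synthetic example (hypothetical): a pulse train leaks into three channels.
fs = 250.0
t = np.arange(int(60 * fs)) / fs
rng = np.random.default_rng(0)
ecg = 5.0 * (np.sin(2 * np.pi * 1.2 * t) > 0.99)
gains = np.array([0.5, 2.0, -1.0])
neural = rng.normal(size=(3, t.size))
contaminated = neural + np.outer(gains, ecg)

cleaned, beta = regress_out_reference(contaminated, ecg)  # beta approximates gains
```

A single weight per channel assumes the artifact reaches every electrode with the same waveform and no delay, which is a simplification of real cardiac artifacts.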

The Scientist's Toolkit: Essential Materials for Neural Data Preprocessing

Table 2: Key Research Reagents and Solutions for Neural Signal Processing

| Item Name | Function/Brief Explanation |

|---|---|

| Tungsten Microelectrode (5 MΩ) | Used for in vivo extracellular recordings from specific brain nuclei, such as the cochlear nucleus, providing high-impedance targeted recordings [34]. |

| ICA Algorithm | A blind source separation (BSS) technique used to decompose EEG signals into independent components, allowing for the identification and removal of artifact-laden sources [32] [31]. |

| Spectral Peak Prominence (SPP) Threshold | A software-based parameter used in adaptive filtering to automatically detect narrow-band interference in the frequency domain for subsequent removal [30]. |

| Haar Wavelet | A specific mother wavelet function that is optimal for wavelet denoising of neural signals when the goal is to preserve the morphology of action potentials [33]. |

| Frozen Noise Stimulus | A repeated, identical broadband auditory stimulus used to compute neural response coherence and reliability, essential for validating filtering efficacy in auditory research [34]. |

Workflow Visualization

Spike sorting is a fundamental data analysis technique in neurophysiology that processes raw extracellular recordings to identify and classify action potentials, or "spikes," from individual neurons. This process is critical because modern electrodes typically capture electrical activity from multiple nearby neurons simultaneously. Without sorting, the spikes from different neurons are mixed together and cannot be interpreted on a neuron-by-neuron basis [35].

The core assumption underlying spike sorting is that each neuron produces spikes with a characteristic shape, while spikes from different neurons are distinct enough to be separated. This process enables researchers to study how individual neurons encode information, their functional roles in neural circuits, and their connectivity patterns [36] [37].

Frequently Asked Questions (FAQs)

1. What are the main steps in a standard spike sorting pipeline? Most spike sorting methodologies follow a consistent pipeline consisting of four key stages [38]:

- Signal Filtering: Raw neural signals are band-pass filtered (typically between 300-3000 Hz) to isolate the frequency components of spikes from lower-frequency local field potentials and noise [38].

- Spike Detection: The filtered signal is analyzed to identify candidate spike events, often using amplitude thresholding where signals crossing a predefined threshold are marked as spikes [35] [37].

- Feature Extraction: Detected spikes are aligned and characterized by a set of informative features, reducing the dimensionality of the data for clustering. This can involve techniques like Principal Component Analysis (PCA), waveform derivatives, or advanced nonlinear manifold learning [36] [39] [40].

- Clustering: Spikes are grouped into clusters based on the similarity of their features, with each cluster ideally corresponding to the activity of a single neuron [38].
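
The first three stages of this pipeline can be sketched with NumPy and SciPy. The details are assumptions for illustration rather than a reference implementation: a third-order Butterworth filter, a negative threshold at 5 times a median-based noise estimate, fixed 0.5 ms and 1 ms cut-out windows, and PCA computed via SVD. Clustering is left out.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def detect_spikes(raw, fs, band=(300.0, 3000.0), k=5.0, pre_ms=0.5, post_ms=1.0):
    """Band-pass filter, detect negative threshold crossings, cut aligned waveforms."""
    sos = butter(3, band, btype="bandpass", fs=fs, output="sos")
    filtered = sosfiltfilt(sos, raw)
    sigma = np.median(np.abs(filtered)) / 0.6745          # robust noise estimate
    below = filtered < -k * sigma
    crossings = np.flatnonzero(below[1:] & ~below[:-1]) + 1
    pre, post = int(round(pre_ms * 1e-3 * fs)), int(round(post_ms * 1e-3 * fs))
    peaks, last = [], -post
    for c in crossings:
        if c - last < post:                                # same spike re-crossing
            continue
        last = c
        peak = c + int(np.argmin(filtered[c:c + post]))    # align on the trough
        if peak - pre >= 0 and peak + post <= filtered.size:
            peaks.append(peak)
    peaks = np.array(peaks, dtype=int)
    waveforms = np.array([filtered[p - pre:p + post] for p in peaks])
    return peaks, waveforms

# Synthetic recording (hypothetical): identical spikes every 2000 samples in white noise.
fs = 24000.0
rng = np.random.default_rng(0)
raw = rng.normal(size=int(2 * fs))
t = np.arange(24) / fs
template = -15.0 * np.sin(2 * np.pi * 1000.0 * t) * np.hanning(24)
onsets = np.arange(2000, 46000, 2000)
for onset in onsets:
    raw[onset:onset + 24] += template
true_peaks = onsets + int(np.argmin(template))

peaks, waveforms = detect_spikes(raw, fs)

# Feature extraction: project waveforms onto the first three principal components.
centered = waveforms - waveforms.mean(axis=0)
_, _, vt = np.linalg.svd(centered, full_matrices=False)
features = centered @ vt[:3].T
```

The resulting feature matrix (one row per spike) is what a clustering algorithm would take as input in the final stage.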

2. What is the key difference between offline and online spike sorting? Offline spike sorting is performed after data collection is complete. This allows for the use of more computationally intensive and sophisticated algorithms that may provide higher accuracy, as there are no strict time constraints [38]. Online spike sorting must be performed during the recording itself, requiring faster and more computationally efficient approaches to keep up with the incoming data stream. This is crucial for brain-machine interfaces where real-time processing is needed [41] [38].

3. My spike sorting results are noisy and clusters are not well-separated. What can I do? Poor cluster separation can arise from high noise levels or suboptimal feature extraction. You can consider the following troubleshooting steps:

- Verify Preprocessing: Ensure your band-pass filter settings are appropriate for your spike data.

- Advanced Feature Extraction: Shift from linear methods like PCA to nonlinear dimensionality reduction techniques such as UMAP, t-SNE, or PHATE. These methods are better at capturing complex, nonlinear relationships in spike waveforms and can create more separable clusters [42] [36].

- Robust Clustering Algorithms: Use clustering methods known for handling noise and irregular cluster shapes, such as density-based clustering (e.g., DBSCAN) or hierarchical agglomerative clustering [36] [39].

4. How can I validate the results of my spike sorting? Validation is a critical step. For synthetic datasets where the true neuron sources are known, you can use metrics like Accuracy or the Adjusted Rand Index (ARI) to compare sorting results against ground truth [42]. For real data without ground truth, internal validation metrics such as the Silhouette Score and Davies-Bouldin Index can help assess cluster quality and cohesion [40]. Furthermore, you can check for the presence of a refractory period (a brief interval of 1-2 ms with no spikes) in the autocorrelogram of each cluster, which is a physiological indicator of a well-isolated single neuron [37].
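
The refractory-period check can be expressed as the fraction of inter-spike intervals shorter than the refractory window. A minimal sketch, assuming spike times in seconds and a 2 ms window; the function name and the synthetic spike trains are illustrative.

```python
import numpy as np

def refractory_violation_rate(spike_times_s, refractory_s=0.002):
    """Fraction of inter-spike intervals shorter than the refractory period."""
    isi = np.diff(np.sort(spike_times_s))
    if isi.size == 0:
        return 0.0
    return float(np.mean(isi < refractory_s))

# Synthetic units (hypothetical): each respects a 3 ms minimum interval on its own.
rng = np.random.default_rng(0)
unit_a = np.cumsum(0.003 + rng.exponential(0.05, size=1000))
unit_b = np.cumsum(0.003 + rng.exponential(0.05, size=1000))

rate_single = refractory_violation_rate(unit_a)                            # no violations
rate_merged = refractory_violation_rate(np.concatenate([unit_a, unit_b]))  # violations appear
```

A cluster that mixes spikes from two neurons shows violations because the two trains are not constrained relative to each other, which is why this measure is useful as an isolation check.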

5. Are there fully automated spike sorting methods available? Yes, the field is moving toward full automation to handle the increasing channel counts from modern neural probes. Methods like NhoodSorter [39], Kilosort [38], and fully unsupervised Spiking Neural Networks (SNNs) [41] are designed to operate with minimal to no manual intervention. These methods often incorporate robust feature extraction and automated clustering decisions to streamline the workflow.

Troubleshooting Common Spike Sorting Issues

| Problem | Possible Causes | Potential Solutions |

|---|---|---|

| Low cluster separation [36] [37] | High noise levels, suboptimal feature extraction failing to capture discriminative features. | Employ advanced nonlinear feature extraction (UMAP, PHATE) [42] [36]. Use robust, density-based clustering algorithms [39]. |

| Too many clusters | Over-fitting, splitting single neuron units due to drift or noise. | Apply cluster merging strategies based on waveform similarity or firing statistics. Use a separability index to guide merging decisions [37]. |

| Too few clusters | Under-fitting, merging multiple distinct neurons into one cluster. | Increase feature space resolution (e.g., use more principal components or derivative-based features) [40]. Utilize clustering methods that automatically infer cluster count [36]. |

| Drifting cluster shapes [36] | Electrode drift or physiological changes causing slow variation in spike waveform shape over time. | Implement drift-correction algorithms. Use sorting methods that model waveforms as a mixture of drifting t-distributions [36]. |

| Handling overlapping spikes [36] [40] | Two or more neurons firing nearly simultaneously, resulting in a superimposed waveform. | Use feature extraction methods robust to overlaps (e.g., spectral embedding) [36]. Employ specialized algorithms like template optimization in phase space to resolve superpositions [40]. |

Quantitative Performance Comparison of Modern Spike Sorting Methods

The table below summarizes the demonstrated performance of various contemporary spike sorting approaches as reported in recent studies. This can serve as a guide for selecting a method suitable for your data.

| Method / Algorithm | Core Methodology | Reported Performance (Accuracy) | Key Strengths |

|---|---|---|---|

| GSA-Spike / GUA-Spike [36] | Gradient-based preprocessing with Spectral Embedding/UMAP and clustering. | Up to 100% (non-overlapping) and 99.47% (overlapping) on synthetic data. | High accuracy, excellent at resolving overlapping spikes. |

| Deep Clustering (ACeDeC, DDC, etc.) [38] | Autoencoder-based neural networks that jointly learn features and cluster. | Significantly outperforms traditional methods (e.g., PCA+K-means) on complex datasets. | Learns non-linear representations tailored for clustering; handles complexity well. |

| NhoodSorter [39] | Improved Locality Preserving Projections (LPP) with density-peak clustering. | Excellent noise resistance and high accuracy on both simulated and real data. | Fully automated, robust to noise, user-friendly. |

| Spectral Clustering [37] | Clustering on a similarity graph built from raw waveforms or PCA features. | ~73.84% average accuracy on raw data across 16 signals of varying difficulty. | Effective with raw samples, reducing need for complex feature engineering. |

| SS-SPDF / K-TOPS [40] | Shape, phase, and distribution features with template optimization. | High performance on single-unit and overlapping waveforms in real recordings. | Uses physiologically informative features; includes validity/error indices. |

| Frugal Spiking Neural Network [41] | Single-layer SNN with STDP learning for unsupervised pattern recognition. | Effective for online, unsupervised classification on simulated and real data. | Ultra-low power consumption; suitable for future implantation in hardware. |

Experimental Protocols for Advanced Methodologies

Protocol 1: Implementing Nonlinear Manifold Feature Extraction

This protocol is based on studies demonstrating that nonlinear feature extraction outperforms traditional PCA [42] [36].

Data Preprocessing:

- Load your detected and aligned spike waveforms.

- Normalize the amplitude of each spike waveform to unit variance to minimize amplitude-based bias.

Feature Extraction with UMAP:

- Input the matrix of normalized spike waveforms (samples × time points) into the UMAP algorithm.

- Set the target dimensionality for the embedding, typically 2 or 3 for visualization and initial clustering assessment.

- Configure UMAP parameters: n_neighbors (e.g., 15-50, balances local/global structure) and min_dist (e.g., 0.1, controls cluster tightness).

- Run UMAP to project the high-dimensional spikes into a low-dimensional space.
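The preprocessing and embedding steps of this protocol could be sketched roughly as follows. This is a minimal illustration, not the pipeline used in the cited studies: the function names `normalize_waveforms` and `embed_spikes`, the synthetic spikes, and the default parameter values are assumptions, and the embedding step relies on the third-party umap-learn package being installed.

```python
import numpy as np


def normalize_waveforms(waveforms: np.ndarray) -> np.ndarray:
    """Scale each spike (row) to unit variance."""
    std = waveforms.std(axis=1, keepdims=True)
    std[std == 0] = 1.0  # leave flat waveforms unchanged instead of dividing by zero
    return waveforms / std


def embed_spikes(waveforms, n_components=2, n_neighbors=15, min_dist=0.1, seed=0):
    """Project normalized spikes (samples x time points) into a low-dimensional space."""
    import umap  # provided by the umap-learn package (assumed to be installed)

    reducer = umap.UMAP(
        n_components=n_components,  # target dimensionality, typically 2 or 3
        n_neighbors=n_neighbors,    # balances local vs. global structure
        min_dist=min_dist,          # controls how tightly points are packed
        random_state=seed,
    )
    return reducer.fit_transform(normalize_waveforms(waveforms))


# Synthetic stand-in for detected, aligned spikes: 200 spikes x 32 samples.
rng = np.random.default_rng(0)
template = np.sin(np.linspace(0, np.pi, 32))
amplitudes = rng.uniform(0.5, 2.0, size=(200, 1))
spikes = amplitudes * template + rng.normal(0, 0.05, size=(200, 32))

normalized = normalize_waveforms(spikes)
```

With umap-learn available, `embed_spikes(spikes)` returns one row per spike and one column per embedding dimension, which can then be passed to the clustering step.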

Clustering and Validation:

Protocol 2: Unsupervised Spike Sorting with a Spiking Neural Network

This protocol outlines the use of a frugal SNN for fully unsupervised sorting, ideal for low-power applications [41].

Network Architecture:

- Construct a single-layer network of Low-Threshold Spiking (LTS) neurons. The dynamics of LTS neurons automatically adapt to the temporal durations of input patterns.

- Connect the input spike trains (from your recorded data) to the LTS neurons via plastic synapses.

Unsupervised Learning:

- Use Spike-Timing-Dependent Plasticity (STDP) as the local learning rule. STDP strengthens synapses from input neurons that consistently fire shortly before the output neuron fires.

- Implement Intrinsic Plasticity (IP) to adjust the excitability of the output neurons, helping them to specialize in different input patterns.

Pattern Classification:

- Present the multichannel neural data stream to the network. Each output neuron will self-configure through STDP and IP to fire specifically for one recurring spatiotemporal spike pattern.

- The firing of a specific output neuron signals the detection of its assigned pattern (i.e., a particular neuron's action potential across multiple channels).
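To make the learning rule concrete, the sketch below shows a generic pair-based STDP update for a single synapse. It is not the LTS network with intrinsic plasticity described in [41]; the function names, amplitudes, and time constants are illustrative assumptions only.

```python
import math


def stdp_weight_change(dt_ms, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP, where dt_ms = t_post - t_pre in milliseconds."""
    if dt_ms > 0:  # input fired shortly before the output neuron: strengthen
        return a_plus * math.exp(-dt_ms / tau_plus)
    if dt_ms < 0:  # input fired after the output neuron: weaken
        return -a_minus * math.exp(dt_ms / tau_minus)
    return 0.0


def update_weight(w, pre_times, post_times, w_min=0.0, w_max=1.0):
    """Apply every pre/post spike pairing to one synapse, then clip the weight."""
    for t_post in post_times:
        for t_pre in pre_times:
            w += stdp_weight_change(t_post - t_pre)
    return min(max(w, w_min), w_max)


# Input consistently leads the output by 5 ms: the synapse is strengthened.
w_causal = update_weight(0.5, pre_times=[10.0, 50.0], post_times=[15.0, 55.0])
# Input consistently lags the output by 5 ms: the synapse is weakened.
w_acausal = update_weight(0.5, pre_times=[15.0, 55.0], post_times=[10.0, 50.0])

print(f"causal pairing: {w_causal:.4f}, acausal pairing: {w_acausal:.4f}")
```

The exponential windows mean that only spike pairs within a few tens of milliseconds change the weight appreciably, which is what lets an output neuron lock onto a recurring input pattern.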

Visualizing the Spike Sorting Workflow

The following diagram illustrates the two main branches of the spike sorting pipeline, contrasting traditional and modern approaches.

The Scientist's Toolkit: Essential Research Reagents & Solutions

This table details key computational tools and algorithms that function as essential "reagents" in a spike sorting experiment.

| Tool / Algorithm | Function / Role | Key Characteristics |

|---|---|---|

| PCA (Principal Component Analysis) [39] | Linear dimensionality reduction for initial feature extraction. | Computationally efficient, simple to implement, but may struggle with complex non-linear data structures. |

| UMAP (Uniform Manifold Approximation and Projection) [42] [36] | Non-linear dimensionality reduction for advanced feature extraction. | Preserves both local and global data structure, often leads to highly separable clusters for spike sorting. |

| t-SNE (t-Distributed Stochastic Neighbor Embedding) [42] | Non-linear dimensionality reduction primarily for visualization. | Excellent at revealing local structure and cluster separation, though computationally heavier than PCA. |

| K-Means Clustering [40] | Partition-based clustering algorithm. | Simple and fast, but requires pre-specifying the number of clusters (K) and assumes spherical clusters. |

| Hierarchical Agglomerative Clustering [36] | Clustering by building a hierarchy of nested clusters. | Does not require pre-specifying the cluster count; produces an informative dendrogram. |

| Spectral Clustering [37] | Clustering based on the graph Laplacian of the data similarity matrix. | Effective for identifying clusters of non-spherical shapes and when cluster separation is non-linear. |

| Spiking Neural Network (SNN) with STDP [41] | Unsupervised, neuromorphic pattern classification. | Extremely frugal and energy-efficient; suitable for online, real-time sorting and potential hardware embedding. |

Frequently Asked Questions

Q1: My machine learning model's performance is poor. Could improperly scaled data be the cause? Yes, this is a common issue. Many algorithms, especially those reliant on distance calculations like k-nearest neighbors (K-NN) and k-means, or those using gradient descent-based optimization (like SVMs and neural networks), are sensitive to the scale of your input features [43] [44]. If features are on different scales, one with a larger range (e.g., annual salary) can dominate the algorithm's behavior, leading to biased or inaccurate models [45] [44]. Normalizing your data ensures all features contribute equally to the result.

Q2: When should I use Min-Max scaling versus Z-score normalization? The choice depends on your data's characteristics and the presence of outliers. The table below summarizes the key differences:

| Feature | Min-Max Scaling | Z-Score Normalization |

|---|---|---|

| Formula | (value - min) / (max - min) [46] [44] | (value - mean) / standard deviation [45] [47] |

| Resulting Range | Bounded (e.g., [0, 1]) [44] | Mean of 0, Standard Deviation of 1 [45] |

| Handling of Outliers | Sensitive; a single outlier can skew the scale [46] [44] | Less sensitive than Min-Max, although extreme outliers still shift the mean and standard deviation [45] [44] |

| Ideal Use Case | Bounded data, neural networks requiring a specific input range [46] [44] | Data with outliers, clustering, algorithms assuming centered data [45] [44] |
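The two formulas in the table can be compared on a small example. The sketch below uses NumPy and a made-up set of firing rates with one extreme value; the numbers are purely illustrative.

```python
import numpy as np


def min_max_scale(x):
    """(value - min) / (max - min), mapping the data onto [0, 1]."""
    return (x - x.min()) / (x.max() - x.min())


def z_score(x):
    """(value - mean) / standard deviation, giving mean 0 and std 1."""
    return (x - x.mean()) / x.std()


# Hypothetical firing rates (Hz) with one artifact-driven outlier at the end.
rates = np.array([4.0, 5.0, 6.0, 5.5, 4.5, 120.0])

mm = min_max_scale(rates)
zs = z_score(rates)

print("min-max:", np.round(mm, 3))
print("z-score:", np.round(zs, 3))
```

After Min-Max scaling, the five typical rates occupy less than 2% of the [0, 1] range because the outlier defines the maximum. Their z-scores also end up close together, since the outlier inflates both the mean and the standard deviation.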

Q3: I applied a log transformation to my skewed neural data, but the distribution looks worse. What happened? While log transformations are often used to handle right-skewed data, they do not automatically fix all types of skewness [48]. If your original data does not follow a log-normal distribution, the transformation can sometimes make the distribution more skewed [48]. It is crucial to validate the effect of any transformation by checking the resulting data distribution.
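One way to validate a transformation is to compare skewness before and after applying it. The sketch below does this on two simulated features; the data, the seed, and the `skewness` helper are assumptions for illustration, not part of any cited analysis.

```python
import numpy as np


def skewness(x):
    """Sample skewness (third standardized moment)."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean()
    return np.mean(centered**3) / np.std(x) ** 3


rng = np.random.default_rng(42)
# Strongly right-skewed feature that really is log-normal.
log_normal = rng.lognormal(mean=1.0, sigma=0.8, size=5000)
# Roughly symmetric, strictly positive feature (clipped to avoid log of <= 0).
near_symmetric = np.clip(rng.normal(10.0, 3.0, size=5000), 0.5, None)

for name, data in [("log-normal", log_normal), ("near-symmetric", near_symmetric)]:
    before = skewness(data)
    after = skewness(np.log(data))
    print(f"{name}: skew before = {before:.2f}, after log = {after:.2f}")
```

The log-normal feature becomes close to symmetric after the transform, whereas the already symmetric feature becomes left-skewed, which is the situation described in this answer.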

Q4: Do I need to normalize my data for all machine learning models? No. Tree-based algorithms (e.g., Decision Trees, Random Forests) are generally scale-invariant because they make decisions based on feature thresholds [43] [44]. Normalization is not necessary for these models.

Q5: When in the machine learning pipeline should I perform normalization? You should always perform normalization after splitting your data into training and test sets [44]. Calculate the normalization parameters (like min, max, mean, and standard deviation) using only the training data. Then, apply these same parameters to transform your test data. This prevents "data leakage," where information from the test set influences the training process, leading to over-optimistic and invalid performance estimates.

Troubleshooting Guides

Problem: Model Performance is Degraded After Normalization

Potential Cause #1: The presence of extreme outliers.

- Diagnosis: Plot boxplots or histograms of your features. A few data points far from the majority can disrupt scaling.

- Solution:

- For Z-score: While Z-score is more robust, extreme outliers can still be problematic. Consider Robust Scaling, which uses the median and interquartile range instead of the mean and standard deviation [49] [6].

- For Min-Max: Outliers will force all other values into a very narrow range [46]. You may need to implement outlier detection and removal or clipping (winsorizing) before applying Min-Max scaling.
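Both remedies can be sketched in a few lines of NumPy. The helper names, the 1st/99th percentile limits, and the simulated feature below are illustrative assumptions; appropriate limits depend on your data.

```python
import numpy as np


def robust_scale(train, data):
    """Center on the training median and divide by the training IQR."""
    median = np.median(train)
    q1, q3 = np.percentile(train, [25, 75])
    return (data - median) / (q3 - q1)


def winsorize(train, data, lower_pct=1.0, upper_pct=99.0):
    """Clip values to percentile limits estimated on the training data."""
    lo, hi = np.percentile(train, [lower_pct, upper_pct])
    return np.clip(data, lo, hi)


rng = np.random.default_rng(0)
feature = rng.normal(5.0, 1.0, size=500)
feature[:3] = [80.0, 95.0, 120.0]  # injected extreme outliers

# Option 1: robust scaling (median and IQR barely move when outliers are present).
scaled = robust_scale(feature, feature)

# Option 2: clip first, then apply Min-Max scaling to the clipped values.
clipped = winsorize(feature, feature)
min_max_after_clip = (clipped - clipped.min()) / (clipped.max() - clipped.min())

print("largest value after clipping:", round(float(clipped.max()), 2))
```

Passing the training data separately from the data to transform keeps the same "fit on training, apply everywhere" discipline used for the other scalers.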

Potential Cause #2: Applying normalization to the entire dataset before splitting.

- Diagnosis: This is a critical procedural error. If you normalized the entire dataset at once, you have leaked information from the test set into your training process.

- Solution: Redo your preprocessing pipeline. Always fit the scaler (e.g., calculate min/max or mean/std) on the training set only, then use that fitted scaler to transform both the training and test sets [44].
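A leakage-free ordering of the steps might look like the sketch below, written with plain NumPy so that each step is explicit. The simulated two-feature dataset and the 70/30 split are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
# Two simulated features on very different scales.
X = rng.normal(loc=[50.0, 0.5], scale=[10.0, 0.1], size=(200, 2))

# 1. Split first (70/30), before any statistics are computed.
indices = rng.permutation(len(X))
n_train = round(0.7 * len(X))
X_train, X_test = X[indices[:n_train]], X[indices[n_train:]]

# 2. Fit: estimate the scaling parameters on the training set only.
mu = X_train.mean(axis=0)
sigma = X_train.std(axis=0)

# 3. Transform: reuse the training parameters for both sets.
X_train_z = (X_train - mu) / sigma
X_test_z = (X_test - mu) / sigma

print("train mean:", np.round(X_train_z.mean(axis=0), 3))
print("test mean: ", np.round(X_test_z.mean(axis=0), 3))
```

The test-set mean is generally not exactly zero after this transform, which is expected: the test data are scaled with parameters they did not help estimate. scikit-learn's scaler objects follow the same pattern, with `fit` called on the training data and `transform` applied to both sets.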

Problem: How to Interpret a Model Trained on Log-Transformed Data

Interpreting coefficients becomes less straightforward when using log transformations. The correct interpretation depends on which variable(s) were transformed.

| Scenario | Interpretation Guide | Example |

|---|---|---|

| Log-transformed Dependent Variable (Y) | A one-unit increase in the independent variable (X) is associated with a percentage change in Y [50]. | Coefficient = 0.22. Calculation: (exp(0.22) - 1) * 100% ≈ 24.6%. Interpretation: A one-unit increase in X is associated with an approximate 25% increase in Y. |

| Log-transformed Independent Variable (X) | A one-percent increase in X is associated with a (coefficient/100) unit change in Y [50]. | Coefficient = 0.15. Interpretation: A 1% increase in X is associated with a 0.0015 unit increase in Y. |

| Both Variables Log-Transformed | A one-percent increase in X is associated with a coefficient-percent change in Y [50]. This is an "elasticity" model. | Coefficient = 0.85. Interpretation: A 1% increase in X is associated with an approximate 0.85% increase in Y. |
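The three interpretations can be checked numerically. The helper functions below are hypothetical names introduced for illustration; they compute the exact effect assuming natural logarithms, against which the rule-of-thumb values in the table can be compared.

```python
import math


def pct_change_log_y(beta):
    """Log-transformed Y: percent change in Y per one-unit increase in X."""
    return (math.exp(beta) - 1.0) * 100.0


def unit_change_log_x(beta, pct_increase=1.0):
    """Log-transformed X: unit change in Y for a given percent increase in X."""
    return beta * math.log(1.0 + pct_increase / 100.0)


def pct_change_log_log(beta, pct_increase=1.0):
    """Both log-transformed: percent change in Y for a given percent increase in X."""
    return ((1.0 + pct_increase / 100.0) ** beta - 1.0) * 100.0


print(f"log-Y,   coefficient 0.22: {pct_change_log_y(0.22):.1f}% change in Y")
print(f"log-X,   coefficient 0.15: {unit_change_log_x(0.15):.5f} unit change in Y")
print(f"log-log, coefficient 0.85: {pct_change_log_log(0.85):.3f}% change in Y")
```

For small percentage increases in X, the exact values stay very close to the coefficient/100 and coefficient-percent shortcuts; the approximation loosens as the change in X grows.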

Experimental Protocol: Comparing Normalization Techniques on Neural Datasets

1. Objective To empirically evaluate the impact of Z-score normalization, Min-Max scaling, and Log scaling on the performance of a classifier trained on neural signal data.

2. Materials and Reagents The following table details key computational tools and their functions in this experiment.

| Research Reagent Solution | Function in Experiment |

|---|---|

| Python with NumPy/SciPy | Core numerical computing; used for implementing normalization formulas and statistical calculations [45]. |

| Scikit-learn Library | Provides ready-to-use scaler objects (StandardScaler, MinMaxScaler) and machine learning models for consistent evaluation [45]. |

| Simulated Neural Dataset | A controlled dataset with known properties, allowing for clear assessment of each normalization method's effect. |

3. Methodology

- Step 1: Data Simulation & Splitting Generate a synthetic neural dataset with multiple features on different scales (e.g., spike rates, local field potential power). Introduce known, realistic skewness to some features. Split the dataset into training (70%) and testing (30%) subsets [6].

- Step 2: Normalization (Independent Variable)

- Group 1 (Z-score): On the training set, calculate the mean (μ) and standard deviation (σ) for each feature. Transform both training and test sets using: z = (x - μ) / σ [45].

- Group 2 (Min-Max): On the training set, calculate the min and max for each feature. Transform both sets using: x_scaled = (x - min) / (max - min) [46].

- Group 3 (Log): Apply a natural log transformation x_log = log(x) to skewed features. Note: log(x) is undefined for zero or negative values; if zeros are present, use log(x + 1).

- Control Group (Raw): Use the original, non-normalized data.

- Step 3: Model Training & Evaluation Train an identical model (e.g., a Support Vector Machine or a simple neural network) on each of the four preprocessed training sets. Evaluate each model on its correspondingly transformed test set. Use metrics like accuracy, F1-score, and convergence time for comparison.
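The three steps above could be sketched with scikit-learn as follows. This is one possible setup under stated assumptions: the dataset comes from `make_classification` rather than a realistic neural simulator, the skew and per-feature scale factors are arbitrary, an RBF-kernel SVM stands in for "an identical model", and convergence time is not measured.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import FunctionTransformer, MinMaxScaler, StandardScaler
from sklearn.svm import SVC

# Step 1: simulate features, add right skew and different scales, then split 70/30.
X, y = make_classification(n_samples=600, n_features=6, n_informative=4, random_state=0)
X = np.exp(X * 0.5) * np.array([1, 10, 100, 1, 10, 100])  # strictly positive, skewed
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Step 2: one preprocessing option per group; None is the raw control group.
groups = {
    "raw": None,
    "z-score": StandardScaler(),
    "min-max": MinMaxScaler(),
    "log": FunctionTransformer(np.log),
}

# Step 3: train an identical model per group, fitting each scaler on training data only.
results = {}
for name, scaler in groups.items():
    if scaler is None:
        Xtr, Xte = X_train, X_test
    else:
        Xtr = scaler.fit_transform(X_train)
        Xte = scaler.transform(X_test)
    model = SVC(kernel="rbf", random_state=0).fit(Xtr, y_train)
    pred = model.predict(Xte)
    results[name] = {"accuracy": accuracy_score(y_test, pred), "f1": f1_score(y_test, pred)}

for name, scores in results.items():
    print(f"{name:8s} accuracy={scores['accuracy']:.3f} f1={scores['f1']:.3f}")
```

Because the outcome depends on the simulated data and the chosen model, the ranking of the four groups should be read as specific to this setup rather than as a general result.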

4. Workflow Visualization The following diagram illustrates the experimental workflow.

Decision Framework for Selecting a Normalization Strategy

Use the workflow below to choose the most appropriate method for your neural data preprocessing pipeline.

Troubleshooting Guides

Guide 1: Diagnosing the Nature of Your Missing Data

Problem: A researcher is unsure why data is missing from their neural dataset and does not know how to choose an appropriate handling method. The model's performance is degraded after a simple mean imputation.

Solution: The first step is to diagnose the mechanism behind the missing data, as this dictates the correct handling strategy [51] [52].

Action 1: Determine the Missing Data Mechanism Use the following criteria to classify your missing data. This classification is pivotal for selecting an unbiased handling method [53] [52].

Action 2: Apply the Corresponding Diagnostic and Handling Strategy Based on your diagnosis from Action 1, follow the corresponding pathway below. The diagram outlines the logical decision process for selecting a handling method after diagnosing the missing data mechanism.

Guide 2: Selecting an Imputation Method for Predictive Modeling

Problem: A scientist needs to impute missing values in a high-dimensional neural dataset intended for a predictive model but is overwhelmed by the choice of methods.

Solution: The choice of method involves a trade-off between statistical robustness and computational efficiency, particularly in a big-data context [54] [55].

Action 1: Define Your Analysis Goal Confirm that your primary goal is prediction and not statistical inference (e.g., estimating exact p-values or regression coefficients). Predictive models are generally more flexible regarding imputation methods [52].

Action 2: Review and Select a Method from Comparative Evidence The following table summarizes quantitative findings from a recent 2024 cohort study that compared various imputation methods for building a predictive model [55]. Performance was measured using Root Mean Square Error (RMSE), Mean Absolute Error (MAE), and the Area Under the Curve (AUC) of the resulting model.