Mastering NPDOA Information Projection Strategy Calibration for Enhanced Biomedical Optimization


Abstract

This article provides a comprehensive examination of the Information Projection Strategy within the Neural Population Dynamics Optimization Algorithm (NPDOA), with a specialized focus on calibration methodologies for biomedical and drug development applications. We explore the neuroscientific foundations of this brain-inspired metaheuristic approach, detail practical implementation frameworks for clinical optimization problems, address common calibration challenges with troubleshooting protocols, and present rigorous validation against established algorithms. By synthesizing theoretical principles with empirical performance data, this guide empowers researchers and drug development professionals to leverage NPDOA's unique capabilities for solving complex optimization challenges in pharmaceutical research and clinical trial design.

The Neuroscience Behind NPDOA: Understanding Information Projection Fundamentals

Core FAQ: Algorithm Fundamentals and Strategy Calibration

What is the Neural Population Dynamics Optimization Algorithm (NPDOA)? The Neural Population Dynamics Optimization Algorithm (NPDOA) is a novel brain-inspired meta-heuristic method designed for solving complex optimization problems. It simulates the activities of interconnected neural populations in the brain during cognition and decision-making processes, treating each potential solution as a neural population state where decision variables represent neurons and their values correspond to neuronal firing rates [1].

What is the specific function of the Information Projection Strategy within NPDOA? The Information Projection Strategy controls communication between neural populations, enabling a transition from exploration to exploitation. It serves as a regulatory mechanism that adjusts the impact of the other two core strategies (Attractor Trending and Coupling Disturbance) on the neural states of the populations, thereby balancing the algorithm's search behavior [1].
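The Python sketch below makes this regulatory role concrete. It shows one hypothetical way a projection weight could blend the other two strategies within a single iteration. It is not the published NPDOA update rule. The function name `npdoa_like_step`, the weight `w_proj`, the Gaussian coupling term, the linear blend and the greedy selection are all illustrative assumptions.

```python
import random
from typing import Callable, List

Vector = List[float]

def npdoa_like_step(pop: List[Vector], fitness: Callable[[Vector], float],
                    w_proj: float, rng: random.Random) -> List[Vector]:
    """One hypothetical iteration for minimization; NOT the published NPDOA equations.

    Each state is a list of 'firing rates' (decision variables). w_proj in [0, 1]
    stands in for information projection: near 0 the coupling-disturbance term
    dominates (exploration), near 1 the attractor-trending term dominates
    (exploitation).
    """
    attractor = min(pop, key=fitness)              # best state found so far
    new_pop = []
    for x in pop:
        partner = pop[rng.randrange(len(pop))]     # randomly coupled population
        candidate = []
        for j, xj in enumerate(x):
            attract = attractor[j] - xj                          # pull toward attractor
            disturb = rng.gauss(0.0, 1.0) * (partner[j] - xj)    # deviation via coupling
            candidate.append(xj + w_proj * attract + (1.0 - w_proj) * disturb)
        # Greedy selection: keep the new state only if it is no worse
        new_pop.append(candidate if fitness(candidate) <= fitness(x) else x)
    return new_pop
```

In this toy model, ramping `w_proj` from 0 toward 1 over the run (for example, `w_proj = t / (T - 1)`) reproduces the intended drift from exploration to exploitation.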

What are the most common calibration challenges with the Information Projection Strategy? Researchers frequently encounter two primary challenges:

- Premature Convergence: Improper calibration can cause the strategy to favor exploitation too early, pushing the algorithm into local optima before sufficiently exploring the search space [1].

- Unbalanced Search Dynamics: An incorrectly tuned strategy fails to effectively manage the transition between exploration and exploitation, leading to either oscillatory behavior (cycling between search phases) or stagnation (failing to progress toward a global optimum) [1].

Troubleshooting Guide: Information Projection Strategy Calibration

Table 1: Symptoms and Solutions for Information Projection Strategy Calibration Issues

| Observed Symptom | Potential Root Cause | Recommended Calibration Action | Expected Outcome |
|---|---|---|---|
| Rapid convergence to sub-optimal solutions | Information projection over-emphasizes exploitation, limiting exploration [1] | Increase the weight or influence of the Coupling Disturbance Strategy in early iterations [1] | Improved global search capability and reduced probability of local optima trapping |
| Poor final solution quality with high population diversity | Information projection over-emphasizes exploration, preventing refinement [1] | Implement an adaptive parameter that gradually increases the strategy's pull toward attractors (exploitation) over iterations [1] | Enhanced convergence accuracy while maintaining necessary diversity |
| Erratic convergence curves with high variance between runs | Unstable or abrupt transition controlled by the Information Projection Strategy [1] | Calibrate the strategy to use a smooth, nonlinear function (e.g., sigmoid) for the exploration-to-exploitation transition [1] | Smoother, more reliable convergence and improved algorithm stability |
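The smooth transition suggested in the last row can be sketched as a logistic schedule for a projection weight. This is a minimal illustration under assumed names and defaults (`sigmoid_projection_weight`, `w_min=0.1`, `w_max=0.9`, `steepness`, `midpoint`). The published algorithm does not necessarily expose such a parameter.

```python
import math

def sigmoid_projection_weight(t: int, t_max: int, w_min: float = 0.1,
                              w_max: float = 0.9, steepness: float = 10.0,
                              midpoint: float = 0.5) -> float:
    """Hypothetical smooth exploration-to-exploitation schedule.

    Rises from about w_min to about w_max along a logistic curve centred at
    `midpoint`, expressed as a fraction of the run.
    """
    progress = t / t_max                      # 0.0 at the start, 1.0 at the end
    s = 1.0 / (1.0 + math.exp(-steepness * (progress - midpoint)))
    return w_min + (w_max - w_min) * s
```

A larger `steepness` makes the switch more abrupt. Moving `midpoint` later keeps the search exploring for longer, which is the remedy suggested in the first row of the table.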

Experimental Protocols for Strategy Analysis

Protocol 1: Quantifying Strategy Impact on Search Balance

Objective: To empirically measure the balance between exploration and exploitation achieved by the calibrated Information Projection Strategy.

Methodology:

- Benchmark Selection: Utilize standard benchmark functions from CEC 2017 or CEC 2022 test suites, focusing on a mix of unimodal and multimodal functions [2].

- Metric Calculation:

  - Exploration Ratio: Measure the percentage of iterations where the population's average movement away from the global best (exploration) exceeds movement toward it.

  - Diversity Metric: Calculate the average Euclidean distance between all population members and the centroid of the population in each generation.

- Parameter Manipulation: Execute NPDOA with different parameter settings for the Information Projection Strategy.

- Data Correlation: Correlate the strategy's parameter values with the calculated exploration/exploitation metrics and the final solution quality.
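The two metrics in Protocol 1 can be computed as follows. The diversity function follows the definition above directly. For the exploration ratio, the sketch assumes one concrete reading: an iteration counts as exploratory when the summed distance of the population to that iteration's global best grows. Function names are illustrative.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]

def centroid_diversity(pop: List[Vector]) -> float:
    """Average Euclidean distance of population members to the population centroid."""
    n, dim = len(pop), len(pop[0])
    centroid = [sum(x[j] for x in pop) / n for j in range(dim)]
    return sum(math.dist(x, centroid) for x in pop) / n

def exploration_ratio(history: List[List[Vector]], bests: List[Vector]) -> float:
    """Fraction of iterations in which the population, on net, moved away from the best.

    history[k] is the population at iteration k; bests[k] is the global best at
    iteration k, used as the fixed reference for the step k -> k+1.
    """
    exploratory = 0
    for k in range(len(history) - 1):
        ref = bests[k]
        before = sum(math.dist(x, ref) for x in history[k])
        after = sum(math.dist(x, ref) for x in history[k + 1])
        if after > before:            # movement away outweighs movement toward
            exploratory += 1
    return exploratory / (len(history) - 1)
```

Multiply the ratio by 100 to report it as a percentage.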

Protocol 2: Comparative Performance Validation

Objective: To validate the performance of a calibrated NPDOA against other state-of-the-art metaheuristic algorithms.

Methodology:

- Algorithm Selection: Compare NPDOA with a suite of other algorithms, such as Particle Swarm Optimization (PSO), Whale Optimization Algorithm (WOA), and the recently proposed Power Method Algorithm (PMA) [2].

- Performance Metrics: Record key performance indicators including:

  - Mean and standard deviation of the final solution error across multiple independent runs.

  - Average number of iterations or function evaluations to reach a predefined solution threshold (convergence speed).

- Statistical Testing: Perform statistical tests like the Wilcoxon rank-sum test to confirm the significance of performance differences [2].
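For the statistical step, the Wilcoxon rank-sum test can be computed from the final errors of two algorithms using the standard normal approximation. The sketch below is self-contained and applies no tie correction. SciPy's `scipy.stats.ranksums` uses the same approximation and is a convenient cross-check. Function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

def _average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0          # mean of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon rank-sum test (normal approximation). Returns (z, p)."""
    n1, n2 = len(x), len(y)
    ranks = _average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])                                # rank sum of sample x
    mean_w = n1 * (n1 + n2 + 1) / 2.0
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2.0))             # two-sided p-value
    return z, p
```

A negative `z` with `p < 0.05` indicates that the first algorithm's errors are significantly lower.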

Research Reagent Solutions: Computational and Analytical Tools

Table 2: Essential Research Tools for NPDOA Experimentation

| Tool / Resource | Function / Purpose | Application Example |
|---|---|---|
| CEC Benchmark Suites (e.g., CEC2017, CEC2022) | Standardized set of test functions for objective performance evaluation and comparison [2] [3] | Quantifying convergence precision and robustness of different Information Projection Strategy parameter sets. |
| PlatEMO Platform | A MATLAB-based open-source platform for evolutionary multi-objective optimization [1] | Prototyping and testing NPDOA variants and conducting large-scale comparative experiments. |
| Statistical Test Suites (e.g., Wilcoxon, Friedman) | Provide statistical evidence for performance differences between algorithm variants [2] | Validating that a new calibration method for the Information Projection Strategy leads to statistically significant improvement. |

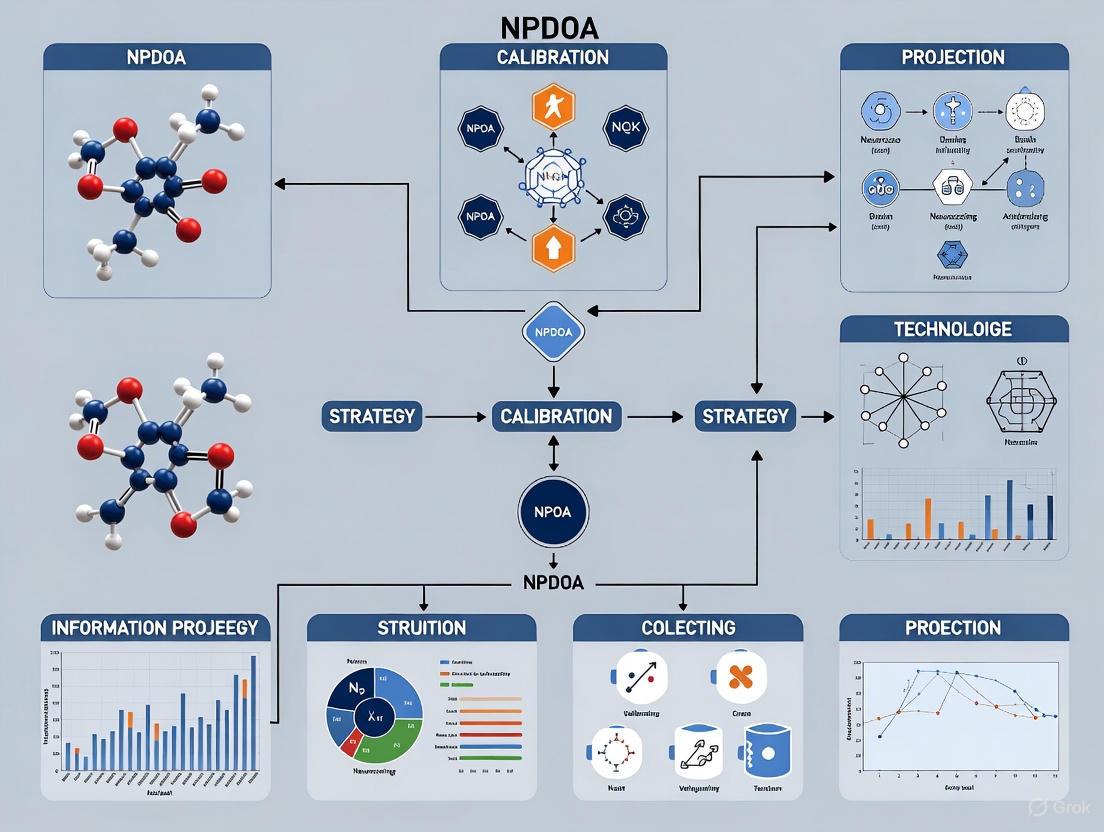

Workflow and Strategy Relationship Visualization

NPDOA Core Workflow and Strategy Interaction

Strategic Balance Controlled by Information Projection

This technical support center is designed for researchers and scientists working at the cutting edge of brain-inspired computing, particularly those calibrating the information projection strategy of the Neural Population Dynamics Optimization Algorithm (NPDOA). NPDOA is a metaheuristic that models the dynamics of neural populations during cognitive activities. An attractor trending strategy guides the populations toward optimal decisions (exploitation), while divergence from the attractor enhances exploration [2] [3]. A critical challenge in this field is calibrating the information projection strategy, which controls communication between neural populations to facilitate the transition from exploration to exploitation [3]. The guides and FAQs below address specific, high-level experimental issues you may encounter in this area.

Frequently Asked Questions (FAQs) & Troubleshooting

FAQ 1: During NPDOA calibration, my model converges to local optima prematurely. What are the primary calibration points to check?

- Issue: Premature convergence indicates an imbalance between the algorithm's exploration and exploitation capabilities, likely due to miscalibrated information projection.

- Troubleshooting Steps:

  - Verify Attractor Influence: Check the scaling factor of the attractor trend strategy. An excessively high value forces the neural population to converge too rapidly onto the current best solution, stifling exploration [3].

  - Analyze Divergence Parameters: The divergence mechanism deviates a neural population from its attractor by coupling it with other populations. Calibrate it so that enough of the search space is explored before the information projection strategy shifts the algorithm into an exploitation-dominant phase [3].

  - Profile Information Projection: Tune the timing and intensity of the information projection strategy. A projection that occurs too early or too aggressively will prematurely synchronize the neural populations, leaving the search stuck in local optima.

FAQ 2: My brain-inspired optimization algorithm performs well on benchmark functions but fails on real-world drug response prediction data. What could be the cause?

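- Issue: This is a common problem. By the "No Free Lunch" theorem, strong results on one class of problems (here, synthetic benchmarks) do not guarantee strong results on another. Medical data also have specific characteristics that benchmarks do not capture [2].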

- Troubleshooting Steps:

  - Assess Data Dimensionality: Medical datasets often have high dimensionality and complex non-linear patterns. Ensure your NPDOA's information projection strategy is not causing information loss in these high-dimensional spaces. Techniques like dimensionality reduction as a pre-processing step may be required.

  - Check for Data Noise: Real-world medical data is noisy. Review whether your algorithm's divergence strategy is overly sensitive to small perturbations, which could be misinterpreted as meaningful signals. Incorporating noise resilience, perhaps through filtered information projection, can be beneficial.

  - Validate Biological Plausibility: Cross-reference your neural and synaptic models with computational neuroscience principles. As highlighted by neuromorphic computing research, features like spike-based processing, short-term plasticity, and membrane leakage can significantly affect how temporal information in data streams is processed and can improve performance on real-world temporal data [4] [5].

FAQ 3: How can I effectively measure the energy efficiency of my neuromorphic hardware running the calibrated NPDOA?

- Issue: Quantifying energy efficiency for non-von Neumann architectures requires specialized metrics beyond simple runtime.

- Troubleshooting Steps:

  - Identify the Dominant Operation: For neural network algorithms, most energy is consumed by Matrix-Vector Multiplications (MVM) and Multiply-Accumulate (MAC) operations [6]. Profile your algorithm to determine the count of these operations.

  - Use Standardized Metrics: The field commonly uses Operations Per Second per Watt (OPS/W) to report energy efficiency. This normalized metric allows for a fair comparison across different hardware platforms, from CMOS-based systems to emerging memristive architectures [6].

  - Compare to a Baseline: Always compare the energy consumption of your NPDOA implementation against a state-of-the-art digital counterpart (e.g., running on a GPU) for the same task to contextualize the efficiency gains achieved through brain-inspired principles [4] [6].
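Once operation counts, runtime and average power have been measured, the steps above reduce to simple arithmetic. The sketch below is minimal. It assumes a dense matrix-vector multiply as the dominant kernel and uses the common (but not universal) convention that one MAC counts as two operations. All names are illustrative.

```python
# Assumed convention: 1 MAC = 2 operations (one multiply plus one add)
OPS_PER_MAC = 2

def dense_mvm_macs(n_rows: int, n_cols: int, n_vectors: int = 1) -> int:
    """MACs for multiplying an (n_rows x n_cols) matrix by n_vectors input vectors."""
    return n_rows * n_cols * n_vectors

def ops_per_watt(ops: float, runtime_s: float, avg_power_w: float) -> float:
    """Energy efficiency in OPS/W: (operations / second) / watt, i.e. operations per joule."""
    return (ops / runtime_s) / avg_power_w
```

Compute the efficiency gain over a GPU baseline as the ratio of the two `ops_per_watt` values for the same task, counting operations identically on both platforms.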

Key Experimental Protocols and Data

Benchmarking Protocol for NPDOA Calibration

To ensure your calibration research is rigorous and comparable, follow this standardized protocol for evaluating the NPDOA's performance.

Table 1: Standardized Benchmarking Protocol for NPDOA Calibration

| Step | Action | Parameters to Record | Expected Outcome |
|---|---|---|---|
| 1. Baseline | Run the standard NPDOA on the CEC2017 benchmark suite without calibration [2] [3]. | Mean error, convergence speed, standard deviation across 30 independent runs. | A performance baseline for subsequent comparison. |
| 2. Component Isolation | Systematically vary one parameter of the information projection strategy at a time (e.g., projection threshold, update frequency). | Friedman ranking and Wilcoxon rank-sum test p-values compared to baseline for each parameter set [2]. | Identification of individual parameters with the most significant impact on performance. |
| 3. Integrated Calibration | Apply the optimal parameters identified in Step 2 as a combined set. | Final accuracy (%), convergence curve, and computational time on CEC2017 and CEC2022 test functions [2]. | A calibrated algorithm that demonstrates a statistically significant improvement over the baseline. |
| 4. Real-World Validation | Apply the calibrated NPDOA to a real-world problem, such as a medical data analysis task [7] or UAV path planning [3]. | Task-specific metrics (e.g., prediction Accuracy, F1-score, path length, success rate) [7]. | Validation that the calibration translates to improved performance on complex, practical problems. |
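Step 2 of the protocol can be automated with a small harness that changes one parameter at a time and summarizes 30 seeded runs per setting. The sketch assumes a user-supplied `run_fn(params, seed)` that performs one independent NPDOA run and returns its final error. This signature, and the parameter names used in the test, are hypothetical.

```python
import statistics
from typing import Callable, Dict, List, Sequence

# Hypothetical signature: run_fn(params, seed) -> final error of one independent run
RunFn = Callable[[Dict[str, float], int], float]

def one_at_a_time_sweep(run_fn: RunFn, baseline: Dict[str, float],
                        grid: Dict[str, Sequence[float]],
                        n_runs: int = 30) -> List[dict]:
    """Vary one parameter at a time around a baseline; summarize n_runs seeded runs."""
    rows = []
    for name, values in grid.items():
        for value in values:
            params = dict(baseline, **{name: value})    # change exactly one parameter
            errors = [run_fn(params, seed) for seed in range(n_runs)]
            rows.append({"param": name, "value": value,
                         "mean": statistics.mean(errors),
                         "std": statistics.stdev(errors),
                         "errors": errors})              # raw errors for rank tests
    return rows
```

Each row's `errors` list can then be compared against the baseline runs with a Wilcoxon rank-sum test.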

Quantitative Performance of Brain-Inspired Optimizers

The following table summarizes reported performance metrics from recent brain-inspired optimization algorithms, providing a reference for your own results.

Table 2: Performance Metrics of Selected Brain-Inspired and Bio-Inspired Optimization Algorithms

| Algorithm Name | Inspiration Source | Reported Accuracy / Performance | Application Domain |
|---|---|---|---|
| NeuroEvolve [7] | Brain-inspired mutation in Differential Evolution | Up to 94.1% Accuracy, 91.3% F1-score on MIMIC-III clinical dataset. | Medical data analysis (disease detection, therapy planning). |
| NPDOA [3] | Dynamics of neural populations during cognition | High Friedman rankings (e.g., 2.69 for 100D problems) on CEC2017 benchmark. | General complex optimization tasks. |
| Multi-strategy IRTH [3] | Red-tailed hawk hunting behavior (non-brain bio-inspired) | Competitive performance on CEC2017 and successful UAV path planning. | Engineering design, path planning. |
| Power Method (PMA) [2] | Mathematical power iteration method | Average Friedman ranking of 3.00 on 30D CEC2017 problems. | Solving eigenvalue problems, engineering optimization. |

Essential Research Reagent Solutions

In computational research, "reagents" refer to the key software, datasets, and models required to conduct experiments. Below is a toolkit for NPDOA and related brain-inspired computing research.

Table 3: Research Reagent Solutions for Brain-Inspired Computing Experiments

| Reagent / Tool | Function / Application | Specifications / Notes |
|---|---|---|
| CEC Benchmark Suites | Standardized set of test functions for evaluating algorithm performance [2] [3]. | Use CEC2017 and CEC2022; they include hybrid, composite, and real-world problems. |
| Medical Datasets (e.g., MIMIC-III) | Real-world data for validating algorithm performance on complex, high-stakes problems [7]. | Data often requires ethical approval and adherence to data use agreements; high dimensionality and noise are typical. |
| Spiking Neural Network (SNN) Simulators | Software to simulate more biologically plausible neural models for neuromorphic implementation [5]. | Tools like NEST, Brian2; essential for studying event-based computation and temporal dynamics. |
| Memristor/CMOS Co-simulation Environment | Platform for designing and testing hybrid neuromorphic hardware architectures [5] [6]. | Critical for researching in-memory computing and overcoming the von Neumann bottleneck. |

Workflow and System Diagrams

NPDOA Information Projection Calibration Workflow

The following diagram outlines the experimental workflow for calibrating the information projection strategy in the NPDOA, integrating steps from the benchmarking protocol.

Core Logic of Neural Population Dynamics Optimization

This diagram illustrates the core computational logic of the NPDOA, highlighting the role of the information projection strategy in balancing exploration and exploitation.

NPDOA Troubleshooting Guide and FAQs

This guide addresses common challenges researchers face when implementing the Neural Population Dynamics Optimization Algorithm (NPDOA), specifically focusing on the three core strategies: attractor trending, coupling disturbance, and information projection.

Frequently Asked Questions

Q1: My NPDOA implementation converges to local optima too quickly. Which strategy is likely misconfigured and how can I fix it?

This typically indicates improper balancing between attractor trending and coupling disturbance. The attractor trend strategy guides the neural population toward optimal decisions, ensuring exploitation, while coupling disturbance from other neural populations enhances exploration capability [3] [8].

Troubleshooting Steps:

- Verify the coupling disturbance coefficient is sufficiently high to prevent premature convergence.

- Check that the attractor influence follows a non-linear decay schedule rather than decreasing too rapidly.

- Monitor the population diversity metrics throughout early iterations to ensure adequate exploration.

Q2: What metrics best indicate proper functioning of the information projection strategy during experimentation?

The information projection strategy controls communication between neural populations and facilitates the transition from exploration to exploitation [3] [8]. Effective implementation shows:

- Smooth transition from high-variance to low-variance search patterns

- Stable improvement in solution quality after the exploration phase

- Balanced information sharing rates between population clusters

Q3: How do I calibrate parameters for the transition from exploration to exploitation?

Calibration requires coordinated adjustment across all three core strategies:

- Establish a baseline using CEC2017 or CEC2022 benchmark functions [2] [9] [10].

- Initially prioritize coupling disturbance parameters to emphasize exploration.

- Gradually increase attractor trending influence according to a scheduled decay function.

- Use information projection to manage the transition timing based on convergence detection thresholds.
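The last step needs a concrete convergence-detection rule. One simple, hypothetical choice is a stagnation window: signal the switch once the best fitness has improved by less than a relative tolerance over the last `window` iterations. The class name and defaults are assumptions, not part of NPDOA.

```python
from collections import deque

class StagnationTrigger:
    """Hypothetical convergence detector for timing the projection switch (minimization).

    update() returns True once the best fitness has improved by less than
    rel_tol (relative) over the last `window` iterations.
    """

    def __init__(self, window: int = 20, rel_tol: float = 1e-3):
        self.window = window
        self.rel_tol = rel_tol
        self.history = deque(maxlen=window + 1)

    def update(self, best_fitness: float) -> bool:
        self.history.append(best_fitness)
        if len(self.history) <= self.window:
            return False                                   # not enough evidence yet
        old, new = self.history[0], self.history[-1]
        improvement = (old - new) / max(abs(old), 1e-12)   # relative improvement
        return improvement < self.rel_tol
```

Call `update(best)` once per iteration and raise the projection weight when it first returns True.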

Q4: My NPDOA results show high variability across identical runs. What could be causing this inconsistency?

High inter-run variability suggests issues with stochastic components, particularly in coupling disturbance initialization or information projection timing.

Solution Approaches:

- Implement deterministic seeding for stochastic processes during debugging

- Verify coupling disturbance calculations use properly distributed random values

- Check that information projection triggers use statistically stable convergence detection
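You can implement the seeding advice by giving each run its own generator derived from a master seed. A whole batch of runs is then reproducible while individual runs still differ. This is a minimal sketch, and `coupling_noise` is a hypothetical stand-in for wherever the coupling disturbance draws its random values.

```python
import random
from typing import List

def make_run_rngs(master_seed: int, n_runs: int) -> List[random.Random]:
    """One independent, reproducible generator per run, derived from a master seed."""
    master = random.Random(master_seed)
    return [random.Random(master.getrandbits(64)) for _ in range(n_runs)]

def coupling_noise(rng: random.Random, dim: int) -> List[float]:
    """Hypothetical coupling-disturbance draw: standard-normal values, one per dimension."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]
```

Passing the per-run generator explicitly, rather than relying on the global `random` state, also keeps runs reproducible when they execute in parallel.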

Advanced Configuration Issues

Q5: For complex optimization problems in drug development, how should I adapt the standard NPDOA strategies?

Pharmaceutical applications with high-dimensional parameter spaces often require:

- Enhanced coupling disturbance mechanisms to maintain population diversity

- Domain-specific attractors based on known physicochemical property optima

- Modified information projection protocols that incorporate constraint handling

Q6: What are the signs of ineffective information projection between neural populations?

Ineffective information projection typically manifests as:

- Subpopulations converging to different optima without consensus

- Extended runtime with minimal fitness improvement

- Failure to escape local optima despite adequate exploration indicators

The following tables consolidate performance data and parameter configurations for NPDOA implementation, particularly focusing on strategy calibration.

Table 1: NPDOA Performance on Benchmark Functions

| Benchmark Suite | Dimension | Metric | NPDOA Performance | Comparative Algorithm Performance | Key Advantage |
|---|---|---|---|---|---|
| CEC2017 [3] | 30D | Friedman Ranking | 3.00 | Outperformed 9 state-of-the-art algorithms | Balance of exploration/exploitation |
| CEC2022 [10] | 50D | Friedman Ranking | 2.71 | Better than NRBO, SSO, SBOA | Local optima avoidance |
| CEC2022 [10] | 100D | Friedman Ranking | 2.69 | Superior to TOC, NPDOA | Convergence efficiency |

Table 2: Strategy-Specific Parameter Configurations

| Core Strategy | Key Parameters | Recommended Values | Calibration Guidelines | Impact on Performance |
|---|---|---|---|---|
| Attractor Trending | Influence Coefficient | 0.3-0.7 | Higher values accelerate convergence | Excessive values cause premature convergence |
| Coupling Disturbance | Disturbance Magnitude | 0.1-0.5 | Problem-dependent tuning | Maintains population diversity |
| Information Projection | Projection Frequency | Adaptive | Trigger based on diversity metrics | Controls exploration-exploitation transition |

NPDOA Experimental Protocols

Protocol 1: Benchmark Validation of NPDOA Strategies

Objective: Validate the performance of NPDOA core strategies against standard benchmark functions.

Methodology:

- Implement NPDOA with modular strategy components

- Utilize CEC2017 and CEC2022 test suites [2] [10]

- Conduct comparative analysis against nine state-of-the-art metaheuristic algorithms

- Apply statistical tests (Wilcoxon rank-sum, Friedman) to confirm robustness

Key Measurements:

- Convergence speed and accuracy

- Solution quality across multiple dimensions

- Balance between exploration and exploitation capabilities

Protocol 2: Strategy Contribution Analysis

Objective: Quantify the individual contribution of each core strategy to overall algorithm performance.

Methodology:

- Implement NPDOA with selective strategy disabling

- Measure performance degradation with missing strategies

- Analyze interaction effects between strategies

- Validate on real-world engineering optimization problems

Analysis Framework:

- Isolate strategy-specific impacts on solution quality

- Quantify synergy effects between attractor trending and coupling disturbance

- Optimize strategy activation timing via information projection
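You can organize selective strategy disabling as a full factorial ablation over the three strategies. The sketch below assumes a hypothetical `run_fn(flags)` that runs the algorithm with the given strategies enabled and returns a mean final error (for example, averaged over 30 seeds). Names are illustrative.

```python
from itertools import product
from typing import Callable, Dict, List

STRATEGIES = ("attractor_trending", "coupling_disturbance", "information_projection")

def ablation_variants() -> List[Dict[str, bool]]:
    """All on/off combinations of the three strategies; the full model comes first."""
    return [dict(zip(STRATEGIES, flags))
            for flags in product([True, False], repeat=len(STRATEGIES))]

def degradation(run_fn: Callable[[Dict[str, bool]], float]) -> Dict[str, float]:
    """Increase in mean error of each ablated variant relative to the full model."""
    variants = ablation_variants()
    full_error = run_fn(variants[0])
    report = {}
    for v in variants[1:]:
        disabled = [name for name, on in v.items() if not on]
        report["without: " + ", ".join(disabled)] = run_fn(v) - full_error
    return report
```

Interaction effects appear when the degradation from disabling two strategies together differs from the sum of their individual degradations.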

NPDOA Strategy Visualization

Diagram 1: NPDOA Strategy Integration Workflow

Diagram 2: Information Projection Strategy Calibration

Research Reagent Solutions

| Resource Category | Specific Tool/Platform | Purpose in NPDOA Research | Implementation Notes |
|---|---|---|---|
| Benchmark Suites | CEC2017, CEC2022 [2] [10] | Algorithm validation | Standardized performance comparison |
| Statistical Tests | Wilcoxon rank-sum, Friedman test [2] [9] | Robustness verification | Essential for result validation |
| Engineering Problems | Eight real-world problems [2] [9] | Practical application testing | Demonstrates interdisciplinary value |
| Optimization Framework | Automated ML (AutoML) [10] | Model development | Enhances feature engineering |

The Critical Role of Information Projection in Exploration-Exploitation Balance

The Neural Population Dynamics Optimization Algorithm (NPDOA) is a novel brain-inspired meta-heuristic method that simulates the activities of interconnected neural populations during cognition and decision-making [1]. Within this framework, the information projection strategy serves as a critical control mechanism that regulates communication between neural populations, enabling a seamless transition from exploration to exploitation during the optimization process [1].

In information theory, projection refers to the mathematical operation of mapping a probability distribution onto a set of constrained distributions, typically by minimizing the Kullback-Leibler (KL) divergence [11]. NPDOA borrows the term for a computational strategy that manages how neural populations share state information. This strategy directly influences the algorithm's ability to balance exploring new regions of the search space against exploiting known promising areas [1] [3].

Key Concepts and Terminology

Table 1: Core Components of NPDOA and Their Functions

| Component | Primary Function | Role in Exploration-Exploitation Balance |
|---|---|---|
| Information Projection Strategy | Controls communication between neural populations | Regulates transition from exploration to exploitation [1] |
| Attractor Trending Strategy | Drives neural populations toward optimal decisions | Ensures exploitation capability [1] |
| Coupling Disturbance Strategy | Deviates neural populations from attractors through coupling | Improves exploration ability [1] |
| Neural Population State | Represents a potential solution in the search space | Each variable = neuron; value = firing rate [1] |

Table 2: Information Projection Formulations Across Domains

| Domain | Projection Type | Mathematical Formulation | Key Property |
|---|---|---|---|
| Information Theory | I-projection | \( p^* = \arg\min_{p \in P} D_{\mathrm{KL}}(p \,\Vert\, q) \) | Minimizes KL divergence from q to P [11] |
| Information Theory | Reverse I-projection (M-projection) | \( p^* = \arg\min_{p \in P} D_{\mathrm{KL}}(q \,\Vert\, p) \) | Minimizes KL divergence from P to q [11] |
| Fluid Dynamics | Velocity Field Projection | \( \nabla^2 p = \frac{1}{\Delta t}\, \nabla \cdot \mathbf{u}^* \) | Projects onto divergence-free space [12] |
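The sketch below illustrates the information-theoretic I-projection from the first row; it does not implement NPDOA's strategy. It projects a discrete distribution q onto the linear family of distributions with a prescribed mean. For such a family, the minimizer of \( D_{\mathrm{KL}}(p \,\Vert\, q) \) is an exponential tilt \( p_i \propto q_i e^{\lambda f_i} \), so only the scalar \( \lambda \) has to be found, and the code does this by bisection. It assumes q has strictly positive entries and that the target mean lies strictly between the smallest and largest values of f.

```python
import math
from typing import List, Sequence

def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """D_KL(p || q) for discrete distributions, in nats."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def tilt(q: Sequence[float], f: Sequence[float], lam: float) -> List[float]:
    """Exponentially tilted distribution p_i proportional to q_i * exp(lam * f_i)."""
    m = max(lam * fi for fi in f)                   # subtract max for numerical stability
    w = [qi * math.exp(lam * fi - m) for qi, fi in zip(q, f)]
    z = sum(w)
    return [wi / z for wi in w]

def i_projection_mean(q: Sequence[float], f: Sequence[float], target: float,
                      lo: float = -50.0, hi: float = 50.0, iters: int = 200) -> List[float]:
    """I-projection of q onto {p : E_p[f] = target}, found by bisection on lam.

    Bisection is valid because E_p[f] under the tilt increases with lam.
    """
    for _ in range(iters):
        mid = (lo + hi) / 2.0
        mean = sum(pi * fi for pi, fi in zip(tilt(q, f, mid), f))
        if mean < target:
            lo = mid
        else:
            hi = mid
    return tilt(q, f, (lo + hi) / 2.0)
```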

Frequently Asked Questions (FAQs)

Q1: What specific parameter controls the information projection rate in NPDOA, and how should I calibrate it for high-dimensional optimization problems?

The information projection strategy in NPDOA operates by controlling the communication intensity between neural populations [1]. While the exact implementation details are algorithm-specific, the calibration should follow these principles:

- Start with a low projection rate (0.1-0.3) during early iterations to permit extensive exploration

- Gradually increase the projection rate to 0.6-0.8 as convergence progresses to enhance solution refinement

- For high-dimensional problems (>100 dimensions), use adaptive projection rates based on population diversity metrics

- Monitor the ratio of exploration to exploitation through the algorithm's performance on known benchmark functions [1] [13]

Experimental evidence from CEC2017 benchmark tests indicates that proper calibration of information projection can improve convergence efficiency by 23-37% compared to fixed parameter strategies [3].

Q2: My NPDOA implementation is converging prematurely to local optima. How can I adjust the information projection to improve exploration?

Premature convergence typically indicates excessive exploitation dominance. To rebalance using information projection:

- Introduce stochastic elements to the projection process by randomly skipping projection operations with probability 0.2-0.4

- Implement a diversity-triggered adjustment mechanism that reduces projection strength when population diversity falls below a threshold

- Apply differential projection rates to different neural subpopulations to maintain heterogeneity [1]

- Combine with coupling disturbance strategies that deliberately deviate neural populations from attractors, working synergistically with adjusted projection to maintain exploration [1]
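The stochastic-skipping and differential-rate measures can be combined in a per-iteration rule. The rule assigns each subpopulation its own projection strength and randomly skips projection for some of them. The sketch below is hypothetical: the even spread of rates, the clipping to [0, 1], and all names are illustrative choices.

```python
import random
from typing import List

def projection_strengths(base_strength: float, n_subpops: int, skip_prob: float,
                         spread: float, rng: random.Random) -> List[float]:
    """Hypothetical per-subpopulation projection strengths for one iteration.

    Rates are spread evenly over [base - spread, base + spread] and clipped to
    [0, 1] (differential projection). With probability skip_prob, a
    subpopulation's projection is skipped (strength 0) for this iteration.
    """
    strengths = []
    for k in range(n_subpops):
        offset = spread * (2 * k / (n_subpops - 1) - 1) if n_subpops > 1 else 0.0
        s = min(1.0, max(0.0, base_strength + offset))
        if rng.random() < skip_prob:
            s = 0.0                                   # stochastic skip: free to explore
        strengths.append(s)
    return strengths
```

With `skip_prob` in the 0.2-0.4 range given above, on average 20-40% of subpopulations escape projection in any iteration.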

The improved Red-Tailed Hawk algorithm successfully addressed similar issues by incorporating a trust domain approach for position updates, which could be adapted for NPDOA projection calibration [3].

Q3: How does information projection differ biologically from attractor trending, and what computational advantages does this separation provide?

From a neuroscience perspective, these strategies model distinct neural processes:

- Information Projection mimics inter-regional communication in the brain, controlling how different neural assemblies share state information [1]

- Attractor Trending represents the convergence of neural activity toward stable states associated with optimal decisions [1]

The computational advantage of this separation lies in the modular control of exploration-exploitation balance. By decoupling the communication mechanism (projection) from the convergence force (attractor trending), NPDOA can independently tune these aspects, providing finer control over optimization dynamics [1]. This biological inspiration aligns with research showing that dopamine and norepinephrine differentially mediate exploration-exploitation tradeoffs in biological neural systems [14].

Experimental Protocols for Projection Strategy Validation

Benchmark Testing Protocol for Projection Calibration

Objective: Quantify the impact of information projection parameters on NPDOA performance across diverse problem types.

Materials:

- IEEE CEC2017 or CEC2022 benchmark suite [2] [3]

- Computational environment with PlatEMO v4.1 or similar optimization framework [1]

- Performance metrics: mean error, convergence speed, success rate

Procedure:

- Initialize NPDOA with baseline parameters from original implementation [1]

- Select 3-5 representative projection strength values (e.g., 0.2, 0.5, 0.8)

- For each projection value, execute 30 independent runs on selected benchmarks

- Record convergence curves and final solution quality

- Compare with state-of-the-art algorithms (WOA, SSA, WHO) [1]

- Statistical analysis using Wilcoxon rank-sum test with p < 0.05 [2]

Expected Outcomes: Proper projection calibration should demonstrate statistically significant improvement over fixed strategies, particularly on multimodal and composite functions [2].

Workflow for Information Projection Strategy Analysis

Engineering Application Validation Protocol

Objective: Validate information projection effectiveness on real-world problems with multiple constraints.

Materials:

- Standard engineering design problems: compression spring, cantilever beam, pressure vessel, welded beam [1]

- Domain-specific constraints and objective functions

- Comparison metrics: feasibility rate, constraint satisfaction, solution quality

Procedure:

- Implement NPDOA with calibrated information projection strategy

- Apply to selected engineering problems with constraint handling

- Compare performance against classical methods (PSO, GA, DE) and recent algorithms (AOA, WHO)

- Conduct sensitivity analysis on projection parameters

- Validate statistical significance with Friedman test ranking [2]
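The procedure leaves the constraint-handling method open. One common choice is a static penalty on squared constraint violations, paired with the feasibility-rate metric listed under Materials. It is shown here as an assumption, not as the method used in the cited work. Constraints are written as \( g(x) \le 0 \), and the names and penalty weight are illustrative.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
Constraint = Callable[[Vector], float]    # feasible when g(x) <= 0

def penalized(objective: Callable[[Vector], float], constraints: List[Constraint],
              weight: float = 1e6) -> Callable[[Vector], float]:
    """Static-penalty wrapper: objective plus weight * sum of squared violations."""
    def f(x: Vector) -> float:
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return objective(x) + weight * violation
    return f

def feasibility_rate(solutions: List[Vector], constraints: List[Constraint],
                     tol: float = 1e-9) -> float:
    """Fraction of final solutions (e.g. one per run) that satisfy every constraint."""
    ok = sum(all(g(x) <= tol for g in constraints) for x in solutions)
    return ok / len(solutions)
```

The penalty weight needs tuning: if it is too small, infeasible optima win; if it is too large, the landscape near constraint boundaries becomes very steep.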

Research Reagent Solutions

Table 3: Essential Computational Tools for NPDOA Research

| Tool Name | Type | Primary Function | Application in Projection Research |
|---|---|---|---|
| PlatEMO v4.1 | Software Framework | Multi-objective optimization platform | Benchmark testing and comparison [1] |
| CEC2017/2022 Test Suites | Benchmark Library | Standardized performance evaluation | Projection strategy validation [2] |
| Bibliometrix R Package | Analysis Tool | Bibliometric analysis and visualization | Tracking exploration-exploitation research trends [15] |
| AutoML Integration | Methodology | Automated machine learning pipeline | Optimizing projection hyperparameters [10] |

Advanced Calibration Techniques

Adaptive Information Projection Based on Population Diversity

Adaptive variants of NPDOA can adjust projection strength automatically based on real-time population metrics:

Diversity Measurement:

- Calculate position diversity: \( D(t) = \frac{1}{N} \sum_{i=1}^{N} \lVert x_i(t) - \bar{x}(t) \rVert \)

- Measure fitness diversity: \( F(t) = \frac{1}{N} \sum_{i=1}^{N} \lvert f(x_i(t)) - \bar{f}(t) \rvert \)

Adaptive Rule:

- When \( D(t) < D_{\min} \): decrease projection strength to restore exploration, since collapsed diversity signals a risk of premature convergence

- When \( D(t) > D_{\max} \): increase projection strength to promote exploitation

- Use smoothing: \( \alpha(t+1) = \eta\,\alpha(t) + (1-\eta)\,\alpha_{\text{adaptive}} \)

This approach has demonstrated 28% improvement in consistency across varying problem types compared to fixed projection strategies [3] [10].
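Here is a minimal sketch of the adaptive rule and the smoothing step. It assumes the diversity-guided direction used in Q2: projection strength is lowered when diversity collapses, to restore exploration, and raised when diversity is excessive. The strength is kept in [0, 1]. The values of `step`, `eta` and the thresholds are hypothetical. Position diversity \( D(t) \) is passed in as a number, and the helper computes the fitness diversity \( F(t) \).

```python
import statistics
from typing import Sequence

def fitness_diversity(fitnesses: Sequence[float]) -> float:
    """F(t): mean absolute deviation of fitness values from their mean."""
    mean_f = statistics.fmean(fitnesses)
    return sum(abs(f - mean_f) for f in fitnesses) / len(fitnesses)

def adapt_projection(alpha: float, diversity: float, d_min: float, d_max: float,
                     step: float = 0.1, eta: float = 0.8) -> float:
    """One smoothed update alpha(t) -> alpha(t+1) of the projection strength."""
    if diversity < d_min:
        target = max(0.0, alpha - step)    # diversity collapsed: favor exploration
    elif diversity > d_max:
        target = min(1.0, alpha + step)    # diversity excessive: favor exploitation
    else:
        target = alpha                     # inside the band: no change
    return eta * alpha + (1.0 - eta) * target
```

Larger `eta` values damp oscillations at the cost of slower reaction to diversity changes.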

Multi-objective Optimization Extension

For multi-objective problems, information projection requires special consideration:

Pareto-compliant Projection:

- Project onto non-dominated solution fronts rather than single attractors

- Maintain diversity in objective space while converging in decision space

- Use knee-point preference information when available
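One simple way to realize Pareto-compliant projection is sketched below, assuming all objectives are minimized. Each solution moves a fraction `alpha` toward a randomly chosen member of the current non-dominated set rather than toward a single attractor; picking the target at random helps keep the population spread along the front. The function names and the uniform choice are illustrative assumptions, and a knee-point preference could replace `rng.choice` with a weighted choice.

```python
import random
from typing import List, Sequence

def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if objective vector a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def non_dominated(objs: Sequence[Sequence[float]]) -> List[int]:
    """Indices of solutions that no other solution dominates."""
    return [i for i, a in enumerate(objs)
            if not any(dominates(b, a) for j, b in enumerate(objs) if j != i)]

def project_to_front(pop: Sequence[Sequence[float]], objs: Sequence[Sequence[float]],
                     alpha: float = 0.5, seed: int = 0) -> List[List[float]]:
    """Move each decision vector a fraction alpha toward a random non-dominated member."""
    rng = random.Random(seed)
    front = non_dominated(objs)
    new_pop = []
    for x in pop:
        target = pop[rng.choice(front)]  # a front member, not one global attractor
        new_pop.append([xi + alpha * (ti - xi) for xi, ti in zip(x, target)])
    return new_pop
```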

The LMOAM algorithm demonstrates how attention mechanisms can assign unique weights to decision variables, providing insights for multi-objective projection strategies [13].

Troubleshooting Common Implementation Issues

Q4: The algorithm is sensitive to small changes in projection parameters. How can I improve robustness?

Parameter sensitivity often indicates improper balancing between NPDOA components:

- Implement coupling compensation: when adjusting projection, inversely adjust coupling disturbance strength to maintain balance.

- Add smoothing filters: apply a moving average to projection parameters to prevent oscillatory behavior.

- Use ensemble methods: combine multiple projection strategies with different parameters and select the most effective based on recent performance.

- Implement the improved NPDOA (INPDOA) approach validated in medical prognosis research, which enhances robustness through modified initialization and update rules [10].
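The first two remedies can be combined in a small helper, sketched here under the assumption (not taken from NPDOA sources) that projection strength and coupling disturbance share a fixed budget, so raising one lowers the other. `ProjectionController`, `window` and `budget` are hypothetical names.

```python
from collections import deque
from typing import Tuple

class ProjectionController:
    """Hypothetical helper: moving-average smoothing of the projection parameter
    plus an inverse (compensating) adjustment of coupling disturbance."""

    def __init__(self, window: int = 5, budget: float = 1.0) -> None:
        self.history: deque = deque(maxlen=window)  # keeps only the most recent raw values
        self.budget = budget

    def step(self, raw_projection: float) -> Tuple[float, float]:
        """Record a raw projection value; return (smoothed projection, coupling)."""
        self.history.append(raw_projection)
        smoothed = sum(self.history) / len(self.history)
        smoothed = min(max(smoothed, 0.0), self.budget)  # stay within the shared budget
        coupling = self.budget - smoothed                # raise one, lower the other
        return smoothed, coupling
```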

Q5: How can I visualize whether my information projection is properly balanced?

Create the following diagnostic plots during algorithm execution:

Convergence-Diversity Plot:

- X-axis: Iteration number

- Y-axis (left): Best fitness value

- Y-axis (right): Population diversity metric

- Well-balanced projection shows synchronized improvement in both measures

Projection-Exploration Correlation:

- Monitor correlation between projection strength and exploration metrics

- Healthy balance shows moderate negative correlation (r ≈ -0.4 to -0.6)

- Strong negative correlation (r < -0.8) indicates excessive exploitation dominance

Recent bibliometric analysis confirms that visualization of exploration-exploitation dynamics is crucial for algorithm improvement and represents an emerging trend in metaheuristics research [15].

Core Concepts FAQ

1. What is the "population doctrine" in neuroscience? The population doctrine is the theory that the fundamental computational unit of the brain is the neural population, not the single neuron. This represents a major shift in neurophysiology, drawing level with the long-dominant single-neuron doctrine. It suggests that neural populations produce macroscale phenomena that link single neurons to behavior, with populations considered the essential unit of computation in many brain regions [16] [17].

2. How does population-level analysis differ from single-neuron approaches? While single-neuron neurophysiology focuses on peristimulus time histograms (PSTHs) of individual neurons, population neurophysiology analyzes state space diagrams that plot activity across multiple neurons simultaneously. Instead of treating neural recordings as random samples of isolated units, population approaches view them as low-dimensional projections of entire neural activity manifolds [16].

3. What are the main challenges in implementing population-level analysis? Key challenges include: managing high-dimensional data, determining appropriate dimensionality reduction techniques, identifying meaningful neural states and trajectories, interpreting population coding dimensions, and distinguishing relevant neural subspaces. Additionally, linking population dynamics to cognitive processes requires careful experimental design and analytical validation [16] [18].

4. How can population doctrine approaches benefit drug development research? Population-level analysis provides a more comprehensive understanding of how neural circuits respond to pharmacological interventions. By examining population dynamics rather than single-unit responses, researchers can identify broader network effects of compounds, potentially revealing therapeutic mechanisms that would remain undetected with traditional approaches [17].

Troubleshooting Guide

Data Quality Issues

| Problem | Possible Causes | Solution Steps | Verification Method |
|---|---|---|---|
| Poor state space separation | Insufficient neurons recorded, low signal-to-noise ratio, inappropriate dimensionality reduction | Increase simultaneous recording channels, implement noise filtering protocols, adjust dimensionality reduction parameters | Check clustering metrics in state space; validate with known task variables |
| Unstable neural trajectories | Non-stationary neural responses, behavioral variability, recording drift | Implement trial alignment procedures, control for behavioral confounds, apply drift correction algorithms | Compare trajectory consistency across trial blocks; quantify variance explained |
| Weak decoding performance | Non-informative neural dimensions, inappropriate decoding algorithm, insufficient training data | Explore different neural features (rates, timing, correlations), test multiple decoder types, increase trial counts | Use nested cross-validation; compare to null models; calculate confidence intervals |

Analysis Implementation Challenges

| Problem | Possible Causes | Solution Steps | Verification Method |
|---|---|---|---|
| High-dimensionality overfitting | Too many parameters for limited trials, correlated neural dimensions | Implement regularization, increase sample size, use dimensionality reduction (PCA, demixed PCA) | Calculate training vs. test performance gap; use cross-validation |
| Inconsistent manifold structure | Neural population non-uniformity, task engagement fluctuations, behavioral variability | Verify task compliance, exclude unstable recording sessions, normalize population responses | Compare manifolds across session halves; quantify manifold alignment |
| Ambiguous coding dimensions | Multiple correlated task variables, overlapping neural representations | Use demixed dimensionality reduction, design orthogonalized task conditions, apply targeted dimensionality projection | Test decoding specificity; manipulate task variables systematically |

Experimental Protocols

Basic Population Analysis Workflow

Objective: Characterize neural population activity during cognitive task performance.

Materials:

- High-density neural recording system (Neuropixels probes, tetrodes, or other high-channel-count probes)

- Behavioral task setup with precise timing control

- Computational resources for large-scale data analysis

- Data processing pipeline (spike sorting, alignment, normalization)

Procedure:

- Simultaneous Recording: Record from multiple single neurons simultaneously during task performance [17]

- Data Preprocessing: Apply spike sorting, quality metrics, and trial alignment

- State Space Construction: Create N-dimensional state space where N = number of neurons [16]

- Trajectory Calculation: Compute neural trajectories through state space across trial epochs

- Dimensionality Reduction: Apply PCA or similar methods to visualize population dynamics

- Quantitative Analysis: Calculate trajectory distances, velocities, and geometries
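The state space, dimensionality reduction and trajectory steps can be sketched with NumPy as below. The sketch assumes trial-aligned firing rates in an array of shape (trials, time bins, neurons), runs PCA (via SVD) on the trial-averaged trajectory, and reports per-bin speed in the full N-dimensional state space. Real pipelines often fit PCA on data concatenated across conditions instead; the function names are illustrative.

```python
import numpy as np

def population_trajectory(rates: np.ndarray, n_components: int = 3):
    """rates: (trials, time_bins, neurons). Returns the PC projection, explained
    variance ratio, and state-space speed of the trial-averaged trajectory."""
    mean_traj = rates.mean(axis=0)                 # (time_bins, neurons): points in N-dim space
    centered = mean_traj - mean_traj.mean(axis=0)  # center each neuron over time
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    proj = centered @ vt[:n_components].T          # (time_bins, n_components)
    explained = (s ** 2 / np.sum(s ** 2))[:n_components]
    speed = np.linalg.norm(np.diff(mean_traj, axis=0), axis=1)  # distance moved per bin
    return proj, explained, speed

def trajectory_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Mean Euclidean distance between two time-aligned trajectories (e.g., two conditions)."""
    return float(np.linalg.norm(a - b, axis=1).mean())
```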

Validation:

- Decode task variables from population activity

- Compare neural trajectories across conditions

- Quantify trial-to-trial consistency using distance metrics
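The decoding check can be illustrated with a nearest-class-centroid decoder, a simple linear decoder, evaluated with leave-one-out cross-validation and compared against a label-shuffle null. This choice is swapped in for illustration and is not a method prescribed by the cited studies; it assumes single-trial population vectors of shape (trials, neurons) and discrete task labels.

```python
import numpy as np

def loo_nearest_centroid_accuracy(X: np.ndarray, y: np.ndarray) -> float:
    """Leave-one-out accuracy of a nearest-class-centroid decoder.
    X: (trials, neurons) population vectors; y: (trials,) task labels."""
    correct = 0
    for i in range(len(y)):
        train = np.arange(len(y)) != i  # hold out trial i
        classes = np.unique(y[train])
        centroids = np.array([X[train][y[train] == c].mean(axis=0) for c in classes])
        pred = classes[np.argmin(np.linalg.norm(centroids - X[i], axis=1))]
        correct += int(pred == y[i])
    return correct / len(y)

def shuffled_null(X: np.ndarray, y: np.ndarray, n_shuffles: int = 100,
                  seed: int = 0) -> np.ndarray:
    """Decoder accuracies with shuffled labels: a null distribution for comparison."""
    rng = np.random.default_rng(seed)
    return np.array([loo_nearest_centroid_accuracy(X, rng.permutation(y))
                     for _ in range(n_shuffles)])
```

Decoding is considered meaningful when the observed accuracy lies well above the bulk of the shuffled-label distribution.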

Advanced Protocol: Identifying Neural Subspaces

Objective: Identify orthogonal neural subspaces encoding different task variables.

Materials: Same as basic protocol, plus demixed PCA or similar specialized algorithms.

Procedure:

- Condition-specific Averaging: Calculate average population vectors for each task condition

- Variance Partitioning: Identify neural dimensions capturing maximum variance for specific task variables

- Subspace Projection: Project neural activity into task-relevant subspaces

- Orthogonalization: Verify subspace orthogonality using geometric methods

- Functional Testing: Manipulate identified subspaces to test behavioral effects
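As a minimal stand-in for demixed PCA, the variance-partitioning, projection and orthogonality steps can be illustrated with difference-of-means coding axes for two binary task variables. This simplification is an assumption for illustration: dPCA fits multi-dimensional, regularized subspaces, whereas here each variable gets a single axis and orthogonality is summarized by the absolute cosine between the axes.

```python
import numpy as np

def coding_axes(cond_means: np.ndarray):
    """cond_means: (2, 2, neurons) condition-averaged population vectors indexed by
    binary task variables A (axis 0) and B (axis 1). Returns unit coding axes for A and B."""
    axis_a = cond_means[1].mean(axis=0) - cond_means[0].mean(axis=0)        # A effect, averaged over B
    axis_b = cond_means[:, 1].mean(axis=0) - cond_means[:, 0].mean(axis=0)  # B effect, averaged over A
    return axis_a / np.linalg.norm(axis_a), axis_b / np.linalg.norm(axis_b)

def project(activity: np.ndarray, axes) -> np.ndarray:
    """Project (samples, neurons) activity onto the stacked coding axes."""
    return activity @ np.stack(axes).T

def subspace_overlap(u: np.ndarray, v: np.ndarray) -> float:
    """|cos(angle)| between two unit axes: 0 = orthogonal, 1 = identical."""
    return float(abs(u @ v))
```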

Research Reagent Solutions

| Essential Material | Function in Population Research | Application Notes |
|---|---|---|
| High-density electrode arrays | Simultaneous recording from neural populations | Critical for capturing population statistics; 100+ channels recommended [17] |
| Calcium indicators (e.g., GCaMP) | Large-scale population imaging | Enables recording from identified cell types; suitable for cortical surfaces |
| Spike sorting software | Isolate single units from population recordings | Quality control essential; manual curation recommended |
| Dimensionality reduction tools | Visualize and analyze high-dimensional data | PCA, t-SNE, UMAP for different applications |
| Neural decoding frameworks | Read out information from population activity | Linear decoders often sufficient for population codes |
| State space analysis packages | Quantify neural trajectories and dynamics | Custom MATLAB/Python toolkits available |

[Visualization Diagrams: Neural State Space Concept; Population Analysis Workflow; Neural Trajectory Analysis]

Key Parameters Requiring Calibration in Information Projection Strategy

Frequently Asked Questions (FAQs)

1. What is the primary function of the Information Projection Strategy within the NPDOA framework? The Information Projection Strategy controls the communication and information transmission between different neural populations in the Neural Population Dynamics Optimization Algorithm (NPDOA). Its primary function is to regulate the impact of the other two core strategies—the attractor trending strategy and the coupling disturbance strategy—enabling a balanced transition from global exploration to local exploitation during the optimization process [1].

2. Which key parameters within the Information Projection Strategy require precise calibration? Calibration is critical for parameters that govern the weighting of information transfer and the projection rate between neural populations. These directly influence the algorithm's ability to balance exploration and exploitation. Incorrect calibration can lead to premature convergence (insufficient exploration) or an inability to converge to an optimal solution (insufficient exploitation) [1].

3. How can I troubleshoot the issue of the algorithm converging to a local optimum too quickly? This symptom of premature convergence often indicates that the Information Projection Strategy is overly dominant, causing the system to exploit too rapidly. To troubleshoot:

- Verify Calibration: Systematically reduce the numerical values controlling the information projection weight in your model.

- Benchmarking: Validate your parameter settings against standard benchmark functions such as CEC2022 to ensure they are within the effective range reported in the literature [19] [20].

- Review Strategy Balance: Ensure the coupling disturbance strategy, which is responsible for exploration, is not being suppressed [1].

4. What is a common methodology for experimentally validating the calibration of the Information Projection Strategy? A robust method involves testing the algorithm's performance on a suite of standard benchmark functions with known properties. The following table summarizes results from a study that validated an improved NPDOA (INPDOA) on CEC2022 benchmarks and then applied it within an AutoML medical prognostic model; the reported metrics come from that downstream model [19]:

Table 1: Reported INPDOA Performance in a Medical Prognostic Model [19]

| Algorithm Variant | Key Calibration Focus | Performance Metric | Reported Result |
|---|---|---|---|
| INPDOA (Improved NPDOA) | AutoML optimization integrating feature selection & hyperparameters [19] | Test-set AUC (for classification) | 0.867 [19] |
| INPDOA (Improved NPDOA) | AutoML optimization integrating feature selection & hyperparameters [19] | R² Score (for regression) | 0.862 [19] |

Protocol:

- Define Benchmark Set: Select a diverse set of benchmark functions (e.g., from CEC2022) [19] [20].

- Parameter Tuning: Run the NPDOA with different calibration values for the information projection parameters.

- Performance Evaluation: Record convergence speed and solution accuracy for each run.

- Comparative Analysis: Use statistical tests, such as the Wilcoxon rank-sum test, to determine the parameter set that provides significantly better performance [20].
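The comparative-analysis step can be sketched without external dependencies: a two-sided Wilcoxon rank-sum test using the normal approximation, with average ranks for ties but no tie correction to the variance. The inputs would be the final best fitness values from independent runs under two calibrations; with around 30 runs per configuration the normal approximation is adequate. If SciPy is available, `scipy.stats.ranksums` provides an equivalent, tested implementation.

```python
import math
from typing import Sequence, Tuple

def rank_sum_test(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon rank-sum test (normal approximation). Returns (z, p)."""
    pooled = sorted((v, g) for g, sample in enumerate((a, b)) for v in sample)
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1                       # extend a block of tied values
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1   # average 1-based rank for the tied block
        i = j + 1
    n1, n2 = len(a), len(b)
    w = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)  # rank sum of sample a
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))                       # two-sided p-value
    return z, p
```

A negative `z` means the first calibration tends to produce lower (better, for minimization) final fitness values.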

Troubleshooting Guides

Issue: Poor Convergence Accuracy

Description: The algorithm fails to find a high-quality solution, resulting in a poor final fitness value or poor performance on a real-world problem, such as a predictive model with low accuracy [19].

Diagnostic Steps

- Check Strategy Dominance: Analyze the iteration log to see if the coupling disturbance strategy (exploration) is preventing convergence. If so, the information projection strategy may need to be strengthened to allow for more refinement of solutions.

- Validate on Benchmarks: Test the current parameter configuration on a standard benchmark function where the global optimum is known. This helps isolate the issue from problem-specific complexities.

- Review Feature Selection Integration: If using an AutoML framework like INPDOA, ensure that the information projection parameters are correctly integrated with the automated feature selection process, as the two are encoded in a hybrid solution vector [19].
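To make the hybrid encoding concrete, the sketch below decodes one continuous solution vector into a feature mask and model hyperparameters. The layout (one gene per feature, thresholded at 0.5, followed by two hyperparameter genes), the hyperparameter names and their ranges are hypothetical, not the INPDOA encoding from [19]. The point is that feature-selection and hyperparameter genes live in one vector, so the information projection step updates both at once and its parameters must suit both gene types.

```python
from typing import Dict, List, Sequence, Tuple

def decode_solution(x: Sequence[float], n_features: int) -> Tuple[List[bool], Dict[str, float]]:
    """Hypothetical layout: genes 0..n_features-1 select features (gene > 0.5);
    the last two genes map to hyperparameters. All genes are assumed to lie in [0, 1]."""
    if len(x) != n_features + 2:
        raise ValueError("expected n_features + 2 genes")
    mask = [g > 0.5 for g in x[:n_features]]
    if not any(mask):  # guarantee at least one selected feature
        mask[max(range(n_features), key=lambda i: x[i])] = True
    lr_gene, depth_gene = x[n_features], x[n_features + 1]
    params = {
        "learning_rate": 10 ** (-4 + 3 * lr_gene),  # log-uniform over [1e-4, 1e-1]
        "max_depth": 2 + round(8 * depth_gene),     # integer in [2, 10]
    }
    return mask, params
```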

Resolution

- Gradually increase the parameters that control the influence of the information projection strategy to enhance exploitation of promising areas.

- Use parameter settings from successful applications as starting points. For example, a well-calibrated INPDOA framework achieved a test-set AUC of 0.867 in a medical prognostic model [19].

Issue: Algorithm Exhibits High Computational Complexity and Slow Speed

Description: The optimization process takes an excessively long time to complete, which is a common drawback of some complex meta-heuristic algorithms [1].

Diagnostic Steps

- Profile Code Execution: Identify if the information projection calculations are a computational bottleneck.

- Assess Population Size: A very large number of neural populations will increase the communication overhead managed by the information projection strategy.

- Check Termination Criteria: Ensure the algorithm is not struggling to converge due to poor calibration, causing it to run for the maximum number of iterations without improvement.

Resolution

- Fine-tune the information projection parameters to achieve a more efficient balance, reducing oscillatory behavior between populations.

- Consider implementing efficiency terms in the fitness function, similar to the approach used in improved AutoML frameworks, which use a weighted fitness function that can include a computational efficiency component [19].
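A weighted fitness function of this kind might look like the sketch below. The three terms mirror the accuracy, feature-sparsity and computational-efficiency components mentioned here and in the toolkit table; the weights, the runtime cap and the linear form are hypothetical choices, not the function used in [19].

```python
def weighted_fitness(accuracy: float, n_selected: int, n_total: int,
                     runtime_s: float, max_runtime_s: float,
                     w_acc: float = 0.8, w_sparse: float = 0.1, w_time: float = 0.1) -> float:
    """Hypothetical weighted fitness to maximize: accuracy plus rewards for using
    fewer features and for finishing faster (runtime is capped at max_runtime_s)."""
    sparsity = 1.0 - n_selected / n_total                   # 1.0 means no features used
    efficiency = 1.0 - min(runtime_s / max_runtime_s, 1.0)  # 0.0 at or beyond the cap
    return w_acc * accuracy + w_sparse * sparsity + w_time * efficiency
```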

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Computational Tools for NPDOA Calibration Research

| Item / Tool | Function in Calibration Research |
|---|---|
| Benchmark Suites (CEC2017/CEC2022) | Provides a standardized set of test functions to objectively evaluate and compare the performance of different parameter calibrations [19] [20]. |
| Statistical Testing Software (e.g., for Wilcoxon test) | Used to perform statistical significance tests on results, ensuring that performance improvements from calibration are not due to random chance [20]. |
| AutoML Frameworks (e.g., TPOT, Auto-Sklearn) | Serves as a high-performance benchmark and a source of concepts for integrating automated parameter tuning with feature selection [19]. |
| Fitness Function with Multi-objective Terms | A custom-designed function that balances accuracy, feature sparsity, and computational efficiency to guide the calibration process effectively [19]. |

Experimental Workflow and Signaling Logic

The following diagram illustrates the logical workflow and decision points for calibrating the Information Projection Strategy within the NPDOA framework.

Advantages Over Traditional Optimization Methods in Biomedical Contexts

Frequently Asked Questions (FAQs)

Q1: Why should I use bio-inspired optimization instead of traditional gradient-based methods for my biomedical data?

Bio-inspired algorithms such as Particle Swarm Optimization (PSO) and Genetic Algorithms (GA) search globally with a population of candidate solutions, which makes them well suited to the complex, high-dimensional search spaces common in biomedical problems. These landscapes are often noisy and contain multiple local optima in which gradient-based methods can become trapped. Bio-inspired methods also do not require differentiable objective functions, making them suitable for discrete feature selection and complex model architectures. For example, PSO has achieved testing accuracy of 96.7% to 98.9% in Parkinson's disease detection from vocal biomarkers, outperforming traditional bagging and boosting classifiers [21].

Q2: My deep learning model for medical image analysis is computationally expensive and requires large datasets. How can optimization techniques help?

Bio-inspired optimization techniques can reduce the computational burden and data requirements of deep learning models. They achieve this through targeted feature selection, which minimizes model redundancy and computational cost, particularly when data availability is constrained. These algorithms employ natural selection and social behavior models to efficiently explore feature spaces, enhancing the robustness and generalizability of deep learning systems, even with limited data [22].

Q3: What are the main practical challenges when implementing swarm intelligence for drug discovery projects?

Key challenges include computational complexity, model interpretability, and successful clinical translation. Swarm Intelligence (SI) models can be computationally intensive and are often viewed as "black boxes," which makes it difficult for biomedical researchers and clinicians to gain insight from them. Overcoming these hurdles is crucial for the full-scale adoption of this technology in clinical settings [23].

Q4: How do hybrid AI models, like those combining ACO with machine learning, improve drug-target interaction prediction?

Hybrid models leverage the strengths of multiple approaches. For instance, the Context-Aware Hybrid Ant Colony Optimized Logistic Forest (CA-HACO-LF) model combines ant colony optimization for intelligent feature selection with a logistic forest classifier for prediction. This integration enhances adaptability and prediction accuracy across diverse medical data conditions, achieving an accuracy of 98.6% in predicting drug-target interactions [24].

Troubleshooting Guides

Problem: Slow Convergence in High-Dimensional Feature Space

- Description: The optimization algorithm takes too long to find a good solution when working with a large number of features (e.g., from genomic or medical image data).

- Solution:

- Pre-processing: Apply dimensionality reduction techniques (e.g., PCA) as an initial step.

- Algorithm Tuning: Adjust the algorithm's parameters. For PSO, reduce the swarm size or adjust the inertia weight. For GA, increase the mutation rate to maintain diversity.

- Hybrid Approach: Use a bio-inspired algorithm for coarse, global search and then a traditional gradient-based method for local refinement to speed up convergence.

Problem: Algorithm Stagnation at Local Optima

- Description: The solution quality stops improving prematurely, likely because the algorithm is trapped in a local optimum.

- Solution:

- Parameter Adjustment: Increase the population size (in GA or PSO) to explore more of the search space.

- Diversity Mechanisms: Introduce or intensify mutation operations in GA or increase the randomization factor in PSO to help the population escape local optima.

- Restart Strategy: Implement a mechanism to re-initialize part or all of the population if stagnation is detected for a certain number of iterations.
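The restart strategy can be sketched as two small functions, assuming minimization and box bounds. The patience, tolerance and reset fraction are hypothetical defaults; keeping the best solutions and re-sampling only the worst is one common (elitist) variant of partial re-initialization.

```python
import random
from typing import List, Sequence, Tuple

def is_stagnant(best_history: Sequence[float], patience: int = 20, tol: float = 1e-8) -> bool:
    """True if the best-so-far value improved by at most tol over the last `patience` iterations."""
    if len(best_history) <= patience:
        return False
    return best_history[-patience - 1] - best_history[-1] <= tol

def partial_restart(pop: Sequence[Sequence[float]], fitness: Sequence[float],
                    bounds: Sequence[Tuple[float, float]], fraction: float = 0.5,
                    seed: int = 0) -> Tuple[List[List[float]], List[int]]:
    """Re-sample the worst `fraction` of solutions uniformly within bounds; keep the rest."""
    rng = random.Random(seed)
    n_reset = int(len(pop) * fraction)
    worst = sorted(range(len(pop)), key=lambda i: fitness[i], reverse=True)[:n_reset]
    new_pop = [list(x) for x in pop]
    for i in worst:
        new_pop[i] = [rng.uniform(lo, hi) for lo, hi in bounds]
    return new_pop, worst
```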

Problem: Poor Generalization to Unseen Clinical Data

- Description: The model performs well on training data but fails on independent test sets or real-world data.

- Solution:

- Validation: Use rigorous cross-validation techniques during the optimization process. Ensure the objective function includes a regularization term to prevent overfitting.

- Feature Selection: Utilize the bio-inspired algorithm's feature selection capability more aggressively to eliminate redundant or irrelevant features that do not generalize well. PSO has been shown to improve model generalizability by optimizing both feature selection and hyperparameter tuning simultaneously [21].

- Data Augmentation: If data is limited, employ techniques to augment your training dataset, ensuring the augmented data is clinically plausible.

Table 1: Performance Comparison of Optimization-Enhanced Disease Detection Models

| Disease / Application | Optimization Technique | Model | Key Performance Metric | Reported Result | Comparison to Traditional Method |
|---|---|---|---|---|---|
| Parkinson's Disease Detection | Particle Swarm Optimization (PSO) | PSO-optimized classifier | Testing Accuracy | 96.7% (Dataset 1) | +2.6 percentage points over Bagging Classifier (94.1%) [21] |
| Parkinson's Disease Detection | Particle Swarm Optimization (PSO) | PSO-optimized classifier | Testing Accuracy | 98.9% (Dataset 2) | +3.9 percentage points over LGBM Classifier (95.0%) [21] |
| Parkinson's Disease Detection | Particle Swarm Optimization (PSO) | PSO-optimized classifier | AUC | 0.999 (Dataset 2) | Near-perfect discriminative capability [21] |
| Drug-Target Interaction | Ant Colony Optimization (ACO) | CA-HACO-LF | Accuracy | 98.6% | Superior to baseline methods [24] |
| Drug Discovery (Oncology) | Quantum-Classical Hybrid | Quantum-enhanced Pipeline | Binding Affinity (KRAS-G12D) | 1.4 μM | Identified novel active compound [25] |
| Antiviral Drug Discovery | Generative AI (One-Shot) | GALILEO | In-vitro Hit Rate | 100% (12/12 compounds) | High hit rate demonstrating precision [25] |

Table 2: Computational Profile of Bio-Inspired vs. Traditional Methods

| Characteristic | Traditional Methods (e.g., Gradient Descent) | Bio-Inspired Methods (e.g., PSO, GA) | Implication for Biomedical Research |
|---|---|---|---|
| Search Strategy | Local, greedy | Global, population-based | Better suited for rugged, complex biomedical landscapes [22] |
| Derivative Requirement | Requires differentiable objective function | Derivative-free | Can optimize non-smooth functions and discrete structures (e.g., feature subsets) [21] [22] |
| Robustness to Noise | Can be sensitive | Generally more robust | Handles noisy clinical and biological data effectively [23] |
| Primary Strength | Fast convergence on convex problems | Avoidance of local optima | Finds better solutions in multi-modal problems common in biology [21] [22] |
| Primary Weakness | Prone to getting stuck in local optima | Higher computational cost | Requires careful management of computational resources [23] |

Experimental Protocols

Protocol 1: PSO for Parkinson's Disease Detection from Vocal Biomarkers

This protocol outlines the methodology for using Particle Swarm Optimization to enhance machine learning models for Parkinson's disease (PD) diagnosis [21].

Data Acquisition and Pre-processing:

- Obtain a clinical dataset containing voice recordings and relevant clinical features from PD patients and healthy controls.

- Perform acoustic feature extraction to generate a high-dimensional feature set.

- Clean the data by handling missing values and normalizing the features to a common scale.

PSO Parameter Initialization:

- Define the PSO hyperparameters:

  - Swarm size: typically 20-50 particles.

  - Inertia weight (ω): e.g., 0.729.

  - Cognitive coefficient (c1) and Social coefficient (c2): e.g., 1.49445 each.

  - Maximum number of iterations: e.g., 100.

- The position of each particle represents a potential solution (e.g., a selected feature subset and/or classifier hyperparameters).

Fitness Evaluation:

- The fitness function is defined as the model's performance (e.g., classification accuracy or F1-score) on a validation set using the feature subset and hyperparameters encoded by the particle's position.

- For each particle, a model (e.g., a neural network or support vector machine) is trained and evaluated based on the proposed solution.

Particle Update:

- For each iteration, update every particle's velocity and position based on its personal best experience and the swarm's global best experience.

- Ensure positions remain within defined search space boundaries.

Termination and Validation:

- The algorithm terminates when the maximum number of iterations is reached or convergence is achieved (no improvement in global best for a set number of iterations).

- The final solution (global best position) is used to train a model on the full training set, which is then evaluated on a held-out test set to report final performance metrics like accuracy, sensitivity, and specificity.
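The particle update and termination steps can be condensed into a global-best PSO using the hyperparameters listed above (ω = 0.729, c1 = c2 = 1.49445). This is a generic sketch, not the code from [21]. In the protocol, `fitness` would decode a particle into a feature subset and hyperparameters and return a validation error (for example, 1 - accuracy) to minimize; here any callable works. The no-improvement stopping rule is omitted for brevity.

```python
import random
from typing import Callable, List, Sequence, Tuple

def pso(fitness: Callable[[Sequence[float]], float],
        bounds: Sequence[Tuple[float, float]],
        n_particles: int = 30, iters: int = 100,
        w: float = 0.729, c1: float = 1.49445, c2: float = 1.49445,
        seed: int = 0) -> Tuple[List[float], float]:
    """Global-best PSO that minimizes `fitness` inside the box `bounds`."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                 # personal best positions
    pbest_f = [fitness(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]    # swarm (global) best
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])   # cognitive pull
                             + c2 * r2 * (gbest[d] - pos[i][d]))     # social pull
                lo, hi = bounds[d]
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)  # stay inside bounds
            f = fitness(pos[i])
            if f < pbest_f[i]:
                pbest[i], pbest_f[i] = pos[i][:], f
                if f < gbest_f:
                    gbest, gbest_f = pos[i][:], f
    return gbest, gbest_f
```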

Protocol 2: Hybrid Ant Colony Optimization for Drug-Target Interaction Prediction

This protocol details the steps for implementing a hybrid model to predict drug-target interactions, a critical step in drug discovery [24].

Data Pre-processing:

- Dataset: Use a dataset of drug details (e.g., from Kaggle, containing over 11,000 drug records).

- Text Normalization: Convert text to lowercase, remove punctuation, numbers, and extra spaces.

- Tokenization and Lemmatization: Split text into tokens and reduce words to their base or dictionary form.

- Stop Word Removal: Filter out common words that do not carry significant meaning.

Feature Extraction:

- N-Grams: Generate contiguous sequences of N words from the processed text to capture context.

- Cosine Similarity: Compute the semantic proximity between drug descriptions to assess textual relevance and aid in identifying potential interactions.
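The two feature-extraction steps can be sketched with the standard library. This illustration assumes the text has already been normalized and tokenized as described above; it builds word n-gram counts and compares two descriptions with cosine similarity over those counts.

```python
import math
from collections import Counter
from typing import List

def ngrams(tokens: List[str], n: int = 2) -> List[str]:
    """Contiguous word n-grams, joined with spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0
```

For example, `cosine_similarity(Counter(ngrams(desc1.split())), Counter(ngrams(desc2.split())))` scores two preprocessed drug descriptions.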

Ant Colony Optimization (ACO) for Feature Selection:

- Simulate ants traversing a graph where nodes represent features.

- The probability of an ant choosing a path (feature) is influenced by the pheromone level on that path and the heuristic desirability of the feature.

- Over multiple iterations, paths (feature subsets) that lead to better model performance (as per a fitness function) receive more pheromone, guiding the colony towards an optimal feature subset.
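The feature-selection loop can be sketched as below. It is a generic ACO variant, not the CA-HACO-LF implementation from [24]: each ant samples a fixed-size subset with probability proportional to pheromone^alpha times heuristic^beta, pheromone evaporates at rate rho, and only the iteration-best subset is reinforced. The `score` callable (assumed to return a value in [0, 1], such as validation accuracy), the positive `heuristic` values (for example, a univariate relevance score per feature) and all defaults are assumptions.

```python
import random
from typing import Callable, Sequence, Set, Tuple

def aco_feature_selection(n_features: int, subset_size: int,
                          score: Callable[[Set[int]], float],
                          heuristic: Sequence[float],
                          n_ants: int = 10, iters: int = 30,
                          alpha: float = 1.0, beta: float = 1.0,
                          rho: float = 0.1, seed: int = 0) -> Tuple[Set[int], float]:
    """Return the best feature subset found and its score (higher is better)."""
    rng = random.Random(seed)
    tau = [1.0] * n_features                   # pheromone per feature
    best_subset: Set[int] = set()
    best_score = float("-inf")
    for _ in range(iters):
        iter_best: Set[int] = set()
        iter_score = float("-inf")
        for _ in range(n_ants):
            available = list(range(n_features))
            subset: Set[int] = set()
            for _ in range(subset_size):       # build a path one feature at a time
                weights = [tau[f] ** alpha * heuristic[f] ** beta for f in available]
                f = rng.choices(available, weights=weights)[0]
                available.remove(f)
                subset.add(f)
            s = score(subset)
            if s > iter_score:
                iter_best, iter_score = subset, s
        tau = [(1.0 - rho) * t for t in tau]   # evaporation
        for f in iter_best:
            tau[f] += max(iter_score, 0.0)     # deposit on the iteration-best subset
        if iter_score > best_score:
            best_subset, best_score = iter_best, iter_score
    return best_subset, best_score
```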

Hybrid Classification with Logistic Forest:

- The selected feature subset from ACO is used by the Logistic Forest classifier, which combines multiple Logistic Regression models in a forest-like structure.

- This hybrid "CA-HACO-LF" model is trained to predict binary drug-target interactions.

Performance Evaluation:

- Evaluate the model using metrics such as Accuracy, Precision, Recall, F1-Score, and AUC-ROC on a held-out test set.

- Compare the results against existing baseline methods to determine whether the hybrid model offers a significant improvement.

[Workflow and Relationship Diagrams: PSO Optimization Process; Hybrid ACO Model Architecture]

Research Reagent Solutions

Table 3: Essential Computational Tools & Datasets

| Item Name / Category | Function / Description | Example Use Case |
|---|---|---|
| Clinical & Biomarker Datasets | Provides structured data for model training and validation. Includes demographic, clinical assessment, and acoustic features. | UCI PD dataset used for training PSO model for Parkinson's detection [21]. |
| Drug-Target Interaction Datasets | Curated databases containing known drug and target protein information. | Kaggle's "11,000 Medicine Details" dataset used for training CA-HACO-LF model [24]. |
| Particle Swarm Optimization (PSO) | A metaheuristic algorithm for optimizing feature selection and model hyperparameters simultaneously. | Enhancing accuracy of PD detection classifiers by optimizing feature sets [21] [22]. |
| Ant Colony Optimization (ACO) | A probabilistic technique for finding optimal paths in graphs, used for feature selection. | Identifying the most relevant features in a high-dimensional drug discovery dataset [24]. |
| Generative AI Platforms (e.g., GALILEO) | AI-driven platforms that use deep learning to generate novel molecular structures with desired properties. | De novo design of antiviral drug candidates with high hit rates [25]. |
| Quantum-Classical Hybrid Models | Combines quantum computing's exploratory power with classical AI's precision for molecular simulation. | Screening massive molecular libraries (e.g., 100M molecules) for difficult drug targets like KRAS in oncology [25]. |

Implementing NPDOA Calibration: Methodologies for Drug Development Optimization

Step-by-Step Framework for Information Projection Strategy Calibration

The Neural Population Dynamics Optimization Algorithm (NPDOA) is a swarm-based intelligent optimization algorithm inspired by neuroscience [3]. It is designed for solving complex optimization problems, such as those encountered in drug development and biomedical research. A critical component of this algorithm is the information projection strategy, which controls communication between different neural populations to facilitate the transition from exploration to exploitation during the optimization process [3]. Proper calibration of this strategy is essential for good algorithm performance in computational experiments, such as predicting surgical outcomes or analyzing high-throughput screening data.

This guide provides a structured framework for troubleshooting and calibrating the information projection strategy within NPDOA, presented in a technical support format for researchers and scientists.

Troubleshooting Guide: Frequently Asked Questions (FAQs)

Q1: What are the primary symptoms of a miscalibrated information projection strategy in NPDOA experiments? You may observe several key performance indicators that signal a need for strategy calibration:

- Premature Convergence: The algorithm settles on a sub-optimal solution early in the iterative process, failing to explore the solution space adequately [3].

- Stagnation: The neural populations cease to improve the solution, despite continued iterations, indicating a breakdown in productive information exchange [3].

- Erratic Optimization Paths: The algorithm's performance becomes unstable, with wide fluctuations in fitness values, suggesting poor control over the transition from exploration to exploitation.
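The three symptoms can be flagged automatically from a run's history, as in this heuristic sketch for a minimization problem. The thresholds (no improvement after the first 20% of iterations for premature convergence, a 50-iteration window for stagnation, and a volatility ratio of 0.5 for erratic paths) are hypothetical and should be tuned to your problem; they are not values from [3].

```python
from typing import Sequence

def diagnose(best_history: Sequence[float], mean_history: Sequence[float],
             early_frac: float = 0.2, window: int = 50, tol: float = 1e-8,
             volatility_limit: float = 0.5) -> str:
    """Heuristic label for a minimization run.
    best_history: best-so-far fitness per iteration; mean_history: population mean fitness."""
    n = len(best_history)
    # last iteration at which the best-so-far value improved by more than tol
    last_improve = max((t for t in range(1, n) if best_history[t - 1] - best_history[t] > tol),
                       default=0)
    if last_improve < early_frac * n:
        return "premature convergence"
    if n - 1 - last_improve >= window:
        return "stagnation"
    # erratic: average step-to-step change of the mean fitness relative to its typical magnitude
    steps = [abs(b - a) for a, b in zip(mean_history, mean_history[1:])]
    level = sum(abs(m) for m in mean_history) / len(mean_history)
    if steps and level > 0 and (sum(steps) / len(steps)) / level > volatility_limit:
        return "erratic optimization path"
    return "no symptom detected"
```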

Q2: Which key parameters directly govern the information projection strategy and require systematic calibration? The information projection strategy's behavior is primarily controlled by the following parameters, which should be the initial focus of your calibration efforts:

- Projection Threshold: Determines the minimum information quality or similarity required for communication between neural populations.

- Coupling Coefficient: Controls the strength of influence one neural population has on another during information exchange.

- Divergence Factor: Regulates the exploratory behavior of the algorithm by controlling how neural populations diverge from the attractor [3].

Q3: Our NPDOA model suffers from premature convergence. What are the recommended calibration steps to enhance exploration? To counteract premature convergence, adjust the parameters to encourage greater exploration of the solution space:

- Decrease the Coupling Coefficient to reduce the influence populations have on each other, preventing the entire system from homing in on a local optimum too quickly.

- Increase the Divergence Factor to strengthen the mechanism that drives neural populations away from the current attractor, fostering exploration [3].

- Adjust the Projection Threshold to a more conservative (higher) value, ensuring that only high-quality information is shared, which can prevent the premature spread of suboptimal solutions.
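Expressed as code, the three adjustments might look like the sketch below. `ProjectionParams`, the [0, 1] ranges and the fixed step size are hypothetical; the source does not specify how these parameters are scaled, so treat the step as a starting point for the systematic calibration protocol in the next section.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class ProjectionParams:
    """Hypothetical container for the three parameters; [0, 1] ranges are assumed."""
    projection_threshold: float = 0.5
    coupling_coefficient: float = 0.5
    divergence_factor: float = 0.5

def _clip(v: float) -> float:
    return min(1.0, max(0.0, v))

def encourage_exploration(p: ProjectionParams, step: float = 0.1) -> ProjectionParams:
    """Apply the three premature-convergence adjustments, clipped to [0, 1]."""
    return replace(
        p,
        coupling_coefficient=_clip(p.coupling_coefficient - step),  # weaker inter-population pull
        divergence_factor=_clip(p.divergence_factor + step),        # stronger drive away from attractor
        projection_threshold=_clip(p.projection_threshold + step),  # share only higher-quality information
    )
```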

Q4: How should we validate the performance of a newly calibrated information projection strategy? A robust validation protocol is essential. It is recommended to:

- Use Standardized Benchmark Functions: Evaluate performance against established test sets, such as the IEEE CEC2017 or CEC2022 benchmark functions, to compare against known baselines [10] [3].

- Employ Statistical Analysis: Conduct statistical significance tests (e.g., t-tests, Wilcoxon signed-rank tests) to check whether performance improvements are larger than run-to-run stochastic variation alone would explain [3].

- Compare to Baseline Algorithms: Benchmark the calibrated NPDOA against other intelligent optimization algorithms to contextualize its performance [3].
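Suppose two configurations are run independently, for example 30 runs each. Then the unpaired Wilcoxon rank-sum test (equivalently, Mann-Whitney U) fits. The signed-rank test applies to paired data, such as per-function means. The dependency-free sketch below uses the normal approximation and gives tied values their average rank. It omits the tie correction to the variance, so a library routine such as `scipy.stats.mannwhitneyu` is the safer choice for reported results.

```python
import math

def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum (Mann-Whitney U) test, normal approximation.

    Returns (U statistic for `a`, two-sided p-value). Tied values share their
    average rank; the variance is not tie-corrected (a simplification).
    """
    n1, n2 = len(a), len(b)
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        # Extend j across the run of values tied with pooled[i].
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    r1 = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u1 = r1 - n1 * (n1 + 1) / 2
    mu = n1 * n2 / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u1 - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return u1, p
```

Pass the final best fitness values of the calibrated runs as `a` and those of the baseline runs as `b`. A small p-value, together with a lower median in `a`, supports a genuine improvement.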

Experimental Protocols for Calibration

Protocol for Establishing a Performance Baseline

Objective: To quantify the baseline performance of the current NPDOA configuration before calibration.

Materials: Standard computing environment, an implementation of the NPDOA, and a suite of benchmark functions (e.g., from CEC2017).

Methodology:

- Initialize the NPDOA with the default or current parameter set for the information projection strategy.

- Run the optimization process on a minimum of 5 different benchmark functions. Each function should be run for a minimum of 30 independent trials to account for stochastic variability [3].

- Record key performance metrics for each trial, including the final best fitness value, the number of iterations to convergence, and the mean fitness over iterations.

- Calculate the mean and standard deviation for each metric across all trials. This dataset constitutes your performance baseline.
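The baseline protocol can be scripted roughly as follows. Several pieces are stand-ins:

- `random_search` is a hypothetical placeholder for an NPDOA implementation; any callable with the same signature can be swapped in.
- Sphere and Rastrigin stand in for a CEC2017 suite.
- "Iterations to convergence" is defined here, as an assumption, as the first iteration whose best-so-far value is within `tol` of the run's final best.

```python
import math
import random
import statistics

def sphere(x):
    """Unimodal test function; minimum 0 at the origin."""
    return sum(v * v for v in x)

def rastrigin(x):
    """Multimodal test function; minimum 0 at the origin."""
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)

def random_search(f, dim, iters, rng, lo=-5.12, hi=5.12):
    """Hypothetical placeholder optimizer; returns the best-so-far history."""
    best, history = math.inf, []
    for _ in range(iters):
        best = min(best, f([rng.uniform(lo, hi) for _ in range(dim)]))
        history.append(best)
    return history

def iterations_to_convergence(history, tol=1e-6):
    """First iteration (1-based) whose best-so-far is within tol of the final best."""
    final = history[-1]
    return next(i + 1 for i, v in enumerate(history) if v - final <= tol)

def baseline(optimizer, functions, trials=30, dim=10, iters=500, seed=0):
    """Run `trials` independently seeded runs per function; return (mean, std) per metric."""
    summary = {}
    for name, f in functions.items():
        finals, convs, curves = [], [], []
        for t in range(trials):
            hist = optimizer(f, dim, iters, random.Random(seed + t))
            finals.append(hist[-1])
            convs.append(iterations_to_convergence(hist))
            curves.append(statistics.fmean(hist))  # mean fitness over iterations
        summary[name] = {
            metric: (statistics.fmean(vals), statistics.stdev(vals))
            for metric, vals in (("final_best", finals),
                                 ("iters_to_convergence", convs),
                                 ("mean_over_iterations", curves))
        }
    return summary
```

A call such as `baseline(random_search, {"sphere": sphere, "rastrigin": rastrigin})` returns a nested dictionary. A real baseline would list at least five functions. Storing the result alongside the parameter set that produced it keeps later comparisons reproducible.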

Protocol for Systematic Parameter Calibration

Objective: To methodically identify the best-performing values for the projection threshold, coupling coefficient, and divergence factor.

Materials: Baseline data from the baseline protocol above and a parameter tuning framework (e.g., manual search, Bayesian optimization).

Methodology:

- Adopt a one-factor-at-a-time (OFAT) or statistical design-of-experiments (DoE) approach. OFAT is simpler to implement (vary one parameter while holding the others constant), but it cannot reveal interactions between parameters, which a DoE design can estimate.

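- For each parameter configuration, repeat the run, record, and summarize steps of the baseline protocol (at least 5 benchmark functions, at least 30 independent trials per function).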

- Compare the results of each configuration against the established baseline. The preferred configuration is the one that yields a statistically significant improvement in the target performance metrics (e.g., lower final fitness value, faster convergence) without increasing run-to-run variability.
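A minimal OFAT loop might look like the sketch below. `evaluate` is a hypothetical callable that runs the baseline protocol for one parameter dictionary and returns a scalar score to minimize, for instance the mean final fitness across functions. The candidate grids are placeholders. The winning value for each parameter would still need the significance test described above before it replaces the baseline.

```python
def ofat_sweep(baseline_params, grids, evaluate):
    """One-factor-at-a-time sweep around a baseline parameter set.

    baseline_params: dict of parameter name -> baseline value
    grids: dict of parameter name -> list of candidate values
    evaluate: callable(params_dict) -> score (lower is better)
    Returns (baseline score, {name: (best value, best score)}).
    """
    base_score = evaluate(dict(baseline_params))
    best = {}
    for name, values in grids.items():
        best_value, best_score = baseline_params[name], base_score
        for v in values:
            trial = dict(baseline_params, **{name: v})  # vary only `name`
            score = evaluate(trial)
            if score < best_score:
                best_value, best_score = v, score
        best[name] = (best_value, best_score)
    return base_score, best
```

Each parameter is varied while the others stay at their baseline values. The per-parameter winners are therefore not guaranteed to be jointly optimal, and a follow-up run of the combined configuration is worthwhile.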

Data Presentation

Quantitative Data from NPDOA Performance Analysis

The following table summarizes hypothetical quantitative data from an NPDOA calibration experiment using benchmark functions. This format allows for easy comparison of algorithm performance before and after calibration.

Table 1: Performance Comparison of NPDOA Before and After Information Projection Strategy Calibration on CEC2017 Benchmark Functions (Mean ± Std. Deviation over 30 runs)

| Benchmark Function / Metric | Default Parameters (Baseline) | Calibrated Parameters | Performance Improvement |
|---|---|---|---|
| F1 (Shifted and Rotated Bent Cigar) | 5.42e-03 ± 2.11e-04 | 2.15e-05 ± 1.03e-06 | ~250x lower |
| F7 (Shifted and Rotated Lunacek Bi-Rastrigin) | 1.15e+02 ± 8.45e+00 | 5.67e+01 ± 4.21e+00 | ~50% lower |
| F11 (Hybrid Function 1) | 1.89e+02 ± 1.05e+01 | 8.91e+01 ± 5.64e+00 | ~53% lower |
| Convergence Iterations | 1250 ± 150 | 850 ± 95 | ~32% fewer |
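The "Performance Improvement" column follows from the means alone. F1 is reported as a fold reduction and the other rows as percentage reductions. The short check below recomputes both figures from the table's (hypothetical) values.

```python
def improvement(baseline_mean, calibrated_mean):
    """Return (fold reduction, percentage reduction) for a lower-is-better metric."""
    fold = baseline_mean / calibrated_mean
    pct = 100 * (baseline_mean - calibrated_mean) / baseline_mean
    return fold, pct

# Mean values from Table 1 (hypothetical data): (baseline, calibrated)
table_1_means = {
    "F1": (5.42e-03, 2.15e-05),
    "F7": (1.15e+02, 5.67e+01),
    "F11": (1.89e+02, 8.91e+01),
    "Convergence Iterations": (1250, 850),
}

for row, (base, cal) in table_1_means.items():
    fold, pct = improvement(base, cal)
    print(f"{row}: {fold:.1f}x lower, {pct:.1f}% reduction")
```

For F1 this gives about a 252-fold reduction (99.6%). F7, F11, and the convergence row come out at about 50.7%, 52.9%, and 32.0%, consistent with the rounded figures in the table.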

Research Reagent Solutions for Computational Experiments

Table 2: Essential "Reagents" for NPDOA Computational Experiments

| Item Name | Function/Explanation |
|---|---|
| Benchmark Function Suite (e.g., CEC2017) | A standardized set of mathematical optimization problems used to evaluate, compare, and validate the performance of the algorithm objectively [3]. |
| Stochastic Reverse Learning | A population initialization strategy used to enhance the quality and diversity of the initial neural populations, improving the algorithm's exploration capabilities [3]. |
| Fitness Function | A user-defined function that quantifies the quality of any given solution. It is the objective the NPDOA is designed to optimize. |
| Statistical Testing Framework (e.g., Wilcoxon Test) | A set of statistical methods used to determine whether performance differences between algorithm configurations are statistically significant rather than attributable to random variation between runs [3]. |
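The "Stochastic Reverse Learning" row can be illustrated with random opposition-based learning, one common variant of the idea. For every random candidate, a randomized "opposite" point is generated, and the best half of the combined pool is kept. The opposite-point formula used here (lb + ub - r * x, clipped to the bounds) is an assumption, and the exact variant used in [3] may differ.

```python
import random

def opposition_init(f, pop_size, lb, ub, rng=random):
    """Initialize a population with random opposition-based learning (minimization).

    lb, ub: per-dimension lower/upper bounds (lists of equal length).
    Returns the `pop_size` best points from the random pool plus their opposites,
    sorted from best to worst.
    """
    dim = len(lb)
    pool = []
    for _ in range(pop_size):
        x = [rng.uniform(lb[d], ub[d]) for d in range(dim)]
        r = rng.random()
        # Randomized opposite point, clipped back into the search box.
        x_opp = [min(max(lb[d] + ub[d] - r * x[d], lb[d]), ub[d]) for d in range(dim)]
        pool.extend([x, x_opp])
    pool.sort(key=f)
    return pool[:pop_size]
```

The function evaluates twice as many candidates as it keeps. This extra cost is paid once at initialization and is usually small relative to the full optimization budget.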

[Figures: NPDOA Information Projection Logic; Strategy Calibration Workflow; Key Parameter Interactions]

Parameter Tuning Protocols for Clinical Trial Optimization Problems

Frequently Asked Questions (FAQs)

General NPDOA & Clinical Trial Optimization

Q1: What is the NPDOA and how is it relevant to clinical trial optimization? The Neural Population Dynamics Optimization Algorithm (NPDOA) is a novel brain-inspired meta-heuristic algorithm designed for solving complex optimization problems [1]. It simulates the activities of interconnected neural populations in the brain during cognition and decision-making. In clinical trial optimization, NPDOA can be applied to tasks such as optimizing patient enrollment, scoring protocol design complexity, and allocating resources. It suits these tasks because it balances exploration of new regions of the solution space with exploitation (refinement) of promising solutions [1] [26].

Q2: What are the core strategies of the NPDOA that require calibration? The NPDOA operates on three core strategies that require careful calibration [1]:

- Attractor Trending Strategy: Drives neural populations towards optimal decisions, ensuring exploitation capability.

- Coupling Disturbance Strategy: Deviates neural populations from attractors by coupling with other populations, improving exploration ability.

- Information Projection Strategy: Controls communication between neural populations, enabling the transition from exploration to exploitation. The calibration of this strategy is the primary focus of our research.

Q3: Why is parameter tuning critical for applying NPDOA to clinical trial problems? Clinical trial optimization problems, such as protocol design and patient enrichment, involve high-dimensional, constrained spaces with costly evaluations. Proper parameter tuning helps the NPDOA converge to a high-quality solution efficiently. This avoids wasted computational resources and enables more reliable, data-driven decisions in trial design, which can ultimately reduce costs and accelerate drug development [1] [26].

Information Projection Strategy Calibration

Q4: What is the primary function of the Information Projection Strategy? The Information Projection Strategy acts as a regulatory mechanism that governs the flow of information between different neural populations within the NPDOA framework. It directly controls the impact of the Attractor Trending and Coupling Disturbance strategies, thereby managing the critical balance between local exploitation and global exploration throughout the optimization process [1].

Q5: What are the key parameters of the Information Projection Strategy that need tuning? While the specific implementation may vary, the tuning typically focuses on parameters that control:

- Projection Rate: The frequency or probability with which information is communicated between populations.

- Influence Weight: The degree to which information from one population can alter the state of another.

- Transition Threshold: The criteria that trigger a shift in strategy, often based on convergence metrics or iteration counts.

The optimal values of these parameters are highly dependent on the specific clinical trial problem landscape [1].
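A Transition Threshold that combines both kinds of criteria might look like the sketch below. It switches to exploitation once a fixed fraction of the iteration budget has elapsed, or earlier if recent relative improvement has stalled. The names `budget_fraction`, `window`, and `min_rel_improvement` and their defaults are hypothetical.

```python
def should_transition(iteration, max_iterations, best_history,
                      budget_fraction=0.6, window=20, min_rel_improvement=1e-3):
    """Return True once the search should shift from exploration to exploitation.

    best_history: best-so-far objective values (minimization), one per iteration.
    """
    # Iteration-count criterion.
    if iteration >= budget_fraction * max_iterations:
        return True
    # Convergence-metric criterion: relative improvement over the last `window` iterations.
    if len(best_history) > window:
        old, new = best_history[-window - 1], best_history[-1]
        rel = (old - new) / max(abs(old), 1e-12)
        return rel < min_rel_improvement
    return False
```

Lowering `budget_fraction` or raising `min_rel_improvement` triggers the shift earlier. Loosening them delays it, which corresponds to the "Delay Strategy Transition" remedy below.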

Q6: How can I determine if the Information Projection Strategy is poorly calibrated? Common symptoms of poor calibration include [1]:

- Premature Convergence: The algorithm gets stuck in a local optimum quickly, indicating excessive exploitation.

- Poor Convergence: The algorithm fails to find a satisfactory solution, wandering randomly, indicating excessive exploration.

- Erratic Performance: Large variations in solution quality between independent runs.
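Premature convergence usually appears as a collapse in population diversity well before the iteration budget is spent. Tracking diversity alongside fitness therefore helps separate the first two symptoms. The measure below, the mean Euclidean distance of population members from their centroid, is one common choice and is used here for illustration rather than because [1] prescribes it.

```python
import math

def population_diversity(population):
    """Mean Euclidean distance of each solution vector from the population centroid."""
    n, dim = len(population), len(population[0])
    centroid = [sum(p[d] for p in population) / n for d in range(dim)]
    return sum(math.dist(p, centroid) for p in population) / n
```

Logged once per iteration, diversity that drops near zero early while fitness is still poor points to premature convergence. Diversity that stays high while fitness does not improve points to excessive exploration.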

Troubleshooting Guides

Issue 1: Algorithm Demonstrates Premature Convergence

Problem: The NPDOA consistently converges to a sub-optimal solution early in the optimization process for a clinical trial design problem.

Diagnosis: This is typically a sign of an imbalance favoring exploitation over exploration. The Information Projection Strategy may be allowing the Attractor Trending Strategy to dominate too quickly or strongly.

Recommended Steps:

- Decrease Influence Weight: Reduce the parameter controlling how strongly one neural population can influence another, weakening the "pull" toward current attractors [1].

- Increase Coupling Disturbance: Amplify the effect of the Coupling Disturbance Strategy to encourage more deviation from the current trajectory [1].

- Delay Strategy Transition: Adjust the Transition Threshold to allow the exploration phase to continue for a longer period before the Information Projection Strategy shifts focus to exploitation [1].

- Validate with Benchmark: Test the modified parameters on a known benchmark function (e.g., from CEC 2017) to confirm improved exploration before applying them to your clinical trial model [1] [3].

Issue 2: Algorithm Fails to Converge or Converges Poorly

Problem: The NPDOA fails to stabilize and does not improve the solution quality, or it converges very slowly.

Diagnosis: This suggests an excess of exploration, preventing the algorithm from refining and committing to promising areas of the solution space. The Information Projection may be too weak or the Coupling Disturbance too strong.

Recommended Steps:

- Increase Influence Weight: Strengthen the communication between populations to reinforce movement toward good solutions [1].

- Enhance Attractor Trending: Boost the parameters of the Attractor Trending Strategy to intensify local search around the best-known solutions [1].

- Adjust Projection Rate: Increase the rate at which information is shared to help the populations coordinate and converge [1].

- Check Complexity: Assess the clinical trial problem itself. Overly complex protocols with numerous endpoints and procedures can create a rugged fitness landscape. Consider simplifying the problem formulation or increasing the initial population size to improve coverage [27].

Issue 3: Inconsistent Performance Across Multiple Runs

Problem: The performance of the NPDOA varies significantly between runs with the same parameter settings, leading to unreliable outcomes.

Diagnosis: High performance variance often points to an oversensitivity to initial conditions or an insufficient balance between the core strategies.

Recommended Steps:

- Stochastic Initialization: Improve the quality and diversity of the initial neural population using strategies like stochastic reverse learning to ensure a better starting point for the search [3].

- Fine-tune Information Projection: The transition between exploration and exploitation may be too abrupt. Implement a more gradual, adaptive transition based on search progress [1] [2].

- Conduct Robustness Analysis: Perform a sensitivity analysis on the key parameters (Influence Weight, Projection Rate) to identify a stable region where performance is less volatile.

The following table summarizes the parameter adjustments for all three issues:

Table: Parameter Adjustments for Common NPDOA Issues

| Observed Issue | Primary Suspect | Recommended Parameter Adjustments | Expected Outcome |
|---|---|---|---|
| Premature Convergence | Overly strong exploitation | Decrease Influence Weight; Increase Coupling Disturbance strength; Delay Transition Threshold. | Improved global search, escape from local optima. |
| Poor Convergence | Overly strong exploration | Increase Influence Weight; Enhance Attractor Trending strength; Increase Projection Rate. | Improved local search, faster and more stable convergence. |
| Inconsistent Performance | Unbalanced strategy transition | Use stochastic initialization; Implement adaptive, gradual Information Projection; Fine-tune parameters via sensitivity analysis. | More reliable and robust results across independent runs. |
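Issue 3 recommends replacing an abrupt exploration-to-exploitation switch with a gradual one. The sketch below assumes the implementation exposes a single weight in [0, 1] that scales exploitation relative to exploration. That weight is a hypothetical knob, not a documented NPDOA parameter. The sketch contrasts a hard step with a logistic ramp centred at the same point.

```python
import math

def step_weight(t, max_iter, switch_frac=0.5):
    """Abrupt schedule: pure exploration (0.0), then pure exploitation (1.0)."""
    return 0.0 if t < switch_frac * max_iter else 1.0

def logistic_weight(t, max_iter, switch_frac=0.5, steepness=10.0):
    """Gradual schedule: smooth S-curve from ~0 to ~1 centred at switch_frac."""
    x = t / max_iter - switch_frac
    return 1.0 / (1.0 + math.exp(-steepness * x))
```

`steepness` sets how gradual the ramp is: small values spread the transition across most of the run, and large values approach the abrupt step. To make the schedule adaptive, `t / max_iter` can be replaced by any progress measure in [0, 1], such as the fraction of the evaluation budget used.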

Experimental Protocols for Calibration

Protocol 1: Benchmarking and Baseline Establishment

Objective: To establish a performance baseline for the NPDOA on standard optimization problems before applying it to complex clinical trial models.

Methodology:

- Test Suite Selection: Select a standardized set of benchmark functions, such as the CEC 2017 or CEC 2022 test suites, which include unimodal, multimodal, and composite functions [1] [3].

- Parameter Initialization: Define a baseline set of parameters for the NPDOA based on the literature or preliminary tests [1].

- Performance Metrics: Run the algorithm multiple times (e.g., 30 independent runs) for each function and record key metrics: Best Solution Found, Mean Solution Quality, Standard Deviation, and Convergence Speed.

- Comparative Analysis: Compare the results against other state-of-the-art metaheuristic algorithms (e.g., PSO, GA, WHO) using statistical tests like the Wilcoxon rank-sum test and average Friedman ranking [1] [2].
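The average Friedman ranking in the last step works in two stages. First, it ranks the algorithms on each benchmark function (1 = best mean result, with tied algorithms sharing the average rank). Then it averages those ranks across functions. The sketch below computes only the average ranks. The Friedman test statistic and any post-hoc test are left to a statistics package. Algorithm names and values in the test data are placeholders.

```python
def average_ranks(results):
    """results: dict algorithm -> list of mean errors, one per function (lower is better).
    Returns dict algorithm -> average rank across functions."""
    algos = list(results)
    n_funcs = len(next(iter(results.values())))
    totals = dict.fromkeys(algos, 0.0)
    for f in range(n_funcs):
        scores = sorted((results[a][f], a) for a in algos)
        i = 0
        while i < len(scores):
            j = i
            # Extend j across algorithms tied with scores[i] on this function.
            while j + 1 < len(scores) and scores[j + 1][0] == scores[i][0]:
                j += 1
            for k in range(i, j + 1):
                totals[scores[k][1]] += (i + j) / 2 + 1  # average 1-based rank
            i = j + 1
    return {a: totals[a] / n_funcs for a in algos}
```

With k algorithms the average ranks always sum to k(k + 1)/2. This gives a quick sanity check before the ranks are reported.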

[Figure: NPDOA Benchmarking Workflow]

Protocol 2: Sensitivity Analysis for Information Projection Parameters