Ensuring fMRI Preprocessing Pipeline Reliability: A Guide for Reproducible Research and Clinical Translation

Abstract

Functional magnetic resonance imaging (fMRI) is a cornerstone of modern neuroscience and is increasingly used to inform drug development and clinical diagnostics. However, the validity of its findings hinges on the reliability of the preprocessing pipeline, which cleans and standardizes the complex BOLD signal. This article provides a comprehensive guide for researchers and drug development professionals on establishing robust fMRI preprocessing workflows. We explore the foundational steps and common pitfalls, evaluate current methodologies from standardized tools to emerging foundation models, and present optimization strategies for specific clinical populations. Finally, we outline a framework for the quantitative validation and comparative assessment of pipelines, emphasizing metrics that enhance reproducibility and ensure findings are both statistically sound and biologically meaningful.

The Foundation of Reliable fMRI: Understanding Core Preprocessing Steps and Their Impact on Data Integrity

Functional magnetic resonance imaging (fMRI) has become a cornerstone technique for studying brain function in both basic neuroscience and clinical applications. However, the path from raw fMRI data to a scientifically valid and clinically actionable inference is fraught with methodological challenges. The reliability of the entire analytical process is fundamentally constrained by the first and most critical stage: data preprocessing. Variations in preprocessing methodologies across different software toolboxes and research groups have been identified as a major source of analytical variability, undermining the reproducibility of neuroimaging findings [1]. This application note examines the intrinsic link between preprocessing pipeline reliability and reproducible inference, framing the discussion within the context of a broader thesis on fMRI preprocessing pipeline reliability research. We explore the requirements, methodologies, and practical implementations for achieving standardized, robust preprocessing workflows that can support both scientific discovery and clinical decision-making.

The Standardization Imperative: From Analytical Variability to Reproducible Workflows

The Challenge of Analytical Variability

The neuroimaging field has produced a diverse ecosystem of software tools (e.g., AFNI, FreeSurfer, FSL, SPM) with varying implementations of common processing steps [1]. This methodological richness, while beneficial for knowledge formalization and accessibility, has gradually revealed a significant drawback: methodological variability has become an obstacle to obtaining reliable results and interpretations [1]. The Neuroimaging Analysis Replication and Prediction Study (NARPS) starkly illustrated this problem when 70 teams of fMRI experts analyzed the same dataset to test identical hypotheses [1]. The results demonstrated poor agreement in conclusions across teams, with methodological variability identified as the core source of divergent results [1].

Standardization as a Solution

Within classical test theory, standardization emerges as a powerful approach to enhance measurement reliability by reducing sources of variability relating to the measurement instrumentation [1]. For fMRI preprocessing, this involves strictly predetermining all experimental choices and committing to a single, fixed workflow. Standardized preprocessing offers several benefits for reliability and reproducibility:

- Reduced analytical variability: By limiting the domain of coexisting analytical alternatives, standardization constrains the multiverse of possible pipelines that must be traversed in mapping result variability [1].

- Improved comparability: Standardized outputs facilitate comparison between studies conducted at different sites, by different sponsors, and with different molecules [2].

- Enhanced quality control: Consistent workflows enable systematic quality assessment and validation across diverse datasets [3].

However, standardization is not without trade-offs. A reliable measure is not necessarily "valid," and standardization may enforce specific assumptions about the data that introduce biases [1]. The challenge lies in developing standardized approaches that maintain robustness across data diversity while preserving flexibility for legitimate methodological variations required by specific research questions.

Quantitative Evidence: Measuring the Impact of Pipeline Reliability

Reproducibility Metrics for fMRI Pipelines

The evaluation of preprocessing pipeline performance requires robust metrics that capture different aspects of reliability. The NPAIRS split-half resampling framework provides prediction and reproducibility metrics that enable empirical optimization of pipeline components [4]. Studies utilizing this approach have demonstrated that both prediction and reproducibility metrics are required for pipeline optimization and often yield somewhat different results, highlighting the multi-faceted nature of pipeline reliability [4].
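As a minimal sketch of the split-half reproducibility idea, the Python below assumes two statistical maps (for example, GLM t-maps) already estimated from two independent halves of a dataset. The function name, the z-scoring, and the synthetic data are illustrative assumptions rather than the NPAIRS reference implementation, and the prediction metric, which needs a trained predictive model, is left out.

```python
import numpy as np


def split_half_reproducibility(map_a: np.ndarray, map_b: np.ndarray):
    """Return (r, rspm_z) for two statistical maps from independent split halves.

    r is the voxelwise Pearson correlation between the maps. rspm_z projects each
    standardized voxel pair onto the major axis of the scatter plot (signal) and
    divides by the spread along the minor axis (noise).
    """
    a = (map_a.ravel() - map_a.mean()) / map_a.std()
    b = (map_b.ravel() - map_b.mean()) / map_b.std()
    r = float(np.corrcoef(a, b)[0, 1])

    # For two unit-variance maps the scatter-plot axes are the identity line
    # (signal) and the line orthogonal to it (noise), assuming r > 0.
    signal = (a + b) / np.sqrt(2.0)
    noise = (a - b) / np.sqrt(2.0)
    rspm_z = signal / noise.std()  # undefined if the two maps are identical
    return r, rspm_z.reshape(map_a.shape)


# Synthetic example: one "true" map observed twice with independent noise.
rng = np.random.default_rng(0)
true_map = rng.normal(size=(10, 10, 10))
half_1 = true_map + 0.5 * rng.normal(size=true_map.shape)
half_2 = true_map + 0.5 * rng.normal(size=true_map.shape)
r, rspm = split_half_reproducibility(half_1, half_2)
print(f"split-half reproducibility r = {r:.2f}")
```

In NPAIRS this calculation is repeated over many random splits and paired with a prediction metric, so candidate pipelines can be compared on both reproducibility and prediction.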

Single-Subject Reproducibility in Clinical Context

For clinical applications, single-subject reproducibility is particularly critical, because clinicians make decisions about individual patients rather than group averages. Established test-retest reliability guidelines based on the intraclass correlation coefficient (ICC) interpret values below 0.40 as poor, 0.40–0.59 as fair, 0.60–0.74 as good, and 0.75 or above as excellent [5]. For scientific purposes, a test-retest reliability of at least 0.40 (fair) is suggested, whereas clinical applications require at least 0.75 (excellent) [5].
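These bands can be applied to an ICC computed from repeated sessions. The sketch below assumes a subjects × sessions matrix holding one summary value per scan (for example, an ROI effect size) and uses the ICC(2,1) form (two-way random effects, absolute agreement, single measurement). The ICC variant used in [5] is not specified here, so ICC(2,1) and the function names are illustrative choices.

```python
import numpy as np


def icc_2_1(scores: np.ndarray) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    scores has shape (n_subjects, k_sessions).
    """
    y = np.asarray(scores, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_subjects = k * np.sum((y.mean(axis=1) - grand) ** 2)  # between subjects
    ss_sessions = n * np.sum((y.mean(axis=0) - grand) ** 2)  # between sessions
    ss_error = np.sum((y - grand) ** 2) - ss_subjects - ss_sessions
    ms_subjects = ss_subjects / (n - 1)
    ms_sessions = ss_sessions / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return float((ms_subjects - ms_error)
                 / (ms_subjects + (k - 1) * ms_error + k * (ms_sessions - ms_error) / n))


def reliability_band(icc: float) -> str:
    """Map an ICC onto the poor / fair / good / excellent bands described above."""
    if icc < 0.40:
        return "poor"
    if icc < 0.60:
        return "fair"
    if icc < 0.75:
        return "good"
    return "excellent"


# Two sessions per subject; session 2 is uniformly 1 unit higher than session 1.
scores = np.array([[1.0, 2.0], [2.0, 3.0], [3.0, 4.0]])
icc = icc_2_1(scores)
print(round(icc, 3), reliability_band(icc))  # 0.667 good
```

Because ICC(2,1) measures absolute agreement, the uniform session offset lowers it to 0.667; a consistency-type ICC(3,1) would ignore the offset and return 1.0 for the same data, so the variant should be reported alongside the value.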

Table 1: Single-Subject Reproducibility Improvements with Optimized Filtering

| Pipeline Type | Time Course Reproducibility (r) | Connectivity Correlation (r) | Clinical Applicability |
|---|---|---|---|
| Conventional SPM Pipeline | 0.26 | 0.44 | Not suitable (Poor) |
| Data-Driven SG Filter Framework | 0.41 | 0.54 | Potential (Fair) |
| Improvement | +57.7% | +22.7% | Poor → Fair |

Data from [5] show that conventional preprocessing pipelines yield a single-subject time course reproducibility of only r = 0.26, far below the threshold for clinical utility [5]. Implementing a data-driven Savitzky-Golay (SG) filter framework raises the average reproducibility correlation to r = 0.41, a 57.7% improvement that brings single-subject reproducibility to a "fair" level under the established guidelines [5]. This improvement is substantial, but it also highlights the gap that remains before fMRI reaches the "excellent" reliability (ICC ≥ 0.75) required for routine clinical use [5].

Foundation Models and Reproducibility

Recent advances in foundation models for fMRI analysis offer promising approaches to enhance reproducibility through large-scale pre-training. The NeuroSTORM model, pre-trained on 28.65 million fMRI frames from over 50,000 subjects, demonstrates how standardized representation learning can achieve consistent performance across diverse downstream tasks including age and gender prediction, phenotype prediction, and disease diagnosis [6]. By learning generalizable representations directly from 4D fMRI volumes, such models reduce sensitivity to acquisition variations and mitigate variability introduced by preprocessing pipelines [6].

Protocols for Pipeline Optimization and Validation

Protocol 1: Savitzky-Golay Filter Optimization for Enhanced Single-Subject Reproducibility

Background: Single-subject fMRI time course reproducibility is critical for clinical applications but remains limited in conventional pipelines. This protocol outlines a method for optimizing Savitzky-Golay (SG) filter parameters to enhance reproducibility [5].

Materials:

- fMRI data from a working memory task (e.g., Sternberg tasks)

- FreeSurfer/FS-FAST pipelines for preprocessing

- MATLAB with the custom CleanBrain code (https://github.com/hinata2305/CleanBrain)

Procedure:

Data Acquisition and Preprocessing:

- Acquire fMRI data using a block-designed working memory task (e.g., verbal and spatial Sternberg tasks)

- Perform initial preprocessing using FreeSurfer's recon-all pipeline for segmentation

- Co-register the 3D gray-matter time courses to the 2D fsaverage surface space using FS-FAST spherical alignment

- Extract time courses from 34 working-memory-related ROIs defined by a meta-analysis

Empirical Predictor Time Course Generation:

- Calculate a subject-specific hemodynamic response function (HRF) by averaging task-related signal changes in the time course

- Use this empirical HRF rather than a canonical HRF to account for individual variations in BOLD expression

Parameter Optimization:

- Define optimization targets: maximize correlation between filtered time course and empirical predictor while maintaining autocorrelations within predefined limits

- Use a brute-force search to test combinations of SG filter parameters (window size, polynomial order)

- Set autocorrelation limits based on the empirical predictor time course

Validation:

- Apply optimized SG filters to a distinct cognitive experiment

- Assess improvement in test-retest reliability of individual subject time courses

- Evaluate enhancement in detectable connectivity

- Verify that residual noise time courses do not contain task-related frequency bands

Expected Outcomes: Implementation of this protocol typically improves average time course reproducibility from r = 0.26 to r = 0.41 and connectivity correlation from r = 0.44 to r = 0.54 [5].
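The parameter-optimization step can be sketched as a grid search with SciPy's `savgol_filter`. This is not the CleanBrain code: the helper names, the block-averaged empirical predictor, and the rule that a filter is rejected when its lag-1 autocorrelation exceeds the predictor's by more than a fixed margin are assumptions made for illustration.

```python
import itertools

import numpy as np
from scipy.signal import savgol_filter


def lag1_autocorr(x: np.ndarray) -> float:
    """Lag-1 autocorrelation of a 1-D time course."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    return float(np.dot(x[:-1], x[1:]) / np.dot(x, x))


def empirical_predictor(ts: np.ndarray, cycle_len: int, n_cycles: int) -> np.ndarray:
    """Hypothetical helper: average the response over repeated task cycles and tile it."""
    cycle = ts[: cycle_len * n_cycles].reshape(n_cycles, cycle_len).mean(axis=0)
    return np.tile(cycle, n_cycles)


def optimize_sg(ts, predictor, windows, orders, ac_margin=0.1):
    """Brute-force search over (window, polyorder) for the SG output that best
    correlates with the predictor. Settings whose lag-1 autocorrelation exceeds
    the predictor's by more than ac_margin are rejected (assumed limit rule)."""
    ac_limit = lag1_autocorr(predictor) + ac_margin
    best = None
    for window, order in itertools.product(windows, orders):
        if window % 2 == 0 or order >= window or window > len(ts):
            continue  # keep windows odd; polyorder must be below the window length
        filtered = savgol_filter(ts, window_length=window, polyorder=order)
        if lag1_autocorr(filtered) > ac_limit:
            continue  # over-smoothed: autocorrelation outside the allowed limit
        r = float(np.corrcoef(filtered, predictor)[0, 1])
        if best is None or r > best[0]:
            best = (r, window, order)
    return best  # (r, window_length, polyorder), or None if nothing passes


# Synthetic block design: 8 cycles of 10 task + 10 rest volumes plus white noise.
rng = np.random.default_rng(1)
cycle_len, n_cycles = 20, 8
task = np.tile(np.r_[np.ones(10), np.zeros(10)], n_cycles)
ts = task + rng.normal(scale=0.8, size=task.size)
predictor = empirical_predictor(ts, cycle_len, n_cycles)
best = optimize_sg(ts, predictor, windows=range(5, 32), orders=range(1, 5))
print(best)
```

Because the predictor is derived from the same run, the selected parameters still need to be checked on a separate experiment, as the validation step of the protocol requires.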

Protocol 2: Foundation Model Pre-training for Generalizable fMRI Analysis

Background: Foundation models represent a paradigm-shifting approach for enhancing reproducibility through large-scale pre-training and adaptable architectures. This protocol outlines the pre-training procedure for the NeuroSTORM foundation model [6].

Materials:

- Large-scale fMRI datasets (UK Biobank, ABCD, HCP-YA, HCP-A, HCP-D)

- Computational resources capable of handling 4D fMRI volumes (up to 10^6 voxels per scan)

- PyTorch framework with custom NeuroSTORM implementation (github.com/CUHK-AIM-Group/NeuroSTORM)

Procedure:

Data Curation:

- Assemble a diverse corpus integrating multiple large-scale neuroimaging datasets

- Ensure data spans diverse demographics (ages 5-100), clinical conditions, and acquisition protocols

- Preprocess all data following BIDS standards and consistent preprocessing protocols

Model Architecture Implementation:

- Implement a Shifted-Window Mamba (SWM) backbone combining linear-time state-space modeling with shifted-window mechanisms

- Design architecture to efficiently process 4D fMRI volumes while reducing computational complexity and GPU memory usage

Pre-training Strategy:

- Apply Spatiotemporal Redundancy Dropout (STRD) module during pre-training to learn inherent characteristics in fMRI data

- Use self-supervised learning objectives to capture noise-resilient patterns

- Employ multi-task learning across different fMRI paradigms (resting-state, task-based)

Downstream Adaptation:

- Implement Task-specific Prompt Tuning (TPT) strategy for fine-tuning on specific applications

- Train only a small set of task-specific parameters when adapting to new tasks

- Validate across multiple downstream tasks: age/gender prediction, phenotype prediction, disease diagnosis, fMRI retrieval, tfMRI state classification

Expected Outcomes: A general-purpose fMRI foundation model that achieves state-of-the-art performance across diverse tasks, with enhanced reproducibility and transferability across populations and acquisition protocols [6].

Table 2: Key Research Reagents and Computational Resources for fMRI Pipeline Development

| Resource | Type | Function | Access |
|---|---|---|---|
| fMRIflows | Software Pipeline | Fully automatic neuroimaging pipelines for fMRI analysis, performing standardized preprocessing, 1st- and 2nd-level univariate and multivariate analyses | https://github.com/miykael/fmriflows [3] |
| NeuroSTORM | Foundation Model | General-purpose fMRI analysis through large-scale pre-training, enabling enhanced reproducibility and transferability | https://github.com/CUHK-AIM-Group/NeuroSTORM [6] |
| BIDS Standard | Data Standard | Consistent framework for structuring data directories, naming conventions, and metadata specifications to maximize shareability | https://bids.neuroimaging.io/ [1] |
| fMRIPrep | Software Pipeline | Robust automated fMRI preprocessing pipeline with BIDS compliance, generating quality control measures | https://fmriprep.org/ [7] [1] |
| CleanBrain | MATLAB Package | Implementation of data-driven SG filter framework for enhancing single-subject time course reproducibility | https://github.com/hinata2305/CleanBrain [5] |
| OpenNeuro | Data Repository | Platform for sharing BIDS-formatted neuroimaging data, enabling testing of robustness across hundreds of datasets | https://openneuro.org/ [3] [1] |

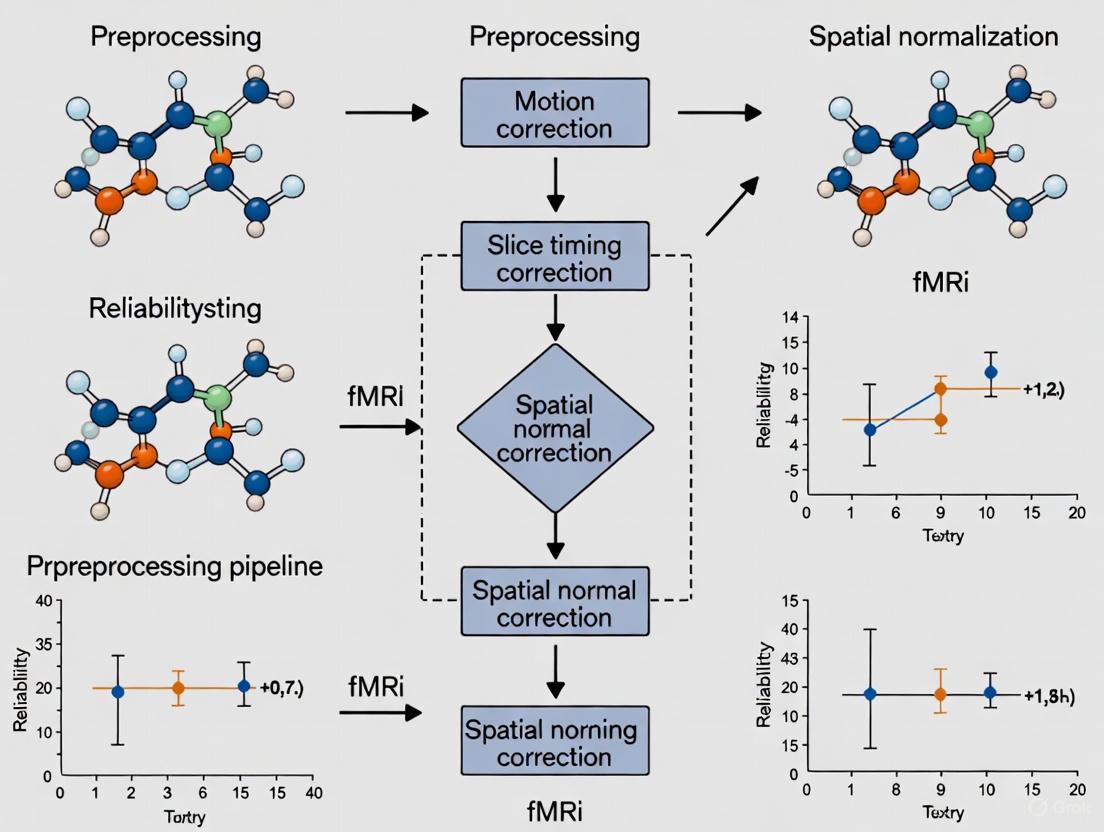

Visualizing the Preprocessing Reliability Framework

Relationship Between Pipeline Standardization and Inference Reliability

fMRI Pipeline Optimization and Validation Workflow

Application in Drug Development and Clinical Translation

fMRI in the Drug Development Pipeline

The reliability of fMRI preprocessing pipelines has particular significance in drug development, where functional neuroimaging has potential applications across multiple phases:

- Phase 0/1: Detecting functional CNS effects of pharmacological treatment in appropriate brain regions, providing indirect evidence of target engagement [2].

- Phase 2/3: Demonstrating normalization of disease-related fMRI signals at one or a few dose levels, providing an objective demonstration of disease modification [2].

For regulatory acceptance, fMRI readouts must be both reproducible and modifiable by pharmacological agents [2]. The high burden of proof for biomarker qualification requires rigorous characterization of precision and reproducibility, which directly depends on preprocessing pipeline reliability [2]. Currently, no fMRI biomarkers have been fully qualified by regulatory agencies, though initiatives like the European Autism Interventions project have requested qualification of fMRI biomarkers for stratifying autism spectrum disorder populations [2].

Clinical Applicability and Current Limitations

Despite technical advances, the clinical applicability of fMRI remains constrained by reliability limitations. A recent study concluded that roughly 10-30% of the population may benefit from optimized fMRI pipelines in a clinical setting, while this number was negligible for conventional pipelines [5]. This highlights both the potential value of pipeline optimization and the substantial work still required to make fMRI clinically viable for broader populations.

For presurgical mapping, a meta-analysis demonstrated that conducting fMRI mapping prior to surgical procedures reduces the likelihood of functional deterioration afterward (odds ratio: 0.25; 95% CI: 0.12, 0.53; P < .001) [5]. This evidence supports the clinical value of fMRI when properly implemented, underscoring the importance of reliable preprocessing pipelines for generating clinically actionable results.

The critical link between preprocessing pipeline reliability and reproducible inference in fMRI analysis cannot be overstated. As the field moves toward more clinical applications and larger-scale studies, standardization efforts through initiatives like BIDS, NiPreps, and foundation models offer promising pathways to enhanced reproducibility [6] [1]. The quantitative evidence presented demonstrates that methodical optimization of preprocessing components can substantially improve single-subject reproducibility, though significant gaps remain before fMRI reaches the reliability standards required for routine clinical use [5].

Future developments in fMRI pipeline reliability research should focus on several key areas: (1) enhanced computational efficiency to enable more sophisticated processing on large-scale datasets; (2) improved adaptability across diverse populations, from infancy to old age [7]; (3) more rigorous validation metrics that capture real-world clinical utility; and (4) greater integration with artificial intelligence approaches that can learn robust representations from large, multi-site datasets [6]. By addressing these challenges through collaborative, open-source development of standardized preprocessing tools, the neuroimaging community can strengthen the foundation upon which reproducible scientific inference and clinical decision-making are built.

A Step-by-Step Deconstruction of the Standard Preprocessing Workflow

Functional Magnetic Resonance Imaging (fMRI) has revolutionized our ability to non-invasively study brain function and connectivity. The preprocessing of raw fMRI data constitutes an essential foundation for all subsequent neurological and clinical inferences, as it transforms noisy, artifact-laden raw signals into standardized, analyzable data. The inherent complexity of fMRI data, which captures spontaneous blood oxygen-level dependent (BOLD) signals alongside numerous non-neuronal contributions, necessitates a rigorous preprocessing workflow to ensure valid scientific conclusions [8]. Within the broader context of fMRI preprocessing pipeline reliability research, this protocol deconstructs the standard workflow, emphasizing how each step contributes to the enhancement of data quality, reproducibility, and ultimately, the validity of neuroscientific and clinical findings. The establishment of robust, standardized protocols is particularly crucial for multi-site studies and clinical applications, such as drug development, where consistent measurement across time and location is paramount for detecting subtle treatment effects [9] [10].

The neuroimaging field has developed several sophisticated software packages to address the challenges of fMRI preprocessing. While implementations differ, they converge on a common set of objectives: removing unwanted artifacts, correcting for anatomical and acquisition-based distortions, and transforming data into a standard coordinate system for group-level analysis. The following diagram illustrates the logical sequence of a standard, volume-based preprocessing workflow, from raw data input to a preprocessed output ready for statistical analysis.

Figure 1: A standard volume-based fMRI preprocessing workflow. The yellow start node indicates raw data input, green nodes represent core preprocessing steps, red ellipses indicate optional or conditional data inputs, and the blue end node signifies the final preprocessed data ready for analysis.

Several major software pipelines implement this standard workflow, each with distinct strengths, methodological approaches, and suitability for different research contexts. The table below provides a structured comparison of these widely-used tools.

Table 1: Comparative Analysis of Major fMRI Preprocessing Pipelines

| Pipeline Name | Core Methodology | Primary Output Space | Key Advantages | Typical Use Cases |
|---|---|---|---|---|
| fMRIPrep [11] | Analysis-agnostic, robust integration of best-in-breed tools (ANTs, FSL, FreeSurfer) | Volume & Surface | High reproducibility, minimal manual intervention, less uncontrolled spatial smoothness | Diverse fMRI data; large-scale, reproducible studies |
| CONN - Default Pipeline [12] | SPM12-based with realignment, unwarp, slice-time correction, direct normalization | Volume | User-friendly GUI, integrated denoising and connectivity analysis | Volume-based functional connectivity studies |
| FuNP [8] | Fusion of AFNI, FSL, FreeSurfer, Workbench components | Volume & Surface | Incorporates recent methodological developments, user-friendly GUI | Studies requiring combined volume/surface analysis |
| DeepPrep [13] | Replaces time-consuming steps (e.g., registration) with deep learning models | Volume & Surface | Dramatically reduced computation time (minutes vs. hours) | Rapid processing of large datasets; studies leveraging AI |
| HALFpipe [14] | Semi-automated pipeline based on fMRIPrep, designed for distributed analysis | Volume & Surface | Standardized for ENIGMA consortium; enables meta-analyses without raw data sharing | Large-scale, multi-site consortium studies |

Detailed Protocol: Step-by-Step Deconstruction

Functional Realignment and Motion Correction

Purpose: To correct for head motion during the scanning session, which is a major source of artifact and spurious correlations in functional connectivity MRI networks [11].

Detailed Methodology: The functional time-series is realigned using a rigid-body registration where all scans are coregistered and resampled to a reference image (typically the first scan of the first session) using b-spline interpolation [12]. As part of this step, the realign & unwarp procedure in SPM12 also estimates the derivatives of the deformation field with respect to head movement. This addresses susceptibility-distortion-by-motion interactions, a key factor in improving data quality. When a double-echo sequence is available, the field inhomogeneity (fieldmap) inside the scanner is estimated and used for Susceptibility Distortion Correction (SDC), resampling the functional data along the phase-encoded direction to correct absolute deformation [12].

Outputs: The primary outputs are the realigned functional images, a new reference image (the average across all scans after realignment), and estimated motion parameters. These motion parameters (typically a .txt file with rp_ prefix) are critical as they are used for outlier identification in subsequent steps and as nuisance regressors during denoising [12].

Slice-Timing Correction

Purpose: To correct for the temporal misalignment between different slices introduced by the sequential nature of the fMRI acquisition protocol.

Detailed Methodology: Slice-timing correction (STC) is performed using sinc-interpolation to time-shift and resample the signal from each slice to match the time of a single reference slice (usually the middle of the acquisition time, TA). The specific slice acquisition order (ascending, interleaved, etc.) must be specified by the user or read automatically from the sidecar .json file in a BIDS-formatted dataset [12].

Outputs: The STC-corrected functional data, typically stored with an 'a' filename prefix in SPM-based pipelines [12].
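Sinc interpolation of a uniformly sampled series can be implemented as a phase shift in the Fourier domain. The sketch below moves one voxel's time series from its slice onset (in a BIDS dataset this would come from the `SliceTiming` entry for that slice) to a chosen reference time. It assumes the series is periodic over the run; production tools may add padding or other edge handling that is omitted here, and the function name is illustrative.

```python
import numpy as np


def shift_to_reference(ts: np.ndarray, tr: float, slice_time: float, ref_time: float) -> np.ndarray:
    """Resample a series acquired at n*tr + slice_time onto n*tr + ref_time.

    Multiplying the spectrum by exp(2*pi*i*f*shift) is band-limited (sinc)
    interpolation, assuming the signal is periodic over the run.
    """
    n = ts.shape[-1]
    freqs = np.fft.rfftfreq(n, d=tr)        # frequencies in Hz
    shift = ref_time - slice_time           # seconds to move each sample
    spectrum = np.fft.rfft(ts, axis=-1) * np.exp(2j * np.pi * freqs * shift)
    return np.fft.irfft(spectrum, n=n, axis=-1)


# A slice acquired 0.5 s into a 2 s TR, corrected to a 1.0 s reference time.
tr, n_vols = 2.0, 64
onsets = np.arange(n_vols) * tr
freq = 4 / (n_vols * tr)                    # exactly 4 cycles over the run
acquired = np.sin(2 * np.pi * freq * (onsets + 0.5))
corrected = shift_to_reference(acquired, tr, slice_time=0.5, ref_time=1.0)
expected = np.sin(2 * np.pi * freq * (onsets + 1.0))
print(np.max(np.abs(corrected - expected)))
```

For a full volume, the same function would be applied to every voxel of each slice with that slice's own onset time.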

Outlier Identification ("Scrubbing")

Purpose: To identify and flag individual volume acquisitions (scans) that are contaminated by excessive motion or abrupt global signal changes.

Detailed Methodology: Potential outlier scans are identified using framewise displacement (FD) and global BOLD signal changes. A common threshold, as implemented in CONN, flags acquisitions with FD above 0.9 mm or global BOLD signal changes above 5 standard deviations [12]. In CONN, framewise displacement is computed as the largest displacement among six control points placed at the centers of the faces of a bounding box around the brain. Flagged scans are not removed immediately; they are later used to create a "scrubbing" regressor for denoising, or the volumes can be removed from the analysis outright.

Outputs: A list of potential outliers (imported as a 'scrubbing' first-level covariate) and a file containing scan-to-scan global BOLD change and head-motion measures for quality control (QC) [12].
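The CONN-style outlier rule can be sketched as follows. The 140 × 180 × 115 mm box size, the rotation order in `rigid_matrix`, the plain z-score of the global-signal change, and all function names are assumptions for illustration; the CONN documentation should be consulted for its exact conventions.

```python
import numpy as np

# Assumed half-extents (mm) of the bounding box; the six control points sit at
# the centres of its faces.
HALF_EXTENTS = np.array([70.0, 90.0, 57.5])
CONTROL_POINTS = np.vstack([np.diag(HALF_EXTENTS), -np.diag(HALF_EXTENTS)])  # (6, 3)


def rigid_matrix(params) -> np.ndarray:
    """4x4 rigid-body matrix from (tx, ty, tz) in mm and (rx, ry, rz) in radians.
    The rotation order Rz @ Ry @ Rx is an assumption; packages differ."""
    tx, ty, tz, rx, ry, rz = params
    cx, sx, cy, sy = np.cos(rx), np.sin(rx), np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    rot_x = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    rot_y = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    rot_z = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    m = np.eye(4)
    m[:3, :3] = rot_z @ rot_y @ rot_x
    m[:3, 3] = (tx, ty, tz)
    return m


def framewise_displacement(motion: np.ndarray) -> np.ndarray:
    """Largest frame-to-frame displacement (mm) among the six control points."""
    homog = np.c_[CONTROL_POINTS, np.ones(len(CONTROL_POINTS))]
    positions = np.stack([(homog @ rigid_matrix(p).T)[:, :3] for p in motion])  # (T, 6, 3)
    steps = np.linalg.norm(np.diff(positions, axis=0), axis=2)                 # (T-1, 6)
    return np.concatenate([[0.0], steps.max(axis=1)])


def flag_outliers(motion, global_signal, fd_thresh=0.9, gs_thresh=5.0):
    """True where FD exceeds fd_thresh or the z-scored scan-to-scan change in
    the global signal exceeds gs_thresh in absolute value."""
    fd = framewise_displacement(motion)
    dgs = np.concatenate([[0.0], np.diff(global_signal)])
    sd = dgs.std()
    z = (dgs - dgs.mean()) / sd if sd > 0 else np.zeros_like(dgs)
    return (fd > fd_thresh) | (np.abs(z) > gs_thresh)


motion = np.zeros((5, 6))
motion[2, 0] = 1.0  # 1 mm x-translation in the third volume only
fd = framewise_displacement(motion)
flags = flag_outliers(motion, global_signal=np.full(5, 100.0))
print(fd, flags)
```

A single displaced volume raises FD on both the transition into it and the transition out of it, which is why two consecutive scans are flagged in this example.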

Coregistration and Spatial Normalization

Purpose: To align the functional data with the subject's high-resolution anatomical image and subsequently warp both into a standard stereotaxic space (e.g., MNI) to enable group-level analysis.

Detailed Methodology: This is typically a two-step process.

- Coregistration: A rigid registration aligns the mean functional image (after realignment) to the subject's T1-weighted anatomical image. This optimizes the alignment based on the boundary between gray and white matter [13].

- Spatial Normalization: The functional and anatomical data are normalized into standard MNI space. In a Direct approach (e.g., CONN's default), unified segmentation and normalization are applied separately to the functional and structural data. In an Indirect approach, often considered higher quality, the non-linear transformation is estimated from the high-resolution structural data and then applied to the functional data [12]. Deep learning pipelines such as DeepPrep use tools such as SynthMorph to perform this non-linear volumetric registration in minutes instead of hours [13].

Outputs: MNI-space functional and anatomical data, and tissue class masks (Grey Matter, White Matter, CSF) which are used to create masks for extracting signals and for denoising [12].
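The idea behind the indirect approach can be illustrated by composing transforms. In this sketch both transforms are 4 × 4 affine matrices with made-up values, and the function names are illustrative; a real normalization estimated from the T1 image is non-linear (a deformation field), so only the principle carries over: the functional-to-template mapping is built once from the coregistration and the structural normalization, and the functional data are resampled a single time.

```python
import numpy as np


def rigid_matrix(tx, ty, tz, rx, ry, rz) -> np.ndarray:
    """4x4 rigid-body matrix; rotations in radians applied as Rz @ Ry @ Rx (assumed order)."""
    cx, sx, cy, sy = np.cos(rx), np.sin(rx), np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    rot_x = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    rot_y = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    rot_z = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    m = np.eye(4)
    m[:3, :3] = rot_z @ rot_y @ rot_x
    m[:3, 3] = (tx, ty, tz)
    return m


def apply_affine(matrix: np.ndarray, points_mm) -> np.ndarray:
    """Map (N, 3) world coordinates in mm through a 4x4 matrix."""
    pts = np.atleast_2d(np.asarray(points_mm, dtype=float))
    homog = np.c_[pts, np.ones(len(pts))]
    return (homog @ matrix.T)[:, :3]


# Hypothetical estimates: functional -> T1 (rigid, from coregistration) and
# T1 -> template (an affine stand-in for the normalization estimated on the T1).
func_to_t1 = rigid_matrix(2.0, -1.0, 0.5, 0.0, 0.0, np.deg2rad(3.0))
t1_to_template = np.array([[1.05, 0.0, 0.0, -1.0],
                           [0.0, 0.98, 0.0, 2.0],
                           [0.0, 0.0, 1.02, 0.5],
                           [0.0, 0.0, 0.0, 1.0]])

# Indirect approach: compose once so the functional data are interpolated only once.
func_to_template = t1_to_template @ func_to_t1
point_func = [10.0, -20.0, 30.0]
print(apply_affine(func_to_template, point_func))
```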

Spatial Smoothing

Purpose: To increase the BOLD signal-to-noise ratio, suppress high-frequency noise, and accommodate residual anatomical variability across subjects.

Detailed Methodology: The normalized functional data is spatially convolved with a 3D Gaussian kernel. The full width at half maximum (FWHM) of the kernel is a key parameter; a common default is 8mm FWHM for volume-based analyses [12]. Surface-based pipelines perform smoothing along the cortical surface manifold rather than in 3D volume space.

Outputs: The final preprocessed, smoothed functional data, typically stored with an 's' filename prefix, ready for statistical analysis and denoising [12].
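Kernel widths are quoted as FWHM, whereas Gaussian filters are usually parameterized by the standard deviation, with FWHM = 2√(2 ln 2) σ ≈ 2.355 σ. The sketch below converts an FWHM in millimetres to a per-axis sigma in voxels and smooths each volume of a 4-D array with `scipy.ndimage.gaussian_filter`. The 2 mm isotropic voxel size and the function names are assumed examples, and package implementations may differ in details such as edge handling.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

FWHM_PER_SIGMA = 2.0 * np.sqrt(2.0 * np.log(2.0))  # about 2.3548


def fwhm_to_sigma_vox(fwhm_mm, voxel_size_mm) -> np.ndarray:
    """Convert a kernel FWHM in mm to a Gaussian sigma in voxels for each spatial axis."""
    voxel = np.broadcast_to(np.asarray(voxel_size_mm, dtype=float), (3,))
    return float(fwhm_mm) / FWHM_PER_SIGMA / voxel


def smooth_4d(bold: np.ndarray, fwhm_mm=8.0, voxel_size_mm=2.0) -> np.ndarray:
    """Smooth each volume of an (X, Y, Z, T) array; sigma 0 leaves the time axis untouched."""
    sigma = (*fwhm_to_sigma_vox(fwhm_mm, voxel_size_mm), 0.0)
    return gaussian_filter(bold, sigma=sigma)


bold = np.zeros((41, 41, 41, 2))
bold[20, 20, 20, 0] = 1.0  # single-voxel impulse in the first volume
smoothed = smooth_4d(bold, fwhm_mm=8.0, voxel_size_mm=2.0)
print(fwhm_to_sigma_vox(8.0, 2.0), smoothed[..., 0].sum())
```

Smoothing an impulse spreads its value over neighbouring voxels while preserving the total, and the second volume stays untouched because no filtering is applied along time.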

The Scientist's Toolkit: Essential Research Reagents

A successful preprocessing experiment relies on a suite of software tools and data resources. The following table details the key "research reagents" required for implementing a standard fMRI preprocessing workflow.

Table 2: Essential Materials and Software Tools for fMRI Preprocessing

| Item Name | Function/Purpose | Specifications & Alternatives |
|---|---|---|
| fMRIPrep [11] [15] | A robust, analysis-agnostic pipeline for preprocessing diverse fMRI data. Ensures reproducibility and minimizes manual intervention. | Version 23.1.0+. Alternatives: CONN Toolbox, SPM. |
| Reference Atlas [12] | Standard brain template for spatial normalization, enabling cross-subject and cross-study comparison. | MNI152 (ICBM 2009b Non-linear Symmetric). Alternatives: Colin27, fsaverage for surface-based analysis. |
| Tissue Probability Maps (TPMs) [12] | Prior maps of gray matter, white matter, and CSF used to guide the segmentation of structural and functional images. | Default TPMs from SPM12 or FSL. |
| FieldMap Data [12] | Optional but recommended data to estimate and correct for susceptibility-induced distortions (geometric distortions and signal loss). | Requires specific sequence: double-echo (magnitude and phase-difference images) or pre-computed fieldmap in Hz. |
| Quality Control Metrics [11] [8] | Quantitative measures to assess the success of preprocessing and identify potential data quality issues. | Framewise Displacement, Global Signal Change, Image Quality Metrics (IQMs) from MRIQC. |

Reliability Considerations and Protocol Validation

The reliability of fMRI preprocessing pipelines is not merely a technical concern but a fundamental prerequisite for clinical translation. Poor test-retest reliability, often quantified by low intraclass correlation coefficients (ICCs), can undermine the detection of true biological effects, including those induced by therapeutic interventions [10]. Several strategies can optimize reliability:

- Pipeline Robustness: Using standardized, automated pipelines like fMRIPrep reduces uncontrolled variability introduced by ad-hoc workflows and manual intervention, directly enhancing reproducibility [11].

- Handling Special Populations: Preprocessing must be adapted for specific clinical populations. For example, in stroke patients, a lesion-specific pipeline that accounts for brain lesions when computing tissue masks and incorporates ICA to address lesion-driven artifacts has been shown to significantly reduce spurious connectivity [16].

- Cross-Species Standardization: The principles of standardization extend to animal models. Protocols for rodent fMRI that are generalizable across laboratories and scanner platforms facilitate the acquisition of large, comparable datasets essential for uncovering often small effects, thereby improving the reliability of preclinical findings [9].

The validation of any preprocessing protocol should include a quantitative quality control step. This involves calculating image quality metrics (IQMs) such as framewise displacement, signal-to-noise ratio (SNR), and contrast-to-noise ratio (CNR), and comparing resting-state networks (RSNs) obtained with the pipeline against pre-defined canonical networks to ensure biological validity [8]. By rigorously deconstructing and standardizing each step of the preprocessing workflow, researchers can significantly enhance the reliability of their fMRI data, paving the way for more robust and reproducible discoveries in basic neuroscience and clinical drug development.
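Two of these checks are simple to compute directly. The sketch below gives voxelwise temporal SNR (temporal mean divided by temporal standard deviation, one common functional SNR measure) and two ways of comparing a pipeline-derived network map with a canonical template: Dice overlap after thresholding and voxelwise spatial correlation. The thresholds, map sources, and function names are assumptions that any particular QC protocol would need to fix.

```python
import numpy as np


def temporal_snr(bold: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Voxelwise tSNR (temporal mean / temporal SD) for voxels inside a 3-D mask."""
    ts = bold[mask]  # (n_voxels, T)
    sd = ts.std(axis=1)
    return np.divide(ts.mean(axis=1), sd, out=np.zeros_like(sd), where=sd > 0)


def dice(map_a, map_b, thr_a, thr_b) -> float:
    """Dice overlap of two maps after thresholding each (e.g. pipeline RSN vs template)."""
    a, b = map_a > thr_a, map_b > thr_b
    total = a.sum() + b.sum()
    return float(2.0 * np.logical_and(a, b).sum() / total) if total else 0.0


def spatial_correlation(map_a, map_b) -> float:
    """Voxelwise Pearson correlation between two unthresholded maps."""
    return float(np.corrcoef(np.ravel(map_a), np.ravel(map_b))[0, 1])


bold = np.array([9.0, 11.0, 9.0, 11.0]).reshape(1, 1, 1, 4)
mask = np.ones((1, 1, 1), dtype=bool)
print(temporal_snr(bold, mask))  # [10.]
print(dice(np.array([0.9, 0.1, 0.8]), np.array([0.7, 0.2, 0.9]), 0.5, 0.5))  # 1.0
```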

Functional magnetic resonance imaging (fMRI) has become an indispensable tool for non-invasively investigating human brain function and functional connectivity [17]. However, the blood-oxygen-level-dependent (BOLD) signal measured in fMRI has an inherently poor signal-to-noise ratio (SNR), which limits its spatiotemporal resolution, utility, and ultimate impact [17]. BOLD fluctuations related to neuronal activity are subtle, often amounting to only 1–2% of the total signal under optimal conditions, and are dwarfed by various noise sources [18]. Effectively identifying and mitigating these artifacts is therefore a prerequisite for any reliable fMRI preprocessing pipeline. The major sources of noise fall into three primary types: motion artifacts, physiological noise, and artifacts from magnetic field inhomogeneities. This application note details the characteristics of these noise sources, provides quantitative assessments of their impact, and outlines structured protocols for their mitigation to enhance the reliability of fMRI data in research and clinical applications.

Motion Artifacts

Characterization and Impact

Head motion during an fMRI scan is a major confound, causing disruptions in the BOLD signal through several mechanisms. It changes the tissue composition within a voxel, distorts the local magnetic field, and disrupts the steady-state magnetization recovery of the spins in the slices that have moved [19]. These effects lead to signal dropouts and artifactual amplitude changes that can dwarf true neuronal signals [18]. Crucially, motion artifacts can introduce spurious correlations in resting-state fMRI, with spatial patterns that can even resemble known resting-state networks like the default mode network, severely compromising the interpretation of functional connectivity [18]. The problem is exacerbated in clinical populations and pediatric studies where subject compliance may be variable.

Quantitative Metrics and Data

Table 1: Motion Artifact Impact and Mitigation Strategies

| Metric/Strategy | Description | Impact on Data | Recommended Correction |
|---|---|---|---|
| Framewise Displacement (FD) | Measures volume-to-volume head movement. | Volumes with high FD can show signal changes that exceed the true BOLD signal. | Volume Censoring: Removing high-motion volumes and adjoining frames [19]. |
| Distance-Dependent Bias | A systematic bias where correlations between signals from nearby regions are artificially enhanced. | Renders functional connectivity metrics unreliable [19]. | Structured Matrix Completion: A low-rank matrix completion approach to recover censored data [19]. |
| QC-FC Correlation | Correlates subject-level motion (e.g., mean FD) with functional connectivity edge strengths across subjects. | High values indicate that motion is inflating correlations; a key diagnostic metric [20]. | Concatenated Regression: Using all nuisance regressors in a single model, though sequential regression may offer superior test-retest reliability [20]. |
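The QC-FC diagnostic in the table above can be computed with a few lines of array code. The sketch below is a minimal illustration in Python with NumPy, assuming subject-level mean FD and a subjects-by-edges matrix of connectivity values (e.g., Fisher-z transformed correlations); the function name `qc_fc` and the synthetic contamination are hypothetical and serve only to show the computation.

```python
import numpy as np

def qc_fc(mean_fd, edges):
    """Across-subject Pearson correlation between mean FD and each edge.

    mean_fd: (n_subjects,) subject-level mean framewise displacement.
    edges:   (n_subjects, n_edges) vectorized connectivity values.
    Returns an (n_edges,) array of QC-FC correlations.
    """
    fd = (mean_fd - mean_fd.mean()) / mean_fd.std()
    z = (edges - edges.mean(axis=0)) / edges.std(axis=0)
    # The mean product of z-scores (population SD) equals Pearson's r.
    return (fd[:, None] * z).mean(axis=0)

# Synthetic example: motion leaks into the first 50 of 100 edges.
rng = np.random.default_rng(0)
n_sub, n_edges = 200, 100
mean_fd = rng.gamma(shape=2.0, scale=0.1, size=n_sub)
edges = rng.normal(size=(n_sub, n_edges))
edges[:, :50] += 3.0 * mean_fd[:, None]  # hypothetical contamination

r = qc_fc(mean_fd, edges)
print(np.median(np.abs(r[:50])), np.median(np.abs(r[50:])))
```

Contaminated edges show clearly larger absolute QC-FC values than clean ones. In real data, the distribution of QC-FC values is typically compared across candidate denoising pipelines, with lower absolute values indicating better motion removal.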

Experimental Protocol for Motion Correction

Objective: To implement a motion correction pipeline that effectively minimizes motion-induced variance without reintroducing artifacts or sacrificing data integrity.

- Data Acquisition & Realignment: Acquire fMRI data using fast imaging sequences (e.g., echo-planar imaging, EPI) to minimize within-volume motion. Perform a six-parameter rigid-body transformation to realign all volumes to a reference volume (e.g., the first volume). This assumes the head is a rigid body and corrects for translational and rotational movements between volumes [18].

- Nuisance Regression: Generate nuisance regressors from the realignment parameters. Evidence suggests that a concatenated regression approach, where all regressors (motion, physiological, etc.) are included in a single model, is more effective than sequential application at preventing the reintroduction of artifacts [20].

- Identification and Treatment of High-Motion Volumes:

- Calculate Framewise Displacement (FD) for each volume.

- Censoring (Scrubbing): Identify and remove volumes where FD exceeds a predetermined threshold (e.g., 0.2-0.5 mm). Also remove the volumes immediately preceding and following these high-motion time points to account for spin-history effects [19].

- Advanced Recovery (Optional): To address the data loss and discontinuity caused by censoring, employ a structured low-rank matrix completion method. This technique models the fMRI time series using a Linear Recurrence Relation (LRR) and fills the censored entries by exploiting the underlying structure and correlations in the data, resulting in a continuous, motion-compensated time series [19].
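The FD calculation and censoring steps above can be sketched as follows. This is a minimal Python/NumPy illustration, assuming the Power et al.-style FD definition (sum of absolute volume-to-volume changes, with rotations converted to millimetres on a 50 mm sphere) and a parameter array whose first three columns are translations in mm and last three are rotations in radians; column order and units differ between packages, so check yours. The function names are hypothetical.

```python
import numpy as np

def framewise_displacement(params, radius_mm=50.0):
    """FD from an (n_vols, 6) array of rigid-body realignment parameters.

    Assumes columns 0-2 are translations (mm) and 3-5 rotations (radians).
    """
    p = np.asarray(params, dtype=float).copy()
    p[:, 3:] *= radius_mm  # rotation in radians -> arc length in mm
    fd = np.abs(np.diff(p, axis=0)).sum(axis=1)
    return np.concatenate([[0.0], fd])  # first volume has no predecessor

def censor_mask(fd, threshold_mm=0.5, n_before=1, n_after=1):
    """Return a boolean mask of volumes to KEEP.

    Volumes with FD above threshold are dropped together with n_before
    preceding and n_after following volumes (spin-history effects).
    """
    drop = np.zeros(fd.shape[0], dtype=bool)
    for i in np.flatnonzero(fd > threshold_mm):
        drop[max(i - n_before, 0): i + n_after + 1] = True
    return ~drop

# Toy run: a 0.8 mm jump in x between volumes 4 and 5.
params = np.zeros((10, 6))
params[5:, 0] = 0.8
fd = framewise_displacement(params)
keep = censor_mask(fd, threshold_mm=0.5)
print(np.flatnonzero(~keep))  # [4 5 6]
```

The threshold and neighbour counts are parameters rather than fixed values; the 0.2-0.5 mm range quoted above is a starting point to tune to the population and acquisition.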

Physiological Artifacts

Characterization and Impact

Physiological noise originates from non-neuronal, periodic bodily processes, primarily the cardiac cycle and respiration. These processes cause small head movements, changes in chest volume that alter the magnetic field, and variations in cerebral blood flow and volume, all of which introduce structured noise into the fMRI signal [18]. In resting-state fMRI, where spontaneous neuronal signal changes are typically only 1-2%, the signal contributions from physiological noise remain a considerable fraction, posing a significant challenge for analysis [18]. Unlike thermal noise, physiological noise is structured and non-white, meaning it has a specific temporal signature and cannot be removed by simple averaging.

Quantitative Metrics and Data

Table 2: Physiological Noise Sources and Correction Tools

| Noise Source | Primary Effect | Tool/Algorithm for Mitigation | Function |
|---|---|---|---|
| Cardiac Pulsation | Rhythmic signal changes, particularly near major blood vessels. | RETROICOR | Uses physiological recordings to create noise regressors based on the phase of the cardiac and respiratory cycles. |
| Respiration | Causes magnetic field fluctuations and spin history effects. | Respiratory Volume per Time (RVT) | Models the low-frequency influence of breathing volume on the BOLD signal. |
| Non-Neuronal Global Signal | Widespread signal fluctuations of non-neuronal origin. | ICA-based Denoising (e.g., tedana) | Identifies and removes noise components deemed to be non-BOLD related based on their TE-dependence or other statistics [21]. |

Experimental Protocol for Physiological Noise Correction

Objective: To separate and remove signal components arising from cardiac and respiratory cycles from the neurally-derived BOLD signal.

- Physiological Data Recording: Simultaneously with the fMRI scan, record the subject's cardiac pulse (using a pulse oximeter) and respiration (using a pneumatic belt). These signals should be recorded at a high sampling rate (e.g., 100 Hz or more).

- Noise Model Generation:

- For cardiac and respiratory phase-based noise, use the RETROICOR algorithm. This involves determining the phase of the cardiac and respiratory cycles at each fMRI volume acquisition and generating Fourier series regressors to model the noise associated with these phases.

- For low-frequency respiratory effects, calculate the Respiratory Volume per Time (RVT) from the respiration trace and convolve it with a canonical respiratory response function to create the corresponding regressors.

- Component-Based Correction (for Multi-Echo data): If multi-echo fMRI data is available, use a tool like tedana. This approach leverages the fact that BOLD-induced signal changes exhibit a linear dependence on echo time (TE), while many physiological and motion-related artifacts do not. Independent components analysis (ICA) is performed, and components are classified and removed based on their TE-dependence [21].

- Regression: Include the physiological noise regressors from the noise model generation step in the same concatenated nuisance regression model as the motion parameters, so that they are removed from the BOLD time series together.
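The phase-based part of RETROICOR can be sketched as below. This minimal Python/NumPy illustration covers only the cardiac term: each volume's cardiac phase is interpolated linearly between the surrounding pulse peaks and expanded into a low-order Fourier series. It assumes that peak times have already been detected from the pulse-oximeter trace and that one acquisition time per volume is adequate (slice-wise phases are more accurate); the respiratory phase, which RETROICOR derives from the distribution of respiration amplitudes, is not shown. The function names are hypothetical.

```python
import numpy as np

def cardiac_phase(peak_times, sample_times):
    """Cardiac phase in [0, 2*pi) at each sample time.

    Phase rises linearly from 0 at one pulse peak to 2*pi at the next.
    Samples outside the recorded peaks reuse the nearest inter-peak interval.
    """
    peaks = np.asarray(peak_times, dtype=float)
    t = np.asarray(sample_times, dtype=float)
    idx = np.searchsorted(peaks, t, side="right") - 1
    idx = np.clip(idx, 0, len(peaks) - 2)
    t1, t2 = peaks[idx], peaks[idx + 1]
    return np.mod(2 * np.pi * (t - t1) / (t2 - t1), 2 * np.pi)

def fourier_regressors(phase, order=2):
    """Columns cos(m*phase), sin(m*phase) for m = 1..order."""
    cols = []
    for m in range(1, order + 1):
        cols += [np.cos(m * phase), np.sin(m * phase)]
    return np.column_stack(cols)

# 75 bpm pulse (peaks every 0.8 s), TR = 2 s, 20 volumes.
peaks = np.arange(0.0, 45.0, 0.8)
vol_times = np.arange(20) * 2.0
phase = cardiac_phase(peaks, vol_times)
X_card = fourier_regressors(phase, order=2)  # (20, 4) nuisance regressors
```

The columns of `X_card` would be appended to the motion regressors in the single nuisance model described above.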

Magnetic Field Inhomogeneities

Characterization and Impact

Magnetic field inhomogeneities refer to distortions in the main static magnetic field (B0) caused by variations in magnetic susceptibility at tissue interfaces (e.g., between air in sinuses and brain tissue). These inhomogeneities are significantly increased at higher magnetic field strengths (e.g., 3T and 7T) [22]. In fMRI, these distortions manifest as geometric warping, signal loss (dropouts), and blurring, particularly in regions near the sinuses and ear canals, such as the frontal and temporal lobes [23]. These artifacts compromise spatial specificity and can lead to misalignment between functional data and anatomical references.
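A back-of-the-envelope calculation shows why these distortions worsen with field strength. The sketch below uses the standard EPI relation that the displacement along the phase-encoding direction, in voxels, equals the off-resonance frequency (Hz) times the number of phase-encoding lines times the effective echo spacing (s). Because susceptibility-induced offsets are roughly fixed in ppm, the same tissue interface produces a proportionally larger offset in hertz at 7T than at 3T. The 0.5 ppm offset and the sequence parameters are illustrative assumptions, not values from the studies cited here.

```python
GAMMA_BAR_HZ_PER_T = 42.577e6  # proton gyromagnetic ratio / (2*pi), Hz/T

def offset_hz(offset_ppm, b0_tesla):
    """Convert a susceptibility-induced field offset in ppm to Hz."""
    return offset_ppm * 1e-6 * GAMMA_BAR_HZ_PER_T * b0_tesla

def pe_shift_voxels(delta_f_hz, n_pe_lines, eff_echo_spacing_s):
    """EPI displacement along the phase-encoding axis, in voxels.

    With in-plane acceleration, use the effective echo spacing
    (echo spacing divided by the acceleration factor).
    """
    return delta_f_hz * n_pe_lines * eff_echo_spacing_s

# Illustrative: 0.5 ppm offset, 96 PE lines, 0.5 ms effective echo spacing.
for b0 in (3.0, 7.0):
    df = offset_hz(0.5, b0)
    print(f"{b0:.0f}T: {df:.1f} Hz -> {pe_shift_voxels(df, 96, 0.5e-3):.1f} voxels")
```

Under these assumptions the shift is roughly 3 voxels at 3T and about 7 voxels at 7T, which is why frontal and temporal regions near air-tissue interfaces are the most affected.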

Quantitative Metrics and Data

Table 3: Distortion Correction Methods at High Magnetic Fields

| Correction Method | Principle | Performance at 7T (High-Resolution) | Key Metric Improvement |
|---|---|---|---|
| B0 Field Mapping | Acquires a map of the static magnetic field inhomogeneities and corrects the EPI data during reconstruction. | Improves cortical alignment. | Moderate improvement in Dice Coefficient (DC) and Correlation Ratio (CR) compared to no correction [23]. |
| Reverse Phase-Encoding (Reversed-PE) | Acquires two EPI volumes with opposite phase-encoding directions to estimate the distortion field. | Shows superior performance in achieving faithful anatomical alignment, especially in frontal/temporal regions [23]. | More substantial improvements in DC and CR, with the largest benefit in regions of high susceptibility [23]. |

Experimental Protocol for Distortion Correction

Objective: To correct for geometric distortions and signal loss in fMRI data caused by magnetic field inhomogeneities.

- Data Acquisition for Correction:

- Option A (Field Map): Acquire a field map. This typically involves a dual-echo gradient echo sequence that allows for the calculation of a B0 field map, which quantifies the field inhomogeneity at each voxel.

- Option B (Reverse Phase-Encoding): Acquire two additional EPI volumes (one in the Anterior-Posterior phase-encoding direction and one in the Posterior-Anterior direction) with identical parameters to the functional run. This is often the preferred method for high-resolution fMRI at ultra-high fields [23].

- Application of Correction:

- For Field Map correction, use the field map to unwarp the functional time series, correcting the geometric distortions.

- For Reverse Phase-Encoding correction, use tools such as FSL's topup to estimate the distortion field from the two opposing phase-encoding volumes and then apply this field to correct the entire functional time series.

- Validation: After correction, validate the results by inspecting the alignment of the corrected functional data with the subject's high-resolution anatomical scan. Quantitative metrics like the Dice Coefficient (DC) and Correlation Ratio (CR) can be used to assess the improvement in cortical alignment [23].
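The Dice Coefficient used for validation measures the overlap of two binary masks, DC = 2|A ∩ B| / (|A| + |B|). A minimal NumPy sketch follows, assuming the two masks (for example, a tissue or cortical mask derived from the corrected EPI and the corresponding mask from the anatomical scan) have already been resampled to the same grid; the Correlation Ratio is not shown, and the function name is hypothetical.

```python
import numpy as np

def dice(mask_a, mask_b):
    """Dice coefficient of two same-shaped binary masks (1.0 if both empty)."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0
    return 2.0 * np.logical_and(a, b).sum() / total

# Toy 1-D masks of 6 voxels each, overlapping on 4 voxels.
a = np.zeros(10, dtype=bool)
a[0:6] = True
b = np.zeros(10, dtype=bool)
b[2:8] = True
print(round(dice(a, b), 3))  # 0.667
```

Computing DC for uncorrected, field-map-corrected and reversed-PE-corrected data gives the kind of numerical comparison summarized in Table 3.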

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Tools for fMRI Noise Mitigation

| Tool/Software | Type | Primary Function in Noise Mitigation |
|---|---|---|
| NORDIC PCA | Denoising Algorithm | Suppresses thermal noise, the dominant noise source in high-resolution (e.g., 0.5 mm isotropic) fMRI, leading to major gains in tSNR and functional CNR without blurring [17]. |
| Total Variation (TV) Denoising | Denoising Algorithm | Enforces smoothness in the BOLD signal by minimizing total variation. Yields denoised multi-echo fMRI data and enables estimation of smooth, dynamic T2* maps [21]. |
| FuNP | Preprocessing Pipeline | A fully automated, wrapper software that combines components from AFNI, FSL, FreeSurfer, and Workbench to provide both volume- and surface-based preprocessing pipelines [8]. |
| tedana | Preprocessing Toolbox | Specialized for multi-echo fMRI data; uses ICA to denoise data by identifying and removing components that do not exhibit linear TE-dependence [21]. |
| Structured Matrix Completion | Advanced Motion Correction | Recovers missing entries from censored (scrubbed) fMRI time series using a low-rank prior, mitigating motion artifacts while maintaining data continuity [19]. |


Integrated Noise Mitigation Workflow

The following diagram illustrates a logical, integrated workflow for addressing the three major noise sources in a coordinated preprocessing pipeline.

Figure 1: A recommended sequential workflow for mitigating major noise sources in fMRI preprocessing. The process begins with motion correction, followed by physiological noise removal, magnetic field inhomogeneity correction, and concludes with advanced denoising techniques for final signal enhancement.

A rigorous approach to identifying and mitigating noise is fundamental to the reliability of any fMRI preprocessing pipeline. Motion, physiological processes, and magnetic field inhomogeneities represent the most significant sources of artifact that can confound the interpretation of the BOLD signal. By implementing the structured protocols and utilizing the advanced tools outlined in this document—such as motion censoring with matrix completion, model-based physiological noise regression, reverse phase-encoding distortion correction, and powerful denoising algorithms like NORDIC and Total Variation minimization—researchers can significantly enhance the quality and fidelity of their data. This, in turn, ensures more robust and reproducible results in both basic neuroscience research and clinical drug development applications.

Functional Magnetic Resonance Imaging (fMRI) has become a cornerstone of modern neuroscience, enabling non-invasive investigation of brain function and connectivity. However, the reliability of fMRI findings is fundamentally contingent upon the preprocessing pipelines used to remove noise and artifacts from the raw data. Inadequate preprocessing strategies systematically introduce spurious correlations and false activations, threatening the validity of neuroimaging research and its applications in clinical and drug development settings. This application note examines the primary sources of these artifacts, their impacts on functional connectivity and activation analyses, and provides detailed protocols for mitigating these issues within the broader context of fMRI preprocessing pipeline reliability research.

The complex nature of fMRI data, which captures endogenous blood oxygen level-dependent (BOLD) signals alongside numerous confounding factors, necessitates rigorous preprocessing before meaningful inferences can be drawn. As highlighted across multiple studies, failure to adequately address artifacts from head motion, physiological processes, and preprocessing methodologies themselves can generate spurious network connectivity and significantly distort brain-behavior relationships [24] [25] [26]. These issues are particularly pronounced in clinical populations where increased movement and pathological conditions amplify artifacts, potentially leading to erroneous conclusions about brain function and treatment effects.

Head Motion Artifacts

Head movement during fMRI acquisition introduces systematic noise that persists despite standard motion correction approaches. Even after spatial realignment and regression of motion parameters, residual motion artifacts continue to corrupt resting-state functional connectivity MRI (rs-fcMRI) data [24]. These artifacts exhibit distinctive patterns:

- Decreased long-distance correlations between brain regions

- Increased short-distance correlations in proximate brain areas

- Non-linear relationships that linear regression techniques fail to fully capture

The impact of motion is not uniform across studies. Research demonstrates that motion artifacts have particularly severe consequences for clinical populations, including patients with disorders of consciousness (DoC), where inherent limitations in compliance and increased discomfort lead to greater movement [26]. In these populations, standard preprocessing pipelines may fail to detect known networks such as the default mode network (DMN), potentially leading to incorrect conclusions about network preservation.

Surface-Based Analysis Biases

Surface-based analysis of fMRI data, while offering advantages in cortical alignment, introduces its own unique artifacts. The mapping from volumetric voxels to surface vertices creates uneven inter-vertex distances across the cortical sheet [27]. This spatial bias manifests as:

- Higher vertex density in sulci compared to gyral regions

- Stronger correlations between neighboring sulcal vertices than between gyral vertices

- Incorporation of anatomical folding information into fMRI time series

This "gyral bias" systematically distorts a range of common analyses including test-retest reliability, functional fingerprinting, parcellation approaches, and regional homogeneity measures [27]. Critically, because vertex density tracks individual cortical folding patterns, the bias introduces subject-specific anatomical information into functional connectivity measures, creating spurious correlations that can be misinterpreted as neural phenomena.

Filter-Induced Correlations

Standard preprocessing pipelines commonly employ band-pass filters (typically 0.009-0.08 Hz or 0.01-0.10 Hz) to isolate frequencies of interest in resting-state fMRI data. However, these filters artificially inflate correlation estimates between independent time series [25]. The statistical consequences are severe:

- Increased false positive rates in functional connectivity analyses

- Failure of multiple comparison corrections to fully control Type I errors

- Up to 50-60% of detected correlations in white noise signals remain significant after correction

The cyclic nature of biological signals, combined with filter-induced autocorrelation, creates a fundamental statistical challenge for rs-fMRI. Without appropriate mitigation strategies, these filters systematically amplify spurious correlations, potentially invalidating connectivity findings.
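The effect can be reproduced with simulated data. The sketch below band-pass filters pairs of independent white-noise series and counts how often their correlation passes a naive test that assumes independent samples (Fisher z, two-sided alpha = 0.05). It assumes an idealized FFT band-pass (real pipelines typically use Butterworth or similar filters), TR = 2 s and 200 volumes; these choices and the function names are illustrative, and the sketch is not a reproduction of the analysis in [25].

```python
import numpy as np

def fft_bandpass(x, tr, low=0.009, high=0.08):
    """Ideal band-pass: zero all FFT bins outside [low, high] Hz (last axis)."""
    freqs = np.fft.rfftfreq(x.shape[-1], d=tr)
    spec = np.fft.rfft(x, axis=-1)
    spec[..., (freqs < low) | (freqs > high)] = 0.0
    return np.fft.irfft(spec, n=x.shape[-1], axis=-1)

def rowwise_r(a, b):
    """Pearson correlation between matching rows of a and b."""
    a = a - a.mean(axis=1, keepdims=True)
    b = b - b.mean(axis=1, keepdims=True)
    return (a * b).sum(axis=1) / np.sqrt((a * a).sum(axis=1) * (b * b).sum(axis=1))

def naive_false_positive_rate(filtered, n_pairs=2000, n_vols=200, tr=2.0, seed=0):
    """Fraction of independent pairs declared 'significant' by a naive test."""
    rng = np.random.default_rng(seed)
    a = rng.normal(size=(n_pairs, n_vols))
    b = rng.normal(size=(n_pairs, n_vols))  # independent of a by construction
    if filtered:
        a, b = fft_bandpass(a, tr), fft_bandpass(b, tr)
    # The naive test treats all n_vols samples as independent.
    z = np.arctanh(rowwise_r(a, b)) * np.sqrt(n_vols - 3)
    return np.mean(np.abs(z) > 1.96)

print(naive_false_positive_rate(filtered=False))  # close to the nominal 0.05
print(naive_false_positive_rate(filtered=True))   # well above 0.05
```

Only a small fraction of the frequency bins survive the filter, so each filtered series carries far fewer effective degrees of freedom than its 200 samples; the naive test ignores this, and its false-positive rate rises accordingly.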

Physiological and Non-Neural Signals

fMRI signals incorporate substantial non-neural contributions from various physiological processes and scanner-related artifacts. Cardiac and respiratory cycles introduce rhythmic fluctuations, while white matter and cerebrospinal fluid signals contain non-neural information that can contaminate connectivity metrics [28] [8]. Traditional denoising approaches based on linear regression may be insufficient to remove nonlinear statistical dependencies between brain regions induced by shared noise sources [28].

Table 1: Major Sources of Spurious Connectivity in fMRI Data

| Source | Impact on Connectivity | Affected Analyses |
|---|---|---|
| Head Motion | Increases short-distance correlations; decreases long-distance correlations | Seed-based correlation, network analyses, group comparisons |
| Surface Vertex Density | Inflates sulcal correlations compared to gyral regions | Surface-based analyses, parcellation, regional homogeneity |
| Band-Pass Filtering | Artificially inflates correlation coefficients between time series | Resting-state functional connectivity, network detection |
| Physiological Noise | Introduces shared fluctuations unrelated to neural activity | All connectivity analyses, especially those without physiological monitoring |

Experimental Evidence and Quantitative Impacts

Motion Artifact Quantification

Research by Power et al. demonstrated that subject motion produces substantial changes in resting-state fcMRI timecourses despite spatial registration and motion parameter regression [24]. In their analysis of multiple cohorts, they found that:

- Framewise displacement varied significantly across age groups, with children showing higher motion parameters

- Data "scrubbing" (removing high-motion frames) produced structured changes in correlation patterns

- Short-distance correlations decreased by up to 30% after rigorous motion correction

- Medium- to long-distance correlations increased by up to 20% after motion artifact removal

The impact of motion was particularly pronounced in developmental and clinical populations, with one child cohort requiring removal of up to 58% of data frames due to excessive motion [24]. These findings highlight how motion artifacts can create spurious developmental or group differences if not adequately addressed.

Surface Analysis Bias Magnitude

The surface-based analysis bias described by Feilong et al. creates substantial distortions in connectivity metrics [27]. Their investigation revealed:

- Inter-vertex distances varied from approximately 1 mm in sulci to 3 mm at gyral crests

- Vertex areas (proportional to the square of inter-vertex distances) varied by an even greater factor

- Resting-state fMRI correlation between a vertex and its immediate neighbors showed a strong negative association with inter-vertex distance (r = -0.653, p < 0.001)

- Normalized local correlation followed individual cortical folding patterns (r = -0.500, p < 0.001 with sulcal depth)

This bias has particular significance for studies examining individual differences in connectivity, as the artifact incorporates subject-specific anatomical information into functional measures [27].

Filter-Induced Inflation of Correlations

Recent work on the statistical limitations of rsfMRI has quantified the impact of band-pass filtering on correlation inflation [25]. Key findings include:

- Standard band-pass filters (0.009-0.08 Hz) significantly increase correlation estimates between independent time series

- When applied to white noise signals, these filters result in 50-60% of correlations remaining statistically significant after multiple comparison correction

- The combination of cyclic biological signals and narrow band-pass filters creates autocorrelation that invalidates standard statistical assumptions

- Sampling rate selection critically influences the degree of bias introduced by filtering

These findings challenge the validity of many resting-state connectivity studies and emphasize the need for specialized statistical approaches that account for filter-induced artifacts.

Table 2: Quantitative Impact of Preprocessing Artifacts on Connectivity Measures

| Artifact Type | Measurement | Effect Size | Consequence |
|---|---|---|---|
| Head Motion | Change in short-distance correlations | 25-30% increase | False local network detection |
| Head Motion | Change in long-distance correlations | 15-20% decrease | Missed long-range connections |
| Surface Bias | Range of inter-vertex distances | 1 mm (sulci) to 3 mm (gyri) | Sulcal-gyral correlation differences |
| Surface Bias | Correlation vs. inter-vertex distance | r = -0.653 | Spatial sampling bias |
| Band-Pass Filter | Significant correlations in white noise | 50-60% remain significant | Inflated false positive rate |

Methodological Protocols for Artifact Mitigation

Motion Artifact Correction Protocol

Based on evidence from multiple studies, the following comprehensive motion correction protocol is recommended:

Step 1: Frame-Wise Displacement Calculation

- Compute framewise displacement (FD) from rigid body head realignment parameters

- Apply a threshold of FD > 0.2-0.5 mm to identify contaminated volumes (adjust based on data quality)

- Compute DVARS (the root mean square, across voxels, of the volume-to-volume signal change) to complement FD measures
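DVARS can be computed directly from the voxel time series. In this minimal NumPy sketch, data are arranged as (volumes, voxels) and are assumed to be brain-masked and intensity-normalized beforehand (e.g., to percent signal change), so that values are comparable across participants; the function name is hypothetical.

```python
import numpy as np

def dvars(data):
    """RMS across voxels of the volume-to-volume signal change.

    data: (n_vols, n_voxels) masked, intensity-normalized time series.
    Returns (n_vols,) with 0 for the first volume.
    """
    diff = np.diff(data, axis=0)
    return np.concatenate([[0.0], np.sqrt(np.mean(diff ** 2, axis=1))])

# Toy data: 500 voxels, 30 volumes, one corrupted volume (index 10).
rng = np.random.default_rng(0)
data = 100.0 + rng.normal(size=(30, 500))
data[10] += 5.0
d = dvars(data)
print(np.sort(np.argsort(d)[-2:]))  # [10 11]: into and out of the corrupted volume
```

Examining DVARS alongside FD is the point of the complement: volumes flagged by both traces are the strongest candidates for censoring.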

Step 2: Motion Regression

- Include 24 motion parameters (the 6 rigid-body parameters, their temporal derivatives, and the squares of both) in the regression model

- Expand motion parameters using Volterra expansion to capture nonlinear relationships
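The 24-parameter expansion and a single concatenated nuisance regression can be sketched as follows. This minimal NumPy illustration assumes backward differences as the temporal derivative (first row set to zero) and an ordinary least-squares fit with an intercept; other confounds (physiological or spike regressors) would simply be appended as extra columns. The function names are hypothetical.

```python
import numpy as np

def motion_24(params):
    """6 realignment params, their temporal derivatives, and squares of both."""
    p = np.asarray(params, dtype=float)
    deriv = np.vstack([np.zeros((1, p.shape[1])), np.diff(p, axis=0)])
    base = np.hstack([p, deriv])          # (n_vols, 12)
    return np.hstack([base, base ** 2])   # (n_vols, 24)

def regress_out(data, confounds):
    """Residuals of data (n_vols, n_voxels) after one OLS fit on all confounds.

    An intercept column is included, so the residuals are mean-centred.
    """
    X = np.column_stack([np.ones(data.shape[0]), confounds])
    beta, *_ = np.linalg.lstsq(X, data, rcond=None)
    return data - X @ beta

rng = np.random.default_rng(0)
params = rng.normal(scale=0.1, size=(120, 6))
data = rng.normal(size=(120, 50))
clean = regress_out(data, motion_24(params))
```

Putting every regressor in one model is what distinguishes the concatenated approach from sequential regression, in which later steps can reintroduce variance removed by earlier ones.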

Step 3: Data Scrubbing

- Remove identified high-motion volumes from analysis

- Replace with interpolation using adjacent low-motion frames

- Alternatively, include spike regressors for contaminated volumes
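The two options in Step 3 can be sketched as follows: a matrix of spike (one-hot) regressors, one column per flagged volume, for inclusion in the nuisance model, and gap filling of censored samples. The interpolation shown is plain linear interpolation between retained neighbours, a deliberately simple stand-in; published scrubbing pipelines may use other schemes (for example spectral interpolation). The function names are hypothetical, and `keep` is a boolean mask with True for retained volumes.

```python
import numpy as np

def spike_regressors(keep):
    """(n_vols, n_censored) one-hot columns marking each censored volume."""
    keep = np.asarray(keep, dtype=bool)
    bad = np.flatnonzero(~keep)
    spikes = np.zeros((keep.size, bad.size))
    spikes[bad, np.arange(bad.size)] = 1.0
    return spikes

def interpolate_censored(ts, keep):
    """Replace censored samples of a 1-D series by linear interpolation.

    Censored samples at either end take the nearest retained value.
    """
    keep = np.asarray(keep, dtype=bool)
    t = np.arange(ts.size)
    return np.where(keep, ts, np.interp(t, t[keep], ts[keep]))

keep = np.array([True, True, False, True, True])
ts = np.array([0.0, 1.0, 99.0, 3.0, 4.0])
print(spike_regressors(keep).ravel())  # [0. 0. 1. 0. 0.]
print(interpolate_censored(ts, keep))  # [0. 1. 2. 3. 4.]
```

In an OLS model, a spike column absorbs its volume entirely, so including it is equivalent to excluding that volume from the fit of the remaining regressors.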

Step 4: Quality Assessment

- Calculate motion summary statistics for each participant

- Exclude subjects with excessive motion (e.g., >25% of frames contaminated)

- Match motion parameters across groups in case-control studies

This comprehensive approach has been shown to significantly reduce motion-related artifacts while preserving neural signals [24] [26].

Surface Analysis Bias Mitigation Protocol

To address surface-based analysis biases, implement the following steps:

Step 1: Surface Mesh Evaluation

- Quantify inter-vertex distances across the cortical surface

- Identify regions with particularly high or low vertex density

- Consider using surface meshes with more uniform vertex spacing
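Step 1 can be sketched as computing, for each vertex, the mean length of the mesh edges it belongs to. The minimal NumPy sketch below assumes a triangle mesh given as vertex coordinates (mm) and a faces array of vertex indices, such as FreeSurfer surfaces loaded with nibabel's `nibabel.freesurfer.read_geometry`; the function name is hypothetical.

```python
import numpy as np

def mean_edge_length(coords, faces):
    """Mean length (mm) of the mesh edges incident to each vertex."""
    coords = np.asarray(coords, dtype=float)
    faces = np.asarray(faces, dtype=int)
    edges = np.vstack([faces[:, [0, 1]], faces[:, [1, 2]], faces[:, [2, 0]]])
    edges = np.unique(np.sort(edges, axis=1), axis=0)  # count shared edges once
    lengths = np.linalg.norm(coords[edges[:, 0]] - coords[edges[:, 1]], axis=1)
    total = np.zeros(len(coords))
    count = np.zeros(len(coords))
    for col in (0, 1):
        np.add.at(total, edges[:, col], lengths)
        np.add.at(count, edges[:, col], 1.0)
    return total / np.maximum(count, 1.0)

# Toy mesh: a unit square split into two triangles along one diagonal.
coords = np.array([[0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0]])
faces = np.array([[0, 1, 2], [0, 2, 3]])
d = mean_edge_length(coords, faces)
```

Mapping these values (or their squares, which track vertex area) onto the surface makes it possible to check a given mesh for the sulcal/gyral density pattern described above.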

Step 2: Spatial Smoothing Adjustment

- Account for variable vertex density when applying spatial smoothing kernels

- Adjust smoothing kernel size based on local inter-vertex distances

- Use geodesic distance-based smoothing rather than simple Gaussian kernels

Step 3: Validation with Surrogate Data

- Generate random noise time series on the surface mesh

- Calculate local correlation maps for surrogate data

- Compare empirical results to surrogate data to identify bias

Step 4: Control for Sulcal Depth

- Include sulcal depth as a covariate in group-level analyses

- Examine whether findings persist after controlling for anatomical patterns

These steps help mitigate the uneven sampling bias inherent in surface-based analyses [27].

Filtering and Statistical Correction Protocol

To address filter-induced correlations and statistical artifacts:

Step 1: Filter Design

- Adjust sampling rates to align with analyzed frequency band

- Avoid excessively narrow band-pass filters without statistical correction

- Consider alternative filtering approaches that minimize autocorrelation

Step 2: Surrogate Data Analysis

- Generate phase-randomized surrogate data with preserved autocorrelation structure

- Compare empirical correlation distributions to surrogate null distributions

- Use Fourier Transform-based surrogates to account for cyclic properties
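Phase randomization can be sketched in a few lines: keep each series' Fourier amplitudes (and hence its power spectrum and autocorrelation) but draw new random phases, then use correlations with these surrogates as a null distribution. This is a minimal NumPy sketch for a single pair of 1-D series; the function names, the number of surrogates, and the choice to randomize only one series of the pair are illustrative assumptions.

```python
import numpy as np

def phase_randomize(ts, rng):
    """Surrogate with the same Fourier amplitudes as ts but random phases."""
    n = ts.size
    spec = np.fft.rfft(ts)
    phases = rng.uniform(0.0, 2.0 * np.pi, size=spec.size)
    phases[0] = 0.0          # leave the mean (DC bin) unchanged
    if n % 2 == 0:
        phases[-1] = 0.0     # the Nyquist bin must stay real for even n
    return np.fft.irfft(spec * np.exp(1j * phases), n=n)

def surrogate_p_value(x, y, n_surrogates=1000, seed=0):
    """Two-sided p-value of corr(x, y) against phase-randomized copies of y."""
    rng = np.random.default_rng(seed)
    r_obs = np.corrcoef(x, y)[0, 1]
    null = np.array([np.corrcoef(x, phase_randomize(y, rng))[0, 1]
                     for _ in range(n_surrogates)])
    return (1 + np.sum(np.abs(null) >= abs(r_obs))) / (1 + n_surrogates)

# Smoothed (autocorrelated) series and a noisy copy of it.
rng = np.random.default_rng(1)
x = np.convolve(rng.normal(size=204), np.ones(5) / 5, mode="valid")  # length 200
y = x + rng.normal(scale=0.2, size=200)
print(surrogate_p_value(x, y))
```

Because each surrogate inherits the autocorrelation of the original series, the resulting null distribution is wider than the textbook one, which is the inflation this step is meant to absorb.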

Step 3: Pre-whitening Approaches

- Apply pre-whitening to address autocorrelation in time series

- Use autoregressive models to account for temporal dependencies

- Validate pre-whitening effectiveness with diagnostic tests
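A first-order autoregressive (AR(1)) model is the simplest version of the pre-whitening idea: estimate the lag-1 coefficient and keep the innovations. The NumPy sketch below assumes a single series and a Yule-Walker (lag-1 autocorrelation) estimate; GLM software typically uses richer noise models, and the function names are hypothetical. The residual lag-1 autocorrelation serves as a simple diagnostic test.

```python
import numpy as np

def ar1_prewhiten(x):
    """Return (innovations, phi) for an AR(1) fit to a 1-D series.

    phi is the lag-1 autocorrelation (Yule-Walker estimate); innovations are
    e_t = x_t - phi * x_{t-1}, so the first sample is dropped.
    """
    x = np.asarray(x, dtype=float) - np.mean(x)
    phi = np.dot(x[1:], x[:-1]) / np.dot(x, x)
    return x[1:] - phi * x[:-1], phi

def lag1_autocorr(x):
    x = x - x.mean()
    return np.dot(x[1:], x[:-1]) / np.dot(x, x)

# Simulate an AR(1) process with phi = 0.6.
rng = np.random.default_rng(0)
e = rng.normal(size=5000)
x = np.zeros(5000)
for t in range(1, 5000):
    x[t] = 0.6 * x[t - 1] + e[t]

resid, phi_hat = ar1_prewhiten(x)
print(round(phi_hat, 2), round(lag1_autocorr(resid), 2))
```

For connectivity, each series would be whitened with its own model before correlating; a residual lag-1 autocorrelation near zero indicates that the model has captured the temporal dependence.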

Step 4: Multiple Comparison Correction

- Implement permutation-based correction methods

- Use false discovery rate (FDR) approaches appropriate for correlated tests

- Consider network-based statistics for connectivity matrices
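False discovery rate control over a set of connectivity p-values can be sketched with the Benjamini-Hochberg step-up procedure. The optional Benjamini-Yekutieli adjustment, which remains valid under arbitrary dependence between tests at the cost of power, is included as one reasonable reading of "FDR approaches appropriate for correlated tests"; that choice and the function name are assumptions of this sketch.

```python
import numpy as np

def fdr_reject(pvals, q=0.05, arbitrary_dependence=False):
    """Boolean mask of hypotheses rejected at FDR level q.

    Benjamini-Hochberg step-up; with arbitrary_dependence=True the
    Benjamini-Yekutieli correction divides q by sum_{i=1..m} 1/i.
    """
    p = np.asarray(pvals, dtype=float)
    m = p.size
    c = np.sum(1.0 / np.arange(1, m + 1)) if arbitrary_dependence else 1.0
    order = np.argsort(p)
    thresholds = q * np.arange(1, m + 1) / (m * c)
    below = np.flatnonzero(p[order] <= thresholds)
    reject = np.zeros(m, dtype=bool)
    if below.size:
        reject[order[: below[-1] + 1]] = True  # reject up to the largest passing rank
    return reject

p = [0.01, 0.04, 0.03, 0.005]
print(fdr_reject(p))                             # [ True  True  True  True]
print(fdr_reject(p, arbitrary_dependence=True))  # [ True False False  True]
```

For a connectivity matrix, the p-values would come from the unique edges (one triangle of the matrix), so each edge is counted once.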

This statistical framework helps control for artifactual correlations while preserving true neural connectivity [25].

Diagram 1: Comprehensive motion artifact correction workflow integrating framewise displacement calculation, motion parameter regression, and data scrubbing.

Specialized Pipelines for Clinical Populations

Stroke-Specific Preprocessing Pipeline

Recent research has developed specialized preprocessing pipelines for stroke patients with brain lesions [16]. The protocol includes:

Lesion-Aware Processing:

- Account for lesions when computing tissue masks

- Implement lesion masking to prevent spurious connectivity estimates

- Adjust spatial normalization to accommodate structural abnormalities

Artifact Removal:

- Incorporate independent component analysis (ICA) to address lesion-driven artifacts

- Implement specialized denoising strategies for peri-lesional regions

- Validate connectivity measures in both lesioned and preserved networks

Validation:

- Assess pipeline performance using connectivity mean strength metrics

- Evaluate functional connectivity contrast between networks

- Verify that pipeline preserves behavioral prediction accuracy

This stroke-specific pipeline has been shown to significantly reduce spurious connectivity without impacting behavioral predictions [16].

Pipeline for Disorders of Consciousness

For patients with disorders of consciousness, the following enhanced protocol is recommended [26]:

Enhanced Motion Correction:

- Implement aggressive motion parameter regression

- Apply frame-wise exclusion with liberal thresholds

- Use global signal regression as a nuisance covariate

Physiological Noise Removal:

- Incorporate tissue-based regressors (white matter, CSF)

- Apply band-pass filtering (0.009-0.08 Hz) after regression

- Consider component-based noise correction (CompCor)
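The anatomical variant of CompCor (aCompCor) can be sketched as a principal component analysis of time series drawn from a noise mask. This minimal NumPy sketch assumes a (volumes, voxels) data matrix and a boolean mask of (typically eroded) white-matter and CSF voxels; the five components and the variance normalization are illustrative choices that differ between implementations, and the function name is hypothetical.

```python
import numpy as np

def acompcor(data, noise_mask, n_components=5):
    """Top principal component time series from noise-mask voxels.

    data: (n_vols, n_voxels); noise_mask: boolean (n_voxels,).
    Returns (n_vols, n_components) nuisance regressors.
    """
    y = data[:, noise_mask]
    y = y - y.mean(axis=0)                 # remove each voxel's mean
    sd = y.std(axis=0)
    y = y / np.where(sd > 0, sd, 1.0)      # variance-normalize voxels
    u, _, _ = np.linalg.svd(y, full_matrices=False)
    return u[:, :n_components]             # temporal components

rng = np.random.default_rng(0)
n_vols, n_vox = 200, 300
shared = np.sin(np.linspace(0, 20, n_vols))  # hypothetical shared noise
data = rng.normal(size=(n_vols, n_vox))
noise_mask = np.zeros(n_vox, dtype=bool)
noise_mask[:100] = True
data[:, noise_mask] += 3.0 * shared[:, None]
comps = acompcor(data, noise_mask)
print(abs(np.corrcoef(comps[:, 0], shared)[0, 1]))  # close to 1
```

The returned columns are added to the nuisance model alongside the motion and tissue regressors, before the band-pass step as in the protocol above.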

Validation with DMN Detection:

- Verify default mode network detectability across pipelines

- Compare independent component analysis and seed-based approaches

- Use network detection as quality control metric

This enhanced protocol has demonstrated significantly improved DMN detection in patients with disorders of consciousness [26].

Diagram 2: Specialized preprocessing pipelines for clinical populations including stroke patients and disorders of consciousness (DoC), incorporating lesion masking and enhanced noise removal.

Emerging Solutions and Foundation Models

Automated Preprocessing Platforms

Several automated preprocessing platforms have emerged to address reproducibility challenges in fMRI analysis:

fMRIPrep: A robust, standardized preprocessing pipeline that incorporates best practices from multiple software packages while providing comprehensive quality control outputs [8] [3].

FuNP (Fusion of Neuroimaging Preprocessing): Integrates components from AFNI, FSL, FreeSurfer, and Workbench into a unified platform with both volume- and surface-based processing streams [8].

fMRIflows: Extends beyond preprocessing to include univariate and multivariate single-subject and group analyses, with flexible temporal and spatial filtering options optimized for high-temporal resolution data [3].

These automated platforms reduce pipeline variability and implement current best practices consistently across studies.

The NeuroSTORM Foundation Model

A recent innovation in fMRI analysis is the development of NeuroSTORM, a general-purpose foundation model trained on an unprecedented 28.65 million fMRI frames from over 50,000 subjects [6]. This model:

- Employs a shifted scanning strategy based on a Mamba backbone for efficient 4D fMRI processing

- Incorporates spatial-temporal optimized pre-training with task-specific prompt tuning

- Demonstrates superior performance across diverse downstream tasks including phenotype prediction and disease diagnosis

- Maintains high clinical relevance in predicting psychological/cognitive phenotypes

Foundation models like NeuroSTORM represent a paradigm shift toward standardized, transferable fMRI analysis that may help overcome many current preprocessing challenges [6].

Table 3: Automated Preprocessing Pipelines and Their Specialized Capabilities

| Pipeline | Key Features | Specialized Applications | Validation |
|---|---|---|---|
| fMRIPrep | Robust integration of best practices, comprehensive QC | General-purpose processing, multi-site studies | Extensive validation against manual pipelines |
| FuNP | Combines AFNI, FSL, FreeSurfer, Workbench; GUI interface | Both volume- and surface-based analysis | RSN matching with pre-defined networks |
| fMRIflows | Univariate and multivariate analyses, flexible filtering | Machine learning preparation, high-temporal resolution data | Comparison with FSL, SPM, fMRIPrep |
| fMRIStroke | Lesion-aware processing, ICA for lesion artifacts | Stroke patients with brain lesions | Reduced spurious connectivity, preserved predictions |

The Scientist's Toolkit: Essential Research Reagents

Table 4: Essential Software Tools and Processing Components for fMRI Preprocessing

| Tool/Component | Function | Implementation Considerations |
|---|---|---|
| fMRIPrep | Automated preprocessing pipeline | Default pipeline for standard studies; BIDS-compliant |
| CompCor | Component-based noise correction | Effective for physiological noise removal; linear method that captures nonlinear dependencies |
| FSL | FMRIB Software Library | MELODIC ICA for data-driven artifact identification |
| FreeSurfer | Surface-based reconstruction | Essential for surface-based analyses; provides cortical surface models |
| fMRIStroke | Lesion-specific preprocessing | Critical for stroke populations; open-source tool available |
| NeuroSTORM | Foundation model for fMRI | Emerging approach for standardized analysis; requires significant computational resources |
| Nilearn | Python machine learning library | Provides masking, filtering, and connectivity analysis tools |
| Nipype | Pipeline integration framework | Enables custom pipeline development combining multiple packages |


Spurious connectivity and false activations arising from poor preprocessing represent a fundamental challenge for fMRI research with significant implications for basic neuroscience and clinical applications. The artifacts introduced by head motion, surface analysis biases, filtering procedures, and physiological noise systematically distort functional connectivity measures and can lead to invalid conclusions. However, as detailed in this application note, rigorous methodological protocols employing frame-wise motion correction, surface bias mitigation, advanced statistical approaches, and population-specific pipelines can substantially reduce these artifacts. The development of automated preprocessing platforms and foundation models offers promising pathways toward more standardized, reproducible fMRI analysis. By implementing these detailed protocols and maintaining critical awareness of preprocessing limitations, researchers can enhance the validity and reliability of their fMRI findings, ultimately advancing our understanding of brain function in health and disease.

Quality control (QC) is a fundamental component of functional magnetic resonance imaging (fMRI) research, serving as the critical checkpoint that ensures data validity, analytical robustness, and ultimately, reproducible scientific findings. In the context of fMRI preprocessing pipeline reliability research, establishing standardized QC metrics is paramount for comparing results across studies, validating new methodological approaches, and building confidence in neuroimaging biomarkers. As the field increasingly moves toward larger multi-site studies and analysis of shared datasets, the implementation of consistent, comprehensive QC protocols becomes indispensable for distinguishing true neurological effects from methodological artifacts [29]. This protocol outlines the essential quality control metrics that should be examined in every fMRI preprocessing pipeline, providing researchers with a standardized framework for evaluating data quality throughout the processing workflow.

Core Quality Control Metrics Framework

Data Acquisition Quality Metrics

The foundation of quality fMRI research begins with assessing the intrinsic quality of the raw data acquired from the scanner. These metrics evaluate whether the basic data characteristics support meaningful scientific interpretation.

Table 1: Essential Data Acquisition Quality Metrics

| Metric Category | Specific Metrics | Acceptance Criteria | Potential Issues |
|---|---|---|---|
| Spatial Coverage | Whole-brain coverage, Voxel resolution | Complete coverage of regions of interest, Consistent dimensions across participants | Missing brain regions, Cropped cortical areas |
| Image Artifacts | Signal dropout, Ghosting, Reconstruction errors | Minimal visible artifacts, Consistent signal across the brain | Susceptibility artifacts, Scanner hardware issues |
| Basic Signal Quality | Signal-to-Noise Ratio (SNR), Temporal SNR (tSNR) | Consistent across participants and sessions | Poor image contrast, System noise |
| Data Integrity | Header information, Timing files, Parameter consistency | Acquisition parameters match the intended protocol | Mismatched timing, Incorrect repetition time (TR) |

The evaluation of raw data is the first critical checkpoint in the QC pipeline [30]. Researchers should verify that images provide complete whole-brain coverage without missing regions, particularly in areas relevant to their research questions. Dropout artifacts, which often occur in regions prone to susceptibility artifacts such as the orbitofrontal cortex and temporal poles, must be identified, as they can render these areas unusable for analysis [30]. Reconstruction errors stemming from scanner hardware limitations should be flagged, as they introduce inaccuracies in the fundamental image representation [30].

Preprocessing Verification Metrics

Once basic data quality is established, the focus shifts to verifying the execution of preprocessing steps. These metrics evaluate the technical success of spatial and temporal transformations applied to the data.

Table 2: Preprocessing Step Verification Metrics

| Processing Step | Evaluation Metrics | Quality Indicators | Tools for Assessment |
|---|---|---|---|
| Head Motion Correction | Framewise displacement (FD), Translation/rotation parameters | Mean FD < 0.2-0.3 mm, Limited spikes in motion timecourses | FSL, SPM, AFNI, fMRIPrep |
| Functional-Anatomical Coregistration | Cross-correlation, Boundary alignment | Precise alignment of gray matter boundaries | SPM Check Registration, Visual inspection |
| Spatial Normalization | Dice coefficients, Tissue overlap metrics | High overlap with template (Dice > 0.8-0.9) | ANTs, FSL, SPM |
| Segmentation Quality | Tissue probability maps, Misclassification rates | Clear differentiation of GM, WM, CSF | SPM, FSL, Visual inspection |
| Susceptibility Distortion Correction | Alignment of images acquired with opposed phase-encoding directions | Reduced distortion in susceptibility-prone regions | FSL topup, AFNI 3dQwarp |

Head motion correction is one of the most critical preprocessing steps, with framewise displacement (FD) serving as the primary quantitative motion metric [31]. FD summarizes head movement between consecutive volumes; in its most common formulation it is the sum of the absolute volume-to-volume changes in the six rigid-body realignment parameters, with rotations converted to millimeters of displacement on a sphere approximating the head (typically 50 mm radius). Mean FD values exceeding 0.2-0.3 mm typically indicate problematic motion levels [31]. The temporal pattern of motion should also be examined, as concentrated spikes of motion may require specialized denoising approaches or censoring [32].
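
As a minimal sketch of the common FD formulation, the function below assumes a (T, 6) array of realignment parameters ordered as three translations in mm followed by three rotations in radians, which is the layout of SPM rp_*.txt files. FSL MCFLIRT .par files list rotations before translations, so their columns would need reordering first. The function name and the 50 mm default radius are illustrative choices, not a specific tool's API.

```python
import numpy as np


def framewise_displacement(params, radius_mm=50.0, rotations_in_degrees=False):
    """Framewise displacement (FD) from rigid-body realignment parameters.

    Assumes params is a (T, 6) array ordered as three translations in mm
    followed by three rotations (radians by default), as in SPM rp_*.txt files.
    """
    params = np.asarray(params, dtype=float)
    if params.ndim != 2 or params.shape[1] != 6:
        raise ValueError("expected a (T, 6) array of realignment parameters")
    translations = params[:, :3]
    rotations = params[:, 3:]
    if rotations_in_degrees:
        rotations = np.deg2rad(rotations)
    # Convert rotations to arc length (mm) on a sphere approximating the head
    motion_mm = np.hstack([translations, rotations * radius_mm])
    # Sum of absolute volume-to-volume changes across the six parameters
    fd = np.abs(np.diff(motion_mm, axis=0)).sum(axis=1)
    # The first volume has no predecessor; set its FD to 0 by convention
    return np.concatenate([[0.0], fd])
```

Because FD is a backward difference, a single displaced volume (for example, a 0.5 mm jump that immediately reverses) produces two consecutive FD peaks of 0.5 mm: one when the head moves and one when it returns.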

Functional-to-anatomical coregistration is typically evaluated through visual inspection, in which researchers verify that functional data align with anatomical boundaries [31]. Quantitative metrics such as normalized mutual information or cross-correlation can supplement visual assessment. Spatial normalization to standard templates (e.g., MNI space) should be evaluated using overlap metrics such as the Dice coefficient, with values typically exceeding 0.8-0.9 indicating successful normalization [33] [31].
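
The Dice coefficient and normalized cross-correlation can both be sketched in a few lines of NumPy. This assumes the inputs (binary masks, such as a normalized subject brain mask and the template brain mask, or two intensity images) have already been resampled onto the same voxel grid; the helper names are hypothetical, and how masks are derived and thresholded is left to the pipeline.

```python
import numpy as np


def dice_coefficient(mask_a, mask_b):
    """Dice overlap, 2|A ∩ B| / (|A| + |B|), between two binary masks on one grid."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("masks must be on the same voxel grid")
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement by convention
    return float(2.0 * np.logical_and(a, b).sum() / total)


def normalized_cross_correlation(img_a, img_b, mask=None):
    """Pearson-style cross-correlation of two images, optionally within a mask."""
    a = np.asarray(img_a, dtype=float)
    b = np.asarray(img_b, dtype=float)
    if mask is not None:
        m = np.asarray(mask, dtype=bool)
        a, b = a[m], b[m]
    # Remove the mean so the measure is insensitive to global intensity offsets
    a = a.ravel() - a.mean()
    b = b.ravel() - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0
```

Dice only measures agreement of mask extents, whereas cross-correlation also reflects whether intensity structure inside the brain lines up, so the two are complementary.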

Denoising and Confound Management Metrics

Following initial preprocessing, effective noise removal becomes essential for isolating biologically meaningful BOLD signals from various confounding sources.

Table 3: Denoising and Confound Assessment Metrics

| Confound Type | Extraction Method | QC Metrics | Interpretation |
|---|---|---|---|
| Motion Parameters | Realignment algorithms (FSL MCFLIRT, SPM Realign) | Framewise displacement, DVARS | Identification of motion-affected timepoints |
| Physiological Noise | CompCor, RETROICOR, Physiological recordings | Spectral characteristics, Component timecourses | Verification of physiological noise removal |
| Global Signal | Global signal regression (GSR) | Correlation patterns with motion | Assessment of GSR impact on connectivity |
| Temporal Artifacts | ICA-AROMA, FIX | Component classification accuracy | Identification of residual noise components |
| Temporal Quality | Temporal SNR (tSNR), DVARS | Regional tSNR values, DVARS spikes | Overall temporal signal stability |

Denoising efficacy must be evaluated in the context of the specific research question, as optimal strategies vary across applications [32]. For resting-state fMRI studies, the impact of different denoising pipelines on functional connectivity measures and their relationship with motion artifacts should be carefully examined [32]. Component-based methods such as ICA-AROMA require verification that noise components are correctly classified and removed without eliminating neural signals of interest [33].
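
One widely used way to examine the relationship between denoised connectivity and motion is a QC-FC analysis: across participants, each connectivity edge is correlated with mean FD, and a pipeline that removes motion effects well should leave these correlations close to zero. The sketch below assumes connectivity values have already been vectorized into an (n_subjects, n_edges) array; the function names are hypothetical, and significance testing of the correlations is omitted.

```python
import numpy as np


def qc_fc(connectivity, mean_fd):
    """Per-edge Pearson correlation between connectivity and subject mean FD.

    connectivity: (n_subjects, n_edges) array of denoised connectivity values
    mean_fd: (n_subjects,) array of mean framewise displacement
    """
    fc = np.asarray(connectivity, dtype=float)
    fd = np.asarray(mean_fd, dtype=float)
    if fc.shape[0] != fd.shape[0]:
        raise ValueError("connectivity and mean_fd must have the same number of subjects")
    # Center both variables across subjects
    fc_c = fc - fc.mean(axis=0)
    fd_c = fd - fd.mean()
    num = fd_c @ fc_c
    denom = np.sqrt((fd_c ** 2).sum() * (fc_c ** 2).sum(axis=0))
    # Edges with zero variance across subjects yield NaN rather than an error
    with np.errstate(invalid="ignore", divide="ignore"):
        return num / denom


def qc_fc_summary(r):
    """Median absolute QC-FC correlation, ignoring undefined edges."""
    return float(np.nanmedian(np.abs(r)))
```

Comparing this summary (or the fraction of edges with significant correlations) across candidate denoising pipelines on the same participants gives one quantitative basis for choosing between them.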

Temporal signal-to-noise ratio (tSNR), computed voxelwise as the temporal mean of the signal divided by its temporal standard deviation, provides a summary measure of signal stability after preprocessing, with higher values indicating more stable BOLD time series [29]. DVARS measures the root-mean-square change in BOLD signal across the brain from one volume to the next, with spikes often corresponding to motion artifacts or abrupt signal changes [33]. The relationship between motion parameters and denoised signals should be examined to confirm that motion artifacts have been successfully decoupled from neural signals [32].
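
Both measures are straightforward to compute from an array with time on the last axis. This sketch does not apply the intensity normalization (for example, scaling to percent signal change) that many tools perform before DVARS, so absolute DVARS values will differ between implementations; the function names are illustrative.

```python
import numpy as np


def temporal_snr(data):
    """Voxelwise tSNR: temporal mean divided by temporal standard deviation.

    data: (x, y, z, t) or (voxels, t) array; time is the last axis.
    """
    data = np.asarray(data, dtype=float)
    mean = data.mean(axis=-1)
    std = data.std(axis=-1)
    tsnr = np.zeros_like(mean)
    # Voxels with zero variance (e.g. outside the brain) keep a tSNR of 0
    np.divide(mean, std, out=tsnr, where=std > 0)
    return tsnr


def dvars(data, mask=None):
    """Root-mean-square volume-to-volume signal change; returns T - 1 values."""
    data = np.asarray(data, dtype=float)
    if mask is not None:
        ts = data[np.asarray(mask, dtype=bool)]   # (n_voxels_in_mask, t)
    else:
        ts = data.reshape(-1, data.shape[-1])     # all voxels, (n_voxels, t)
    diff = np.diff(ts, axis=-1)
    return np.sqrt((diff ** 2).mean(axis=0))
```

Plotting the DVARS series alongside FD makes it easy to see whether signal spikes coincide with head movement.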

Experimental Protocols for QC Implementation

Protocol 1: Comprehensive fMRI QC Framework

This protocol outlines a systematic approach to quality control spanning the entire research workflow, from study planning through final analysis.

QC During Study Planning

- Define QC priorities based on research hypotheses and regions of interest

- Establish standardized operating procedures for data acquisition

- Plan for collection of QC-supporting data (physiological recordings, behavior logs)

- Implement data organization systems using the Brain Imaging Data Structure (BIDS) standard [33]
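
As a small illustration of the BIDS naming convention for functional runs (entities ordered sub, ses, task, run, followed by the _bold suffix and stored under a func/ directory), the hypothetical helper below builds relative paths for BOLD files. It covers only a few entities; in practice, the BIDS Validator should be used to check a whole dataset.

```python
from pathlib import PurePosixPath
from typing import Optional


def bids_bold_path(subject: str, task: str, session: Optional[str] = None,
                   run: Optional[int] = None, ext: str = ".nii.gz") -> str:
    """Return a BIDS-style relative path for a BOLD run (hypothetical helper)."""
    labels = [subject, task] + ([session] if session else [])
    for label in labels:
        # BIDS entity labels are alphanumeric (no underscores, hyphens, or spaces)
        if not label.isalnum():
            raise ValueError(f"invalid BIDS label: {label!r}")
    dirs = [f"sub-{subject}"]
    entities = [f"sub-{subject}"]
    if session:
        dirs.append(f"ses-{session}")
        entities.append(f"ses-{session}")
    entities.append(f"task-{task}")
    if run is not None:
        entities.append(f"run-{run}")
    filename = "_".join(entities) + "_bold" + ext
    return str(PurePosixPath(*dirs, "func", filename))
```

For example, subject "01", session "pre", task "rest", run 2 maps to sub-01/ses-pre/func/sub-01_ses-pre_task-rest_run-2_bold.nii.gz.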

QC During Data Acquisition

- Monitor real-time data quality for artifacts and coverage issues

- Document unexpected events and participant behavior

- Verify consistency of acquisition parameters across sessions

QC Soon After Acquisition

- Check raw data for artifacts, coverage, and orientation

- Calculate basic QC metrics (SNR, tSNR, motion parameters)

- Generate visualization reports for initial quality assessment

QC During Processing

- Verify successful completion of each processing step

- Inspect intermediate outputs for alignment and normalization accuracy

- Evaluate denoising effectiveness through confound regression diagnostics

- Generate comprehensive QC reports for each participant [29]

Protocol 2: SPM-Based Preprocessing and QC Protocol

This protocol provides specific implementation details for a QC pipeline using Statistical Parametric Mapping (SPM) and MATLAB, adaptable to other software environments.

Initial Data Check (Q1)

- Verify consistency of imaging parameters (TR, voxel sizes, volumes) across participants

- Check anatomical image orientation and position relative to MNI template using SPM Check Registration

- Inspect for artifacts (ghosting, lesions) in anatomical and functional images

- Manually reorient images if necessary to align with template space [31]
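
The parameter-consistency check in this step can be partly automated. This sketch assumes per-participant parameters have already been read into dictionaries (for example from NIfTI headers or BIDS JSON sidecars). The key names TR, voxel_size, and n_volumes are placeholders rather than fields of any particular file format, and floats are rounded so that trivial precision differences are not flagged.

```python
from collections import Counter


def _normalize(value, ndigits=4):
    """Round floats (and lists/tuples of floats) so tiny precision noise is ignored."""
    if isinstance(value, float):
        return round(value, ndigits)
    if isinstance(value, (list, tuple)):
        return tuple(_normalize(v, ndigits) for v in value)
    return value


def flag_parameter_mismatches(headers, keys=("TR", "voxel_size", "n_volumes")):
    """Return {participant: [(key, value, modal_value), ...]} for deviating parameters.

    headers: dict mapping participant ID -> dict of acquisition parameters
    (key names are hypothetical placeholders).
    """
    flags = {}
    if not headers:
        return flags
    for key in keys:
        values = {sub: _normalize(h.get(key)) for sub, h in headers.items()}
        # Treat the most common value in the sample as the expected one
        modal, _ = Counter(values.values()).most_common(1)[0]
        for sub, value in values.items():
            if value != modal:
                flags.setdefault(sub, []).append((key, value, modal))
    return flags
```

Using the modal value as the reference avoids hard-coding the protocol, but where the intended parameters are known, comparing against them directly is stricter.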

Anatomical Image Segmentation and Check (P1, Q2)

- Segment anatomical images into gray matter, white matter, and CSF using SPM Segment

- Generate bias-corrected anatomical image for improved coregistration

- Verify segmentation quality by overlaying tissue probability maps on normalized images

- Flag participants with tissue misclassification for exclusion or manual correction [31]
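
Visual inspection can be complemented by a simple numerical screen. One heuristic, sketched here as an assumption rather than as part of the SPM workflow, is to compute each participant's tissue volumes from the probability maps and flag values that are outliers relative to the sample using a median/MAD-based modified z-score. The 3.5 cutoff is a conventional choice for that score, not an fMRI-specific standard, and the function names are hypothetical.

```python
import numpy as np


def tissue_volume_ml(prob_map, voxel_volume_mm3):
    """Tissue volume in millilitres, summing voxel probabilities times voxel volume."""
    return float(np.asarray(prob_map, dtype=float).sum() * voxel_volume_mm3 / 1000.0)


def robust_outliers(values, threshold=3.5):
    """Boolean mask of entries whose modified z-score exceeds the threshold."""
    x = np.asarray(values, dtype=float)
    med = np.median(x)
    mad = np.median(np.abs(x - med))
    if mad == 0:
        # No spread around the median: nothing can be called an outlier this way
        return np.zeros(x.shape, dtype=bool)
    z = 0.6745 * (x - med) / mad
    return np.abs(z) > threshold
```

Flagged participants are candidates for closer visual review, since an unusual tissue volume can reflect genuine anatomy (for example, atrophy) rather than a segmentation failure.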

Functional Image Realignment and Motion Check (P2, Q3)

- Realign functional images to the first volume using SPM Realign: Estimate & Reslice

- Calculate framewise displacement (FD) from rigid body transformation parameters

- Plot motion parameters across the sample to identify participants with excessive motion

- Establish motion exclusion criteria appropriate for the research context [31]
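
Exclusion criteria are study-specific, but they are often expressed as a combination of mean FD and the fraction of frames exceeding a censoring threshold. This sketch takes an FD time series (one value per volume); the threshold values and the returned dictionary keys are illustrative placeholders, not recommendations, and should be replaced by the study's pre-specified criteria.

```python
import numpy as np


def motion_summary(fd, fd_censor=0.5, mean_fd_max=0.3, max_censored_frac=0.2):
    """Frame censoring mask plus a participant-level exclusion decision.

    All thresholds (in mm, or as a fraction of frames) are illustrative defaults.
    """
    fd = np.asarray(fd, dtype=float)
    censor = fd > fd_censor  # frames to drop ("scrub") in later analysis
    mean_fd = float(fd.mean())
    censored_frac = float(censor.mean())
    exclude = mean_fd > mean_fd_max or censored_frac > max_censored_frac
    return {
        "censor_mask": censor,
        "mean_fd": mean_fd,
        "censored_fraction": censored_frac,
        "exclude": exclude,
    }
```

Some pipelines also censor frames adjacent to high-motion volumes; that extension would expand the mask before computing the censored fraction.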

Coregistration and Normalization Check (Q4, Q5)

- Verify alignment of functional and anatomical images using Check Registration

- Inspect normalized images for accurate transformation to standard space

- Confirm that normalized images maintain structural details without excessive distortion [31]

Time Series Quality Check (Q6)

- Generate a gray matter mask and extract the mean time series within it

- Plot time series to identify residual artifacts or drifts

- Calculate and review temporal SNR maps for signal stability assessment [31]
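
The time-series check can be partly quantified. This sketch assumes the functional data and a gray matter probability map are on the same grid (for example, after resampling the segmentation to functional space), uses an illustrative 0.5 threshold to binarize the map, and summarizes slow drift as the slope of a linear fit expressed in percent of the mean signal per minute. The function names and the drift metric are assumptions, not SPM outputs.

```python
import numpy as np


def gm_mean_timeseries(bold, gm_prob, threshold=0.5):
    """Mean BOLD time series within a thresholded gray matter probability map.

    bold: (x, y, z, t) array; gm_prob: (x, y, z) array on the same grid.
    """
    mask = np.asarray(gm_prob) > threshold
    if not mask.any():
        raise ValueError("empty gray matter mask; check threshold and registration")
    return np.asarray(bold, dtype=float)[mask].mean(axis=0)


def linear_drift(ts, tr):
    """Linear-fit slope in percent of the mean signal per minute (tr in seconds)."""
    ts = np.asarray(ts, dtype=float)
    t_min = np.arange(ts.size) * tr / 60.0
    slope, _intercept = np.polyfit(t_min, ts, 1)
    return float(100.0 * slope / ts.mean())
```

Plotting the mean time series remains the primary check; the drift value simply makes it easier to rank participants and spot those whose signal changes steadily over the run.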

Visualization of QC Workflows

[Figures not reproduced: fMRI Quality Control Ecosystem; Preprocessing Pipeline Verification Workflow]

Essential Research Reagents and Tools

Table 4: Critical Software Tools for fMRI Quality Control

| Tool Category | Specific Tools | Primary Function | QC Application |
|---|---|---|---|
| Preprocessing Pipelines | fMRIPrep, fMRIflows, C-PAC | Automated preprocessing | Standardized data processing with integrated QC |
| QC-Specific Software | MRIQC, AFNI QC tools | Quality metric extraction | Automated calculation of QC metrics |
| Visualization Platforms | AFNI, SPM, FSLeyes | Data inspection | Visual assessment of processing results |
| Data Management | BIDS Validator, NiPreps | Data organization | Ensuring standardized data structure compliance |
| Statistical Analysis | SPM, FSL, AFNI | Statistical modeling | Integration of QC metrics in analysis |

The selection of appropriate tools should be guided by the specific research context and analytical approach. fMRIPrep has emerged as a widely adopted solution for robust, standardized preprocessing that generates comprehensive QC reports [33] [34]. For researchers implementing custom pipelines, AFNI provides extensive QC tools including automated reporting through afni_proc.py [30]. MRIQC offers specialized functionality for evaluating raw data quality, particularly useful for large datasets and data from multiple sites [3].

Establishing a comprehensive quality control framework is not an optional supplement to fMRI research, but rather a fundamental requirement for producing valid, interpretable, and reproducible results. The metrics and protocols outlined here provide a baseline for evaluating preprocessing pipeline reliability across diverse research contexts. As the field continues to evolve toward more complex analytical approaches and larger multi-site collaborations, consistent implementation of these QC standards will be essential for building a cumulative science of human brain function. Future methodological developments should continue to refine these metrics while maintaining backward compatibility to enable direct comparison across historical and contemporary datasets.

From Standard Tools to Foundation Models: A Landscape of Modern fMRI Preprocessing Methodologies

Functional magnetic resonance imaging (fMRI) has become a cornerstone technique for investigating brain function in both basic research and clinical applications. The reliability of its findings, however, is heavily dependent on the data processing pipeline employed. Inconsistent or suboptimal preprocessing can introduce variability, reduce statistical power, and ultimately undermine the validity of scientific conclusions [3]. This challenge is particularly acute in translational contexts such as drug development, where objective, reproducible biomarkers are urgently needed to improve the efficiency of central nervous system therapeutic development [35] [36].